Speech.framework on iOS 10

Overview

Every new conference brings new innovations. Judging by the mood, we are heading toward the end of keyboards and other input devices. In iOS 10, Apple gave developers the ability to work with speech. My colleague Geor Casapidi has already described the capabilities of Siri in his article, and I will talk about Speech.framework. The material discussed in this article is implemented in the demo application what_i_say. At the time of writing there is no official documentation, so we will rely on what Henry Mason said.

Speech recognition depends entirely on Internet access, which is not surprising, since it uses the same engine as Siri. The developer can recognize either live speech or speech already recorded in a file. The difference is in the request object:

- SFSpeechURLRecognitionRequest - used for recognition from a file;

- SFSpeechAudioBufferRecognitionRequest - used to recognize dictation.
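For comparison with the dictation flow covered in the rest of the article, recognition from a file can be sketched roughly as follows. This is a minimal sketch, not code from the demo application: the FileRecognizer class and its recognizeFileAtURL: method are hypothetical names, and the code assumes that speech recognition authorization has already been granted and that the device's current locale is acceptable.

```objc
#import <Foundation/Foundation.h>
#import <Speech/Speech.h>

// Hypothetical wrapper class, used only for illustration.
@interface FileRecognizer : NSObject
@property (nonatomic, strong) SFSpeechRecognizer *recognizer;
@property (nonatomic, strong) SFSpeechRecognitionTask *task;
- (void)recognizeFileAtURL:(NSURL *)fileURL;
@end

@implementation FileRecognizer

- (void)recognizeFileAtURL:(NSURL *)fileURL {
    // -init uses the current locale and returns nil if it is not supported.
    self.recognizer = [[SFSpeechRecognizer alloc] init];
    if (self.recognizer == nil || !self.recognizer.isAvailable) {
        NSLog(@"Speech recognizer is not available");
        return;
    }

    SFSpeechURLRecognitionRequest *request =
        [[SFSpeechURLRecognitionRequest alloc] initWithURL:fileURL];

    // The handler can be called several times; the last successful call
    // carries a result with isFinal == YES.
    self.task = [self.recognizer recognitionTaskWithRequest:request
                                              resultHandler:^(SFSpeechRecognitionResult * _Nullable result,
                                                              NSError * _Nullable error) {
        if (error != nil) {
            NSLog(@"Recognition failed: %@", error);
        } else if (result.isFinal) {
            NSLog(@"Recognized: %@", result.bestTranscription.formattedString);
        }
    }];
}

@end
```

The block-based recognitionTaskWithRequest:resultHandler: is used here only to keep the sketch short; the delegate-based variant works with a file request in the same way.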

Let's look at working with real-time dictation.

All work is based on the concept:

However, since Speech.framework has no dedicated API for working with the microphone, the developer needs to use AVAudioEngine.

Application Authorization

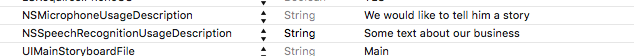

First of all, the developer needs to add two keys to the Info.plist file.

- NSMicrophoneUsageDescription - why the application needs access to the microphone;

- NSSpeechRecognitionUsageDescription - why the application needs access to speech recognition.

This is important: without these keys the application will inevitably crash.

Next, we need to process the authorization status:

    - (void)handleAuthorizationStatus:(SFSpeechRecognizerAuthorizationStatus)s {
        switch (s) {
            case SFSpeechRecognizerAuthorizationStatusNotDetermined:
                // The user has not been asked yet: request authorization
                [self requestAuthorization];
                break;
            case SFSpeechRecognizerAuthorizationStatusDenied:
                // The user denied access: report the error
                [self informDelegateErrorType:(EVASpeechManagerErrorSpeechRecognitionDenied)];
                break;
            case SFSpeechRecognizerAuthorizationStatusRestricted:
                // Nothing to do here yet
                break;
            case SFSpeechRecognizerAuthorizationStatusAuthorized: {
                // Access granted: recognition can be started
            }
                break;
        }
    }

While getting acquainted with Speech.framework, I did not find a use case for the SFSpeechRecognizerAuthorizationStatusRestricted status. I assume it was planned for limited access rights, but so far there are no additional options for speech recognition. We will have to wait for the official documentation.

When the application is first launched after installation, the recognizer returns the SFSpeechRecognizerAuthorizationStatusNotDetermined status, so you need to request authorization from the user:

    - (void)requestAuthorization {
        [SFSpeechRecognizer requestAuthorization:^(SFSpeechRecognizerAuthorizationStatus status) {
            [self handleAuthorizationStatus:status];
        }];
    }

The API has neither methods nor notifications for tracking status changes. If the user changes the authorization status, the system forcibly restarts the application.

Setup

For speech recognition to work, we need four objects:

    @property (nonatomic, strong) SFSpeechRecognizer *recognizer;
    @property (nonatomic, strong) AVAudioEngine *audioEngine;
    @property (nonatomic, strong) SFSpeechAudioBufferRecognitionRequest *request;
    @property (nonatomic, strong) SFSpeechRecognitionTask *currentTask;

and implement the protocol:

    @interface EVASpeechManager () <SFSpeechRecognitionTaskDelegate>

recognizer - the object that will process requests.

Important points:

- it is initialized with a locale via initWithLocale:. If the locale is not supported, nil is returned. At the time of writing, the following locales were supported:

ro-RO en-IN he-IL tr-TR en-NZ sv-SE fr-BE it-CH de-CH pl-PL pt-PT uk-UA fi-FI vi-VN ar-SA zh-TW es-ES en-GB yue-CN th-TH en-ID ja-JP en-SA en-AE da-DK fr-FR sk-SK de-AT ms-MY hu-HU ca-ES ko-KR fr-CH nb-NO en-AU el-GR ru-RU zh-CN en-US en-IE nl-BE es-CO pt-BR es-US hr-HR fr-CA zh-HK es-MX it-IT id-ID nl-NL cs-CZ en-ZA es-CL en-PH en-CA en-SG de-DE.

The recognizer does not switch the locale automatically. It works only within the locale specified during initialization. That is, if it was initialized with the en-US locale and the user speaks another language, the recognizer will run and return an empty result;

- it has a queue property (mainQueue by default), which makes it possible to process callbacks asynchronously. This allows you to process a fairly large text without delay.
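The two points above can be sketched as a small helper. This is a minimal sketch under stated assumptions: MakeRecognizer and the queue name are hypothetical and do not come from the demo application.

```objc
#import <Foundation/Foundation.h>
#import <Speech/Speech.h>

// Hypothetical helper: creates a recognizer for a locale identifier such as
// @"en-US" and moves its callbacks off the main queue.
// Returns nil when the locale is not supported.
static SFSpeechRecognizer *MakeRecognizer(NSString *localeIdentifier) {
    NSLocale *locale = [NSLocale localeWithLocaleIdentifier:localeIdentifier];

    // initWithLocale: returns nil for an unsupported locale.
    SFSpeechRecognizer *recognizer =
        [[SFSpeechRecognizer alloc] initWithLocale:locale];
    if (recognizer == nil) {
        NSLog(@"Locale %@ is not supported", localeIdentifier);
        return nil;
    }

    // By default callbacks arrive on the main queue; a separate queue keeps
    // result processing away from the UI.
    NSOperationQueue *callbackQueue = [[NSOperationQueue alloc] init];
    callbackQueue.name = @"speech.recognition.callbacks"; // assumed name
    recognizer.queue = callbackQueue;

    return recognizer;
}
```

With a background queue like this, any UI update made from a delegate method or result handler has to be dispatched back to the main queue.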

audioEngine - the object that takes the input signal from the microphone (the dictated speech) and passes the output signal to the buffer.

    self.audioEngine = [[AVAudioEngine alloc] init];
    AVAudioInputNode *node = self.audioEngine.inputNode;
    // Use the same bus (0) for the format and for the tap
    AVAudioFormat *recordingFormat = [node outputFormatForBus:0];
    [node installTapOnBus:0
               bufferSize:1024
                   format:recordingFormat
                    block:^(AVAudioPCMBuffer * _Nonnull buffer, AVAudioTime * _Nonnull when) {
        // Feed every captured audio buffer to the recognition request
        [self.request appendAudioPCMBuffer:buffer];
    }];

request - the speech recognition request.

Points of interest:

- The request has a taskHint property of type SFSpeechRecognitionTaskHint, which can take one of four values:

- SFSpeechRecognitionTaskHintUnspecified

- SFSpeechRecognitionTaskHintDictation

- SFSpeechRecognitionTaskHintSearch

- SFSpeechRecognitionTaskHintConfirmation

Judging by the description, the plan is to also divide requests into types: ordinary dictation, dictation for searching for information, and dictation for confirming something. Alas, for now these values are not used anywhere except as markers. I expect this area to be developed further.
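Setting the hint takes a single assignment on the request. The helper below is a minimal sketch with a hypothetical name (MakeDictationRequest); whether the hint actually changes the recognition result is not something this article establishes.

```objc
#import <Foundation/Foundation.h>
#import <Speech/Speech.h>

// Hypothetical helper: creates a buffer-based request marked as dictation.
static SFSpeechAudioBufferRecognitionRequest *MakeDictationRequest(void) {
    SFSpeechAudioBufferRecognitionRequest *request =
        [[SFSpeechAudioBufferRecognitionRequest alloc] init];

    // Mark the request as ordinary dictation; the other values are
    // Unspecified, Search and Confirmation.
    request.taskHint = SFSpeechRecognitionTaskHintDictation;

    // Partial results are reported by default; set explicitly for clarity.
    request.shouldReportPartialResults = YES;

    return request;
}
```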

currentTask - the task object for processing a single request.

Important points:

- the error property, where you can get the error if the task failed;

- two properties, finishing and cancelled, by which you can determine whether the task is being finished or was canceled. When you cancel a task, the system immediately stops further processing and recognition, but the developer can still get the part of the text that has already been recognized. When a task is finished, the system returns the fully processed result.

Usage

Launch

Once the speech manager is set up, you can get down to the actual work.

First, create a request:

    self.request = [[SFSpeechAudioBufferRecognitionRequest alloc] init];

and pass it to the recognizer:

Important! Before passing the request to the recognizer, you need to start audioEngine so that it receives the audio stream from the microphone.

    - (void)performRecognize {
        [self.audioEngine prepare];
        NSError *error = nil;
        if ([self.audioEngine startAndReturnError:&error]) {
            // The engine is running: hand the request over to the recognizer
            self.currentTask = [self.recognizer recognitionTaskWithRequest:self.request
                                                                  delegate:self];
        } else {
            [self informDelegateError:error];
        }
    }

Once it accepts the request, the system returns a task object. You will need it later to stop the process.

Stop

The request object cannot be reused while it is active; otherwise the application crashes with "reason: 'SFSpeechAudioBufferRecognitionRequest cannot be re-used'". Therefore, it is important to stop both the task and audioEngine at the end of the process:

    - (void)stopRecognize {
        if ([self isTaskInProgress]) {
            [self.currentTask finish];
            [self.audioEngine stop];
        }
    }

Result processing

When the task finishes, the result is delivered to the SFSpeechRecognitionTaskDelegate delegate.

Process the recognized text in the method:

    - (void)speechRecognitionTask:(SFSpeechRecognitionTask *)task
             didFinishRecognition:(SFSpeechRecognitionResult *)recognitionResult {
        // Keep the best transcription until the task reports completion
        self.buffer = recognitionResult.bestTranscription.formattedString;
    }

and errors in the method:

    - (void)speechRecognitionTask:(SFSpeechRecognitionTask *)task
            didFinishSuccessfully:(BOOL)successfully {
        if (!successfully) {
            [self informDelegateError:task.error];
        } else {
            [self.delegate manager:self didRecognizeText:[self.buffer copy]];
        }
    }

Errors

While working with the application, I ran into the following errors:

Error: SessionId=com.siri.cortex.ace.speech.session.event.SpeechSessionId@439e90ed, Message = Timeout for 30000 ms

Error: SessionId=com.siri.cortex.ace.speech.session.event.SpeechSessionId@714a717a, Message = Empty recognition

- the first error arrived when the user finished a short dictation and stopped speaking, but the recognizer kept running;

- the second error occurred when the recognizer received no audio stream at all and the process was canceled by the user.

Both errors were caught in the delegate method:

    - (void)speechRecognitionTask:(SFSpeechRecognitionTask *)task
            didFinishSuccessfully:(BOOL)successfully {
        if (!successfully) {
            // The error is available in task.error
        }
    }

Conclusion

After a first acquaintance with Speech.framework, it became clear that it is still "raw". Much remains to be refined and tested. Still, it is not a bad start: there is a clear push toward artificial intelligence and toward working without conventional input devices. Combined with IoT, this opens up great opportunities for controlling gadgets, the home, and the car. For now we wait, practice, and keep investigating.


Source: https://habr.com/ru/post/304714/
