Web scraping updated data with Node.js and PaaS

This is the fourth article in a series about web scraping with Node.js:

- Web scraping with Node.js

- Web scraping on Node.js and problem sites

- Node.js web scraping and bot protection

- Web scraping updated data with Node.js

In the previous articles we covered fetching and parsing pages, recursively following links, organizing and fine-tuning the request queue, analyzing Ajax sites, handling some server errors, initializing sessions, and ways to get past bot protection.

This article covers scraping regularly updated data, tracking changes, and using cloud platforms to run scripts and store data. Particular attention is paid to separating the scraping itself from the processing of the finished data, and to what should be avoided when working with sites that update.

The goal is to show the whole process of creating, deploying and using a script, from stating the task to obtaining the final result. As usual, the example is a real task of the kind often found on freelance marketplaces.

Formulation of the problem

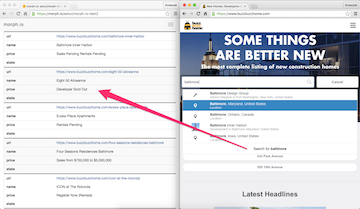

Suppose the customer wants to track real estate data on the Buzzbuzzhome site. He is only interested in the city of Baltimore. For each property offer, the customer wants to see a link to the offer page, the name of the property and the price information (or whatever is written on the site instead of the price).

The customer needs to be able to refresh the information periodically. More precisely, at any time he wants to get an Excel-compatible file that contains only current offers, in which new offers (those absent from the previously requested file) and offers changed since last time are marked. The customer is sure that only the price information will change.

The customer wants the script hosted online and operated through a web interface. He is willing to create a free account somewhere, run the script in the cloud himself (by pressing a button in the browser) and download the CSV. Data privacy does not concern him, so public accounts are acceptable.

Site analysis

On the Buzzbuzzhome website, the information we need can be reached in two ways: type 'Baltimore' into the search field on the main page (a pop-up hint 'Baltimore, Maryland, United States' will appear), or find Baltimore in the catalog (select 'Cities' in the main menu, select 'Maryland' from the list of states, then select 'Baltimore' from the list of cities). In the first case we get a map with markers and a list loaded via Ajax, and in the second a plain list of links.

The fact that the site has a simple old-school catalog is a stroke of luck. Without it, we would have to overcome a number of difficulties, and I don't mean analyzing Ajax traffic, but the data itself. The list next to the map contains, besides the Baltimore offers, a lot of garbage that simply happened to fall within the map area. This is because the search is performed by geographic coordinates rather than by address. Keep in mind, too, that coordinate-based search results often omit objects whose address is inexact or incomplete (even if this site does not do so now, there is no guarantee it won't in the future). The garbage can simply be filtered out by address, but there is no single right answer for the missing objects, so we would have to clarify with the customer what to do.

Fortunately, the site's catalog has a separate page listing all objects with a Baltimore address. The pages of individual properties are easy to parse (at least the name and price information).

Data acquisition

If you don't think about updating the data, the task is extremely simple. It is even simpler than in the first article, since there is no recursive link traversal. We get the list of links to real estate offers, put them in a queue, parse each offer page and collect an array of results. For example, like this:

```javascript
var needle = require('needle');
var cheerio = require('cheerio');
var tress = require('tress');
var resolve = require('url').resolve;

var startURL = 'https://www.buzzbuzzhome.com/city/united-states/maryland/baltimore';
var results = [];

// Request queue: work() is called for every URL pushed into it
var q = tress(work);
q.drain = done; // called once the queue has been emptied

start();

// Load the catalog page and queue a link to every offer
function start(){
    needle.get(startURL, function(err, res){
        if (err) throw err;
        var $ = cheerio.load(res.body);
        $('.city-dev-name>a').each(function(){
            q.push(resolve(startURL, $(this).attr('href')));
        });
    });
}

// Load one offer page and extract the URL, name and price information
function work(url, cb){
    needle.get(url, function(err, res){
        if (err) throw err;
        var $ = cheerio.load(res.body);
        results.push([
            url,
            $('h1').text().trim(),
            $('.price-info').eq(0).text().replace(/\s+/g, ' ').trim()
        ]);
        cb();
    });
}

function done(){
    // do something with the results array,
    // for example print it to the console
    console.log(results);
}
```

This way you can make sure that we are able to get the right data. For that, we do not even need to know what we will do with the data next. What matters is that in our case there is little data, so it can be processed all at once rather than row by row.
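The two small transformations applied to the raw page data in the script above (resolving relative links and collapsing whitespace in the price text) can be tried in isolation. This is a minimal sketch that uses only the Node.js standard library; the helper names `absolutize` and `normalizeText`, the sample href and the sample price text are made up for illustration, and the WHATWG `URL` class is used here in place of `url.resolve`.

```javascript
// Sketch: the two small transformations the scraper applies to raw page data.
// The href and the price text below are made-up sample values.
const { URL } = require('url');

const startURL = 'https://www.buzzbuzzhome.com/city/united-states/maryland/baltimore';

// Turn a (possibly relative) href from the catalog page into an absolute URL.
function absolutize(href, base) {
  return new URL(href, base).href;
}

// Collapse runs of whitespace (newlines, tabs, indentation) into single spaces.
function normalizeText(text) {
  return text.replace(/\s+/g, ' ').trim();
}

console.log(absolutize('/us/sample-development', startURL));
console.log(normalizeText('\n   From\n\t$250,000  \n'));
```

Normalizing the price text matters for change tracking: if the whitespace were left as is, a purely cosmetic change in the page markup could look like a price change.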

Saving data

A detailed comparison of hosting options such as a VPS, a PaaS (like Heroku) or shared hosting with Node.js support can probably be skipped; plenty has been written about that already. Let's start right away with the fact that there are specialized PaaS solutions for scrapers that can significantly reduce the effort. These are not “universal scrapers” that only need their parsing fine-tuned, such as screen-scraper, but full-fledged platforms for running your own scripts.

Until recently ScraperWiki was the leader in this niche, but that service has now been switched to read-only mode and is gradually turning into something else. I would call Morph.io the new leader. It is convenient, free and very easy to deploy to. Morph.io users are asked to stay within 512 megabytes of memory and 24 hours of run time, but if that is not enough, you can try to negotiate individually.

The service has many advantages: a ready-made web interface tailored to scraping, a convenient API, automatic daily runs, webhooks, alerts and so on. Its main disadvantage is the lack of private accounts. Passwords and the like can be kept in secret environment variables, but the script itself and the resulting data are visible to everyone.

(There has already been an article about Morph.io on Habr, though in the context of Ruby.)

On Morph.io data is stored in SQLite, so we don't have to think about the choice of storage. If you create a data.sqlite database in the current directory, it will be available for download from the scraper page. In addition, the table named data will be shown on the scraper page (first 10 rows) and will be available for download as CSV.

If we ignore change tracking for now, saving the data can be implemented like this:

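```javascript
var sqlite3 = require('sqlite3').verbose();

// ... the rest of the script is the same as in the previous example

function done(){
    var db = new sqlite3.Database('data.sqlite');
    // serialize() makes the statements below run one after another
    db.serialize(function(){
        // Recreate the table from scratch on every run
        db.run('DROP TABLE IF EXISTS data');
        db.run('CREATE TABLE data (url TEXT, name TEXT, price TEXT)');
        var stmt = db.prepare('INSERT INTO data VALUES (?, ?, ?)');
        for (var i = 0; i < results.length; i++) {
            // each result is an array [url, name, price]
            stmt.run(results[i]);
        }
        stmt.finalize();
        db.close();
    });
}
```

You can, of course, use an ORM instead; that is a matter of taste.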

Cloud Deployment

Morph.io uses GitHub for authorization, and a scraper is created from a Git repository hosted there. So you can create a GitHub account for the customer, upload the necessary files to it, and then he can create a scraper on Morph.io, run it and download the data.

It is enough to upload three files to the repository: scraper.js, package.json and README.md. The text of README.md will be displayed on the scraper's main page (just like on GitHub). In package.json it is enough to list the dependencies. If the default version of Node.js does not suit you, you can specify one of the versions supported on Heroku. On the first run the service works out from the extension of the main file that the scraper is written in Node.js, sets up the environment and installs the dependencies. If the Morph.io server is overloaded, the script is queued, but usually not for long.

Tracking changing data

From the scraper's point of view, tasks that track changing data fall into simple and complex ones.

Simple tasks are those where the source data changes noticeably more slowly than it is scraped. Even if the requests have to be optimized and parallelized, the task is still simple. Also, in a simple task the next version of the changing data can realistically be scraped in a single run of the script. Perhaps not every time, but the key point is that there is no need to implement resuming. Simple tasks assume that there are no conflicts, or that the customer does not particularly care about them. In a simple task you can obtain the next version of the data by web scraping and then calmly do whatever is needed with it.

Complex tasks are those where you have to give up scraping and choose a different engineering solution.

Our task is simple. Even with a single thread, the data can be fetched in a minute or two. Updates are needed no more than a few times a day (this should be confirmed with the customer, but it is usually the case). You can simply save the old and new versions, for example in two different tables, and merge them.

Let's add another table (call it new). It will be created, filled with the scraping results, merged into data and then dropped. To the data table we add a state column, which will hold, for example, 'new' if the entry is new and 'upd' if the entry is old but has changed since the previous version. The specific labels are not important as long as they read well in the final CSV. Before merging, the whole column is reset to NULL.

The tables are merged with three SQL queries.

```sql
-- 1. Add offers that appear only in the new version and mark them 'new'
INSERT INTO data
SELECT url, name, price, 'new' AS state
FROM new
WHERE url IN (
    SELECT url FROM new
    EXCEPT
    SELECT url FROM data
);

-- 2. Remove offers that are no longer on the site
DELETE FROM data
WHERE url IN (
    SELECT url FROM data
    EXCEPT
    SELECT url FROM new
);

-- 3. Update the price where it has changed and mark the row 'upd'
UPDATE data
SET state = 'upd',
    price = (
        SELECT price FROM new
        WHERE new.url = data.url
    )
WHERE url IN (
    SELECT old.url
    FROM data AS old, new
    WHERE old.url = new.url
      AND old.price <> new.price
);
```

Those who chose Node.js “so as not to learn a second language” may want to keep SQL to a minimum and implement the main logic in JavaScript. This is largely a holy-war question, but one point is worth considering: on low-powered hosting a script is much more stable when the data is handled by a language designed specifically for working with data. The queries are not particularly complex, and the customer will be happier if the script crashes less often.
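For comparison, here is roughly what the same three-step merge does, written in plain JavaScript. It is only an illustrative sketch, not part of the article's script: the function name `mergeSnapshots` and the `{ url, name, price }` row shape are assumptions, and unlike the SQL version the output follows the order of the new scrape rather than the order of the old table.

```javascript
// Sketch of the same merge in plain JavaScript (hypothetical helper).
// oldRows: rows kept from the previous run; newRows: rows from this scrape.
// Each row is { url, name, price }; the result also carries a `state` field.
function mergeSnapshots(oldRows, newRows) {
  const oldByUrl = new Map(oldRows.map(row => [row.url, row]));

  // Offers missing from newRows are simply not returned (the DELETE step).
  return newRows.map(row => {
    const previous = oldByUrl.get(row.url);
    if (!previous) {
      // The URL was absent from the previous version (the INSERT step).
      return { url: row.url, name: row.name, price: row.price, state: 'new' };
    }
    // As in the SQL version, only the price is taken from the new scrape
    // (the UPDATE step); unchanged rows get state null.
    const changed = previous.price !== row.price;
    return {
      url: previous.url,
      name: previous.name,
      price: row.price,
      state: changed ? 'upd' : null
    };
  });
}
```

Note that this version holds both snapshots in memory at once, which is fine for a few dozen offers but is exactly the kind of load the SQL approach moves out of the script.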

The corresponding script fragment looks like this:

```javascript
var sqlite3 = require('sqlite3').verbose();

// ... the rest of the script is the same as in the previous example

function done(){
    var db = new sqlite3.Database('data.sqlite');
    db.serialize(function(){
        // Temporary table holding the results of the current run
        db.run('DROP TABLE IF EXISTS new');
        db.run('CREATE TABLE new (url TEXT, name TEXT, price TEXT)');
        var stmt = db.prepare('INSERT INTO new VALUES (?, ?, ?)');
        for (var i = 0; i < results.length; i++) {
            stmt.run(results[i]);
        }
        stmt.finalize();

        // Persistent table; reset the marks left by the previous run
        db.run('CREATE TABLE IF NOT EXISTS data (url TEXT, name TEXT, price TEXT, state TEXT)');
        db.run('UPDATE data SET state = NULL');

        // Merge: add new offers, delete vanished ones, update changed prices
        db.run("INSERT INTO data SELECT url, name, price, 'new' AS state FROM new " +
            "WHERE url IN (SELECT url FROM new EXCEPT SELECT url FROM data)");
        db.run("DELETE FROM data WHERE url IN (SELECT url FROM data EXCEPT SELECT url FROM new)");
        db.run("UPDATE data SET state = 'upd', price = (SELECT price FROM new WHERE new.url = data.url) " +
            "WHERE url IN (SELECT old.url FROM data AS old, new WHERE old.url = new.url AND old.price <> new.price)");

        db.run('DROP TABLE new');
        db.close();
    });
}
```

Such a script can be pushed to GitHub, added to Morph.io and run. For readability it would be worth moving the query texts into separate string variables, but readability is not important to the customer.

The script described in this article is suitable only for small amounts of data. At the moment there are only about 25 real estate offers in Baltimore (on Buzzbuzzhome), and scraping them takes a couple of minutes. So if the script crashes because of a server error, it can simply be restarted, since nothing in the database changes until the scraping is complete.

At the same time, there are about a thousand offers for New York on the same site, so scraping takes 40-50 minutes, and the Buzzbuzzhome server is quite unreliable. For such a task we would have to add server error handling (the obvious approach: return the failed task to the queue and pause the scraping briefly, see the second article) so that the script does not crash and the customer does not have to restart it every hour. What you should not do is save partial scraping results in the database between restarts. In practice this can lead to some of the “updated” data being badly out of date.

Further, if the task were to track the entire Buzzbuzzhome catalog, it would make sense to scrape and update the data for each city separately. We would have to keep, in a separate table (or elsewhere), a record of when each city was last updated. That is too big a task for Morph.io (it would not fit within the limits), so the script would have to be deployed in a more powerful cloud (with a web interface written for it). Scraping the whole catalog would take days, and the data would go stale faster than it could be scraped. No real customer would accept that, so the work would have to be heavily parallelized. Exactly how depends on the specific requirements, but it would certainly be necessary.
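The "return the failed task to the queue and pause" idea can be sketched without any particular queue library. The code below is an assumption-laden illustration, not the approach from the second article: `scrapeAll`, `sleep`, `fetchPage`, `pauseMs` and `maxAttempts` are hypothetical names, and `fetchPage` stands in for whatever function performs the real request and returns a Promise that rejects on a server error.

```javascript
// Sketch: re-queue a failed task and pause before continuing.
// fetchPage is a stand-in for the real HTTP request (hypothetical).
function sleep(ms) {
  return new Promise(resolve => setTimeout(resolve, ms));
}

async function scrapeAll(urls, fetchPage, { pauseMs = 1000, maxAttempts = 5 } = {}) {
  const queue = urls.map(url => ({ url, attempts: 0 }));
  const results = [];

  while (queue.length > 0) {
    const task = queue.shift();
    task.attempts += 1;
    try {
      results.push(await fetchPage(task.url));
    } catch (err) {
      // Give up entirely after too many failures; nothing has been saved yet.
      if (task.attempts >= maxAttempts) throw err;
      queue.push(task);      // return the failed task to the end of the queue
      await sleep(pauseMs);  // give the server a short rest
    }
  }
  return results;
}
```

Results are only returned once every page has been fetched, which matches the advice above: the database is touched after a complete run, never with partial results.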
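Choosing which city to refresh next from such a record could look like the sketch below. The record shape `{ city, updatedAt }` (with `updatedAt` as a millisecond timestamp, and 0 for a city that has never been scraped) and the function name `nextCityToUpdate` are hypothetical, chosen only to illustrate the idea.

```javascript
// Sketch: pick the city whose data was refreshed longest ago.
// records: [{ city: string, updatedAt: number }] (hypothetical shape)
function nextCityToUpdate(records) {
  if (records.length === 0) return null;
  return records.reduce((oldest, record) =>
    record.updatedAt < oldest.updatedAt ? record : oldest
  ).city;
}

console.log(nextCityToUpdate([
  { city: 'Baltimore', updatedAt: 300 },
  { city: 'New York', updatedAt: 100 },
  { city: 'Boston', updatedAt: 200 }
]));
```

With parallel workers, each worker would also have to mark a city as "in progress" before starting, so that two workers do not pick the same one.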

Conclusion

Finally, it is worth stressing that scraping regularly updated data, although it requires keeping data between runs, does not necessarily require deployment in the cloud. You can hand the same script to the customer together with instructions for installing and running it from the terminal on his operating system, and everything will work fine. You would only have to explain how to get a CSV out of SQLite, so it is better to add automatic export of the current version of the data. In that case, however, there is no reason left to use a database rather than, say, a JSON file. A real need for databases arises only when scraping huge amounts of data that do not fit in memory and are extremely inconvenient to keep in ordinary files. But that is a topic for a separate article.
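An automatic CSV export for the local variant can be quite small. The following is a minimal sketch with a hypothetical helper `toCsv`; it quotes fields the way Excel expects (fields containing commas, quotes or line breaks are wrapped in double quotes, and inner quotes are doubled) and assumes the rows are objects with `url`, `name`, `price` and `state` fields.

```javascript
// Sketch: turn an array of row objects into Excel-compatible CSV text.
function toCsv(rows, columns) {
  const escape = value => {
    const text = value === null || value === undefined ? '' : String(value);
    // Quote the field only when it contains a comma, a quote or a line break.
    return /[",\r\n]/.test(text) ? '"' + text.replace(/"/g, '""') + '"' : text;
  };

  const lines = [columns.join(',')]; // header row
  for (const row of rows) {
    lines.push(columns.map(column => escape(row[column])).join(','));
  }
  return lines.join('\r\n') + '\r\n';
}

console.log(toCsv(
  [{ url: 'https://example.com/1', name: 'Sample, "Tower"', price: 'From $250,000', state: 'new' }],
  ['url', 'name', 'price', 'state']
));
```

The returned string can then be written out with `fs.writeFileSync('data.csv', ...)` at the end of each run, so the customer always has a current file next to the script.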

Source: https://habr.com/ru/post/304708/
