Spark Summit 2016: review and experience

In June, one of the world's largest big data and data science events took place: Spark Summit 2016 in San Francisco. The conference brought together 2,500 people, including representatives of the largest companies (IBM, Intel, Apple, Netflix, Amazon, Baidu, Yahoo, Cloudera, and so on). Many of them use Apache Spark; among them are open-source contributors and vendors of their own big data / data science products built on Apache Spark.

At Wrike, we are actively using Spark for analytics tasks, so we could not miss the opportunity to learn firsthand what is new in this market. We are pleased to share our observations.

Schedule, video, slides: https://spark-summit.org/2016/schedule/

Twitter: https://twitter.com/spark_summit/status/741015491045642241

Trends

A brief summary of what was said at the conference looks something like this:

Spark 2.0 has been announced. The speakers from Databricks (the conference organizers and the main developers of the product) talked about what the new release brings. Several talks went into the details of these changes: an improved query planner that optimizes DataFrame code even when it is not written in the best way, the new Spark Streaming, and further development of SparkSQL.

Streaming. The trend was confirmed once again: modern business is moving towards real-time processes, analytics, ETL and so on; it is becoming more reactive and needs fast tools.

Distributed machine learning is getting more and more attention: complex deep learning models need a lot of data. The conference discussed how Spark can speed up training.

Several talks addressed the problems of cloud infrastructure providers for analytics and machine learning: how to get users to trust the cloud and how to make it cheap and reliable.

Many speakers stressed that Spark is becoming a system that brings together a huge number of data sources. As a result, business analysts and product analysts often use it as a tool for ETL and reporting, so significant effort is now going into improving SparkSQL.

And, as usual at large conferences, companies compared their contributions to the community and their number of open-source commits.

A little about personal experiences

We were worried that, with so many participants, there would be crowds at the check-in counters, but the process was well thought out and automated: you walk up to a check-in machine, enter your first and last name, and after a short wait your badge is printed. Badge holders and a voucher for a T-shirt and other small items were available from the summit staff. In general, we have no complaints about the organization: the navigation was well thought out, and there was plenty of information about what was happening where. The only crush was at lunch on the first day, but standing in line was a good opportunity to talk with other participants and discuss the talks. And of course, coffee points with drinks and snacks were available throughout the conference.

Between interesting talks, we could go to the exhibition hall and look at products built on Spark or related to it in some way. My colleague and I were physically unable to attend every talk. Fortunately, videos and slides of all the talks are freely available.

A few words about the exhibition. I was amazed by how developed the ecosystem is and how many analytics products are on the market today. Commercial, open-source and shareware solutions, something for every taste.

The exhibitors included storage vendors (promising faster data access), consulting companies (promising help with deploying Spark or applying machine learning to business), private cloud providers (tailored to Hadoop / Spark), various integrators, visualization systems, graphical ETL programming tools, cloud platforms, machine learning libraries and even tools for debugging and profiling Spark applications. In other words, a whole world around Spark! Below are a few solutions that especially caught our attention.

Interesting solutions

MemSQL — a scalable in-memory database for working with relational objects — promised particular efficiency with numeric data.

Couchbase is a non-relational (NoSQL) database. We also learned that there is an enterprise solution based on the Redis server.

The H2O machine learning library and its integration with Spark.

Of course, we could not walk past the new products from Databricks, Intel and IBM. With the first, everything is fairly obvious, but we had not heard of Intel's TAP solution before. IBM's Bluemix cloud is widely known. This time they were actively promoting the motto “Learn. Create. Collaborate.”

The presentation of the ZoomData visualization system was quite interesting. The system connects to a huge number of sources and can display data in real time. At first glance, the solution is very similar to Tableau with a live connection, but the guys have worked out the details in their own way.

We also found the product from Stratio interesting. It is software that you can download and install, so the monetization scheme is not very clear: either paid support is planned, or there are paid versions of the platform with more features. As far as I understood from the description, you immediately get a large distributed analytics system for free, with monitoring, management and so on.

There was also an interesting presentation by Mesosphere: they presented the open-source version of DC/OS, an “operating system” for the data center. In essence, it is Mesos, ZooKeeper, Marathon and Consul in one package with a nice GUI that combines machines into a single pool of resources. They also have a paid enterprise version. It is a rather interesting product for distributed computing and modern cloud platforms.

At the summit we also met the guys who make Cray supercomputers; they ship servers with analytics solutions, including Spark, preinstalled by default.

Bright presentations

- Riak TS from Basho is a NoSQL key-value store oriented towards time series data. It promises resiliency (100% data availability), autoscaling, data co-location, SQL support with optimized range queries in particular, support for Java, Python, Node.js and other popular languages, multi-cluster replication and, of course, a connector to Apache Spark.

- GridGain (our compatriots, by the way) presented products built on their own open-source solution, Apache Ignite. In short, the technology has two main components: (1) In-Memory MapReduce and (2) the Ignite File System (an in-memory file system). It can be very useful for infrastructure built on components of the Hadoop ecosystem, since it promises to speed up computations by hundreds of times with little or no code change, works with the YARN cluster manager and is compatible with HDFS. Another useful case for the Apache Ignite In-Memory Data Fabric is a shared in-memory store that lets Apache Spark jobs exchange data an order of magnitude faster.

- There were also curious projects that promised analytics tools without writing a line of code. One is Project Seahorse - Visual Spark from deepsense.io: a UI application à la Matlab Simulink or LabVIEW, a graphical tool for building Spark pipelines out of visual blocks.

We also heard calls to use Apache Spark as a back-end engine for web services that have, or expect, a heavy flow of data which needs analytical processing, with the results used either directly as the product's output for the user or as an intermediate layer for downstream services. One such project is EclairJS. In essence, it is a set of JS wrappers over the Apache Spark API that let you build computation pipelines in JavaScript (Node.js), including writing mappers and reducers in JS syntax.

About architecture

There was no separate talk about this product, but Kafka flashed by on almost every streaming slide and was simply taken for granted. A typical streaming diagram shows many sources, all flowing into Kafka and from there into Spark Streaming, with microservices or something else optionally attached.

Mesos: we were surprised by the huge amount of both commercial and open-source software built on this cluster manager. Stratio, Mesosphere, Cray and others have products that already work, even though the technology itself is still raw. Many noted that it has plenty of problems, but Mesos looks like a very serious bid for the future: it is a universal cluster manager that is easy to use, deploy and customize. Talk on this topic: https://youtu.be/LG5HE9gI07A

Baidu and Yahoo talked about how they built deep learning on top of Spark. A parameter server appeared in both architectures; perhaps it is a project in its own right. For example, vectors built with word2vec were stored on this server.

Python and Spark

Python on Spark is slow if you use lambdas and Python objects. The problem is well known, and there are several ways around it. One is to use only the DataFrame API, where Python merely specifies which functions to apply and the actual work is done by Spark's Java (Scala) functions. But as soon as you add a lambda function, everything breaks down: the data is first deserialized by Spark, then serialized and transferred to the Python side, where it is deserialized, processed, serialized again and sent back. The root of the problem is that Python objects are not understood by Scala and vice versa. Various solutions were proposed, from writing functions in Scala and calling them from Python to embedding the interpreter more deeply in Spark, for example with Jython. But the Apache Arrow project looks the most ambitious and promising (video of the talk: https://youtu.be/abZ0f5ug18U). The idea is to implement an object format that is common to all languages, so that data can be exchanged without loss, conveniently and quickly. We believe, we wait and, whenever possible, we help!
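To make the round trip described above more tangible, here is a minimal pure-Python sketch. It does not use Spark at all: `engine_builtin` and `python_lambda_round_trip` are hypothetical names, and `pickle` is only a stand-in for whatever serialization happens between the engine and the Python worker. The point is simply to count the extra steps a lambda introduces.

```python
import pickle


def engine_builtin(rows):
    """Stand-in for a built-in DataFrame function: data never leaves the engine."""
    return [r * 2 for r in rows]


def python_lambda_round_trip(rows, func):
    """Stand-in for a Python lambda: the data crosses the process boundary twice."""
    stats = {}
    # 1. The engine serializes the rows and ships them to the Python worker.
    payload = pickle.dumps(rows)
    stats["bytes_sent"] = len(payload)
    # 2. The worker deserializes them into Python objects and applies the lambda.
    results = [func(r) for r in pickle.loads(payload)]
    # 3. The worker serializes the results and sends them back.
    reply = pickle.dumps(results)
    stats["bytes_returned"] = len(reply)
    # 4. The engine deserializes the reply.
    return pickle.loads(reply), stats


rows = list(range(1000))
fast = engine_builtin(rows)
slow, stats = python_lambda_round_trip(rows, lambda x: x * 2)

# Same answer, but the lambda path paid for two serializations
# and two deserializations of the whole data set.
print(fast == slow, stats)
```

Both paths compute the same result; the second one just pays for copying every row across the boundary and back, which is the overhead a shared in-memory format aims to remove.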

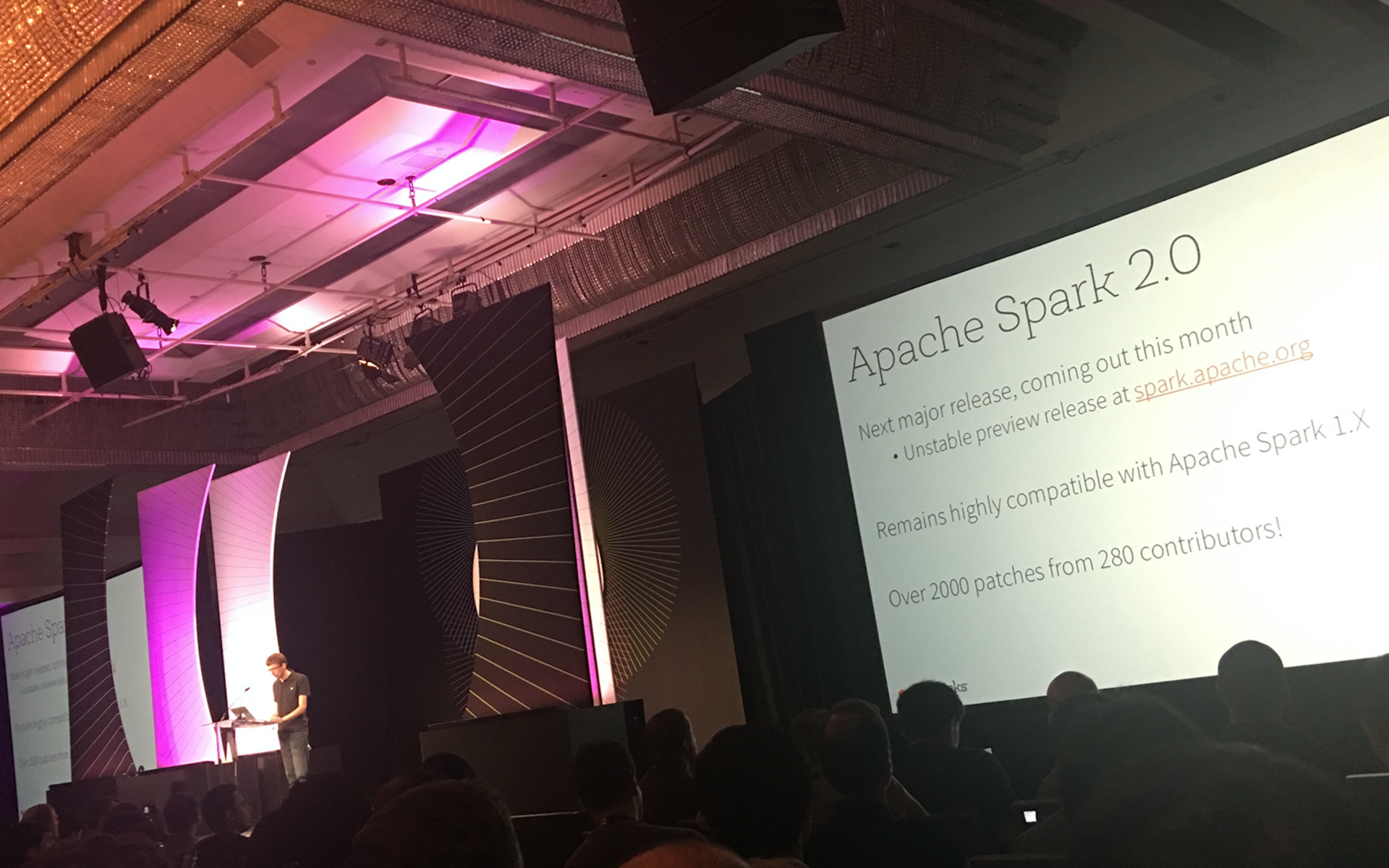

Matei Zaharia, CTO of Databricks and one of the creators of Apache Spark, talks about the new major release

Spark 2.0

A new version of Spark with a number of improvements has been promised for June. One of them is the new Catalyst query planner: it builds an execution tree and tries to optimize it by computing constants in advance, pushing filters down to a lower level and applying them, where possible, before joins. It promises a performance gain of almost 70%, but it is not entirely clear in which cases.
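The two optimizations mentioned here, computing constants in advance and moving filters below joins, can be illustrated with a toy example. This is not how Catalyst is implemented; the tuple-based expression and plan shapes and the function names `fold_constants` and `push_filter_below_join` are assumptions made purely for illustration.

```python
import operator

OPS = {"add": operator.add, "mul": operator.mul}


def fold_constants(expr):
    """Replace sub-expressions made only of literals with their computed value."""
    if expr[0] in ("lit", "col"):
        return expr
    op = expr[0]
    left = fold_constants(expr[1])
    right = fold_constants(expr[2])
    if left[0] == "lit" and right[0] == "lit":
        return ("lit", OPS[op](left[1], right[1]))
    return (op, left, right)


def push_filter_below_join(plan):
    """Move a filter that touches a single table underneath the join."""
    if plan[0] == "filter" and plan[3][0] == "join":
        _, table, predicate, (_, left, right) = plan

        def wrap(side):
            # Only the scan of the filtered table gets the filter attached.
            if side == ("scan", table):
                return ("filter", table, predicate, side)
            return side

        return ("join", wrap(left), wrap(right))
    return plan


# price * (1 + 2)  becomes  price * 3
folded = fold_constants(("mul", ("col", "price"), ("add", ("lit", 1), ("lit", 2))))

# filter(users.age > 30, join(users, orders))
# becomes join(filter(users.age > 30, users), orders)
plan = ("filter", "users", "age > 30",
        ("join", ("scan", "users"), ("scan", "orders")))
optimized = push_filter_below_join(plan)

print(folded)
print(optimized)
```

In the rewritten plan the join sees only the rows that survive the filter, which is why applying filters before joins tends to pay off.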

A new Streaming API was also introduced. Many speakers said that ETL should be close to real time and streaming, and that API changes are a pain. Now a data stream is handled like an infinite DataFrame: you just read the stream and perform standard operations, and you can even write SQL!

Example:

St = sqlContext.read.format('json').open('<path>')  # was

St = sqlContext.read.format('json').stream('<path>')  # has become

And then everything is as usual, you can even use SQL. Profit!
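The "infinite DataFrame" idea can be sketched without Spark: the same query is written once, and the engine keeps its result up to date as new micro-batches arrive. The class `RunningQuery` and the function `count_by_action` below are hypothetical names for a pure-Python illustration, not part of any Spark API. Note also that the calls in the example above follow the conference slides; the released API may differ.

```python
from collections import Counter


def count_by_action(events):
    """The 'query': count events per action. Works on any iterable of dicts."""
    return Counter(e["action"] for e in events)


class RunningQuery:
    """Keeps the query result current as new micro-batches arrive."""

    def __init__(self):
        self.result = Counter()

    def process_batch(self, batch):
        # Counter.update adds the new counts to the existing ones.
        self.result.update(count_by_action(batch))
        return self.result


events = [
    {"action": "open"}, {"action": "click"},
    {"action": "open"}, {"action": "close"},
    {"action": "click"}, {"action": "open"},
]

# Batch mode: the whole data set is available up front.
batch_result = count_by_action(events)

# Streaming mode: the same data arrives two events at a time.
query = RunningQuery()
for i in range(0, len(events), 2):
    query.process_batch(events[i:i + 2])
stream_result = query.result

print(batch_result == stream_result)
```

The query logic lives in one place, and only the way data is fed in changes, which is what makes a unified batch and streaming API attractive for ETL.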

Full support for the SQL 2003 standard was promised in version 2.0 or 2.1. As noted earlier, Spark is also used in classic analytics, so the developers are working to strengthen the SQL interface.

Video reports:

Apache Spark 2.0 - https://youtu.be/fn3WeMZZcCk

Structuring Spark: Dataframes, Datasets And Streaming - https://youtu.be/1a4pgYzeFwE

A Deep Dive Into Structured Streaming - https://youtu.be/rl8dIzTpxrI

Deep Dive Into Catalyst: Apache Spark 2.0's Optimizer - https://youtu.be/UBeewFjFVnQ

Machine learning

We would like to mention several Machine Learning presentations.

- MLlib 2.0 introduced a persistence API, that is, the ability to save trained models written in one language (for example, Python) and use them in any other language supported by Apache Spark (for example, Java). Notably, the same logic works for pipelines as well. Without exaggeration, this is a breakthrough, since sharing models between data science teams and developers is one of the most painful topics in the industry. Also, almost all MLlib algorithms now support the DataFrame-based API.

- The contributors of H2O, an ML framework for large amounts of data, told us about Sparkling Water, a tool for easy integration of Apache Spark and H2O. According to the developers, it combines the power of parallel processing of large data sets with a powerful machine learning engine on that data. Judging by their presentation, working with the Sparkling Water API is not much different from working with Spark. For example, converting an H2OFrame back to a Spark DataFrame or RDD requires no data duplication at all; it simply creates a DataFrame or RDD wrapper. Converting an RDD / DataFrame to H2O, on the other hand, does require duplicating the data. In general, H2O and MLlib have non-overlapping functionality, which can be useful in many cases.

A little about the atmosphere

When you see so many people involved in big data, machine learning, analytics, science and so on, it is very motivating: you want to keep up and move forward. The air was full of talk that AI is the new electricity (here we refer to Andrew Ng's talk) and that those who do not master it will be left behind like a manufactory relying on manual labor; that soon connecting a business to AI will be like plugging into a socket; that big data will be able to answer any question; and that Spark is the cornerstone of future smart systems.

Andrew Ng, the well-known co-founder of Coursera, convinces us that AI is the new electricity.

There was a lot of talk that the cloud is fast and cheap. That is true if your business is built on a recommendation system, like, say, Netflix. In that case you need an army of Spark engineers and a cloud where you can deploy infrastructure in a matter of hours. But if you are working on a research project and analytics is used only for internal needs, it is worth thinking twice. Almost everyone who said that the future belongs to Spark did not forget to mention that they have a solution that “simplifies life”. So it is important not to fall for the marketing bait and buy something unnecessary. But these areas are worth studying in any case!

What we learned from this event

We at Wrike are on the right track. Having met a number of successful large companies and talked with them about their analytical infrastructure and technologies, we were convinced that in many respects we use the same solutions and have chosen the right path.

Spark is developing actively, and in the near future it will become much easier to run in production.

We made valuable contacts with people who work on similar problems. The networking was a success.

We surveyed the market in this area: if there is something we cannot build ourselves, we know whom to buy it from.

We broadened our technical expertise a little.

We came back charged with new ideas and plans.

What we miscalculated

You should prepare for such events and, at a minimum, think about how you will exchange contacts. We forgot to print business cards, so we had to type our email into other people's phones, take badges and so on.

It is also worth looking through the list of exhibitors in advance and deciding what to ask them; some products may be of interest to other departments of your company. Likewise, if you study the materials in advance, you can prepare questions for the talks.

Why and who needs to go to the summit

This is a great opportunity to take a look at the big data market, built around Spark. If you are considering buying a solution, this is the perfect place to see what is on the market and compare.

You can make useful connections with the Spark community.

This is a great opportunity to ask how things work in other companies.

As an afterword, I would like to add that we talked in person with the main distributors of Apache Spark, the guys from Databricks. They showed us a tool that is like Jupyter on steroids for working with Apache Spark. These are IPython-style notebooks that run in the cloud, with a well-developed system for monitoring the status of individually running cells (jobs), advanced logic for sharing notebooks and user management. From the same tab, you can change the Spark version, languages and cloud (cluster) settings. The Databricks product also has a free edition. The guys also plan to add customizable sharing of ready-made, auto-updating dashboards.

If we forgot to mention something, ask questions; we will be happy to answer in the comments.

Source: https://habr.com/ru/post/304570/