How HTTP/2 will make the web faster

Hypertext Transfer Protocol (HTTP) is the simple, limited, and frankly boring protocol that underlies the World Wide Web. In essence, HTTP lets you read data from network-connected resources, and for decades it has acted as a fast, secure, and high-quality “intermediary.”

In this overview we will talk about the uses and benefits of HTTP/2 for end users, developers, and organizations that want to adopt modern technology. Here you will find the essential information about HTTP/2, from the basics to more advanced topics.

Contents:

- What is HTTP/2?

- What was HTTP/2 created for?

- What was wrong with HTTP 1.1?

- HTTP/2 features

- How does HTTP/2 work with HTTPS?

- Differences between HTTP 1.x, SPDY and HTTP/2

- The main advantages of HTTP/2

- HTTPS, SPDY and HTTP/2 performance comparison

- Browser HTTP/2 support and availability

- How to start using HTTP/2?

What is HTTP/2?

HTTP was developed by the creator of the World Wide Web, Tim Berners-Lee. He kept the protocol simple so that it could provide high-level data transfer functions between web servers and clients.

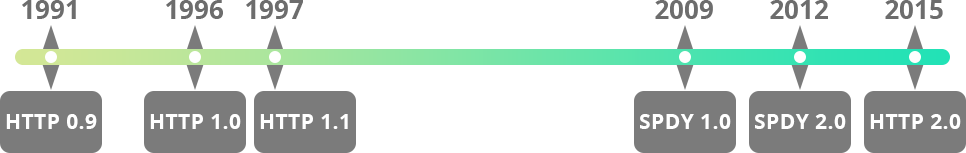

The first documented version, HTTP 0.9, was released in 1991. HTTP 1.0 followed in 1996, and HTTP 1.1 was released a year later with minor improvements.

In February 2015, the HTTP Working Group of the Internet Engineering Task Force (IETF) finalized the second major version of the protocol, HTTP/2. In May of the same year, the specification was officially standardized as a response to SPDY, an HTTP-compatible protocol created by Google. The "HTTP/2 vs. SPDY" comparison comes up throughout this article.

What is a protocol?

Before comparing HTTP/2 with HTTP/1, let's recall what the term “protocol”, used throughout this article, actually means. A protocol is a set of rules governing how data is transferred between clients (for example, web browsers requesting data) and servers (the computers that hold this data).

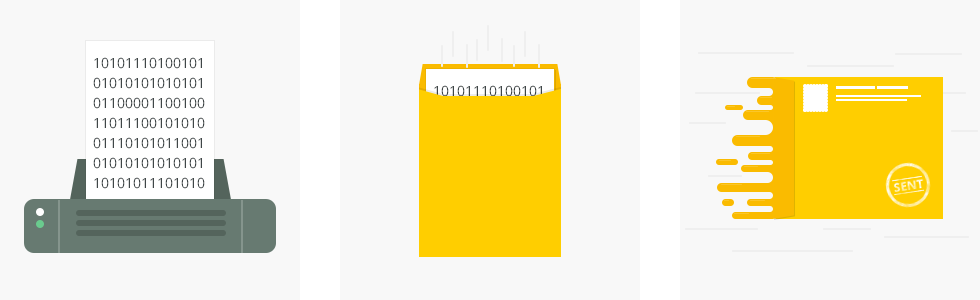

Protocols usually consist of three main parts: the header, the payload, and the footer. The header comes first and contains the source and destination addresses, as well as other information such as size and type. The payload is the information actually being transmitted. The footer is transmitted last and acts as a control field for routing client-server requests to their recipients. Together, the header and footer help avoid errors in the transfer of the payload.

To draw an analogy with a regular paper letter: the text (payload) is placed in an envelope (header) bearing the recipient's address. Before sending, the envelope is sealed and a postage stamp (footer) is affixed. Of course, this is a simplified view. Transferring digital data as zeros and ones is not so simple, and it takes new approaches to cope with the growing technological challenges that come with the explosive growth of Internet use.

HTTP initially consisted of two main commands:

GET - retrieves information from the server,

POST - submits data to the server for storage.

This simple and boring set of commands, fetching data and submitting it, formed the basis of a number of other network protocols. The protocol itself is one more step towards a better user experience, and HTTP/2 is the next stage in its development.

What was HTTP/2 created for?

Since its inception in the early 1990s, HTTP has undergone serious revision only a few times. The latest version before HTTP/2, HTTP 1.1, had been in use for over 15 years. In an era of dynamically updated content, resource-intensive multimedia formats, and a relentless push for better web performance, the old protocol has become obsolete. All these trends call for significant changes, which HTTP/2 provides.

The main goal of developing a new version of HTTP was to provide three properties that are rarely associated with a single network protocol on its own, without additional network technologies: simplicity, high performance, and robustness. These properties are achieved by reducing latency in processing browser requests through measures such as multiplexing, compression, request prioritization, and server-initiated data transfer (Server Push).

HTTP/2 also adds mechanisms such as flow control, upgrade, and error handling, which help developers build fast and stable web applications. Taken together, these mechanisms let servers efficiently send clients more content than they requested, so the browser does not have to keep requesting resources until the page is fully loaded. For example, Server Push allows the server to send all of a page's content at once, except for what is already in the browser cache. Protocol overhead is minimized by efficiently compressing HTTP headers, which improves performance for every browser request and server response.

HTTP/2 was designed to be backward compatible with HTTP 1.1. The introduction of HTTP/2 is expected to give impetus to the further development of the protocol.

Mark Nottingham, chair of the IETF HTTP Working Group and member of the W3C TAG:

“... we are not changing all of HTTP: the methods, status codes, and most of the headers remain the same. We only reworked it to make it more efficient to use, so that it is gentler on the Internet ...”

It is important to note that the new version of HTTP arrives as an extension of its predecessor and is unlikely to replace HTTP 1.1 in the foreseeable future. An HTTP/2 implementation does not automatically support every type of encryption available in HTTP 1.1, but it definitely encourages the use of more attractive alternatives, or an update of encryption compatibility in the near future. Still, in the comparisons "HTTP/2 vs. HTTP 1" and "SPDY vs. HTTP/2", the hero of this article comes out on top in performance, security, and reliability.

What was wrong with HTTP 1.1?

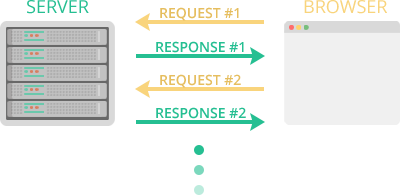

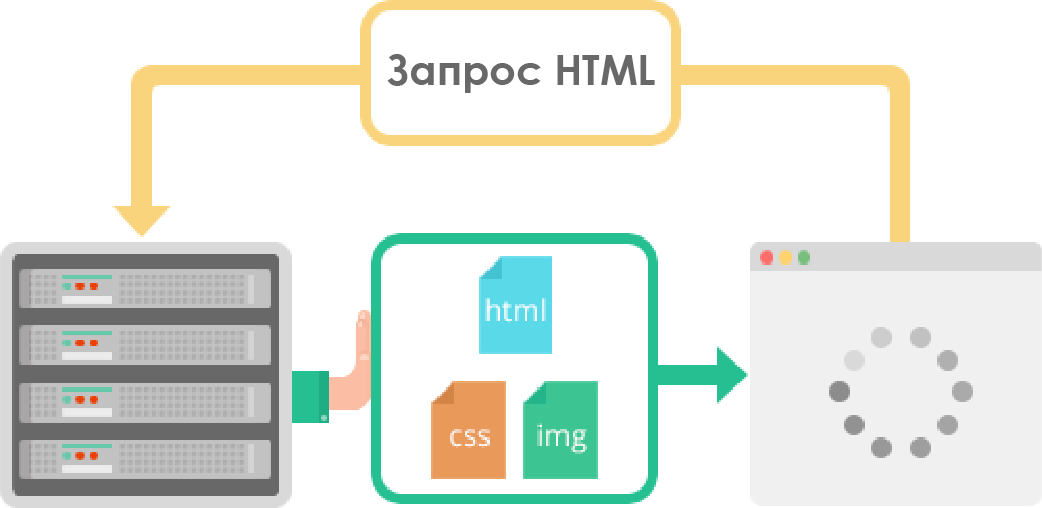

HTTP 1.1 can process only one request at a time per TCP connection, so the browser has to open several connections to handle several requests in parallel.

But using multiple connections in parallel leads to TCP congestion and, as a result, to unfair monopolization of network resources. Browsers that open multiple connections for additional requests take the lion's share of the available network resources, which lowers network performance for other users.

Sending multiple requests from the browser also duplicates data on the wire, which in turn requires additional protocols to accurately extract the necessary information at the end nodes.

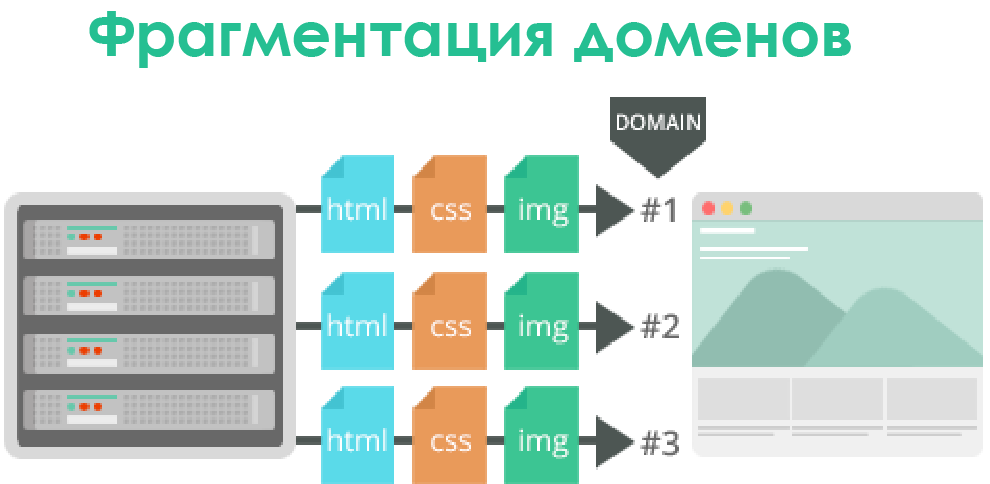

The industry effectively had to hack around these restrictions with techniques such as domain sharding, concatenation, inlining, and image spriting, among others. The inefficient use of the underlying TCP connections in HTTP 1.1 leads to poor resource prioritization and, as a result, to exponential performance degradation as web applications grow in complexity, functionality, and size.

The evolving web has outgrown the capabilities of HTTP. Designed many years ago, the key properties of HTTP 1.1 leave several unpleasant loopholes that harm the security and performance of websites and applications.

For example, with a Cookie Hack, attackers can reuse a previous session to gain unauthorized access to a user's password. The reason is that HTTP 1.1 does not provide endpoint authentication tools. Realizing that attackers would look for similar loopholes in HTTP/2, its developers tried to strengthen the protocol's security with an improved implementation of TLS features.

HTTP/2 features

Multiplexed streams

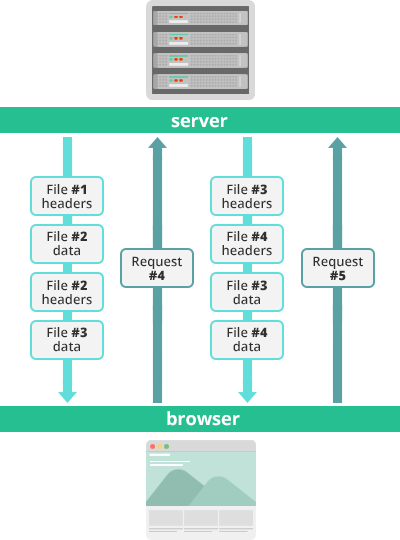

A two-way sequence of frames that the client and server exchange over HTTP/2 is called a “stream”. In earlier versions of HTTP, only one stream could be transmitted at a time, with a slight delay between streams. Transmitting large volumes of media content this way was too inefficient and resource intensive. To solve this problem, HTTP/2 introduces a new binary framing layer.

This layer turns data sent from the server to the client into a manageable sequence of small independent frames. Once it has received the entire set of frames, the client can restore the transmitted data to its original form. The scheme works the same way in the opposite direction, from the client to the server.

The binary frame format makes it possible to exchange multiple independent sequences in both directions simultaneously, without any delay between streams. This approach has many advantages:

- Parallel multiplexed requests and responses do not block each other.

- Despite the transmission of multiple data streams, a single TCP connection is used for the most efficient use of network resources.

- You no longer need optimization hacks such as sprites, concatenation, domain sharding, and others that negatively affect other areas of network performance.

- Latency is lower, network performance is higher, and search engine ranking improves.

- Operating costs and capital investment in network and IT resources are reduced.

Thanks to this capability, frames from different streams are interleaved over a single TCP connection. At the endpoint, these frames are separated again and presented as individual data streams. In HTTP 1.1 and earlier versions, transmitting multiple requests in parallel required establishing the same number of TCP connections, which is a bottleneck for overall network performance even though it does move more data streams quickly.
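The interleaving described above can be illustrated with a toy model. This is only a sketch: the helper names (`split_into_frames`, `interleave`, `reassemble`), the 4-byte frame size, and the round-robin order are assumptions made for illustration, and real HTTP/2 frames carry a binary header and are scheduled by the implementation.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id, payload, frame_size=4):
    """Cut one message into (stream_id, chunk) frames of at most frame_size bytes."""
    return [
        (stream_id, payload[i:i + frame_size])
        for i in range(0, len(payload), frame_size)
    ]


def interleave(*framed_streams):
    """Round-robin frames from several streams onto one simulated connection."""
    wire = []
    for group in zip_longest(*framed_streams):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def reassemble(wire):
    """Group frames by stream id and rebuild each message at the receiver."""
    messages = defaultdict(bytes)
    for stream_id, chunk in wire:
        messages[stream_id] += chunk
    return dict(messages)


# Two hypothetical responses share one "connection" (the wire list).
wire = interleave(
    split_into_frames(1, b"<html>page</html>"),
    split_into_frames(3, b"body{color:red}"),
)
received = reassemble(wire)
```

Because every frame is tagged with its stream identifier, the receiver can rebuild both messages even though their frames arrive mixed together.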

Sending data on server initiative (Server Push)

This feature allows the server to send the client additional cacheable information that it did not request but that may be needed in future requests. For example, if a client requests resource X, which references resource Y, the server can send Y along with X without waiting for the client to make an additional request.

Resource Y received from the server is cached on the client for future use. This mechanism saves request-response cycles and reduces network latency. Server Push first appeared in the SPDY protocol. Stream identifiers containing pseudo-headers such as :path let the server initiate the transmission of additional information that should be cached. The client must either explicitly allow the server to push cacheable resources over HTTP/2, or reset pushed streams that have a particular identifier. Other HTTP/2 features, known as Cache Push, make it possible to proactively update or invalidate the cache on the client. In this case, the server is able to determine which resources the client may need even though it did not actually request them.

HTTP/2 implementations perform well when working with pushed resources:

- Pushed resources are stored in the client's cache.

- The client can reuse these resources on different pages.

- The server can multiplex pushed resources along with the requested information within the same TCP connection.

- The server can prioritize the resources it pushes. This is a key performance difference between HTTP/2 and HTTP 1.

- The client can reject pushed resources to keep its cache efficient, or disable Server Push altogether.

- The client can also limit the number of concurrently multiplexed streams carrying pushed data.

Suboptimal techniques such as inlining offer similar push-like behavior by embedding resources in server responses. Server Push, by contrast, is a protocol-level solution that avoids fussing with optimization hacks.

HTTP/2 multiplexes and prioritizes streams carrying pushed data to improve performance, just as it does for other request-response streams. There is a built-in security mechanism under which the server must be authorized in advance before it can push resources.

Binary protocol

The latest version of HTTP has changed significantly in terms of capabilities, most visibly in the move from a text protocol to a binary one. To complete a request-response cycle, HTTP 1.x processes text commands. HTTP/2 solves the same problem with binary commands (made up of ones and zeros). This makes it easier to work with frames and simplifies the implementation of commands that used to be ambiguous because they consisted of text and optional whitespace.

Binary commands are harder for people to read than their text equivalents, but it is easier for the network stack to generate and parse frames. The semantics remain unchanged. Browsers using HTTP/2 convert text commands to binary before sending them over the network. The binary framing layer is not backward compatible with HTTP 1.x clients and servers. It is a key factor in the significant performance gains over SPDY and HTTP 1.x. For Internet companies and online services, binary commands offer the following advantages:

- Low overhead when parsing data, a critical advantage of HTTP/2 over HTTP 1.

- Lower probability of errors.

- Less network load.

- Efficient use of network resources.

- Solving security problems, such as response splitting attacks, stemming from the textual nature of HTTP 1.x.

- Support for other HTTP/2 features, including compression, multiplexing, prioritization, flow control, and efficient TLS processing.

- Compact commands simplify their processing and implementation.

- Higher efficiency and robustness for processing data transferred between the client and the server.

- Reduced network latency and increased throughput.
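To make the binary framing concrete, here is a minimal sketch that packs and parses the fixed 9-byte frame header defined by the HTTP/2 specification: a 24-bit payload length, an 8-bit type, 8-bit flags, and a reserved bit followed by a 31-bit stream identifier. The function names and the partial `FRAME_TYPES` table are chosen here for illustration, and the sketch does not handle frame payloads.

```python
import struct

# A subset of the frame type codes defined by the HTTP/2 specification.
FRAME_TYPES = {
    0x0: "DATA",
    0x1: "HEADERS",
    0x3: "RST_STREAM",
    0x4: "SETTINGS",
    0x5: "PUSH_PROMISE",
}


def pack_frame_header(length, frame_type, flags, stream_id):
    """Build the 9-byte header: 24-bit length, type, flags, 31-bit stream id."""
    if not 0 <= length < 2 ** 24:
        raise ValueError("length must fit in 24 bits")
    return struct.pack(
        ">BHBBI",
        length >> 16,            # top 8 bits of the length
        length & 0xFFFF,         # low 16 bits of the length
        frame_type,
        flags,
        stream_id & 0x7FFFFFFF,  # the highest bit is reserved
    )


def parse_frame_header(header):
    """Decode a 9-byte frame header into a dictionary of its fields."""
    if len(header) != 9:
        raise ValueError("an HTTP/2 frame header is exactly 9 bytes")
    high, low, frame_type, flags, raw_id = struct.unpack(">BHBBI", header)
    return {
        "length": (high << 16) | low,
        "type": FRAME_TYPES.get(frame_type, hex(frame_type)),
        "flags": flags,
        "stream_id": raw_id & 0x7FFFFFFF,
    }


header = pack_frame_header(length=16, frame_type=0x1, flags=0x4, stream_id=1)
fields = parse_frame_header(header)
```

Every field sits at a fixed offset, so a parser never has to scan for delimiters or guess about optional whitespace, which is where the lower parsing overhead comes from.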

Stream prioritization

HTTP/2 allows the client to express a preference for specific data streams. Although the server is not obliged to follow these instructions, they help it optimize the distribution of network resources according to the needs of end users.

Prioritization works by assigning each stream dependencies and a weight. Although all streams effectively depend on one another anyway, each is also assigned a weight in the range from 1 to 256. Details of the prioritization mechanism are still under discussion. In real-world conditions, however, the server rarely has control over resources such as CPU and database connections. The complexity of implementation alone keeps servers from honoring stream prioritization requests. Continued work in this area is particularly important for the long-term success of HTTP/2, because the protocol handles multiple streams within a single TCP connection.

Prioritization helps separate requests that arrive at the server at the same time according to the needs of end users. Processing data streams in random order only undermines the efficiency and convenience of HTTP/2. A well-designed and widely adopted stream prioritization mechanism, on the other hand, offers the following advantages:
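As a rough illustration of how weights could be used, the sketch below splits a connection's capacity among sibling streams in proportion to their weights. The function name `share_bandwidth` and the capacity figure are hypothetical, and the sketch ignores dependencies between streams, which a real implementation also has to consider.

```python
def share_bandwidth(weights, capacity):
    """Split capacity among sibling streams in proportion to their weights.

    weights maps a stream id to an integer weight in the range 1..256.
    """
    for stream_id, weight in weights.items():
        if not 1 <= weight <= 256:
            raise ValueError(f"stream {stream_id}: weight must be in 1..256")
    total = sum(weights.values())
    return {sid: capacity * weight / total for sid, weight in weights.items()}


# Hypothetical example: stream 1 (HTML) is weighted three times higher
# than stream 3 (an image) on a connection of 1000 units.
shares = share_bandwidth({1: 192, 3: 64}, capacity=1000)
```

Here the more important stream would receive three quarters of the capacity, provided the server chooses to honor the client's hints.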

- Efficient use of network resources.

- Faster delivery of primary content.

- Faster page loads.

- Optimized data transfer between client and server.

- Less impact from network latency.

Stateful header compression

To make the best impression on users, modern websites need to be rich in content and graphics. HTTP is a stateless protocol: each client request must carry as much information as the server needs to perform the corresponding operation. As a result, data streams contain numerous duplicate frames, because the server is not supposed to store information from previous client requests.

If a website contains a lot of media content, the client sends a pile of nearly identical header frames, which increases latency and wastes finite network resources. Without further optimization on top of prioritized data streams, the desired level of parallel performance cannot be reached.

HTTP/2 solves this by compressing the large number of redundant header frames. Compression uses the HPACK algorithm, a simple and secure method. The client and server each keep a list of headers used in previous requests.

HPACK compresses the value of each header before it is sent to the server, which then looks up the encoded information in its list of previously received values to recover the full header. HPACK compression offers substantial performance advantages, and also provides:

- Effective stream prioritization.

- Effective use of multiplexing mechanisms.

- Reduced resource overhead. This is one of the first points discussed when comparing HTTP/2 with HTTP 1 and SPDY.

- Encoding of large and frequently used headers, so that the entire header frame does not have to be sent. The transmitted size of each stream shrinks quickly.

- Resistance to attacks such as CRIME, which exploits data streams with compressed headers.
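The idea of both sides remembering previously sent headers can be sketched with a toy table. This is not the real HPACK encoding, which also uses a predefined static table, a size-limited dynamic table, and Huffman coding; the `HeaderTable` class and its tuple format are assumptions made purely for illustration.

```python
class HeaderTable:
    """Toy model of a header list kept in sync by sender and receiver."""

    def __init__(self):
        self.entries = []

    def encode(self, headers):
        """Replace headers that were already sent with a short index reference."""
        encoded = []
        for header in headers:
            if header in self.entries:
                encoded.append(("index", self.entries.index(header)))
            else:
                self.entries.append(header)
                encoded.append(("literal", header))
        return encoded

    def decode(self, encoded):
        """Rebuild full headers, learning new literals along the way."""
        headers = []
        for kind, value in encoded:
            if kind == "index":
                headers.append(self.entries[value])
            else:
                self.entries.append(value)
                headers.append(value)
        return headers


sender, receiver = HeaderTable(), HeaderTable()
request = [(":method", "GET"), ("user-agent", "demo-browser/1.0")]

first = sender.encode(request)    # headers travel in full the first time
second = sender.encode(request)   # afterwards only small indexes are sent
decoded_first = receiver.decode(first)
decoded_second = receiver.decode(second)
```

On the second request the sender transmits only two small integers instead of the full header text, which is the effect the list above describes.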

Differences between HTTP 1.x, SPDY and HTTP/2

The basic semantics of HTTP remain unchanged in HTTP/2, including status codes, URIs, methods, and header fields. HTTP/2 is based on SPDY, an alternative to HTTP 1.x developed by Google. The main differences lie in the mechanisms for processing client-server requests. The table below shows the main differences between HTTP 1.x, SPDY and HTTP/2:

| HTTP 1.x | SPDY | HTTP/2 |
|---|---|---|
| SSL is not required, but is recommended. | SSL is required. | SSL is not required, but is recommended. |
| Slow encryption. | Fast encryption. | Encryption has become even faster. |
| One client-server request per TCP connection. | Multiple client-server requests per TCP connection. Implemented simultaneously on the same host. | Multi-host multiplexing. Implemented on multiple hosts in one instance. |
| No header compression. | Introduced header compression. | Advanced header compression algorithms are used, which improves performance and security. |
| No stream prioritization. | Introduced stream prioritization. | Improved stream prioritization mechanisms. |

How HTTP/2 works with HTTPS

HTTPS is used to establish a highly secure network connection, which matters a great deal when handling sensitive business and user information. Attackers mainly target banks, which process financial transactions, and health care institutions, which accumulate medical records. HTTPS works as a layer of protection against persistent cyber threats, although security is not the only factor in repelling complex attacks on valuable corporate networks.

Browser support for HTTP/2 includes HTTPS encryption, and it actually improves overall security performance when working with HTTPS. The key HTTP/2 features that help secure digital communications in a sensitive network environment are:

- fewer TLS handshakes,

- lower resource consumption on both the client and the server side,

- improved reuse of existing web sessions, but without the vulnerabilities specific to HTTP 1.x.

HTTPS is not only for well-known companies and cybersecurity. It is also useful for owners of online services, ordinary bloggers, online stores, and even users of social networks. HTTP/2 requires a recent, secure version of TLS (TLS 1.2 or later), so all online communities, business owners, and webmasters need to make sure their websites use HTTPS by default.

Setting up HTTPS usually involves choosing a suitable web hosting plan, purchasing, activating, and installing a security certificate, and updating the site itself so that it uses HTTPS.
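Once HTTPS is configured, one way to check whether a server is willing to speak HTTP/2 is to look at the protocol negotiated through the TLS ALPN extension. The sketch below uses Python's standard `ssl` module; the function names are chosen here for illustration, and the check only reports what was negotiated during the handshake without sending any HTTP/2 frames.

```python
import socket
import ssl


def make_h2_context():
    """Create a TLS client context that offers HTTP/2 first, then HTTP/1.1."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # HTTP/2 needs TLS 1.2+
    context.set_alpn_protocols(["h2", "http/1.1"])
    return context


def negotiated_protocol(host, port=443, timeout=5.0):
    """Return the ALPN protocol the server picked, for example 'h2', or None."""
    context = make_h2_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol` with your own site's host name requires network access; a result of `"h2"` suggests that the server accepted HTTP/2 for that connection.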

The main advantages of HTTP/2

The industry needs to replace the outdated HTTP 1.x with a protocol whose benefits reach ordinary users. The transition from HTTP 1.x to HTTP/2 is driven almost entirely by the need to make the most of its technological advantages in order to meet current expectations.

From the point of view of e-commerce and Internet users, the more irrelevant content and rich multimedia there is on the web, the slower it gets.

HTTP/2 was created to make client-server data exchange more efficient, which lets businesses extend their reach across market segments and lets users get to high-quality content faster. On top of that, the web today is more situational than ever before.

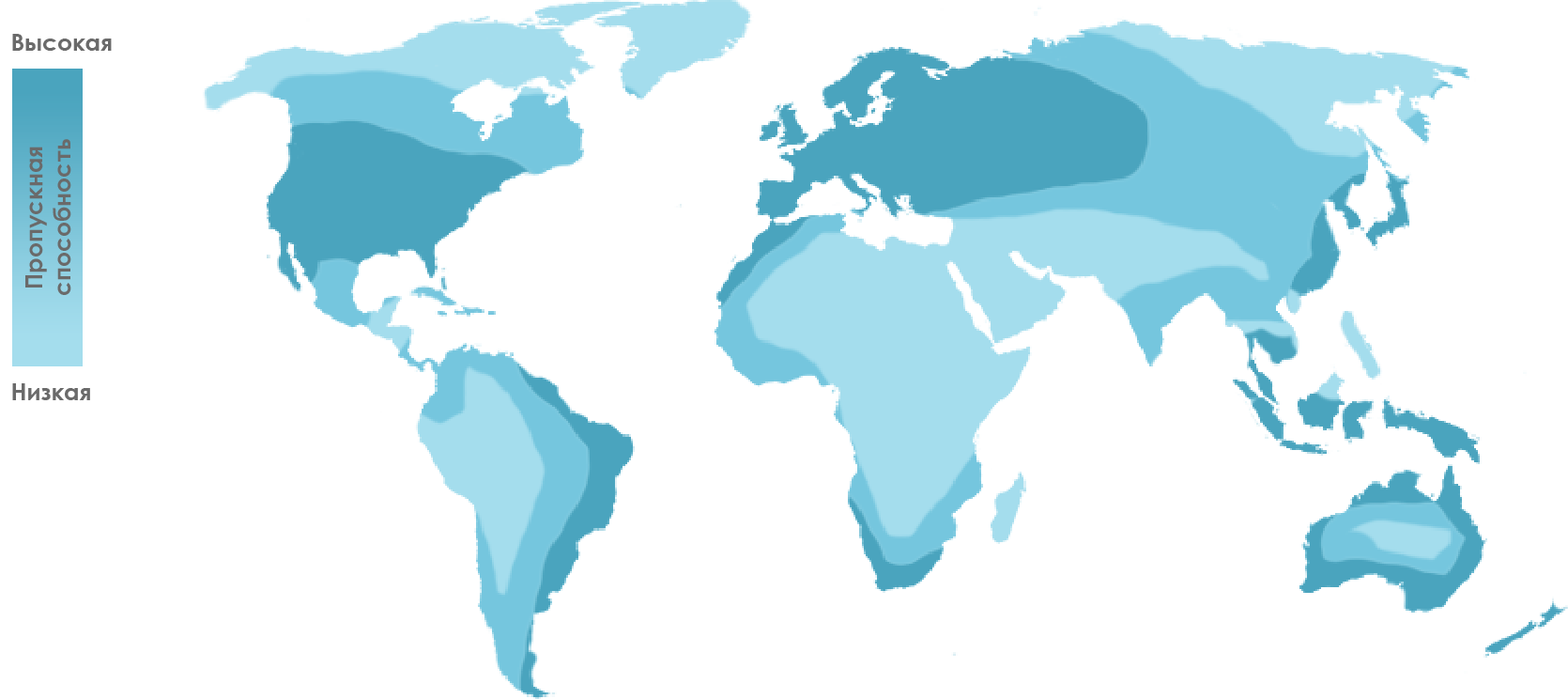

Internet access speeds vary by network and geographic location. The share of mobile users is growing rapidly, which calls for sufficiently fast Internet access on mobile devices of every form factor, even though overloaded cellular networks cannot compete with broadband. HTTP/2 offers a comprehensive answer to this problem: a combination of thoroughly revised networking and data transfer mechanisms. What are the main benefits of HTTP/2?

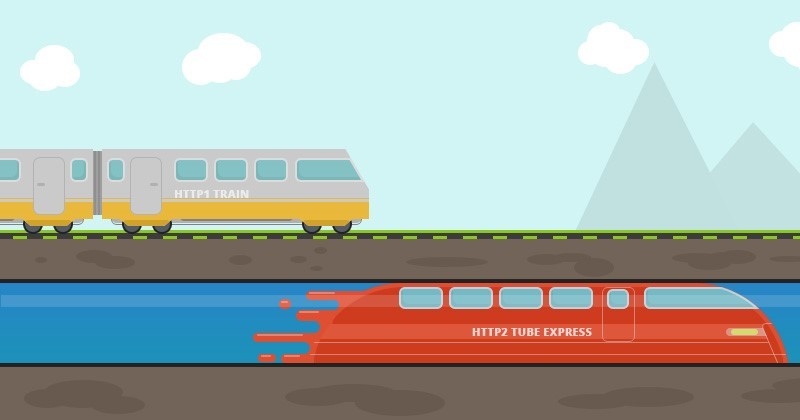

Network performance

This concept reflects the cumulative effect of all HTTP/2 innovations. Benchmark results (see the section “HTTPS, SPDY and HTTP/2 performance comparison”) show a performance increase with HTTP/2 compared to its predecessors and alternatives.

The protocol's ability to send and receive more data in each client-server exchange is not an optimization hack but a real, accessible, and practical advantage of HTTP/2. An analogy is a vacuum-tube train compared with a conventional one: the absence of friction and air resistance lets the vehicle move faster, carry more passengers, and use the available channels more efficiently without more powerful engines. It also reduces the weight of the train and improves its aerodynamics.

Technologies such as multiplexing help transmit more data at the same time, like a big passenger plane with several decks packed with seats.

What happens when data transfer mechanisms sweep away all barriers to network performance? Fast sites have welcome side effects: users enjoy them more, search engine optimization improves, resources are used more efficiently, audiences and sales grow, and much more.

Fortunately, deploying HTTP/2 is incomparably more practical than building vacuum tunnels for large passenger trains.

Mobile network performance

Every day, millions of users go online from their mobile devices. We live in the “post-PC era”: many people use smartphones as their primary device for accessing online services and doing most routine computing tasks on the go, instead of sitting in front of a desktop computer.

HTTP/2 was designed with current trends in network usage in mind. It compensates for the limited bandwidth of mobile Internet by reducing latency through multiplexing and header compression. Thanks to the new version of the protocol, the performance and security of data exchange on mobile devices reach the level typical of desktops. This immediately improves the ability of online businesses to reach potential audiences.

Internet is cheaper

Since the creation of the World Wide Web, the cost of using the Internet has declined rapidly. The main objectives in developing network technologies have always been to expand access and increase its speed. However, the decline in prices appears to have stalled, especially in light of allegations of monopoly among telecommunications providers.

The increased throughput and efficiency of data exchange that come with deploying HTTP/2 will allow providers to reduce operating costs without reducing access speed. In turn, lower operating expenses will let providers compete more actively in the low-price segment and offer higher access speeds within existing plans.

Expansive reach

Densely populated regions of Asia and Africa still lack Internet access at acceptable speeds. Providers try to maximize profit by offering their services only in large cities and developed areas. Thanks to the advantages of HTTP/2, it will be possible to reduce the load on the network and allocate part of the resources and bandwidth to residents of remote and less developed areas.

Multimedia saturation

Today, Internet users practically demand multimedia-rich content and services with instant page loads. At the same time, site owners need to update their content regularly to stay competitive. The cost of the necessary infrastructure is not always affordable for Internet startups, even with subscription-based cloud services. The advantages and technical features of HTTP/2 probably will not do much to reduce file sizes, but they will shave some bytes off the overhead of transferring “heavy” media content between the client and servers.

Improving mobile internet experience

Forward-looking online companies follow a Mobile-First strategy to reach a rapidly growing mobile audience effectively. Perhaps the main limitation on mobile Internet use is the modest hardware of smartphones and tablets, which results in longer delays in processing requests. HTTP/2 can reduce download times and network latency to an acceptable level.

More efficient use of the network

"Heavy" media content and sites with complex designs noticeably increase resource consumption when the client and the server process browser requests. Although web developers have come up with acceptable optimization hacks, the emergence of a sustainable and reliable solution in the form of HTTP/2 was inevitable.

Security

HTTP/2 . HPACK , . , , HTTP/2 « » (Security by Obscurity): , HTTP-. , Transport Layer Security (TLS1.2).

HTTP/2 . , . , HTTP/2, SPDY, HTTP 1.x. HTTP/2 SPDY, HTTP. - .

HTTP/2 and SEO

SEO- - . - , , SEO- . - . , , , , . , , .

-. . SEO-, -, . HTTP/2 , - .

HTTP/2 . -, , HTTP/2, SEO. -, - HTTP/2 .

HTTPS, SPDY and HTTP/2 performance comparison

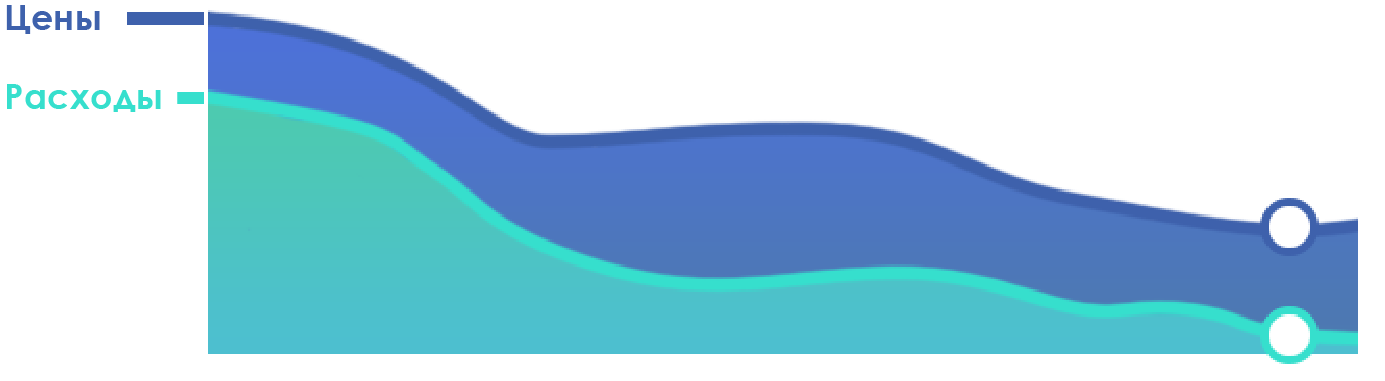

.

HTTP/2 , , , , .

:

:

- : HTTP/2 , . SPDY . HTTPS .

- : HTTP/2- , .

- TCP- : () HTTP/2 SPDY , , .

- : HTTP/2 SPDY. HTTPS - .

Browser HTTP/2 support and availability

HTTP/2 , . , HTTP 1.x, HTTP/2 . , . , — . , - . : , HTTP/2.

HTTP/2 — «» . Chrome Firefox , Safari HTTP/2 2014. IE Windows 8.

, Android Browser, Chrome Android iOS, Safari iOS8 , HTTP/2. , .

: Apache Nginx

-, , HTTP/2 . , HTTP/2, .

Nginx -, 66% - , HTTP / 2. HTTP/2 Apache, mod_spdy . Google Apache 2.2 , . Apache Software Foundation.

How to start using HTTP/2?

HTTP/2 :

- , HTTPS:

- SSL TLS.

- .

- .

- HTTPS.

- , HTTP/2 . Nginx , Apache 2015 ( 2.4). HTTP/2 .

- , . Apache. - , HTTP/2.

- HTTP/2 .

Conclusion

HTTP/2. , , HTTP 1.x, - . HTTP/2 , HTTP 1.x .

, HTTP/2 — . - , , , - -.

Source: https://habr.com/ru/post/304518/
