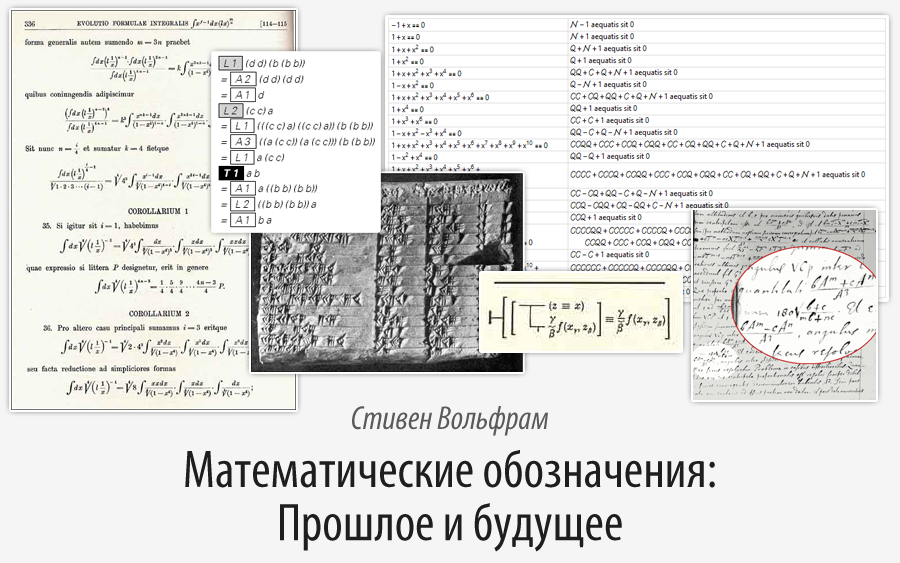

Mathematical Notation: Past and Future

Translation of Stephen Wolfram's post "Mathematical Notation: Past and Future (2000)".

Many thanks to Kirill Guzenko for his help in translating and preparing the publication.

Contents

Summary

Introduction

History

Computers

Future

Notes

- Empirical laws for mathematical notation

- Printed versus on-screen notation

- Written notation

- Fonts and symbols

- Searching mathematical formulas

- Non-visual notation

- Proofs

- Selection of characters

- Frequency distribution of characters

- Parts of speech in mathematical notation

Transcript of a talk presented at the “MathML and Mathematics on the Web” session of the first international MathML conference in 2000.

Summary

Most mathematical notation now in use is between one and five hundred years old. I will look at how it developed: what it was like in ancient and medieval times, what notation Leibniz, Euler, Peano and others introduced, and how it became widespread in the 19th and 20th centuries. I will consider how mathematical notation resembles ordinary human languages. I will talk about the basic principles that have been discovered for ordinary human languages, and which of them apply to mathematical notation and which do not.

Given its historical roots, mathematical notation, like natural language, might have been expected to be extremely difficult for computers to understand. But over the past five years, we have given Mathematica the ability to understand something very close to standard mathematical notation. I will talk about the key ideas that made this possible, as well as the features of mathematical notation that we discovered along the way.

Large mathematical expressions, unlike ordinary pieces of text, are often the results of computations and are generated automatically. I will talk about handling such expressions and what we have done to make them more understandable to people.

Traditional mathematical notation represents mathematical objects, not mathematical processes. I will talk about attempts to develop notation for algorithms, and about experience with implementing this in APL, Mathematica, theorem-proving programs and other systems.

Ordinary language consists of strings of text; mathematical notation often also contains two-dimensional structures. I will discuss the use of more general structures in mathematical notation and how they relate to the limits of human cognitive abilities.

The scope of a particular natural language usually limits the sphere of thought of those who use it. I will look at how traditional mathematical notation limits the possibilities of mathematics, and at what generalizations of mathematics might look like.

Introduction

When this conference was being organized, people thought it would be great to invite someone to give a talk on the foundations and general principles of mathematical notation. And there was an obvious candidate: Florian Cajori, the author of the classic book “A History of Mathematical Notations”. But after a little investigation, it turned out that there was a technical problem with inviting Dr. Cajori: he died at least seventy years ago.

So I ended up having to stand in for him.

I suppose there were not many other options. It turns out that there is almost no one alive today who has done basic research on mathematical notation.

In the past, mathematical notation was usually studied in the context of systematizing mathematics. Leibniz and some others were interested in such things in the middle of the 17th century. Babbage wrote a ponderous work on the subject in 1821. And at the turn of the 19th and 20th centuries, when abstract algebra and mathematical logic were being seriously developed, there was another surge of interest and activity. But after that there was almost nothing.

Still, it is not particularly surprising that I became interested in such things. With Mathematica, one of my main goals was to take another big step in the systematization of mathematics. And my more general goal for Mathematica was to extend computational power to all kinds of technical and mathematical work. This task has two parts: how the computations happen internally, and how people direct those computations to get what they want.

One of the greatest achievements of Mathematica, which most of you probably know about, is that it combines high generality of computation on the inside with practicality, by building everything on transformations of symbolic expressions, where symbolic expressions can represent data, graphics, documents, formulas, anything at all.

However, it is not enough just to do the computations. People also need some way to tell Mathematica what computations they want done. And the main way to let people interact with something that complex is to use something like a language.

Languages usually emerge through some gradual historical process. But computer languages are historically very different. Many were created almost entirely at once, often by a single person.

So what does that work involve?

Well, here is what it involved for me with Mathematica: I tried to imagine what kinds of computations people would want to do at all, and which pieces of that computational work come up again and again. Then I gave names to those pieces and implemented them as built-in functions in Mathematica.

We mostly built on English, since the names of these pieces are based on ordinary English words. This means that someone who simply knows English can already understand something of what is written in Mathematica.

Of course, Mathematica is not English. It is rather a highly adapted fragment of English, optimized for communicating about computations to Mathematica.

One might think it would be a good idea to talk to Mathematica in ordinary English. After all, we already know English, so we would not have to learn anything new in order to communicate with Mathematica.

However, I believe there are very good reasons why it is better to think in Mathematica than in English when we think about the kinds of computations that Mathematica does.

Quite apart from that, we also know that getting a computer to fully understand natural language is an extremely difficult task.

OK, so what about mathematical notation?

Most people who use Mathematica are familiar with at least some mathematical notation, so it would seem quite convenient to talk to Mathematica in ordinary mathematical notation.

But one might think that this would not work, and that one would end up in much the same situation as with natural languages.

However, there is one amazing fact, and it surprised me quite a lot. Unlike natural human languages, ordinary mathematical notation can be approximated very well by something a computer can understand. This is one of the most significant things we developed for the third version of Mathematica in 1997 [the current version of Wolfram Mathematica, 10.4.1, was released in April 2016. Ed.]. And at least some of what we did made its way into the MathML specification.

Today I want to talk about some general principles of mathematical notation that I happened to discover, and what they mean now and for the future.

This is not really a mathematical problem. It is much closer to linguistics. It is not about what mathematical notation could be, but about what mathematical notation is actually used: how it developed over the course of history and how it is connected with the limitations of human cognition.

I think mathematical notation is a very interesting field of study for linguistics.

You see, linguistics has mainly studied spoken languages. Even punctuation has gone virtually unstudied. And, as far as I know, no serious study of mathematical notation from a linguistic point of view has ever been carried out.

Linguistics usually follows several directions. One deals with how languages change historically. Another examines how individuals learn languages. A third builds empirical models of language structures.

History

Let's talk about history first.

Where did all the mathematical notations that we currently use come from?

This is closely tied to the history of mathematics itself, so we have to touch on that a little. One often hears that today's mathematics is the only conceivable realization of mathematics, that it is what arbitrary abstract constructions would inevitably be.

Over the past nine years, while I was working on one big research project, I came to understand clearly that this view of mathematics is not correct. Mathematics as it is practiced is not the study of arbitrary abstract systems. It is the study of one particular abstract system that arose historically. And if you look into the past, you can see three main traditions from which mathematics as we know it emerged: arithmetic, geometry and logic.

All these traditions are quite old. Arithmetic dates from ancient Babylon. Geometry perhaps comes from those times too, but it was certainly already known in ancient Egypt. Logic comes from ancient Greece.

And we can see that the development of mathematical notation, the language of mathematics, is strongly tied to these traditions, especially arithmetic and logic.

It should be understood that all three traditions arose in different spheres of human life, and this greatly influenced the notation used in them.

Arithmetic probably arose from the needs of commerce, for things like keeping track of money, and was then picked up by astrology and astronomy. Geometry appears to have arisen from land surveying and similar tasks. And logic, as we know, was born out of an attempt to systematize arguments made in natural language.

It is notable, by the way, that another very old field of knowledge, which I will mention later, namely grammar, was never really integrated with mathematics, at least until very recently.

So let's talk about the early traditions of notation in mathematics.

First, there is arithmetic. And the most basic thing in arithmetic is numbers. So what notations were used for numbers?

Well, the first representation of numbers that is known for certain is notches cut in bones 25 thousand years ago. It was a unary system: to represent the number 7, you made 7 notches, and so on.

Of course, we cannot know for sure that this representation of numbers was the very first. I mean, we have not found evidence of any other, earlier representations. But if someone in those days had invented some unusual representation for numbers and put it in, say, rock art, we might never know that it was a representation of numbers; we might take it to be just decoration.

So numbers can be represented in unary. And it seems that this idea was reinvented many times in different parts of the world.

But if you look at what happened beyond that, you find quite a lot of differences. It is a bit like how different natural languages implement different kinds of constructions for sentences, verbs and so on.

And in fact one of the most important questions about numbers, which I believe will come up many more times, is this: how closely should ordinary natural language and the language of mathematics correspond?

Here is one such question: it has to do with positional notation and the reuse of digits.

You see, natural languages usually have words such as "ten", "one hundred", "one thousand", "one million" and so on. But in mathematics we can represent ten as "one zero" (10), one hundred as "one zero zero" (100), one thousand as "one zero zero zero" (1000) and so on. We can reuse that single digit and get something new, depending on where it appears in the number.

Well, this is a complicated idea, and it took people thousands of years to really accept and absorb it. And their failure to accept it earlier had major consequences for the notation they used, both for numbers and for other things.

As often happens in history, the right idea appeared very early and then lay forgotten for a long time. More than five thousand years ago the Babylonians, and perhaps the Sumerians before them, developed the idea of positional representation of numbers. Their number system was sexagesimal (base 60), not decimal like ours. It is from them that we inherited our present way of writing seconds, minutes and hours. But they had the idea of using the same digits to denote multiples of different powers of sixty.
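To make the positional idea concrete, here is a minimal Python sketch of base-60 conversion. The function names `to_sexagesimal` and `from_sexagesimal` are made up for this illustration, and digits are shown as ordinary integers rather than cuneiform signs.

```python
def to_sexagesimal(n):
    """Return the base-60 digits of a non-negative integer, most significant first."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return [0]
    digits = []
    while n > 0:
        n, remainder = divmod(n, 60)  # peel off the lowest base-60 digit
        digits.append(remainder)
    return digits[::-1]


def from_sexagesimal(digits):
    """Rebuild the integer: each position is worth 60 times the one to its right."""
    value = 0
    for digit in digits:
        value = value * 60 + digit
    return value


# One hour, one minute and one second, counted in seconds.
print(to_sexagesimal(3661))         # [1, 1, 1]
print(from_sexagesimal([1, 1, 1]))  # 3661
```

The same digit 1 appears three times and means 3600, 60 and 1 depending only on its position, which is the reuse of digits described above.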

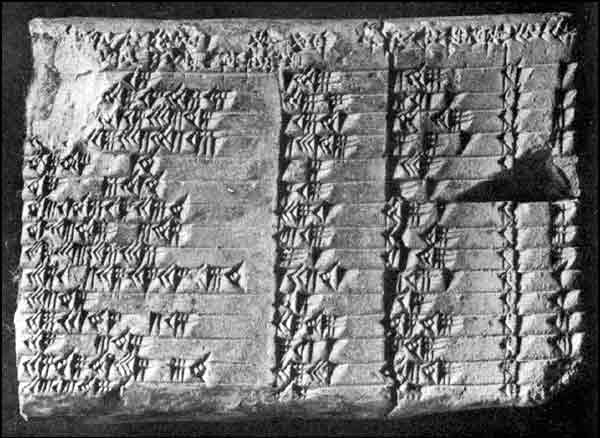

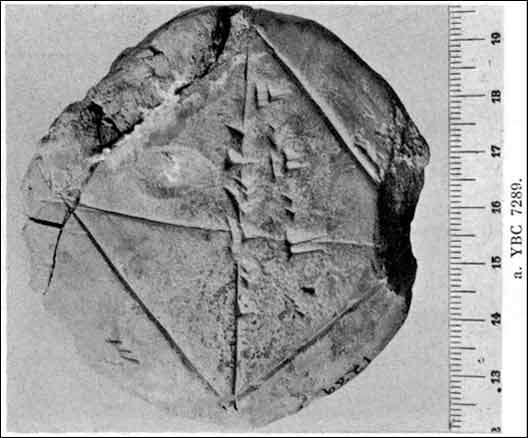

Here is an example of their notation.

From this picture you can see why archaeology is so difficult. This is a very small piece of baked clay. About half a million such Babylonian tablets have been found. And about one in a thousand, that is, about 400 in all, contain some kind of mathematics. That, by the way, is a higher ratio of mathematical texts to ordinary ones than on the modern Internet. Although until MathML becomes more widespread, that is rather hard to determine.

In any case, the small marks on this tablet look a bit like the tracks of tiny birds. But almost 50 years ago, researchers finally worked out that this cuneiform tablet from the time of Hammurabi, around 1750 BC, is in fact a table of what we now call Pythagorean triples.

Well, this Babylonian knowledge was lost to humanity for almost 3000 years. Instead, schemes based on natural language were used, with separate symbols for ten, a hundred, and so on.

So, for example, the Egyptians used a lotus flower symbol for a thousand, a bird for a hundred thousand, and so on. Each power of ten had its own symbol.

And then another very important idea appeared, one that neither the Babylonians nor the Egyptians had thought of. It was to represent numbers by digits: that is, to denote the number seven not by seven copies of something, but by a single symbol.

The Greeks, perhaps like the Phoenicians before them, did have this idea. Well, actually, their version was somewhat different. It was to denote the sequence of numbers by the sequence of letters in their alphabet. That is, alpha stood for one, beta for two, and so on.

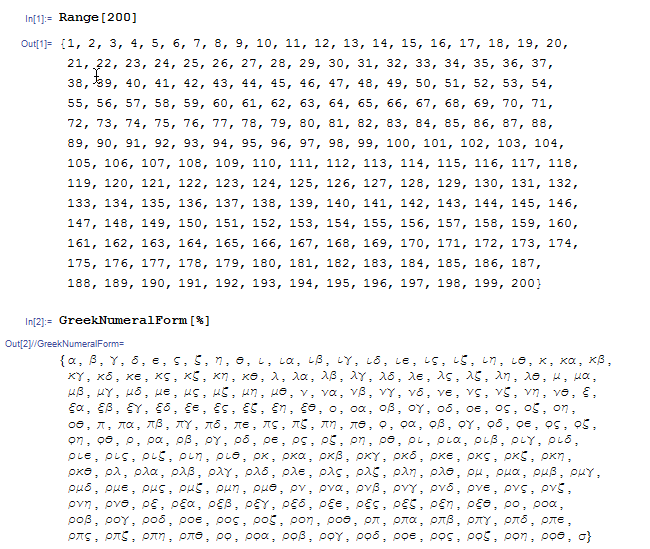

Here is a list of numbers in Greek notation [you can download the Wolfram Language Package, which allows you to represent numbers in various ancient notations here. Ed.].

(I think this is how the system administrators at Plato's Academy would have customized their version of Mathematica: their imaginary version -600 (or so) of Mathematica.)

This number system has many problems. For example, there is a serious version-control problem: even if you decide to drop some letters from your alphabet, you must keep them in your numbers, otherwise all your previously written numbers will become incorrect.

That means there are various obsolete Greek letters left in the number system, like koppa for the number 90 and sampi for the number 900. However, I included them in the character set for Mathematica, so the Greek form of writing numbers works fine here.
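The alphabetic scheme is easy to sketch in Python. This is only an illustration, not the Wolfram Language package mentioned above: the function name `greek_numeral` is hypothetical, the range is limited to 1 through 999, and the choice of Unicode glyphs for the obsolete letters (including stigma for 6, which the talk does not mention) is an assumption.

```python
# Obsolete letters that survive only in the number system.
STIGMA = "\u03db"  # used for 6 (assumed glyph choice)
KOPPA = "\u03df"   # used for 90
SAMPI = "\u03e1"   # used for 900

UNITS = ["", "α", "β", "γ", "δ", "ε", STIGMA, "ζ", "η", "θ"]
TENS = ["", "ι", "κ", "λ", "μ", "ν", "ξ", "ο", "π", KOPPA]
HUNDREDS = ["", "ρ", "σ", "τ", "υ", "φ", "χ", "ψ", "ω", SAMPI]


def greek_numeral(n):
    """Write 1..999 with one letter each for the hundreds, tens and units."""
    if not 1 <= n <= 999:
        raise ValueError("this sketch only covers 1..999")
    hundreds, rest = divmod(n, 100)
    tens, units = divmod(rest, 10)
    return HUNDREDS[hundreds] + TENS[tens] + UNITS[units]


print(greek_numeral(321))  # τκα
```

Because the tables have 27 entries but the classical alphabet has only 24 letters, removing the three obsolete letters would leave 6, 90 and 900 unwritable, which is the version-control problem described above.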

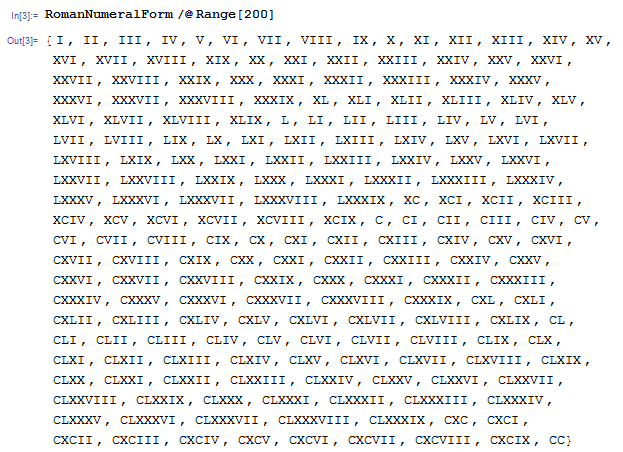

After some time, the Romans developed their own form of writing numbers, with which we are well acquainted.

Although it is no longer obvious, their numerals were originally conceived as letters, and this is worth remembering.

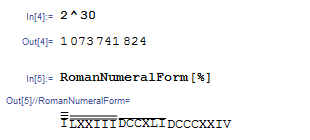

So let's try the Roman form of writing numbers.

This is also a rather inconvenient way to write, especially for large numbers.

There are some interesting points. For example, the length of the represented number recursively increases with the size of the number.
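A short Python sketch shows how irregularly the length of a Roman numeral depends on the number. It assumes the modern subtractive convention (IV, IX, XL and so on), which is not necessarily what the Romans themselves always wrote, and the function name `to_roman` is made up for this example.

```python
ROMAN = [
    (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
    (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
    (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
]


def to_roman(n):
    """Convert 1..3999 to a Roman numeral, using the largest symbols first."""
    if not 1 <= n <= 3999:
        raise ValueError("this sketch only covers 1..3999")
    parts = []
    for value, symbol in ROMAN:
        count, n = divmod(n, value)
        parts.append(symbol * count)
    return "".join(parts)


print(to_roman(1997))  # MCMXCVII
print([(n, to_roman(n), len(to_roman(n))) for n in (8, 9, 10, 38, 39, 40)])
```

In the second line of output, 8 needs four characters while 10 needs one, and 38 needs seven while 40 needs two, so a larger number is often shorter than a smaller one.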

And in general, this kind of representation gets very awkward for large numbers. For example, when Archimedes wrote his work on the number of grains of sand that would fill the universe (Archimedes estimated their number at 10^51, though I suppose the correct answer would be about 10^90), he used ordinary words rather than symbols to describe such a large number.

But there is actually a more serious conceptual problem with the idea of representing numbers as letters: it becomes difficult to come up with a representation for symbolic variables, that is, symbolic objects that stand for numbers. Any letter that could be used for such a symbolic object can be confused with a number or a piece of a number.

The general idea of denoting objects symbolically by letters has been known for quite a long time. Euclid, in fact, used this idea in his writings on geometry.

Unfortunately, no original works of Euclid have survived. However, there are versions of his work from a few hundred years later. Here is one written in Greek.

On these geometric figures you can see points that are represented symbolically by Greek letters. And in the statements of the theorems there are many places where points, lines and angles are represented symbolically by letters. So the idea of representing objects symbolically by letters goes back at least to Euclid.

However, this idea may have appeared earlier. If I could read Babylonian, I could probably tell you for sure. Here is a Babylonian tablet that represents the square root of two and uses Babylonian letters as labels.

I believe baked clay is more durable than papyrus, so it turns out that we know more about what the Babylonians originally wrote than about what people like Euclid wrote.

In general, this failure to see that one could introduce names for numerical variables is an interesting case of languages or notations limiting our thinking. This is something that is certainly discussed in ordinary linguistics. In its most common formulation, this idea is known as the Sapir-Whorf hypothesis (the hypothesis of linguistic relativity).

Of course, for those of us who have spent part of our lives developing computer languages, this idea is very important. I know for certain that if I think in the Mathematica language, many concepts are quite easy for me to understand, and they would not be so easy if I were thinking in some other language.

But in any case, without variables everything would be much harder. For example, how do you represent a polynomial?

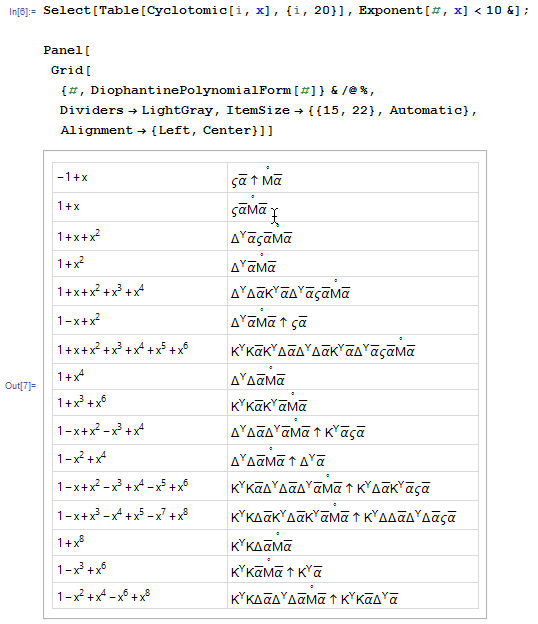

Well, Diophantus, the one who came up with Diophantine equations, faced the problem of representing polynomials in the middle of the 2nd century AD. He ended up using certain letter-based names for squares, cubes, and so on. Here is how it worked.

At least today, Diophantus's notation for polynomials seems extremely hard to understand. It is an example of a not very good notation. I suppose the main reason, besides limited extensibility, is that this notation makes the mathematical relationships between polynomials unobvious and does not highlight the things that interest us most.

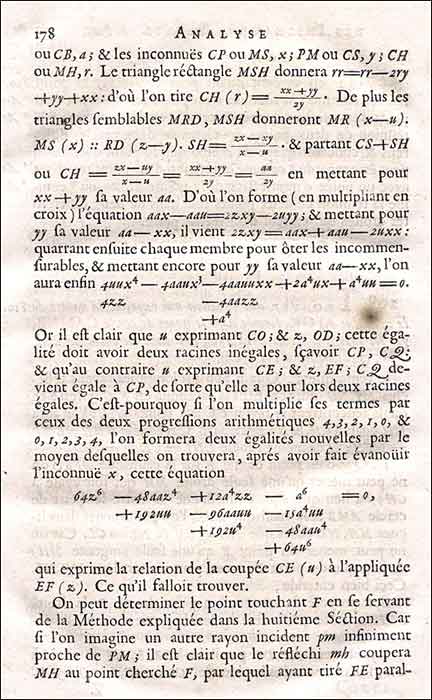

There are other schemes for writing polynomials without variables, such as a Chinese scheme that involved making a two-dimensional array of coefficients.

The problem here is, again, extensibility. And this problem with graphical notation comes up again and again: a piece of paper, a papyrus or whatever else is limited to two dimensions.

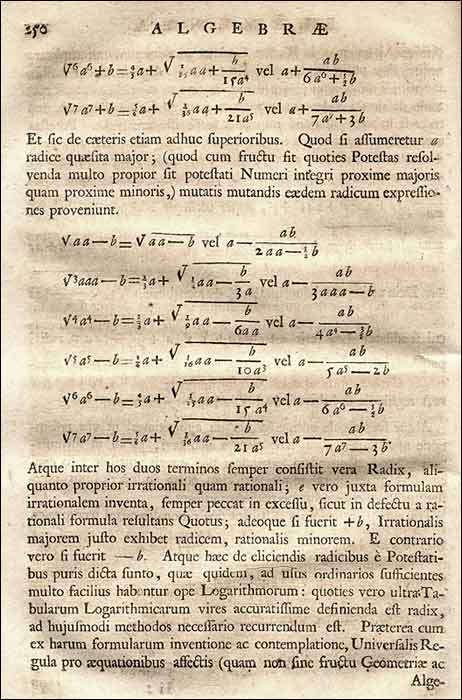

Here is an example.

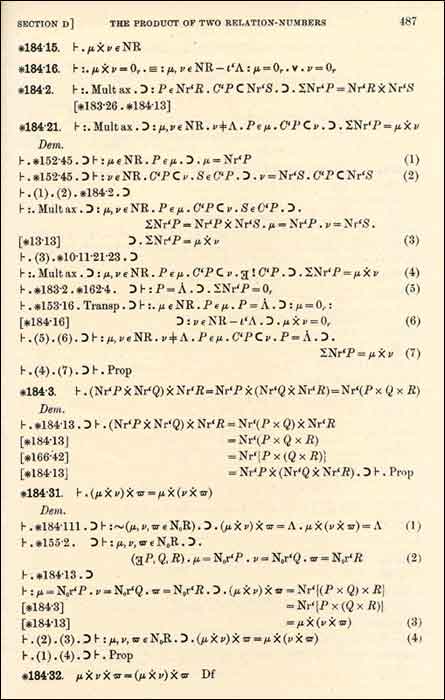

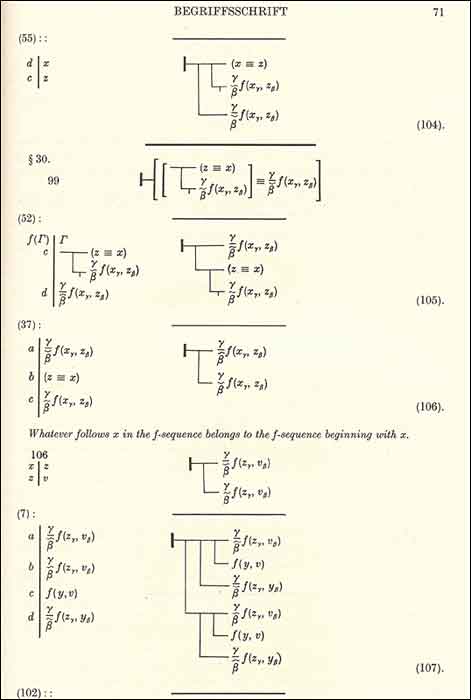

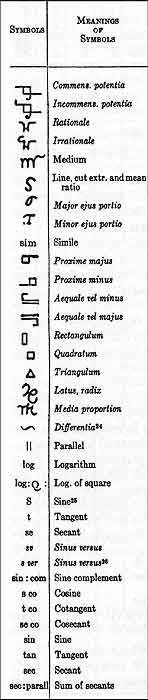

Here is an example:

Computers

Here is the question: can computers be made to understand this notation?

That depends on how systematic it is and how much meaning can be extracted from a given fragment of mathematical writing.

Well, I hope I have managed to convey that notation developed through fairly haphazard historical processes. There were a few people, such as Leibniz and Peano, who tried to approach the matter more systematically. But mostly notation arose in the course of solving particular problems, just as happens in ordinary spoken languages.

And one of the things that surprised me is that there has in fact never been an introspective study of the structure of mathematical notation.

The grammar of ordinary spoken languages has been studied for centuries. No doubt many Roman and Greek philosophers and orators paid a great deal of attention to it. And as early as around 500 BC, Panini wrote out a remarkably detailed and clear grammar for Sanskrit. In fact, Panini's grammar was remarkably similar in structure to the Backus-Naur form specifications of production rules used for computer languages today.

And there have been not only grammars of languages: in the last century an endless stream of scholarly works on correct language usage and the like has appeared.

But despite all this activity around ordinary languages, essentially nothing has been done for the language of mathematics and mathematical notation. This is really quite strange.

There have even been mathematicians who worked on the grammar of ordinary languages. An early example was John Wallis, who came up with the Wallis product formula for pi and who wrote a grammar of English in 1658. Wallis was also the one who started the whole fuss over the correct use of "will" and "shall".

At the beginning of the 20th century, people in mathematical logic talked about the different layers of a well-formed mathematical expression: variables inside functions inside predicates inside functions inside connectives inside quantifiers. But not about what all this meant for the notation of expressions.

Some clarity came in the 1950s, when Chomsky and Backus independently developed the idea of context-free languages. The idea grew out of work on production (substitution) rules in mathematical logic, mainly by Emil Post in the 1920s. But, curiously, Chomsky and Backus both had the same idea in the 1950s.

Backus applied it to computer languages: first to Fortran, then to ALGOL. And he noted that algebraic expressions can be represented by a context-free grammar.
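As an illustration of what a context-free grammar for algebraic expressions can look like, here is a small Python sketch. The toy grammar in the comment is written in Backus-Naur form; it is an assumption made for this example and is not taken from Fortran or ALGOL. The parser has one function per grammar rule and returns a nested tuple showing the structure it found.

```python
import re

# Toy grammar in Backus-Naur form (illustrative only):
#   <expr>   ::= <term>   | <expr> "+" <term>
#   <term>   ::= <factor> | <term> "*" <factor>
#   <factor> ::= NAME | NUMBER | "(" <expr> ")"


def tokenize(text):
    # Names, integers and the symbols + * ( ); anything else is silently skipped.
    return re.findall(r"[A-Za-z]\w*|\d+|[+*()]", text)


def parse(text):
    tokens = tokenize(text)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        token = peek()
        pos += 1
        return token

    def expr():
        node = term()
        while peek() == "+":
            take()
            node = ("+", node, term())
        return node

    def term():
        node = factor()
        while peek() == "*":
            take()
            node = ("*", node, factor())
        return node

    def factor():
        token = take()
        if token == "(":
            node = expr()
            if take() != ")":
                raise SyntaxError("expected ')'")
            return node
        if token is None or token in "+*)":
            raise SyntaxError(f"unexpected token {token!r}")
        return token

    tree = expr()
    if peek() is not None:
        raise SyntaxError(f"unexpected token {peek()!r}")
    return tree


print(parse("a + b * (c + 2)"))
# ('+', 'a', ('*', 'b', ('+', 'c', '2')))
```

Here the grouping of `*` before `+` is not stated anywhere as a rule of its own; it falls out of how the grammar rules are nested.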

Chomsky applied the idea to ordinary human language. And he noted that, to some degree of accuracy, ordinary human languages can also be represented by context-free grammars.

Of course, linguists, including Chomsky, have spent years showing how this idea does not match reality. But what I have always noticed, and what I consider most important from a scientific point of view, is that to a first approximation it is still true that ordinary natural languages are context-free.

So Chomsky studied ordinary language, and Backus studied things like ALGOL. But neither of them looked at mathematical notation more advanced than simple algebraic language. And, as far as I can tell, almost no one has looked at it since.

But if you want to find out whether you can interpret some mathematical notation, you need to know what kind of grammar it uses.

Now, I have to tell you that I used to consider mathematical notation too haphazard for a computer to interpret correctly. In the early 1990s we were keen to give Mathematica the ability to work with mathematical notation. And in the course of doing that, we had to figure out what is really going on in mathematical notation.

Neil Soiffer had spent many years working on editing and interpreting mathematical notation, and when he joined us in 1991, he tried to convince me that it was entirely possible to work with mathematical notation, for both input and output.

The output part was fairly straightforward: after all, troff and TeX had already done a lot of work in that direction.

The question was input.

In fact, we had already figured out something about output. We realized that, at least at some level, a lot of mathematical notation can be represented in a context-free form. Since many people know this principle from, say, TeX, everything could be set up in terms of nested structures.

But what about input? One of the most important issues is the one you always run into in parsing: if you have a string of text with operators and operands, how do you determine what groups with what?

So let's say you have a mathematical expression like this.

Sin[x + 1]^2 + ArcSin[x + 1] + c (x + 1) + f[x + 1]

What does it mean? To understand it, you need to know the precedence of the operators: which bind more tightly to their operands and which bind less tightly.

I suspected that there was no serious discussion of this in any mathematical papers. So I decided to investigate. I went through all sorts of mathematical literature, showed various people random fragments of mathematical notation and asked them how they would interpret them. And I discovered a very curious thing: people were remarkably consistent in how they assigned operator precedence. So one can say that there is a definite precedence order for mathematical operators.

One can say with some confidence that people have exactly this precedence order in mind when they look at fragments of mathematical notation.
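Once a precedence order is fixed, grouping can be computed mechanically. The Python sketch below uses precedence climbing, a standard parsing technique chosen here for illustration; the talk does not say how Mathematica does it. The precedence table covers only five operators and its values are assumptions for this example, not Mathematica's actual table.

```python
import re

# (precedence, associativity); a higher number binds more tightly.
OPERATORS = {
    "+": (1, "left"), "-": (1, "left"),
    "*": (2, "left"), "/": (2, "left"),
    "^": (3, "right"),
}


def tokenize(text):
    return re.findall(r"[A-Za-z]\w*|\d+|[-+*/^()]", text)


def group(text):
    """Return the expression with every binary operation explicitly parenthesized."""
    tokens = tokenize(text)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def atom():
        nonlocal pos
        token = peek()
        if token is None or token in OPERATORS or token == ")":
            raise SyntaxError(f"unexpected token {token!r}")
        pos += 1
        if token == "(":
            inner = climb(1)
            if peek() != ")":
                raise SyntaxError("expected ')'")
            pos += 1
            return inner
        return token

    def climb(min_precedence):
        nonlocal pos
        left = atom()
        while True:
            op = peek()
            if op not in OPERATORS or OPERATORS[op][0] < min_precedence:
                return left
            precedence, associativity = OPERATORS[op]
            pos += 1
            # Left-associative operators must not absorb another operator of the
            # same precedence on their right; right-associative ones may.
            next_min = precedence + 1 if associativity == "left" else precedence
            right = climb(next_min)
            left = f"({left} {op} {right})"

    result = climb(1)
    if peek() is not None:
        raise SyntaxError(f"unexpected token {peek()!r}")
    return result


print(group("a + b * c ^ d ^ e - f"))
# ((a + (b * (c ^ (d ^ e)))) - f)
```

Changing a single number in the table changes the grouping of every expression that uses that operator, which is why agreement among readers about the order matters so much.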

Having discovered this, I became much more optimistic about the possibility of interpreting mathematical notation as input. One way you can always do it is with templates. That is, you just have a template for an integral and fill in the slots for the integrand, the variable, and so on. When the template is inserted into a document, everything looks as it should, but the information about which template it is remains, and the program knows how to interpret it. And many programs really do work this way.

But overall this is extremely inconvenient. If you try to enter or edit something quickly, you find that the computer beeps at you and will not let you do things that obviously ought to be possible.

Letting people enter things in free form is a much harder task. But that is what we wanted to do.

So what does it entail?

First of all, the mathematical syntax has to be carefully thought out and unambiguous. Obviously, you can get such a syntax by using an ordinary programming language with string-based syntax. But then you do not get familiar mathematical notation.

Here is the key problem: traditional mathematical notation contains ambiguities, at least if you want to keep it fairly general. Take, for example, "i". Is it Sqrt[-1] or the variable "i"?

In the ordinary textual InputForm of Mathematica, all such ambiguities are resolved in a simple way: all built-in Mathematica objects start with a capital letter.

But a capital “I” does not look much like what denotes Sqrt[-1] in mathematical texts. So what can be done? Here is the key idea: you can make another character that also looks like a lowercase “i” but is not the ordinary lowercase “i”, and let it stand for the square root of -1.

One might think: why not just use two “i” characters that look the same, as in mathematical texts, with one of them being special? Well, that would be confusing. You would need to know which “i” you are typing, and if you moved it somewhere or did something similar, you would get confused.

So that means there have to be two kinds of "i". What should the special version of this character look like?

The idea we had was to use double-struck characters. We tried all sorts of graphical forms. But the double-struck idea was the best. In some ways it fits the tradition in mathematics of denoting specific objects with double-struck letters.

So, for example, a capital R could be a variable in mathematical notation. But a double-struck R is a specific object, denoting the set of real numbers.

Thus the double-struck "i" is a specific object that we call ImaginaryI. Here's how it works:

The double-struck idea solves many problems.

Including the biggest one: integrals. Suppose you are trying to develop a syntax for integrals. One of the key questions is what the "d" in an integral could mean. What if it is a parameter in the integrand? Or a variable? The result is terrible confusion.

Everything becomes very simple if you use DifferentialD, a double-struck "d". And the result is a well-defined syntax.
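The following Python sketch shows why a distinct character removes the ambiguity. It uses the Unicode double-struck italic letters U+2148 and U+2146 as stand-ins; that choice of code points is an assumption of this sketch and not necessarily how Mathematica stores ImaginaryI and DifferentialD internally. The helper names `classify` and `split_integral` are hypothetical.

```python
IMAGINARY_I = "\u2148"     # DOUBLE-STRUCK ITALIC SMALL I
DIFFERENTIAL_D = "\u2146"  # DOUBLE-STRUCK ITALIC SMALL D


def classify(ch):
    """Name the role of a single character in an input expression."""
    if ch == IMAGINARY_I:
        return "ImaginaryI"
    if ch == DIFFERENTIAL_D:
        return "DifferentialD"
    if ch.isalpha():
        return "symbol"  # ordinary letters, including plain i and d
    return "other"


def split_integral(text):
    """Split a linear form '∫ integrand ⅆvar' into (integrand, variable)."""
    if not text.startswith("∫") or DIFFERENTIAL_D not in text:
        raise ValueError("expected '∫ ... ⅆx'")
    integrand, variable = text[1:].rsplit(DIFFERENTIAL_D, 1)
    return integrand.strip(), variable.strip()


example = f"∫ x^d / Sqrt[x + 1] {DIFFERENTIAL_D}x"
print(split_integral(example))
# ('x^d / Sqrt[x + 1]', 'x')
print(classify("d"), classify(DIFFERENTIAL_D))
# symbol DifferentialD
```

The plain d in the exponent stays an ordinary symbol, so the integrand can contain it freely without being mistaken for the differential.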

You can integrate x to degree d divided by the square root of x + 1 . Here's how it works:

It turns out that only a few small changes in the basis of the mathematical notation are required to make it unambiguous. It's amazing. And very cool. Because you can simply enter something consisting of mathematical notation in a free form, and it will be perfectly understood by the system. And this is what we implemented in Mathematica 3.

Of course, for everything to work as it should, you need to deal with some of the nuances. For example, to be able to enter anything in an effective and easy-to-remember way. We have been thinking about it for a long time. And we came up with some good and general schemes for the implementation of this.

One of them is entering things like degrees as superscripts. In plain text input, the symbol ^ is used to indicate the degree. The idea is to use control - ^ , with which you can enter an explicit superscript. The same idea for the combination control - / , with which you can enter a "two-story" fraction.

Having a clear set of principles like this is important in order to make everything work together in practice. And it works. Here’s how a pretty complex expression might look like:

But we can take fragments from this result and work with them.

And the point is that this expression is completely understandable for Mathematica , that is, it can be calculated. It follows from this that the execution results ( Out ) are objects of the same nature as the input data ( In ), that is, they can be edited, their parts can be used separately, their fragments can be used as input data, and so on.

To make all this work, we had to generalize ordinary programming-language syntax and parsing somewhat. Earlier, support for a whole "zoo" of special characters as operators had been introduced. Probably more important, though, is that we implemented support for two-dimensional structures. So, in addition to prefix operators, there is support for overfix operators and others.

If you look at this expression, you might say that it does not look quite like traditional mathematical notation. But it is very close. It certainly contains all the structural features and forms of ordinary mathematical notation. And the important thing is that anyone who knows ordinary mathematical notation will have no difficulty interpreting this expression.

Of course, there are some cosmetic differences from what you would see in an ordinary math textbook. For example, the way trigonometric functions are written.

However, I would bet that Mathematica's StandardForm is a better and clearer way to represent this expression. In the book that I have been writing for many years about a science project of mine, I use only StandardForm to present everything.

If you need full agreement with conventional textbooks, though, you need something else. Here is another important idea implemented in Mathematica 3: separating StandardForm from TraditionalForm.

I can always convert any expression to TraditionalForm.
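To make the remark about special characters as operators concrete, here is a brief sketch. The particular characters are examples picked for this illustration, not ones named in the talk; FullForm shows the expression each input is parsed into.

```wolfram
(* A special character used as an infix operator. *)
FullForm[a \[CirclePlus] b]     (* CirclePlus[a, b] *)

(* Another infix character, one that has a built-in meaning. *)
FullForm[x \[Element] Reals]    (* Element[x, Reals] *)

(* An "overfix" construct: a bar drawn over its argument. *)
FullForm[OverBar[x]]            (* OverBar[x] *)
```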

In fact, TraditionalForm always contains enough information to be uniquely converted back to StandardForm.

But TraditionalForm looks almost like ordinary mathematical notation, with all of its rather strange conventions, such as writing sine squared x instead of sine x squared.

So what about entering TraditionalForm?

You may have noticed the dotted line on the right of the cell [in the other screenshots the cell brackets were hidden to simplify the pictures; ed. note]. It indicates that something potentially dangerous is going on. Still, let's try editing something.

We can edit everything just fine. Let's see what happens if we try to evaluate it.

We got a warning. Let's continue anyway.

Well, the system understood what we wanted.

In fact, we have several hundred heuristic rules for interpreting expressions in traditional form, and they work quite well: well enough that one can go through large volumes of legacy mathematical notation, written for example in TeX, and automatically and unambiguously convert it into meaningful Mathematica input.

This is quite inspiring, because there is no comparable way to convert legacy natural-language text into something meaningful. In mathematics, however, there is.
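A minimal sketch of the round trip between the two forms. The example expression is an assumption made for illustration, and how faithfully the boxes are interpreted back depends on the version and on the heuristics described in the talk, so no specific output is claimed here.

```wolfram
(* An expression that is displayed differently in the two forms. *)
expr = Sin[x]^2 + ArcTan[y];

StandardForm[expr]       (* the unambiguous form used for input *)
TraditionalForm[expr]    (* textbook-style display *)

(* Convert to TraditionalForm boxes, then interpret them back. *)
boxes = ToBoxes[expr, TraditionalForm];
ToExpression[boxes, TraditionalForm]
```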
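As a hedged illustration of importing legacy notation, ToExpression accepts TeXForm as an input format. The TeX fragment below is a made-up example, and the result depends on the interpretation heuristics, so it is wrapped in HoldForm and no particular output is claimed.

```wolfram
(* A hypothetical legacy TeX fragment (backslashes escaped inside the string). *)
tex = "\\frac{x^2}{\\sqrt{x+1}}";

(* Interpret the TeX as an expression, held unevaluated for inspection. *)
held = ToExpression[tex, TeXForm, HoldForm]

(* Releasing the hold gives an ordinary expression that can be computed with. *)
D[ReleaseHold[held], x]
```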

Of course, some things about mathematics, mainly on the output side, are substantially harder than with ordinary text. Part of the issue is that mathematics is often expected to be generated automatically. You cannot automatically generate much text that is actually meaningful. But in mathematics, computations can produce large expressions.

So you need to work out how to break an expression into lines so that everything looks neat, and in Mathematica we did a good job on this. There are several interesting issues involved, such as the fact that while an expression is being edited the optimal line breaks can keep changing.

That means there can be nasty moments when you are typing and the cursor suddenly jumps backward. Well, I think we solved this problem in a rather elegant way. Let's look at an example.

Did you see it? A funny little animation appears for a moment whenever the cursor has to move backward. You may have noticed it. If you were typing, though, you probably would not notice that the cursor had jumped back, although you might, because the animation makes your eyes automatically go to that spot. Physiologically, I believe it works through nerve impulses that go not to the visual cortex but directly to the brain stem, which controls eye movements. So the animation makes you unconsciously move your gaze to the right place.

So we were able to find a way to interpret standard mathematical notation. Does this mean that all work in Mathematica should now be done in traditional mathematical notation? Should we introduce special characters for all the operations in Mathematica? That would give a very compact notation. But how sensible is that? Would it be readable?

Perhaps the answer is no.

I think a fundamental principle is at work here: some people want to express everything in notation and use nothing else.

Others want no special symbols at all, and in Mathematica that means FullForm. But working with that form is very tiring. That is probably why the syntax of languages like LISP seems so difficult: it is essentially the FullForm syntax of Mathematica.

Another possibility is to assign special notation to everything. Then you get something like APL, or parts of mathematical logic. Here is an example.
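To show what notation-free input looks like, here are a few small expressions next to their FullForm. The expressions are chosen only for illustration.

```wolfram
(* The same expressions with every operator written as a named function. *)
FullForm[x^2 + 1]       (* Plus[1, Power[x, 2]] *)
FullForm[a/b]           (* Times[a, Power[b, -1]] *)
FullForm[{x, Sin[y]}]   (* List[x, Sin[y]] *)
```

Even at this size the nested brackets are noticeably harder to scan than the infix versions, which is the fatigue described above.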

Pretty hard to read.

Here is another example, from Turing's original paper, containing the notation for the universal Turing machine. Again, it is not an example of the best notation.

It is also relatively unreadable.

The question is what lies between two such extremes as LISP and APL. I think this problem is very close to the one that arises with very short command names.

Take Unix, for example. Early versions of Unix looked great when there were a small number of short commands to type. But the system grew. After a while there were a large number of commands, each consisting of a few characters. Most mere mortals could not remember them, and everything began to look completely incomprehensible.

, , . . , . . But not more. , , .

, , " e " — , " t ", . , . MathWorld , — 13 500 , , [ , , , — . .].

, " e " — . , " a " . . , π — , θ, α, φ, μ, β . — Γ Δ.

Good. , . ?

, , . , " " . , AMS, .

StandardForm Mathematica , . , , . TraditionalForm . ,

Future

- 2500 , . , .

— . Mathematica , , , . , - ASCII [ Mathematica Unicode — . .].

, . ASCII, , , . , Mathematica -> ,

. , Mathematica .

. , Mathematica ., . , . # , , , , , , . . , , , . , , - .

Thank you very much.

Notes

, 60 19 . — a i . — — , , ,

a .

a .. , , - . — . , .

. , , — f , - .

, 20- 20 , - .

Mathematica . :

k[x_][y_] := x

s[x_][y_][z_]:= x[z][y[z]]

n , , , Nest[s[s[k[s]][k]],k[s[k][k]],n] , s[k[s]][s[k[s[k[s]]]][s[k[k]]]] , s[k[s]][k] , — s[k[s[s[k][k]]]][k] . .
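The two combinator definitions can be tried directly. The sketch below assumes the standard K rule, k[x][y] giving x, and applies the Nest expression that appears in the text to two otherwise undefined symbols f and x. The names two, f, x and a are introduced here for illustration.

```wolfram
ClearAll[s, k, f, x, a, two];

(* Standard definitions of the K and S combinators. *)
k[x_][y_] := x
s[x_][y_][z_] := x[z][y[z]]

(* s[k][k] behaves as the identity: s[k][k][a] -> k[a][k[a]] -> a *)
s[k][k][a]    (* a *)

(* The Nest expression from the text with n = 2, applied to f and then x,
   reduces to f applied twice. No variables appear in the combinator term. *)
two = Nest[s[s[k[s]][k]], k[s[k][k]], 2];
two[f][x]     (* f[f[x]] *)
```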

-, . , - . , . , , , x x .

, — — Mathematica . , , .

, . A New Kind of Science , , .

Mathematica 3 1100 , .

. . Times- , Courier, sans serif. Courier . , , , , .

Here's what we got:

fonts.wolfram.com, , , Mathematica .

[ Wolfram|Alpha , Wolfram Language, MathematicalFunctionData — . .].

, Mathematica .

functions.wolfram.com , .

, , , , , , . , , 50 000.

Mathematica , 1991 . , - .

Proof of

Mathematica . Theorema .

( f NAND ):

{f[f[a,a],f[a,a]]==a,f[a,f[b,f[b,b]]]==f[a,a], f[f[a,f[b,c]],f[a,f[b,c]]]==f[f[f[b,b],a],f[f[c,c],a]]}

, f[a,b]==f[b,a] :

(ab) Nand[a,b] . L == , A == , T == .
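As a sanity check, and not a substitute for a proof from the axioms, one can confirm that the three equations and the commutativity statement all hold when f is interpreted as Nand on truth values. The helper name toBoolean is hypothetical.

```wolfram
(* The three axioms from the text, with f as an uninterpreted binary operator. *)
axioms = {
   f[f[a, a], f[a, a]] == a,
   f[a, f[b, f[b, b]]] == f[a, a],
   f[f[a, f[b, c]], f[a, f[b, c]]] == f[f[f[b, b], a], f[f[c, c], a]]
   };

(* The statement to be derived from them. *)
theorem = f[a, b] == f[b, a];

(* Interpret f as Nand and == as logical equivalence. *)
toBoolean[eq_] := eq /. {f -> Nand, Equal -> Equivalent}

(* Each statement should be true for every assignment of truth values. *)
AllTrue[toBoolean /@ axioms, TautologyQ]   (* True *)
TautologyQ[toBoolean[theorem]]             (* True *)
```

This only shows that Nand is a model of the axioms. Deriving f[a,b]==f[b,a] from the axioms alone is the job of the proof itself.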

2500 , .

, , , NAND, NOR, XOR.

NAND -:

NAND, , . , :

MathWorld.

, , 17 , .

, (, AND OR, NAND). .

Wolfram info-russia@wolfram.com

Source: https://habr.com/ru/post/304502/
