Unity: how we built a house generator for the Asset Store, or a return to the Khrushchyovka

This article was prompted by another article about "Khrushchyovka" apartment blocks, which raised a topic that interested me: generating meshes programmatically in Unity.

This article is not intended for those who have worked with Unity for a long time; people familiar with its intricacies are unlikely to find anything new here. Complete beginners, on the other hand, may run into non-obvious "difficulties" and wonder why anything needs to be invented when everything is already in the editor. The most suitable audience is developers who already know how to do something in Unity but have not yet had to change meshes dynamically in the editor. Some readers may also benefit from our experience with the inspector.


I do not claim complete knowledge of the subject; I just want to describe, in my own words, how we solved some of the problems that came up while creating an asset.

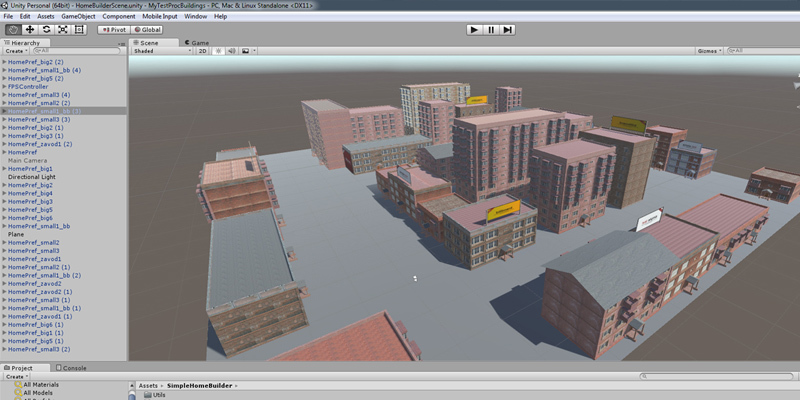

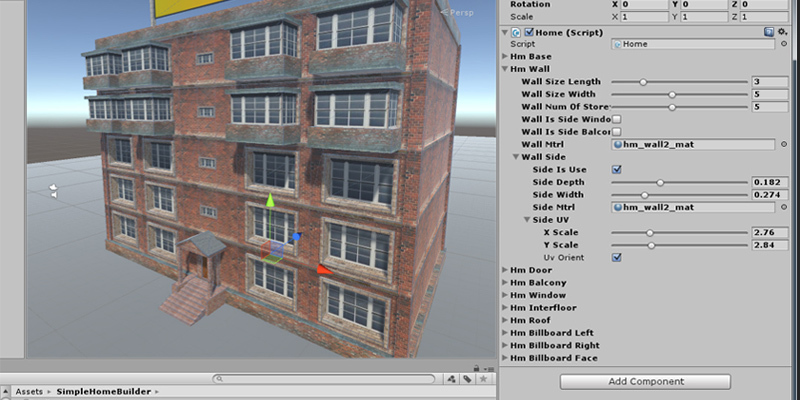

After reading the article mentioned above, I immediately realized that this was what our project needed. Originally we were going to create houses from exported fbx files. Of course, we did not copy the described approach one-to-one. We took the basic idea as a starting point and immediately set ourselves a task: editing and viewing buildings directly in the Unity editor, without switching to play mode. Following that idea, we managed to fit all the building settings into a single script, so the whole structure can be edited in one place in the object inspector.

A small digression

I'll start with some self-irony. Some time ago our small team began developing games for mobile platforms, and we set out with the ambitious goal of creating our own game engine. Based on my own development experience in other fields and on books about building game engines, we decided to write everything in pure C ( PureC ). Several key subsystems of the engine were implemented: an optimized memory manager; a graphics renderer; an object hierarchy based on the component approach ( publication on Habr ) for building composite models; Lua bindings for scripting the games; and the ability to store just about everything (including Lua scripts) in a local SQLite database, and so on. After spending quite a long time implementing all of the above and mastering the interfaces provided by OpenGL, we came to a disappointing conclusion: we could build a full-fledged engine, but it would take a very long time.

Switch to Unity

Naturally, while building our own engine we kept an eye on what the game industry was doing. We were very attracted by the Unreal Engine, but a minimum project size of 30 to 45 MB for mobile platforms cooled our enthusiasm at once, and we decided to look for other solutions. Shiva was a more or less suitable fit, but after a brief study of that engine we decided to keep looking.

Finally, we recently tried our hand at Unity. We partially mastered the C# syntax used in Unity, but it was quite hard to get used to the fact that, thanks to the garbage collector ( GC ), resources that are no longer needed do not always have to be freed by hand.

Selected topics for work

Before describing the work itself, I should mention the limitations. I started learning Unity with version 5, so all the examples should work in Unity 5; for the previous version 4, and even more so for version 3, I cannot give any guarantees. From my own experience, some of the tutorials and scripts written for version 4 that I came across ran on Unity 5 as they were, some had to be converted to the new version and then ran successfully, and some would not start until the command syntax was changed. I know nothing about backward compatibility between versions (a small addition: before publishing the asset, the scripts below were successfully tested on Unity 4.5.0).

To enrich the game visually, we decided to fill the background with various buildings, and I immediately wanted to see the approximate appearance of those buildings in the editor. To be able to "run" a script directly in the editor, place the [ExecuteInEditMode] attribute on the line before the class derived from MonoBehaviour. Create a MyTest.cs script in the project and modify it using the following template:

    using UnityEngine;

    [ExecuteInEditMode]
    public class MyTest : MonoBehaviour
    {
        // Fields and methods of the script go here.
    }

When any field is changed in the inspector, the script's Update() method (if implemented) is called. To keep this code out of the scripts built for the game, the method can be wrapped in a pair of #if UNITY_EDITOR ... #endif directives. This gives an approximate template for a script that can be modified directly in the component inspector:

    using UnityEngine;

    [ExecuteInEditMode]
    public class MyTest : MonoBehaviour
    {
        public int i = 10;

    #if UNITY_EDITOR
        // Compiled only for the editor; logs every edit-mode update.
        void Update()
        {
            Debug.Log("Update");
        }
    #endif
    }

Attach this script to any object in the scene and change the value of the i field in the inspector: "Update" will immediately appear in the console (more precisely, the count of Update messages will increase). The rest of the time the script "waits" for changes to its public fields.

Note that if the scene contains a large number of objects with this script, the scene will slow down noticeably. To avoid this, move the inspector change handler into a script placed in the special Editor folder. The contents of this folder are not included in the finished project. Let's create an Editor folder with a MyTestInspector.cs script in it:

    using UnityEngine;
    using UnityEditor;
    using System.Collections;

    [CustomEditor(typeof(MyTest))]
    public class MyTestInspector : Editor
    {
        // Called whenever the inspector for MyTest is drawn.
        public override void OnInspectorGUI()
        {
            // Draw the default inspector fields.
            DrawDefaultInspector();
            // Get the component being inspected.
            MyTest mt = target as MyTest;
            // Ask the script to refresh itself.
            mt.DoRefresh();
        }
    }

Let's look at the modified MyTest script:

    using UnityEngine;

    [ExecuteInEditMode]
    public class MyTest : MonoBehaviour
    {
        public int i = 10;

        public void DoRefresh()
        {
            Debug.Log("Update");
        }
    }

Setting up the inspector for more convenient work

After studying the official documentation and searching the web, we first settled on a variant that redrew the public properties in the inspector and reacted to their changes through corresponding methods in the script. Later we decided instead to split the editable source data into interrelated data structures that are easy to work with, so that we would not get lost among the roughly 130 settings for constructing a building that would otherwise be visible at once. After that, the need for our "own inspector" disappeared. However, we hope to return to this approach in the future when creating and editing components. If anyone is interested, I can give links: here and here.
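One way to react only to actual edits, instead of on every inspector repaint, is to use the return value of DrawDefaultInspector(). The sketch below assumes the MyTest script and its DoRefresh() method from the listing above; it is an illustration of the idea, not the inspector used in our asset.

```csharp
// Editor/MyTestInspector.cs (assumed to live in an Editor folder)
using UnityEditor;

[CustomEditor(typeof(MyTest))]
public class MyTestInspector : Editor
{
    public override void OnInspectorGUI()
    {
        // DrawDefaultInspector() returns true if a field was changed
        // during this GUI pass.
        bool changed = DrawDefaultInspector();

        if (changed)
        {
            // Refresh only when something was actually edited.
            MyTest mt = (MyTest)target;
            mt.DoRefresh();
        }
    }
}
```

With this check, simply selecting the object or repainting the inspector no longer calls DoRefresh(). Changes that do not go through the inspector GUI, such as an undo, may not pass this check and would need separate handling.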

Some subtleties of creating an interface

When a public variable of type int or float is declared in the usual way, like this:

    public int iterator = 2;
    public float dx = 0.5f;

it is displayed in the inspector as a plain edit field. If these values change often during work, it is terribly annoying to keep clicking into the fields, typing numbers that differ only slightly from each other, and then looking at the result. A different approach is much more convenient: when declaring a field, specify the allowed range of input values on the line immediately above the variable:

    [Range(-10, 10)]
    public int iterator = 2;
    [Range(0.0f, 1.0f)]
    public float dx = 0.5f;

With these attributes in front of the variables, it is enough to move a slider in the inspector to change the value smoothly or abruptly.

To avoid showing all the script's fields at once (and we have about 130 of them), closely related values can be grouped into a class, and that class can then be declared as a public field of the script. For the fields of such class instances to be saved and shown in the inspector, place the [System.Serializable] attribute before the class declaration (again, on the line above). We end up with:

    [System.Serializable]
    public class LeftRightSide
    {
        [Range(0, 100)]
        public int leftSide = 3;
        [Range(0, 100)]
        public int rightSide = 20;
    }

After you declare this class as a public field in your script, a collapsible block of fields appears in the inspector for editing the contents of the class. Classes can be nested inside each other hierarchically, limited only by common sense. This simple technique, first, groups related data together and, second, simplifies navigation in the inspector. Here is the entire script:

    using UnityEngine;

    [ExecuteInEditMode]
    public class MyTest : MonoBehaviour
    {
        public LeftRightSide leftRight = new LeftRightSide();

        public void DoRefresh()
        {
            Debug.Log("Update");
        }
    }

    [System.Serializable]
    public class LeftRightSide
    {
        [Range(0, 100)]
        public int leftSide;
        [Range(0, 100)]
        public int rightSide;
    }

A mesh that can be changed directly in the editor

Preparing components for drawing a mesh. To be able to edit and show a mesh, you can use the editor's own features: create an empty object in the scene, then add a MeshFilter and a MeshRenderer to it through the menu items Component -> Mesh -> Mesh Filter and Component -> Mesh -> Mesh Renderer respectively. The first component is responsible for the "internal" geometry of the mesh, the second for drawing the mesh on the screen. There is another, more reliable way to add these components: have the script itself require them, so that they are added if they are missing on the object the script is attached to. To do this, write the attribute [RequireComponent (typeof (MeshFilter), typeof (MeshRenderer))] before the declaration of the class derived from MonoBehaviour. A sample script skeleton:

    using UnityEngine;
    using System.Collections;

    [RequireComponent(typeof(MeshFilter), typeof(MeshRenderer))]
    [ExecuteInEditMode]
    public class MyTest : MonoBehaviour
    {
        //...
    }

A bit of theory (those who know how to work with meshes in Unity can skip this section)

To display anything in an object's mesh, the mesh has to be filled with the required data. The bare minimum is a set of vertices and the so-called faces (this term has synonyms: triangles, facets, an array of indices), that is, the rules for connecting vertices to each other. In addition, we will look at drawing textures, which requires uv coordinates, and briefly at normals. A caveat up front: I consider only the "classic" operation of shaders here, leaving aside particle systems, wireframe (grid) rendering and other modes. In other words, we will look at drawing by triangles based on vertices and the rules for connecting them. A detailed review of this whole topic (meshes, shaders, vertex connectivity, faces, normals, texture coordinates, vertex buffers, matrices and so on) can and should fill several books, so I will confine myself to the minimum of information needed to build dynamic meshes displayed directly in the editor.

Once you know how to draw a triangle, you can draw an arbitrarily complex shape by combining triangles. For example, two triangles are enough for a square, a rhombus, a parallelogram, a trapezoid, or any quadrilateral. More complex shapes need more triangles, but the principle does not change. So we will treat the triangle as the minimal primitive that is sufficient for drawing everything else.

A brief word on shaders: shaders are specially written and compiled programs that draw triangles according to certain rules. In reality things are somewhat more complicated, but for brevity we will accept this interpretation. I will only mention that when a shader draws a triangle, it knows nothing about the neighboring triangles.

Computer memory is linear: wherever a large or composite block of data (a structure, a class, an array and so on) is stored, its contents are laid out one after another, and access to its elements is also best organized linearly. A mesh is a complex structure that can hold fairly large volumes of data of several different kinds, each kind uniform in type, so this data is also best managed through linear access. In practice this means creating arrays, filling them with the appropriate data, and then "attaching" these arrays to the mesh.

I will list some data types we need for building meshes:

Vertices (vertices): an array of Vector3 values. Vector3 is a structure containing three consecutive float values, which are simply the spatial coordinates of a single vertex along the x, y and z axes.

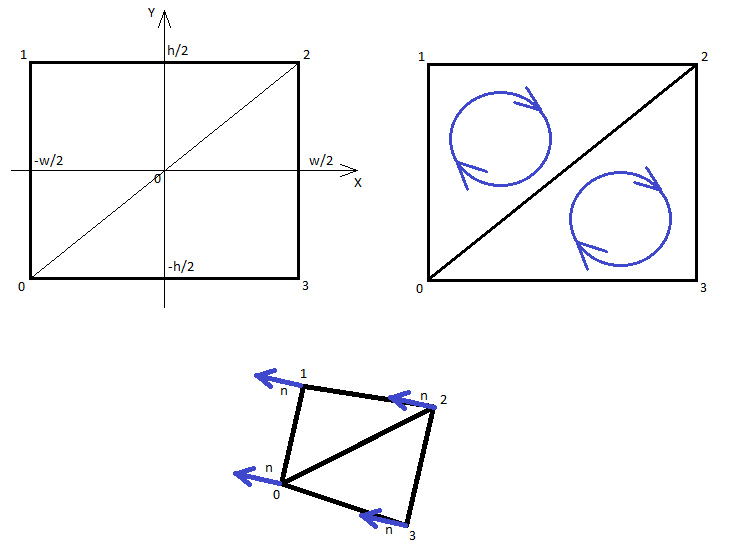

Vertex indices or faces (triangles): an array of int values, in which the integers are grouped in threes, one group per triangle. Let's look at this in more detail. To describe a single triangle (the minimal drawable primitive), you need to specify 3 vertex indices. The first three numbers define the first triangle, the next three define the second, and so on. The order in which the vertices of a triangle are listed also matters. If you list the vertices (that is, the vertex indices) clockwise, the triangle "faces you" and you see it; otherwise it is not drawn and you do not see it. If you then walk around to the opposite side, it becomes visible, because from the new viewpoint the order of the vertices is reversed and becomes clockwise. As you might guess, the total length of the array equals the number of triangles multiplied by three.
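The effect of vertex order can be sketched with a small helper that reverses the order of every triangle in an index array. The class and method names (WindingSketch, FlipWinding) are hypothetical and are not part of Unity or of our asset.

```csharp
public static class WindingSketch
{
    // Returns a copy of the index array in which every triangle
    // (a, b, c) becomes (a, c, b). Assumes the array length is a
    // multiple of 3, as required for triangle index arrays.
    public static int[] FlipWinding(int[] triangles)
    {
        int[] flipped = new int[triangles.Length];
        for (int t = 0; t < triangles.Length; t += 3)
        {
            flipped[t] = triangles[t];
            flipped[t + 1] = triangles[t + 2];
            flipped[t + 2] = triangles[t + 1];
        }
        return flipped;
    }
}
```

Assigning the flipped array to a mesh, for example mesh.triangles = WindingSketch.FlipWinding(mesh.triangles);, should make the shape visible from the opposite side, since the vertices are now listed clockwise when seen from there.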

Normals (normals): an array of Vector3 values holding the "perpendiculars" at the vertices; the array of normals has the same length as the array of vertices. Each normal vector has unit length, so in effect it only encodes the direction in which the vertex "faces". Why are normals needed? They are needed to compute the lighting of a triangle correctly: knowing the angles between the normal, the ray from the light source and the observer's eye, we can calculate the illumination. Normals are defined not per triangle, as you might expect, but per vertex. If a normal were set only per triangle, the shader would not know how the normal should change from one triangle to the next (because the shader knows nothing about neighboring triangles), and the drawn figure would look lit but strongly faceted. When processing each triangle, the shader interpolates certain parameters smoothly between its vertices, including the vertex normals. This gives a smooth change in illumination even within a single triangle. If the normals at the vertices of a triangle diverge, the triangle looks "convex"; if they converge, it looks "concave"; if they are parallel, it looks flat. Neighboring triangles are built on the same principle: their shared vertices have the same normals, so if the triangles meet at different but not too different angles, the transition between them is smooth and the border between them is barely noticeable.
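As an illustration of per-vertex normals, the sketch below averages the normals of all triangles that share a vertex. The names NormalsSketch and ComputeVertexNormals are hypothetical; Unity also offers a built-in Mesh.RecalculateNormals() for the same purpose. The sketch assumes clockwise vertex order, as described above.

```csharp
using UnityEngine;

public static class NormalsSketch
{
    // Computes one unit normal per vertex by summing the (unnormalized)
    // normals of every triangle that uses the vertex, then normalizing.
    public static Vector3[] ComputeVertexNormals(Vector3[] vertices, int[] triangles)
    {
        Vector3[] normals = new Vector3[vertices.Length]; // starts as zero vectors

        for (int t = 0; t < triangles.Length; t += 3)
        {
            int i0 = triangles[t];
            int i1 = triangles[t + 1];
            int i2 = triangles[t + 2];

            // The cross product of two edges is perpendicular to the triangle.
            // Its length is proportional to the triangle's area, so larger
            // triangles contribute more to the sum.
            Vector3 faceNormal = Vector3.Cross(
                vertices[i1] - vertices[i0],
                vertices[i2] - vertices[i0]);

            normals[i0] += faceNormal;
            normals[i1] += faceNormal;
            normals[i2] += faceNormal;
        }

        for (int i = 0; i < normals.Length; i++)
        {
            normals[i] = normals[i].normalized;
        }
        return normals;
    }
}
```

For a flat shape all the summed normals are parallel, so the surface is lit evenly; on a curved shape the averaged normals differ from vertex to vertex, which produces the smooth transitions between triangles described above.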

UV coordinates (uv): an array of Vector2 values. Vector2 is a structure containing two float variables, which are the x and y coordinates "inside" the texture. This needs a bit more explanation. The lower left corner of the texture corresponds to the uv coordinate (0, 0), the upper left to (0, 1), the upper right to (1, 1) and the lower right to (1, 0). If you take coordinates in the range [0 ... 1], the texture is drawn partially or completely, depending on the values you specify. You can also take values outside this range, and then the texture is repeated as many times as you specify: for example, with the uv coordinate (2, 3.5) the texture is repeated 2 times along the x axis and 3.5 times along the y axis. For the texture to repeat, the corresponding flags must be set; in many cases they are set by default. The array of uv coordinates has the same length as the vertex array, that is, each vertex has its own uv texture coordinate.
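The repetition described above can be sketched as a helper that builds the four uv coordinates of a rectangle for a given number of repeats. UvSketch, BuildQuadUV, tilesX and tilesY are hypothetical names; the corner order (lower left, upper left, upper right, lower right) is an assumption that has to match the order of the vertices in the vertex array.

```csharp
using UnityEngine;

public static class UvSketch
{
    // Returns uv coordinates for a rectangle whose vertices are listed as
    // lower left, upper left, upper right, lower right.
    // tilesX and tilesY are the number of texture repeats along each axis.
    public static Vector2[] BuildQuadUV(float tilesX, float tilesY)
    {
        return new Vector2[]
        {
            new Vector2(0, 0),           // lower left
            new Vector2(0, tilesY),      // upper left
            new Vector2(tilesX, tilesY), // upper right
            new Vector2(tilesX, 0)       // lower right
        };
    }
}
```

Calling UvSketch.BuildQuadUV(2f, 3.5f) gives the (2, 3.5) case from the text. For the repeats to show, the texture's wrap mode needs to be set to repeat, for example through the TextureWrapMode.Repeat value of the texture's wrapMode property.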

To summarize: to create and draw a mesh, you need to create arrays of vertices, vertex indices, uv coordinates and normals.

Look at the figure: it shows schematically how the vertices of the rectangle are placed relative to the origin. The numbers near the corners of the rectangle are the vertex indices, that is, the positions of the vertices in the vertex array. When building any shape, I recommend creating it with its "geometric center" at the origin. This is useful if you need to rotate and/or scale the shape with a predictable result. After creating the mesh, you can easily move all its vertices in the direction you want.
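Moving a shape built around the origin comes down to adding the same offset to every vertex. A minimal sketch, with the hypothetical names MeshMoveSketch and Translate:

```csharp
using UnityEngine;

public static class MeshMoveSketch
{
    // Returns a new array in which every vertex is shifted by the offset.
    // The input array is left unchanged.
    public static Vector3[] Translate(Vector3[] vertices, Vector3 offset)
    {
        Vector3[] moved = new Vector3[vertices.Length];
        for (int i = 0; i < vertices.Length; i++)
        {
            moved[i] = vertices[i] + offset;
        }
        return moved;
    }
}
```

Rotating or scaling the centered vertices first and translating afterwards keeps the result predictable, because rotation and scaling then happen around the shape's own center.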

Let's start by creating a mesh with vertices and indices only. Change the script above as follows:

MyTest.cs file

    using UnityEngine;
    using System.Collections;

    [RequireComponent(typeof(MeshFilter), typeof(MeshRenderer))]
    [ExecuteInEditMode]
    public class MyTest : MonoBehaviour
    {
        // Banner settings shown in the inspector
        public Banner banner = new Banner();

        void Start()
        {
        }

        // Rebuilds the mesh from the current settings
        public void DoRefresh()
        {
            // One triangle needs 3 vertices
            // and 3 * 1 = 3 vertex indices
            Vector3[] v = new Vector3[3];
            int[] f = new int[3];
            // Temporary mesh
            Mesh tmpMesh;
            // Half the width and half the height of the banner
            float w2 = banner.bWidth / 2;
            float h2 = banner.bHeight / 2;
            // The vertices lie in one plane, so z is 0
            v[0] = new Vector3(-w2, -h2, 0); // vertex 0
            v[1] = new Vector3(-w2, h2, 0);  // vertex 1
            v[2] = new Vector3(w2, h2, 0);   // vertex 2
            // Vertex indices of the triangle, listed clockwise
            f[0] = 0;
            f[1] = 1;
            f[2] = 2;
            // Create the mesh
            tmpMesh = new Mesh();
            // Assign the vertices
            tmpMesh.vertices = v;
            // Assign the triangle indices
            tmpMesh.triangles = f;
            // Hand the mesh to the MeshFilter so that it gets drawn
            GetComponent<MeshFilter>().mesh = tmpMesh;
        }
    }

    [System.Serializable]
    public class Banner
    {
        [Range(0.0f, 1.0f)]
        public float bWidth = 0.5f;
        [Range(0.0f, 1.0f)]
        public float bHeight = 0.5f;
    }

Create an "empty" object in the scene (GameObject -> Create Empty menu) and attach the script to it. You should see a pink triangle; if the triangle is not visible, rotate the camera around the object. Try changing the width and height of the banner in the inspector: the triangle should change immediately. Now let's make a rectangle. To do this, change the contents of the DoRefresh () method to the following:

MyTest.cs

    public void DoRefresh()
    {
        // A rectangle needs 4 vertices.
        // The first triangle uses vertices 0, 1 and 2,
        // the second uses vertices 0, 2 and 3.
        // There are 2 triangles, so the index array
        // holds 3 * 2 = 6 entries.
        Vector3[] v = new Vector3[4];
        int[] f = new int[6];
        Mesh tmpMesh;
        float w2 = banner.bWidth / 2;
        float h2 = banner.bHeight / 2;
        v[0] = new Vector3(-w2, -h2, 0);
        v[1] = new Vector3(-w2, h2, 0);
        v[2] = new Vector3(w2, h2, 0);
        v[3] = new Vector3(w2, -h2, 0); // vertex 3
        // Vertex indices of both triangles, listed clockwise
        // First triangle
        f[0] = 0;
        f[1] = 1;
        f[2] = 2;
        // Second triangle
        f[3] = 0;
        f[4] = 2;
        f[5] = 3;
        tmpMesh = new Mesh();
        tmpMesh.vertices = v;
        tmpMesh.triangles = f;
        GetComponent<MeshFilter>().mesh = tmpMesh;
    }

After you edit the script and switch back to Unity, the triangle is "completed" into a rectangle. Now let's change the color of the rectangle. The script has to be changed in 2 places. At the very top of the class, where the public Banner field is declared, add the line public Material bannerMaterial; that is:

    public Banner banner = new Banner();
    // Material used to draw the banner
    public Material bannerMaterial;

And at the very end of the DoRefresh () method, add the line GetComponent <MeshRenderer> () .material = bannerMaterial; that is:

    GetComponent<MeshFilter>().mesh = tmpMesh;
    // Assign the material chosen in the inspector
    GetComponent<MeshRenderer>().material = bannerMaterial;

After this, a field of type Material appears in the inspector, where you can assign a material. When you change its value, the rectangle reacts immediately and is "repainted", but it is still filled with a single color (in Unity 4.5.0, texture coordinates). This is because the mesh was not given any uv coordinates. Let's fix that by replacing the DoRefresh () method once more with the following text:

MyTest.cs

    public void DoRefresh()
    {
        Vector3[] v = new Vector3[4];
        int[] f = new int[6];
        // One uv coordinate per vertex, 4 in total
        Vector2[] uv = new Vector2[4];
        Mesh tmpMesh;
        float w2 = banner.bWidth / 2;
        float h2 = banner.bHeight / 2;
        v[0] = new Vector3(-w2, -h2, 0);
        v[1] = new Vector3(-w2, h2, 0);
        v[2] = new Vector3(w2, h2, 0);
        v[3] = new Vector3(w2, -h2, 0);
        f[0] = 0;
        f[1] = 1;
        f[2] = 2;
        f[3] = 0;
        f[4] = 2;
        f[5] = 3;
        // uv coordinates of the texture corners
        uv[0] = new Vector2(0, 0); // vertex 0, lower left
        uv[1] = new Vector2(0, 1); // vertex 1, upper left
        uv[2] = new Vector2(1, 1); // vertex 2, upper right
        uv[3] = new Vector2(1, 0); // vertex 3, lower right
        tmpMesh = new Mesh();
        tmpMesh.vertices = v;
        tmpMesh.triangles = f;
        tmpMesh.uv = uv; // assign the uv coordinates
        GetComponent<MeshFilter>().mesh = tmpMesh;
        GetComponent<MeshRenderer>().material = bannerMaterial;
    }

Now, if the material has a picture attached to it, the picture is stretched across the entire rectangle. But it still lacks realism. To add realism, lighting has to be taken into account, and for that we need to create normals and add them to the mesh. In our particular case this is simple. The mesh is drawn in the XY plane, that is, perpendicular to the Z axis, so all that remains is to determine the sign of the z coordinate of the normal. The normals must point from the vertices into the half-space from which the front side of the triangle is visible. Edit the DoRefresh () method again:

MyTest.cs

    public void DoRefresh()
    {
        Vector3[] v = new Vector3[4];
        int[] f = new int[6];
        Vector2[] uv = new Vector2[4];
        // One normal per vertex
        Vector3[] n = new Vector3[4];
        Mesh tmpMesh;
        float w2 = banner.bWidth / 2;
        float h2 = banner.bHeight / 2;
        v[0] = new Vector3(-w2, -h2, 0);
        v[1] = new Vector3(-w2, h2, 0);
        v[2] = new Vector3(w2, h2, 0);
        v[3] = new Vector3(w2, -h2, 0);
        f[0] = 0;
        f[1] = 1;
        f[2] = 2;
        f[3] = 0;
        f[4] = 2;
        f[5] = 3;
        uv[0] = new Vector2(0, 0);
        uv[1] = new Vector2(0, 1);
        uv[2] = new Vector2(1, 1);
        uv[3] = new Vector2(1, 0);
        // All normals are the same: they point along the Z axis,
        // toward the side from which the rectangle is visible
        for (int i = 0; i < 4; i++)
        {
            n[i] = new Vector3(0, 0, -1);
        }
        tmpMesh = new Mesh();
        tmpMesh.vertices = v;
        tmpMesh.triangles = f;
        tmpMesh.uv = uv;
        tmpMesh.normals = n; // assign the normals
        GetComponent<MeshFilter>().mesh = tmpMesh;
        GetComponent<MeshRenderer>().material = bannerMaterial;
    }

Now, if you change the intensity or direction of the light source, you can immediately see the result on the rectangle.
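Creating a new Mesh on every refresh can leave unused mesh objects behind in the editor. One possible variation, sketched below, keeps a single Mesh and refills it each time. The class name ReusedBannerMesh and the field cachedMesh are hypothetical, and the sketch is standalone rather than a drop-in replacement for MyTest; it also lets Unity compute the normals with Mesh.RecalculateNormals() instead of filling them by hand.

```csharp
using UnityEngine;

[RequireComponent(typeof(MeshFilter), typeof(MeshRenderer))]
[ExecuteInEditMode]
public class ReusedBannerMesh : MonoBehaviour
{
    [Range(0.0f, 1.0f)]
    public float width = 0.5f;
    [Range(0.0f, 1.0f)]
    public float height = 0.5f;

    // Kept between refreshes so that a new Mesh is not created each time.
    private Mesh cachedMesh;

    public void DoRefresh()
    {
        if (cachedMesh == null)
        {
            cachedMesh = new Mesh();
            cachedMesh.name = "Banner (generated)";
        }

        float w2 = width / 2;
        float h2 = height / 2;

        // Clear() removes the old vertex and index data before refilling.
        cachedMesh.Clear();
        cachedMesh.vertices = new Vector3[]
        {
            new Vector3(-w2, -h2, 0),
            new Vector3(-w2, h2, 0),
            new Vector3(w2, h2, 0),
            new Vector3(w2, -h2, 0)
        };
        cachedMesh.uv = new Vector2[]
        {
            new Vector2(0, 0),
            new Vector2(0, 1),
            new Vector2(1, 1),
            new Vector2(1, 0)
        };
        // Two triangles, both listed clockwise.
        cachedMesh.triangles = new int[] { 0, 1, 2, 0, 2, 3 };
        // Let Unity derive the normals from the triangles.
        cachedMesh.RecalculateNormals();

        // sharedMesh is assigned here instead of mesh, on the assumption
        // that this suits edit mode better.
        GetComponent<MeshFilter>().sharedMesh = cachedMesh;
    }
}
```

Note that the vertices are assigned before the triangles: the index array refers to positions in the vertex array, so those vertices have to exist first.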

With that I will take my leave; the article has turned out to be quite long. Please send any errors and inaccuracies you notice by private message.

PS: I am not much of an artist, so the schematic drawing did not turn out entirely clear. Also, I cannot publish the full source code for constructing the buildings, as the project is commercial.

Source: https://habr.com/ru/post/304466/
