Backup Trends - The Golden Age of Floppy Disks and the Modern Look at Network Backup

A film archive, almost the modern era

Historically, the first backup methods were fairly simple: documents were either copied directly (which, for technical documents, was enough to preserve the data in them) or transferred to film. Black-and-white photographic film can be stored for up to 130 years without significant degradation, and multiple copies of a document can be printed from it.

Naturally, once it became possible to digitize documents, almost everything changed at once. I would like to talk about that bright period, from the early 90s to the present, during which the technology has changed a great deal. Let's start with the fact that almost all digital media are extremely short-lived and unreliable.


The problem of backing up data became acute, perhaps, only in the last few centuries. Of course, Egypt, Ancient Greece, and some ancient states on the territory of modern China had paper or papyrus libraries, but a backup industry as such did not exist.

Backups became necessary on an industrial scale at the very beginning of the twentieth century, when really large volumes of data had accumulated. The first mass-market digital storage media were floppy disks: first eight-inch ones (only a few old-timers remember these hefty envelopes with single-sided disks), then the much more compact 5.25-inch ones.

Let me remind you that a floppy disk was an envelope with anti-friction liners, inside which sat a plastic disk with a magnetic coating. In essence, it was a development of magnetic tape technology, with the tape replaced by a disk. A floppy disk was read by a drive head that actually slid along the disk surface. Floppy disks could fail abruptly from physical wear as they were used. The more you read or wrote, the more scratches the disk picked up: from a badly calibrated drive head, from the disk warping during storage or transport and starting to bump into the head, or simply from spinning inside the envelope. Any speck of dust that got inside the envelope became a source of scratches, not critical for the data but still unpleasant. The second problem was storage: diskettes demagnetized. Some lasted decades, but more often they failed within 2-3 years.

Before hard disks arrived, computers booted from a floppy disk as the main system disk, and it was normal practice to fit two disk drives in the system unit: one for the OS diskette and a second for working data. If the software was large, you had to play "insert disk 2". For example, looking ahead, our backup recovery system fit on four 3.5-inch floppy disks of 1.44 megabytes each, which had to be inserted one by one as it loaded.

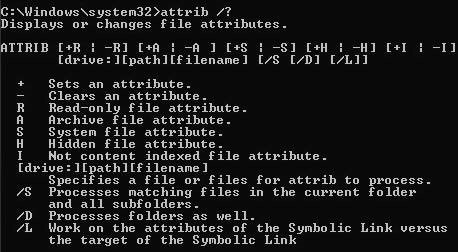

An important cultural point. Note that even in "home" DOS a file had four attributes: hidden, read-only, system, and archive. Read-only speaks for itself; hidden kept the user from stumbling on the file by accident; system was a combination of read-only and hidden plus special warnings from the OS when you touched the file; and archive was the backup flag. DOS set the archive attribute whenever a file was created or changed, and the backup tool cleared it once the file had been copied. So at the end of the week, only the files with the attribute set were copied to a floppy again.
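The weekly routine around the archive attribute can be sketched as a toy simulation. This is only an illustration: `DosFile` and `weekly_backup` are hypothetical names, and the model assumes the DOS convention that the attribute is set on every change and cleared by the backup tool.

```python
from dataclasses import dataclass


@dataclass
class DosFile:
    """Toy model of a DOS file: its contents plus the archive attribute."""
    data: bytes
    archive: bool = True  # a new file starts with the bit set (needs backup)

    def write(self, data: bytes) -> None:
        self.data = data
        self.archive = True  # any change marks the file as not yet backed up


def weekly_backup(disk: dict, floppy: dict) -> list:
    """Copy every file whose archive bit is set, then clear the bit."""
    copied = []
    for name, f in sorted(disk.items()):
        if f.archive:
            floppy[name] = f.data
            f.archive = False
            copied.append(name)
    return copied


disk = {"REPORT.TXT": DosFile(b"v1"), "GAME.EXE": DosFile(b"binary")}
floppy = {}

first = weekly_backup(disk, floppy)   # both files are new, so both are copied
disk["REPORT.TXT"].write(b"v2")       # only this file changes during the week
second = weekly_backup(disk, floppy)  # only the changed file is copied again
```

The second run touches a single file, which is exactly why the flag mattered when every copied kilobyte meant floppy space and time.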

The usual capacity of a 5.25-inch floppy disk was first 180 kilobytes, then the "people's" 360 kilobytes, and then 1.2 megabytes. The disk itself could in fact hold more: for example, a 360-kilobyte diskette could store more than 500 kilobytes. The folk life hack looked like this: take a 360-kilobyte diskette and format it as 1.2 megabytes. Then carefully check it with the same "ScanDisk" or "Disk

Then a rather successful pairing of a small hard disk and floppy disks appeared. The 20- and 50-megabyte HDDs common in 386-series personal computers seemed simply infinite at the time. Backing them up entirely to diskettes was quite difficult, so only key data was saved.

A little before this period, non-critical data was widely stored on videotape, that is, on ordinary video cassettes. A special device was used that could write to the magnetic tape and correct errors. Since the read error rate was simply shocking, a rather high level of redundancy was applied. In fact, it was on analog storage technologies that data recovery algorithms were battle-tested. The most common approach was to write the data, using some development of the Hamming code, in N segments such that if any one segment was damaged, it could be restored from the remaining N-1. You almost certainly know this technology from the RAID levels.
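The "lose any one segment, rebuild it from the rest" property is easiest to see with plain XOR parity, the scheme familiar from parity-based RAID. Note that this is a simpler stand-in, not a Hamming code and not the actual format used by tape devices; the function names are illustrative.

```python
from functools import reduce
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """XOR equally sized blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def add_parity(data_blocks: List[bytes]) -> List[bytes]:
    """Return the data segments followed by one parity segment."""
    return data_blocks + [xor_blocks(data_blocks)]


def recover(segments: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild the single missing segment (marked None) from the survivors."""
    missing = [i for i, s in enumerate(segments) if s is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can restore only one lost segment")
    result = list(segments)
    if missing:
        survivors = [s for s in segments if s is not None]
        result[missing[0]] = xor_blocks(survivors)
    return result


stored = add_parity([b"BACK", b"UP!!", b"DATA"])   # N = 4 segments in total
damaged = [stored[0], None, stored[2], stored[3]]  # one segment is unreadable
restored = recover(damaged)
```

Because XOR is its own inverse, the XOR of the surviving segments equals the lost one, whichever segment it was. The price is one extra segment of storage, and a second simultaneous loss is unrecoverable.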

With the advent of 3.5-inch floppy disks (the ones in a hard case), it became possible to worry a little less about data disappearing in storage (not during reading or writing, but specifically in storage or transport). The rigid case protected the disk well from accidental damage. Interestingly, the tax authorities gave this medium a long life in Russia: for a very long time they accepted digital reports only on such diskettes. Perhaps somewhere they still do. That is why diskettes were sold even in underground pedestrian crossings, and at a high price.

In the same period, programs like DoubleSpace (later DriveSpace) became widespread in the home segment: what we would now call a disk driver with on-the-fly data compression. All data arriving for writing was compressed first, and all data being read was decompressed first. All of this was transparent to the user. It turned out that you could get 70 megabytes out of a 50-megabyte HDD. Users liked it. The natural problem was that if the disk was damaged, quite often everything was lost, not just one part of it. It was probably here, as far as backing up important data goes, that an important shift happened in the minds of ordinary users.
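The "compress on write, decompress on read" idea can be shown with a toy in-memory disk. This is a hedged sketch: `CompressedDisk` is a hypothetical class, and `zlib` is only a stand-in for whatever compression DoubleSpace actually used.

```python
import zlib


class CompressedDisk:
    """Toy "disk" that compresses on write and decompresses on read."""

    def __init__(self) -> None:
        self._blocks = {}  # file name -> compressed bytes

    def write(self, name: str, data: bytes) -> None:
        # The caller hands over plain data; compression happens behind the scenes.
        self._blocks[name] = zlib.compress(data)

    def read(self, name: str) -> bytes:
        # The caller gets plain data back and never sees the compressed form.
        return zlib.decompress(self._blocks[name])

    def used_bytes(self) -> int:
        """Space actually occupied by the compressed data."""
        return sum(len(block) for block in self._blocks.values())


disk = CompressedDisk()
text = b"quarterly report, quarterly report, quarterly report\n" * 200
disk.write("REPORT.TXT", text)
roundtrip = disk.read("REPORT.TXT")
```

The gain depends entirely on how repetitive the data is, which is why the "70 megabytes from 50" figure was an average rather than a guarantee. The sketch also hints at the fragility: the stored bytes are meaningless until decompressed, so damage to the compressed form costs more than the damaged bytes themselves.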

Why did I bring up this class of software? Because later, in modern times, almost all software and hardware systems for data storage or backup would come to use some form of storage virtualization. DoubleSpace was one of the simplest, oldest, and at the same time most widespread variants. Later came deduplication, decoupling from hardware, and hyperconvergence, but at that point it was just a new way of working with the disk.

It was assumed that magnetic recording technology would keep developing. Zip disks appeared. People were a little wary of them: it looks like a floppy disk, yet it can apparently hold a whole 100 megabytes. Unfortunately (or fortunately), they did not last long. At that time the Japanese were confidently solving the problem of getting their favorite classical music into a player, and magnetic media did not fit the bill. The first CDs appeared.

The happy owners of the first drives very quickly discovered a bug in them: they could read, but they could not write. With the advent of CD writers, the whole idea of backup changed dramatically. Once the rock album “CD-R” by the band “750 megabytes” made it into the charts, backups became quite simple. Fifteen minutes a week to save a really large amount of data: that was awesome. Many people had entire libraries of such discs.

Unfortunately, they also proved short-lived. R blanks (write-once) lasted longer, while RW discs (rewritable), especially the widespread ones of mediocre quality, “erased themselves” in 2-3 years even when kept in a cool, dry place. So they were not suitable for backup, and data centers continued to use good old magnetic tape.

Mirrored RAID arrays became widespread.

Somewhere around this point, HDDs became cheap enough that storage systems could be built on them. Of course, the technologies, approaches, methodology, and basic algorithms had been in development for a long time, but it was during the search for new storage media (betting on optical discs and flash memory, dreaming about biological media) that reliable and durable things had to be built from unreliable material. That, by the way, is how the whole history of humanity works.

Hence the massive use of the ideas behind the Hamming code, storage with multiple duplication of disk subsystems, and the distribution of data across them. Good old archivers with data protection features were dusted off, patents on data transmission over noisy channels were reused, and so we arrived at what we have today. In most modern high-end storage systems in data centers, you can swing a crowbar with abandon and the data will most likely survive in full.

The emergence and mass adoption of flash memory finally changed the mindset: no one regarded a storage medium as something permanent anymore. It was now about constantly migrating the data, refreshing it, and rewriting it.

We ourselves arrived at this period within a very clear paradigm. The first thing we started selling was a product with a friendly GUI (a rarity for system utilities in the early 2000s) that let an ordinary end user (not an admin) make a backup quickly, recover from it, and get into the habit of copying important data. It required snapshot technology, a lot of work with the disk at an almost physical level, and many OS-specific features. Backups went to external media or neighboring hard drives, and in general everything was predictable. Here is a little more detail from one of our first employees.

The fundamental change began in the corporate segment: backups had to go to the network. Why corporate? Because for those customers the idea of a geo-distributed data center was the norm. Only later, after the technologies had been proven there, did we offer such capabilities to home users. At first, the home user did not understand at all why any of this was needed, and we were bracing for a hard slog promoting the idea of copying to the cloud.

However, the idea spread quickly enough, and we started to see a sharp increase in the load on storage capacity in remote data centers. We had to change the architecture several times, revise our approaches, and work out the whole story of optimal (and at the same time economical) storage hardware. Echoes of this story are in a post about our data centers and their support.

Obviously, the next idea, which follows directly from the current trends of virtualization and data distribution, is decoupling data from home users' devices. They are already perfectly familiar with how a backup from a phone can be rolled onto a new handset (and everything will be exactly the same), with viewing their files on a business trip by accessing backups through a web agent, and with using “Yandex.Disk” or "Dropbox", and in general they are ready to hand data over to the network fairly freely. Connectivity has improved too: apart from Kamchatka, which hangs on a satellite link, the Internet in Russia is in decent shape almost everywhere. And thanks to the spread of 3G and LTE, a decent connection is available almost everywhere on the planet.

Clearly, one can dig into the paradigms of data storage and the history of backups for a long time. If you're interested, tell me, and I will continue a little later. In the meantime, the most important trend: from a boring but important hygiene procedure, backup has turned into something automatic (you came home with a tablet and everything was saved) and taken for granted. And everything points to backup as such soon disappearing. You will not need a separate backup copy as a distinct entity; most likely, ordinary data storage will maintain enough redundancy at a low level that, in the user's mind, there is no distinction between the data and some separate copy of it.

We are still moving toward this in small steps. In the new release, for example, there is local backup for mobile phones, plus we have just made it possible to choose the data center that stores an open or encrypted copy, for example, to keep the data in a chosen country.

If you're interested

Then take a look at the Acronis True Image 2017 beta

Source: https://habr.com/ru/post/304438/
