Select query travel through Postgres internals

Only a few days left until the PG Day'16 Russia conference, the schedule can be viewed on our website. We are working hard, but nevertheless we manage to prepare for you translations of the most interesting materials about PostgreSQL. Today we bring to your attention the translation of Pat Shaughnessy 's article on the behavior of the Select query.

Preparing for this presentation in the summer, I decided to study some parts of the PostgreSQL source code in C. I ran a very simple select query and watched what Postgres does with it using the LLDB debugger C. How did Postgres understand my query? How did he find the data I was looking for?

')

This post is an informal journal of my journey through the insides of PostgreSQL. I will describe the path I traveled and what I saw in the process. I use a series of simple conceptual diagrams to explain how Postgres fulfilled my request. In case you understand C, I will also leave you some landmarks and pointers that you can search if you suddenly decide to delve into the insides of the Postgres.

The source code for PostgreSQL delighted me. It turned out to be clean, well documented and easy to understand. Find out for yourself how Postgres works from the inside by joining me on a journey to the depths of the tool that you use every day.

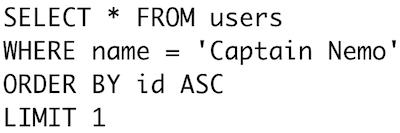

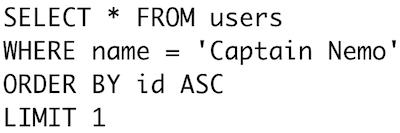

Here is an example request from the first half of my presentation . We will follow Postgres while he searches for Captain Nemo:

Finding one single name in a text column should be easy enough, right? We will hold tight to this select query, exploring the insides of the Postgres, as deep-sea divers hold on to the rope to find their way back to the surface.

What does Postgres do with this SQL string? How does he understand what we mean? How does he know what data we are looking for?

Postgres processes each SQL command that we send it in four steps.

First, PostgreSQL parses (“parses”) our SQL query and converts it into a series of stored in memory data structures of the C language - parse tree . Next, Postgres analyzes and rewrites our query, optimizing and simplifying it using a series of complex algorithms. After that, it generates a plan to search our data. Like a person with obsessive compulsive disorder who does not leave the house until his portfolio is carefully packed, Postgres will not launch our request until he has a plan. Finally, Postgres executes our query. In this presentation, I will briefly touch on the first three steps, and then focus on the last one: the execution of the request .

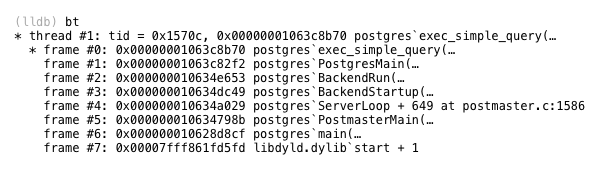

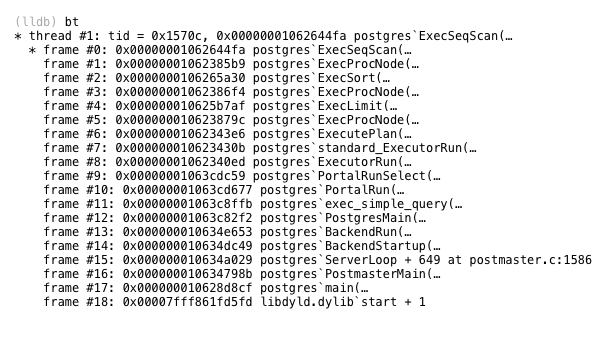

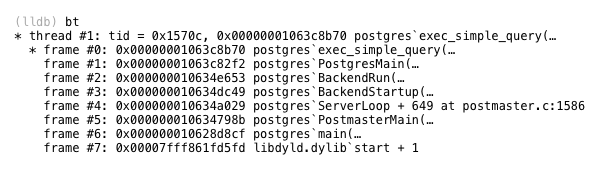

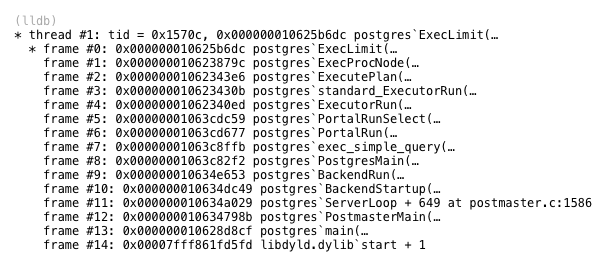

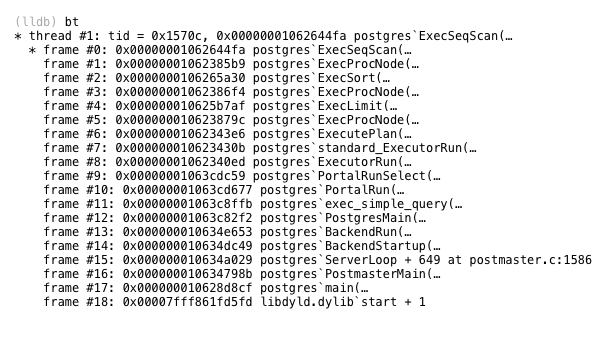

The C function inside the Postgrece that performs this four-step process is called exec_simple_query. You can find the link to it below along with the LLDB trace, which shows details of exactly when and how Postgres calls exec_simple_query.

How does Postgres understand the SQL string we sent to it? How does he find meaning in keywords and SQL expressions of our select query? Through a process called parsing ( parsing ). Postgres converts our SQL string to an internal data structure, which it understands - the parse tree.

It turns out Postgres uses the same parsing technology as Ruby, namely, a parser generator called Bison . Bison works during the postgrese build process and generates a parser code based on a set of grammar rules. The generated parser code is what works inside Postgres when we send SQL commands to it. Each grammar is called when the generated parser finds the corresponding pattern or syntax in the SQL string and inserts a new C memory structure into the parsing tree.

Today I will not waste time on a detailed explanation of how the parsing algorithm works. If you are interested in such topics, I advise you to read my book Ruby Under a Microscope . In the first chapter, I take a detailed look at an example of the LALR parsing algorithm used by Bison and Ruby. Postgres parses the SQL query in exactly the same way.

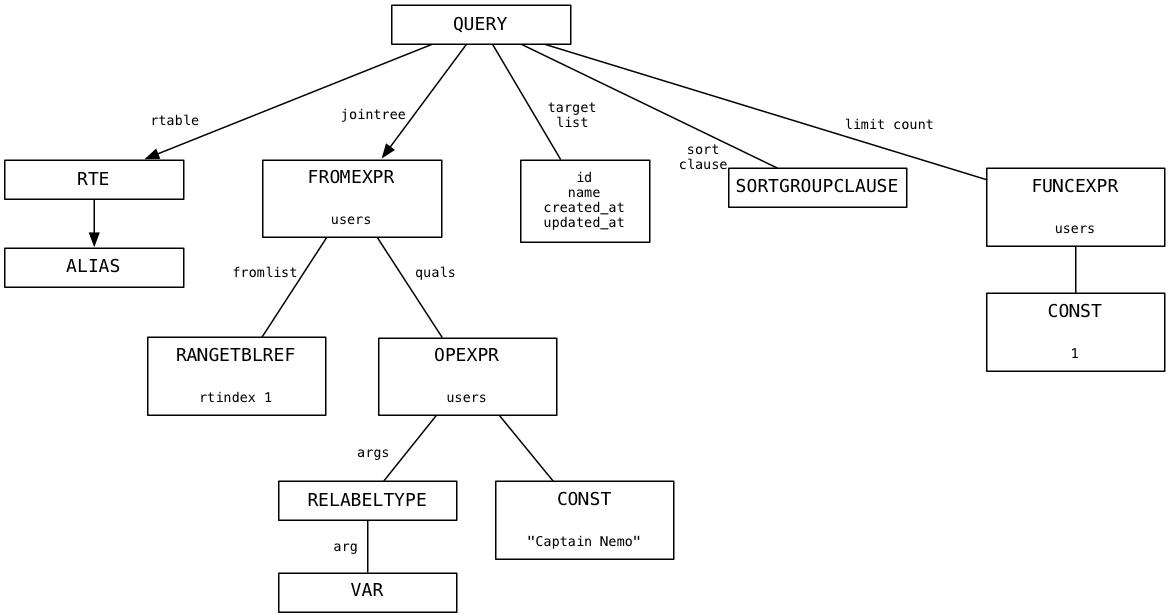

Using LLDB and activating some logging C code, I watched as the Postgres parser created the following parse tree for our search query for Captain Nemo:

At the top is the node representing the complete SQL query, and below are the child nodes or branches that represent different pieces of SQL query syntax: the target list (list of columns), the from condition (list of tables), the where condition, the sort order, and the number of records.

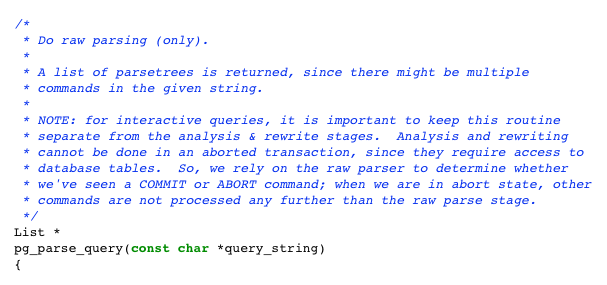

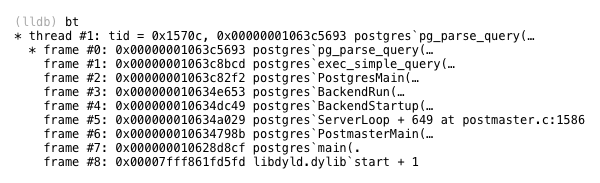

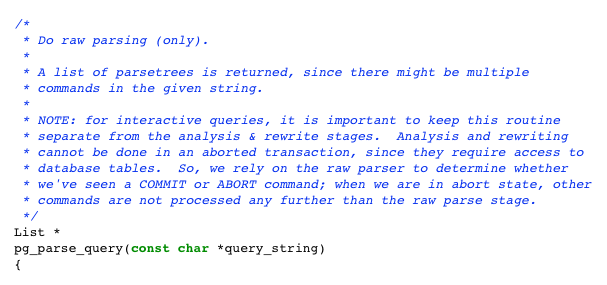

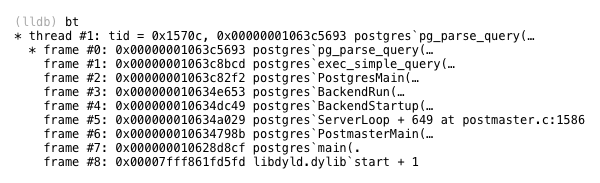

If you want to learn more about how Postgres parses SQL queries, follow the order of execution from exec_simple_query through another C function called pg_parse_query.

As you can see, the Postgres source code has many useful and detailed comments that not only explain what is happening, but also point to important design decisions.

The parse tree above probably seemed familiar to you - it is almost exactly the same abstract syntax tree (AST), which we observed earlier in ActiveRecord. Remember the first part of the presentation : ActiveRecord generated our select query about Captain Nemo when we executed this Ruby query:

We saw that ActiveRecord created an internal AST representation when we called methods such as where and first. Later (see the second post ) we watched how gem Arel converted AST into our sample select query using an algorithm based on the visitor pattern.

If you think it’s very ironic that the first thing Postgres does with your SQL query is to convert it from string back to AST. The process of parsing in Postgres cancels everything that ActiveRecord did before that, all the hard work that gem Arel did was in vain! The only reason to create the SQL string was to access Postgres over the network. But as soon as Postgres received the request, he converted it back to AST, which is a much more convenient and useful way to submit requests.

After learning this, you can ask: Is there a better way? Is there no other way to conceptually explain to Postgres what data we need without writing an SQL query? Without learning a complicated SQL language or additional overhead due to using ActiveRecord and Arel? It seems a monstrous waste of time to go that long way, creating an SQL string from AST just to convert it back to AST again. Maybe you should use a NoSQL solution instead?

Of course, the AST used by Postgres is very different from the AST used by ActiveRecord. In ActiveRecord, AST consists of Ruby objects, and in Postgres it consists of a series of in-memory C language structures. The idea is one, but the implementation is very different.

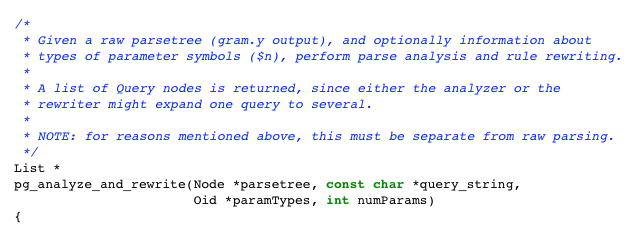

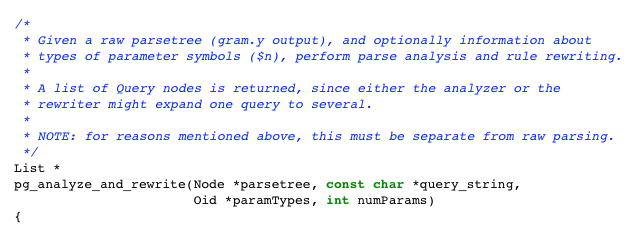

Once Postgres has generated the parsing tree, it converts it to another tree using a different set of nodes. It is known as the query tree . Returning to the exec_simple_query C function, you can see that another C function is called further - pg_analyze_and_rewrite.

Without going into details, the analysis and rewriting process uses a number of complex algorithms and heuristics in an attempt to optimize and simplify your SQL query. If you executed a complex select query with nested queries and a set of inner and outer join, then the scope for optimization is huge. It is possible that Postgres will reduce the number of nested queries or unions to produce a simpler query that will execute faster.

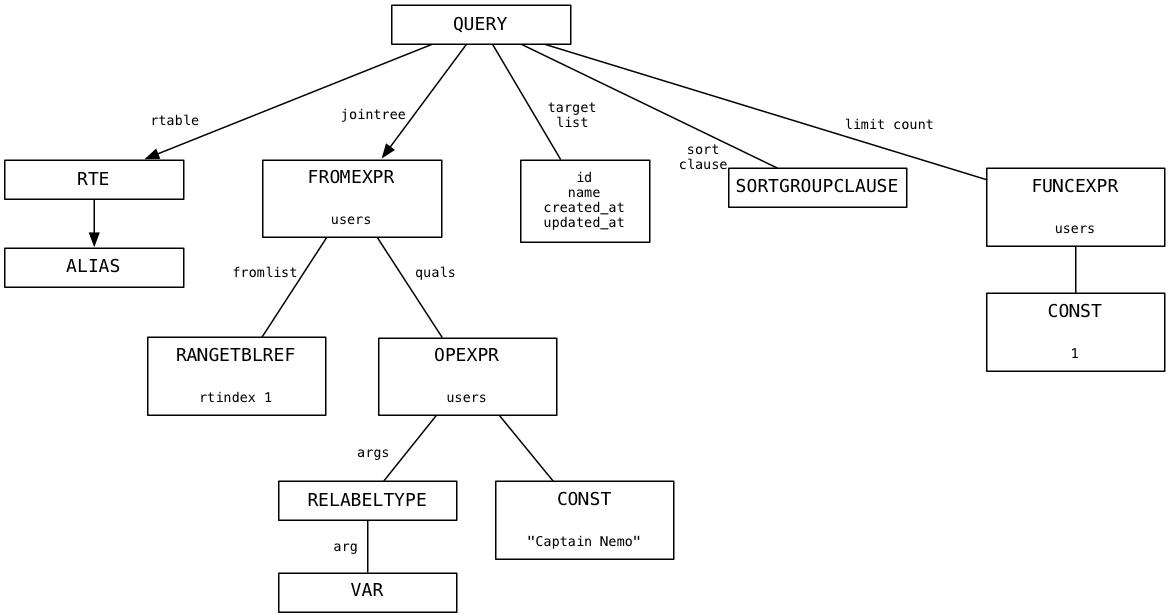

For our simple query select pg_analyze_and_rewrite created the following query tree:

I will not pretend that I understand in detail the algorithms behind pg_analyze_and_rewrite. I just noticed that for our example the query tree is very similar to the parse tree. This means that the select query was so simple that Postgres could not make it even easier.

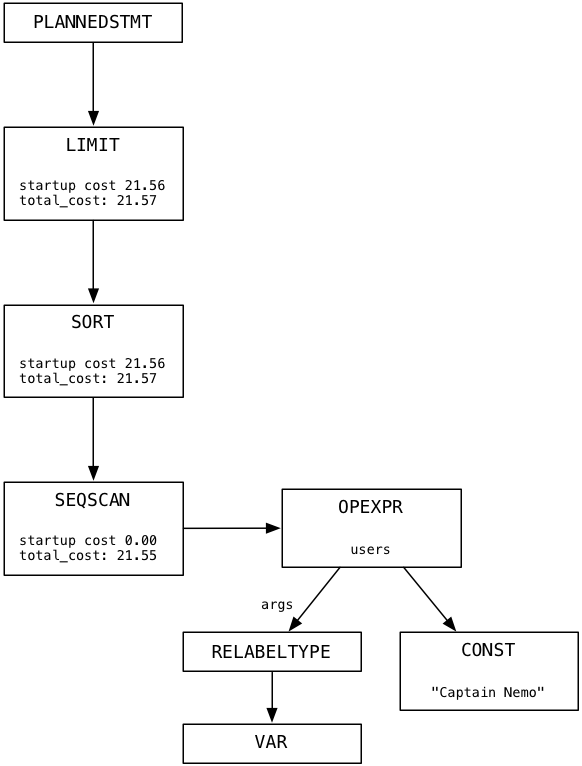

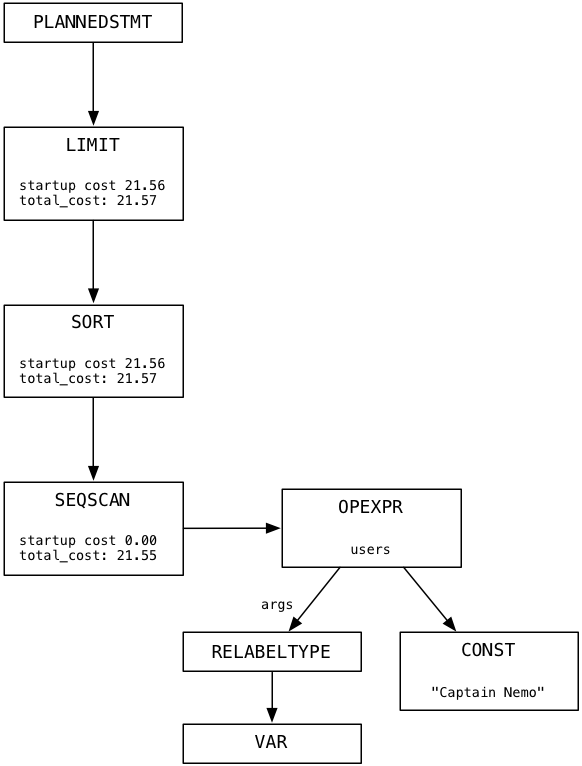

The last thing the Posgres does before starting to fulfill our request is creating a plan. This process involves the creation of a third node tree, which is a list of instructions for the Postgres. Here is the plan tree for our select query:

Imagine that each node in the plan tree is some kind of machine or worker. The plan tree resembles a data pipeline or a conveyor belt in a factory. In my simple example, the tree has only one branch. Each node of the plan tree takes data from the output of the underlying node, processes it, and returns the result as input data for the node above. In the next paragraph, we will follow Postgres while he fulfills the plan.

The C function that starts the process of scheduling a request is called pg_plan_queries.

Notice the startup_cost and total_cost values in each node. Postgres uses these values to estimate how long it will take to execute the plan. You do not need to use the C debugger to see the execution plan for your request. Simply add the SQL EXPLAIN command to the beginning of the query. Like this:

This is a great way to understand what Postgres is doing internally with one of your queries and why it may be slow or inefficient, despite the complex scheduling algorithms in pg_plan_queries.

At this point, Postgres has parsed your SQL string and converted it back to AST. Then he optimized it and rewrote it, probably simplifying it. After this, Postgres wrote a plan to follow to find and return the data you are looking for. Finally, the time has come for Postgres to fulfill your request! How will he do it? By following the plan, of course!

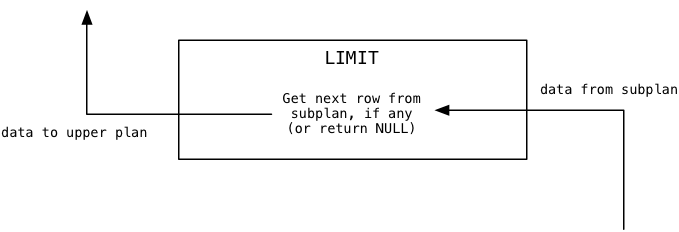

Let's start at the top of the plan tree and move down. If you skip the root node, the first worker that Postgres uses for our request for Captain Nemo is called Limit. The Limit node, as you might have guessed, executes the SQL LIMIT command, which limits the result to a certain number of records. Also, this plan node executes the OFFSET command, which initiates a window with the result set, starting from the specified line.

When the Limit node is first called, Postgres calculates what the values of limit and offset should be, since they can be tied to the result of some dynamic calculation. In our example, the offset is 0, and limit is 1.

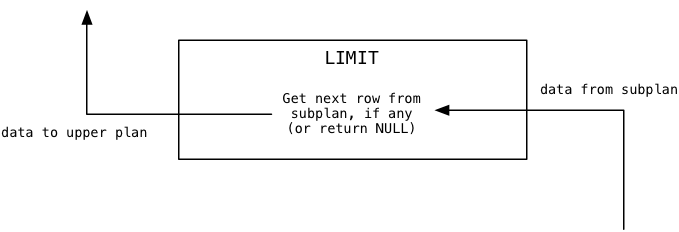

Next, the Limit plan node repeatedly calls the subplan — in our case, this Sort — until it reaches the offset value:

In our example, the offset is zero, so this loop will load the first data value and stop iterating. Then Postgres will return the last data value loaded from the subplan to the calling or superior plan. For us, this will be the very first value from the subplan.

Finally, when Postgres continues to call the Limit node, it will transmit the data values from the subplan one by one:

Since in our example limit is 1, Limit will immediately return NULL, indicating to the higher plan that there is no more data available.

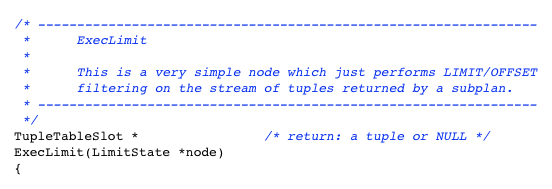

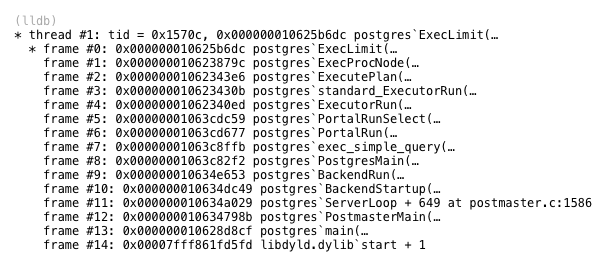

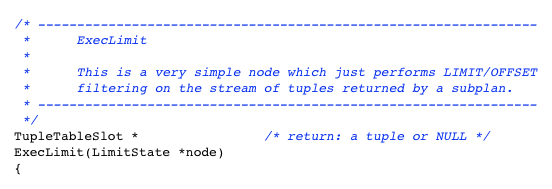

Postgres executes a Limit node using a code from a file called nodeLimit.c

You can see that Postgres source code uses words like tuple (a set of values, one from each column) and subplan . In this example, the subplan is the Sort node, which is located in the plan under the Limit.

Where do the data values that Limit filters come from? From the Sort node located under Limit in the plan tree. Sort loads data values from its subplan and returns them to the calling Limit node. This is what Sort does when the Limit node calls it the first time to get the first data value:

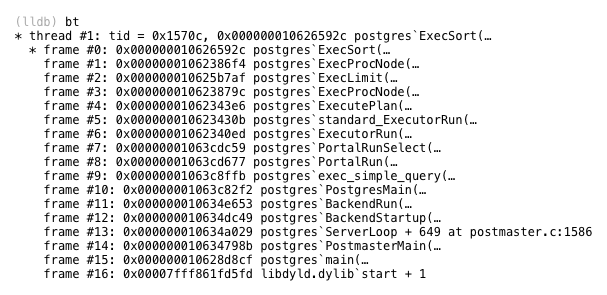

As you can see, Sort does not function at all like Limit. It immediately loads all available data from the subplan into the buffer before returning anything. It then sorts the buffer using the Quicksort algorithm and finally returns the first sorted value.

For the second and subsequent calls, Sort simply returns additional values from the sorted buffer, and it no longer needs to call the subplan again:

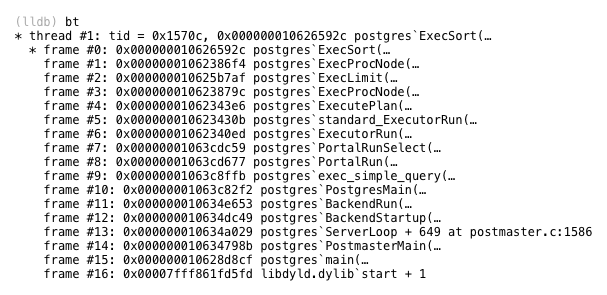

The Sort plan node is executed by a C function called ExecSort:

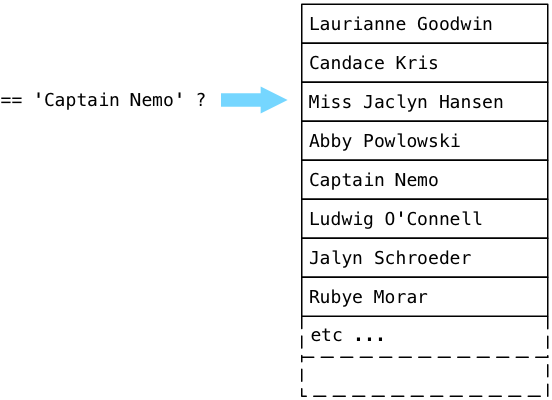

Where does ExecSort get values? From its sublan - the SeqScan node located at the very bottom of the plan tree. SeqScan stands for sequential scan , which means viewing all values in the table and returning values that correspond to the specified filter. To understand how scanning works with our filter, let's imagine a table of users filled with fictitious names and try to find Captain Nemo in it.

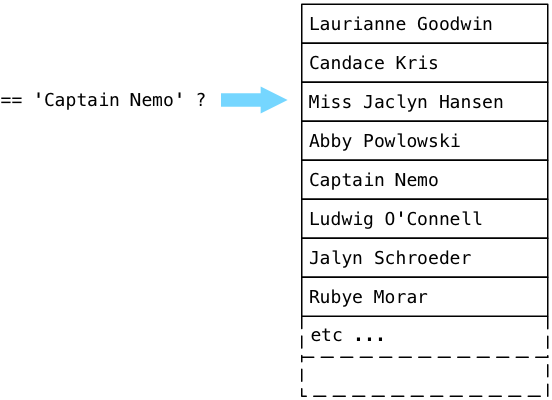

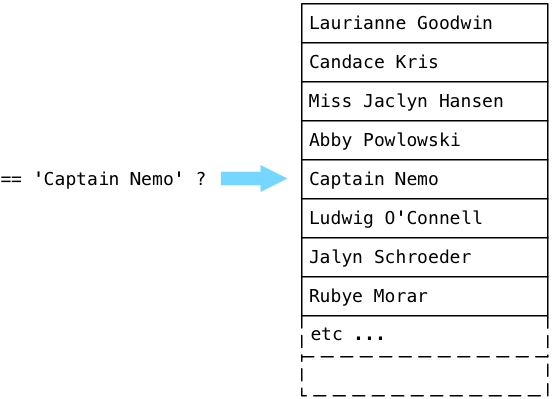

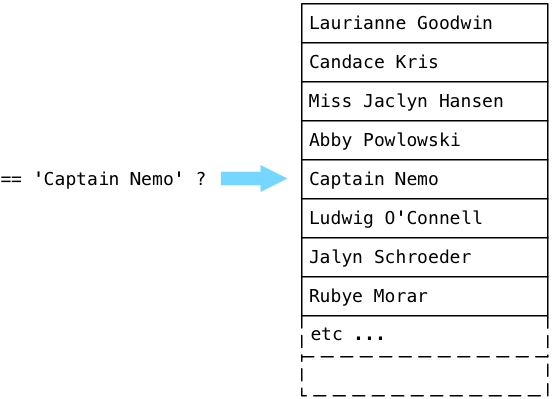

Postgres starts with the first entry in the table (which is called a relation in the Postgres source code) and starts boolean expressions from the plan tree. Simply put, Postgres asks the question: “Is this Captain Nemo?” Since Laurianne Goodwin is not Captain Nemo, Postgres moves on to the next entry.

No, Candace is not Captain Nemo either. Postgres continues:

... and finally finds Captain Nemo!

Postgres executes the SeqScan node using a C function called ExecSeqScan.

Here we are at the end! We followed the simple select query all the way through the insides of the Postgres and saw how it was parsed, rewritten, planned, and finally executed. After doing many thousands of lines of C code, Postgres found the data we were looking for! Now all he has to do is return the Captain Nemo line back to our Rails application, and ActiveRecord can create a Ruby object. Finally, we can return to the surface of our application.

But Postgres does not stop! Instead of simply returning the data, he continues to scan the user table, although we have already found Captain Nemo:

What is going on? Why does Postgres waste his time continuing to search, despite having already found the necessary data?

The answer lies in the plan tree, in the Sort node. Recall that to sort all users, ExecSort first loads all values into the buffer, repeatedly calling the subplan until the values run out. This means that ExecSeqScan will continue to scan to the end of the table until it gathers all the appropriate users. If our table contained thousands or even millions of records (imagine that we are working on Facebook or Twitter), ExecSeqScan would have to repeat the cycle for all user records and perform a comparison for each of them. Obviously, this is slow and inefficient, and we will slow down more and more as new user records are added to the table.

If we have only one entry about Captain Nemo, ExecSort "sorts" only this suitable entry, and ExecLimit will pass it through its offset / limit filter ... but only after ExecSeqScan passes through all the names.

How to solve this problem? What if the execution of our SQL queries on the user table takes more and more time? The answer is simple: we create an index.

In the next and last post of this series, we will learn how to create indexes in Postgres and avoid using ExecSeqScan. But most importantly, I'll show you what the postgres index looks like: how it works and why it speeds up requests like this.

Preparing for this presentation in the summer, I decided to study some parts of the PostgreSQL source code in C. I ran a very simple select query and watched what Postgres does with it using the LLDB debugger C. How did Postgres understand my query? How did he find the data I was looking for?

')

This post is an informal journal of my journey through the insides of PostgreSQL. I will describe the path I traveled and what I saw in the process. I use a series of simple conceptual diagrams to explain how Postgres fulfilled my request. In case you understand C, I will also leave you some landmarks and pointers that you can search if you suddenly decide to delve into the insides of the Postgres.

The source code for PostgreSQL delighted me. It turned out to be clean, well documented and easy to understand. Find out for yourself how Postgres works from the inside by joining me on a journey to the depths of the tool that you use every day.

In search of Captain Nemo

Here is an example request from the first half of my presentation . We will follow Postgres while he searches for Captain Nemo:

Finding one single name in a text column should be easy enough, right? We will hold tight to this select query, exploring the insides of the Postgres, as deep-sea divers hold on to the rope to find their way back to the surface.

Picture as a whole

What does Postgres do with this SQL string? How does he understand what we mean? How does he know what data we are looking for?

Postgres processes each SQL command that we send it in four steps.

First, PostgreSQL parses (“parses”) our SQL query and converts it into a series of stored in memory data structures of the C language - parse tree . Next, Postgres analyzes and rewrites our query, optimizing and simplifying it using a series of complex algorithms. After that, it generates a plan to search our data. Like a person with obsessive compulsive disorder who does not leave the house until his portfolio is carefully packed, Postgres will not launch our request until he has a plan. Finally, Postgres executes our query. In this presentation, I will briefly touch on the first three steps, and then focus on the last one: the execution of the request .

The C function inside the Postgrece that performs this four-step process is called exec_simple_query. You can find the link to it below along with the LLDB trace, which shows details of exactly when and how Postgres calls exec_simple_query.

exec_simple_query

view on postgresql.org

Parsing

How does Postgres understand the SQL string we sent to it? How does he find meaning in keywords and SQL expressions of our select query? Through a process called parsing ( parsing ). Postgres converts our SQL string to an internal data structure, which it understands - the parse tree.

It turns out Postgres uses the same parsing technology as Ruby, namely, a parser generator called Bison . Bison works during the postgrese build process and generates a parser code based on a set of grammar rules. The generated parser code is what works inside Postgres when we send SQL commands to it. Each grammar is called when the generated parser finds the corresponding pattern or syntax in the SQL string and inserts a new C memory structure into the parsing tree.

Today I will not waste time on a detailed explanation of how the parsing algorithm works. If you are interested in such topics, I advise you to read my book Ruby Under a Microscope . In the first chapter, I take a detailed look at an example of the LALR parsing algorithm used by Bison and Ruby. Postgres parses the SQL query in exactly the same way.

Using LLDB and activating some logging C code, I watched as the Postgres parser created the following parse tree for our search query for Captain Nemo:

At the top is the node representing the complete SQL query, and below are the child nodes or branches that represent different pieces of SQL query syntax: the target list (list of columns), the from condition (list of tables), the where condition, the sort order, and the number of records.

If you want to learn more about how Postgres parses SQL queries, follow the order of execution from exec_simple_query through another C function called pg_parse_query.

pg_parse_query

view on postgresql.org

As you can see, the Postgres source code has many useful and detailed comments that not only explain what is happening, but also point to important design decisions.

All the work of the dog down the drain

The parse tree above probably seemed familiar to you - it is almost exactly the same abstract syntax tree (AST), which we observed earlier in ActiveRecord. Remember the first part of the presentation : ActiveRecord generated our select query about Captain Nemo when we executed this Ruby query:

We saw that ActiveRecord created an internal AST representation when we called methods such as where and first. Later (see the second post ) we watched how gem Arel converted AST into our sample select query using an algorithm based on the visitor pattern.

If you think it’s very ironic that the first thing Postgres does with your SQL query is to convert it from string back to AST. The process of parsing in Postgres cancels everything that ActiveRecord did before that, all the hard work that gem Arel did was in vain! The only reason to create the SQL string was to access Postgres over the network. But as soon as Postgres received the request, he converted it back to AST, which is a much more convenient and useful way to submit requests.

After learning this, you can ask: Is there a better way? Is there no other way to conceptually explain to Postgres what data we need without writing an SQL query? Without learning a complicated SQL language or additional overhead due to using ActiveRecord and Arel? It seems a monstrous waste of time to go that long way, creating an SQL string from AST just to convert it back to AST again. Maybe you should use a NoSQL solution instead?

Of course, the AST used by Postgres is very different from the AST used by ActiveRecord. In ActiveRecord, AST consists of Ruby objects, and in Postgres it consists of a series of in-memory C language structures. The idea is one, but the implementation is very different.

Parse and rewrite

Once Postgres has generated the parsing tree, it converts it to another tree using a different set of nodes. It is known as the query tree . Returning to the exec_simple_query C function, you can see that another C function is called further - pg_analyze_and_rewrite.

pg_analyze_and_rewrite

view on postgresql.org

Without going into details, the analysis and rewriting process uses a number of complex algorithms and heuristics in an attempt to optimize and simplify your SQL query. If you executed a complex select query with nested queries and a set of inner and outer join, then the scope for optimization is huge. It is possible that Postgres will reduce the number of nested queries or unions to produce a simpler query that will execute faster.

For our simple query select pg_analyze_and_rewrite created the following query tree:

I will not pretend that I understand in detail the algorithms behind pg_analyze_and_rewrite. I just noticed that for our example the query tree is very similar to the parse tree. This means that the select query was so simple that Postgres could not make it even easier.

Plan

The last thing the Posgres does before starting to fulfill our request is creating a plan. This process involves the creation of a third node tree, which is a list of instructions for the Postgres. Here is the plan tree for our select query:

Imagine that each node in the plan tree is some kind of machine or worker. The plan tree resembles a data pipeline or a conveyor belt in a factory. In my simple example, the tree has only one branch. Each node of the plan tree takes data from the output of the underlying node, processes it, and returns the result as input data for the node above. In the next paragraph, we will follow Postgres while he fulfills the plan.

The C function that starts the process of scheduling a request is called pg_plan_queries.

pg_plan_queries

view on postgresql.org

Notice the startup_cost and total_cost values in each node. Postgres uses these values to estimate how long it will take to execute the plan. You do not need to use the C debugger to see the execution plan for your request. Simply add the SQL EXPLAIN command to the beginning of the query. Like this:

This is a great way to understand what Postgres is doing internally with one of your queries and why it may be slow or inefficient, despite the complex scheduling algorithms in pg_plan_queries.

Limit plan node execution

At this point, Postgres has parsed your SQL string and converted it back to AST. Then he optimized it and rewrote it, probably simplifying it. After this, Postgres wrote a plan to follow to find and return the data you are looking for. Finally, the time has come for Postgres to fulfill your request! How will he do it? By following the plan, of course!

Let's start at the top of the plan tree and move down. If you skip the root node, the first worker that Postgres uses for our request for Captain Nemo is called Limit. The Limit node, as you might have guessed, executes the SQL LIMIT command, which limits the result to a certain number of records. Also, this plan node executes the OFFSET command, which initiates a window with the result set, starting from the specified line.

When the Limit node is first called, Postgres calculates what the values of limit and offset should be, since they can be tied to the result of some dynamic calculation. In our example, the offset is 0, and limit is 1.

Next, the Limit plan node repeatedly calls the subplan — in our case, this Sort — until it reaches the offset value:

In our example, the offset is zero, so this loop will load the first data value and stop iterating. Then Postgres will return the last data value loaded from the subplan to the calling or superior plan. For us, this will be the very first value from the subplan.

Finally, when Postgres continues to call the Limit node, it will transmit the data values from the subplan one by one:

Since in our example limit is 1, Limit will immediately return NULL, indicating to the higher plan that there is no more data available.

Postgres executes a Limit node using a code from a file called nodeLimit.c

Execlimit

view on postgresql.org

You can see that Postgres source code uses words like tuple (a set of values, one from each column) and subplan . In this example, the subplan is the Sort node, which is located in the plan under the Limit.

Running the Sort plan node

Where do the data values that Limit filters come from? From the Sort node located under Limit in the plan tree. Sort loads data values from its subplan and returns them to the calling Limit node. This is what Sort does when the Limit node calls it the first time to get the first data value:

As you can see, Sort does not function at all like Limit. It immediately loads all available data from the subplan into the buffer before returning anything. It then sorts the buffer using the Quicksort algorithm and finally returns the first sorted value.

For the second and subsequent calls, Sort simply returns additional values from the sorted buffer, and it no longer needs to call the subplan again:

The Sort plan node is executed by a C function called ExecSort:

ExecSort

view on postgresql.org

Running the SeqScan plan node

Where does ExecSort get values? From its sublan - the SeqScan node located at the very bottom of the plan tree. SeqScan stands for sequential scan , which means viewing all values in the table and returning values that correspond to the specified filter. To understand how scanning works with our filter, let's imagine a table of users filled with fictitious names and try to find Captain Nemo in it.

Postgres starts with the first entry in the table (which is called a relation in the Postgres source code) and starts boolean expressions from the plan tree. Simply put, Postgres asks the question: “Is this Captain Nemo?” Since Laurianne Goodwin is not Captain Nemo, Postgres moves on to the next entry.

No, Candace is not Captain Nemo either. Postgres continues:

... and finally finds Captain Nemo!

Postgres executes the SeqScan node using a C function called ExecSeqScan.

ExecSeqScan

view on postgresql.org

What are we doing wrong?

Here we are at the end! We followed the simple select query all the way through the insides of the Postgres and saw how it was parsed, rewritten, planned, and finally executed. After doing many thousands of lines of C code, Postgres found the data we were looking for! Now all he has to do is return the Captain Nemo line back to our Rails application, and ActiveRecord can create a Ruby object. Finally, we can return to the surface of our application.

But Postgres does not stop! Instead of simply returning the data, he continues to scan the user table, although we have already found Captain Nemo:

What is going on? Why does Postgres waste his time continuing to search, despite having already found the necessary data?

The answer lies in the plan tree, in the Sort node. Recall that to sort all users, ExecSort first loads all values into the buffer, repeatedly calling the subplan until the values run out. This means that ExecSeqScan will continue to scan to the end of the table until it gathers all the appropriate users. If our table contained thousands or even millions of records (imagine that we are working on Facebook or Twitter), ExecSeqScan would have to repeat the cycle for all user records and perform a comparison for each of them. Obviously, this is slow and inefficient, and we will slow down more and more as new user records are added to the table.

If we have only one entry about Captain Nemo, ExecSort "sorts" only this suitable entry, and ExecLimit will pass it through its offset / limit filter ... but only after ExecSeqScan passes through all the names.

Next

How to solve this problem? What if the execution of our SQL queries on the user table takes more and more time? The answer is simple: we create an index.

In the next and last post of this series, we will learn how to create indexes in Postgres and avoid using ExecSeqScan. But most importantly, I'll show you what the postgres index looks like: how it works and why it speeds up requests like this.

Source: https://habr.com/ru/post/304258/

All Articles