History of programming languages: 100% "pure" C, without a single "plus"

The popularity of the C programming language is hard to overstate, especially given its past achievements. Probably every developer at the very least knows that it exists and, at most, has tried programming in it. C is the predecessor of languages such as C++, Objective-C, C#, and Java.

Microsoft chose a C-like syntax for C#, the native language of its .NET platform. Moreover, many operating systems are written in C.


Of course, C is not perfect: its creators, Ken Thompson and Dennis Ritchie, spent a long time refining it. Standardization of C is still ongoing. The language has existed for more than 45 years and is still actively used.

C is often mentioned in the same breath as a second language, in the form C/C++. However, this article deals specifically with “pure” C.

C traces its roots to ALGOL (short for ALGorithmic Language), which was created in 1958 by a committee of European and American computer scientists at a meeting at the Swiss Federal Institute of Technology in Zurich (ETH Zurich). ALGOL was a response to some of the shortcomings of FORTRAN and an attempt to correct them. In addition, the development of C is closely tied to the creation of the UNIX operating system, on which Ken Thompson and Dennis Ritchie also worked.

UNIX

Project MAC (Multiple Access Computer, Man and Computer) began as a pure research project at MIT in 1963.

As part of Project MAC, the CTSS (Compatible Time-Sharing System) operating system was developed. In the second half of the 1960s several other time-sharing systems were created, for example BBN, DTSS, JOSS, SDC, and the Multiplexed Information and Computing Service (Multics).

Multics was a joint effort by MIT, Bell Telephone Laboratories (BTL), and General Electric (GE) to create a time-sharing OS for the GE-645 computer. The last computer running Multics was shut down on October 31, 2000.

However, BTL withdrew from the project in early 1969.

Some of its employees (Ken Thompson, Dennis Ritchie, Stu Feldman, Doug McIlroy, Bob Morris, Joe Ossanna) wanted to continue working on their own. Thompson was working on the game Space Travel. It was first written for Multics and then rewritten in Fortran for GECOS on the GE-635. The game simulated the bodies of the solar system, and the player had to land a ship on a planet or moon.

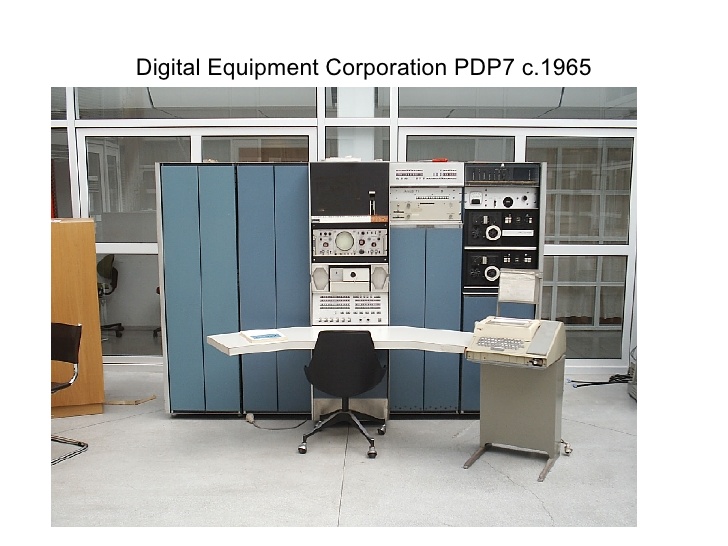

Neither the software nor the hardware of that computer was well suited to such a game. Thompson looked for an alternative and rewrote the game for a little-used PDP-7. It had 8K 18-bit words of memory and a vector display processor that produced graphics that were impressive for the time.

Image from slideshare.net

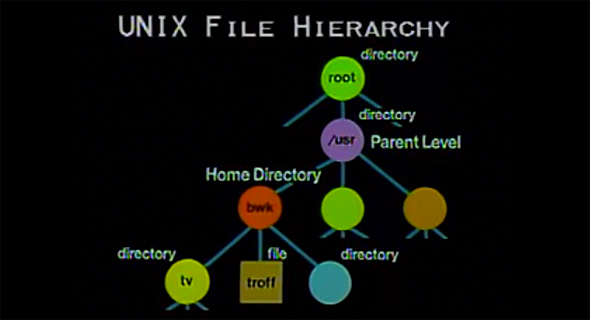

At first, Thompson and Ritchie did all their development with a cross-assembler on the GE machine and carried the code to the PDP-7 on punched paper tape. Thompson strongly disliked this, and he began writing an OS for the PDP-7, starting with the file system. This is how UNIX appeared.

Thompson wanted to create a comfortable computing environment, built to his own design, using whatever means were available. In retrospect it is clear that his ideas absorbed many of the innovations of Multics, including the notion of a process as the basis of control, the tree-structured file system, the command interpreter as a user-level program, a simplified representation of text files, and generalized access to devices.

PDP-7 UNIX also gave rise to the high-level language B, which was created under the influence of BCPL. Dennis Ritchie said that B is C without types. More precisely, B was BCPL squeezed into 8 KB of memory and thoroughly reworked by Thompson. It gradually grew into C.


By 1973, the C language had become mature enough that most of the UNIX kernel, originally written in PDP-11/20 assembly language, was rewritten in C. It was one of the very first operating system kernels written in a language other than assembly.

It turns out that C is a “by-product” obtained during the creation of the UNIX operating system.

Progenitors of C

Inspired by ALGOL 60, the Mathematical Laboratory of the University of Cambridge, together with the Computer Department of the University of London, created CPL (Combined Programming Language) in 1963.

CPL was considered too complex, and in response Martin Richards created BCPL in 1966, a language intended mainly for writing compilers. It is hardly used today, but in its time it played an important role thanks to its good portability.

BCPL was used in the early 1970s in several interesting projects, including the OS6 operating system and some of the early work at Xerox PARC.

BCPL was the ancestor of the B language, developed in 1969 at the already familiar AT&T Bell Telephone Laboratories by the no less familiar Ken Thompson and Dennis Ritchie.

Like other operating systems of the time, UNIX was written in assembly language. Debugging assembly programs is real torture. Thompson decided that further OS development needed a high-level language and came up with a small language, B, taking BCPL as its basis. B can be thought of as C without types.

BCPL, B, and C differ syntactically in many details, but broadly they are similar. Programs consist of a sequence of global declarations and function (procedure) declarations. In BCPL, procedures can be nested but may not refer to non-static objects defined in the containing procedures. B and C avoid this restriction by imposing a stricter one: no nested procedures at all. Each of the languages (except the earliest versions of B) supports separate compilation and provides a means of including text from named files.

In contrast to the pervasive syntax changes that occurred during the creation of B, the core semantics of BCPL (its type structure and expression evaluation rules) remained intact. Both languages are typeless, or rather have a single data type, the “word” or “cell”, a fixed-length bit pattern. Memory in these languages is an array of such cells, and the meaning of a cell's contents depends on the operation applied to it. For example, the + operator simply adds its operands using the machine's integer add instruction, and the other arithmetic operations are equally indifferent to the actual meaning of their operands.

Neither BCPL, B, nor C supports character data strongly in the language; each treats strings much like vectors of integers and supplements the general rules with a few conventions. In both BCPL and B, a string literal denotes the address of a static area initialized with the characters of the string, packed into cells.

How C was created

In 1970, Bell Labs acquired a PDP-11 computer for the project. Since B was already running on the PDP-11, Thompson rewrote part of UNIX in B.

But the B and BCPL model implied overhead when working with pointers: the language rules, by defining a pointer as an index into an array of words, forced pointers to be word indexes. Each pointer reference generated a run-time scale conversion from the pointer to the byte address expected by the hardware.

It therefore became clear that typing was needed in order to cope with characters and byte addressing, and to prepare for the coming hardware support for floating-point arithmetic.
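The cost of word-indexed pointers can be pictured with a small C sketch. It assumes a PDP-11-like machine with two bytes per word. The names BYTES_PER_WORD, word_pointer_to_byte_address, packed_char, and packed_char_demo are hypothetical and chosen only for illustration, as is the low-byte-first packing order; none of this is actual B compiler code.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumption: a PDP-11-like machine with 16-bit words and byte addresses. */
enum { BYTES_PER_WORD = 2 };

/* A B-style pointer is an index into an array of words, so every use
   has to be scaled to the byte address the hardware expects. */
size_t word_pointer_to_byte_address(size_t word_index)
{
    return word_index * BYTES_PER_WORD;
}

/* Characters packed into words have to be unpacked by hand.
   Assumption: the low byte of each word holds the earlier character. */
unsigned char packed_char(const uint16_t *words, size_t char_index)
{
    uint16_t word = words[char_index / BYTES_PER_WORD];
    if (char_index % BYTES_PER_WORD == 0)
        return (unsigned char)(word & 0xFF);
    return (unsigned char)(word >> 8);
}

/* Packs "hi" into a single word and reads the second character back. */
int packed_char_demo(void)
{
    const uint16_t text[] = { (uint16_t)('h' | ('i' << 8)) };
    return packed_char(text, 1);
}
```

With typed pointers, a char pointer can hold a byte address directly, so neither the scaling nor the manual unpacking is needed.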

In 1971, Ritchie began creating an extended version of B. At first he called it NB (New B), but when the language had become very different from B, the name was changed to C. Here is what Ritchie himself wrote about it:

I wanted the structure not only to characterize an abstract object, but also to describe a collection of bits that might be read from a directory. Where could the compiler hide the pointer to the name that the semantics demanded? Even if structures were thought of more abstractly, and the space for pointers could be hidden somehow, how could I handle the technical problem of properly initializing these pointers when allocating a complicated object, perhaps a structure containing arrays containing structures, to arbitrary depth?

The solution constituted the crucial jump in the evolutionary chain between typeless BCPL and typed C. It eliminated the materialization of the pointer in storage and instead caused the pointer to be created when the array name is mentioned in an expression. The rule, which survives in today's C, is that values of array type, when they appear in expressions, are converted into pointers to the first of the objects making up the array.
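The array-to-pointer rule can be seen in a few lines of modern C. This is a minimal sketch; the function names sum, array_name_is_pointer_to_first, and sum_demo are hypothetical and exist only for this illustration.

```c
#include <stddef.h>

/* The parameter is a pointer; callers may pass an array name directly. */
int sum(const int *values, size_t count)
{
    int total = 0;
    for (size_t i = 0; i < count; i++)
        total += values[i];
    return total;
}

/* Returns 1 when the array name, used in an expression, equals &a[0]. */
int array_name_is_pointer_to_first(void)
{
    int a[3] = {10, 20, 30};
    const int *p = a;          /* no stored pointer: a is converted here */
    return p == &a[0];
}

/* Passes the array by name; sizeof still sees the whole array object. */
int sum_demo(void)
{
    int a[3] = {10, 20, 30};
    return sum(a, sizeof a / sizeof a[0]);
}
```

Note that sizeof is one of the few contexts in which the conversion does not happen, which is why sum_demo can still compute the element count.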

The second innovation that most clearly distinguishes C from its predecessors is its fuller type structure and especially the way it is expressed in the syntax of declarations. NB offered the basic types int and char, together with arrays of them and pointers to them, but no further ways of composing types.

Generalization was required: given an object of any type, it should be possible to describe a new object that gathers several such objects into an array, yields it from a function, or is a pointer to it.


For any object of such a composite type, there was already a way to refer to the underlying object: index the array, call the function, use the indirection operator on the pointer. Similar reasoning led to a declaration syntax for names that mirrors the syntax of the expressions in which those names appear. Thus,

    int i, *pi, **ppi;

declares an integer, a pointer to an integer, and a pointer to a pointer to an integer. The syntax of these declarations reflects the fact that i, *pi, and **ppi all yield an int when used in an expression. Similarly,

    int f(), *f(), (*f)();

declares a function returning an integer, a function returning a pointer to an integer, and a pointer to a function returning an integer;

    int *api[10], (*pai)[10];

declares an array of pointers to integers and a pointer to an array of integers.

In all these cases the declaration of a variable resembles its use in an expression whose type is the one named at the head of the declaration.
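The "declaration mirrors use" idea can be checked directly. The sketch below declares each kind of object discussed above and then uses it with exactly the expression that appears in its declaration. The names forty_two, pf, row, and declaration_mirrors_use are hypothetical, and the function prototype uses (void) rather than the old empty parentheses.

```c
static int forty_two(void) { return 42; }

int declaration_mirrors_use(void)
{
    int i = 7, *pi = &i, **ppi = &pi;  /* i, *pi and **ppi are all int */
    int (*pf)(void) = forty_two;       /* (*pf)() is an int */
    int row[10] = {0};
    int *api[10] = {0};                /* *api[k] is an int */
    int (*pai)[10] = &row;             /* (*pai)[k] is an int */

    api[0] = &i;
    (*pai)[3] = 5;

    /* Every operand below has type int, exactly as declared. */
    return i + *pi + **ppi + (*pf)() + *api[0] + (*pai)[3];
}
```

The result is 7 + 7 + 7 + 42 + 7 + 5, that is, 75.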

70s: “Time of Troubles” and False Dialects

By 1973, the language was stable enough for UNIX to be rewritten in it. The move to C brought an important advantage: portability. By writing a C compiler for each of the machines at Bell Labs, the development team could port UNIX to them.

On the emergence of C, Peter Moylan writes in his paper “The Case against C”: “A language was needed that could bypass some of the rigid rules built into most high-level languages, the very rules that ensured their reliability. A language was needed that would make it possible to do things that previously could be done only in assembly language or machine code.”

C continued to evolve in the 1970s. Between 1973 and 1980 the language grew a bit: the type structure gained the unsigned, long, union, and enumeration types, and structures became nearly first-class objects (lacking only a notation for literals).

The first book on C, The C Programming Language, written by Brian Kernighan and Dennis Ritchie and published in 1978, became the bible of C programmers. In the absence of an official standard, this book (also known as K&R, or the “White Book”, as C fans like to call it) became the de facto standard.


In the 1970s there were few C programmers, and most of them were UNIX users. In the 1980s, however, C moved beyond the narrow confines of the UNIX world. C compilers became available on a variety of machines running different operating systems. In particular, C began to spread on the fast-growing IBM PC platform.

K&R introduced the following language features:

• structures (data type struct);

• long integer (data type long int);

• unsigned integer (unsigned int data type);

• the += operator and similar ones (the old =+ spelling confused the compiler's lexical analyzer, for example when telling apart the expressions i =+ 10 and i = +10).
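The last item in the list is easy to try with a current compiler. In modern C, =+ is no longer an operator, so i =+ 10 is read as an assignment of +10, while += adds in place. A minimal sketch follows; the function names are hypothetical, and some compilers emit a warning for the first function precisely because the spelling looks like a typo for +=.

```c
/* Today "=+" is tokenized as "=" followed by unary plus,
   so this assigns the value +10 instead of adding 10. */
int old_spelling_in_modern_c(void)
{
    int i = 5;
    i =+ 10;
    return i;
}

/* The K&R spelling of compound assignment: adds 10 to i. */
int compound_assignment(void)
{
    int i = 5;
    i += 10;
    return i;
}
```

The first function returns 10 and the second returns 15, which is the ambiguity the change of spelling removed.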

K&R C is often regarded as the most basic part of the language that a C compiler must support. For many years, even after ANSI C was released, it was considered the lowest common denominator that programmers should stick to for maximum portability, because not all compilers supported ANSI C yet, and well-written K&R C code was also valid ANSI C.

Along with growing popularity came problems. The programmers who wrote new compilers took the language described in K&R as their basis. Unfortunately, K&R described some features of the language vaguely, so compilers often interpreted them at their own discretion. In addition, the book did not clearly distinguish between features of the language and features of the UNIX operating system.

After K&R was published, several features were added to the language, supported by compilers from AT&T and some other vendors:

• functions that do not return a value (type void) and untyped pointers (type void *);

• functions that return unions and structures;

• a separate namespace for the field names of each structure;

• structure assignment;

• the const qualifier;

• a standard library implementing most of the functions introduced by various vendors;

• the enumeration type (enum);

• the single-precision floating-point type (float).
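Several of the features in this list fit into one short sketch. The types and functions below (axis, point, size, make_point, swap_points, coordinate, features_demo) are hypothetical names used only for illustration.

```c
enum axis { AXIS_X, AXIS_Y };            /* enumeration type */

struct point { int x, y; };
struct size  { int x, y; };              /* same field names, separate namespace */

/* A function that returns a structure by value. */
struct point make_point(int x, int y)
{
    struct point p = { x, y };
    return p;
}

/* void: no return value; structures are assigned as whole values. */
void swap_points(struct point *a, struct point *b)
{
    struct point tmp = *a;
    *a = *b;
    *b = tmp;
}

/* void * is an untyped pointer; const promises not to modify the target. */
int coordinate(const void *point, enum axis which)
{
    const struct point *p = point;
    return which == AXIS_X ? p->x : p->y;
}

int features_demo(void)
{
    struct point a = make_point(1, 2);
    struct point b = make_point(3, 4);
    swap_points(&a, &b);                 /* a is now {3, 4} */
    return coordinate(&a, AXIS_X) * 10 + coordinate(&a, AXIS_Y);
}
```

After the swap, features_demo combines the coordinates of a into 34.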

The situation was made worse by the fact that C kept evolving after K&R was published: new features were added and old ones were removed. Soon there was an obvious need for an exhaustive, precise, and up-to-date description of the language. Without such a standard, dialects of the language began to appear, which undermined portability, the language's greatest strength.

Standards

In the late 1970s, C began to displace BASIC, which at that time led the field of microcomputer programming. In the 1980s it was adapted to the IBM PC architecture, which led to a significant jump in its popularity.

The American National Standards Institute (ANSI) took on the development of a C standard. In 1983 it formed the X3J11 committee to do this work. The first version of the standard was released in 1989 and was named C89. In 1990, with small changes, the standard was adopted by the International Organization for Standardization (ISO). It then became known as ISO/IEC 9899:1990, but among programmers the name tied to the year of adoption, C90, stuck. The next version of the standard was ISO/IEC 9899:1999, also known as C99, which was adopted in 2000.

Among the innovations of C99, the change to the rule about where variables may be declared deserves attention. New variables could now be declared in the middle of the code, not only at the start of a compound block or in the global scope.

Some features of C99:

• inline functions;

• declaration of local variables at any point in a block (as in C++);

• new data types, such as long long int (to ease the transition from 32-bit to 64-bit numbers), the explicit Boolean type _Bool, and the complex type for representing complex numbers;

• variable-length arrays;

• restricted pointers (restrict);

• designated initializers for structures: struct { int x, y, z; } point = { .y = 10, .z = 20, .x = 30 };

• single-line comments beginning with //, borrowed from C++ (many C compilers already supported them as an extension);

• several new library functions, such as snprintf;

• several new header files, such as stdint.h.
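Most of the C99 features listed above can be combined in one small sketch. The function names (square, sum_of_squares, copy_ints, format_point, is_even) are hypothetical, and the example assumes a compiler that supports variable-length arrays, which later standards made optional.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

struct point { int x, y, z; };

// static inline avoids the need for a separate external definition
static inline int64_t square(int64_t v) { return v * v; }

long long sum_of_squares(int n)
{
    if (n <= 0)
        return 0;
    long long values[n];                 // variable-length array
    for (int i = 0; i < n; i++)          // declaration inside the for statement
        values[i] = square(i + 1);
    long long total = 0;                 // declaration in the middle of a block
    for (int i = 0; i < n; i++)
        total += values[i];
    return total;
}

// restrict: the caller promises that dst and src do not overlap
void copy_ints(int *restrict dst, const int *restrict src, size_t count)
{
    for (size_t i = 0; i < count; i++)
        dst[i] = src[i];
}

// Returns the number of characters the formatted point needs.
int format_point(char *buffer, size_t size)
{
    struct point p = { .y = 10, .z = 20, .x = 30 };   // designated initializers
    return snprintf(buffer, size, "%d,%d,%d", p.x, p.y, p.z);
}

// bool is the _Bool type, made available by <stdbool.h>
bool is_even(long long v) { return v % 2 == 0; }
```

Calling format_point with a null buffer and size 0 is permitted by snprintf and simply reports the required length, which is a common way to size a buffer before formatting.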

The C99 standard is now supported to a greater or lesser degree by all modern C compilers. Ideally, C code written in compliance with the standards, and without hardware- or system-dependent calls, is independent of both hardware and platform.

In 2007, work began on the next C standard. On December 8, 2011, the new standard for the C language (ISO/IEC 9899:2011) was published. Some features of the new standard are already supported by the GCC and Clang compilers.

The main features of C11:

• multithreading support;

• improved Unicode support;

• type-generic macros (type-generic expressions, which allow static overloading);

• anonymous structures and unions (which simplify access to nested structures);

• control over object alignment;

• static assertions;

• removal of the dangerous gets function (in favor of the safer gets_s);

• the quick_exit function;

• the _Noreturn function specifier;

• a new exclusive file open mode.
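A few of the C11 additions from this list are shown in the sketch below: a type-generic macro, a static assertion, anonymous structures and unions, and alignment control. The names type_name, shape, rectangle_area, and buffer_is_aligned are hypothetical, and the example assumes a compiler with C11 support.

```c
#include <assert.h>
#include <stdalign.h>
#include <stdint.h>

/* Type-generic macro: the branch is selected at compile time by type. */
#define type_name(x) _Generic((x), int: "int", double: "double", default: "other")

/* Static assertion: checked by the compiler, no run-time cost. */
static_assert(sizeof(int64_t) == 8, "int64_t must be 8 bytes");

struct shape {
    int kind;
    union {                              /* anonymous union */
        int radius;
        struct { int width, height; };   /* anonymous structure */
    };
};

int rectangle_area(void)
{
    struct shape s = { .kind = 1 };
    s.width = 3;          /* members are reached without naming the union */
    s.height = 4;
    return s.width * s.height;
}

/* Returns 1 when the buffer starts on a 16-byte boundary. */
int buffer_is_aligned(void)
{
    alignas(16) unsigned char buffer[64] = {0};   /* alignment control */
    return (uintptr_t)buffer % 16 == 0;
}
```

For example, type_name(7) selects the string for int and type_name(1.5) the string for double, with no run-time dispatch involved.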

Even though the 2011 standard exists, many compilers still do not fully support even C99.

What is C criticized for?

C has a fairly high barrier to entry, which makes it hard to use in teaching as a first programming language. When programming in C, you have to keep many details in mind. “Having been born in a hacker environment, it encourages a corresponding programming style, often unsafe, and promotes the writing of convoluted code,” writes Wikipedia.

A deeper and better-argued critique came from Peter Moylan, who devoted a full 12 pages to criticizing C. Here are a couple of excerpts:

Problems with modularity

Modular programming in C is possible, but only if the programmer sticks to a number of fairly rigid rules:

• Each module must have exactly one header file. It should contain only the exported function prototypes and declarations, and nothing else (except comments).

• An external caller should know about the module only what the comments in the header file state.

• For consistency checking, each module must import its own header file.

• To import anything from another module, a module must contain #include lines, along with comments showing what is actually being imported.

• Function prototypes may be used only in header files. (This rule is needed because C has no mechanism for checking that a function is implemented in the same module as its prototype, so a stray prototype can mask a “missing function” error.)

• Every global variable in a module, and every function other than those exported through the header file, must be declared static.

• The compiler warning “function call without prototype” should be enabled, and such a warning should always be treated as an error.

• The programmer must make sure that for each prototype in the header file there is a function of the same name implemented in the same module (and not declared static, in C terminology). Unfortunately, the nature of the C language means this cannot be checked automatically.

• Any use of the grep utility should be viewed with suspicion. If a prototype is not where it belongs, that is most likely a mistake.

• Ideally, programmers working in the same team should not have access to each other’s source files. They should share only object modules and header files.

The obvious difficulty is that few people will follow these rules, because the compiler does not enforce them. A modular programming language at least partially protects good programmers from the chaos that bad programmers create. The C language cannot do this.
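The rules above can be sketched with a tiny hypothetical module. The file names counter.h and counter.c and all identifiers are assumptions made for this illustration; both parts are shown in one listing so that the sketch compiles as a single translation unit, whereas in a real project the second part would start with an #include of its own header.

```c
/* ---- counter.h (hypothetical): the only part a client sees ---- */
#ifndef COUNTER_H
#define COUNTER_H

/* Returns the next value of the counter, starting from 1. */
int counter_next(void);

/* Resets the counter so that the next value is 1 again. */
void counter_reset(void);

#endif /* COUNTER_H */

/* ---- counter.c (hypothetical): would include "counter.h" itself ---- */

/* Module-private state: static keeps it invisible to other modules. */
static int current_value = 0;

/* Module-private helper: not in the header, therefore static. */
static int successor(int value)
{
    return value + 1;
}

int counter_next(void)
{
    current_value = successor(current_value);
    return current_value;
}

void counter_reset(void)
{
    current_value = 0;
}
```

Nothing in the language forces the static keywords or the matching prototypes here; the discipline is entirely up to the programmer, which is exactly the point of the criticism.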


Pointer issues

Despite all the advances in the theory and practice of data structures, pointers remain a real stumbling block for programmers. Work with pointers accounts for a significant share of the time spent debugging a program, and pointers cause most of the problems that complicate its development.

One can distinguish between important and unimportant pointers. An important pointer, in our sense, is one that is needed to create and maintain a data structure.

A pointer is considered unimportant if it is not needed to implement a data structure. In a typical C program, unimportant pointers far outnumber important ones. There are two reasons for this.

The first is that among C programmers it has become a tradition to use pointers even where other access methods are just as good, for example when stepping through the elements of an array.

The second reason is the C rule that all function parameters must be passed by value. When you need the equivalent of a Pascal VAR parameter or an Ada inout parameter, the only solution is to pass a pointer. This largely explains the poor readability of C programs.

The situation gets worse when an important pointer has to be passed as an input/output parameter. In that case the function must be given a pointer to a pointer, which causes difficulties even for the most experienced programmers.
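Both cases from this excerpt can be shown in a few lines of C. In the sketch below, increment takes an "unimportant" pointer that exists only to emulate a VAR parameter, while push_front takes a pointer to a pointer because the list head is an "important" pointer used as an input/output parameter. All names are hypothetical.

```c
#include <stddef.h>

struct node {
    int value;
    struct node *next;      /* an "important" pointer: it builds the list */
};

/* Emulates a Pascal VAR parameter: the pointer exists only because
   C passes arguments by value. */
void increment(int *n)
{
    *n += 1;
}

/* The list head is itself a pointer and must be updated by the callee,
   so the function receives a pointer to a pointer. */
void push_front(struct node **head, struct node *item)
{
    item->next = *head;
    *head = item;
}

int list_demo(void)
{
    struct node a = { 1, NULL };
    struct node b = { 2, NULL };
    struct node *head = NULL;

    push_front(&head, &a);
    push_front(&head, &b);              /* list is now b -> a */

    int n = head->value * 10 + head->next->value;
    increment(&n);
    return n;
}
```

Here list_demo first builds the list b, a, combines the two values into 21, and then increments the result to 22 through the pointer parameter.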

C is alive

According to data from June 2016, the TIOBE index, which measures the popularity of programming languages, ranked C in 2nd place.

Some may say that C is outdated and that its wide adoption is the result of luck and aggressive PR. Some may say that without UNIX, the C language would never have been created.

Nevertheless, C has become a standard of sorts. One way or another it has stood the test of time, unlike many other languages. C developers are still in demand, and the IT community remembers the language's creators fondly.

Source: https://habr.com/ru/post/304034/
