"Cut the Gordian knot" or overcoming the problems of encrypting information in Windows

A modern operating system is a complex, hierarchical system for processing and managing information, and current versions of Windows are no exception. To integrate a protection tool into the Windows environment, integration at the application level is often enough. When it comes to encrypting information in Windows, however, everything becomes much more complicated.

The main headache for a developer of encryption tools is ensuring "encryption transparency": the tool must fit harmoniously into the operating system's own processes while keeping users out of the encryption process and, even more so, out of its maintenance. Modern protection tools are increasingly required to exclude the user from the process of protecting information. This gives the user comfortable conditions in which no "incomprehensible" decisions about protecting information have to be made.

This article presents an approach to efficiently integrating on-disk encryption with the workings of the Windows file system.

The developers set out to create a disk encryption mechanism that is as transparent to users as possible. To meet this requirement, the mechanism has to interact effectively with the Windows components responsible for managing the file system. Its effectiveness should also be confirmed by high encryption performance and rational use of operating system resources.

From the outset, the task was to provide simultaneous access to encrypted and decrypted content, and also to encrypt file names. This is the source of the main difficulties, because such a requirement runs counter to the established Windows architecture. To understand the essence of the problem, we first need to look at some key aspects of this operating system.

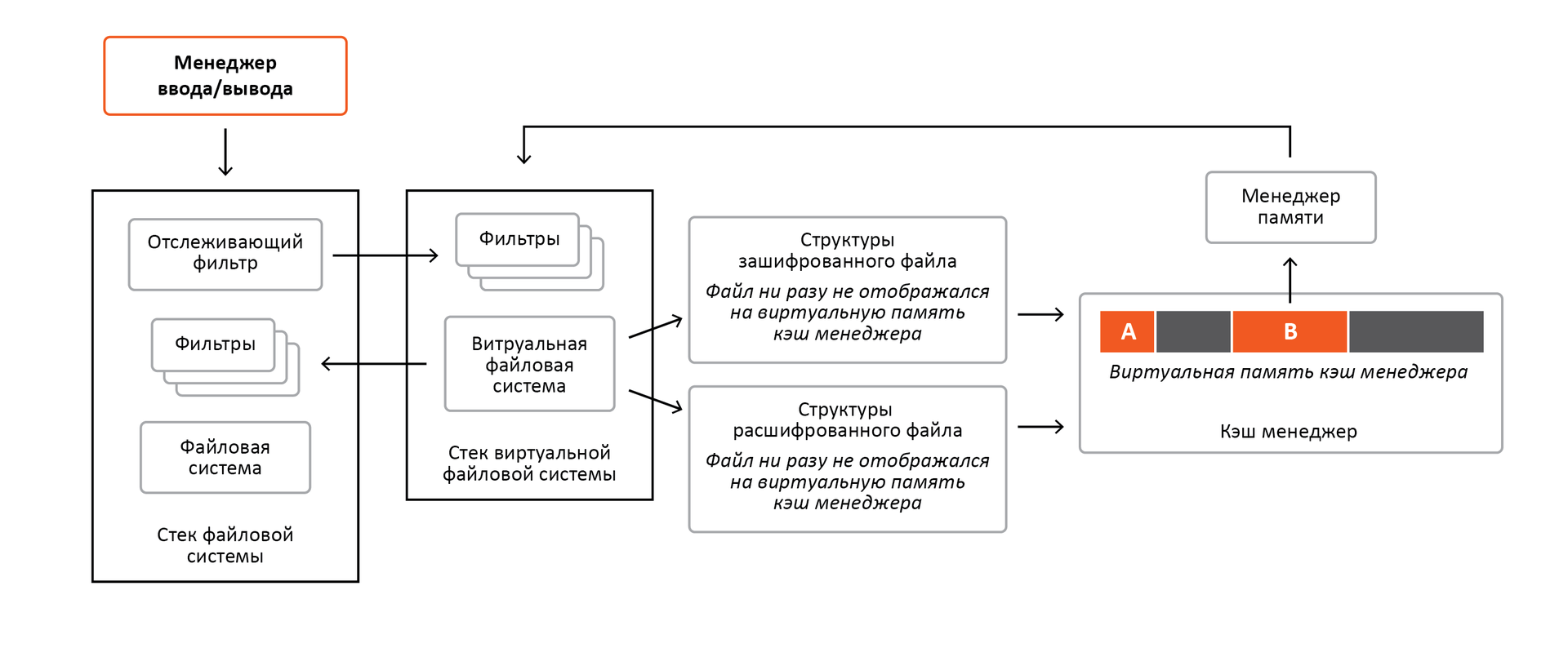

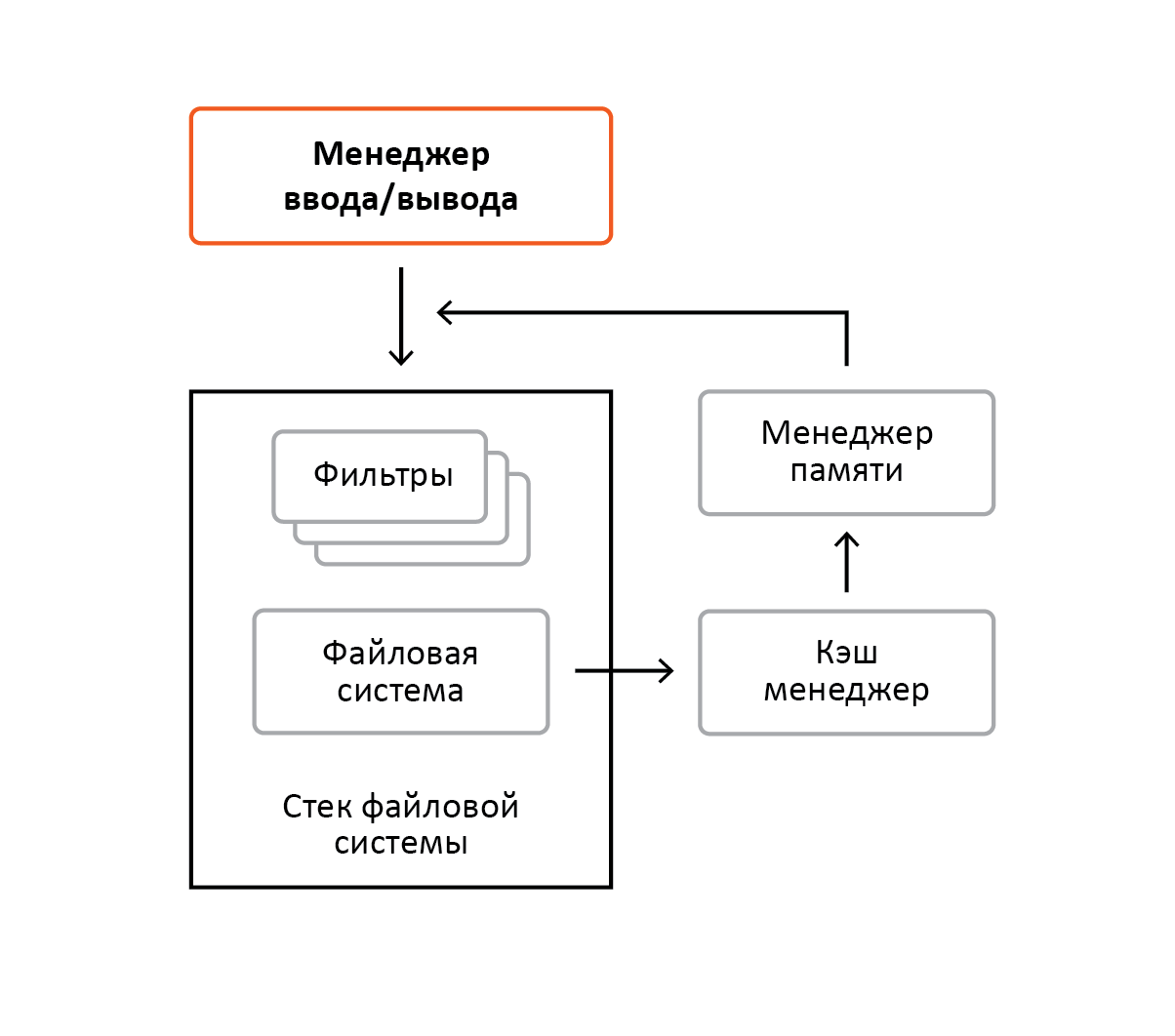

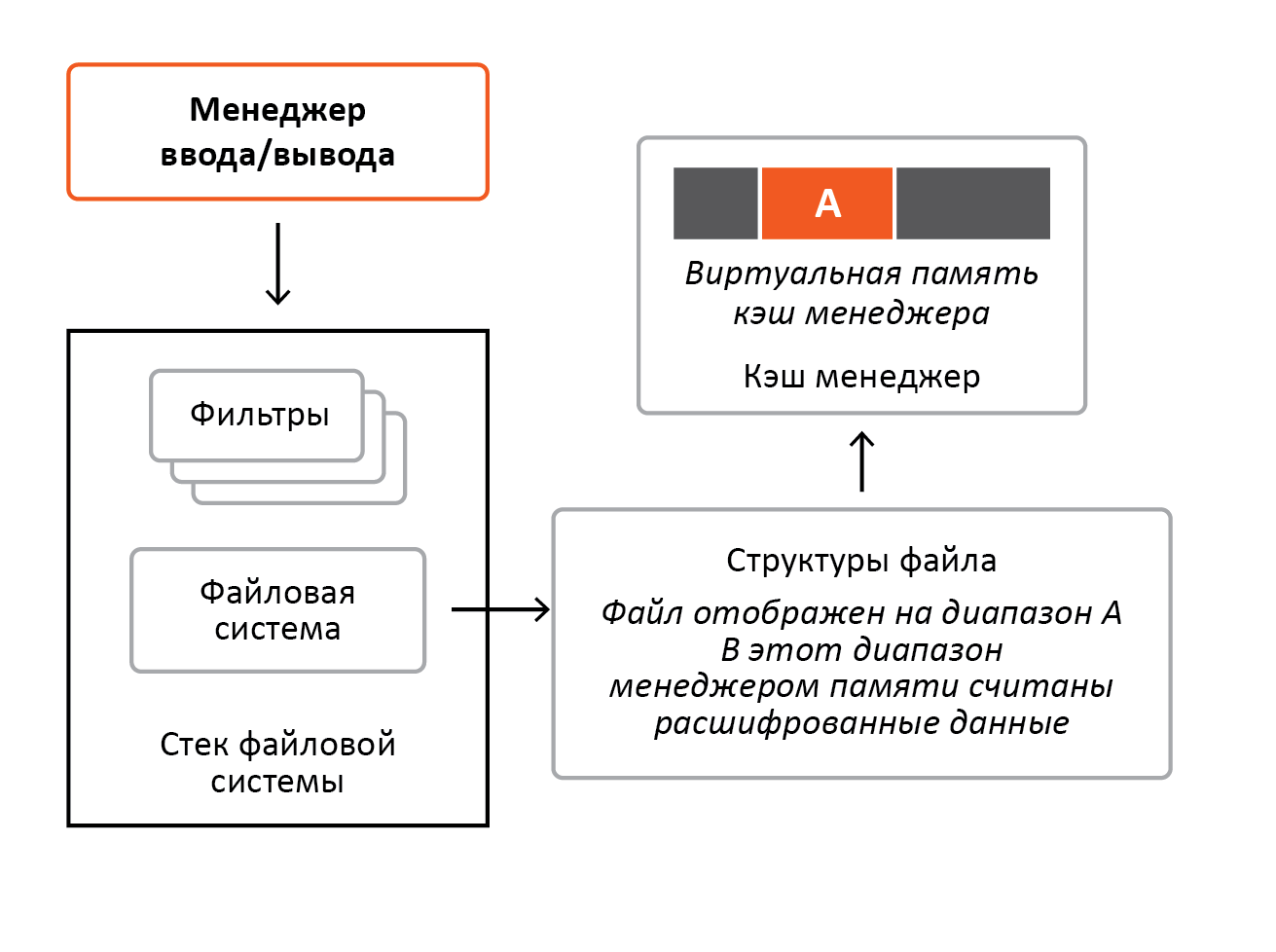

In Windows, all file systems rely on subsystems such as the memory manager and the cache manager, and the memory manager, in turn, relies on file systems. This looks like a vicious circle, but it will become clear below. Figure 1 shows these components together with the I/O manager, which accepts requests from subsystems (for example, Win32) and from other system drivers. The figure also uses the terms “filter” and “file system stack”, which are discussed in more detail below.

Figure 1

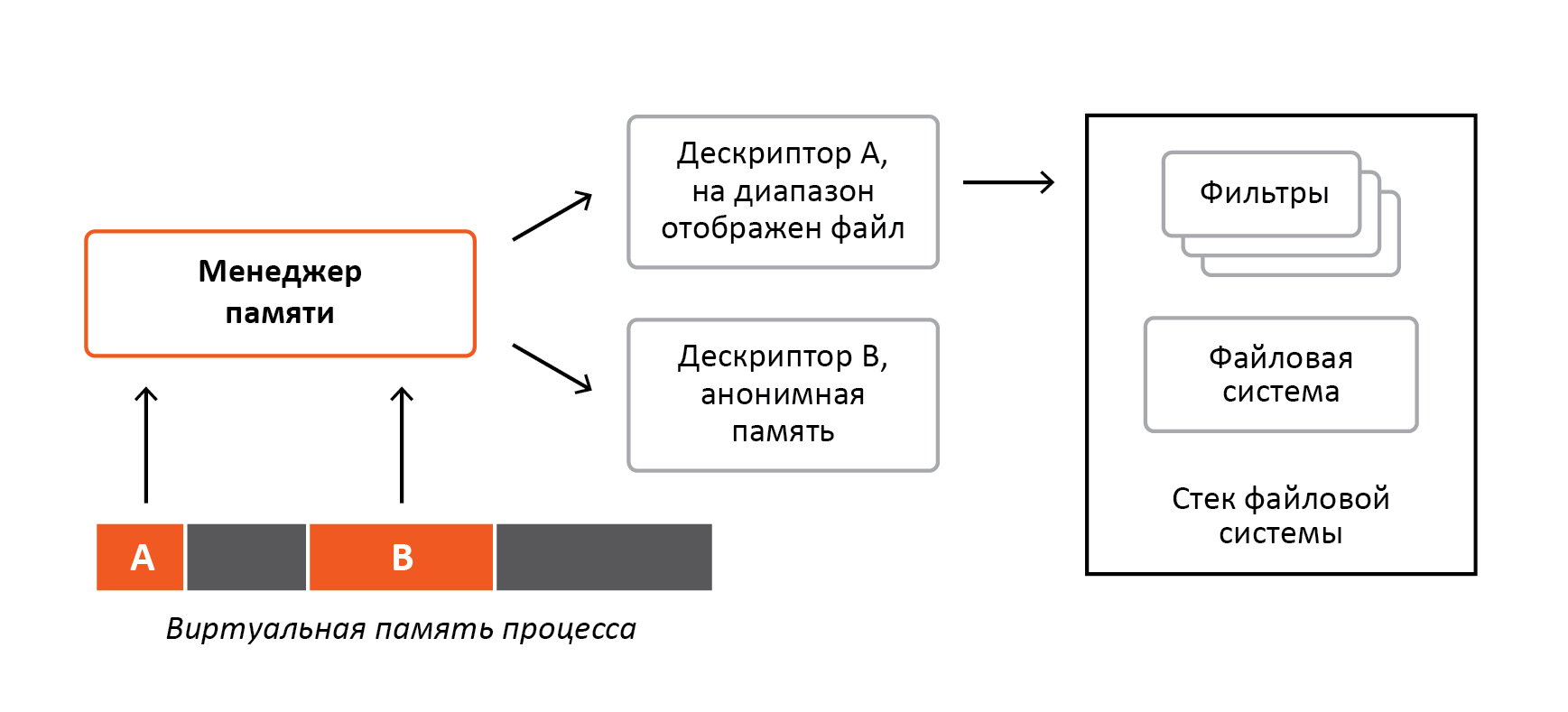

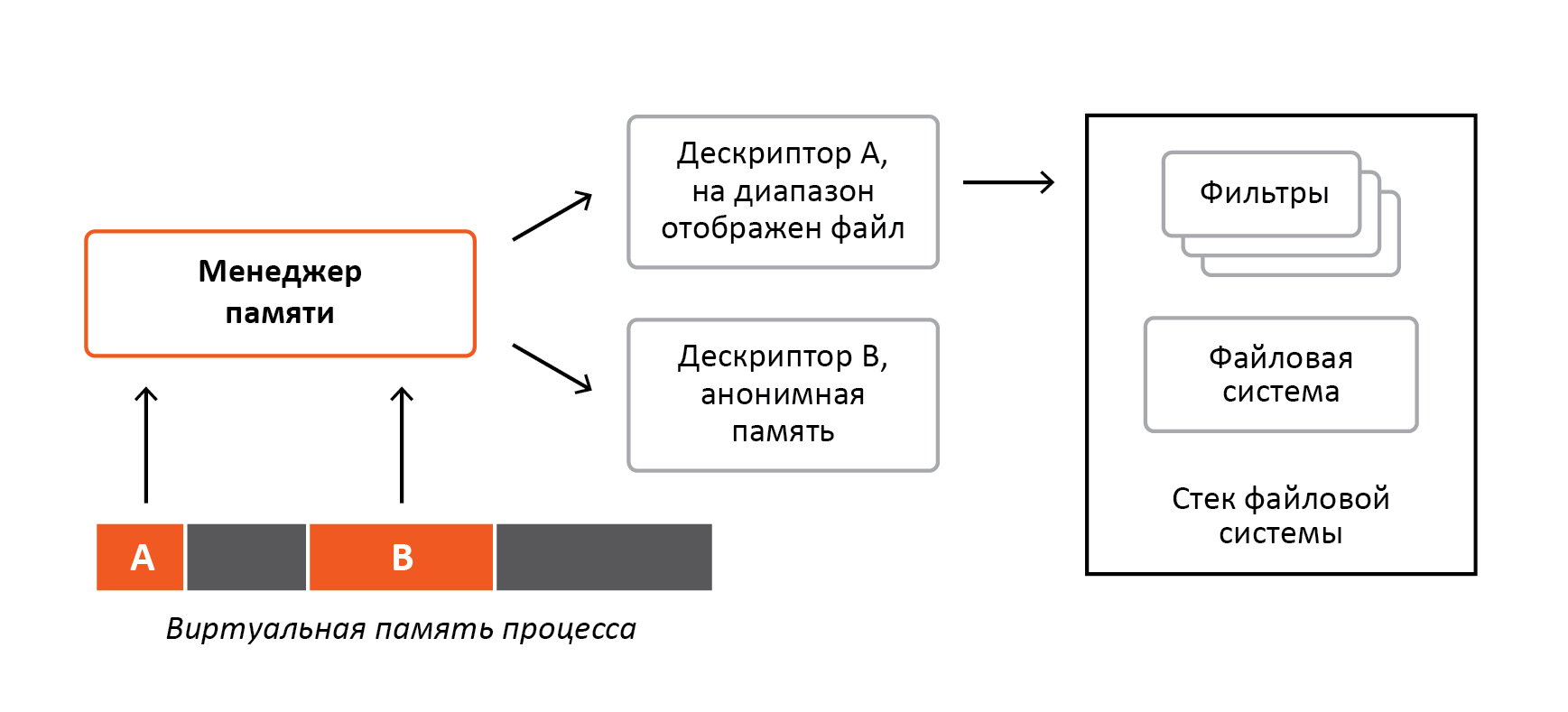

Let us examine the mechanism of mapping a file into memory. Its essence is that an access to virtual memory actually reads part of a file. This is accomplished by the processor's hardware mechanisms together with the memory manager. Every range of a process's virtual memory is described by a descriptor. If a range has no descriptor, there is nothing in that area of virtual memory, and accessing it inevitably crashes the process. If physical memory is committed to a range, accessing those virtual addresses is an ordinary access to physical memory; such memory is also called anonymous. If a file is mapped to the range, accessing those addresses first reads part of the file from disk into physical memory, after which the access proceeds in the usual way. In other words, the two cases are very similar; the only difference is that in the latter, part of the file is first read into the corresponding physical memory. The descriptor records which of these kinds of access applies. Descriptors are memory manager structures, which it maintains in order to do its job. As you might guess, to read part of a file into a page of physical memory, the memory manager has to send a request to the file system. Figure 2 shows an example of a process's virtual memory with two ranges, A and B, neither of which has been accessed yet.


Figure 2

Range A maps the process's own executable file, and range B maps physical memory. When the process accesses range A for the first time, the memory manager gets control and first determines the type of the range. Since a file is mapped to range A, the memory manager reads the corresponding part of the file into physical memory and then maps that memory to range A of the process, so further accesses to the content that has been read no longer involve the memory manager. For range B the memory manager performs the same sequence, except that instead of reading data from a file it simply maps free physical pages to range B, after which accesses to this range also proceed without the memory manager. Figure 3 shows the process's virtual memory after the first access to ranges A and B.

Figure 3

As the figure shows, both ranges are now accessed in physical memory without the memory manager taking part, because on the first access it mapped physical memory to ranges A and B, having first read the corresponding part of the file into the physical memory of range A.
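The two kinds of range can be observed from user mode. The following is a minimal sketch, assuming Python's standard `mmap` module as a stand-in for the memory manager's mapping service; the file name and its contents are hypothetical and serve only as an illustration.

```python
import mmap
import os
import tempfile

# Create a small file to map (hypothetical contents, for illustration only).
path = os.path.join(tempfile.mkdtemp(), "mapped.bin")
with open(path, "wb") as f:
    f.write(b"hello from disk" + b"\0" * 4081)  # 4096 bytes in total

with open(path, "r+b") as f:
    # Range A: backed by the file. Creating the mapping does not have to
    # read anything; the operating system may defer the read until the
    # first access to the range.
    range_a = mmap.mmap(f.fileno(), 0)

    # Range B: anonymous memory, backed only by physical pages.
    range_b = mmap.mmap(-1, 4096)

    first_bytes = bytes(range_a[:15])  # first access: file data appears
    zero_bytes = bytes(range_b[:4])    # first access: zero-filled pages

    range_a.close()
    range_b.close()
```

In both cases the program only reads memory; whether a page has to be filled from a file or simply handed out as a free page is decided by the operating system behind the scenes.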

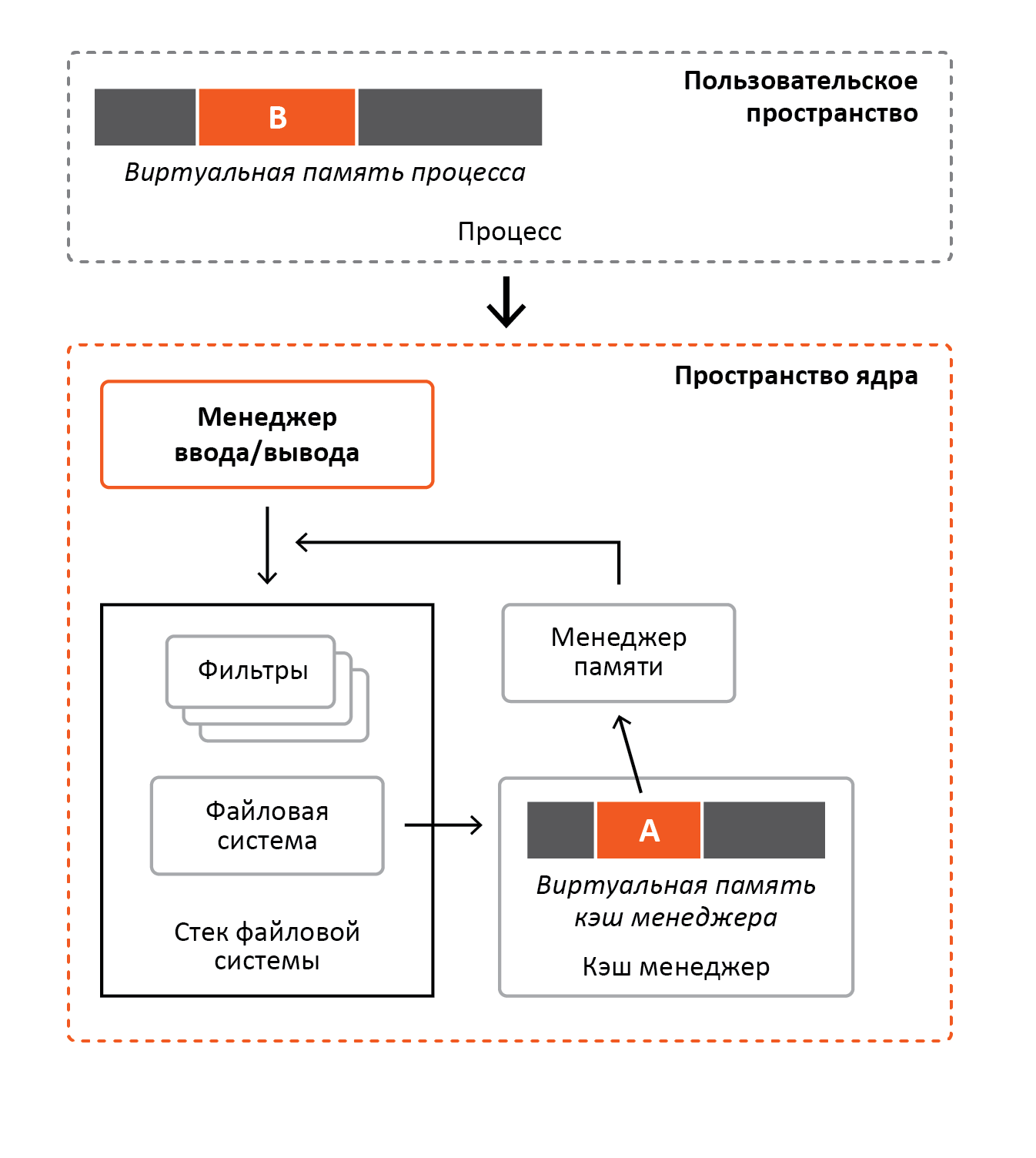

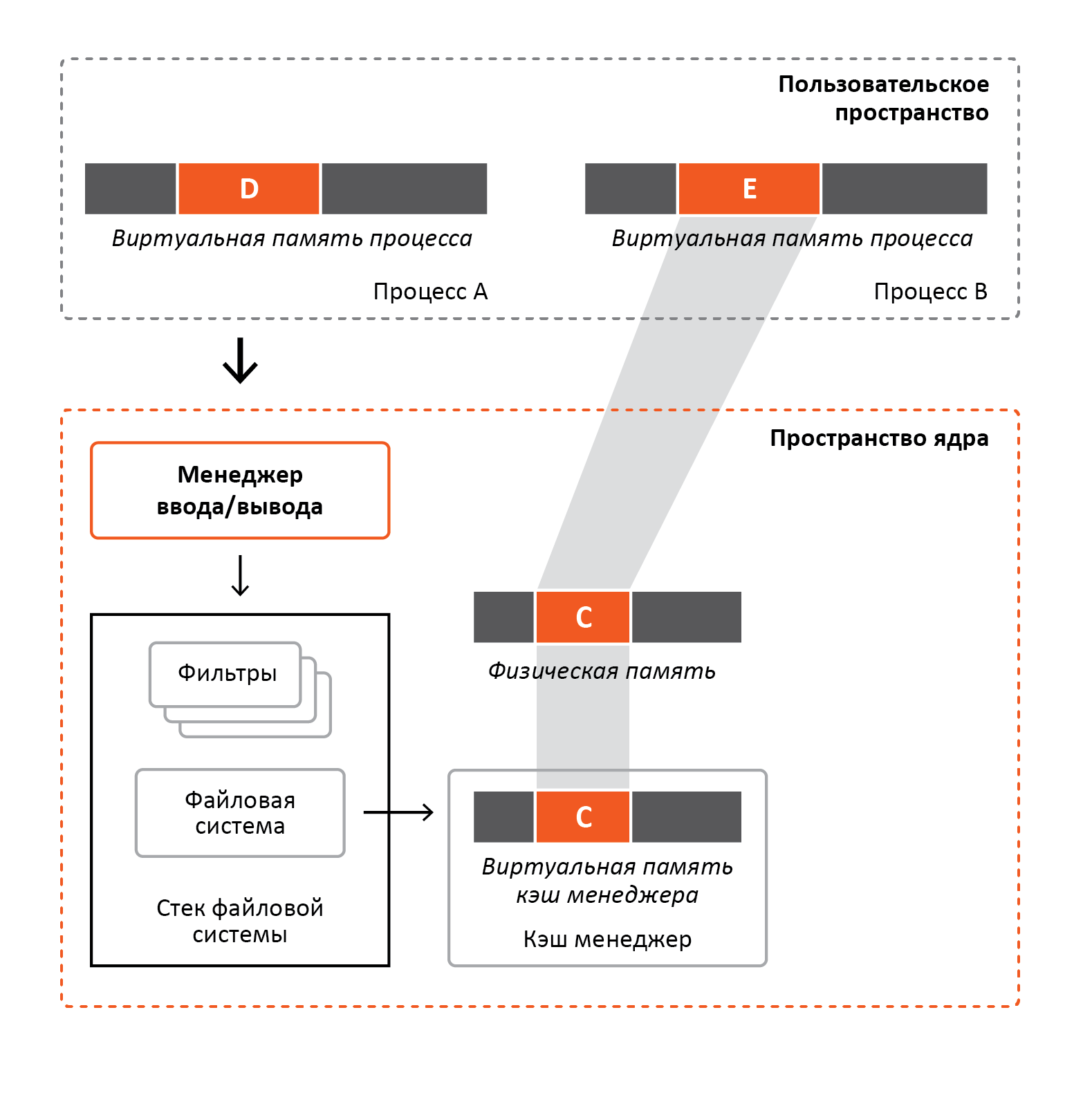

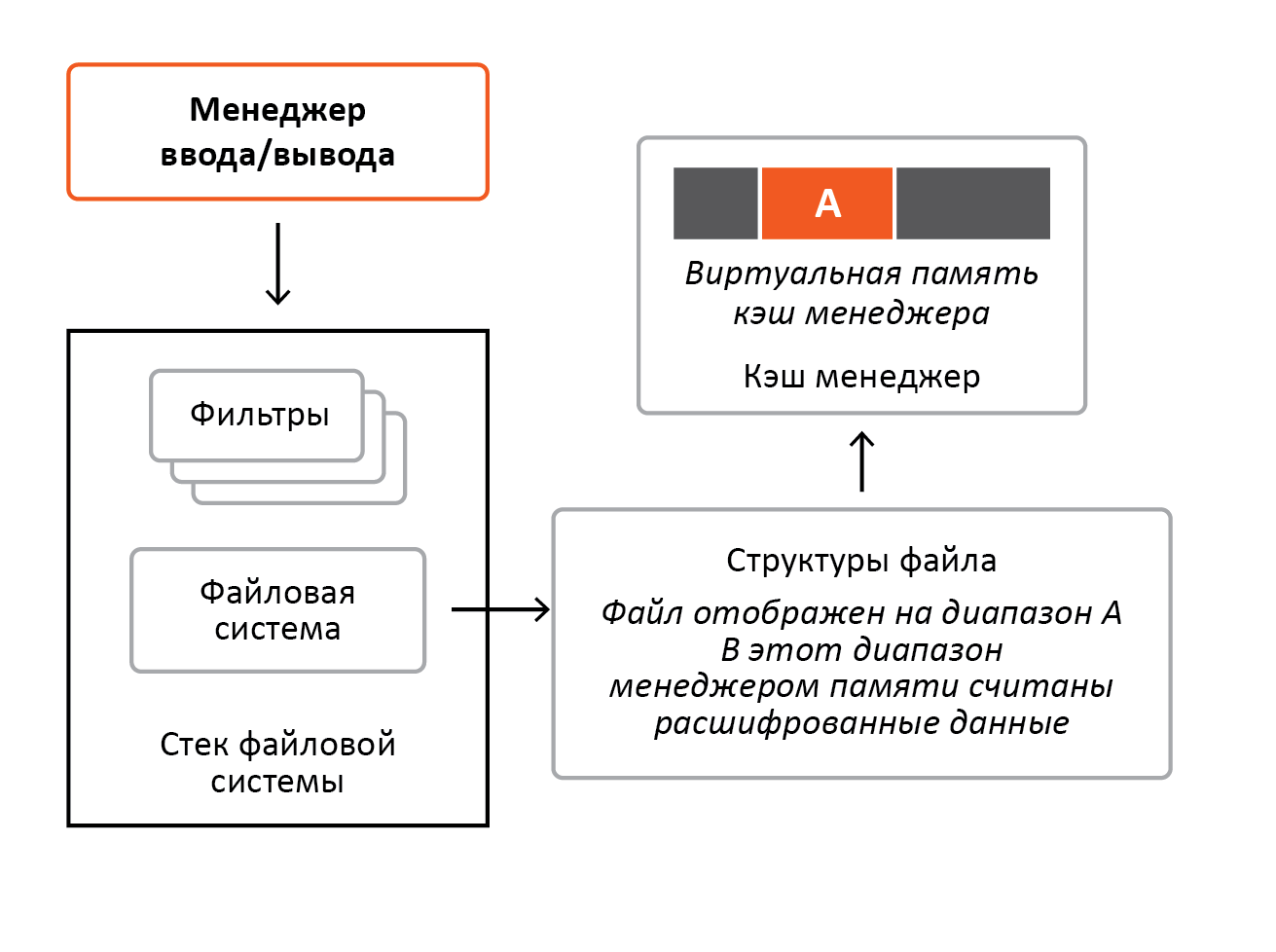

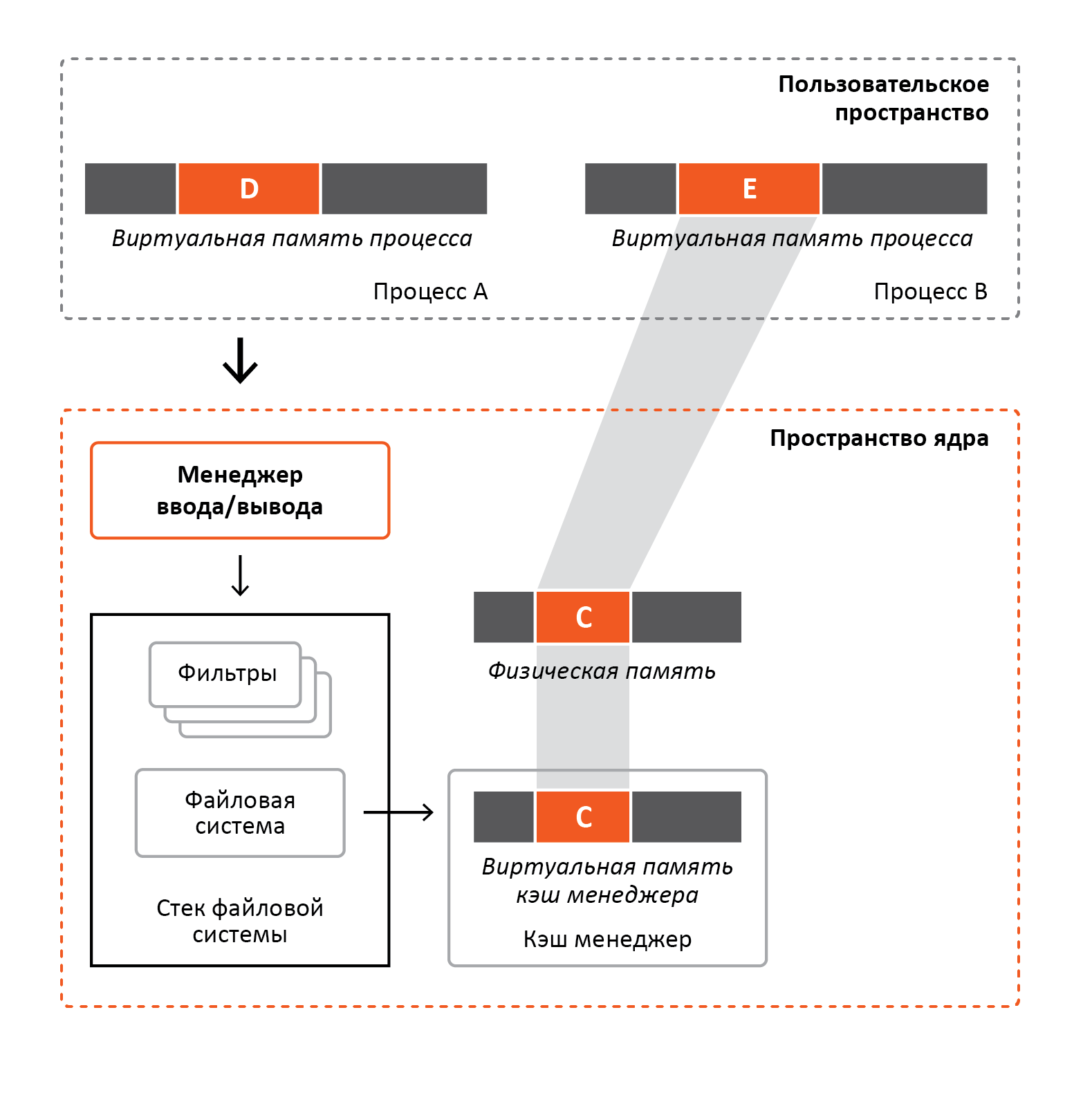

The cache manager is the central caching mechanism for all open files on all disks in the system. It not only speeds up access to files but also saves physical memory. Unlike the memory manager, the cache manager does not work on its own. It is driven entirely by the file systems, which supply it with all the necessary information about files (for example, their size). Whenever a read/write request arrives at a file system, the file system does not read the file from disk; instead, it calls the cache manager's services. The cache manager, in turn, uses the memory manager's services to map the file to virtual memory and then copies the data from that memory to the requester's buffer. Accessing this memory causes the memory manager to send a request to the file system, and this is a special request stating that the file must be read directly from disk. If the file being accessed has already been mapped by the cache manager, it is not mapped again; the cache manager uses the virtual memory where the file was mapped earlier. The mapping is tracked through structures that file systems pass to the cache manager when calling its services. These structures are discussed in a little more detail below. Figure 4 shows an example of a process reading a file.

Figure 4

As the figure above shows, the process reads the file into buffer B. To perform the read, the process calls the I/O manager, which builds a request and sends it to the file system. On receiving the request, the file system does not read the file from disk but calls the cache manager. The cache manager then checks whether the file is mapped to its virtual memory and, if it is not, calls the memory manager to map the file or part of it. In this example the file has already been mapped but has never been accessed. Next, the cache manager copies the file, mapped to virtual memory range A, into the process's buffer B. Since range A is being accessed for the first time, the memory manager gets control and evaluates the range; because it is a memory-mapped file, the memory manager reads part of the file into physical memory and maps it to virtual memory range A. After that, as described earlier, range A is accessed without the memory manager.
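The read path just described can be sketched as a toy model. This is not Windows code: the class and method names (`ToyMemoryManager`, `copy_read`, `read_from_disk` and so on) are hypothetical, and the "disk" is a plain dictionary. The sketch only shows who calls whom and that the disk is touched once.

```python
class ToyMemoryManager:
    """Maps files lazily and pages data in on the first access."""

    def __init__(self, file_system):
        self.file_system = file_system
        self.paging_reads = 0

    def map_file(self, name):
        # Mapping only creates a descriptor; no data is read yet.
        return {"name": name, "pages": None}

    def access(self, mapping):
        if mapping["pages"] is None:  # first access to the range
            self.paging_reads += 1
            # Special request: read the file directly from disk.
            mapping["pages"] = self.file_system.read_from_disk(mapping["name"])
        return mapping["pages"]


class ToyCacheManager:
    def __init__(self, memory_manager):
        self.memory_manager = memory_manager
        self.mappings = {}  # file name -> mapping descriptor

    def copy_read(self, name):
        if name not in self.mappings:  # map the file only once
            self.mappings[name] = self.memory_manager.map_file(name)
        # Copy from the mapped range into the requester's buffer.
        return bytes(self.memory_manager.access(self.mappings[name]))


class ToyFileSystem:
    def __init__(self, disk):
        self.disk = disk
        self.memory_manager = ToyMemoryManager(self)
        self.cache_manager = ToyCacheManager(self.memory_manager)

    def read(self, name):
        # Ordinary read request: served through the cache manager.
        return self.cache_manager.copy_read(name)

    def read_from_disk(self, name):
        # Paging read issued by the memory manager.
        return self.disk[name]


fs = ToyFileSystem({"report.txt": b"file data"})
first = fs.read("report.txt")
second = fs.read("report.txt")
```

Both reads return the same data, but only the first one reaches the disk; the second is served from the memory that was already mapped and filled.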

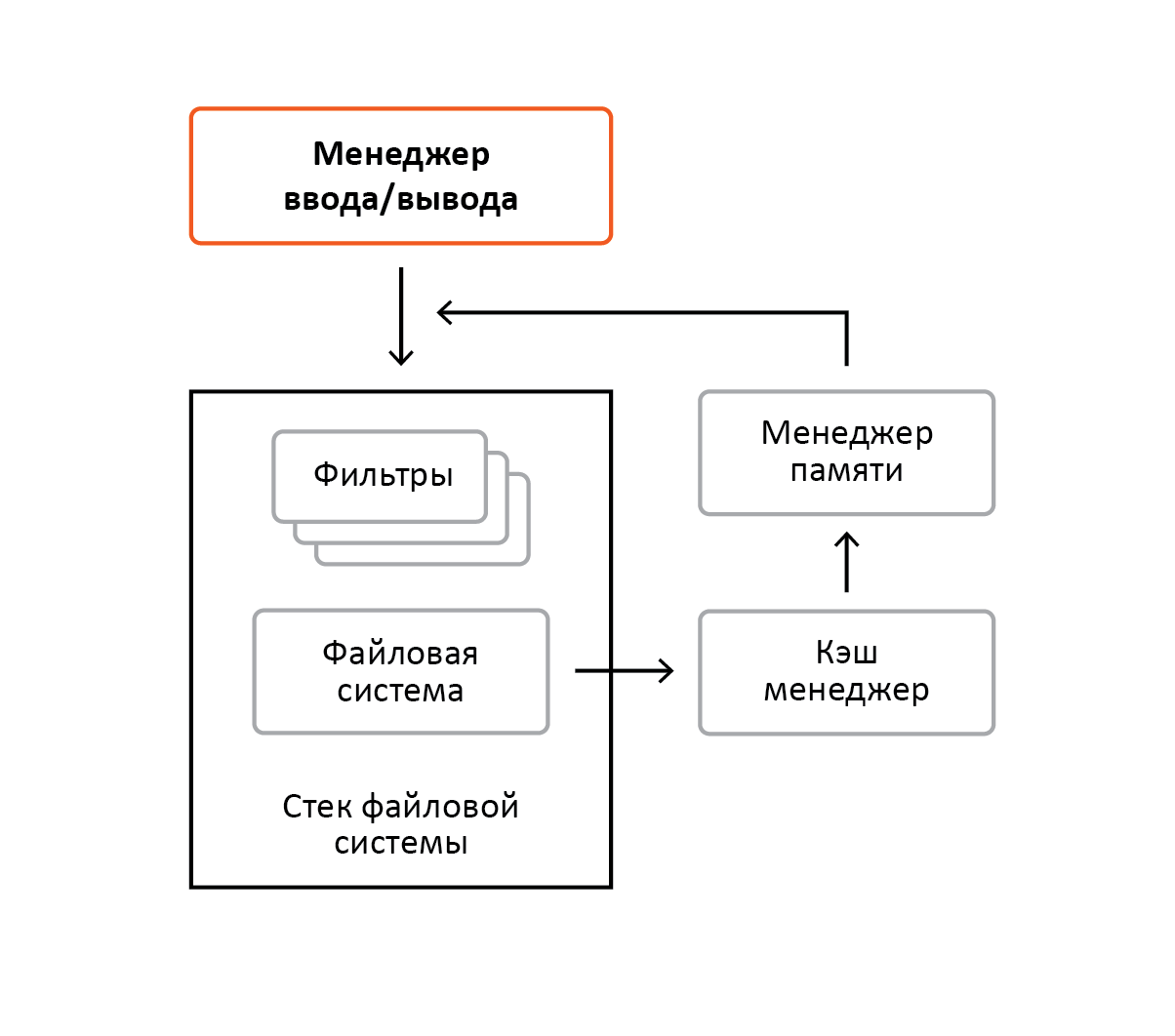

Nothing prevents a file from being cached and mapped into memory at the same time, any number of times. Even if a file is cached and mapped into the memory of dozens of processes, the same physical memory is used for it. This is how physical memory is saved. Figure 5 shows an example in which one process reads a file in the usual way while another process maps the same file into its virtual memory.

Figure 5

As the figure above shows, the same physical memory is mapped to the virtual memory of process B and to the virtual memory of the cache manager. When process A reads the file into buffer D, it calls the I/O manager, which builds a request and sends it to the file system. The file system, in turn, calls the cache manager, which simply copies the file, mapped to the cache manager's virtual memory range C, into buffer D of process A. Because by the time the cache manager was called the file had not only been mapped but range C had also already been accessed, the operation completes without the memory manager. Process B, when reading or writing range E, in effect accesses the same physical pages that the cache manager accessed when copying the file.
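The effect of sharing the same pages can be observed from user mode. Below is a minimal sketch, assuming Python's standard `mmap` module and a local temporary file (both the file name and the data are hypothetical). One handle plays the role of process B's mapped range E, the other the role of process A's ordinary read into buffer D; a single script stands in for the two processes.

```python
import mmap
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "shared.bin")
with open(path, "wb") as f:
    f.write(b"A" * 4096)

with open(path, "r+b") as f:
    # "Process B": maps the file and writes through the mapping (range E).
    range_e = mmap.mmap(f.fileno(), 0)
    range_e[0:5] = b"HELLO"

    # "Process A": reads the same file in the usual way (buffer D).
    # The mapping has not been explicitly flushed to disk.
    with open(path, "rb", buffering=0) as reader:
        buffer_d = reader.read(5)

    range_e.close()
```

On mainstream operating systems the ordinary read is expected to see the data written through the mapping, because for a local file both paths end up at the same cached pages.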

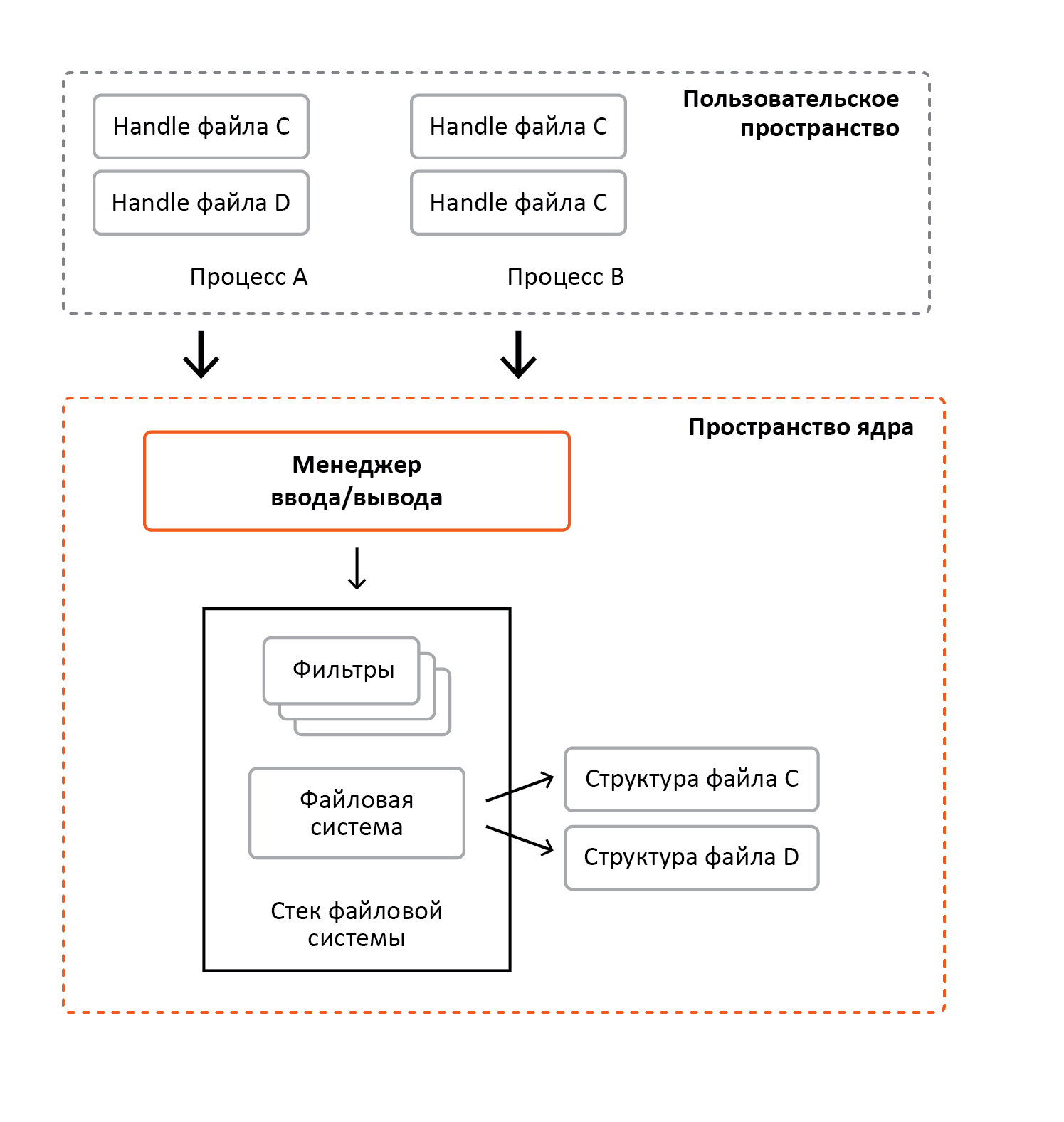

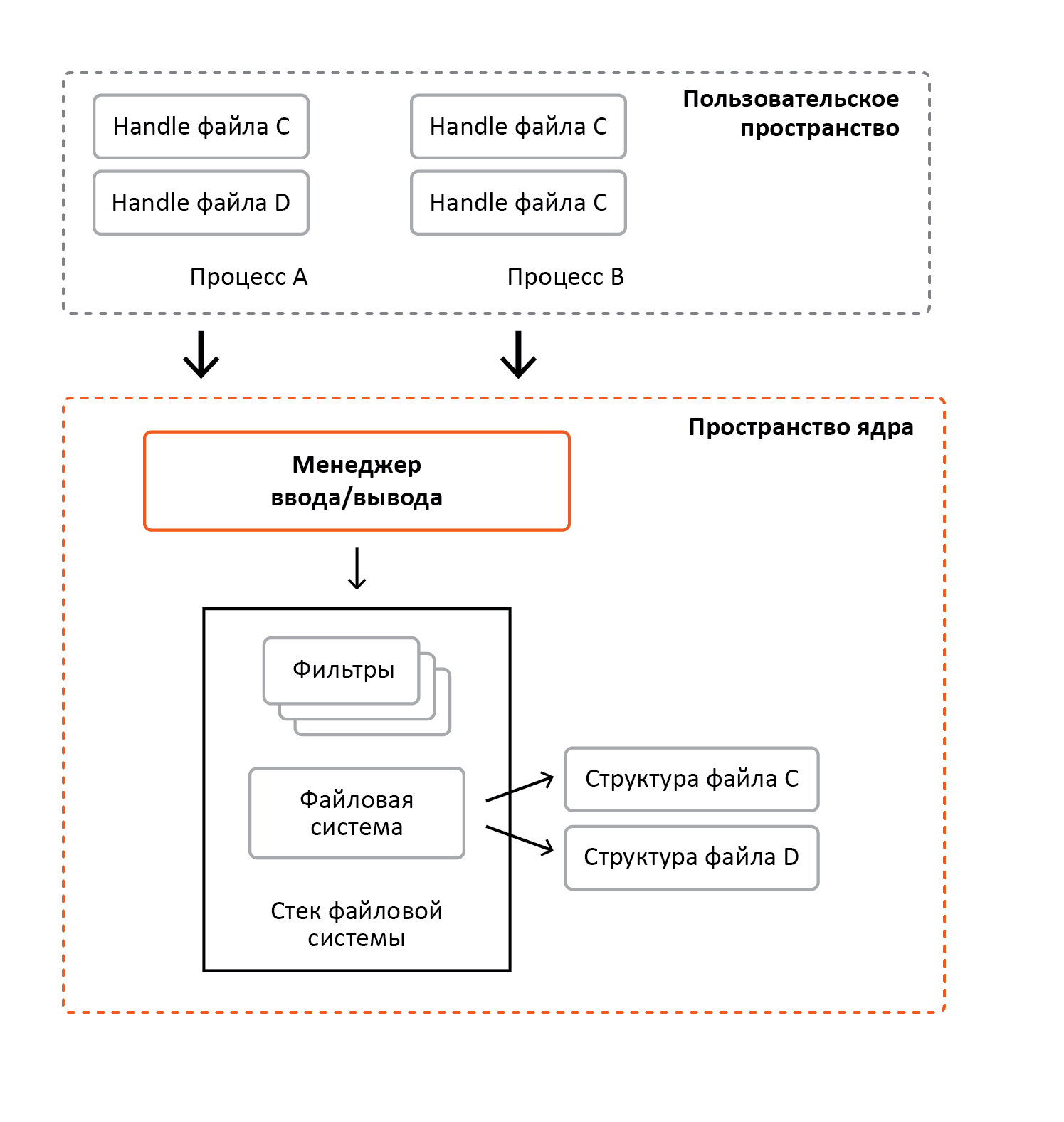

File systems accept requests from user software and from other drivers. A file must be opened before it can be accessed. When a file open/create request succeeds, the file system builds the in-memory structures that are used by the cache manager and the memory manager. Note that these structures are unique per file. That is, if a particular disk file is already open when another open request arrives for the same file, the file system reuses the structures it created earlier. In effect, they are the software representation of the disk file in memory. Figure 6 shows an example of how open instances of files correspond to their structures.

Figure 6

In the figure, process A has opened file C and file D, and process B has opened file C twice. Thus there are three open instances of file C but only one structure built by the file system. File D has been opened once, so there is one open instance corresponding to its structure.
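The relationship between open instances and per-file structures can be modelled in a few lines. This is a sketch with hypothetical names (`FileStructure`, `OpenInstance`, `ToyFileSystem`); a real file system keeps far more state, but the lookup-or-create logic is the point here.

```python
class FileStructure:
    """One per disk file, shared by every open instance of that file."""

    def __init__(self, path):
        self.path = path
        self.open_count = 0


class OpenInstance:
    """One per successful open request."""

    def __init__(self, process, structure):
        self.process = process
        self.structure = structure


class ToyFileSystem:
    def __init__(self):
        self.structures = {}  # path -> FileStructure

    def open(self, process, path):
        # Reuse the structure if the file is already open, else build it.
        if path not in self.structures:
            self.structures[path] = FileStructure(path)
        structure = self.structures[path]
        structure.open_count += 1
        return OpenInstance(process, structure)


fs = ToyFileSystem()
a_c = fs.open("A", "C")   # process A opens file C
a_d = fs.open("A", "D")   # process A opens file D
b_c1 = fs.open("B", "C")  # process B opens file C
b_c2 = fs.open("B", "C")  # process B opens file C again
```

After these four opens the model holds two structures: one for file C with three open instances, and one for file D with a single open instance.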

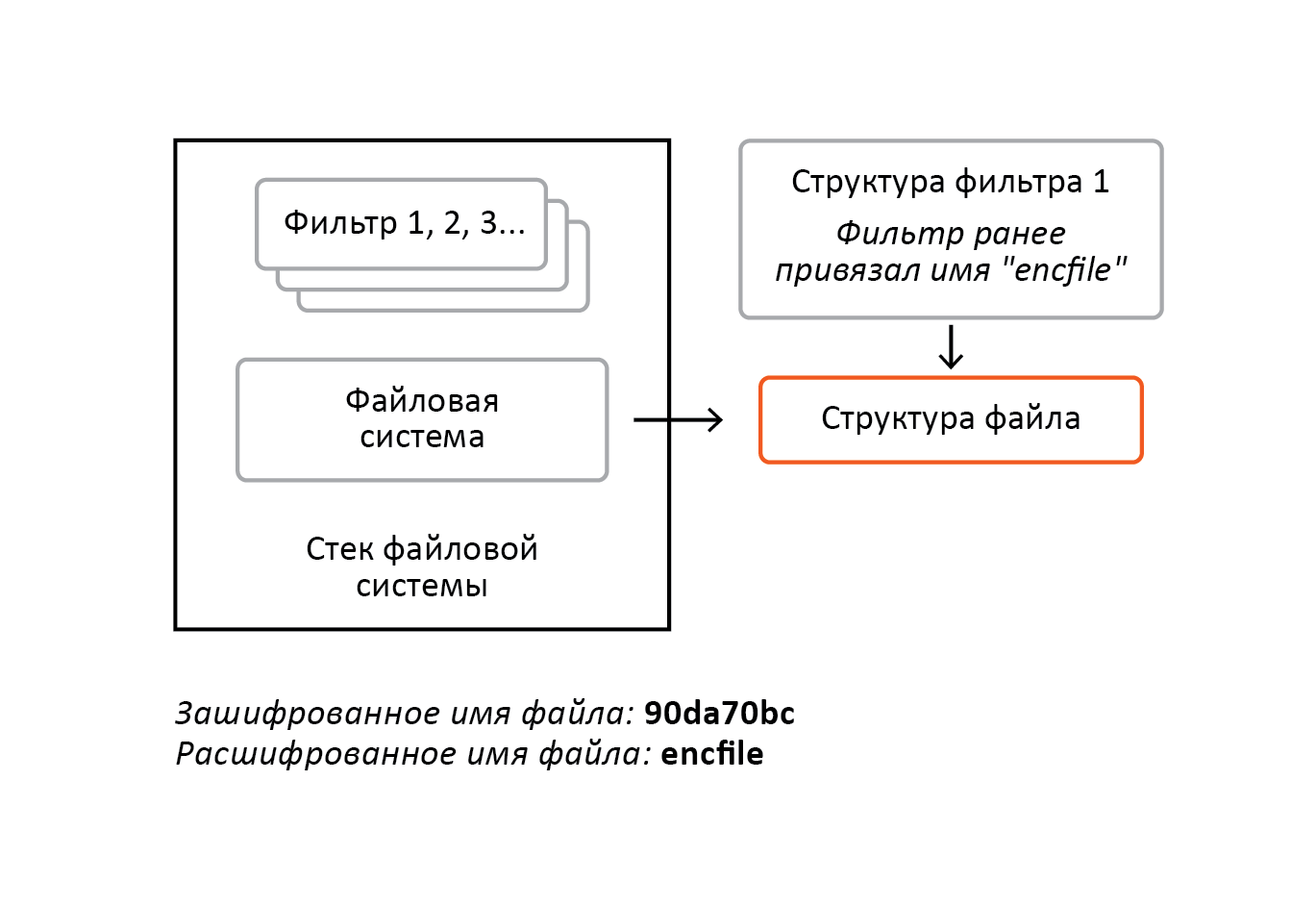

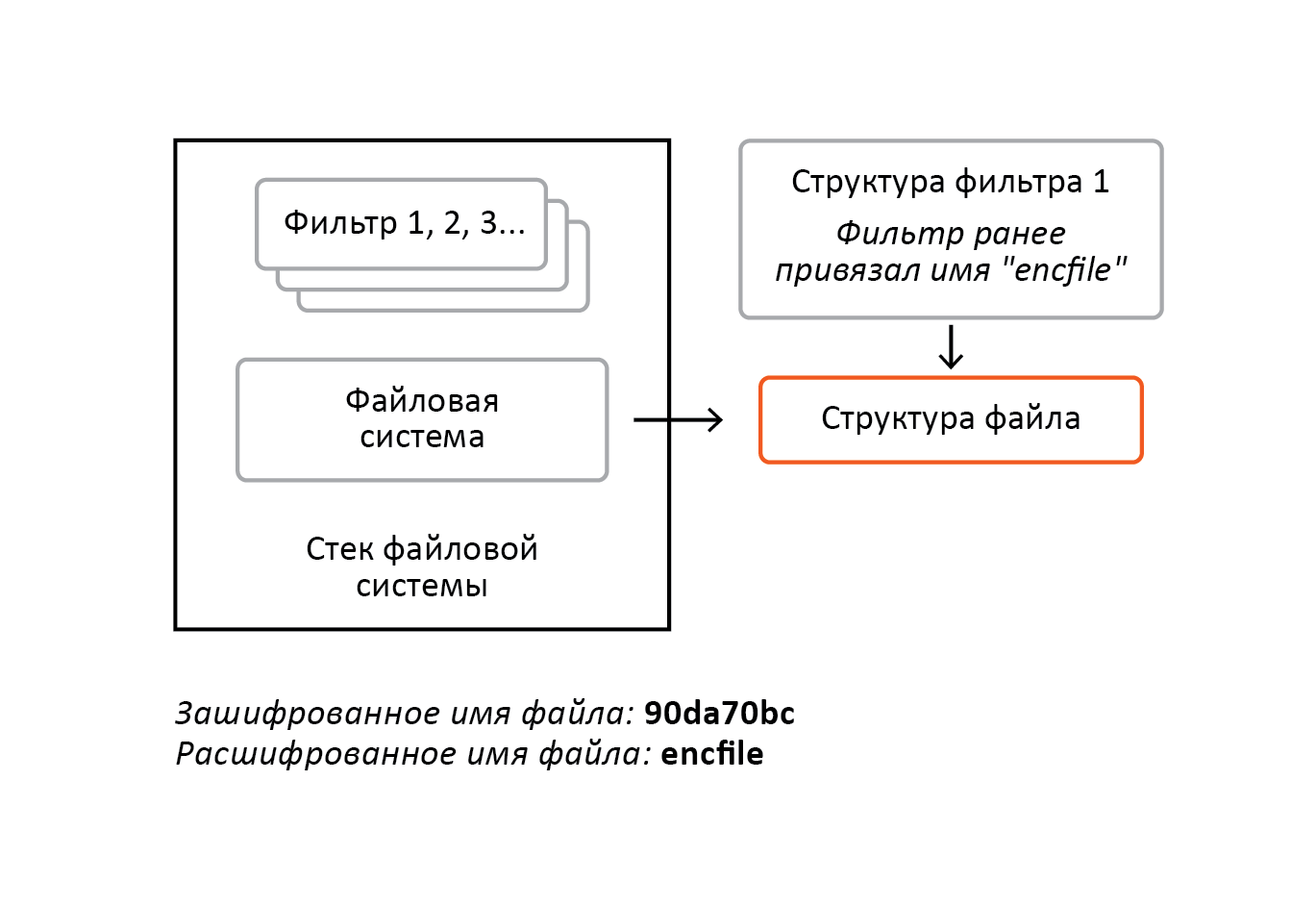

Requests sent to a file system are not processed by it immediately. They first pass through a chain of drivers that want to track such requests. These drivers are called filters. Filters can see requests before they reach the file system and also after the file system has processed them. For example, a file encryption filter can track read/write requests in order to decrypt/encrypt the data. In this way file data can be encrypted without modifying the file systems themselves. Filters can attach their own data to the file structures that the file system builds. Together, the filter drivers and the file system driver form the file system stack. The number of filters varies, and so do the filters themselves. In theory there may be none at all, but in practice this does not happen. Figure 7 shows a file system stack with three filters.

Figure 7

Before a request reaches the file system, it passes through filters 1, 2 and 3 in turn. Once the file system has processed the request, the filters see it in reverse order: it passes through filters 3, 2 and 1. In the example above, filter 1 and filter 3 have also attached their structures to the file structure that the file system built after executing the file open/create request.
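The ordering of the stack can be illustrated with a small model. The names below (`Filter`, `send_request`, the `contexts` dictionary) are hypothetical and do not correspond to any Windows API; the sketch only reproduces the order in which the filters see a request and the fact that filters 1 and 3 attach their own data.

```python
class Filter:
    """Toy filter: sees a request before and after the file system does."""

    def __init__(self, name, log, attach_context=False):
        self.name = name
        self.log = log
        self.attach_context = attach_context

    def pre(self, request):
        self.log.append(f"pre {self.name}")

    def post(self, request, file_structure):
        self.log.append(f"post {self.name}")
        if self.attach_context:
            # Attach this filter's own data to the per-file structure.
            file_structure["contexts"][self.name] = {"seen": request}


def send_request(filters, request, log):
    for flt in filters:                # on the way down: 1, 2, 3
        flt.pre(request)
    log.append("file system")
    file_structure = {"contexts": {}}  # built by the file system on open
    for flt in reversed(filters):      # on the way back: 3, 2, 1
        flt.post(request, file_structure)
    return file_structure


log = []
stack = [Filter("1", log, True), Filter("2", log), Filter("3", log, True)]
structure = send_request(stack, "open file", log)
```

The log records the request travelling down through filters 1, 2 and 3, reaching the file system, and then travelling back through filters 3, 2 and 1.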

The vast majority of tasks can be solved by filtering, but our case is unusual. As noted earlier, it requires simultaneous access to encrypted and decrypted content and also encryption of file names. Nevertheless, let us try to design a filter that solves this task.

Figure 8 shows the situation when the file was previously opened decrypted.

Figure 8

This means that the filters saw the decrypted name and, as the figure shows, may have attached this name to the structure that the file system builds (as mentioned earlier, this structure is unique to a specific disk file) for use in later file operations. At that moment the file is opened encrypted, which means the filters now see the encrypted name. How will they behave when a decrypted name is already attached to the file structure? Clearly the behavior is unpredictable, although the consequences will not necessarily be fatal.

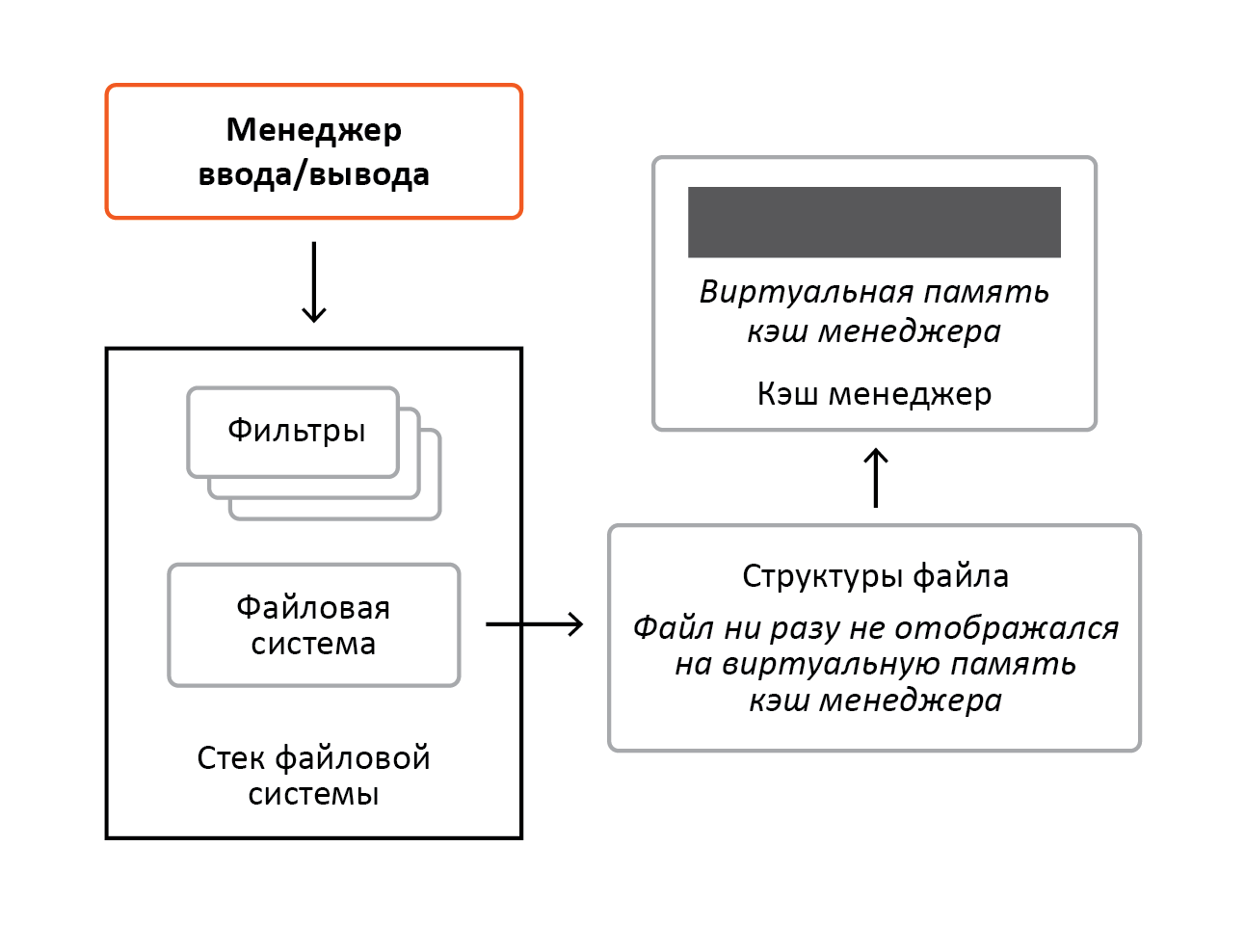

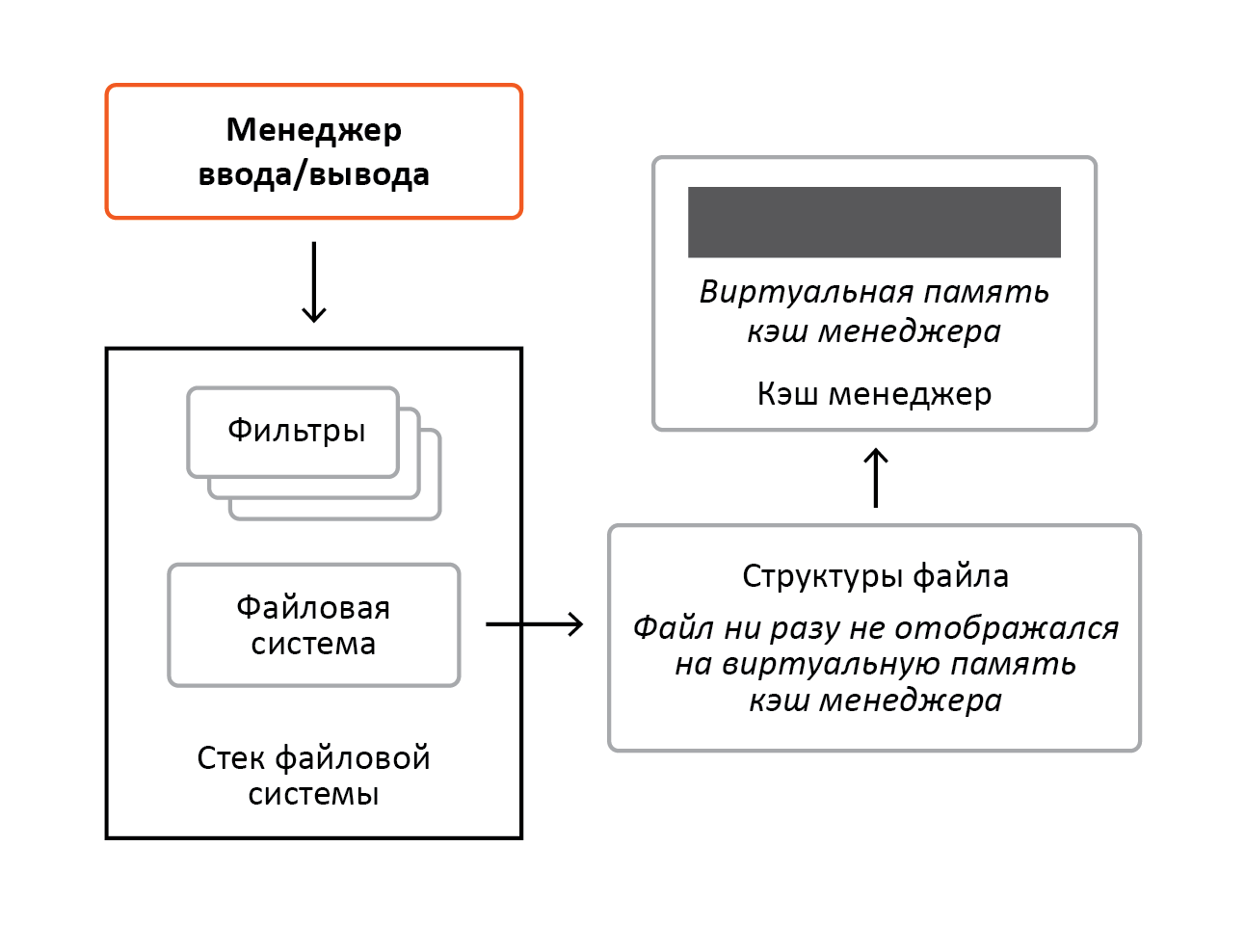

Beyond this, access to the file's contents also causes problems, and much more serious ones. Let us return to the situation in which the file has been opened decrypted and encrypted at the same time. This is shown in Figure 9; the file has not yet been read or written.

Figure 9

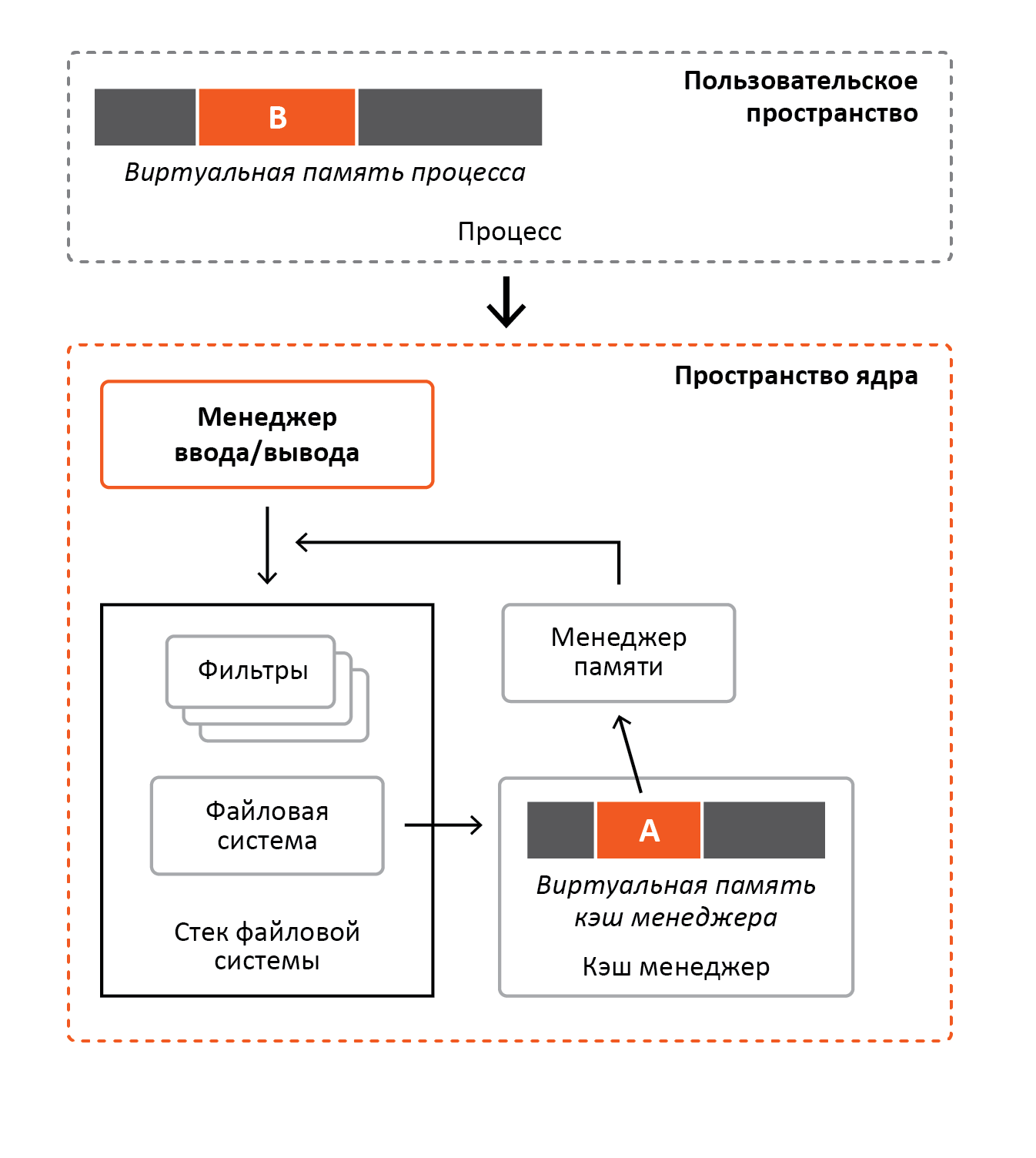

Now suppose a request arrives to read the decrypted content. The file system uses the cache manager's services and passes it the file structure, to which both the cache manager and the memory manager attach their own data for further control over the mapping and caching of the file. This is shown in Figure 10.

Figure 10

Next comes a request to read the encrypted content, and the file system again passes the file structure to the cache manager. Since the cache manager and the memory manager have already attached their data for this file to the structure, they simply use it to execute the request. That is, these structures now indicate where the file is mapped, and the cache manager simply copies the file data from virtual memory to the requester's buffer. Recall that on the first access the file was cached decrypted, so on encrypted access the requester's buffer receives decrypted data.
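The failure can be reproduced in a toy model. Everything here is an assumption made for illustration: the single-byte XOR "cipher", the class name `FilteredFileSystem` and the `decrypted` flag that stands for the kind of access. The essential property is that there is one cache per disk file.

```python
KEY = 0x5A  # hypothetical single-byte XOR "cipher", for illustration only


def xor(data):
    return bytes(b ^ KEY for b in data)


class FilteredFileSystem:
    """One file structure, and therefore one cache, per disk file."""

    def __init__(self, disk):
        self.disk = disk  # file name -> ciphertext stored on disk
        self.cache = {}   # file name -> cached file data

    def read(self, name, decrypted):
        if name not in self.cache:
            raw = self.disk[name]  # paging read from disk
            # The encryption filter transforms the data on its way into
            # the cache, according to the access that caused the read.
            self.cache[name] = xor(raw) if decrypted else raw
        # Every later read is served from the same cache, whatever
        # kind of access it is.
        return self.cache[name]


disk = {"doc": xor(b"secret")}
fs = FilteredFileSystem(disk)
decrypted_view = fs.read("doc", decrypted=True)
encrypted_view = fs.read("doc", decrypted=False)
```

The second caller asked for the encrypted content but receives the plaintext that the first read left in the shared cache.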

We have just examined two fundamental problems. In practice there were more. For example, the task also requires adding a header to each encrypted file, which filtering cannot handle either. To get around all of these problems at once, a solution was found: a virtual file system.

In essence, a virtual file system is not much different from an ordinary one. The fundamental difference is that an ordinary file system works with a disk, whereas a virtual one works with the files of other file systems; that is, it is an ordinary consumer of file system services. Figure 11 shows the concept of a virtual file system.

Figure 11

Unlike ordinary file systems, a virtual file system does not register itself with the system (this was not mentioned above, but for the operating system to use the services of a particular file system, that file system must register itself), and so no requests will be sent to it. To solve this, we use a filter on the regular file system, but this time its functions are very simple and limited; one of them, and the main one, is tracking access to encrypted files. As soon as the filter sees such a request, it simply redirects it to the virtual file system. If a regular file is being accessed, the request is passed on to the original file system.

Now let us analyze decrypted and encrypted access again, this time in the context of the virtual file system. Figure 12 shows an example in which both the decrypted and the encrypted contents of a particular file have been accessed.

Figure 12

Let us once again take the situation in which the file was opened decrypted. This means that while the open request was being executed, our filter saw it and redirected it to the virtual file system. On receiving the request, the virtual file system evaluates the type of access (decrypted or encrypted). Since the access is decrypted, it first converts the decrypted name into the encrypted one and then tries to open the file through the native file system under that name (that is, on the native file system the file is encrypted). If this succeeds, the virtual file system builds the in-memory structures that will later be used by the cache manager and the memory manager. Now suppose the file is opened encrypted. The filter again redirects the request to the virtual file system without evaluating anything. The virtual file system evaluates the type of access and, since the access is encrypted, simply tries to open the file through the native file system under the name it was given. Again, if this succeeds, the virtual file system builds in-memory structures for the cache manager and the memory manager, but, unlike in the decrypted case, these are different structures. If the file is opened decrypted again, the virtual file system reuses the structures it built on the first decrypted access. If the file is opened encrypted again, it reuses the structures it built on the first encrypted access. In this way, access to decrypted content and access to encrypted content are separated.
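The open logic described above can be sketched as follows. The name transformation `encrypt_name`, the `decrypted` flag and the class `VirtualFileSystem` are hypothetical stand-ins; the article does not say how names are encrypted or how the type of access is determined, so both are simply assumed here.

```python
def encrypt_name(name):
    # Hypothetical reversible name transformation, for illustration only.
    return name[::-1] + ".enc"


class VirtualFileSystem:
    def __init__(self, native_files):
        self.native_files = native_files  # names present on the native FS
        self.structures = {}              # (native name, mode) -> structure

    def open(self, name, decrypted):
        # Decrypted access: convert the name first. Encrypted access:
        # use the name exactly as it was given.
        native_name = encrypt_name(name) if decrypted else name
        if native_name not in self.native_files:
            raise FileNotFoundError(name)
        mode = "decrypted" if decrypted else "encrypted"
        key = (native_name, mode)
        if key not in self.structures:
            # Separate structures for each kind of access to the file.
            self.structures[key] = {"native_name": native_name, "mode": mode}
        return self.structures[key]


stored_name = encrypt_name("report.txt")
vfs = VirtualFileSystem({stored_name})
d1 = vfs.open("report.txt", decrypted=True)
e1 = vfs.open(stored_name, decrypted=False)
d2 = vfs.open("report.txt", decrypted=True)
e2 = vfs.open(stored_name, decrypted=False)
```

Both kinds of access end up at the same native file, but each gets its own structure, and repeated opens of the same kind reuse it.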

Figure 13 shows the situation in which neither the decrypted nor the encrypted contents of the file have yet been read or written.

Figure 13

Now, if a decrypted read is performed, the virtual file system calls the cache manager's services and, as shown in Figure 14, passes it the in-memory structures that it built when the file was opened decrypted.

Figure 14

The cache manager maps the file into virtual memory and copies the data to the requester's buffer. During the copy, the memory manager sends a read request to the virtual file system, which in turn sends a read request to the native file system. Since the structure for the decrypted file was passed to the cache manager, the virtual file system decrypts the data and reports completion to the memory manager; this is how the decrypted content gets cached. If an encrypted read is performed, the virtual file system passes the cache manager, as shown in Figure 15, the in-memory structures that it built when the file was opened encrypted.

Figure 15

The cache manager again maps the file into virtual memory (since the file has not yet been mapped for these structures) and copies the data to the requester's buffer. Once more, during the copy the memory manager sends a read request to the virtual file system, which again sends a read request to the native file system. Since the structure for the encrypted file was passed to the cache manager, the virtual file system reports completion to the memory manager without decrypting the data. This is how the encrypted content gets cached.

As we can see, the virtual file system solves the fundamental problems that prevent simultaneous access to the decrypted and encrypted contents of a file and that force us to abandon the operating system's classical mechanisms. When filtering, we can only attach data to the in-memory file structures that the file system returns; since we do not build these structures, we cannot intervene in them or manage them. With a virtual file system we build them entirely ourselves and therefore have full control over them, which this task requires. For example, we need to keep the decrypted and encrypted contents of a file consistent. Imagine that data has been written to the decrypted file and is still in the cache, not yet on disk, and at that moment a request arrives to read the encrypted contents of the file. In response, the virtual file system flushes the decrypted content to disk and purges the encrypted cache, which makes the cache manager send a fresh read request to the virtual file system for the encrypted read. Within the filtering approach, this problem cannot be solved in principle.
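The consistency scenario can be sketched with two separate caches. As before, this is a toy model under stated assumptions: the XOR "cipher", the class `CoherentVirtualFileSystem` and its method names are hypothetical, and the dictionaries stand in for the cache manager's state and for the native file system.

```python
KEY = 0x5A  # hypothetical single-byte XOR "cipher", for illustration only


def xor(data):
    return bytes(b ^ KEY for b in data)


class CoherentVirtualFileSystem:
    def __init__(self, disk):
        self.disk = disk        # native file system: name -> ciphertext
        self.plain_cache = {}   # cache behind the decrypted structures
        self.cipher_cache = {}  # cache behind the encrypted structures
        self.dirty = set()      # files with unflushed decrypted writes

    def read_decrypted(self, name):
        if name not in self.plain_cache:
            self.plain_cache[name] = xor(self.disk[name])
        return self.plain_cache[name]

    def write_decrypted(self, name, data):
        self.plain_cache[name] = data  # stays in the cache for now
        self.dirty.add(name)

    def read_encrypted(self, name):
        if name in self.dirty:
            # Flush the decrypted content to disk (encrypting it) ...
            self.disk[name] = xor(self.plain_cache[name])
            self.dirty.discard(name)
            # ... and purge the stale encrypted cache.
            self.cipher_cache.pop(name, None)
        if name not in self.cipher_cache:
            # Fresh read from the native file system, no decryption.
            self.cipher_cache[name] = self.disk[name]
        return self.cipher_cache[name]


vfs = CoherentVirtualFileSystem({"doc": xor(b"old data")})
before = vfs.read_encrypted("doc")      # fills the encrypted cache
vfs.write_decrypted("doc", b"new data")  # not on disk yet
after = vfs.read_encrypted("doc")       # triggers flush and purge
```

Because the virtual file system owns both sets of structures, it can decide when to flush one cache and purge the other; the encrypted reader then sees the ciphertext of the new data rather than a stale copy.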

Developing the virtual file system meant dealing with unusual problems. This is partly because we work with the files of file systems, whereas ordinary file systems work with a disk. For example, a bug was found in the NTFS file system: accessing X:\$mft\<any name> caused all access to disk X to hang. Investigation showed that NTFS did not release the synchronization objects for the $mft file, which is the index of all files on the disk. Consequently, finding any file on the disk requires reading the $mft file first, and access to it was hung. Another example, though it can hardly be called unusual, is a bug found in the Windows 8 kernel, because of which the memory manager assumes that a file's in-memory structure is always of the latest version. As a result, it tries to use parts of this structure that may not actually be present, and this led to a BSOD.

Implementing a virtual file system is much more complicated than implementing a filter, but such a mechanism gives far more flexibility in manipulating files, including the kind just described, even though at first glance the task may seem trivial.

With this approach to encryption, simultaneous access by software processes to encrypted and decrypted content was successfully implemented, along with file name encryption, which makes cryptographic protection of information highly transparent.

This approach to "transparent" file encryption in Windows has been successfully implemented in the corporate product Secret Disk Enterprise ("Aladdin RD"), which is used by many organizations in Russia. This, in turn, demonstrates the viability and promise of the idea for building on-disk file encryption software.

In conclusion, the technological complexity of the Windows file system and the lack of standard mechanisms for solving problems like those described in this article will always be an obstacle to creating convenient and simple information protection software. In such cases, the only correct solution is to implement an original mechanism of your own that gets around these limitations without sacrificing the software's functionality.

The main "headache" of the developer of encryption tools in this process is to ensure "encryption transparency", i.e. it is necessary to harmoniously integrate into the structure of the operating system processes and at the same time ensure that users are not involved in the encryption process and even more so its maintenance. The requirements for modern means of protection are increasingly excluding the user from the process of protecting information. Thus, for this user, comfortable conditions are created that do not require the adoption of "incomprehensible" decisions to protect information.

This article will reveal the idea of efficiently integrating the means of encrypting information on a disk with the processes of the Windows file system.

The developers set a goal to create a disk encryption mechanism that meets the requirements of maximum transparency for users. Requirements will have to be met by effectively interacting this encryption mechanism with the processes of the Windows operating system, which are responsible for managing the file system. The effectiveness of the encryption mechanism should also be confirmed by the high performance of encryption processes and the rational use of operating system resources.

Initially, the task was set to provide simultaneous access to encrypted and decrypted content, as well as to encrypt file names. This causes the main difficulties, because such a requirement goes against the prevailing Windows architecture. To understand the essence of the problem, first we need to analyze some of the highlights of this operating system.

In Windows, all file systems rely on subsystems such as memory manager and cache manager, and the memory manager, in turn, relies on file systems. It would seem a vicious circle, but everything will become clear further. Below, in Figure 1, the listed components are shown, as well as an I / O manager, which accepts requests from subsystems (for example Win32) and from other system drivers. Also in the figure, the terms “filter” and “file system stack” are used, which will be discussed in more detail below.

Picture 1

Let us examine a mechanism such as displaying a file for memory. Its essence lies in the fact that when accessing virtual memory in reality, part of the file is read. This is accomplished using the hardware mechanisms of the processors and the memory manager itself. Any ranges of process virtual memory are described by descriptors. If for some range there is no descriptor, then there is nothing in this area of virtual memory, and access to such range inevitably leads to the collapse of the process. If a physical memory is fixed for a range, access to these virtual addresses leads to normal access to physical memory, such memory is also called anonymous. If the file is mapped to virtual memory, then accessing these addresses will read part of the file from the disk into physical memory, after which it will be accessed in the usual way. Those. these two cases are very similar, the only difference is that for the latter, part of the file will be read into the corresponding physical memory. About all these types of access descriptor and contains information. These descriptors are memory manager structures that it manages to perform the required tasks. As it is not difficult to guess, in order to read a part of a file into a page of physical memory, the memory manager must send a request to the file system. Figure 2 shows an example of a virtual memory of a process with two ranges, A and B, access to which has never been performed.

')

Figure 2

The range A displays the executable file of the process itself, and the range B displays the physical memory. Now, when the process performs the first access to range A, the memory manager will receive control and the first thing he will do is evaluate the type of range. Since a file is mapped to range A, the memory manager first reads the corresponding part of it from the file into physical memory, and then displays it on the process range A, thus further access to the read content will be done without the memory manager. For the B range, the memory manager will perform the same sequence, the only difference is that instead of reading data from the file, it will simply display the free physical pages of memory on the B range, after which access to this range will be done without the involvement of the memory manager. Figure 3 shows an example of a virtual memory of a process after the first access to ranges A and B.

Figure 3

As can be seen from the figure, when accessing both ranges, access to physical memory is performed without the participation of the memory manager, since previously, when first accessed, it mapped physical memory to ranges A and B, and previously read part of the corresponding file to physical memory of range A.

The cache manager is the central mechanism for all open files in the system on all disks. Using this mechanism allows not only to speed up access to files, but also to save physical memory. The cache manager does not work by itself, unlike the memory manager. It is completely managed by file systems, and all the necessary information about the files (for example, size) is provided by them. Whenever a read / write request arrives at the file system, the file system does not read the file from the disk; instead, it calls the manager's cache services. The cache manager, in turn, using the services of the memory manager, maps the file to virtual memory and copies it from memory to the interrogator's buffer. Accordingly, when accessing this memory, the memory manager will send a request to the file system. And it will be a special request that will say that the file should be read directly from the disk. If you are accessing a file that was previously already displayed by the cache manager, then it will not be re-mapped to virtual memory. Instead, the cache manager will use the virtual memory where the file was mapped earlier. File mapping is tracked through the structures that file systems pass to the cache to the manager when calling its services. About these structures a little more will be discussed below. Figure 4 shows an example of reading a file by a process.

Figure 4

As shown in the figure above, the process reads the file into buffer B. To perform the read, the process accesses the I / O manager, which creates and sends a request to the file system. The file system, upon receiving a request, does not read the file from the disk, but calls the cache manager. Next, the cache manager assesses whether the file is mapped to its virtual memory, and if not, it calls the memory manager in order to display the file / part of the file. In this example, the file has already been displayed, and access to it has never been performed. Next, the cache manager copies to the C buffer the process mapped to the virtual memory range A. Since access to band A is performed for the first time, the memory manager will receive control, then it will evaluate the band, and since this is the memory mapped file, it considers its part in physical memory, after which it will display it on the range of virtual memory A. After that, as already described earlier, access to range A will be performed, bypassing the memory manager.

Nothing prevents you from simultaneously caching a file and displaying it to memory as many times as you like. Even if the file is cached and mapped to the memory of dozens of processes, the physical memory used for this file will be the same. This is the essence of saving physical memory. Figure 5 shows an example where one process reads a file in the usual way, and another process maps the same file to its virtual memory.

Figure 5

As can be seen from the figure above, the physical memory is mapped to the virtual memory of the process B and the virtual memory of the cache manager. When process A will read the file into buffer D, it will contact the I / O manager, which will generate and send a request to the file system. The file system, in turn, will refer to the cache manager, which simply copies the file mapped to the virtual memory range C cache manager, to buffer D of process A. Because at the time the cache manager was accessed, the file was already not only displayed, but also previously executed access to the range C, on which the file is displayed, the operation will be performed without the participation of the memory manager. Process B, when reading / writing range E, in effect will gain access to the same physical pages of memory that the cache manager accessed when copying a file.

File systems accept requests from user software or other drivers. Before accessing the file must be open. In case of successful execution of file open / create requests, the file system will form the memory structures used by the cache manager and the memory manager. Also note that these structures are unique to the file. Those. if a specific disk file was opened at the time when the same request came for the same file, the file system will use the previously created memory structures. In fact, they are a software representation of a disk file in memory. Figure 6 shows an example of matching open copies of files and their structures.

Figure 6

In the figure, process A has opened file C and file D, and process B has opened file C twice. Thus, there are three open instances of file C, when there is only one structure formed by the file system. File D was opened once, therefore, there is one open instance that corresponds to the structure formed by the file system.

Requests sent to the file system are not processed by it immediately. They first pass through a chain of drivers that want to monitor such requests. These drivers are called filters. They can see requests before they reach the file system and again after the file system has processed them. For example, a file encryption filter can intercept read/write requests in order to decrypt or encrypt the data. In this way, file data can be encrypted without modifying the file systems themselves. Filters can attach their own data to the file structures that the file system builds. Together, the filter drivers and the file system driver form the file system stack. The number of filters varies, and so do the filters themselves. In theory there may be none at all, but in practice this does not happen. Figure 7 shows a file system stack that contains three filters.

Figure 7

Before the request reaches the file system, it passes through filters 1, 2 and 3 in sequence. Once the file system has processed the request, the filters see it in reverse order, that is, the request passes through filters 3, 2 and 1. In the example above, filter 1 and filter 3 have also attached their own structures to the file structure that the file system built after executing the open/create request.
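The order in which the filters see a request can be sketched as follows. The `Filter` class and the `dispatch` function are hypothetical illustrations of the ordering only, not of any real driver interface.

```python
class Filter:
    """Hypothetical filter that records when it sees a request."""

    def __init__(self, name, log):
        self.name = name
        self.log = log

    def pre(self, request):
        # Called before the request reaches the file system.
        self.log.append(f"pre:{self.name}")

    def post(self, request):
        # Called after the file system has processed the request.
        self.log.append(f"post:{self.name}")


def dispatch(filters, file_system, request):
    for f in filters:              # top of the stack first: 1, 2, 3
        f.pre(request)
    result = file_system(request)
    for f in reversed(filters):    # completion goes back up: 3, 2, 1
        f.post(request)
    return result


log = []
stack = [Filter("1", log), Filter("2", log), Filter("3", log)]
result = dispatch(stack, lambda req: f"done:{req}", "read")
print(log)
```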

Filtering solves the vast majority of tasks, but our case is unusual. As noted earlier, what makes it unusual is the need to provide simultaneous access to encrypted and decrypted content and also to encrypt file names. Nevertheless, let us try to design a filter that solves this task.

Figure 8 shows the situation when the file was previously opened decrypted.

Figure 8

This means that the filters have seen the decrypted name and, as shown in the figure, can attach this name to the structure that the file system builds (as mentioned earlier, this structure is unique to a specific disk file) for use in later operations on the file. Now suppose the file is opened encrypted, which means that the filters see the encrypted name. How will they behave when the decrypted name is already attached to the file structure? Obviously, the behavior is unpredictable, although the consequences are not necessarily fatal.

In addition, access to the file contents also causes problems, and much more serious ones. Let us return to the situation in which the file is open both decrypted and encrypted at the same time. This situation is shown in Figure 9; the file has not yet been read or written.

Figure 9

Now imagine that a request arrives to read decrypted content. The file system calls the cache manager and passes it the file structure, to which both the cache manager and the memory manager attach their own data for subsequent control over the mapping and caching of the file. This situation is shown in Figure 10.

Figure 10

Next comes a request to read the encrypted content. The file system again passes the file structure to the cache manager, and since the cache manager and the memory manager have already attached their data for this file to that structure, they simply use that data to execute the request. That is, these structures now indicate where the file has been mapped, and the cache manager simply copies the file data from virtual memory to the requester's buffer. Recall that on the first access the file was cached decrypted, so on encrypted access the requester's buffer receives decrypted data.
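The failure described above can be reproduced with a toy model. The sketch below assumes a single cache per file structure and uses a one-byte XOR purely as a placeholder for real encryption; `SharedCacheFile` and `xor` are hypothetical names.

```python
KEY = 0x5A  # placeholder key; XOR stands in for a real cipher


def xor(data: bytes) -> bytes:
    return bytes(b ^ KEY for b in data)


class SharedCacheFile:
    """One file structure, hence one cache, shared by every kind of access."""

    def __init__(self, ciphertext: bytes):
        self.disk = ciphertext
        self.cache = None  # filled by whichever access comes first

    def read(self, want_decrypted: bool) -> bytes:
        if self.cache is None:
            raw = self.disk
            # The filter decrypts only if the first reader asked for plaintext.
            self.cache = xor(raw) if want_decrypted else raw
        # Later readers are served from the cache, whatever they asked for.
        return self.cache


plaintext = b"secret"
shared = SharedCacheFile(xor(plaintext))
first = shared.read(want_decrypted=True)
second = shared.read(want_decrypted=False)
print(first, second)
```

The second reader asked for encrypted content but receives the plaintext that the first access left in the shared cache.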

We have just examined two fundamental problems. In practice, there were more. For example, the task requires adding header information to each encrypted file, which filtering cannot handle either. To get around all these problems at once, a solution was found: a virtual file system.

A virtual file system is, in essence, not much different from an ordinary one. The radical difference is that an ordinary file system works with the disk, whereas a virtual one works with the files of other file systems, i.e. it is an ordinary consumer of file system services. Figure 11 shows the concept of a virtual file system.

Figure 11

Unlike ordinary file systems, a virtual file system does not register itself with the system (this was not mentioned above, but for the operating system to use the services of a particular file system, that file system must register itself), so no requests will be sent to it. To solve this problem, we use a filter on the regular file system, but this time its functions are very simple and limited; the main one is to track access to encrypted files. As soon as the filter sees such a request, it simply redirects it to the virtual file system. If a regular file is being accessed, the request is passed on to the original file system.
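The routing role of this filter can be sketched as a simple dispatcher. How the real filter recognizes an encrypted file is not described in this article; the prefix check below (`PROTECTED_PREFIX`, `is_encrypted_file`) is a purely hypothetical stand-in, as are the other names.

```python
PROTECTED_PREFIX = "X:\\vault\\"  # hypothetical marker of encrypted files


def is_encrypted_file(path: str) -> bool:
    # Assumption for illustration only; the real criterion is not given here.
    return path.startswith(PROTECTED_PREFIX)


def virtual_fs(request: dict):
    return ("virtual", request["path"])


def native_fs(request: dict):
    return ("native", request["path"])


def make_routing_filter(predicate, virtual, native):
    """Build a filter that redirects encrypted-file requests to the virtual FS."""
    def handle(request: dict):
        target = virtual if predicate(request["path"]) else native
        return target(request)
    return handle


route = make_routing_filter(is_encrypted_file, virtual_fs, native_fs)
to_virtual = route({"path": "X:\\vault\\report.doc"})
to_native = route({"path": "X:\\public\\readme.txt"})
print(to_virtual, to_native)
```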

Now let us analyze decrypted and encrypted access again, this time in the context of the virtual file system. Figure 12 shows an example in which both the decrypted and the encrypted contents of a specific file have been accessed.

Figure 12

Let us once again imagine that the file is opened decrypted. This means that, while the open request was being executed, our filter saw it and redirected it to the virtual file system. On receiving the request, the virtual file system evaluates the type of access (decrypted or encrypted). Since the access is decrypted, the virtual file system first converts the decrypted name into the encrypted one and then tries to open the file by that name through the native file system (i.e. the file name on the native file system is encrypted). If this succeeds, the virtual file system builds the memory structures that will later be used by the cache manager and the memory manager. Now imagine that the file is opened encrypted. The filter again redirects the request to the virtual file system without making any assessment of its own. The virtual file system evaluates the type of access, and since the access is encrypted, it simply tries to open the file through the native file system by the name it was given. Again, if this succeeds, the virtual file system builds memory structures for the cache manager and the memory manager. But unlike the decrypted case, these are different structures. If the file is then opened decrypted again, the virtual file system reuses the structures it built on the first decrypted access. If the file is opened encrypted again, the virtual file system reuses the structures it built on the first encrypted access. In this way, access to decrypted content is separated from access to encrypted content.
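The open logic described above might be modeled as follows. This is a sketch under stated assumptions: the access type is passed in explicitly as a `decrypted` flag (the article does not say how it is determined), name encryption is a placeholder XOR rendered as hex, and `VirtualFileSystem` and `encrypt_name` are hypothetical names.

```python
KEY = 0x5A  # placeholder key; XOR stands in for real name encryption


def encrypt_name(name: str) -> str:
    return bytes(b ^ KEY for b in name.encode()).hex()


class VirtualFileSystem:
    def __init__(self, native_files: dict):
        self.native_files = native_files  # encrypted name -> encrypted content
        self.structures = {}              # (native name, access type) -> structure

    def open(self, name: str, decrypted: bool) -> dict:
        # Decrypted access: convert the name first. Encrypted access: use as is.
        native_name = encrypt_name(name) if decrypted else name
        if native_name not in self.native_files:
            raise FileNotFoundError(name)
        key = (native_name, decrypted)
        # Reuse the structure built on the first access of the same type.
        return self.structures.setdefault(
            key, {"native_name": native_name, "decrypted": decrypted}
        )


vfs = VirtualFileSystem({encrypt_name("report.doc"): b"..."})
d1 = vfs.open("report.doc", decrypted=True)
e1 = vfs.open(encrypt_name("report.doc"), decrypted=False)
d2 = vfs.open("report.doc", decrypted=True)
e2 = vfs.open(encrypt_name("report.doc"), decrypted=False)
print(d1 is d2, e1 is e2, d1 is e1)
```

Both access types resolve to the same file on the native file system, yet each gets its own structure, which is what keeps the two views apart.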

Figure 13 shows the situation in which neither the decrypted nor the encrypted file contents have yet been read or written.

Figure 13

Now, if a decrypted read is performed, the virtual file system calls the cache manager and, as shown in Figure 14, passes it the memory structures that it built when the file was opened decrypted.

Figure 14

The cache manager maps the file into virtual memory and copies the data to the requester's buffer. During the copy, the memory manager sends a read request to the virtual file system, which in turn sends a read request to the native file system. Since the cache manager was given the memory structures for the decrypted file, the virtual file system decrypts the data and reports completion to the memory manager, so the decrypted content is cached. If an encrypted read is performed, the virtual file system passes to the cache manager, as shown in Figure 15, the memory structures that it built when the file was opened encrypted.

Figure 15

The cache manager again maps the file into virtual memory (since the file has not yet been mapped for these structures) and copies the data into the requester's buffer. Again, during the copy, the memory manager sends a read request to the virtual file system, which in turn sends a read request to the native file system. Since the cache manager was given the memory structures for the encrypted file, the virtual file system reports completion to the memory manager without decrypting the data. Thus the file is cached encrypted.
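The two read paths can be modeled with one cache per access type. In this toy sketch a one-byte XOR stands in for real encryption, and `Stream` and `ToyVirtualFile` are hypothetical names.

```python
KEY = 0x5A  # placeholder key; XOR stands in for a real cipher


def xor(data: bytes) -> bytes:
    return bytes(b ^ KEY for b in data)


class Stream:
    """Hypothetical per-access structure with its own cache."""

    def __init__(self, decrypted: bool):
        self.decrypted = decrypted
        self.cache = None


class ToyVirtualFile:
    def __init__(self, ciphertext: bytes):
        self.native_data = ciphertext  # what the native file system stores
        self.streams = {True: Stream(True), False: Stream(False)}
        self.native_reads = 0

    def _paging_read(self, stream: Stream) -> bytes:
        # Read from the native file system; decrypt only for the decrypted stream.
        self.native_reads += 1
        raw = self.native_data
        return xor(raw) if stream.decrypted else raw

    def read(self, decrypted: bool) -> bytes:
        stream = self.streams[decrypted]
        if stream.cache is None:       # first access through this structure
            stream.cache = self._paging_read(stream)
        return stream.cache


plaintext = b"secret"
vf = ToyVirtualFile(xor(plaintext))
plain = vf.read(decrypted=True)
cipher = vf.read(decrypted=False)
plain_again = vf.read(decrypted=True)
print(plain, cipher, vf.native_reads)
```

Each access type triggers its own read of the native file on first use and is served from its own cache afterwards, so plaintext never leaks into the encrypted view.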

As we can see, the virtual file system solves the fundamental problems that prevent simultaneous access to the decrypted and encrypted contents of a file and that force us to abandon the classic mechanisms of the operating system. With filtering, we can only attach data to the file memory structures that the file system returns, and since we do not build these structures, we cannot intervene in them or manage them. With a virtual file system, we build them entirely ourselves and therefore have full control over them, which this task requires. For example, we need to keep the decrypted and encrypted contents of a file consistent. Imagine that data has been written to the decrypted file and is still in the cache, not yet on disk. At that moment a request arrives to read the encrypted contents of the file. In response, the virtual file system flushes the decrypted content to disk and purges the encrypted cache, which forces the cache manager to send the encrypted read request to the virtual file system again. Within the framework of filtering, this problem cannot be solved in principle.
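The consistency step in this example can be sketched as follows. The model assumes whole-file caches and a placeholder XOR cipher; `CoherentVirtualFile` and its methods are hypothetical names used only to show the flush-then-purge order.

```python
KEY = 0x5A  # placeholder key; XOR stands in for a real cipher


def xor(data: bytes) -> bytes:
    return bytes(b ^ KEY for b in data)


class CoherentVirtualFile:
    def __init__(self, ciphertext: bytes):
        self.native_data = ciphertext  # content on the native file system
        self.plain_cache = None
        self.plain_dirty = False
        self.cipher_cache = None

    def write_decrypted(self, data: bytes) -> None:
        # The write lands in the decrypted cache only; the disk is unchanged.
        self.plain_cache = data
        self.plain_dirty = True

    def read_encrypted(self) -> bytes:
        if self.plain_dirty:
            # Flush the decrypted cache to disk, encrypting on the way down...
            self.native_data = xor(self.plain_cache)
            self.plain_dirty = False
            # ...and purge the encrypted cache so it is read again from disk.
            self.cipher_cache = None
        if self.cipher_cache is None:
            self.cipher_cache = self.native_data
        return self.cipher_cache


cvf = CoherentVirtualFile(xor(b"old"))
stale = cvf.read_encrypted()
cvf.write_decrypted(b"new")
fresh = cvf.read_encrypted()
print(stale, fresh)
```

Without the purge, the second encrypted read would return the ciphertext of the old content from the encrypted cache.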

While developing the virtual file system, we had to deal with unusual problems. This is partly because we work with the files of file systems, whereas ordinary file systems work with the disk. For example, a bug was found in the NTFS file system. It showed up when accessing the file X:\$mft\<any name>, which caused all access to disk X to hang. Investigation established that NTFS did not release its synchronization mechanisms for the $mft file, which is the enumerator of all files on the disk. Consequently, to find any file on the disk, the $mft file must first be read, and access to it was hung. Another example, which cannot be called unusual, is a bug found in the Windows 8 kernel, as a result of which the memory manager assumes that the memory structure of a file is always of the latest version. Because of this, it tries to use parts of this structure that may not actually be present, which led to a BSOD.

Implementing a virtual file system is much more complicated than implementing a filter, but having such a mechanism gives far more flexibility in manipulating files, including the kind of manipulation just discussed, although at first glance the task may seem trivial.

By applying this approach to encryption, we successfully implemented simultaneous access of software processes to encrypted and decrypted content, as well as encryption of file names, which makes it possible to achieve a high degree of transparency in cryptographic information protection.

It should be noted that this approach to ensuring the "transparency" of file encryption in Windows has been successfully implemented in the corporate product Secret Disk Enterprise ("Aladdin RD"), which is used by many organizations in Russia. This, in turn, demonstrates the viability and promise of applying this idea when creating programs for encrypting files on a disk.

In conclusion, the technological complexity of the Windows file system and the lack of standard mechanisms for solving problems such as those described in this article will always be an obstacle to creating convenient and simple programs that protect information. In this case, the only correct solution is to implement an original mechanism of one's own that circumvents these limitations without losing software functionality.

Source: https://habr.com/ru/post/304024/