Automation of software development: can the "programmer" turn into a "computer operator"?

Where is progress in software production leading us? Development tools are becoming more sophisticated, and some development stages are fully or partially automated. Conservatives, of course, will say that today's programmer is not what he used to be, that such automation simplifies the tasks and erodes the qualifications of the software engineer. In their opinion, the people degrade as the tools develop.

But if you dig deeper, questions arise. Which programmers are we talking about? Those who design software? Those who develop algorithms? Lead developers or simple "coders"? In any case, there is no consensus here.


Therefore, before drawing any conclusions, it is worth at least recalling how we got here.

Starting point

As Mikhail Gustokashin said in a lecture at Yandex, let's start from the very beginning:

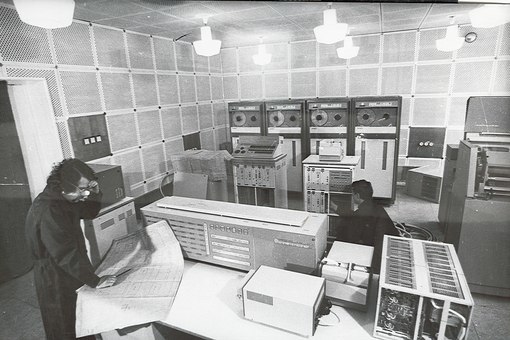

At the very beginning, computers did not even have a keyboard! That is, everything was very bad: they had neither a keyboard nor a screen, only punched cards (those little cards with holes, or without holes, in them).

And programs at that time were written in machine codes: every operation in the computer (addition, multiplication, some more complex operations) had a code. People looked this code up in a table themselves, along with all the memory addresses; all of it was punched by hand and fed into the reader, and then it was all computed. Of course, the programmer's work was probably not very interesting back then (punching holes), and as science and technology developed, people naturally began to invent all sorts of more “interesting” things.

For example, assembler, which already made life easier. Well, how did it make life easier? Instead of remembering some “magic” code for each instruction, you used words resembling “human” English, such as add or mov, followed by the registers, memory areas, or variables on which to perform the operation. But clearly this still required considerable mental effort to keep in your head which register holds what, where each variable lives, and what is going on overall. Why was that? Because computers were “stupid” and could not understand anything more “intelligent”.
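To make the step from numeric machine codes to mnemonics concrete, here is a minimal sketch of what an assembler does. The opcode and register numbers below are invented for an imaginary machine and do not correspond to any real instruction set; the function name `assemble` is likewise only illustrative.

```python
# Hypothetical opcode and register tables for an imaginary machine;
# real instruction sets use different numbers and encodings.
OPCODES = {"mov": 0x01, "add": 0x02}
REGISTERS = {"r0": 0x00, "r1": 0x01}


def assemble(source):
    """Translate lines like 'add r0, r1' into a flat list of numeric codes."""
    program = []
    for line in source.strip().splitlines():
        mnemonic, operands = line.split(maxsplit=1)
        program.append(OPCODES[mnemonic])
        for operand in operands.split(","):
            operand = operand.strip()
            # An operand is either a known register name or a literal number.
            if operand in REGISTERS:
                program.append(REGISTERS[operand])
            else:
                program.append(int(operand))
    return program


machine_code = assemble("""
mov r0, 5
mov r1, 7
add r0, r1
""")
print(machine_code)
```

The programmer writes the three readable lines; the list of bare numbers that comes out is roughly what earlier had to be looked up in a table and punched by hand.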


At this stage, the threshold for entering programming was extremely high.

A programmer's productivity with these instructions was extremely low: he wrote only a few (meaningful) lines per day, and each line did nothing special, just some simple arithmetic operation. People wanted languages much closer to human language, English in particular, so that programs would be easier and more convenient to write.

Level up, threshold down

With the advent of high-level programming languages, programmers' lives became easier and their productivity rose. Programs no longer needed to be rewritten for each machine. Compilers and development environments appeared. Fortran, Algol, and later BASIC, Pascal, and C really did look more like “human” language.

Of course, learning them still took considerable time, but it was a more profitable investment of time and effort. Along the way, the compiler pointed out syntax errors in the programmer's code, which made learning much easier for novices. With the advent of modular and portable code, projects grew larger, and programmers began to use third-party libraries widely and to work more in teams. A detailed understanding of how the entire project worked was no longer necessary.

With the advent of object-oriented programming (C++, Object Pascal, and so on), the trend toward code reuse intensified. In addition, development environments became friendlier over time, and more people were willing to learn the basics of programming.

Programming for the masses

Gradually, programming ceased to be the prerogative of hardcore engineers and mathematicians. The number of projects grew rapidly, and software penetrated various areas of production. This also contributed to the development of database management systems. Even specializations such as “DBMS operator” appeared.

Gradually, the concept of a “software engineer” became multifaceted: some worked on algorithms, some designed interfaces, and some just did the coding, that is, implemented their colleagues' ready-made algorithms in code. Programming itself split into two large groups, system and applied: some developed operating systems and drivers, while others wrote applications that automated business processes at vegetable warehouses.

For each of the specializations, individual competencies were required, so the transition from one specialization to another was difficult. But the minimum threshold for entering software development was rapidly decreasing.

The emergence of languages such as Java and C# and their corresponding frameworks contributed even further to this decline, as did the differentiation of specialists by area and level of training.

As a result, programmers acquired a gradation such as [Junior developer, Middle / Senior, Team Lead, Software Architect]. On the one hand, advanced development tools allowed less qualified programmers to cope successfully with relatively simple tasks without deep knowledge or serious skills. On the other hand, with each step up the career ladder, the threshold of entry became higher as well.


But a specialist who stayed a beginner for a long time could notice one peculiarity: rapidly developing software technologies lowered the entry threshold even further, and the average Junior of year N knew and could do even less than their older colleague, the Junior of year N-k.

Slapdash web development

As web development grew, programmers had to switch to different rails (the association with Ruby on Rails is apt here) in order to master new languages and the Internet technology stack as a whole.

Now that web development, which only began to gain momentum in the 90s, has made a huge leap forward, Mikhail Gustokashin can freely make arguments like these:

Let's say you want to write a new Facebook (a social network). What will you write it in? HTML and CSS are the layout, and we also want to be able to add photos and friends and leave comments.

For the scripting part, that is, what happens on the client side, there is JavaScript.

Surprisingly, both Facebook and many other large projects are written in PHP. Of course, they had to write their own things to make it work properly rather than slapdash, but they managed.

Nowadays, of course, nobody writes anything for the web from scratch. Everyone looks for some framework or something similar. An online store? Download a framework for online stores, and that's it, you have written an online store.

It is worth noting that the threshold for entering web development was lower than for desktop development from the start. After the advent of frameworks, it dropped even further.

On the wave of automation

It turns out that sites used to be written by hand, but in many cases this is now optional. Moreover, creating those same online stores no longer even requires frameworks: there are website builders now. Web programmers without much in the way of qualifications, who used to earn easy money by creating sites of the same type, can now turn into operator-configurators.

This is because some bright mind had the idea of automating web development. In fact, the idea is not new: it had already been partially implemented in many desktop development tools in the form of automatic generation of forms and entire application layers, for example generating classes from the database structure.

If the industry keeps developing in the same direction, more and more coders (programmers who are at the start of their path or have low qualifications) will have the opportunity to turn into operator-configurators. Whether to take this opportunity is for everyone to decide for themselves.
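As an illustration of generating classes from the database structure, here is a minimal sketch using Python's standard `sqlite3` and `dataclasses` modules. The table `product`, the helper `class_from_table`, and the type mapping are assumptions made for this example; real tools of this kind handle far more (keys, relations, nullability).

```python
import sqlite3
from dataclasses import fields, make_dataclass

# Simplified mapping from SQLite column types to Python types (an assumption
# for this sketch; unknown types fall back to str).
SQL_TO_PY = {"INTEGER": int, "TEXT": str, "REAL": float}


def class_from_table(conn, table):
    """Build a dataclass whose fields mirror the columns of `table`."""
    # PRAGMA table_info yields rows of (cid, name, type, notnull, dflt_value, pk).
    # The table name is interpolated directly, so it must come from trusted code.
    columns = conn.execute(f"PRAGMA table_info({table})").fetchall()
    spec = [(name, SQL_TO_PY.get(col_type.upper(), str))
            for _, name, col_type, *_ in columns]
    return make_dataclass(table.capitalize(), spec)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE product (id INTEGER, title TEXT, price REAL)")

Product = class_from_table(conn, "product")
item = Product(1, "kettle", 19.5)
print([f.name for f in fields(Product)], item)
```

Nobody typed the `Product` class by hand: its fields follow the table definition, which is exactly the kind of routine work such generators take over.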

We found out what experts from the IT industry think about this:

Alexey Bychko, Senior Release Manager (Percona), developer on the projects Percona Server for MySQL, Percona XtraDB Cluster, Percona XtraBackup, Percona Server for MongoDB, and PQuery, and teacher of the System Administration course at Alexey Sukhorukov's IT Academy:

There are more and more environments that automate routine things. This helps you avoid writing something by hand yet again and reinventing the wheel. Much is already provided in integrated development environments, from which you can call the necessary libraries and thus solve standard tasks. For those who are able and accustomed to think, this is good: you can concentrate on the main thing, the logic of the application. (These are people of the “old school”, for whom a simple text editor is enough to write high-quality code.)

The bad part is the growing number of sloppy coders who can, in principle, solve the task they are given by assembling something from existing “building blocks”, but who do not know how it works inside and cannot judge how good someone else's ready-made code is. For this reason, I would not call a programmer a “coder”: even primary school pupils write code now, and a machine can generate it. A programmer thinks, invents, establishes dependencies, sets paths, designs the architecture, and only then can hand all of this to a coder for implementation.

In fact, these are two approaches that grew out of the existence of projective Unix-like systems and procedural Windows-like ones. For a huge corporation, where everything is divided into the smallest subtasks, what matters is the reproducibility of the result rather than the optimal solution: if an operator leaves, the new one must do the same work no worse.

But if we have a task that a particular procedural system, library, or environment solves poorly or does not solve at all (older colleagues remember what happens when a large file is opened in a text editor written in C++ Builder or Delphi: nothing scrolls, everything slows down, and this cannot be fixed), then we need to take a “clean” system, which gives more freedom, and call in a programmer who is willing not just to google but to write an adequate solution from scratch on his own.

Oleg Bunin, founder of Ontiko, organizer of the conferences HighLoad++, RIT, FrontendConf, RootConf, WhaleRider, AppConf, Backend Conf.

As the organizer of the largest Russian developer conferences, I see specialization and the simplification of routine procedures, but brains and a deep understanding of what is going on are in demand no less, and even more, than before.

With the advent of tools that automate development, the value of people who understand what happens under the hood of such tools is growing. The requirements placed on them are growing. The amount of knowledge you need to master is growing.

Of course, this is not about creating landing pages, but about developing a project of any seriousness.

Max Lapshin, creator of ErlyVideo, creator of Flussonic, developer of many well-known video streaming solutions.

I first heard claims that everything that needed to be written had already been written, and that programmers would now simply assemble blocks from ready-made components and probably program with a mouse, around 1995. As you can guess, from 1995 to today an insane amount of seriously hardcore system code has been written, and quite a few large business platforms, on which something was even sold “with a mouse”, have appeared and died.

Honestly, I have never once met a task that could be handled “with a mouse” without digging down to the bottom, but I have absolutely no connection with enterprise outsourced development in the “Luxoft” style, so I don't know how things are over there.

The thing is, the industry is moving forward. Yes, computers no longer have 640 KB of memory, and a Windows programmer need not worry about memory at all. But small computers have come to replace the large ones, and there are many of them.

Today, you still have to think about how to write software for IP camera firmware and fit it into 200 KB on disk. You have to think about how to squeeze code into a small IoT sensor that must live for a year on its tiny battery while exchanging data over radio.

The industry is expanding very rapidly, and there is room in it for people who are not deeply versed in the details of how a computer works. But one thing is certain: people who know how DMA works, for example, are needed more today than 10 years ago, simply because the industry keeps growing.

Alexander Lyamin, founder and CEO of Qrator Labs, one of the world's leading suppliers in the field of protection against DDoS attacks.

There is a joke: a programming department is like a refrigerator factory with 10 employees, one of whom can build starships while the other nine know how to knock together birdhouses.

So, different kinds of companies need different kinds of programmers. Some companies produce refrigerators, some knock together birdhouses, and some, like SpaceX, really are developing starships. Therefore, the 1:9 ratio is of course not dogma; every company will have its own. In some places, coders on staff are indeed quite sufficient. In others, good application programmers are needed: people with algorithmic baggage who know how to use it competently.

In some cases, what is required is not so much a programmer as a computer scientist: a person capable, at the very least, of competently modifying algorithms for the needs of a particular situation and task. The next level is a data scientist, a good applied mathematician. Continuing the analogy, a birdhouse can be knocked together from publicly available materials, but you cannot buy alloys for a rocket at the building supplies market. At this level, the task starts to look something like this: here is the problem, here is the data set, we need hypotheses, algorithms, a PoC and, as a result, a problem statement for implementation. And in the process of creating really good products, sooner or later, one way or another, non-trivial data processing tasks inevitably arise.

That is, a “coder” solves only part of the problems that development faces.

Naturally, there are few really high-class programmers on the labor market and many tasks for them, so an idea arose long ago: to develop tools that would help a specially trained computer operator cope with highly complex tasks. But all attempts to do this have, one might say, failed. The SQL language was originally designed so that any user could work with it, even without programming skills. Today it is programmers who use this language, while computer operators, even after training, cannot write even a more or less simple query.

Another example is all kinds of visual programming environments, designed, roughly speaking, so that the operator does not write code but draws a flowchart adequate to the task, from which the compiler selects the necessary algorithms. None of these environments (and there were many) ultimately became widespread. This suggests that the original problem may have been posed incorrectly, or has no solution in that formulation.

The law of leaky abstractions says: sooner or later, while honestly solving an applied task, one has to go down to a level lower than almost any applied tool allows.

Therefore, it is unlikely that anyone will ever manage to turn a programmer into a computer operator for good.
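For a sense of what a "more or less simple" query involves, here is a small sketch run through Python's standard `sqlite3` module. The tables `customer` and `purchase` and their contents are invented for the example. Even the question "how much did each customer spend?" already requires joining two tables and grouping the rows.

```python
import sqlite3

# In-memory database with two invented tables, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE purchase (customer_id INTEGER, amount REAL);
    INSERT INTO customer VALUES (1, 'Anna'), (2, 'Boris');
    INSERT INTO purchase VALUES (1, 10.0), (1, 5.0), (2, 7.5);
""")

# Total spending per customer: a join, an aggregate, a grouping, and a sort.
totals = conn.execute("""
    SELECT c.name, SUM(p.amount) AS total
    FROM customer AS c
    JOIN purchase AS p ON p.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY total DESC
""").fetchall()

print(totals)
```

The query reads almost like English, yet writing it correctly means understanding relations, keys, and aggregation, which is closer to a programmer's way of thinking than to an operator's.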

Source: https://habr.com/ru/post/303486/
