Modular architecture and reusable code

I have always been interested in writing reusable, self-contained code. The trouble with reusable code starts when it has to be moved to another infrastructure. If an application is extended with plugins, those plugins are written for that specific application. But what if we move the application logic into a plugin (hereinafter, a module) and turn the application interface from the controlling part into a component controlled by the module? In my opinion, the most important task in such a scenario is to reduce the basic interfaces to a minimum and make it possible to rewrite or extend any fragment of the infrastructure separately. If you want to know what came out of this idea of modular code, read on.

Idea

The first requirement for the system is the ability to extend it dynamically without recompiling individual modules. This applies to both the host and the modules.

Any part of the solution (except the basic interfaces) can be rewritten and integrated dynamically. In addition to extending modules through interfaces, I wanted dynamic access to the public methods, properties, and events available in any module. Accordingly, all members of a class implementing the basic IPlugin interface that are declared public must be visible to other modules.
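Dynamic access to public members can be pictured with plain .NET reflection. The following is only a minimal sketch: the empty `IPlugin` interface, `SamplePlugin`, and `MemberBrowser` are hypothetical names made up for illustration, and the real SAL.Core interfaces may look quite different.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-in for the base interface; the real SAL.Core IPlugin may differ.
public interface IPlugin { }

public class SamplePlugin : IPlugin
{
    public string Greeting { get; set; }
    public event EventHandler Changed;

    public string Greet(string name)
    {
        if (Changed != null) Changed(this, EventArgs.Empty);
        return Greeting + ", " + name;
    }
}

public static class MemberBrowser
{
    // Invokes a public instance method by name, as another module might do
    // without a compile-time reference to the plugin's assembly.
    public static object Call(IPlugin plugin, string methodName, params object[] args)
    {
        MethodInfo method = plugin.GetType().GetMethod(
            methodName, BindingFlags.Public | BindingFlags.Instance);
        if (method == null)
            throw new MissingMethodException(plugin.GetType().FullName, methodName);
        return method.Invoke(plugin, args);
    }

    public static void Main()
    {
        IPlugin plugin = new SamplePlugin { Greeting = "Hello" };
        const BindingFlags flags =
            BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly;

        // Lists everything the plugin exposes publicly: methods, properties, events.
        foreach (MemberInfo member in plugin.GetType().GetMembers(flags))
            Console.WriteLine(member.MemberType + ": " + member.Name);

        Console.WriteLine(Call(plugin, "Greet", "world"));
    }
}
```

The caller only needs the member name and arguments, which is what lets one module use another without a hard reference between them.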

Any module can be removed from or added to the infrastructure, but when replacing one module with another you have to implement all the functionality of the module being replaced. That is, modules are identified through the AssemblyGuidAttribute attribute, which is generated automatically when the project is created. Therefore, two modules with the same identifier will not both load.
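A rough sketch of identifier-based rejection is shown below. It assumes the identifier is the assembly-level `System.Runtime.InteropServices.GuidAttribute` (the `[assembly: Guid("...")]` line that Visual Studio project templates generate); `PluginRegistry` and its methods are hypothetical names, not part of SAL.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;
using System.Runtime.InteropServices;

public class PluginRegistry
{
    private readonly Dictionary<Guid, string> _loaded = new Dictionary<Guid, string>();

    // Reads the assembly-level [assembly: Guid("...")] value, or null when absent.
    public static Guid? GetAssemblyGuid(Assembly assembly)
    {
        object[] attributes = assembly.GetCustomAttributes(typeof(GuidAttribute), false);
        if (attributes.Length == 0) return null;
        return new Guid(((GuidAttribute)attributes[0]).Value);
    }

    // Returns false when a module with the same identifier is already registered.
    public bool TryRegister(Guid id, string name)
    {
        if (_loaded.ContainsKey(id)) return false;
        _loaded.Add(id, name);
        return true;
    }

    public static void Main()
    {
        var registry = new PluginRegistry();
        Guid id = new Guid("11111111-2222-3333-4444-555555555555"); // made-up identifier

        Console.WriteLine(registry.TryRegister(id, "Plugin.A"));             // True
        Console.WriteLine(registry.TryRegister(id, "Plugin.A.Replacement")); // False

        Guid? own = GetAssemblyGuid(typeof(PluginRegistry).Assembly);
        Console.WriteLine(own.HasValue ? own.Value.ToString() : "(no assembly GUID)");
    }
}
```

A replacement module would reuse the GUID of the module it replaces, which is why both cannot be present at once.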

Each module should be lightweight, so that the basic interfaces do not need constant updating and, if necessary, the module can be taken out of the system and embedded into an application as an ordinary assembly via a reference (Reference). Fortunately, the CLR loads dependent assemblies lazily (LazyLoad), so in that case the modular infrastructure assemblies are not needed.

The last requirement: the system should let the developer extend functionality in stages, so that the barrier to entry stays low.

At the same time, the system should automate routine tasks that are repeated from application to application. Namely:

- Saving and loading user settings, or a general settings store,

- Saving state or other parameters, depending on the application,

- Porting previously written components,

- Restricting use of the software without sufficient rights (loading components according to access level rather than hiding interface elements),

- Interacting with cloud infrastructure without reworking the logic (Message Queue, REST, SOAP services, WebSockets, caching, OAuth / OpenId / OpenId Connect ...)

Solution

From the accumulated solutions and individual components that worked on the same principle, a common vision of the whole infrastructure took shape:

- Minimum requirements for basic interfaces

- Modular infrastructure with an independent source of loading of modules,

- General storage of settings

- Independence of the solution from the application implementation (UI, services),

- Hosts available at the time of writing:

  - Dialog,

  - MDI,

  - EnvDTE (Visual Studio Add-In) [does not work in Visual Studio 2015],

  - ASP.NET Component (needs improvement → IHttpHandler, OwinMiddleware),

  - Windows service

To make development independent of both the specific application and the programs themselves, the following key components emerged:

- SAL Interfaces - Assembly with the base interfaces and the extension interfaces,

- Host - The application (when used in Visual Studio, an EnvDTE Add-In), which depends on the version of the launching application,

- Plugin - In essence an independent module (plugin) for the host, although it may depend on other modules or serve as the basis for a group of other modules. Besides the usual plug-ins that perform their own tasks, there are 3 types of plug-ins that the host itself actively uses:

  - LoaderProvider - A provider that loads other modules from different sources. For tests I wrote a loader from the file system into memory (does not work with Managed C++) and a network loader based on the user's role (the server was written for a specific task). But this is not the limit: the current architecture allows using, for example, nuget.org as a source, as well as remote communication with a host deployed on another machine.

  - SettingsProvider - A provider responsible for saving and loading plugin settings. By default, the existing hosts use XML to save and load data, but this does not limit further development. Among the ready-made modules there is an example provider that uses MSSQL.

  - Kernel - The core of the business logic and of a set of dependent modules. It is not only the base for dependent modules but also identifies the application for the host (at a minimum, for identification in SettingsProvider, because different sets of modules united by different Kernel modules can be run in the same host).

Ready base assemblies

These requirements resulted in the following base assemblies:

- SAL.Core - A set of minimum required interfaces for hosts and modules,

- SAL.Windows - Depends on SAL.Core. A set of interfaces for hosts and modules that support the standard functionality of WinForms and WPF applications (Form, MenuBar, StatusBar, ToolBar ...),

- SAL.Web - Depends on SAL.Core. A set of interfaces for hosts and modules that support applications written with ASP.NET (needs rework),

- SAL.EnvDTE - Depends on SAL.Windows. Provides extensions for plugins that can interact with the shell Visual Studio is built on.

For the system to function at a minimum, it is enough to add a reference to SAL.Core; to implement or use extensions, add a reference to the corresponding set of interface extensions, or extend the minimum set of interfaces yourself with the abstraction you need.

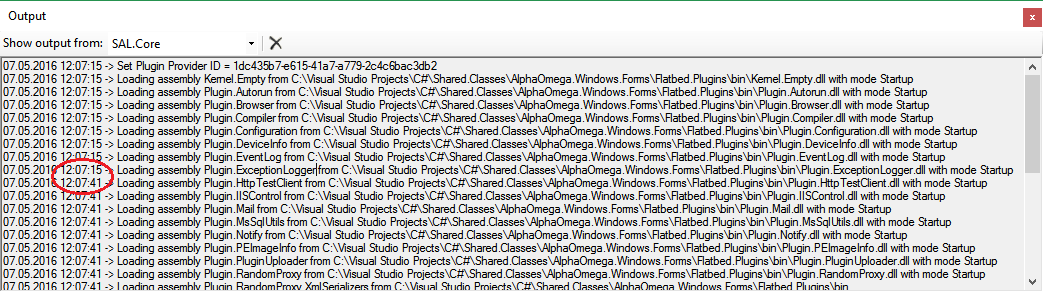

When the host starts, the base modules built into the host for loading settings and external plug-ins (LoaderProvider and SettingsProvider) are initialized first.

First the plug-in provider is initialized, then the settings provider. The loader built into the host searches for all plug-ins in the application folder and subscribes to the event raised when dependent assemblies are being resolved. Then the settings provider built into the host loads the settings from an XML file located in the user profile. Both providers support hierarchical inheritance: when the next provider of the same kind is found, the existing provider becomes the parent of the new one. If a provider does not find the requested resource, the request is passed to its parent provider.
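The parent fallback described here can be sketched as a simple chain. This is an illustration under assumptions: `ChainedSettingsProvider`, its members, and the sample keys are hypothetical, and the real provider interfaces in SAL.Core may be shaped differently.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical shape; the real SettingsProvider interface may differ.
public class ChainedSettingsProvider
{
    private readonly Dictionary<string, string> _values = new Dictionary<string, string>();

    public string Name { get; private set; }
    public ChainedSettingsProvider Parent { get; private set; }

    public ChainedSettingsProvider(string name, ChainedSettingsProvider parent)
    {
        Name = name;
        Parent = parent;
    }

    public void Set(string key, string value) { _values[key] = value; }

    // Looks in this provider first, then walks up the parent chain.
    public bool TryGet(string key, out string value)
    {
        for (ChainedSettingsProvider p = this; p != null; p = p.Parent)
            if (p._values.TryGetValue(key, out value)) return true;
        value = null;
        return false;
    }

    public static void Main()
    {
        // The provider built into the host becomes the parent of the one found later.
        var builtIn = new ChainedSettingsProvider("XML (built into host)", null);
        builtIn.Set("Theme", "Light");
        builtIn.Set("Language", "en");

        var sql = new ChainedSettingsProvider("SQL", builtIn);
        sql.Set("Theme", "Dark");

        string value;
        Console.WriteLine(sql.TryGet("Theme", out value) ? value : "(none)");    // Dark
        Console.WriteLine(sql.TryGet("Language", out value) ? value : "(none)"); // en
        Console.WriteLine(sql.TryGet("Missing", out value) ? value : "(none)");  // (none)
    }
}
```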

After all providers have been initialized, all Kernels are initialized, and then the remaining plug-ins. Kernel plug-ins are initialized before other modules so that they can subscribe to the load events of other plug-ins and cancel the loading of unneeded ones.
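The ordering and the cancellable load event might look roughly like the sketch below. All type and member names (`HostSketch`, `PluginKind`, `PluginLoading`, the plug-in names) are invented for illustration, and for brevity the handler that stands in for a Kernel is attached before initialization rather than during the Kernel's own start-up.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;

public enum PluginKind { Provider, Kernel, Regular }

public class PluginInfo
{
    public readonly string Name;
    public readonly PluginKind Kind;
    public PluginInfo(string name, PluginKind kind) { Name = name; Kind = kind; }
}

public class PluginLoadingEventArgs : CancelEventArgs
{
    public PluginInfo Plugin { get; private set; }
    public PluginLoadingEventArgs(PluginInfo plugin) { Plugin = plugin; }
}

public class HostSketch
{
    public event EventHandler<PluginLoadingEventArgs> PluginLoading;

    // Initializes providers first, then kernels, then everything else.
    public List<string> Initialize(IList<PluginInfo> found)
    {
        var initialized = new List<string>();
        PluginKind[] order = { PluginKind.Provider, PluginKind.Kernel, PluginKind.Regular };
        foreach (PluginKind kind in order)
        {
            foreach (PluginInfo plugin in found)
            {
                if (plugin.Kind != kind) continue;
                var args = new PluginLoadingEventArgs(plugin);
                // Only ordinary plug-ins can be vetoed in this sketch.
                if (kind == PluginKind.Regular && PluginLoading != null)
                    PluginLoading(this, args);
                if (!args.Cancel) initialized.Add(plugin.Name);
            }
        }
        return initialized;
    }

    public static void Main()
    {
        var host = new HostSketch();
        // Stands in for a Kernel that cancels loading of a plug-in it does not need.
        host.PluginLoading += delegate(object sender, PluginLoadingEventArgs e)
        {
            if (e.Plugin.Name == "Plugin.Extra") e.Cancel = true;
        };

        var found = new List<PluginInfo>
        {
            new PluginInfo("Plugin.Reports", PluginKind.Regular),
            new PluginInfo("Kernel.Tasks", PluginKind.Kernel),
            new PluginInfo("LoaderProvider", PluginKind.Provider),
            new PluginInfo("Plugin.Extra", PluginKind.Regular),
            new PluginInfo("SettingsProvider", PluginKind.Provider)
        };

        foreach (string name in host.Initialize(found))
            Console.WriteLine(name);
    }
}
```

Run as written, this prints LoaderProvider, SettingsProvider, Kernel.Tasks, and Plugin.Reports, with Plugin.Extra skipped by the handler.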

This behavior can be overridden in a host if a load hierarchy for other plug-in types has to be observed. I am currently considering moving the module load sequence into Kernel.

Loading assemblies

The standard LoaderProvider uses reflection to look for all public classes that implement IPlugin, and this is not quite the right approach. The point is that if code calls a specific class directly, or reaches a specific class through reflection, and that class does not reference any third-party assemblies, then the AssemblyResolve event will not be raised. That is, the assembly can be taken out of the modular infrastructure and used as an ordinary assembly by adding a reference to it, and the need for SAL.dll disappears. But the basic module providers work by scanning the current folder and every type in each assembly, so AssemblyResolve is raised for all referenced assemblies at the moment the module is loaded.
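For readers unfamiliar with the event, here is a minimal sketch of subscribing to `AppDomain.AssemblyResolve` and probing a folder for the missing dependency. The `DependencyResolver` class, the "Plugins" folder, and the assembly name are assumptions made for the example; the actual SAL loaders are not shown in this article.

```csharp
using System;
using System.IO;
using System.Reflection;

public static class DependencyResolver
{
    // Subscribes to AssemblyResolve and probes a plugin folder for missing dependencies.
    public static void Attach(string pluginFolder)
    {
        AppDomain.CurrentDomain.AssemblyResolve += delegate(object sender, ResolveEventArgs args)
        {
            // args.Name is a full display name such as "Foo, Version=1.0.0.0, Culture=neutral, ...".
            string simpleName = new AssemblyName(args.Name).Name;
            string candidate = Path.Combine(pluginFolder, simpleName + ".dll");
            Console.WriteLine("Resolving " + simpleName);
            // Returning null tells the runtime that this handler could not help.
            return File.Exists(candidate) ? Assembly.LoadFrom(candidate) : null;
        };
    }

    public static void Main()
    {
        // "Plugins" is a hypothetical folder next to the executable.
        Attach(Path.Combine(AppDomain.CurrentDomain.BaseDirectory, "Plugins"));
        try
        {
            // Forces a failed bind so that the handler runs; the assembly name is made up.
            Assembly.Load("Hypothetical.Missing.Plugin");
        }
        catch (FileNotFoundException)
        {
            Console.WriteLine("Dependency not found in the plugin folder");
        }
    }
}
```

The handler only fires when the runtime fails to bind an assembly on its own, which is why a module whose types never touch a missing dependency does not trigger it.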

To solve this problem, I wrote several simple loaders with different behavior. Some require the list of assemblies to be specified in advance; others scan folders themselves.

Another possible solution is to use the PEReader assembly, which is described below.

SAL.Core

Basic interfaces and small pieces of code implemented in abstract classes to simplify development. .NET Framework v2.0 was chosen as the minimum framework version. Choosing the minimum required version makes it possible to use the base on any platform that supports this version of the framework, and backward compatibility (runtime selection at startup) makes it possible to use the base on everything up to, but for now not including, .NET Core.

In theory, the base classes should be a foundation that can be used in any situation. In practice, there will certainly be cases in which they have to be extended. In that case, all the code of the abstract classes can be rewritten and the interfaces can be extended with your own implementation. That is why this assembly contains the minimum possible amount of code.

At the time of this writing, the only host inheriting the basic interfaces is the host for WinService applications.

SAL.Windows

This is a set of base classes that provides a framework for writing applications based on WinForms and WPF. It includes interfaces for working with abstract menus, toolbars, and windows.

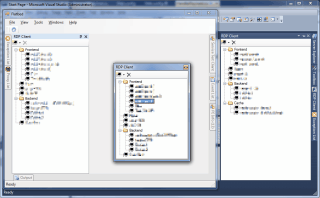

SAL.EnvDTE

In terms of extension, the host implemented as a Visual Studio Add-In extends the SAL.Windows interfaces and adds VS-specific functionality. If a dependent plugin does not find the kernel that interacts with Visual Studio, it can continue to work with limited functionality.

All written hosts that support SAL.Core interfaces automate the following functionality:

- Loading plugins from the current folder,

- Saving and loading plug-in settings from XML files in a user profile,

- Restoring the position and size of all previously closed windows when the application is opened (SAL.Windows).

The following hosts are implemented on these interfaces:

- Host MDI - Multiple Document Interface, written using the DockPanel Suite component,

- Host Dialog - Dialog interface with control management via Windows ToolBar,

- Host EnvDTE - Add-In for Visual Studio, tested on EnvDTE versions 8, 9, 10, 12,

- Host Windows Service - Host as a Windows service, with the ability to install, uninstall, and run through command line parameters (PowerShell is not supported).

Event logging is implemented through the standard System.Diagnostics.Trace. In the MDI, Dialog, and WinService hosts, the listener specified in app.config sends received events back to the application itself through a singleton, and they are then displayed in the log windows (Output or EventList), depending on the event. For devenv.exe it is also possible to register a trace listener in app.config, but then the host assembly would be loaded before it is loaded as an Add-In. Therefore, the trace listener is added programmatically in code (output goes to the VS Output tool window or to a modal window).
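Adding a listener in code rather than through app.config can be sketched as follows. `ForwardingTraceListener` is a hypothetical class written for this example; it only collects lines in memory, where a real host would forward them to its Output or EventList window.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// Collects trace output in memory; a host could forward these lines to a log window.
public class ForwardingTraceListener : TraceListener
{
    private readonly List<string> _lines = new List<string>();
    private string _pending = string.Empty;

    public IList<string> Lines { get { return _lines; } }

    // Write receives partial output (for example the event header), so it is buffered.
    public override void Write(string message) { _pending += message; }

    public override void WriteLine(string message)
    {
        _lines.Add(_pending + message);
        _pending = string.Empty;
    }
}

public static class Program
{
    public static void Main()
    {
        var listener = new ForwardingTraceListener();
        Trace.Listeners.Add(listener); // registered in code instead of app.config

        // Trace calls are compiled in only when the TRACE symbol is defined,
        // which is the default in the standard project templates.
        Trace.WriteLine("Plugin loaded");
        Trace.TraceWarning("Settings file missing");

        foreach (string line in listener.Lines)
            Console.WriteLine(line);

        Trace.Listeners.Remove(listener);
    }
}
```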

The infrastructure can be developed toward HTTP applications, but this requires implementing modules that provide at least authentication, authorization, and caching. For the TTManager application, described below, a dedicated host for web services was written that implemented all the necessary functionality, but, alas, it was made for a specific task rather than as a universal application.

This approach to logging and splitting into separate modules makes it easy to find bottlenecks when running in a new environment. For example, when deploying a set of modules on Windows 10, I found that loading takes much longer than on other OS versions. Even on my old WinXP machine, 35 modules load in 5 seconds at most. But on Win10, loading a single module took much longer.

Thanks to the independent architecture, the problem module was located immediately. (In this case, the problem was the use of runtime v2.0 under Windows 10.)

Ready Modules

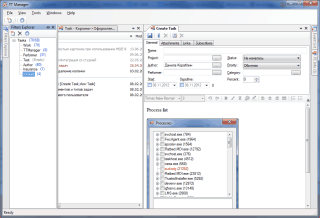

The first version of the infrastructure appeared in 2009. Both for testing and to speed up routine tasks at work, a large number of varied, independent modules that automate different tasks have accumulated (the modules can be downloaded from the project pages).

Web Service / Windows Communication Foundation Test Client

This application is based on the WCF Test Client that ships with Visual Studio. In my opinion, the original has a lot of inconvenient aspects. By the time I moved to WCF, I already had many applications written on ordinary web services. Having studied how the program works using ILSpy, I decided to extend the functionality not only for WCF but also for WS clients. As a result, I wrote a plugin with the following extended functionality:

- Support for WebService applications (except Soap Header),

- Ability to test a service with the old binding (on opening, the proxy class is not updated automatically, only on request from the UI),

- Independence from Visual Studio (dependent assemblies merged via ILMerge),

- All added services shown as a tree, instead of working with only one service,

- Search across all nodes of the tree,

- A timer on the service request form to track the time spent on complete execution of the request,

- Sent parameters are restored when the test form or the whole application is closed and reopened,

- Parameters can be saved to and loaded from a file from the test method form,

- Method parameters can be saved and loaded automatically (requires the Plugin.Configuration module → Auto save input values [False]),

- The ability to edit the .config file through the SvcConfigEditor.exe program is broken.

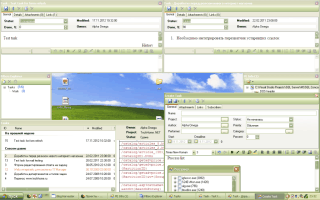

RDP Client

Again, the original program comes from Microsoft programmers. It is based on RDCMan, but unlike the original, I decided to embed the window of the connected server into the dialog interface. Remote storage of settings helped keep the server list up to date for all colleagues involved.

PE Info

This application started from an automation idea that I could not find in other applications. There were three goals in writing it:

- Provide an interface for viewing the contents of a PE file, including most directories and metadata tables (although the output of RT_DIALOG resources differs significantly from the original),

- Search through the PE / CLI file structure,

- Make it possible to load a PE file not only from the file system but also through the WinAPI function LoadLibrary. When loading via LoadLibrary, there is a chance to read an unpacked PE file, and there is no need to calculate RVAs.

Several times it turned out that executable files implemented some functionality that was either obsolete or not used by anyone. This application was written so as not to search the source code of applications in different languages for uses of particular objects. For example, I have an assembly in a shared repository and decide to remove one method from it. How do I find out whether this method is used in the current dependent assemblies of other projects written by colleagues? You can ask everyone to check the source code, you can look in source control, or you can simply search for the method by name inside the compiled assemblies. The application consists of two components:

- The PEReader assembly (written without the unsafe keyword), whose source is available on GitHub,

- The client part, which is a plugin for the SAL infrastructure that uses the SAL.Windows abstraction layer.

To search the hierarchy of PE, DEX, ELF, and ByteCode files, a separate module was written that fit into the infrastructure remarkably well: ReflectionSearch. All the logic of searching through objects via reflection was moved into this module and, thanks to a few public methods in the modules that read executable files, code reuse was achieved.

The rest

So as not to give each ready-made module its own section, I will describe the remaining modules in one list:

- ELF Image Info - Disassembly of ELF files, by analogy with PE Info. ElfReader is on GitHub.

- ByteCode (.class) Info - Disassembly of JVM .class files. ByteCode Reader is on GitHub.

- DEX (Dalvik) Info - Disassembly of the DEX format used in Android applications. DexReader is on GitHub.

- Reflection Search - An assembly for searching for objects through reflection. It used to be part of the PE Info module, but with the arrival of other modules it was moved into a separate module that uses the public methods of the PE, ELF, DEX, and ByteCode modules.

- .NET Compiler - Real-time .NET code compiler running in the current AppDomain. Provides the ability to write code (TextBox), host the compiled application, cache the compiled code, and save the compiled code as a separate assembly (used in the second iteration of the HTTP Harvester automation application [described below]).

- Browser - Hosting for Trident with extended XPath functionality for DOM elements (self-written, similar to HtmlAgilityPack). (Used in the third iteration of the HTTP Harvester automation application [described below].)

- Configuration - User interface for editing plugin settings, because not all settings are accessible via the UI when using SAL.Windows.

- Members - Displays in the UI the public members of plug-ins that are accessible from outside.

- DeviceInfo - An assembly that reads SMART attributes from compatible devices and works without the unsafe keyword. All data is obtained through the WinAPI function DeviceIoControl; the source code of the assembly itself is available on GitHub.

- Single Instance - Restricts an application to a single instance (key exchange is done through .NET Remoting),

- SQL Settings Provider - Provider for saving and loading settings from MSSQL (the code is written on ADO.NET and stored procedures with an eye to unification, so for some DBMSs you will have to write your own stored procedure implementations),

- SQL Assembly scripter - Creates a Microsoft SQL Server script from a .NET assembly for installing managed code in MSSQL (not tested on unsafe assemblies),

- Winlogon - The module provides public events for the SENS interfaces. The first version used Winlogon, but it is no longer supported.

- EnvDTE.PublishCmd - I described this module in detail here.

- EnvDTE.PublishSql - Before or after manual publishing, executes an arbitrary SQL query through ADO.NET with template values substituted.

The rest are here (there are about 30 modules in total). Images of all modules are here.

Turnkey solutions

To demonstrate the convenience of building a whole system on a modular architecture, here are a couple of ready-made solutions built on different principles:

- Full independence of modules among themselves

- Partial dependence on Kernel module

TTManager

An application for a task system, built around dynamic extension so that different task sources can be used. The result is a unified interface that can create, export / import, and view tasks from different sources. It currently supports MSSQL, WebService, and, partially, the REST API of Megaplan (not an advertisement) as sources. The WebService is written on a similar principle, using the SAL.Web base classes, so the WebService itself can in turn use MSSQL, Megaplan, or another WebService as its source.

How it works

The application's Kernel plug-in lazily searches for all task source plug-ins (DAL). If several data access plug-ins are found, the user is asked to select the one to use (only in SAL.Windows; on hosts without a user interface this fails with an error). Dependent plug-ins access the selected DAL plug-in through the Kernel module.
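The selection logic can be pictured with a short sketch. Everything in it is hypothetical (`ITaskSource`, `KernelSketch`, the chooser callback that stands in for a selection dialog); it only mirrors the behavior described above: lazy lookup, a prompt when several sources exist, and an error when there is no UI to ask.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical DAL abstraction; the real TTManager interfaces are not shown in this article.
public interface ITaskSource { string Name { get; } }

public class NamedSource : ITaskSource
{
    public string Name { get; private set; }
    public NamedSource(string name) { Name = name; }
}

public class KernelSketch
{
    private readonly IList<ITaskSource> _sources;
    private readonly Func<IList<ITaskSource>, ITaskSource> _chooser; // null on hosts without UI
    private ITaskSource _selected;

    public KernelSketch(IList<ITaskSource> sources, Func<IList<ITaskSource>, ITaskSource> chooser)
    {
        _sources = sources;
        _chooser = chooser;
    }

    // Resolved lazily, on first access by a dependent plug-in.
    public ITaskSource Source
    {
        get
        {
            if (_selected == null) _selected = Select();
            return _selected;
        }
    }

    private ITaskSource Select()
    {
        if (_sources.Count == 0)
            throw new InvalidOperationException("No task source plug-in found");
        if (_sources.Count == 1)
            return _sources[0];
        if (_chooser == null)
            throw new InvalidOperationException("Several task sources found and no UI to choose one");
        return _chooser(_sources);
    }

    public static void Main()
    {
        var sources = new List<ITaskSource> { new NamedSource("MSSQL"), new NamedSource("WebService") };

        // The lambda stands in for a selection dialog shown by a SAL.Windows host.
        var withUi = new KernelSketch(sources, list => list[1]);
        Console.WriteLine(withUi.Source.Name); // WebService

        var withoutUi = new KernelSketch(sources, null);
        try { Console.WriteLine(withoutUi.Source.Name); }
        catch (InvalidOperationException ex) { Console.WriteLine(ex.Message); }
    }
}
```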

Points of interest

In this example, the Kernel plug-in is abstracted from the dependent plug-ins by interfaces. So you can write another Kernel module (or rewrite the current one, or indeed any plug-in) in order to work with several task sources simultaneously.

To solve the problem of task statuses, a status matrix is hard-coded inside some DAL plug-ins (or taken from the task source, if it provides one). As a result, there are no problems transferring data from one source to another.

HTTP Harvester

The application uses ready-made plugins to parse sites through Trident or WebRequest. Several levels of abstraction are available for parsing. The lowest level is to write an additional plugin that opens the page and parses the result using the DOM or the raw server response. A higher level is to write .NET code at runtime, which the ".NET Compiler" plugin compiles and applies to the page displayed in Trident. The highest level is to point out, through the UI, the elements on the page displayed in Trident; after the xpath template (self-written version) is applied, the result is passed to the universal plug-in for processing or to .NET code executed by the ".NET Compiler" plugin.

How it works

A module that depends on the Kernel plug-in can choose one of the ready-made output interfaces and a basic user interface for downloading data: either Trident or WebRequest, with optional logging. Kernel provides not only the interface but also a polling timer for each individual module.

The output interface offers a standard GridView with an output container and can remember the last open position in the table. By default, the container can display image or text data.

Points of interest

In this case, I did not abstract the Kernel plug-in behind interfaces, and all dependent plug-ins expect to find a specific Kernel plug-in among the loaded plug-ins.

The application was written in 3 iterations (for SAL.Windows only):

- A plugin could be written using the basic controls and a set of methods for working with Trident described in the Kernel plugin,

- The code in the plugin could be replaced with runtime code generated and edited in Plugin.Compiler,

- The path to HTML nodes in Trident could be specified via the UI. As a result, the runtime or inline code receives an array of key / value pairs, where the value is the path to the HTML element(s) (similar to the implementation in HtmlAgilityPack).

What is outdated and has been removed

- The host for Office 2010 was removed. It was written solely to create a task for TTManager from the context menu, but because of the many workarounds and limited capabilities, further support was not practical.

- The ability to create windows in EnvDTE via ATL was removed. Before VS 2007, windows in the studio could be created only through ATL and COM; later it became possible to do everything through .NET.

- The EnvDTE host implemented as an Add-In is outdated.

Known bugs

The EnvDTE host has been tested only on English versions of Visual Studio. There may be problems on localized versions (I once ran into this on VS11 with Russian localization).

The EnvDTE host closes Visual Studio if the Winlogon (SENS) plugin is loaded and the user unloads the host via the Add-in Manager (encountered on Windows 10).

Because the host is written as an add-in rather than as a full-fledged extension, it is not compatible with other EnvDTE-based products.

What are the prospects for further development?

If you want caching, then besides the built-in classes System.Web.Caching.Cache and System.Runtime.Caching.MemoryCache, remote caches are available, for example AppFabric. Having written a basic client interface for caching, you can develop a set of modules, one per cache type, and select the module you need (at the time of publication they have already been written but not published).

At the time of writing, modules can be loaded from the file system, from the file system into memory, and updated over the network using an XML file as a table of contents. Further development makes it possible to use not only the file system as storage but also NuGet, or to implement a host that runs modules remotely.

User authorization can be configured with both roles and claims. But with OpenId, OAuth, and OpenId Connect there is a huge number of providers, and from each provider you need to obtain a System.Security.Principal.IIdentity (for role-based authorization) or a System.Security.Claims.ClaimsIdentity (for claims-based authentication). Accordingly, having written a client for LinkedIn once, you can use it in any application without recompiling.

With message queues, you can write a module and a set of interfaces that perform the ServiceBus functions, while the modules implementing a specific queue are responsible for receiving and sending messages.

You can write a UI for dynamically linking the public methods of modules, by analogy with SSIS or BizTalk.

Source: https://habr.com/ru/post/303032/
