Launching DirectPath I/O on Cisco UCS via vm-fex for vSphere

In short, the technology lets virtual machines directly access physical pci devices on a server running a hypervisor. However, with this technology almost all the useful features of a vSphere cluster stop working: fault tolerance, high availability, snapshots, vMotion, and DRS along with it.

Moreover, when a virtual machine uses a device directly, bypassing the hypervisor, the device is no longer available to the hypervisor itself. For example, if you pass a network card through to a virtual machine via DirectPath I/O, the hypervisor no longer spends resources processing that VM's traffic, which is good. The bad part is that only one virtual machine can use the passed-through network card. So the technology turns out to be very controversial, if not outright useless. But it is not that simple.

delightful Cisco UCS

Frankly, I'm a pretty jaded type who is almost impossible to impress. Even when something does impress me, I don't always want to show it, and even when I want to, I can't always manage it. Before I got acquainted with Cisco blades, I honestly didn't even know that Cisco made servers. It turns out it does, and ones I never get tired of admiring.

I have worked with servers all my life; blade systems have come my way a bit less often. When I first saw UCS manager, it became clear that it could not be mastered in one go. Why? If only because the server has no buttons to press: until a service profile has been built from a certain set of configuration parameters, the hardware is useless.

The profile's configuration parameters let you shape almost everything about the blade: bios parameters, boot order, ip-kvm addresses, hba, firmware versions, network parameters and MAC addresses (iscsi and regular). All of this is done very flexibly, with a convenient hierarchy and the ability to define pools for parameters that vary across many servers, such as addresses and MACs. Accordingly, until you have studied all of this, the blade will not start.

network part of the blades

I will not talk about configuration here. Cisco, as with everything else, has quite clear documentation on this topic, and a number of articles about it have been written on the Internet, including on Habr. I would like to focus on the network part of the Cisco UCS blades, which is special here too. The thing is, the server blades have no network interfaces as such. How, then, do the blades talk to the network? Each blade still has a network adapter: the Virtual Interface Card (vic).

Together with the IO Module (iom) in the chassis, these two pieces of hardware let you create as many virtual network interfaces for the physical servers as you need. The number is limited, of course, but the limit is quite high: it should be enough for everyone. So why would we need a hundred network interfaces on a blade? We wouldn't, unless we use virtualization. This is where it is time to recall the "useless" DirectPath I/O, which now appears in a completely different light.

In the second part of the vSphere documentation it suddenly turns out that DirectPath I/O on Cisco UCS comes fully loaded: it works with snapshots, fault tolerance, high availability, and vMotion, which is inevitably followed by DRS. "Cool!" I thought when I read about it. "I'll do it quickly now and everyone will be happy." And I was let down. Neither in the Cisco documentation nor in the VMware documentation did I find anything resembling instructions on how to do all this. All I managed to find was something that very remotely resembled an attempt at a step-by-step guide. That site is already dead, hence the link to the web archive.

a bit more filler

I decided to write a detailed manual first of all for myself, so that when I face this task a second time I won't forget anything and can repeat everything quickly and successfully. Secondly, for everyone else, although I am well aware that most readers, and perhaps I myself in the future, will never encounter Cisco UCS. Thanks in part to import substitution.

To work successfully with DirectPath I/O on UCS, you need a good understanding of how the vSphere virtual distributed switch (vds) works. If you lack that understanding, or have never managed to get a vds running, and you think that by following this manual you will be able to launch everything described here, you are mistaken. It may start, but it will then break very easily because of wrong actions caused by a lack of understanding.

The same applies to UCS manager. In this article I will not describe how to work with vds, nor most of the UCS manager configuration. There are more than enough instructions from VMware and Cisco, various how-tos, forums, and Q&A sites on the Internet.

ucsm and vcsa

For ucsm to be able to create a vds in the vCenter Server Appliance (vcsa) where I will place the virtual machines, vcsa must grant it access by registering a key:

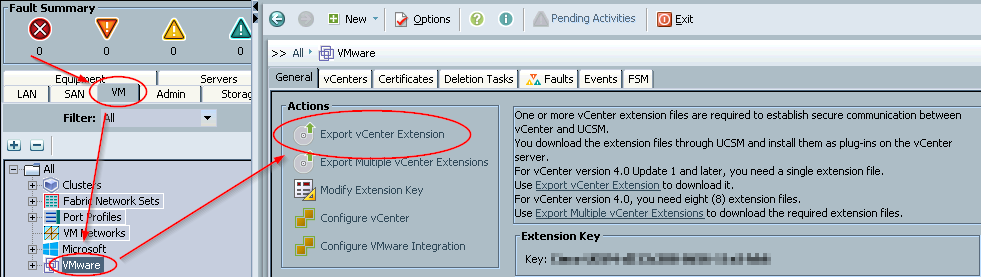

- I open ucsm → vm → filter: all → right-click vmware → export vCenter extension, and specify a directory where the file cisco_ucs_vmware_vcenter_extn.xml will be saved. Actually, I don't like taking screenshots, but a piece of hardware like this is nice to draw:
- Now this extension has to be imported into vcsa. VMware claims that starting with version 5.1 every vCenter operation can be done through the web client. That may be so, but I did not find how to import this file through the web client in version 5.1, 5.5, or 6.0.

Therefore, I open vmware vsphere client version 6.0 → top menu → plugins → manage plugins → right-click an empty area in the window with the plug-in list → new plug-in... → browse... → cisco_ucs_vmware_vcenter_extn.xml → register plug-in.

After successful registration, a new plug-in Cisco-UCSM-xxx should appear in the list, where xxx is the key that I obfuscated in the screenshot above.

vcsa is now ready to accept commands from ucsm .

vds for vm-fex

vm-fex works through a virtual distributed switch that has to be created and configured in ucsm; ucsm in turn pushes this configuration to vcsa through the integration described in the previous section. All the work in this section is done in the vm tab of ucsm, so I will not mention it in every step.

- PCM by

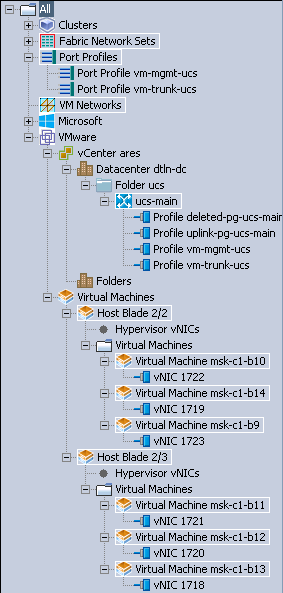

vmware→configure vCenter→ I record myvcsadata in thename,descriptionandhostname (or ip address)fieldshostname (or ip address):ares,gallente tackler,10.7.16.69→ two timesnext→datacentersskip thefoldersanddatacenterssections →finish; - pkm to

ares→create datacenter→ in thenameanddescriptionfields I write down the data of my datacenter, which is already created invcsa:dc→next→ I skip the →folders→finish; - pkm to

dc→create folder→ in thenameanddescriptionfields I write down the daddy's data, in which there will bevds:ucs→next→DVSssectionDVSsskip →finish; - PCM on

ucs-main→create DVS→ in thenameanddescriptionfields I record the data of myvds:ucs-main→OK→admin state: enable. vdsready, now createport profiles. In essence, this is the same as thedistributed port group, but in the terminology ofcisco. I have only two of them: one trunk profile, where all the wilan's are allowed, the other is a non-tagged wilan with a management network for virtual machines whose guest systems cannot tag traffic for some reason:- PCM

port profiles→ I record the data of the profilevm-mgmt-ucs→host network io performance:high performance→ in the fieldsnameanddescription→ in the list ofVLANsselect one radio button in the third column opposite the buttonmgmt; - I do the same for the trunk port

vm-trunk-ucs. Only instead of selecting the radio button, in the first column I tick off all the wilan's. it is assumed that the necessary vilans inucsmhave already been created; - now we need to make these two profiles fall into

vds. Lkm one of them, select theprofile clientstab → green[+]on the right →name:all,datacenter:all,folder:all,distributed virtual switch:all. With the second profile is the same. It should look like this:

after a short time, thisucs-mainappeared invcsa. If this does not happen, then it is worth looking into thefsmtab - most likely, the reason will be written there.
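To make the difference between the two port profiles concrete, here is a minimal sketch of 802.1Q ingress behaviour as described above: untagged guest frames land in the native VLAN, and tagged frames pass only if their VLAN is allowed. The `PortProfile` class, the `ingress_vlan` function and the VLAN IDs 10/20/30 are hypothetical. ucsm exposes no such API, and real vEthernet behaviour may differ in edge cases.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class PortProfile:
    """Hypothetical model of a ucsm port profile (a distributed port group in vSphere terms)."""
    name: str
    allowed_vlans: FrozenSet[int]  # VLANs the port accepts as tagged traffic
    native_vlan: Optional[int] = None  # VLAN that untagged guest frames are placed in


def ingress_vlan(profile: PortProfile, tag: Optional[int]) -> Optional[int]:
    """Return the VLAN a frame sent by the guest ends up in, or None if it is dropped."""
    if tag is None:
        # Untagged frame: it only goes anywhere if the profile defines a native VLAN.
        return profile.native_vlan
    # Tagged frame: accepted only if the VLAN is allowed on this profile.
    return tag if tag in profile.allowed_vlans else None


MGMT = 10  # hypothetical ID of the mgmt VLAN
vm_mgmt_ucs = PortProfile("vm-mgmt-ucs", frozenset({MGMT}), native_vlan=MGMT)
vm_trunk_ucs = PortProfile("vm-trunk-ucs", frozenset({MGMT, 20, 30}))

# A guest that cannot tag traffic still reaches mgmt through vm-mgmt-ucs...
print(ingress_vlan(vm_mgmt_ucs, None))   # 10
# ...while on the trunk profile the guest has to tag its frames itself.
print(ingress_vlan(vm_trunk_ucs, 20))    # 20
print(ingress_vlan(vm_trunk_ucs, None))  # None
```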


blades for hypervisors

As I mentioned at the beginning, a blade only runs once a profile is associated with it, so you first have to create one. Creating server profiles is not the purpose of this document, so I assume that everything needed to build a profile already exists. I will only cover what relates to vm-fex, without which DirectPath I/O will not work. On each blade I will create four static network interfaces. All four will be bound to one fabric with failover to the second. Half of the blades will work with fabric a and the other half with fabric b.

The first interface will stay in the standard vSwitch. The second will be connected to a regular vds. The third will be connected to ucs-vds. Having this interface as an uplink in ucs-vds actually looks like an atavism, because nothing depends on it. But if it is not there, forwarding of the virtual interfaces does not work; I checked :) I planned to connect the fourth interface to an additional vds in the form of the software cisco nexus 1000v, so that virtual machine ports could be configured more flexibly, but I have not got around to it yet.

I add all interfaces to the switches without standby adapters at the VMware level, since failover is implemented at the UCS level. Note that this scheme is wrong for conventional blades or servers with two interfaces that are not redundant at the connection level. Do not copy it blindly outside UCS.
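The per-blade interface plan above can be written down as data, which makes the "one fabric plus failover" rule easy to check. This is a sketch only. The even/odd split of blades between fabrics and the `static_vnics` helper are hypothetical, because the text only says that half of the blades use fabric a and half use fabric b.

```python
from dataclasses import dataclass
from typing import List

# Roles of nic0..nic3, in order, as described in the text.
ROLES = (
    "standard vSwitch",
    "regular vds",
    "uplink in ucs-vds (needed for vm-fex forwarding)",
    "reserved for a Cisco Nexus 1000v vds",
)


@dataclass(frozen=True)
class StaticVnic:
    name: str
    primary_fabric: str
    failover_fabric: str
    role: str


def static_vnics(blade_index: int) -> List[StaticVnic]:
    """All four static vNICs sit on one fabric and fail over to the other.

    Hypothetical split: even-numbered blades use fabric a, odd-numbered ones fabric b.
    """
    primary, backup = ("a", "b") if blade_index % 2 == 0 else ("b", "a")
    return [StaticVnic(f"nic{i}", primary, backup, role) for i, role in enumerate(ROLES)]


for blade in range(2):
    for vnic in static_vnics(blade):
        print(blade, vnic.name, f"{vnic.primary_fabric}->{vnic.failover_fabric}", vnic.role)
```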

creating an adapter policy

An adapter policy is a set of settings applied to each network interface in the server. The first adapter policy will be for the four static interfaces of the hypervisor, and the second for the dynamic ones.

- tab

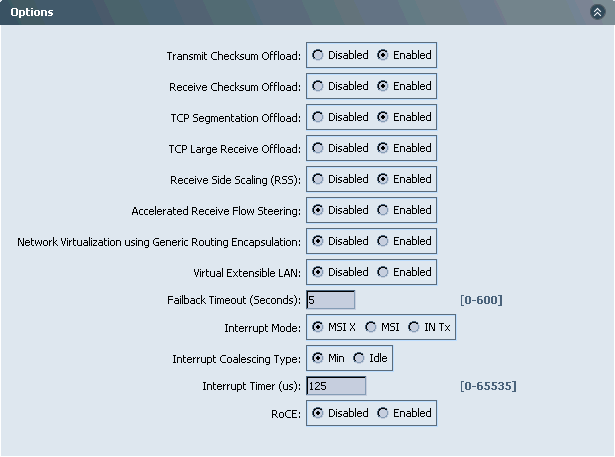

servers→policies→root→ pc byadapter policies→create ethernet adapter policy→name:nn-vmw, resources:transmit queues:4,ring size:4096;receive queues:4,ring size:4096;completion queues:8,interupts:16;- rewrite

optionslazy, so the screenshot:

- again the

servers→policies→root→ PC tab underadapter policies→name:nn-vmw-pt, resources:transmit queues:8,ring size:4096;receive queues:8,ring size:4096;completion queues:16,interupts:32;- the rest is the same. queues more than twice, because for probros dynamic interfaces need at least 8 queues. I cannot give the source in confirmation yet. If I find it again, I will add it.
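Since the two policies differ only in queue counts, a small consistency check can make the relationship explicit. This is a sketch that assumes two rules of thumb that do not come from this article: completion queues equal transmit plus receive queues, and there is at least one interrupt per completion queue. It also encodes the author's own, unsourced "at least 8 queues" observation for pass-through. `AdapterPolicy` and `check` are hypothetical helpers, not a ucsm API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AdapterPolicy:
    name: str
    tx_queues: int
    rx_queues: int
    completion_queues: int
    interrupts: int
    ring_size: int = 4096  # both policies use 4096 for transmit and receive rings


# The two policies created above.
NN_VMW = AdapterPolicy("nn-vmw", tx_queues=4, rx_queues=4, completion_queues=8, interrupts=16)
NN_VMW_PT = AdapterPolicy("nn-vmw-pt", tx_queues=8, rx_queues=8, completion_queues=16, interrupts=32)


def check(policy: AdapterPolicy, passthrough: bool) -> List[str]:
    """Return a list of problems found; an empty list means the policy passes."""
    problems = []
    # Assumed rule of thumb: one completion queue per transmit queue and per receive queue.
    if policy.completion_queues != policy.tx_queues + policy.rx_queues:
        problems.append("completion queues != tx + rx queues")
    # Assumed: each completion queue should have its own interrupt.
    if policy.interrupts < policy.completion_queues:
        problems.append("fewer interrupts than completion queues")
    # The author's own, unsourced observation: pass-through vNICs need at least 8 queues.
    if passthrough and min(policy.tx_queues, policy.rx_queues) < 8:
        problems.append("pass-through vNIC with fewer than 8 queues")
    return problems


print(check(NN_VMW, passthrough=False))    # []
print(check(NN_VMW_PT, passthrough=True))  # []
print(check(NN_VMW, passthrough=True))     # ['pass-through vNIC with fewer than 8 queues']
```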

creating a vnic template with grouping

For the blade to have network interfaces, you need to create templates for them. Templates can be used directly in each profile or grouped via a lan connectivity policy. In the second case, each server profile or profile template only needs the right lan connectivity policy specified, and the policy does everything else.

I create two templates for static interfaces. One template will work with fabric a with failover to b, and the second vice versa.

- Tab lan → policies → root → right-click vNIC templates → create vnic template → name: trunk-a → fabric id: a, enable failover → target: adapter and vm (note! this setting cannot be changed in an existing template) → template type: updating template → tick all the VLANs → in mac pool select the previously created pool of MAC addresses → connection policies: dynamic vnic. The second template, trunk-b, is the same except for fabric id: b.
- By grouping I mean a lan connectivity policy: tab lan → policies → root → right-click lan connectivity policies → create lan connectivity policy → name: esxi4-trunk-a → button [+] add, then add 4 network interfaces:
  - I tick use vnic template and fill in all three fields: name: nic0, vnic template: the trunk-a template created in the previous step, adapter policy: nn-vmw, created earlier;
  - I repeat this three more times for name: nic1..nic3;

I repeat the entire section for name: esxi4-trunk-b, binding it to fabric b accordingly.
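The two-level structure of vnic templates grouped by a lan connectivity policy can be sketched as plain data. The dictionaries and function names below are hypothetical. They only mirror the fields named in the steps above and do not reflect how ucsm stores or exports its objects.

```python
from typing import Dict, List


def vnic_template(fabric: str) -> Dict[str, object]:
    """Settings of the trunk-a / trunk-b vnic templates described above."""
    other = {"a": "b", "b": "a"}[fabric]
    return {
        "name": f"trunk-{fabric}",
        "fabric_id": fabric,
        "failover_to": other,             # enable failover
        "target": ("adapter", "vm"),      # cannot be changed later
        "template_type": "updating template",
        "connection_policy": "dynamic vnic",
    }


def lan_connectivity_policy(fabric: str, count: int = 4) -> Dict[str, object]:
    """esxi4-trunk-<fabric>: nic0..nic3, each built from trunk-<fabric> with adapter policy nn-vmw."""
    template = vnic_template(fabric)
    vnics: List[Dict[str, object]] = [
        {"name": f"nic{i}", "vnic_template": template["name"], "adapter_policy": "nn-vmw"}
        for i in range(count)
    ]
    return {"name": f"esxi4-trunk-{fabric}", "vnics": vnics}


for fabric in ("a", "b"):
    policy = lan_connectivity_policy(fabric)
    print(policy["name"], [v["name"] for v in policy["vnics"]], policy["vnics"][0]["vnic_template"])
```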

creating a bios policy

A bios policy is the set of bios settings, i.e. whatever you could change by entering bios setup. There is no point in opening the console and going into it on each blade when all of this can be done centrally for a whole batch of blades at once.

- Tab servers → policies → root → right-click bios policies → create bios policy: name: fex;
- it does no harm to tick reboot on bios settings change;
- I also usually set the reset radio button for resume on ac power loss;
- in the processor section: virtualization technology (vt): enabled; direct cache access: enabled;
- in the intel direct io section, all radio buttons are set to enabled;
- this has nothing to do with DirectPath I/O, but I also like to enable boot option retry in the boot options section;
- this is the mandatory minimum for DirectPath I/O. Set the rest as needed, or press finish right away.

Other settings can be changed after the policy is created.
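The mandatory minimum above lends itself to a simple checklist. A sketch, assuming a hypothetical nested-dict representation of the fex policy (ucsm does not hand you one). The two intel direct io keys are assumed labels and may differ between ucsm versions, so the check only requires that every setting in that section is enabled.

```python
from typing import Dict, List

# Hypothetical representation of the "fex" bios policy: section -> {setting: value}.
# The intel direct io keys are assumed labels; check them against your ucsm version.
FEX = {
    "processor": {
        "virtualization technology (vt)": "enabled",
        "direct cache access": "enabled",
    },
    "intel direct io": {
        "vt for directed io": "enabled",
        "interrupt remap": "enabled",
    },
    "boot options": {"boot option retry": "enabled"},  # nice to have, not required
}


def missing_for_directpath(policy: Dict[str, Dict[str, str]]) -> List[str]:
    """List the settings from the mandatory minimum that are not enabled."""
    problems = []
    processor = policy.get("processor", {})
    for key in ("virtualization technology (vt)", "direct cache access"):
        if processor.get(key) != "enabled":
            problems.append(f"processor/{key}")
    direct_io = policy.get("intel direct io", {})
    if not direct_io:
        problems.append("intel direct io/(section not configured)")
    # Every radio button in the intel direct io section must be enabled.
    for key, value in direct_io.items():
        if value != "enabled":
            problems.append(f"intel direct io/{key}")
    return problems


print(missing_for_directpath(FEX))  # []
```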

creating a service profile

The service profile template is used to create the server profile, which is associated directly with the blade and according to which the hardware is configured. A server profile can be created directly and then cloned. But it is more correct to create it from a template, whose settings are inherited by all dependent server profiles and changed on all of them at once. A server profile template includes a lot of settings that are not covered here; I assume they have already been filled in without my help. Here I will cover only what DirectPath I/O needs to work.

Tab servers → service profile templates → right-click root → create service profile template. name: esxi4-a, type: updating template. The second setting cannot be changed on an existing template, so it is very important not to forget to set it during creation.

In the networking section, for dynamic vnic connection policy I choose create a specific dynamic vnic connection policy. number of dynamic vnics: according to the table in the manual, the default value for me is 54. The ucs model is 6296, with the same iom model in all chassis, so according to the last row the maximum number of vifs can be 58 for mtu 9000 and 58 for mtu 1500, 116 in total. This information is only partially true.

Obviously, whoever wrote the manual did not fully understand the question. The truth is that if the adapter policy contains high values for the number of queues and the ring size, the blade cannot handle 54 dynamic interfaces. I cannot give up the high values: the servers will carry a huge traffic load, much more than 10 gigabits per port (I will write later on how that is possible). By trial and error with the adapter policy values and the number of vifs, I found that in my case 33 can be set successfully, leaving a small margin for additional static interfaces. Just in case.

That is why I chose a scheme with four static interfaces. 33 dynamic interfaces are a lot; they really should be enough for everyone. But my clusters have many half-idle virtual machines that do not need a powerful network. Spending one of the 33 dynamic interfaces on each of them would be too wasteful. So these VMs will live on a regular vds until they start demanding more. Since most of them will never get there, they will stay on the regular vds permanently.

adapter policy: nn-vmw-pt, protection: protected. This means that the dynamic interfaces ucsm creates for DirectPath I/O on each blade will be spread evenly across both fabrics. I cannot remember why I decided to do it this way. Perhaps just intuition. It is also possible that this is wrong and the dynamic interfaces should be pinned to the same fabric as the static ones. Time will tell. It is quick and easy to redo, and the virtual machines do not have to be stopped.
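The per-blade arithmetic behind this choice is small enough to write out. A sketch, assuming that "spread evenly" means simple alternation between fabrics. ucsm's real placement algorithm is not described here, and `spread_protected` is a hypothetical helper.

```python
from collections import Counter
from typing import List, Sequence

STATIC_VNICS = 4    # nic0..nic3 from esxi4-trunk-a / esxi4-trunk-b
DYNAMIC_VNICS = 33  # the value found by trial and error (the documented default was 54)


def spread_protected(count: int, fabrics: Sequence[str] = ("a", "b")) -> List[str]:
    """Round-robin model of 'protection: protected': dynamic vNICs alternate between fabrics."""
    return [fabrics[i % len(fabrics)] for i in range(count)]


placement = spread_protected(DYNAMIC_VNICS)
per_fabric = Counter(placement)
total_vnics = STATIC_VNICS + DYNAMIC_VNICS

print(dict(per_fabric))  # {'a': 17, 'b': 16}
print(total_vnics)       # 37
```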

how would you like to configure lan connectivity?: use connectivity policy. This is where I use the grouping of network interface templates created earlier. lan connectivity policy: esxi4-trunk-a.

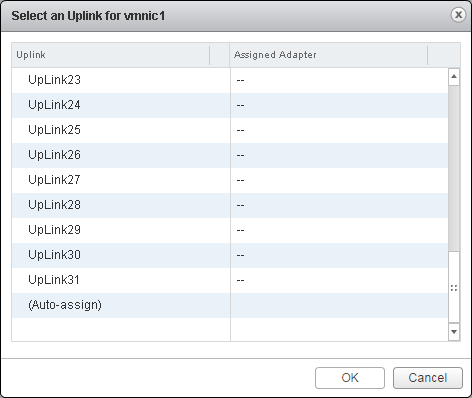

vnic/vhba placement is easier to show with a screenshot:

In the operational policies section, select the bios policy created earlier (fex). This completes the server profile template: finish.

Now the profile itself can be created from the template. Tab servers → service profile templates → root → right-click esxi4-a → create service profiles from template. naming prefix: test, name suffix starting number: 1, number of instances: 1. This means that a single profile named test1 will be created from the template.
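The three naming fields combine as a prefix followed by a counter. A minimal sketch, assuming the suffix simply increments by one per instance (the text only confirms the single-instance case, test1). `profile_names` is a hypothetical helper.

```python
from typing import List


def profile_names(prefix: str, start: int, count: int) -> List[str]:
    """Names from naming prefix + an incrementing numeric suffix (assumed behaviour)."""
    return [f"{prefix}{start + i}" for i in range(count)]


print(profile_names("test", 1, 1))  # ['test1']
print(profile_names("test", 1, 3))  # ['test1', 'test2', 'test3']
```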

Now the profile has to be associated with the blade. Tab servers → service profiles → root → right-click test1 → change service profile association. I choose select existing server and, in the table that appears, select the blade to associate with the newly created profile. After I click ok, ucsm warns that the blade will be rebooted and asks whether to proceed. I answer yes and wait until ucsm prepares the blade.

While ucsm is preparing the blade, take a look at the network tab of the server profile. It should look like this:

After successful completion, repeat this entire section to create a second template and profile that will work with fabric b instead of fabric a.

marriage

In the automotive industry, "marriage" is the moment when the powertrain is joined to the body. The term fits this section perfectly too.

I will skip the esxi installation process: it is very simple and completely uninteresting. I'll just note that I installed esxi from the image Vmware-ESXi-5.5.0-2068190-custom-Cisco-5.5.2.3.iso, which I downloaded here, and then updated it with ESXi550-201512001.zip from here. As a result, vCenter reports version 5.5.0-3248547. Alternatively, you can install Vmware-ESXi-5.5.0-3248547-Custom-Cisco-5.5.3.2.iso (link) right away; the result should be the same. Although this esxi installation image is specially prepared for Cisco servers, for some reason it does not include the cisco virtual machine fabric extender (vm-fex) driver.

It has to be downloaded separately: you need the file cisco-vem-v161-5.5-1.2.7.1.zip from ucs-bxxx-drivers-vmware.2.2.6c.iso. Copy this driver to the hypervisor and install it: esxcli software vib install -d /tmp/cisco-vem-v161-5.5-1.2.7.1.zip. By the way, everything at the links above can be downloaded freely; you just need to register. The only thing that cannot be downloaded freely is vCenter. I use VMware-VCSA-all-6.0.0-3634788.iso (aka 6.0u2), but I have also had success with VMware-VCSA-all-6.0.0-2800571.iso. I will also skip installing vcsa, adding hypervisors to it, and creating clusters.

It is probably worth explaining why I chose vcsa version 6 and esxi version 5.5. The web client of all earlier vcsa versions is almost entirely written in flash. , vmware, npapi , chrome 2015. vmware vsphere client , , -. vcsa , .

esxi , 5.1 , iscsi host profile . , , , . , vds 5.5 , . , cisco nexus 1000v . esxi 6.0 : ucs-bxxx-drivers-vmware.2.2.6c.iso/VM-FEX/Cisco/VIC/ESXi_6.0/README.html : Not supported .

. vds , vcsa . : vds . . vds . vcsa 5.5 :

vcsa 6.0 :

, , :

. supportforums.cisco.com , communities.vmware.com - vmware employees , , - . vcsa 5.5 npapi chrome , . , vds , ucsm , - - .

vds vmware vsphere client . , — , . esxi vds host profile . , esxi vds — sdk, , , .

, DirectPath I/O vds , ucsm . esxi vds . , . esxi vSwitch , vcsa , esxi , vds 5.5.

DirectPath I/O . , — , ucs-vds , — vmxnet3 , reserve all guest memory . . DirectPath I/O Active . ucsm :

bond. james bond. ?

, 14 /. , irqbalance :

    top - 13:35:03 up 9 days, 17:41, 2 users, load average: 0.00, 0.00, 0.00
    Tasks: 336 total, 1 running, 334 sleeping, 0 stopped, 1 zombie
    Cpu0  :  1.4%us,  8.5%sy,  0.0%ni, 90.2%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
    Cpu1  :  1.0%us,  7.8%sy,  0.0%ni, 91.2%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
    Cpu2  :  1.4%us,  4.4%sy,  0.0%ni, 94.2%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
    Cpu3  :  1.3%us,  8.1%sy,  0.0%ni, 90.6%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
    Cpu4  :  2.5%us,  9.3%sy,  0.0%ni, 81.4%id,  0.0%wa,  0.0%hi,  6.8%si,  0.0%st
    Cpu5  :  1.8%us,  9.5%sy,  0.0%ni, 83.6%id,  0.0%wa,  0.0%hi,  5.1%si,  0.0%st
    Cpu6  :  1.8%us,  6.6%sy,  0.0%ni, 86.3%id,  0.0%wa,  0.0%hi,  5.2%si,  0.0%st
    Cpu7  :  2.6%us,  8.8%sy,  0.0%ni, 83.9%id,  0.0%wa,  0.0%hi,  4.7%si,  0.0%st
    Cpu8  :  2.9%us,  8.3%sy,  0.0%ni, 83.3%id,  0.0%wa,  0.0%hi,  5.4%si,  0.0%st
    Cpu9  :  1.0%us,  8.0%sy,  0.0%ni, 91.0%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
    Cpu10 :  1.3%us,  8.9%sy,  0.0%ni, 89.4%id,  0.0%wa,  0.0%hi,  0.3%si,  0.0%st
    Cpu11 :  1.3%us,  9.3%sy,  0.0%ni, 89.4%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
    Cpu12 :  0.7%us,  3.1%sy,  0.0%ni, 96.2%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
    Cpu13 :  1.1%us,  5.3%sy,  0.0%ni, 88.0%id,  0.0%wa,  0.0%hi,  5.6%si,  0.0%st
    Cpu14 :  2.9%us,  8.7%sy,  0.0%ni, 81.9%id,  0.0%wa,  0.0%hi,  6.5%si,  0.0%st
    Cpu15 :  1.8%us,  9.0%sy,  0.0%ni, 82.4%id,  0.0%wa,  0.0%hi,  6.8%si,  0.0%st
    Mem:   8059572k total,  3767200k used,  4292372k free,   141128k buffers
    Swap:  4194300k total,        0k used,  4194300k free,   321080k cached

- , . , . . ucs ( ) . ecmp ? , . Cisco UCS . port-channel . , — 80 /.
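The text around this snapshot did not survive extraction, so whatever the author concluded from it is lost. As a minimal, self-contained sketch, the per-CPU lines can be parsed to show which CPUs spend time in software interrupts (the `si` column). `parse_cpu_lines` is a hypothetical helper, and the parsing assumes the classic `Cpu<N> : x%us, ...` layout of the snapshot.

```python
import re
from typing import Dict, List

# Per-CPU lines copied from the top snapshot.
TOP_CPU_LINES = """\
Cpu0  :  1.4%us,  8.5%sy,  0.0%ni, 90.2%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
Cpu1  :  1.0%us,  7.8%sy,  0.0%ni, 91.2%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
Cpu2  :  1.4%us,  4.4%sy,  0.0%ni, 94.2%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
Cpu3  :  1.3%us,  8.1%sy,  0.0%ni, 90.6%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
Cpu4  :  2.5%us,  9.3%sy,  0.0%ni, 81.4%id,  0.0%wa,  0.0%hi,  6.8%si,  0.0%st
Cpu5  :  1.8%us,  9.5%sy,  0.0%ni, 83.6%id,  0.0%wa,  0.0%hi,  5.1%si,  0.0%st
Cpu6  :  1.8%us,  6.6%sy,  0.0%ni, 86.3%id,  0.0%wa,  0.0%hi,  5.2%si,  0.0%st
Cpu7  :  2.6%us,  8.8%sy,  0.0%ni, 83.9%id,  0.0%wa,  0.0%hi,  4.7%si,  0.0%st
Cpu8  :  2.9%us,  8.3%sy,  0.0%ni, 83.3%id,  0.0%wa,  0.0%hi,  5.4%si,  0.0%st
Cpu9  :  1.0%us,  8.0%sy,  0.0%ni, 91.0%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
Cpu10 :  1.3%us,  8.9%sy,  0.0%ni, 89.4%id,  0.0%wa,  0.0%hi,  0.3%si,  0.0%st
Cpu11 :  1.3%us,  9.3%sy,  0.0%ni, 89.4%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
Cpu12 :  0.7%us,  3.1%sy,  0.0%ni, 96.2%id,  0.0%wa,  0.0%hi,  0.0%si,  0.0%st
Cpu13 :  1.1%us,  5.3%sy,  0.0%ni, 88.0%id,  0.0%wa,  0.0%hi,  5.6%si,  0.0%st
Cpu14 :  2.9%us,  8.7%sy,  0.0%ni, 81.9%id,  0.0%wa,  0.0%hi,  6.5%si,  0.0%st
Cpu15 :  1.8%us,  9.0%sy,  0.0%ni, 82.4%id,  0.0%wa,  0.0%hi,  6.8%si,  0.0%st
"""

CPU_LINE = re.compile(r"Cpu(\d+)\s*:\s*(.*)")
FIELD = re.compile(r"([\d.]+)%(\w+)")  # e.g. "6.8%si" -> ("6.8", "si")


def parse_cpu_lines(text: str) -> Dict[int, Dict[str, float]]:
    """Map CPU number -> {'us': ..., 'sy': ..., 'si': ..., ...} for top's per-CPU lines."""
    cpus = {}
    for line in text.splitlines():
        match = CPU_LINE.match(line.strip())
        if match is None:
            continue
        fields = {name: float(value) for value, name in FIELD.findall(match.group(2))}
        cpus[int(match.group(1))] = fields
    return cpus


stats = parse_cpu_lines(TOP_CPU_LINES)
softirq_cpus: List[int] = [cpu for cpu, f in sorted(stats.items()) if f["si"] > 0]
print(softirq_cpus)  # [4, 5, 6, 7, 8, 10, 13, 14, 15]
```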


Source: https://habr.com/ru/post/303026/
