Not all hyperconverged solutions are equally useful, or: on the muddy marketing stream of “we also have HCI”

Inspired by a letter received today from a colleague at one of the largest Russian companies, which is under a massive "clone attack" (c).

We have heard the opinion that it is time to run some public education, especially for those who have not yet encountered SDI (Software Defined Infrastructure), Webscale, and other progressive approaches to building IT infrastructure.

As they say, the devil is in the details.

Virtually every modern vendor has brought hyperconverged solutions to market, most often in an embryonic state, and as a result a giant pasta factory has started up (in Russian, "hanging noodles on someone's ears" means feeding them nonsense).

In particular, we will try to help you make sense of this flow of marketing, and of the "nuances" that are worth paying attention to.

Today we will talk about one of the most illustrative vendors (and not in the technological sense): in effect, an example of what happens when marketers dominate instead of engineers.

Unfortunately, this behavior discredits the very concepts of WebScale and hyperconvergence.

To begin with, let us ask a few questions, working backwards.

In your opinion, is a technology with the following traits really a “next-generation software-defined solution”?

1) The use of a closed (“proprietary”) hardware PCI adapter (giant in size, it fits only into 2U servers) that is required for operation, in particular for forced compression and deduplication. Nobody except the developer (and possibly the special services of a number of interested countries) knows what goes on inside this adapter, and it goes without saying that all of your data passes through it.

At the same time, the adapter is a SPOF (single point of failure), requires a complete shutdown of the equipment to update its firmware (which is needed, for example, when upgrading VMware), and the firmware can be flashed only by an official engineer of the company.

The approach in practice: instead of using the capabilities of modern Intel processors (for deduplication, SHA-256 computed with hardware instructions at close to zero performance cost), the vendor builds “tricked-out” PCI cards with limited performance and a lot of problems.

This (extremely strange) solution is then positioned as the only advantage over competitors (we will discuss this later).

2) Lack of its own management interface.

Management is integrated into third-party software, in particular VMware vCenter, with the corresponding problems, including the classic “snake biting its own tail”: if the vCenter running on the same equipment fails, control over the equipment is lost...

...and that in turn prevents vCenter from being brought back up without a lot of “ridiculous gestures”.

3) The use of archaic RAID technologies inside the solution (RAID 5 and 6 to protect data on the servers' internal drives).

4) Lack of full clustering (the term "federation" was coined to hide this), with support for only 4 nodes (servers) in one data center.

5) Perennial promises to support hypervisors other than ESXi, with a mass of announcements and zero results.

6) Mass data loss in tests (according to colleagues from the largest companies in the Russian Federation).

7) The use of more than 100 gigabytes of RAM on each server for the virtual controller (despite the fact that it also uses proprietary hardware acceleration). Correspondingly little is left for the guest VMs.

8) Lack of I/O locality, which is already critical in 2016 and will be ultra-critical in 2017.

A few SSDs can completely saturate a 10-gigabit network, and NVMe drives can easily do the same even to a 40-gigabit one.

9) Lack of flexible control over functionality: for example, deduplication cannot be turned off (and, as most engineers know, in a fairly large number of cases it does more harm than good).

10) Lack of support for “stretched” (Stretch / Metro) clusters.

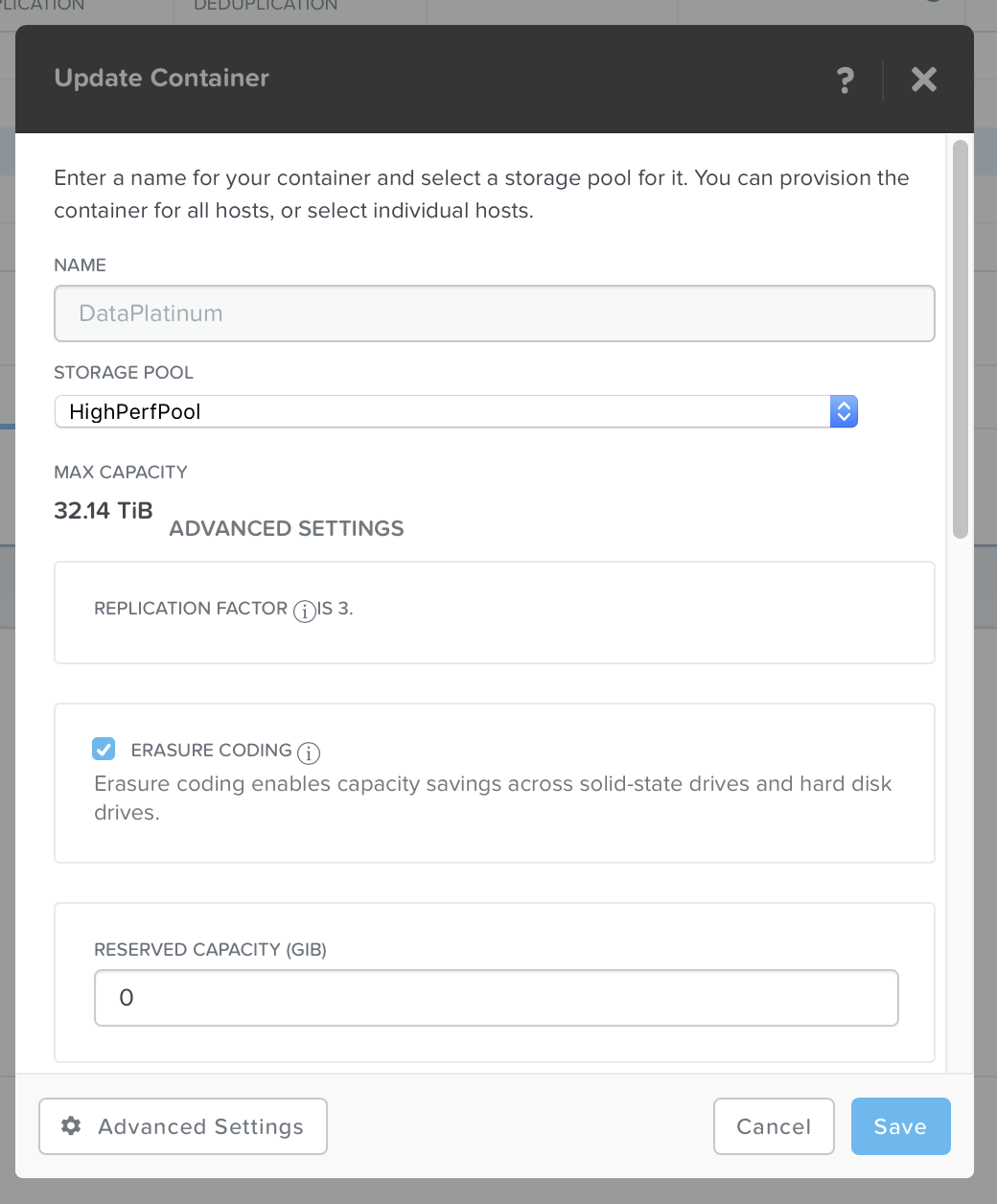

11) No support for erasure coding (EC).

What is EC? It is an extremely effective technique for saving disk space that combines (in our case) the advantages of unlimitedly scalable systems with the most efficient usable capacity of the storage system.

It works for any data, unlike deduplication.

12) Lack of distributed "tiering" between SSD and the "cold" tier.

13) No All Flash support.

14) No support for file access (a built-in, unlimitedly scalable file server, as befits a webscale solution).

15) No support for block storage functionality (in effect, tier-1 applications such as Oracle RAC cannot really be used).

16) Lack of intelligent "cloning" of operating system master images onto local SSDs, i.e. the infrastructure is susceptible to the boot storm problem.

What does this mean? When a large number of VMs (hundreds and up) start simultaneously, massive problems arise because the I/O interfaces (in this case, the network) become the bottleneck. The Shadow Disk technology on Nutanix fundamentally eliminates this problem: it automatically makes a copy of the VM master image on the local host, allowing the virtual machine to boot from the local SSD.

17) No way to upgrade the software without stopping the service.

18) No way to update on your own, without contacting technical support.

19) No "one-click" update: updating requires many dangerous operations (which is why you have to contact the technical center).

20) No way to automatically update server firmware (BIOS, BMC, controllers, disks, etc.).

21) Lack of automated hypervisor upgrades.

22) No way to "pin" a VM to flash (the SSD tier).

23) DRS support for only 2 servers.

24) Lack of support for data consistency when creating snapshots.

25) An attempt to position what is really a solution for the SMB market (small and medium businesses) as an IT platform for enterprise use.

26) Reporting false information to customers (more on this below).

...
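The arithmetic behind points 8 and 11 is easy to check. The sketch below is a back-of-the-envelope illustration, not a benchmark: the per-drive throughput figures (about 500 MB/s for a SATA SSD, about 3 GB/s for an NVMe drive) and the 4+1 erasure-coding stripe are assumptions chosen for the example, not numbers published by either vendor, and the function names are hypothetical.

```python
import math


def drives_to_saturate(link_gbit_s: float, drive_mb_s: float) -> int:
    """Smallest number of drives whose combined throughput meets or exceeds the link."""
    link_mb_s = link_gbit_s * 1000 / 8  # decimal units: 10 Gbit/s -> 1250 MB/s
    return math.ceil(link_mb_s / drive_mb_s)


def raw_per_usable(data_blocks: int, redundancy_blocks: int) -> float:
    """Raw capacity consumed per unit of usable capacity for a given layout."""
    return (data_blocks + redundancy_blocks) / data_blocks


# Assumed sequential throughput per drive (illustrative only).
SATA_SSD_MB_S = 500
NVME_MB_S = 3000

print(drives_to_saturate(10, SATA_SSD_MB_S))  # 3 SATA SSDs fill a 10 Gbit/s link
print(drives_to_saturate(40, NVME_MB_S))      # 2 NVMe drives fill a 40 Gbit/s link

print(raw_per_usable(1, 1))  # 2.0  -> keeping two full copies of every block
print(raw_per_usable(4, 1))  # 1.25 -> assumed 4 data + 1 parity stripe
```

Under these assumptions, remote reads hit the network ceiling with only a handful of drives per node, and a 4+1 stripe needs 1.25 units of raw capacity per usable unit instead of 2.0 for two full copies.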

That is probably enough to start with. Do you like this solution? Would you call it "software defined"?

If yes, then feel free to contact SimpliVity. Just do not forget to make backups. :)

But if it seems to you that real WebScale is about something else, then you are welcome to come to us.

Usually we do not waste time positioning ourselves against lagging technological solutions (especially ones lagging this far behind), but we could not help reacting to the constant attempts to convey incorrect information to our customers.

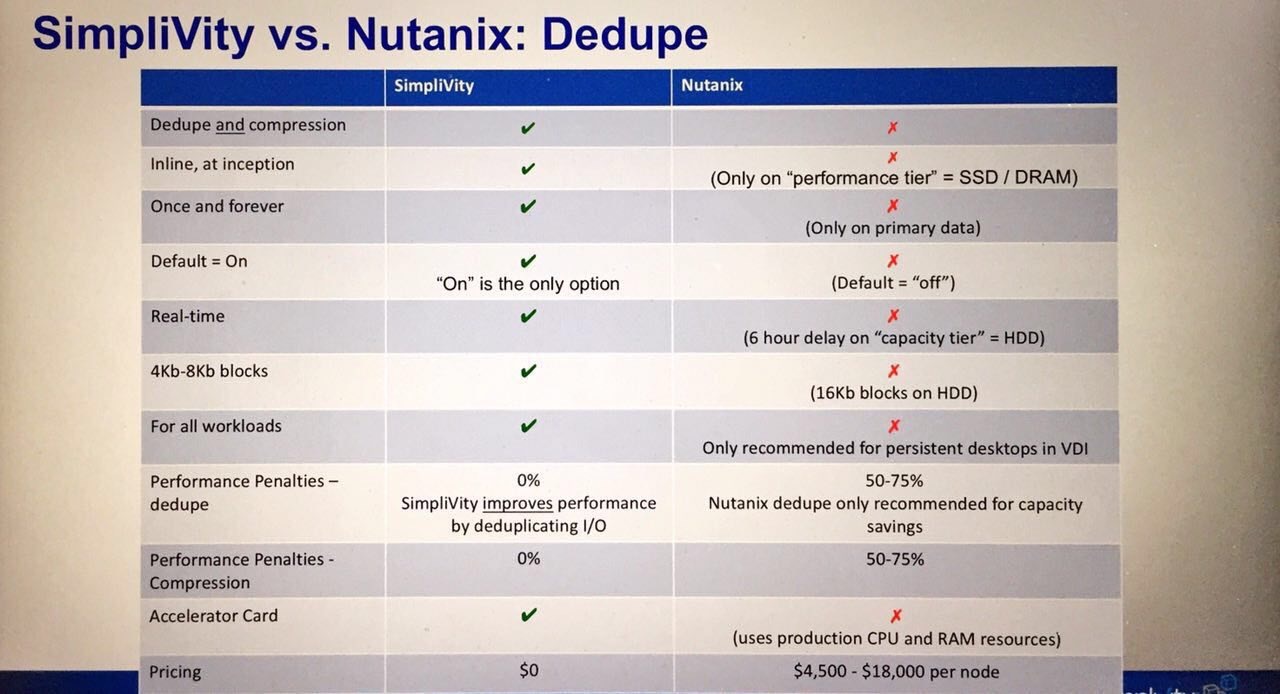

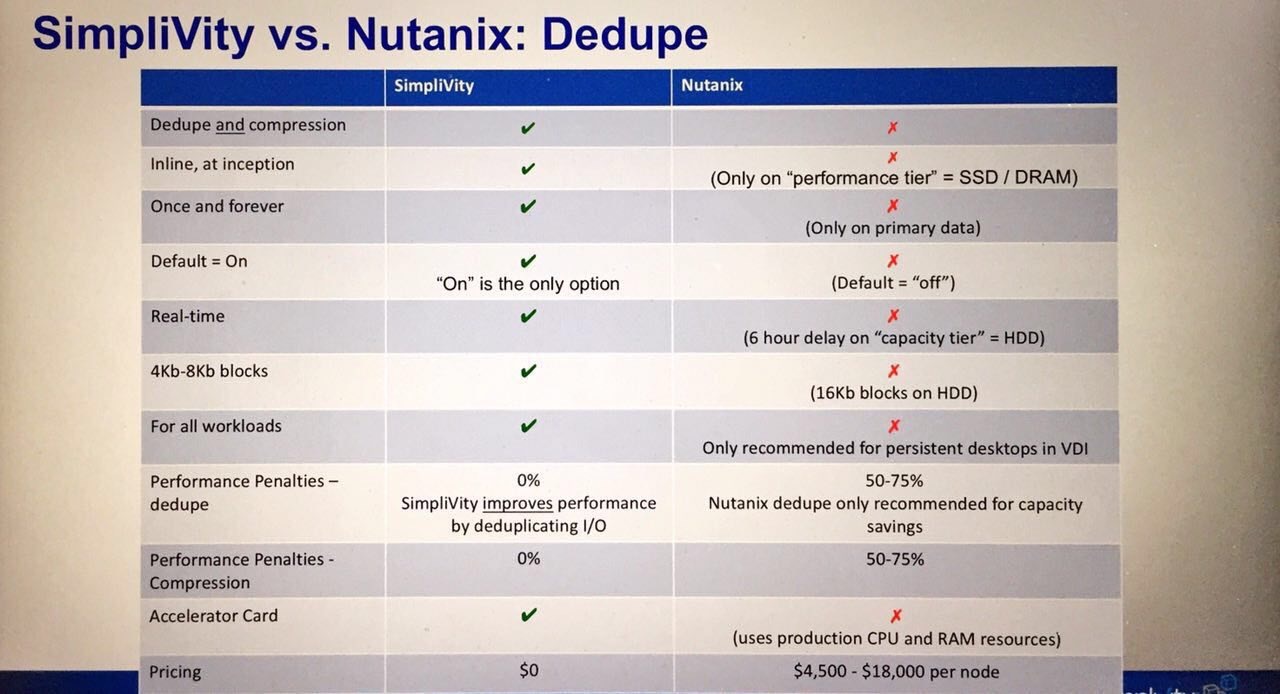

The idea is simple: “in any unclear situation, talk about deduplication” (c) SimpliVity.

In short, we are talking about these two pictures, with which SimpliVity tries to “compete” and which it shows to everyone left and right.

Let's take this muddy stream apart "drop by drop".

To begin with, look at the settings of the Nutanix virtual datastores shown in the screenshots. This is quite important, since they already show (to the attentive reader) that most of the information provided by SimpliVity is a distortion of facts or an outright lie.

Let's go!

1) Dedupe and compression: a false claim. Simultaneous compression and deduplication are fully supported, and we do even better: simultaneous EC-X (erasure coding) and compression are possible, with zero performance drop (deferred map-reduce processes in the background).

By the way, Nutanix can even deduplicate the RAM cache (used when reading data), which is altogether unique.

2) Once and forever: false information. We can do EC-X / compression / dedupe (!) in deferred mode, across the whole cluster. This is much more efficient than on-the-fly deduplication on a proprietary PCI card that fits only into 2U servers and has limited performance.

3) Default = on: a huge minus for SimpliVity, since there is no way to fine-tune the solution for specific tasks. For example, for linked clones (with which SimpliVity in fact has huge problems), deduplication is almost completely useless.

4) Real-time: false information. Nutanix can deduplicate both on the fly (thanks to Intel processor hardware instructions for computing SHA-256 checksums) and in deferred mode, and the delay can be finely tuned (from minutes to many hours).

5) 4KB-8KB: false information. On Nutanix you can adjust the block size for deduplication.

6) For all workloads: incorrect. In many scenarios deduplication brings no advantage, yet (when done inline, even in hardware) it adds latency. Nutanix, thanks to "deferred" deduplication, has no such problem.

7) Performance Penalties: false information. The performance drop is almost zero for inline deduplication (thanks to processor hardware instructions) and zero for deferred distributed deduplication.

Do not forget that SimpliVity has to use both a software controller (the first "layer") and a hardware adapter (an additional layer).

8) Accelerator Card: here is the complete truth. A giant proprietary card with a lot of problems (described above), which is a point of failure.

Our solution is 100% software defined and still shows outstanding performance (4 All Flash nodes easily deliver more than 600 thousand IOPS).

It seems that someone here does not know how to program.

An additional "zest" is that the SimpliVity controller requires far more hardware resources (memory and CPU) than Nutanix, even though a hardware accelerator is required as well.

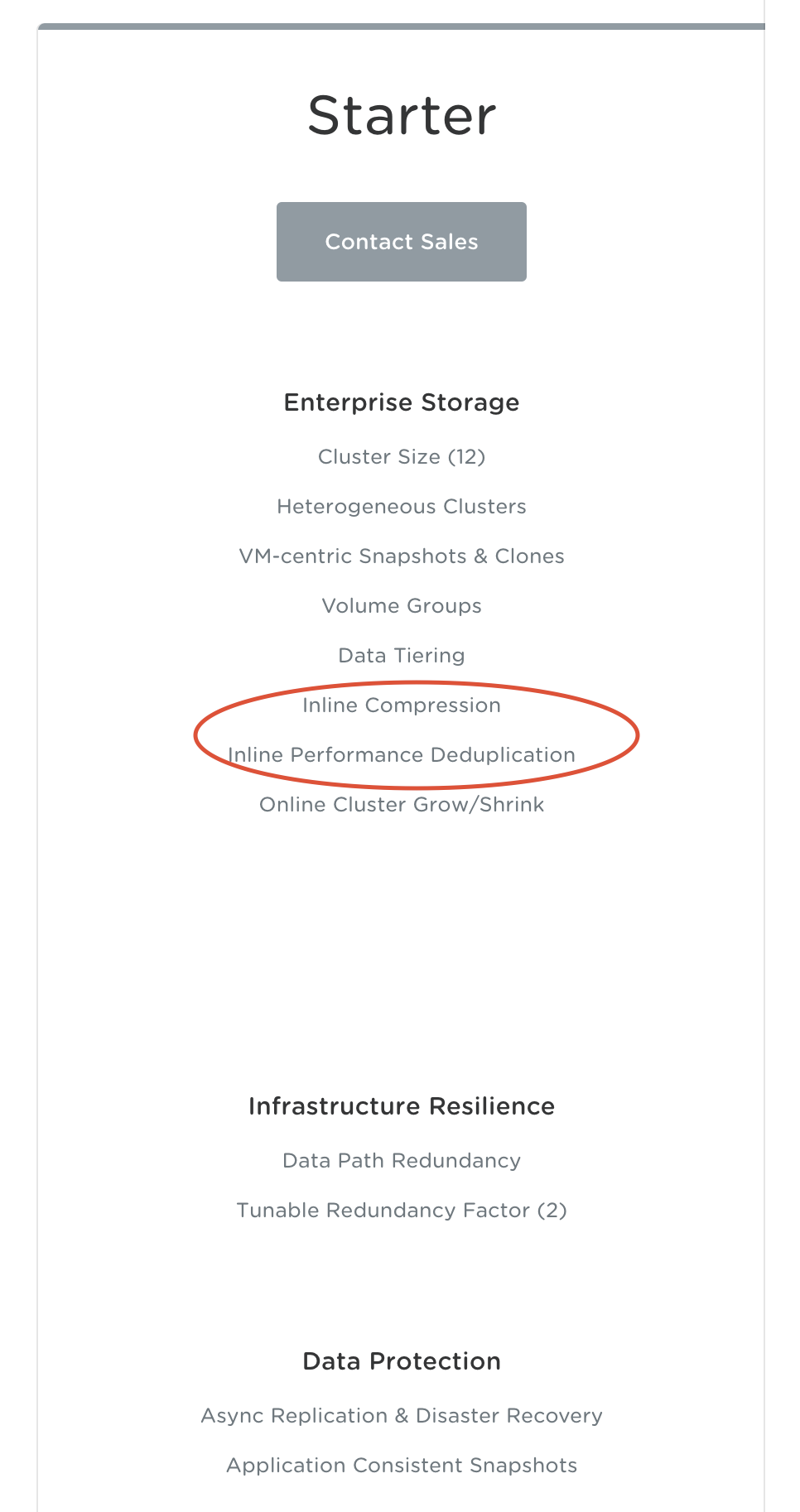

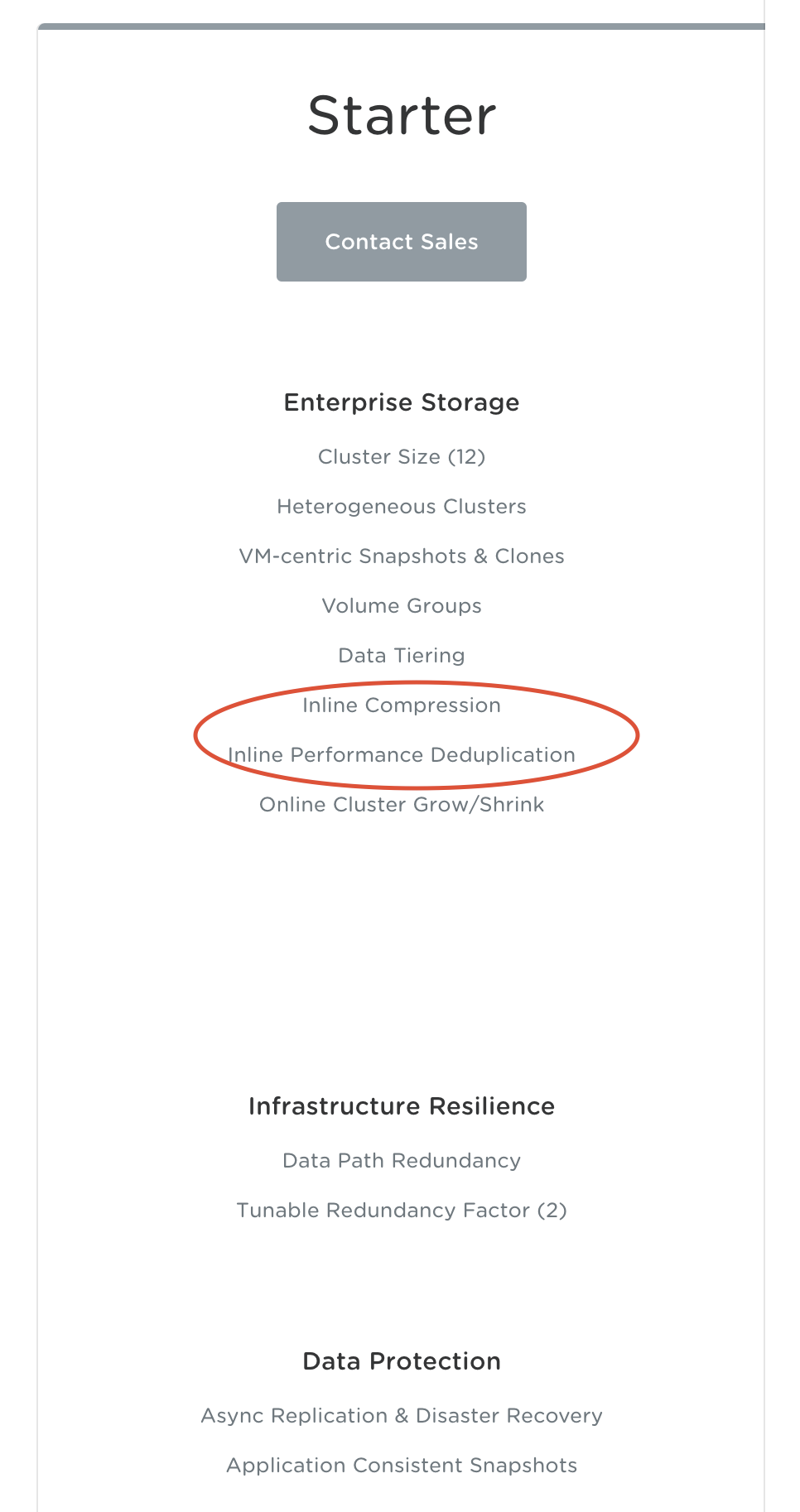

9) Pricing: false information. As is easy to see, deduplication (and backups) is built even into the basic Starter edition, as well as into our SMB solution (Nutanix Express) and the completely free Community Edition.

In other words, deduplication and backups are free for all customers and built into every license edition.

www.nutanix.com/products/software-editions

10) Simple VM-level Backup: a distortion of facts. On Nutanix this is not tied to vCenter, which is itself a bottleneck.

It works for any hypervisor (including ESXi), and data consistency is maintained (which SimpliVity cannot do).

File-level recovery is possible (SimpliVity claims to be the only ones in the world who have done this, which is of course a hoax).

11) No need for protection domain: indeed, SimpliVity cannot operate on groups of virtual machines (as we can), so it cannot create VM protection groups (for example, one describing your application).

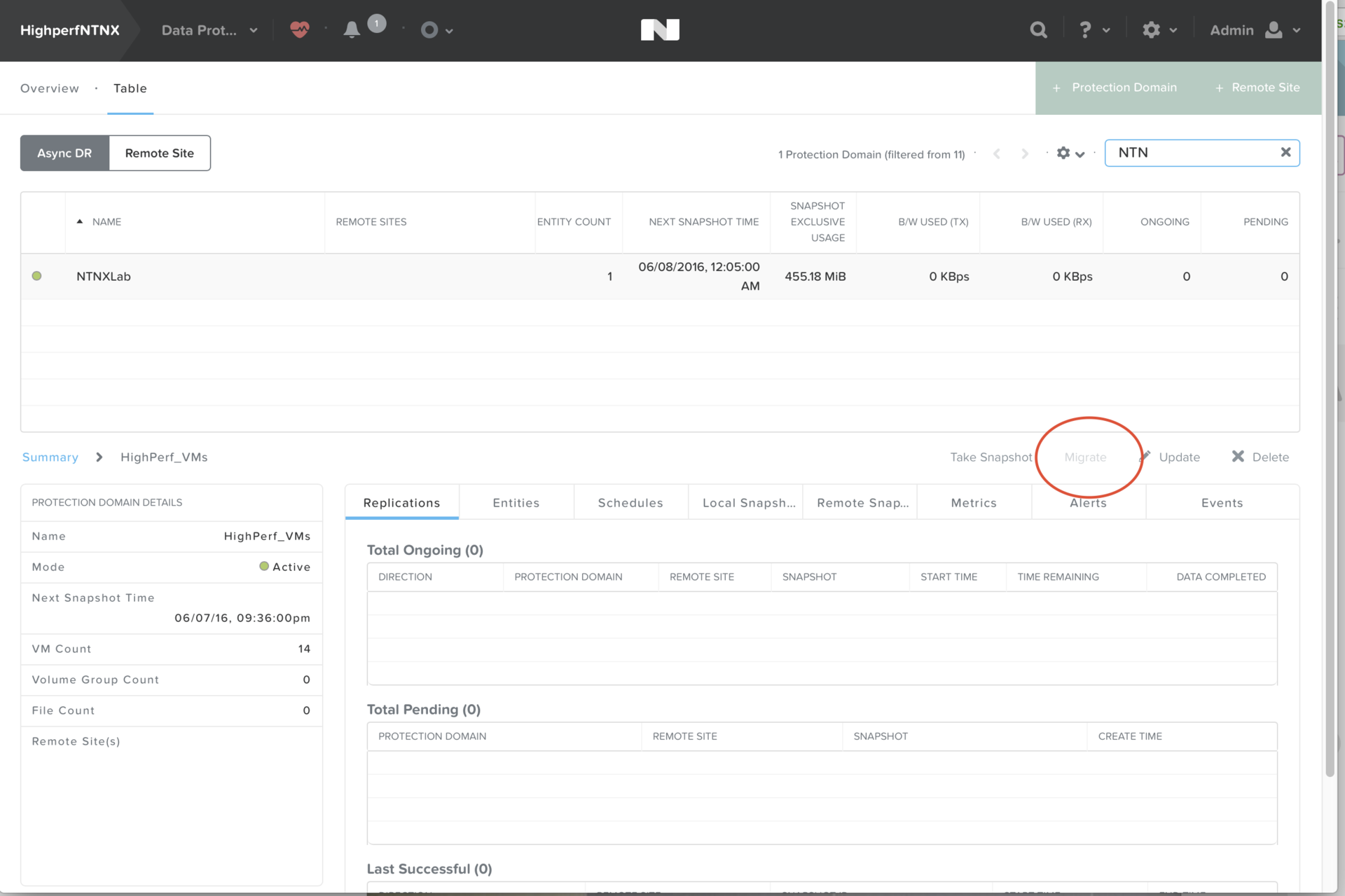

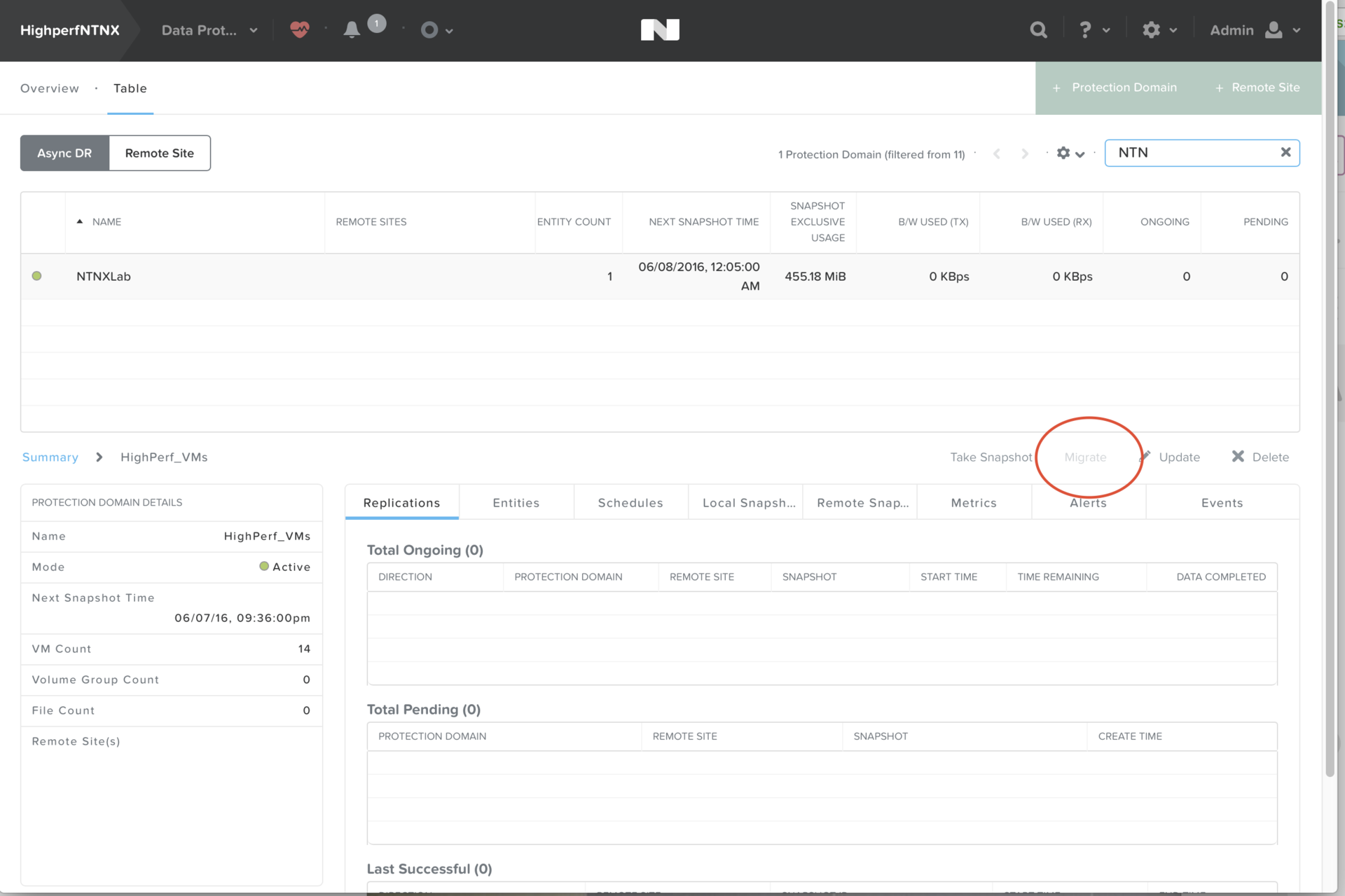

12) Single Click Data Migration: it is not very clear what the gentlemen wanted to say, but it looks like deception again. On Nutanix, data migration is performed by pressing a single “Migrate” button, and unlike SimpliVity, tens / hundreds / thousands / millions of virtual machines can migrate at the same time (those same protection groups).

13) No need for external backup applications: false information. Powerful backup features (including backup to the Azure and AWS clouds) are built into Nutanix, and there is integration with leading market players (for example, Commvault natively supports Nutanix, including our AHV virtualization stack).

Moreover, we made it possible to recover data at the guest VM level: the user of a virtual machine can recover the information they need on their own (Nutanix Guest Tools). Of course, SimpliVity cannot do this.

14) 10 minutes RPO: false information. Snapshots can be taken on overlapping schedules and more often than once per hour.

...
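The fingerprint-based deduplication discussed in points 4 to 7 can be illustrated with a toy content-addressed store. This is a minimal sketch, assuming fixed-size chunks and SHA-256 fingerprints computed with Python's standard `hashlib`; the `DedupStore` class, its method names, and the 4 KiB chunk size are hypothetical and do not reflect how Nutanix or SimpliVity actually implement deduplication.

```python
import hashlib


class DedupStore:
    """Toy chunk store that keeps one physical copy per unique SHA-256 fingerprint."""

    def __init__(self, chunk_size=4096):
        self.chunk_size = chunk_size  # assumed fixed chunk size, tunable
        self.chunks = {}              # fingerprint (bytes) -> chunk data (bytes)
        self.logical_bytes = 0        # bytes written by callers, before dedup

    def write(self, data):
        """Split data into chunks, store unseen chunks, return the list of fingerprints."""
        recipe = []
        for offset in range(0, len(data), self.chunk_size):
            chunk = data[offset:offset + self.chunk_size]
            fingerprint = hashlib.sha256(chunk).digest()
            # Only the first chunk with a given fingerprint is physically stored.
            self.chunks.setdefault(fingerprint, chunk)
            recipe.append(fingerprint)
        self.logical_bytes += len(data)
        return recipe

    def read(self, recipe):
        """Reassemble the original data from its list of fingerprints."""
        return b"".join(self.chunks[fp] for fp in recipe)

    def physical_bytes(self):
        """Bytes actually held after deduplication."""
        return sum(len(chunk) for chunk in self.chunks.values())


store = DedupStore()
payload = bytes(8192) + b"x" * 4096   # two identical zero chunks plus one distinct chunk
recipe = store.write(payload)

print(store.logical_bytes)     # 12288
print(store.physical_bytes())  # 8192
print(store.read(recipe) == payload)  # True
```

In an inline design the fingerprinting in `write` sits in the I/O path; a deferred design would run the same fingerprinting later as a background job, which is why it does not add latency to the write itself. The chunk size is a constructor parameter here to mirror the idea in point 5 that the deduplication block size can be adjusted.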

What is the bottom line? Our recommendation is to study the materials yourself (in our case, the Nutanix Bible will help you understand the solution in detail), not to let anyone “divert the focus” (when a vendor tries to pass off unimportant functionality as a “breakthrough”), and to ask questions.

Nothing complicated, right? :)

Source: https://habr.com/ru/post/302812/
