A game developer's approach to building modern web applications

A boring introduction

Not long ago I took part in developing a hardware and software system for an American company. I built the backend, a bit of the frontend, and connected devices to the cloud (IoT, in other words). The technology stack was strictly prescribed, with no deviations allowed: enterprise, in a word. At some point I was brought in to help with the frontend of a POS (Point of Sale) web application.

The problem. Things get more interesting

That would have been fine, but the web application was meant to run in 6 thousand offices across America (for a start), where, as it turned out, Internet access can be a problem. Yes, in that very advanced America! Coverage is an issue not only for wired Internet but also for mobile networks. In other words, a poor Internet connection (often a mobile one) is quite common in small American towns.

And this is a POS... Customers are standing in line and the invoice has to be printed quickly, so there must be no lag. And then there is live search... After discussions and estimates, we decided not to load the backend with requests (traffic, again). We agreed that the web application should load as much data as possible up front and run the search locally. This applies, of course, only to data small enough to allow it.


The frontend pulled a lot of data from different services. The result was a lot of traffic and long page loads. In short, trouble.

Some of the problems were solved on the backend (compression, geo-clustering, etc.), but that is a separate story; this one is only about the frontend.

Cache

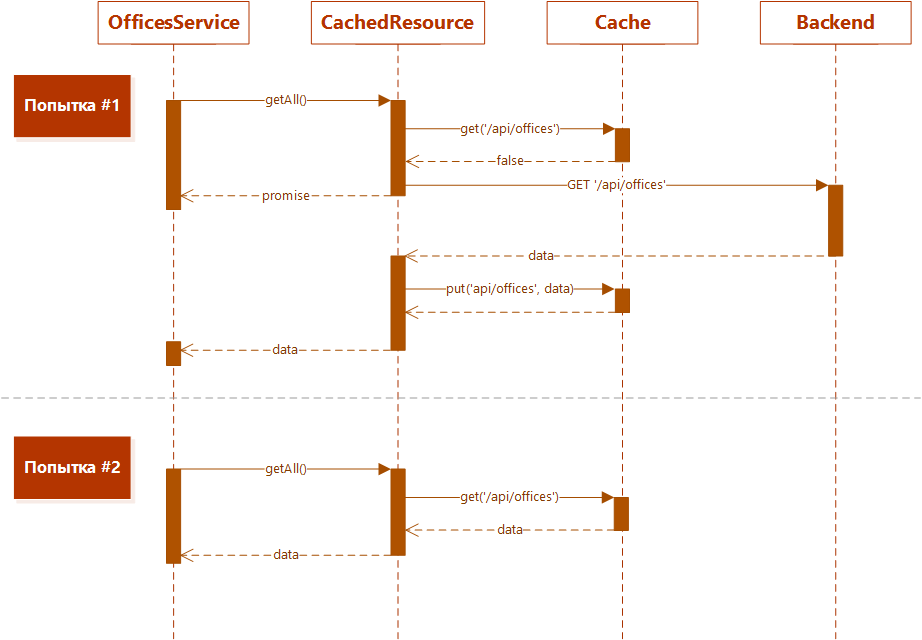

The first thing we did right away (and this is logical) was to cache the received data and store it locally, for as long as security allows. For some data localStorage is the better fit, for other data sessionStorage, and whatever does not fit can simply be kept in memory. We used Angular, so angular-cache suited us well.

This partly solved the problem: the first time a page was opened, the resources it needed were loaded, and after that they were served from the cache.

We also implemented cache invalidation and the like, of course. Traffic went down, but the response time on the first visit to a page remained unacceptably long.
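
To make the idea concrete, here is a minimal sketch of a cache with per-entry expiry. It is not the angular-cache API and not the project's code: `createCache`, `createMemoryStorage`, and the `ttlMs` parameter are names invented for this illustration. The `storage` argument is assumed to be anything with `getItem`/`setItem`/`removeItem`, such as `localStorage`, `sessionStorage`, or the in-memory fallback.

```javascript
// Hypothetical sketch: a small cache with per-entry expiry over a pluggable storage.

// In-memory fallback with the same getItem/setItem/removeItem shape as Web Storage.
function createMemoryStorage() {
  const map = new Map();
  return {
    getItem: (key) => (map.has(key) ? map.get(key) : null),
    setItem: (key, value) => { map.set(key, String(value)); },
    removeItem: (key) => { map.delete(key); },
  };
}

// `now` is injectable so that expiry can be exercised without waiting.
function createCache(storage, now = Date.now) {
  return {
    // Store a JSON-serializable value that expires after ttlMs milliseconds.
    put(key, value, ttlMs) {
      const entry = { value, expiresAt: now() + ttlMs };
      storage.setItem(key, JSON.stringify(entry));
    },
    // Return the cached value, or undefined if it is missing or expired.
    get(key) {
      const raw = storage.getItem(key);
      if (raw === null) return undefined;
      const entry = JSON.parse(raw);
      if (now() >= entry.expiresAt) {
        storage.removeItem(key); // drop stale data
        return undefined;
      }
      return entry.value;
    },
    // Explicit invalidation, e.g. after the backend reports a change.
    invalidate(key) {
      storage.removeItem(key);
    },
  };
}
```

In a browser one could pass `window.localStorage` for long-lived data and `window.sessionStorage` for per-session data; the expiry time per resource would follow whatever the security rules allow.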

Background resource loading

The next logical step was loading resources in the background. While the user is on the main page looking at messages, alerts, and so on, resources for the sections not yet opened are loaded in the background. The idea is that when the user switches to a section, its data (or at least part of it) is already in the cache, no loading is needed, and the page responds faster. The first option is the simplest:

    $q.all([ Service1.preLoad(), Service2.preLoad(), … ServiceN.preLoad() ]);

Here Service.preLoad() is a function that returns a promise for a page resource.

But there is a problem: in this case all the promises run simultaneously, i.e. a huge number of resources are requested at once. And the channel is not made of rubber! On top of that, we had one huge request to a third-party service that slowly returned a lot of data. As a result, all the parallel requests took almost as long as that one huge request.

Okay, let's load them one after another:

    Service1.preLoad().then(function () { return Service2.preLoad(); }) … .then(function () { return ServiceN.preLoad(); });

That was better, but not by much: if the user immediately went to the "wrong" section, they had to wait until the queue reached that section's resources. In general, the room for heuristics here is endless: some things are better loaded in a batch, some separately and in parallel, and so on. But I wanted a more systematic approach.

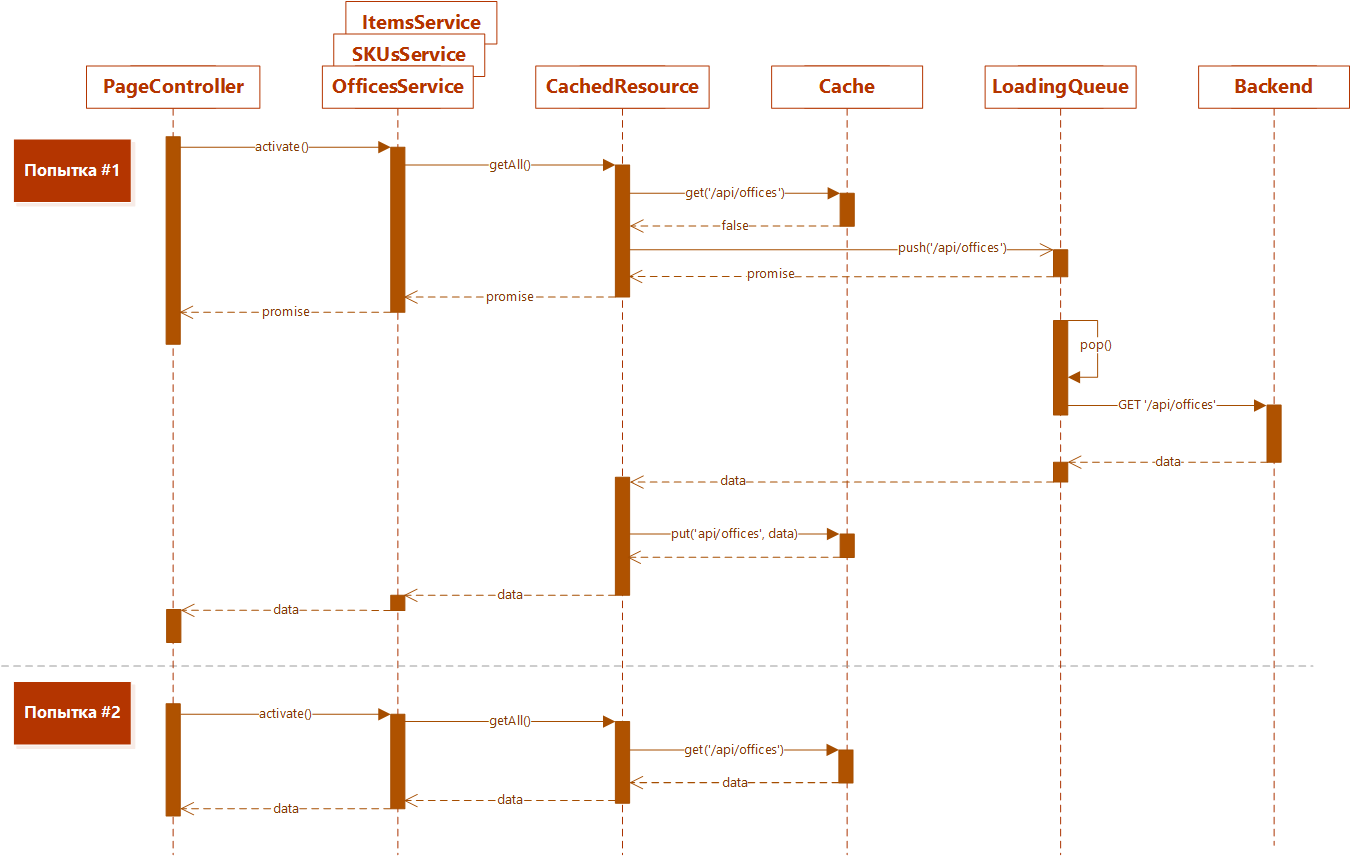

Queue

And again, the logical step is to put the loading of all resources into a dynamic queue with priorities. If the user goes to a section whose data (or part of it) is still in the queue, we raise its priority and it is loaded first. As a result, the response time is no worse than it was before. A small thing, but nice.

That is all well and good, but there is one more place that is not made of rubber: the size of the cache.
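
A dynamic queue of this kind could look roughly like the sketch below. The class name `PreloadQueue`, its methods, and the boost amount are assumptions made for illustration, not the project's actual code.

```javascript
// Hypothetical sketch of a preload queue whose priorities can change on the fly.
class PreloadQueue {
  constructor() {
    this.items = new Map(); // resource name -> { section, priority }
  }

  // Register a resource for background loading.
  add(name, section, priority = 0) {
    this.items.set(name, { section, priority });
  }

  // The user opened a section: raise the priority of its pending resources.
  boostSection(section, amount = 100) {
    for (const item of this.items.values()) {
      if (item.section === section) item.priority += amount;
    }
  }

  // Remove and return the name of the highest-priority resource, or null if empty.
  // Ties are resolved in insertion order.
  next() {
    let best = null;
    for (const [name, item] of this.items) {
      if (best === null || item.priority > this.items.get(best).priority) {
        best = name;
      }
    }
    if (best !== null) this.items.delete(best);
    return best;
  }
}
```

A background loader would repeatedly call `next()` and start the matching `preLoad()`, with one or a few requests in flight at a time; a route change handler would call `boostSection()`.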

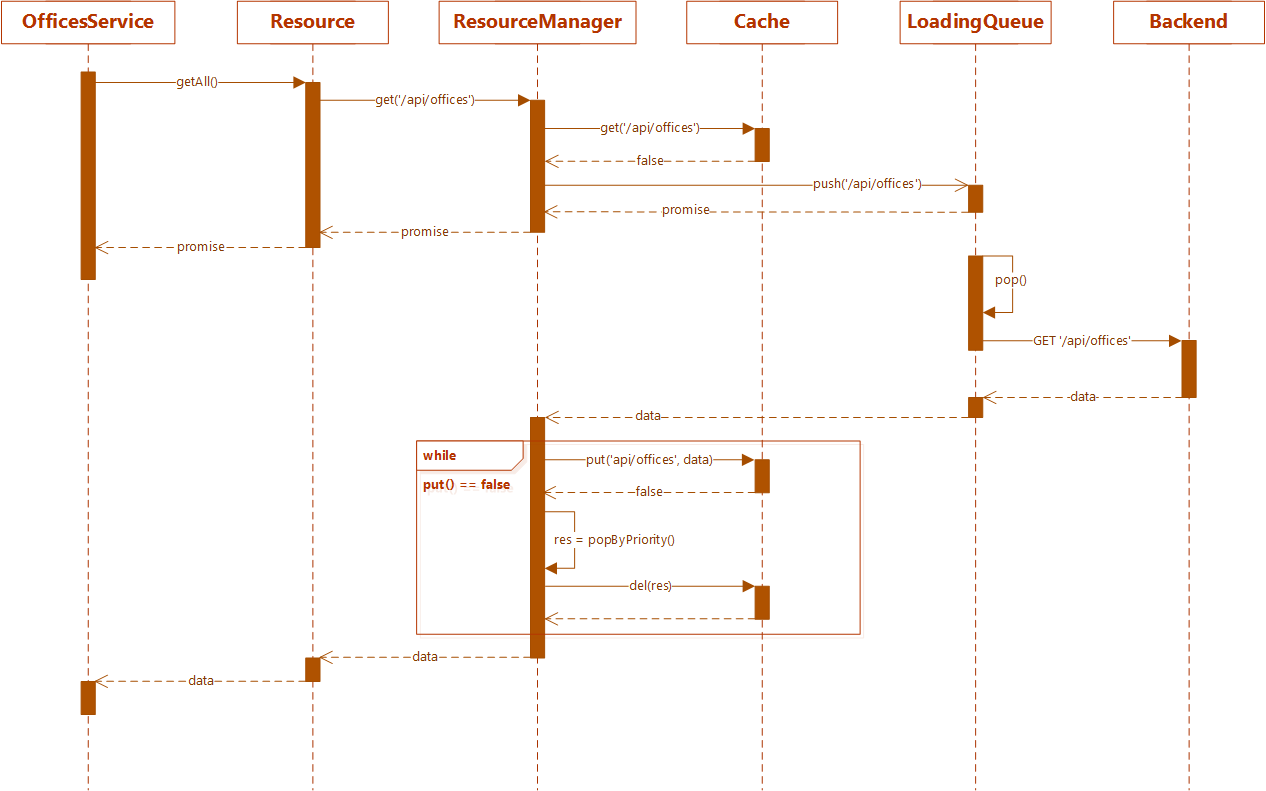

Resource system

The deeper I went into this problem, the stronger the sense of déjà vu: I had been through all this before! Limited memory, background loading, unloading, priorities... this is a typical game engine resource system! You drive a car through the world: locations ahead are loaded, and those far behind are unloaded. Game engines even had a special term for it, streaming support... I spent 5 years in game development, and it was great...

So, it turns out that a web application is essentially an analog of a resource-intensive game. Only instead of locations we have pages and sections. Pages pull resources and put them in the cache, and the cache is limited in size, so something has to be unloaded. In other words, the analogy fits.

The problem for a web application, as in game development, is to predict where the user will go so that resources can be loaded in advance. Solution number one is design, of course: send the user along a predictable (and sometimes the only) route. What cannot be solved by design is handled with statistics plus heuristics.

Unloading works the same way. We just have to decide what to unload first. We assign priorities to the resources themselves and unload those with the lowest priority, or drop whole batches at once.

There are plenty of implementation options. The most predictable one is preprocessing, manual or automatic: we specify which resources a location or section needs, and somehow lower the priority of the others.
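
As an illustration of priority-based unloading, here is a rough sketch of a size-limited cache. `ResourceCache`, `enterSection`, and the size estimate based on the length of the JSON string are all assumptions chosen to keep the example short.

```javascript
// Hypothetical sketch: a size-limited cache that unloads low-priority entries first.
class ResourceCache {
  constructor(maxSize) {
    this.maxSize = maxSize;   // limit, in characters of serialized JSON
    this.usedSize = 0;
    this.entries = new Map(); // key -> { data, size, priority }
  }

  // Entering a section: its resources become high priority, the rest low.
  enterSection(neededKeys) {
    const needed = new Set(neededKeys);
    for (const [key, entry] of this.entries) {
      entry.priority = needed.has(key) ? 1 : 0;
    }
  }

  put(key, data, priority = 0) {
    this.remove(key); // replace any previous copy
    const size = JSON.stringify(data).length; // rough size estimate
    this.entries.set(key, { data, size, priority });
    this.usedSize += size;
    this.evict(key);
  }

  get(key) {
    const entry = this.entries.get(key);
    return entry ? entry.data : undefined;
  }

  remove(key) {
    const entry = this.entries.get(key);
    if (!entry) return;
    this.usedSize -= entry.size;
    this.entries.delete(key);
  }

  // Unload lowest-priority entries until the cache fits; never unload keepKey.
  evict(keepKey) {
    const victims = [...this.entries.entries()]
      .filter(([key]) => key !== keepKey)
      .sort((a, b) => a[1].priority - b[1].priority);
    for (const [key] of victims) {
      if (this.usedSize <= this.maxSize) break;
      this.remove(key);
    }
  }
}
```

The list passed to `enterSection()` is where the preprocessing result would plug in: a hand-written or generated map from section to the resources it needs.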

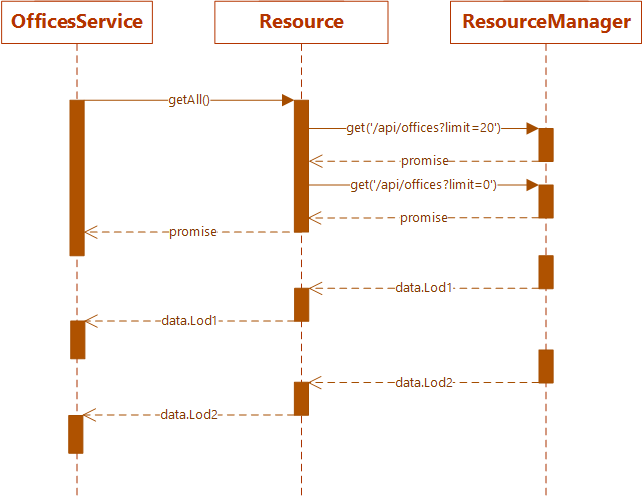

LOD

And then I got carried away... I mean, game development tricks applicable to web development started coming to mind. One of them is LOD (Level of Detail). In game development it is applied to textures and models. You can load the world at the minimum level of detail right away and then stream in the more detailed textures and models. The player always sees something and can even play.

In other words, we need a LOD system for loaded data! For the web, the most primitive option is enough: two levels of detail. First we load the initial data that the user actually sees (the first pages of tables, for example).

There is not much of this data, so it loads quickly. And in the background... in the background the "heavier" LODs are loaded.
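
A two-level scheme like this can be sketched as a small store that always hands out the most detailed data it has. The names `LodStore`, `LOD_PREVIEW`, and `LOD_FULL` are invented for the example.

```javascript
// Hypothetical sketch of two levels of detail for loaded data.
const LOD_PREVIEW = 0; // e.g. the first page of a table
const LOD_FULL = 1;    // the complete data set

class LodStore {
  constructor() {
    this.levels = new Map(); // key -> { level, rows }
  }

  // Keep incoming data only if it is at least as detailed as what we hold,
  // so a late preview response never overwrites the full data set.
  put(key, level, rows) {
    const current = this.levels.get(key);
    if (!current || level >= current.level) {
      this.levels.set(key, { level, rows });
    }
  }

  // Return the most detailed data available plus a flag for the UI.
  get(key) {
    const current = this.levels.get(key);
    if (!current) return { rows: [], complete: false };
    return { rows: current.rows, complete: current.level === LOD_FULL };
  }
}
```

The page would render from `get()` as soon as the preview arrives and re-render when the background request stores the full level; the `complete` flag lets it show, for example, a "loading more" hint in the meantime.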

Compression

Cramming in what will not fit is practically a standard task for a game developer. Well, let's push the boundaries of localStorage! Take some LZ compressor and go! Yes, but localStorage can only store strings... Well, then lz-string.js, for example, will do. The compression is not as good, but even a 20% saving is not bad at all when only 5 MB is available! And as a bonus for the security folks, localStorage will hold not clear text but Chinese characters.

What next?
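
One way to wire this up is a thin wrapper that compresses values on the way into storage. The sketch below deliberately takes the codec as a parameter; `createCompressedStorage` and the `codec` shape are assumptions for illustration. With lz-string loaded in the browser, the codec could be `{ compress: (s) => LZString.compressToUTF16(s), decompress: (s) => LZString.decompressFromUTF16(s) }`, since those two functions produce and consume strings that are safe to keep in localStorage.

```javascript
// Hypothetical sketch: a storage wrapper that compresses values before writing.
// `storage` has getItem/setItem/removeItem (e.g. window.localStorage).
// `codec` provides compress(string) -> string and decompress(string) -> string.
function createCompressedStorage(storage, codec) {
  return {
    // Serialize to JSON, compress, and store the resulting string.
    setItem(key, value) {
      storage.setItem(key, codec.compress(JSON.stringify(value)));
    },
    // Read, decompress, and parse; null means the key is not stored.
    getItem(key) {
      const packed = storage.getItem(key);
      return packed === null ? null : JSON.parse(codec.decompress(packed));
    },
    removeItem(key) {
      storage.removeItem(key);
    },
  };
}
```

Keeping the codec pluggable also makes it easy to measure the real saving on the application's own data before committing to a particular compressor.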

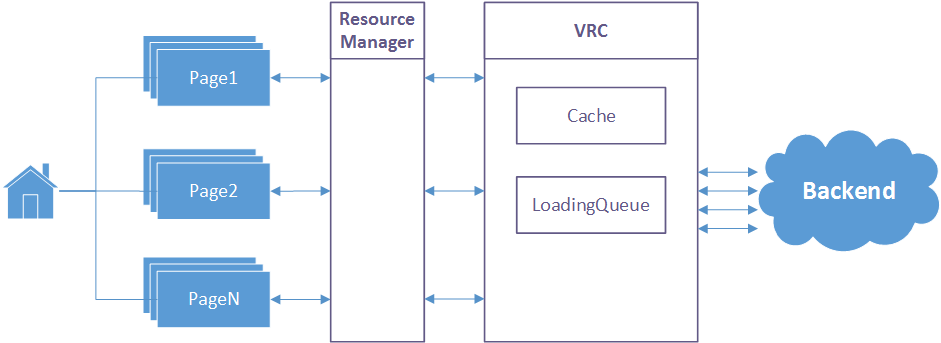

Next... next the thought rushes off into uncharted depths. VFS (Virtual File System) comes to mind: a layer between the game's resource system and the operating system's file system. Usually everything revolves around a single data file that can be accessed as if it were a file system: read a file, write a file... And what if we make a VRC (Virtual REST Call)!? Then the web application could keep working even with no Internet connection at all! To a certain extent, of course, but still.

Controllers talk to the resource manager. What it can return, it returns immediately; all other requests are passed to the VRC. The VRC, in turn, synchronizes its state with the backend on its own and reports on progress as data is loaded.

When people talk about offline operation of a web application, Meteor is sure to come up. It is cool, of course, but we were tightly bound to our development stack. The proposed approach can be implemented on almost any framework. With reservations, of course, but it can be done.

But the article is not really about that. It is about how the experience of days long gone sometimes resurfaces unexpectedly...
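
The article leaves the VRC as an idea, so the following is only one possible reading of it: local state answers reads immediately, writes are applied locally and queued, and a separate step replays the queue against the backend. The class `VirtualRest`, its methods, and the `send` callback are all hypothetical.

```javascript
// Hypothetical sketch of a "Virtual REST Call" layer.
class VirtualRest {
  constructor() {
    this.local = new Map(); // url -> last known resource state
    this.pending = [];      // writes not yet confirmed by the backend
  }

  // Reads are answered from local state; undefined means "not loaded yet".
  get(url) {
    return this.local.get(url);
  }

  // Writes update local state immediately and are queued for the backend.
  put(url, body) {
    this.local.set(url, body);
    this.pending.push({ method: "PUT", url, body });
  }

  // Replay queued writes in order. `send` is assumed to return a promise
  // that rejects while the connection is down. Resolves to true once the
  // queue is empty, or false if a request failed and the queue was kept.
  async sync(send) {
    while (this.pending.length > 0) {
      try {
        await send(this.pending[0]);
      } catch (err) {
        return false; // still offline, retry later
      }
      this.pending.shift();
    }
    return true;
  }
}
```

In an Angular application `send` could wrap `$http`, and `sync()` could be triggered by a timer or by the browser's `online` event. Conflict resolution between local and server state is out of scope for this sketch.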

Happy coding, friends!

Source: https://habr.com/ru/post/302684/
