Machine Learning Boot Camp - how it was and how it will be

On June 13, ML Boot Camp started - a machine learning contest from the Mail.Ru Group. In this regard, we want to share with you our impressions of its previous launch, the success stories of the winners and tell you what's new for the participants this year.

How is the contest organized?

At the start of the championship, participants receive a condition of the problem - a verbal description of what is contained in the data serving as a training sample. In addition to the condition, the learning sample itself becomes available, it consists of labeled examples — vectors of descriptions of each object with a known answer. If there are a finite number of possible answers, they can be interpreted as the number of the class to which the object belongs.

The participants, using the methods of machine learning known to them, train the computer and use the trained system on new objects (test sample), trying to determine the answer for them. The test sample is randomly divided into two parts: rating and final. The overall result on the rating data is calculated by our system and published immediately, but the winner is the one who gets the best results on the final data. They remain hidden for participants until the very end of the competition.

')

The assignment is given one month. Experienced participants can improve their algorithms throughout the entire contest by updating the result. And those who are not so deeply familiar with this area will have enough time to figure everything out and write their decision. For beginners, an extensive introductory article is also offered, which will indicate in which direction to dig, and schematically describe a possible solution for the championship problem.

ML Boot Camp 2015

In 2015, we held an open championship, which many did not know about. The participants were mostly students of our educational projects - Technosphere and Technopark.

Here is the condition of the task that participants received when opening a contest:

“You are faced with the task of classification: based on the well-known distribution of classes of training elements, also distribute test ones. All data provided for this task is divided into two parts: training (x_train.csv and y_train.csv) and test (x_test.csv). Each line of the x_train.csv and x_test.csv files is a description of some objects in the form of sets of binary values (features), listed by comma. All objects are divided into three categories (classes). For objects from the training set, this partition is known and is given in the y_train.csv file. The answer for this problem is a text file, each line of which corresponds to a line in the x_test.csv file and contains the class number (0, 1 or 2).

Sample data:

| x_train.csv | y_train.csv | x_test.csv |

| 1,1,1,1,0,0,0,1,0,0,0,1,0,0,1,0,0,0,1,0,1,1,1,1,1,1,1, 0,1,1,0,1 | one | 1,1,0,1,1,0,1,0,0,1,1,0,1,0,0,1,1,0,0,1,1,1,1,1,0,0, 1,1,1,1,0 |

| 1,0,1,0,0,0,1,1,0,1,0,1,1,1,1,1,1,1,1,1,0,1,1,1,1,0,1, 0,0,1,0,0 | 2 | 0,1,1,0,1,0,1,0,1,0,0,0,1,0,1,0,0,1,1,0,1,0,0,1,0, 0,1,0,0,0 |

| 0,1,1,0,0,0,1,1,1,1,0,1,0,1,0,1,0,0,1,0,1,1,0,0,1,1, 1,1,0,1,0 | one | 0,0,1,0,0,0,0,0,0,0,1,1,0,1,0,0,0,1,1,0,0,1,1,1,0,0, 1,1,1,1,1 |

| 1,0,1,1,0,1,0,1,1,0,0,0,1,0,1,1,0,1,1,1,0,0,1,0,0,1,100 0,1,1,1,0 | 0 | 1,0,1,0,1,1,0,1,0,0,1,0,0,1,0,1,0,1,1,0,0,0,1,1,0,0, 1,0,0,1,0 |

The classification accuracy will be taken as the quality criterion for solving the problem, i.e. the proportion of correctly classified objects. The test sample is randomly divided into two parts in a 40/60 ratio. The result of the first 40% will determine the position of participants in the rating table throughout the competition. The result for the remaining 60% will be known after the end of the competition, and it is he who will determine the final placement of the participants. ”

The contestants had no idea how the faceless chains of zeros and ones were obtained, but despite this, they managed to achieve impressive results. See for yourself in the table TOP-10 contestants.

| No | Participant | Preliminary score | Final score |

| one | Pavel Shvechikov | 0,6984126984 | 0.6785714286 |

| 2 | Anton Gordienko | 0.6904761905 | 0.6547619048 |

| 3 | Anastasia Videneeva | 0,6984126984 | 0.630952381 |

| four | Artem Kondyukov | 0.6825396825 | 0.619047619 |

| five | Alexander Lutsenko | 0.7301587302 | 0.619047619 |

| 6 | Konstantin Sozykin | 0.6587301587 | 0.619047619 |

| 7 | Vitaly Malygin | 0.4126984127 | 0.5833333333 |

| eight | Alexey Nesmelov | 0.4841269841 | 0.5714285714 |

| 9 | Vladimir Vinnitsky | 0.380952381 | 0.5714285714 |

| ten | Viktor Shevtsov | 0.4047619048 | 0.5714285714 |

Here, the score is the percentage of sequences for which the final program correctly identified the class.

In fact, we got these chains as follows.

Class 0 contains sequences generated by humans. We asked each of the 140 people who agreed to help us, to write a sequence of zeros and ones so that it looked as similar to random as possible. However, they could not use the computer, tables and other materials. Everyone had to simply imagine that he was throwing a coin. If an eagle falls in his mind, then write down 1, if tails, write down 0. Of course, this coin and other devices were also forbidden to use.

Class 1 contains computer-generated sequences using a pseudo-random number sensor. The probability of a zero, as well as the probability of a unit, is 50%, while there is no dependence between the different elements of the sequence.

Class 2 contains sequences also generated by a computer, but the sequential values are different with a probability of 70%.

Just think: even having no idea how these chains were obtained, the participants, with the help of their iron friend (computer), with a probability of almost 70% (!) Correctly determine whether this sequence was written by a person or a computer, randomly or with an algorithm . This seems to be a sufficient reason to evaluate the power of machine learning methods, because even a 50% estimate would be good, considering that a priori chances of guessing are only 33, (3)%.

We asked the winners to tell how they achieved such a result.

3rd place

Anastasia Videneeva

“I solved the problem as follows. First, I examined the data and found out that in the training sample of objects from different classes approximately equally, the number of zeros and ones is approximately the same in objects from each class, but in class 2 more often than in others, the next character differs from the previous one (in classes 0 and 1 there were on average about 14 such “switchings” in each object, in class 2 - 17). I added the number of switchings as a new feature and trained randomForest on the resulting features. The result was not high (without the selection of parameters, my speed was 0.438, as I recall). Judging by the speeds of the other participants at that time, they, most likely, also used the original signs, but more carefully selected the parameters of the algorithms or used combinations of algorithms.

I thought about a fundamentally different idea, which in this problem can "shoot". I decided to treat the data as strings of length 30, and use n- grams as attributes, because they made it possible to reflect the fact that in class 2 often there is a symbol change, and other patterns in the data. On the new signs, all algorithms made it possible to get a significantly higher result (the same randomForest without a selection of parameters received a speed of 0.706). Then I made the selection of features using ExtraTreesClassifier (the sample is very small, I didn’t want to retrain) and classification using gradient boosting, svm and randomForest. The parameters of all algorithms and the cut-off threshold were chosen by importance using leave-one-out cross-validation. I did not get a significant difference between the algorithms, so in the final premise I used the more familiar randomForest. It would be interesting to see what results can be achieved with the help of neural networks, but there was not enough time to figure them out. ”

2nd place

Anton Gordienko

“Before participating in the contest, all my machine learning experience was reduced to taking the Andrew Ng course on Coursera, and a couple of times I tried to do simple classifiers using logistic regression.

First try: I tried to train XGBoost. For the night I set to go through the parameters. I got a good local quality (about 50%), but after sending the solution to the site - only 39%. Surely I retrained, because when I sent XGBoost with default parameters I got 41%.

Second attempt: tried to analyze the signs. I found out that each column separately contains absolutely no information: the number of zeros and ones is the same for each class. I decided to look for dependencies for different sets of columns. I went through all sorts of combinations of three columns (I also tried combinations of two and four columns) and looked at how often each combination occurs in each class. Now, many combinations in one of the classes were 1.5–2 times more often (or less) than in the other two. But the transition to the new binary signs of “the presence in the sequence of each combination” resulted in nothing. The site returned the same 41%. The reason, most likely, is in too many signs (4060 signs at the size of the training base of 210 elements).

Third attempt: I decided to consider as signs the number of subsequences of each type of length 5 (I also tried other lengths, but the quality turned out to be a little worse). I trained with randomForest on random parameters and got 63% on the site. Looking at the importance of the signs, I saw that the feature “presence of a subsequence 10101” stands out well. Added another sign: "the total number of alternations in the sequence." Slightly selected the max_features parameter and got the final 69%. ”

1st place

Pavel Shvechikov

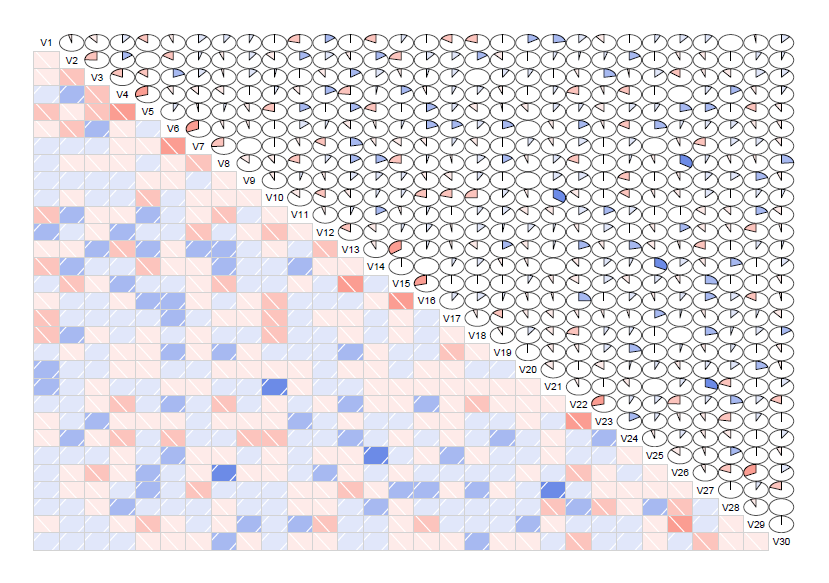

“It was my first machine learning competition, so I tried to try everything that came to my mind and could improve the quality of the prediction. At first, I tried to visualize the data for a long time, tried to look at the data, spread it out on the PCA, but this did not give any good results. The data was all in a heap, and something simple, immediately noticeable to the eye was not visible. Then I wondered if the signs are somehow related to each other, and I built a diagram of correlations between the signs in different classes. So the diagram looked for class 0:

I also built a correlation diagram for the first class:

At this stage I stopped for a long time and tried to understand what the true difference was, but when I built a similar diagram for the second class, everything became somewhat clearer:

Obviously, the signs were related to each other, and most of all it was noticeable in the second grade. After that, I remembered how one of my friends said that on kaggle, in most cases, ensembles from models were beaten, I completely forgot about the dependencies found and hit the ensemble building.

Here I gave myself a will. :) I tried simple voting, weighted voting, stacking, blending, each of those listed with a different number of levels and different models. Here I tried many different interesting things, including teaching higher-level models on the predictions of the previous level with the addition of the initial binary features, I tried different types of cross-validation. It must be admitted that the leave-one-out method of cross-validation became a real find for second-tier models due to the small amount of data, which somewhat raised the quality of the ensemble, which I carefully combed, drawing on the methods and their justification from articles and discussions.

At some point, I quite accidentally gave some good ideas, in particular, about the fact that you can use for teaching models of the next level not predictions of previous ones, but probabilities of classes that are obtained on models of the first level, as well as a great idea that you can look at signs are like strings. Coupled with the correlation diagrams that I received at the very beginning of the contest, it opened my eyes to what else can be done to improve the quality. In particular, I tried to present binary signs as a random walk, tried to use the symmetry of signs, the number of shifts 0-1, the number of continuous 0 or 1, their maximum / median / average length, and also I tried to present the original signs in the form of text documents consisting from the words 0, 1, added new signs indicating the number of the sign on which a continuous sequence of identical values is interrupted. I was thinking of going further and adding TF-IDF signs for different combinations of words, but time was running out, and I decided to stop at what had already happened.

The resulting model looked like this:

For 90% of the data (with the added features described above) I trained seven models (presented below), for the remaining 10% of the data I received predictions of the class probability from the first level models and on these new signs I taught second level models, including svm , randomForest and a small neural network. I pledged second-tier models with the help of majority voting. ”

As you can see, two of the three finalists admitted that they had little experience in solving machine learning problems before the contest. This means that the competition dates and open resources provide you with an opportunity not only to sort out and propose your solution, but also to show a decent result - there would be only desire and perseverance.

***

This year we are holding the competition again! At this time, a new task was prepared for the participants, and the winners are expected not only with respect and respect, but also with useful prizes (iPod shuffle and 500 GB external hard drives), as well as the opportunity to join the Mail.Ru Group team! The scope of application of machine learning algorithms is constantly expanding, now they are actively used in advertising services, antispam and social networks. We are interested in machine learning specialists and we want people to know what it is and how to use it. Competitions like ML Boot Camp are a great opportunity not only to read an abstractly incomprehensible theory, but to immediately run it on tasks that are close to real ones. This is a reason to finally engage in machine learning for those who have always been interested in this, but hesitated in indecision, and the chance for experienced users to show their high level. Join us at ML Boot Camp!

The ML Boot Camp Championship is one of the Mail.Ru Group initiatives aimed at the development of the Russian IT industry and united by the IT.Mail.Ru resource. The IT.Mail.Ru platform is designed for those who are keen on IT and are seeking to develop professionally in this area. Also, the project combines the championships of the Russian Ai Cup, Russian Code Cup and Russian Developers Cup, educational projects Technopark in MSTU. Bauman, Technosphere at Moscow State University. MV Lomonosov and Tehnotrek MIPT. In addition, using IT.Mail.Ru, you can use tests to test your knowledge of popular programming languages, learn important news from the IT world, visit or watch broadcasts from specialized events and lectures on IT topics.

Source: https://habr.com/ru/post/302674/

All Articles