Dell Compellent Storage QoS

A typical problem with virtually any storage system is a drop in performance caused by abnormal activity on one of the logical volumes. One noisy “neighbor” makes every consumer of the array's resources suffer, and the resulting slowdown can have serious consequences. Bursts of activity can come from a legitimate periodic load (report generation, for example) or from an accidental mistake made by developers on test storage. In small infrastructures, where storage was deployed on a “one system, one task” basis, this is not much of a problem. Today, however, we are usually dealing with the consolidation of various services, and the active use of virtualization only reinforces this trend.

The solution has been known for many years: quality of service (QoS) management, which lets you set priorities for different types of workload. Just a few years ago the technology was usually available only for “large” storage systems as a separately licensed product. Now more and more storage systems can manage QoS even at the entry level, often at no extra cost. Dell Compellent systems gained QoS management with the release of Storage Center OS (SC OS) version 7. The upgrade to SC OS 7 is currently available only for SC9000 series storage systems, but the new version should soon be usable on other Compellent systems as well.

When QoS control is enabled in DSM, a latency threshold setting becomes available. When latency reaches this value, the system starts to “take action” to improve the situation. The basic settings are usually enough to protect against periodic load spikes on individual volumes, so you should always start by adjusting the system-wide parameters and move on to fine tuning only if that does not help. The target latency does not have to be picked out of thin air: DSM shows the accumulated IO Usage statistics, and you can base the QoS settings on them. This matters, because with a threshold that is too high the delays may already be hurting applications while QoS stays idle, since the system believes everything is still fine. A threshold that is too low cannot be met without forcibly reducing the load, which also hurts performance.
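One possible way to turn accumulated latency statistics into a starting threshold is sketched below. This is only an illustration, not something DSM does for you: the percentile-plus-headroom rule, the function names and the sample values are all assumptions, and the samples would have to be exported from the IO Usage statistics by hand.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a list of numbers (pct is 1..100)."""
    if not samples:
        raise ValueError("no samples given")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def suggest_latency_threshold(samples_ms, pct=95, headroom=1.2):
    """Hypothetical rule of thumb: a high percentile of the latency seen
    under normal load, plus some headroom so ordinary peaks do not
    trigger throttling."""
    return percentile(samples_ms, pct) * headroom


# Hypothetical latency samples in milliseconds, taken during normal operation.
normal_load_ms = [4, 5, 5, 6, 6, 7, 7, 8, 9, 12]
print(suggest_latency_threshold(normal_load_ms))
```

The value obtained this way is only a first guess. It still has to be checked against what the applications can tolerate, for the reasons given above.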

In configurations with mixed disk types (SSD + HDD), choose the latency threshold very carefully. SSD and HDD latencies differ greatly, and a “system-wide” setting can reduce the performance of volumes on SSD even though the root cause is excessively active volumes located on HDD.

To prevent such situations, and to manage individual volumes more precisely, use individual QoS profiles for logical volumes.

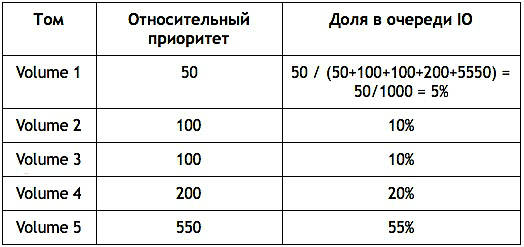

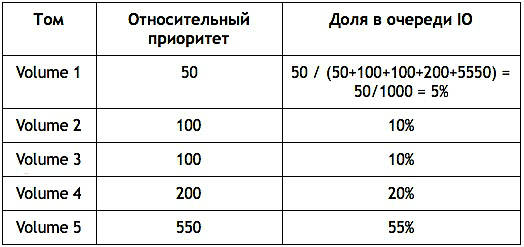

An important parameter configured as part of QoS management is relative volume priority. It determines which volumes are throttled, and in what proportion, when the system-wide threshold is reached. In addition to the predefined values (Low = 50, Medium = 100 and High = 200), you can set arbitrary priorities from 1 to 1000.

Prioritization works quite simply: each volume receives a share of the I/O queue proportional to its priority. Consider an example with 5 volumes whose priorities are 50, 100, 100, 200 and 550. When system latency exceeds the configured threshold, the storage system tries to reduce the load and divides the I/O queue among the volumes in proportion to these values. The priorities add up to 1000, so the shares come to 5%, 10%, 10%, 20% and 55%.

However, if one of the volumes is not loaded at that moment and does not use its share, its performance does not drop, and the free resources are distributed among the volumes that need them. This approach keeps the resources of the storage system fully utilized and ensures maximum performance.
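The arithmetic behind this can be sketched in a few lines of Python. This is a simplified model, not the actual Storage Center algorithm: the function names, the volume names and the idea of a single numeric “capacity” to divide are assumptions made for illustration.

```python
def queue_shares(priorities):
    """Fraction of the I/O queue per volume, proportional to its priority."""
    total = sum(priorities.values())
    return {name: p / total for name, p in priorities.items()}


def distribute(capacity, priorities, demand):
    """Split capacity by priority. Whatever a volume does not need is
    handed on to the volumes that still want more."""
    allocation = {name: 0.0 for name in priorities}
    active = {name for name in priorities if demand[name] > 0}
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total = sum(priorities[name] for name in active)
        satisfied = set()
        handed_out = 0.0
        for name in active:
            offer = remaining * priorities[name] / total
            need = demand[name] - allocation[name]
            grant = min(offer, need)
            allocation[name] += grant
            handed_out += grant
            if need <= offer:
                satisfied.add(name)  # this volume needs nothing more
        remaining -= handed_out
        if not satisfied:
            break  # everyone took their full offer, nothing left to re-split
        active -= satisfied
    return allocation


# Hypothetical volume names with the priorities from the example above.
priorities = {"vol1": 50, "vol2": 100, "vol3": 100, "vol4": 200, "vol5": 550}
print(queue_shares(priorities))

# vol5 is idle, so its 55% is re-split among the other four volumes.
demand = {"vol1": 10000, "vol2": 10000, "vol3": 10000, "vol4": 10000, "vol5": 0}
print(distribute(10000, priorities, demand))
```

With `vol5` idle, the remaining volumes divide the whole capacity in the ratio 50 : 100 : 100 : 200, so no resources are left unused.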

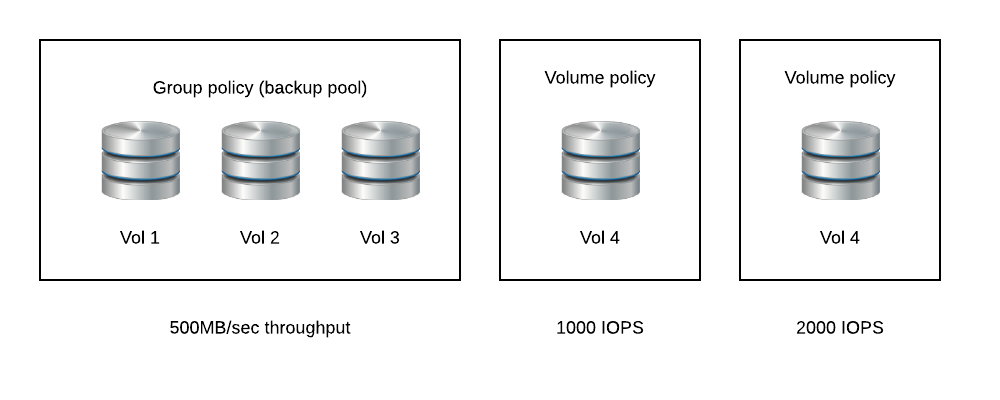

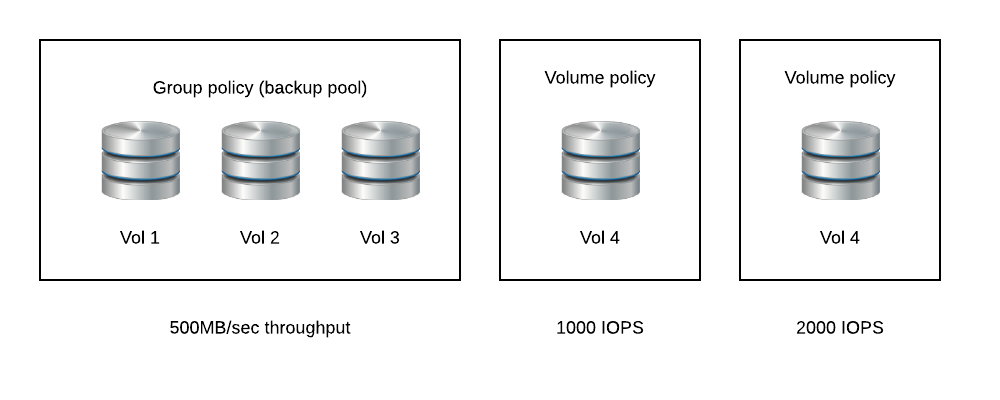

When prioritization is no longer enough, it is time to limit the number of I/O operations per second (IOPS) and the throughput (MB/s) of individual volumes.

Here, in addition to individual profiles, you can also configure group profiles, which makes it possible to impose limits on particular consumers (software systems). The figure shows an example in which limits of 1000 and 2000 IOPS are assigned to individual volumes, and a group limit of 500 MB/s is assigned to a group of volumes in the backup pool. These 500 MB/s can be divided among the three volumes in the group in any ratio, but their combined throughput will not exceed the group limit. This is convenient because it lets you control the load on the system in terms of the resources allotted to each consumer.
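To make the “any ratio” point concrete, here is a small model of a group limit. It is only a sketch: the `GroupProfile` class, its fields and the volume names are invented for the example and do not correspond to objects in DSM or the SDK.

```python
from dataclasses import dataclass, field


@dataclass
class GroupProfile:
    """Hypothetical stand-in for a group QoS profile with a throughput cap."""
    name: str
    max_mbps: float
    members: list = field(default_factory=list)


def group_usage(profile, current_mbps):
    """Combined throughput of all volumes in the group."""
    return sum(current_mbps.get(vol, 0.0) for vol in profile.members)


def group_headroom(profile, current_mbps):
    """Throughput the group may still consume before hitting its cap."""
    return max(profile.max_mbps - group_usage(profile, current_mbps), 0.0)


def is_over_limit(profile, current_mbps):
    """True if the group as a whole exceeds its cap."""
    return group_usage(profile, current_mbps) > profile.max_mbps


# Three hypothetical backup volumes sharing one 500 MB/s limit.
backup = GroupProfile("backup", 500.0, ["bk1", "bk2", "bk3"])

# One busy volume may take almost everything...
print(group_headroom(backup, {"bk1": 450.0}))
# ...or the same 500 MB/s may be spread across all three.
print(group_headroom(backup, {"bk1": 300.0, "bk2": 150.0, "bk3": 50.0}))
```

The limit applies to the sum, so it does not matter which volume in the group generates the load.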

QoS in SC is implemented by forcibly inserting delays between I/O operations when resource consumption exceeds the configured limits. Although the settings let you specify a throughput limit, in practice it is always the number of I/O operations that is limited: the storage system itself calculates the IOPS limit from the block size in use.
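The conversion itself is simple arithmetic and can be sketched as follows. The function names and the block sizes are assumptions chosen for illustration, 1 MB is taken to be 1024 KB, and the exact calculation inside Storage Center may differ.

```python
def iops_limit_from_bandwidth(limit_mb_per_s, block_size_kb):
    """Translate a throughput cap into the equivalent IOPS cap
    for a given block size (assuming 1 MB = 1024 KB)."""
    if block_size_kb <= 0:
        raise ValueError("block size must be positive")
    return limit_mb_per_s * 1024 / block_size_kb


def min_gap_between_ios_us(iops_limit):
    """Average spacing between I/O operations, in microseconds,
    needed to stay at the given IOPS cap."""
    if iops_limit <= 0:
        raise ValueError("IOPS limit must be positive")
    return 1_000_000 / iops_limit


# The same 500 MB/s cap at several hypothetical block sizes.
for block_kb in (4, 64, 512):
    iops = iops_limit_from_bandwidth(500, block_kb)
    print(block_kb, iops, min_gap_between_ios_us(iops))
```

For instance, a 500 MB/s limit with 64 KB blocks corresponds to 8000 IOPS, or one operation every 125 microseconds on average. The smaller the block, the higher the IOPS limit that matches the same throughput cap.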

Since Dell Compellent offers an SDK for management via PowerShell, load monitoring and QoS management can be automated and integrated into the monitoring and management systems already in use. The latest publicly available version of the SDK does not yet include the necessary commands, but hopefully an update is just around the corner.

The ability to manage QoS is a serious step forward and may tip the customer’s choice in favor of Compellent in a number of projects. The new features of SC OS 7 do not end there, and we will cover the other benefits in the near future.

A video demonstrating QoS management on the SC9000 is available on YouTube: youtu.be/1o18zTs9qdo

Visit Trinity's popular technical forum or order a consultation.

Trinity engineers will be happy to advise you on server virtualization, storage systems, workstations, applications and networks.

Other Trinity articles can be found on the Trinity blog and hub. Subscribe!

Source: https://habr.com/ru/post/301986/
