Syncookied: an open-source DDoS protection system

When our company, BeGet LTD, needed transparent filtering of attacks at OSI layer 4, we wrote our own solution, Syncookied. We would like to share it with the Internet community, since we have not found anything analogous (or we do not know about it). There are commercial solutions such as Arbor, F5 and SRX, but they are in a completely different price range and use other protection technologies.

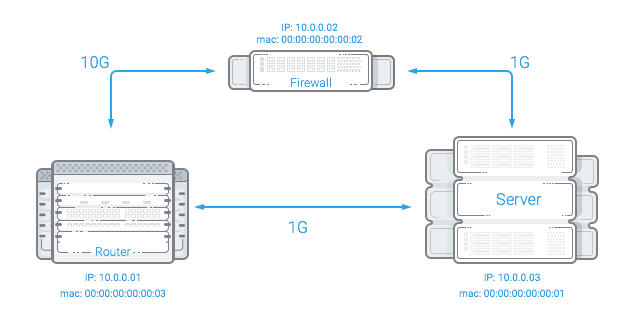

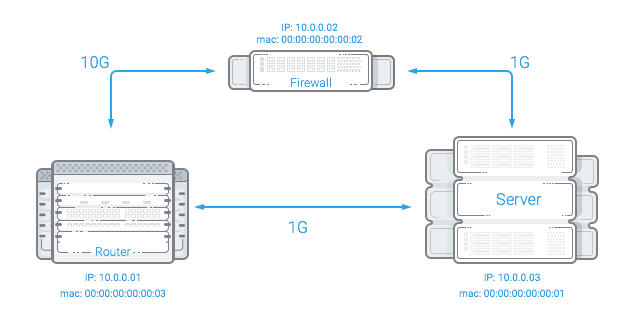

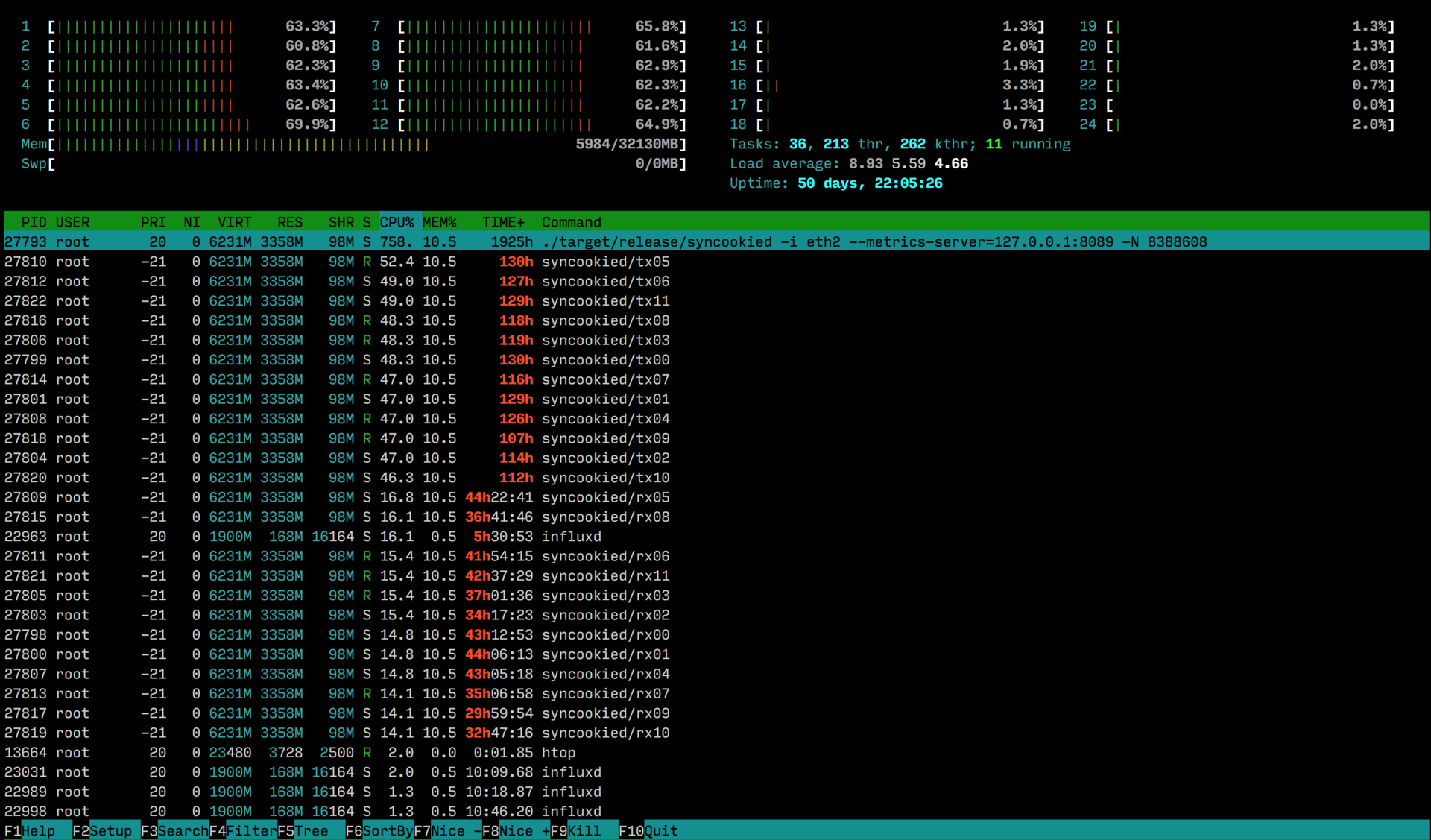

This article explains why we chose Rust and the netmap framework for development and what difficulties we ran into along the way.

» Github

» GitHub kernel module

» Project Page


Principle of operation

More information about how TCP works and about methods of protection against L4 DDoS attacks can be found on the project page. Still, this article needs to describe the scheme of operation and its advantages.

Syncookied is a logical continuation of SYN cookie technology: SYN cookie handling is moved out of the operating system kernel onto a separate server. To do this, we wrote a kernel module and a daemon that communicates with the firewall, transmits the secret key, synchronizes timestamps, and enables SYN cookies on the server if necessary. In other words, the protected server runs a kernel module, which tricks the kernel into thinking that it sent the SYN cookie itself and creates the file /proc/tcp_secrets, and a daemon, which sends the data from this file to the firewall.

The scheme of operation involves the following components:

- Router: the router that processes incoming traffic.

- Firewall: the server on which the filtering system is running.

- Protected server: the server whose incoming traffic needs to be filtered.

In the normal state, the router and the protected server communicate directly. When an attack is detected, a static binding of the server's IP address to the firewall's MAC address is configured on the router, after which all traffic destined for the protected server goes to the firewall. When the firewall receives a SYN packet, it replies with a SYN-ACK on behalf of the protected server: from the server's IP address and, more importantly, with the protected server's SYN cookie (the firewall has the server's secret key and timestamp). When an ACK packet with a cookie arrives, the firewall checks its validity. If the cookie is valid, the firewall rewrites the destination MAC address to that of the protected server and sends the packet back to the network; otherwise it drops the packet. The protected server, on receiving the ACK packet, opens the connection and sends outgoing packets directly to the router.
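The cookie check described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the names `Flow`, `make_cookie` and `check_ack` are hypothetical, and the hash is the standard library's `DefaultHasher`, not the algorithm used by the Linux kernel or by Syncookied. It only shows the idea that a firewall holding the same secret and time counter as the server can issue and verify cookies without keeping per-connection state.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};
use std::net::Ipv4Addr;

/// Connection 4-tuple as seen in the client's packets.
#[derive(Clone, Copy, Hash)]
pub struct Flow {
    pub src: Ipv4Addr,
    pub dst: Ipv4Addr,
    pub sport: u16,
    pub dport: u16,
}

/// What the firewall does with an incoming ACK.
#[derive(Debug, PartialEq)]
pub enum Verdict {
    /// Rewrite the destination MAC and pass the packet to the protected server.
    Forward,
    /// The cookie does not match: drop the packet.
    Drop,
}

/// Toy cookie: a keyed hash of the flow and a coarse time counter,
/// offset by the client's initial sequence number.
/// This is NOT the Linux algorithm; it is an illustrative stand-in.
pub fn make_cookie(secret: u64, flow: &Flow, counter: u32, client_isn: u32) -> u32 {
    let mut h = DefaultHasher::new();
    secret.hash(&mut h);
    flow.hash(&mut h);
    counter.hash(&mut h);
    (h.finish() as u32).wrapping_add(client_isn)
}

/// Validate the ACK that completes the handshake.
/// `seq` and `ack` are the sequence and acknowledgement numbers of that ACK.
pub fn check_ack(secret: u64, flow: &Flow, now: u32, seq: u32, ack: u32) -> Verdict {
    let client_isn = seq.wrapping_sub(1); // the SYN consumed one sequence number
    let presented = ack.wrapping_sub(1); // the ACK acknowledges cookie + 1
    // Accept cookies issued in the current or the previous time slot.
    for age in 0..2u32 {
        if make_cookie(secret, flow, now.wrapping_sub(age), client_isn) == presented {
            return Verdict::Forward;
        }
    }
    Verdict::Drop
}
```

Because the verdict depends only on the secret, the time counter and the packet itself, the check can run on a machine other than the one that will own the connection, which is what makes the transfer of the secret key and timestamp to the firewall sufficient.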

On the server, as in SynProxy, connections are tracked (the algorithm is described in more detail on the project page), which also allows filtering other invalid packets: RST, data, SYN+ACK, ACK and so on.

The main differences from similar systems:

- Asynchronous traffic filtering: the system can be installed near border routers regardless of the return path of packets, that is, at the points with the widest channels.

- Syncookied does not break connections when protection is disabled, because, unlike SynProxy, it does not change the packets' SEQ numbers. Disabling protection consists of removing the static binding of the MAC address to the protected server's IP address on the router, after which traffic goes directly to the protected server without passing through the firewall.

- The solution is open, scalable, and able to run on fairly inexpensive hardware without noticeable delays.

- The system is written in Rust, with assembler for the performance-critical operations.

Additional filters can be specified in the host configuration; the filters are written in pcap format.

This filtering system is not suitable for everyone, since its advantages also imply disadvantages:

- This is not a solution for installation on a protected server or a solution for protecting a single server.

- It is assumed that you have access to the protected servers and that they run Linux (needed to install the kernel module).

Implementation

Initially the system was written in C++. After building a working prototype and testing the viability of the idea, we decided to rewrite everything in Rust, partly to simplify the code and partly to minimize the risk of shooting ourselves in the foot when working with memory. Since all resource-intensive operations were implemented in assembler, a slight loss of performance was not critical. To work with the network card we chose the netmap framework (there are several articles describing it on Habr) as one of the simplest to use and learn (though, as it turned out, not without problems).

Program architecture

The number of running threads is tied to the number of interrupts generated by the network card for its incoming and outgoing queues. Between the threads for incoming and outgoing traffic there are two queues: one for forwarded traffic and one for traffic that needs a reply. The incoming-traffic threads take packets from the network card, analyze their headers and, based on the filtering rules, do one of three things:

- drop the packet;

- send the packet to the forwarding queue;

- send the packet to the reply queue.

The threads that send packets to the network wait for a signal from the network card that space has appeared in the send queue, then poll the forwarding queue and, if it is empty, poll the reply queue, build a SYN+ACK response and send it to the network.

Forwarded valid packets get the minimum delay; packets that need a reply are handled with lower priority.
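The RX classification and the TX priority rule can be modelled in a few lines. This is a single-threaded sketch under stated assumptions: `Packet`, `Action`, `Queues` and the classification rule are hypothetical simplifications, and plain `VecDeque`s stand in for the inter-thread queues of the real system.

```rust
use std::collections::VecDeque;

/// Result of applying the filtering rules to one incoming packet.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Action {
    Drop,
    Forward,
    Reply,
}

/// Minimal stand-in for a parsed packet (hypothetical fields).
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Packet {
    pub syn: bool,
    pub ack: bool,
    pub cookie_ok: bool,
}

/// Simplified rule set: answer SYNs, forward ACKs with a valid cookie,
/// drop everything else.
pub fn classify(p: &Packet) -> Action {
    match (p.syn, p.ack) {
        (true, false) => Action::Reply,
        (false, true) if p.cookie_ok => Action::Forward,
        _ => Action::Drop,
    }
}

/// The two queues between the RX side and the TX side.
pub struct Queues {
    pub forward: VecDeque<Packet>,
    pub reply: VecDeque<Packet>,
}

impl Queues {
    pub fn new() -> Self {
        Queues { forward: VecDeque::new(), reply: VecDeque::new() }
    }

    /// RX side: classify one packet and enqueue it if it is not dropped.
    pub fn rx(&mut self, p: Packet) {
        match classify(&p) {
            Action::Drop => {}
            Action::Forward => self.forward.push_back(p),
            Action::Reply => self.reply.push_back(p),
        }
    }

    /// TX side: called when the NIC reports `slots` free send slots.
    /// The forwarding queue is always drained first; the reply queue is
    /// polled only when the forwarding queue is empty.
    pub fn tx(&mut self, slots: usize) -> Vec<(Action, Packet)> {
        let mut out = Vec::with_capacity(slots);
        while out.len() < slots {
            if let Some(p) = self.forward.pop_front() {
                out.push((Action::Forward, p));
            } else if let Some(p) = self.reply.pop_front() {
                out.push((Action::Reply, p));
            } else {
                break;
            }
        }
        out
    }
}
```

Draining the forwarding queue first means that traffic of already validated clients is delayed as little as possible, while SYN+ACK replies use whatever send capacity is left.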

There was an idea to use separate processes for handling the reply queue, but practice has shown that this is not needed on modern processors and decent network cards, since even at the maximum theoretical load the cores are not 100% loaded. Besides, additional queues would slow processing down.

Problems we encountered in netmap

The library turned out to be less perfect than we thought. Initially the prototype implemented a filtering system for the local server. It turned out that netmap uses only one inbound and one outbound queue to communicate with the kernel's network subsystem, which created certain performance difficulties.

For the incoming queue, the NS_FORWARD flag was implemented to automatically forward packets from the NIC to the host ring, but for the outgoing direction this was not implemented. For the TX queue it is quite simple to add:

Patch to add NS_FORWARD

    diff --git a/sys/dev/netmap/netmap.c b/sys/dev/netmap/netmap.c
    index c1a0733..2bf6a26 100644
    --- a/sys/dev/netmap/netmap.c
    +++ b/sys/dev/netmap/netmap.c
    @@ -548,6 +548,9 @@ SYSEND;
     NMG_LOCK_T netmap_global_lock;
    +
    +static inline void nm_sync_finalize(struct netmap_kring *kring);
    +
     /*
      * mark the ring as stopped, and run through the locks
      * to make sure other users get to see it.
    @@ -1158,6 +1161,14 @@ netmap_sw_to_nic(struct netmap_adapter *na)
                 rdst->head = rdst->cur = nm_next(dst_head, dst_lim);
             }
             /* if (sent) XXX txsync ? */
    +        if (sent) {
    +            if (nm_txsync_prologue(kdst, rdst) >= kdst->nkr_num_slots) {
    +                netmap_ring_reinit(kdst);
    +            } else if (kdst->nm_sync(kdst, NAF_FORCE_RECLAIM) == 0) {
    +                nm_sync_finalize(kdst);
    +            }
    +            printk(KERN_ERR "Synced %d packets to NIC ring %d", sent, i);
    +        }

Later we stopped using the host ring altogether.

For testing we used two Intel cards, the X520 and the X710. The X520-based card started up without any problems, but it allocates only 4 bits for interrupts, giving a maximum of 16 interrupts even though the driver can show more. The X710 already allocates 8 bits for interrupts and has virtualization and many other interesting features. The X710 had to be reflashed, since Intel did not bother to add support for third-party SFP+ modules via a kernel module option, as is done for the X520 cards:

    insmod ./ixgbe/ixgbe.ko allow_unsupported_sfp=1

The card was reflashed according to the instructions below:

- Download github.com/terpstra/xl710-unlocker.

- Substitute your PCI values in mytool.c

- Compile

- Run ./mytool 0 0x8000

- Find where this is repeated 4 times:

      00006870 + 00 => 000b
      00006870 + 01 => 0022 = external SFP+
      00006870 + 02 => 0083 = int: SFI, 1000BASE-KX, SGMII (???)
      00006870 + 03 => 1871 = ext: 0x70 = 10GBASE-{SFP+,LR,SR} 0x1801=crap?
      00006870 + 04 => 0000
      00006870 + 05 => 0000
      00006870 + 06 => 3303 = SFP+ copper (passive, active), 10GBase-{SR,LR}, SFP
      00006870 + 07 => 000b
      00006870 + 08 => 2b0c *** This is the important register *** (0xb=bits 3+1+0 = enable qualification (3), pause TX+RX capable (1+0), 0xc = 3+2 = 10GbE + 1GbE)
      00006870 + 09 => 0a00
      00006870 + 0a => 0a1e = default LESM values
      00006870 + 0b => 0003

- Put the offset and the value into mypoke.c

- Compile and run (it hangs for a long time, which seems to be normal)

- Run mytool and check that everything has changed

- Download

- Run nvmupdate64e -u from there (it also hangs for a long time)

- After that, either the link comes up or you have killed the card =)

We used a newer version of the driver (newer than the in-kernel one) from e1000.sf.net, with the netmap patch applied.

We will also leave a set of magic workarounds here. Our tests showed that the following settings give optimal performance:

    iommu=off
    netmap.ko no_timestamp=1
    ixgbe.ko InterruptThrottleRate=9560,9560 RSS=12,12 DCA=2,2 allow_unsupported_sfp=1,1 VMDQ=0,0 AtrSampleRate=0,0 FdirPballoc=0,0 MDD=0,0

The problems we have overcome in Rust

Rust as a programming language saved us from many difficulties and significantly sped up development (if anyone wants to rewrite it in C++, that would not be hard), but it also brought a number of specific performance problems, which we successfully fought for a month. During development we sent 15 pull requests, 12 of which were accepted.

In the first implementation we reached 5M packets per second on 16 cores. At about the same time, Google engineer Eric Dumazet wrote to the kernel mailing list that he had fixed everything and got the vanilla kernel to process 6M packets. As the saying goes:

Main performance issues we encountered:

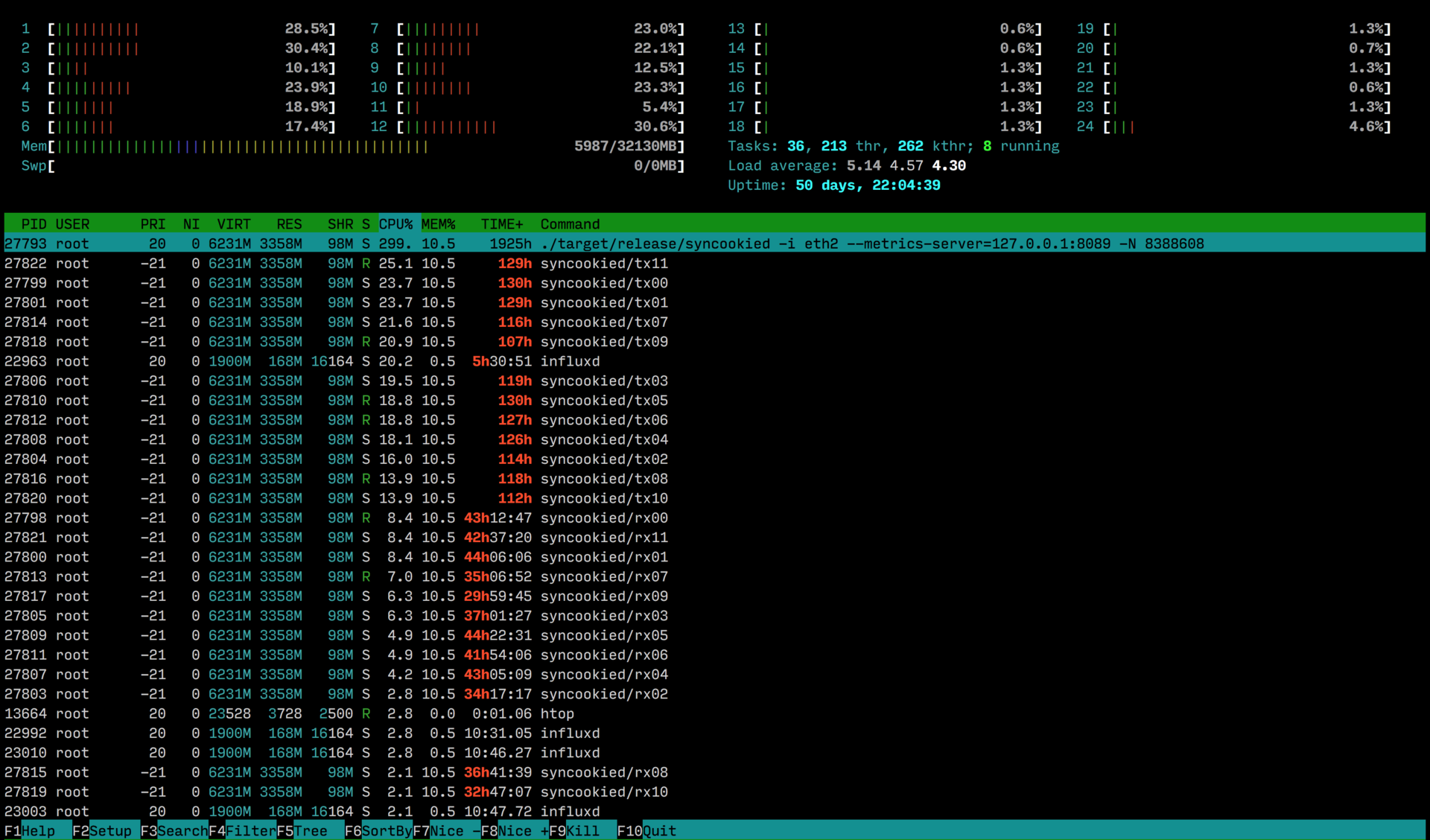

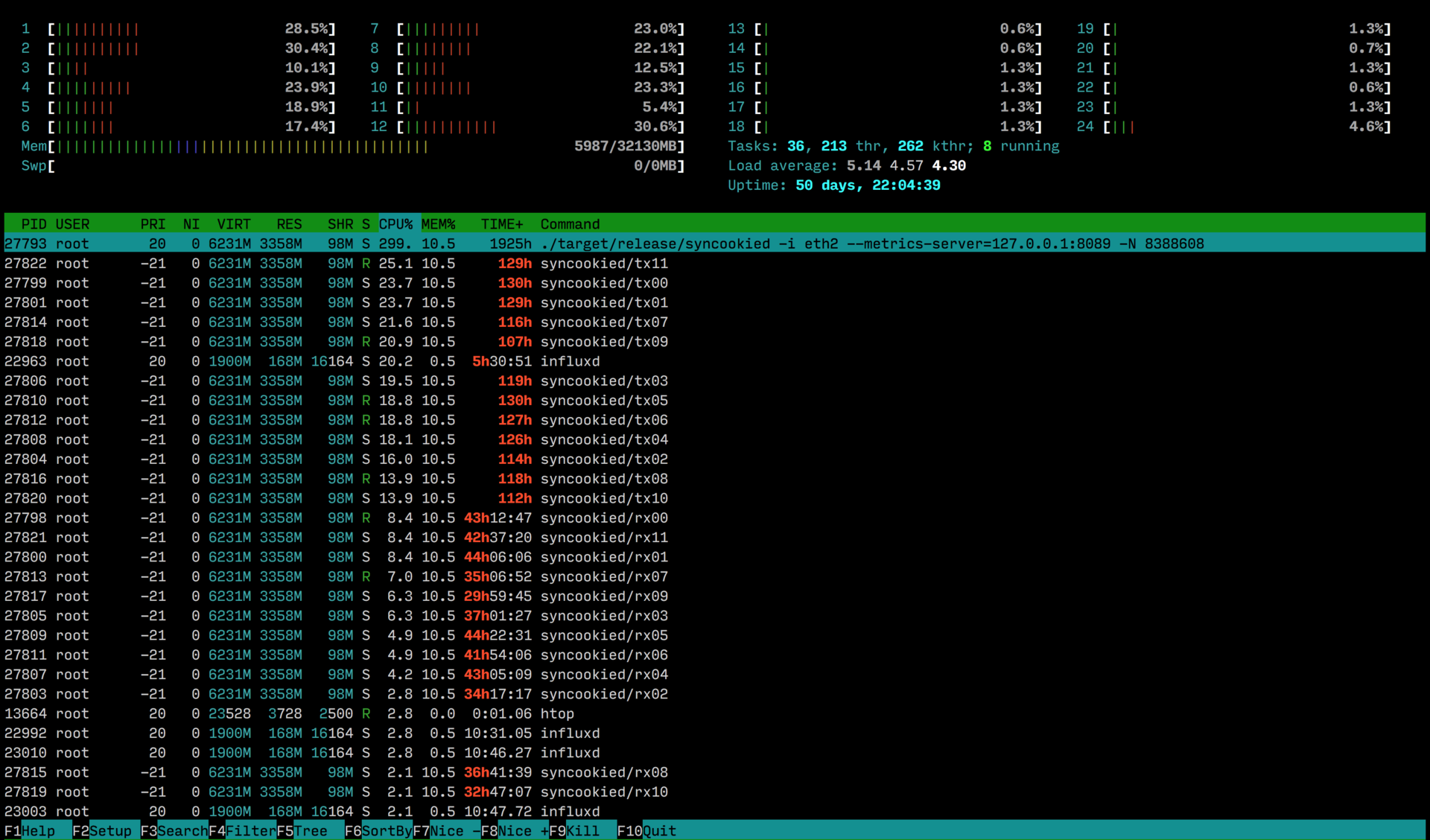

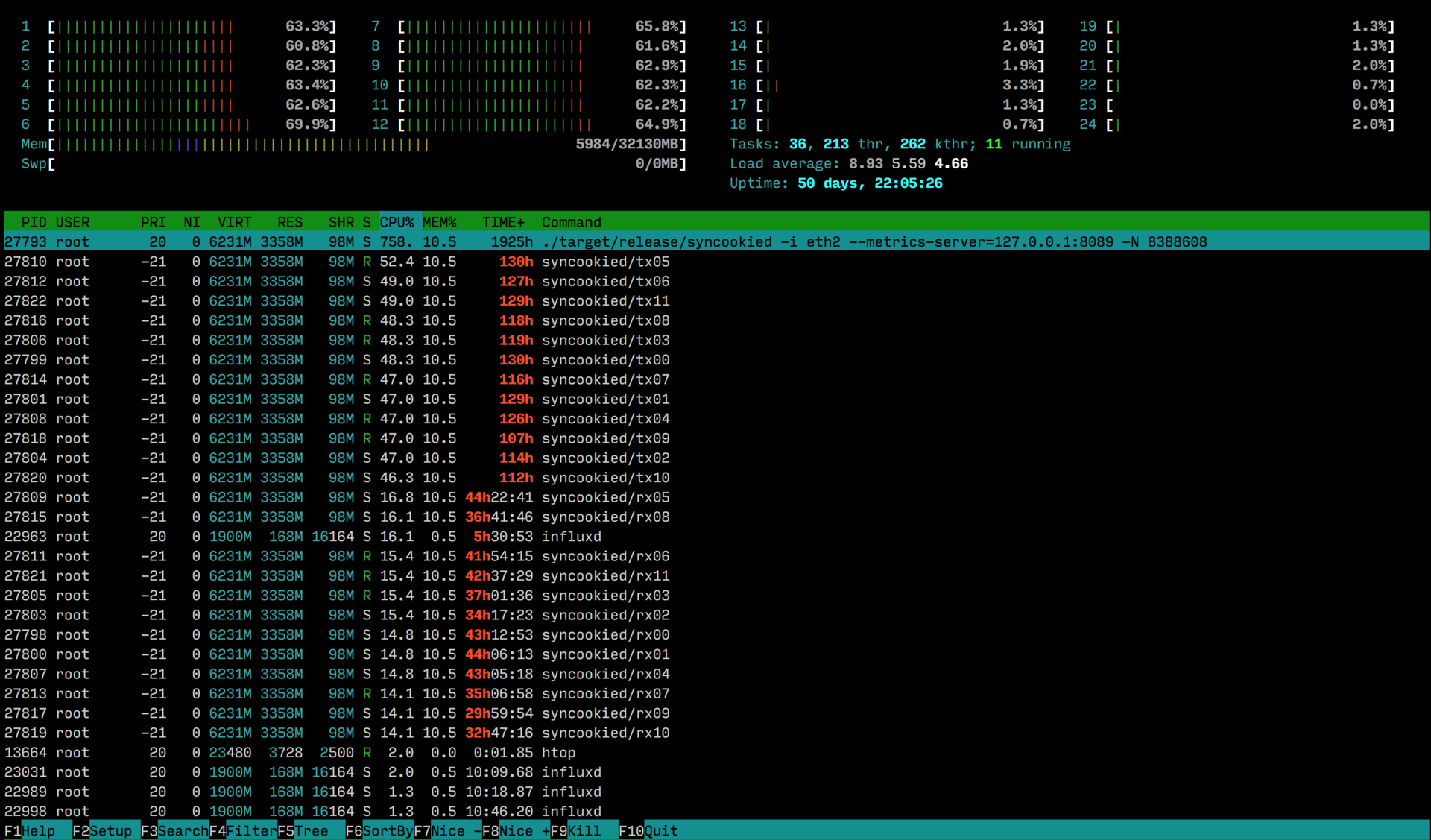

Netmap generic driver

Initially we used the generic driver, but it was not the best choice for performance. With the generic driver, packets still pass through the kernel's network stack, which is rather sad: ksoftirqd keeps showing up in red in htop.

The solution is to use native drivers, that is, special drivers with netmap hooks added.

A newer version of the driver (newer than the in-kernel one) from e1000.sf.net, with the netmap patch applied:

github.com/polachok/i40e-netmap/tree/master

Using Host Ring

With the native drivers we faced a new problem: they did not work. We had to dig into the source code and read the documentation, and it turned out that the drivers work and it is the host ring that does not. The host ring has two problems: it does not work with native drivers, and it has only one queue, so even if the network card has 16 queues, the host ring has one. This creates additional synchronization and locking problems. We found the right solution for this situation: do not use the host ring =) and send packets back to the network instead. Alternatively, tap devices could be used, since they support multiqueue, but we have not implemented this yet.

Locks

After abandoning the host ring and giving up on installing the software on each server (as we originally wanted), it turned out that we needed support for several protected hosts in one daemon, a quick config reload without losing state, and other pleasant trifles. We tried to keep it simple and wrapped a global structure in a mutex, and performance dropped to 3M packets. On investigation it turned out that std::sync::Mutex from the Rust standard library is just a wrapper around pthread mutexes, which work rather slowly here, since a contended lock has to go to the kernel and switch context. We found a post in which people from WebKit described more efficient locks; the parking_lot library is based on that post. In parking_lot, locks are organized somewhat differently: they are adaptive, first trying to spin (as a spinlock) several times and only then going to the kernel, and they are smaller, because the list of threads blocked on a lock is stored separately. It became a little better, but not by much.

We decided to use thread-local storage. Each thread can have its own storage, which it can access without locking. We created a global configuration that is copied into thread-local storage once every 10 seconds. The result is an almost complete absence of locks: they still exist, of course, but they are spread out over time and are not really noticeable.
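A minimal sketch of this pattern with the standard library only is shown below. It is an illustration under assumptions, not the project's code: the `Config` type, its `protected_hosts` field, and the functions `set_global` and `local_config` are hypothetical names, and only the 10-second refresh interval is taken from the text.

```rust
use std::cell::RefCell;
use std::sync::{Arc, Mutex};
use std::time::{Duration, Instant};

/// Hypothetical configuration shared by all worker threads.
#[derive(Debug, Default, Clone, PartialEq)]
pub struct Config {
    pub protected_hosts: Vec<String>,
}

/// Global configuration behind a mutex. It is locked only on reload
/// and once per refresh interval by each thread.
static GLOBAL: Mutex<Option<Arc<Config>>> = Mutex::new(None);

/// How long a thread keeps using its cached copy.
const REFRESH: Duration = Duration::from_secs(10);

thread_local! {
    /// Per-thread cache: when it was fetched, and the cached configuration.
    static LOCAL: RefCell<Option<(Instant, Arc<Config>)>> = RefCell::new(None);
}

/// Install a new global configuration (for example, on config reload).
pub fn set_global(cfg: Config) {
    *GLOBAL.lock().unwrap() = Some(Arc::new(cfg));
}

/// Return this thread's view of the configuration. The global mutex is
/// touched only when the cached copy is missing or older than REFRESH.
pub fn local_config() -> Arc<Config> {
    LOCAL.with(|cell| {
        let mut slot = cell.borrow_mut();
        let stale = match slot.as_ref() {
            Some((fetched, _)) => fetched.elapsed() >= REFRESH,
            None => true,
        };
        if stale {
            let fresh = GLOBAL.lock().unwrap().clone().unwrap_or_default();
            *slot = Some((Instant::now(), fresh));
        }
        slot.as_ref().unwrap().1.clone()
    })
}
```

The trade-off is visible in the sketch: a reload may take up to the refresh interval to reach every thread, but the per-packet path never waits on a lock held by another thread.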

Channels

Another problem surfaced: Rust has std::sync::mpsc (channels), which are roughly the same as channels in Go. We use them to pass packets from the receiving threads to the sending threads. In essence, such a channel is an array behind a mutex, and in the context of our story "mutex" is a sad word. We took the channels and ported them to parking_lot: github.com/polachok/mpsc.

It got a little better. mpsc stands for multiple producer, single consumer; in our case multiple producers are not needed, since packets from one RX thread go to one TX thread.

We found a library with the functionality we needed, bounded-spsc-queue. Things got much better: the locks were gone and only atomic operations remained. However, it turned out that packets were being copied, so we had to modify the library a little.
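To show why a single-producer, single-consumer queue needs no locks, here is a small ring buffer built only on atomics. It is not the bounded-spsc-queue implementation: `SpscRing` is a hypothetical type that stores plain `u64` values (think of them as packet buffer indices) so that the sketch can stay in safe Rust, and it is correct only if exactly one thread calls `push` and exactly one thread calls `pop`.

```rust
use std::sync::atomic::{AtomicU64, AtomicUsize, Ordering};

/// Bounded single-producer, single-consumer ring of u64 handles.
pub struct SpscRing {
    slots: Vec<AtomicU64>,
    mask: usize,
    head: AtomicUsize, // next position to read; written only by the consumer
    tail: AtomicUsize, // next position to write; written only by the producer
}

impl SpscRing {
    /// Capacity must be a power of two so that positions can be masked.
    pub fn with_capacity(cap: usize) -> Self {
        assert!(cap.is_power_of_two());
        SpscRing {
            slots: (0..cap).map(|_| AtomicU64::new(0)).collect(),
            mask: cap - 1,
            head: AtomicUsize::new(0),
            tail: AtomicUsize::new(0),
        }
    }

    /// Producer side. Returns false if the ring is full.
    pub fn push(&self, value: u64) -> bool {
        let tail = self.tail.load(Ordering::Relaxed);
        let head = self.head.load(Ordering::Acquire);
        if tail.wrapping_sub(head) == self.slots.len() {
            return false;
        }
        self.slots[tail & self.mask].store(value, Ordering::Relaxed);
        // Release publishes the slot write before the new tail becomes visible.
        self.tail.store(tail.wrapping_add(1), Ordering::Release);
        true
    }

    /// Consumer side. Returns None if the ring is empty.
    pub fn pop(&self) -> Option<u64> {
        let head = self.head.load(Ordering::Relaxed);
        let tail = self.tail.load(Ordering::Acquire);
        if head == tail {
            return None;
        }
        let value = self.slots[head & self.mask].load(Ordering::Relaxed);
        // Release tells the producer that the slot may be reused.
        self.head.store(head.wrapping_add(1), Ordering::Release);
        Some(value)
    }
}
```

Each index has exactly one writer, so the two sides never contend for a lock; they only observe each other's progress through acquire loads. Passing small handles instead of whole packets is also one way to avoid the copying mentioned above, although how the library was actually modified is not described here.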

Building the packet every time

The next problem: for every reply, the packet was built from scratch. This showed up in red in perf, so we optimized it by building a packet template once; for each reply we copy the template and replace the necessary fields. The performance gain was about 30%.
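The template idea can be sketched as follows. The types `SynAckTemplate` and `ReplyFields` are hypothetical, the frame is a plain Ethernet + IPv4 + TCP header without options, and the constant values (TTL, window) are arbitrary choices for the example. Checksums are deliberately left at zero here; real code has to compute them or rely on NIC offload.

```rust
/// 14 bytes Ethernet + 20 bytes IPv4 + 20 bytes TCP, no options.
pub const FRAME_LEN: usize = 54;

/// Per-reply values that differ from packet to packet.
pub struct ReplyFields {
    pub dst_mac: [u8; 6],
    pub src_mac: [u8; 6],
    pub src_ip: [u8; 4],
    pub dst_ip: [u8; 4],
    pub sport: u16,
    pub dport: u16,
    pub seq: u32,
    pub ack: u32,
}

/// SYN+ACK frame with all constant fields filled in once.
pub struct SynAckTemplate {
    bytes: [u8; FRAME_LEN],
}

impl SynAckTemplate {
    /// Build the constant part of the frame a single time.
    pub fn new() -> Self {
        let mut b = [0u8; FRAME_LEN];
        b[12..14].copy_from_slice(&[0x08, 0x00]); // EtherType: IPv4
        b[14] = 0x45; // IPv4, header length 5 words
        b[16..18].copy_from_slice(&40u16.to_be_bytes()); // IP total length
        b[22] = 64; // TTL (arbitrary for this sketch)
        b[23] = 6; // protocol: TCP
        b[46] = 0x50; // TCP data offset: 5 words
        b[47] = 0x12; // flags: SYN | ACK
        b[48..50].copy_from_slice(&65535u16.to_be_bytes()); // window (arbitrary)
        SynAckTemplate { bytes: b }
    }

    /// Copy the template into `out` and patch only the variable fields.
    /// Checksums are NOT computed in this sketch.
    pub fn fill(&self, out: &mut [u8; FRAME_LEN], f: &ReplyFields) {
        *out = self.bytes;
        out[0..6].copy_from_slice(&f.dst_mac);
        out[6..12].copy_from_slice(&f.src_mac);
        out[26..30].copy_from_slice(&f.src_ip);
        out[30..34].copy_from_slice(&f.dst_ip);
        out[34..36].copy_from_slice(&f.sport.to_be_bytes());
        out[36..38].copy_from_slice(&f.dport.to_be_bytes());
        out[38..42].copy_from_slice(&f.seq.to_be_bytes());
        out[42..46].copy_from_slice(&f.ack.to_be_bytes());
    }
}
```

The per-reply cost is then one fixed-size copy plus a handful of field writes, instead of assembling every header from scratch.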

Some of the more interesting pull requests:

libpnet

github.com/libpnet/libpnet/pull/178 - TCP protocol support

github.com/libpnet/libpnet/pull/181 - modifying a packet without allocation

github.com/libpnet/libpnet/pull/183 - reading a packet without allocations

github.com/libpnet/libpnet/pull/187 - ARP support

rust-lang

github.com/rust-lang/rust/pull/33891 - speeding up comparison of IP addresses

concurrent-hash-map

github.com/AlisdairO/concurrent-hash-map/pull/4 - adds support for custom hashing algorithms

bpfjit for Rust

github.com/polachok/bpfjit - bindings of the pcap JIT compiler for Rust

Full list of our pull requests

github.com/gobwas/influent.rs/pull/9

github.com/gobwas/influent.rs/pull/8

github.com/terminalcloud/rust-scheduler/pull/4

github.com/rust-lang/rust/pull/33891

github.com/libpnet/libpnet/pull/187

github.com/libpnet/libpnet/pull/183

github.com/libpnet/libpnet/pull/182

github.com/libpnet/libpnet/pull/181

github.com/libpnet/libpnet/pull/178

github.com/libpnet/netmap_sys/pull/10

github.com/libpnet/netmap_sys/pull/4

github.com/libpnet/netmap_sys/pull/3

github.com/AlisdairO/concurrent-hash-map/pull/4

github.com/ebfull/pcap/pull/56

github.com/polachok/xl710-unlocker/pull/1

Performance

When there is no traffic, syncookied polls the network card constantly to reduce latency, which creates a small load. This parasitic load decreases as the number of incoming packets grows.

The load was measured while filtering 12.755 Mpps (the theoretical limit on a 10-gigabit link for a packet size of 74 bytes plus the 4-byte Ethernet CRC).
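The 12.755 figure can be reproduced with simple arithmetic. On the wire, every Ethernet frame is preceded by an 8-byte preamble and followed by a 12-byte inter-frame gap, so a 78-byte frame occupies 98 bytes, or 784 bits, of link time. The helper below is a hypothetical name used only for this calculation; the 10 Gbit/s link speed is an assumption consistent with the 10-gigabit figure quoted in this section.

```rust
/// Per-frame overhead on the wire: 8-byte preamble (including the start
/// frame delimiter) plus 12-byte inter-frame gap.
pub const WIRE_OVERHEAD_BYTES: u64 = 8 + 12;

/// Maximum number of frames per second that fit on a link.
/// `frame_bytes` is the frame size including the 4-byte CRC.
pub fn line_rate_pps(link_bits_per_sec: u64, frame_bytes: u64) -> u64 {
    link_bits_per_sec / ((frame_bytes + WIRE_OVERHEAD_BYTES) * 8)
}
```

For example, `line_rate_pps(10_000_000_000, 74 + 4)` gives 12,755,102 packets per second, that is, about 12.755 Mpps.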

When UDP filtering or port/protocol rules are applied, the load is indistinguishable from the load in the absence of traffic.

In practice, 12 cores of an Intel Xeon E5-2680v3 processor can handle 10 gigabits of SYN/ACK/data flood traffic. One physical server can handle more than 40 gigabits of traffic.

Todo

At the moment we have almost finished deploying Syncookied across our entire hosting infrastructure, have repelled several not very strong attacks and are improving the product where we can. Plans: add out-of-sequence protection (we hope our colleagues will help us with this), finish the almost complete full-fledged SynProxy implementation, add nice metrics in Influx, and possibly add an auto mode for enabling Syncookied protection (similar to net.ipv4.tcp_syncookies = 1, where cookies are sent when too many SYN packets arrive).

Conclusion

We very much hope that our work will benefit the Internet community.

If DDoS protection companies published their work as open source, and every provider, administrator and client could use it, the problem of exploiting the Internet's imperfections would be solved much faster.

We tested our system on synthetic tests and weak DDoS attacks (as luck would have it, no serious attack has arrived in 4 months). For our tasks, the system turned out to be quite convenient. I would also like to note that the Rust project was implemented essentially by one person, the very talented programmer Alexander Polyakov, and in a short time: 5 weeks passed between finishing the C++ prototype that validated the idea and the working version.

» Source Code

» Kernel Module Source Code

» Project Page

Idea and prototype implementation: Alexey Manikin (redfenix)

Rust implementation: Alexander Polyakov (polachok)

Infrastructure creation and testing: Maxim Losev (moosy)

Source: https://habr.com/ru/post/301892/
