An event and response system, or the makings of Visual Scripting for Unity3D

Introduction

In my previous article, I presented ways to provide a “soft” coupling between the components of game logic, based on notifications and subscriptions to them. In general, such notifications can be sent for any action or event in a component's work, from a variable change to more complex things. Often, however, an event requires a series of actions that it makes little sense to delegate. The simplest example is sound design: an event occurs in a component and needs a sound. In the simplest case we call AudioSource.Play(); in a slightly more complex one, a wrapper function over the sound system. In principle there is nothing wrong with that if the project is small and the team has few people who combine many roles. But on a large project with several programmers and a sound designer, tuning the sounds in particular turns into a nightmare for the programmer. It is no secret that we try to abstract ourselves from content and do as little of its setup as possible, because it is better when the responsible specialists deal with it rather than us.

The example above applies not only to sounds but to many other things: animations, effects, actions on GUI elements, and so on. Together they are a big problem on relatively large projects. I ran into it many times in my work, and nearly all my solutions came down to making my own job easier, which is essentially a dead end, because designers were completely cut off from content management. In the end, it was decided to build a system that would let the responsible people manage such things themselves with minimal involvement from programmers. This article is about that system.

Events and responses

A short introduction

I think it is no secret to anyone what gameplay looks like from a programmer's point of view. Still, I will spell it out so that my point of view is clear to readers.

So, all the code we write to implement gameplay is, at its base, a set of components, each of which generates a number of events that occur in the game world and affect it or other components. In the simplest case, logic is split into components by grouping events according to their source: character, opponent, interface, interface window, and so on. However, events alone do not make gameplay; we also need actions (responses) performed in reply to them. For example, a character takes a step and generates the corresponding events, in response to which we need to play a sound, play a dust effect under the feet, and maybe even shake the camera. In a more complex case, the actions performed in response to events can themselves generate new events; I call this the “sound wave effect”. This is how the “surface” of our gameplay is formed, as a chain of events and responses.


In most cases the mechanism described above is built directly in code, but as noted earlier, a number of events can (and will) be tied to content that gives the game its liveliness and feel, the part artists and designers are responsible for. With the wrong project management process and the wrong approach to production, most of these tasks end up with the programmer. The artist gets angry, the programmer writes a “hack”, and everything is fine. That was good enough in the early days of “Russian game development”, but now we need quality, speed and flexibility, so I decided to go another way, and that way is tied to Unity Editor Extensions.

A couple of words about architecture

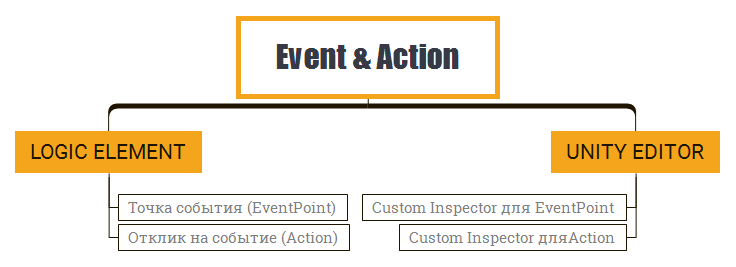

Below is a small block diagram showing the main elements necessary to implement the mechanism of events and responses, which will allow designers to customize certain elements of the game logic themselves.

So, the basic elements of the system are EventPoint (an event point in a component) and Action (a response to an event). Each of these elements needs a specialized editor for its configuration, which will be implemented through Editor Extensions, in our case through a custom inspector (more on this in a separate section).

In the basic scenario, each event point can trigger not one response but many, and each response can in turn be an event generator and contain event points of its own. In addition, a response must be able to work with the basic entities of the Unity3D engine.

Based on the above, I decided that the simplest option is to derive Action from MonoBehaviour and to make EventPoint part of a component's logic as a public field, serialized by the standard Unity3D mechanism.

Let us look at these elements in more detail.

EventPoint

    using System;
    using System.Collections.Generic;

    [Serializable]
    public class EventPoint
    {
        // Public so that Unity's standard serializer picks it up.
        public List<ActionBase> ActionsList = new List<ActionBase>();

        // Entry into the event point: runs every attached response in order.
        public void Occurrence()
        {
            foreach (var action in ActionsList)
            {
                action.Execute();
            }
        }
    }

As you can see from the code, everything is quite simple. EventPoint is essentially a list that stores the set of Actions tied to it. The code enters the event point by calling the Occurrence function. ActionsList is a public field so that it can be serialized with Unity3D's tools.

Usage in code

    using UnityEngine;

    public class CustomComponent : MonoBehaviour
    {
        // Kept out of the default inspector; the custom editor draws it.
        [HideInInspector]
        public EventPoint OnStart;

        void Start()
        {
            OnStart.Occurrence();
        }
    }

As mentioned above, an Action should derive from MonoBehaviour. Also, since the event point should not care which response it triggers, we wrap this class in an abstraction.

ActionBase

    using UnityEngine;

    public abstract class ActionBase : MonoBehaviour
    {
        // Called by EventPoint.Occurrence() for every attached response.
        public abstract void Execute();
    }

Trivial and primitive. The Execute function is needed to start the response logic when the event point is entered.

Now we can implement a response (as an example, turning a scene object on and off).

    using UnityEngine;

    public class ActionSetActive : ActionBase
    {
        public GameObject Target;
        public bool Value;

        public override void Execute()
        {
            Target.SetActive(Value);
        }
    }

It is easy to see that the core of the system is not complex at all and is, on the whole, primitive. All the difficulties come from implementing the visual part of the system, the one tied to the inspector. More on this below.
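Returning to the sound example from the introduction and to the idea that a response can itself generate events, two further responses might be sketched as follows. Both class names, `ActionPlaySound` and `ActionDelay`, and their fields are hypothetical and do not come from the original project; the sketch only assumes the `ActionBase` and `EventPoint` classes shown above.

```csharp
using System.Collections;
using UnityEngine;

// Hypothetical response: plays whatever clip is assigned to an AudioSource.
public class ActionPlaySound : ActionBase
{
    public AudioSource Source; // meant to be assigned by the sound designer

    public override void Execute()
    {
        if (Source != null)
        {
            Source.Play();
        }
    }
}

// Hypothetical response that is also an event generator:
// it waits for a while and then enters its own event point.
public class ActionDelay : ActionBase
{
    public float Seconds = 1f;

    [HideInInspector]
    public EventPoint OnElapsed = new EventPoint();

    public override void Execute()
    {
        StartCoroutine(WaitAndFire());
    }

    private IEnumerator WaitAndFire()
    {
        yield return new WaitForSeconds(Seconds);
        OnElapsed.Occurrence(); // continues the chain of events and responses
    }
}
```

In a Unity project each of these classes would normally live in its own file named after the class. Note that `ActionPlaySound` has an object reference field, so an inspector that draws only `int` and `float` fields would not show it.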

Editor

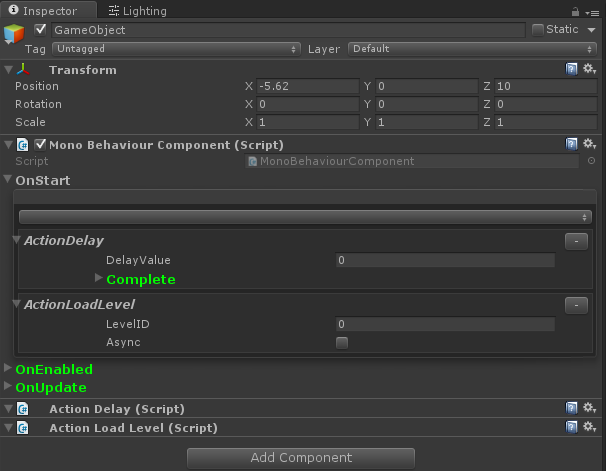

Before proceeding to the analysis of the implementation code of the editor, I want to show what we will strive for visually, and then we will consider how to achieve this. Below are images of the inspector with points of events and responses to them.

Let's take things in order.

Overriding the inspector for the component

Since we need flexibility, there are two ways to override the inspector for event points in a uniform manner:

- Overriding the inspector for a specific property type (in our case EventPoint)

- Overriding the inspector for the entire component (in our case, any class derived from MonoBehaviour)

Both options are acceptable in general. I chose the second one, because it lets me set the order in which the component's public fields are displayed in the inspector.
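For comparison, the first option (not the one chosen in this article) could be sketched with a property drawer. This is a minimal illustration that simply draws the underlying list with Unity's default controls; the class name `EventPointDrawer` is hypothetical, and the script is assumed to be placed in an Editor folder.

```csharp
using UnityEditor;
using UnityEngine;

// Sketch of option one: a drawer bound to the EventPoint type.
[CustomPropertyDrawer(typeof(EventPoint))]
public class EventPointDrawer : PropertyDrawer
{
    public override void OnGUI(Rect position, SerializedProperty property, GUIContent label)
    {
        // "ActionsList" is the public field declared in EventPoint.
        SerializedProperty actions = property.FindPropertyRelative("ActionsList");
        EditorGUI.PropertyField(position, actions, label, true);
    }

    public override float GetPropertyHeight(SerializedProperty property, GUIContent label)
    {
        // The height must account for the expanded list, not a single line.
        SerializedProperty actions = property.FindPropertyRelative("ActionsList");
        return EditorGUI.GetPropertyHeight(actions, label, true);
    }
}
```

A drawer like this is only invoked for fields that the default inspector actually shows, so it would not be used for an EventPoint field marked with `[HideInInspector]`.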

Custom editor for all classes derived from MonoBehaviour

    using UnityEditor;
    using UnityEngine;

    // The second argument makes the editor apply to derived classes as well.
    [CustomEditor(typeof(MonoBehaviour), true)]
    public class CustomMonoBehaviour_Editor : Editor
    {
        // Members are shown in the sections below.
    }

Receiving and displaying a list of responses

For the system to be as flexible as possible, we need to list all the responses we have implemented (classes derived from ActionBase) and let the user add them to an event point by simply selecting from the list.

It is implemented as follows.

    // Declaration inferred from how m_actionList is used below; needs
    // using System, System.Collections.Generic and System.Reflection.
    private readonly Dictionary<string, Type> m_actionList = new Dictionary<string, Type>();

    void OnEnable()
    {
        var runtimeAssembly = Assembly.GetAssembly(typeof(ActionBase));
        m_actionList.Clear();
        foreach (var checkedType in runtimeAssembly.GetTypes())
        {
            // Keep every type derived from ActionBase, but not ActionBase itself.
            if (typeof(ActionBase).IsAssignableFrom(checkedType) && checkedType != typeof(ActionBase))
            {
                m_actionList.Add(checkedType.FullName, checkedType);
            }
        }
    }

As you can see, we get a list of all classes derived from ActionBase and exclude the abstraction itself from it. The response name is taken from the class name. A more elaborate route is possible here: the name can be read from a class attribute, and the same attribute can carry a short description of what the response does. Since I give my classes descriptive names, I decided to take the simpler path.
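The attribute-based naming mentioned above could look roughly like this. The sketch is plain C# so that it can be read outside Unity: `ActionInfoAttribute`, `ActionCatalog` and `ActionWithoutInfo` are hypothetical names, and the `ActionBase` and `ActionSetActive` classes here are simplified stand-ins for the real ones, not the MonoBehaviour-based versions.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical attribute carrying a display name and a short description.
[AttributeUsage(AttributeTargets.Class, Inherited = false)]
public sealed class ActionInfoAttribute : Attribute
{
    public string Name { get; }
    public string Description { get; }

    public ActionInfoAttribute(string name, string description = "")
    {
        Name = name;
        Description = description;
    }
}

// Stand-in for the article's ActionBase (no MonoBehaviour here).
public abstract class ActionBase
{
    public abstract void Execute();
}

[ActionInfo("Set Active", "Enables or disables a scene object")]
public class ActionSetActive : ActionBase
{
    public override void Execute() { }
}

// A response without the attribute falls back to its class name.
public class ActionWithoutInfo : ActionBase
{
    public override void Execute() { }
}

public static class ActionCatalog
{
    // Maps a display name to a response type for every concrete ActionBase subclass.
    public static Dictionary<string, Type> Build(Assembly assembly)
    {
        var result = new Dictionary<string, Type>();
        foreach (var type in assembly.GetTypes())
        {
            if (type.IsAbstract || !typeof(ActionBase).IsAssignableFrom(type))
            {
                continue;
            }
            var info = type.GetCustomAttribute<ActionInfoAttribute>();
            result[info != null ? info.Name : type.FullName] = type;
        }
        return result;
    }
}
```

Calling `ActionCatalog.Build(typeof(ActionBase).Assembly)` would give a dictionary that can feed the selection popup in place of the raw class names. The generic `GetCustomAttribute<T>()` helper requires .NET 4.5 or later, so this assumes a scripting runtime that provides it.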

Listing event points and the component's public fields

Since we only need to change how event points are displayed, the inspector's drawing function takes the following form.

The inspector's GUI drawing function

    public override void OnInspectorGUI()
    {
        serializedObject.Update();
        base.OnInspectorGUI();          // default drawing of the public fields
        FindAndEditEventPoint(target);  // custom drawing of the event points
        serializedObject.ApplyModifiedProperties();
    }

Function for finding event points (EventPoints)

    void FindAndEditEventPoint(UnityEngine.Object editedObject)
    {
        // GetFields() without arguments returns public fields only.
        var fields = editedObject.GetType().GetFields();
        foreach (var fieldInfo in fields)
        {
            if (fieldInfo.FieldType == typeof(EventPoint))
            {
                EventPointInspector(fieldInfo, editedObject); // draw the inspector for this event point
            }
        }
    }

The main question that arises when looking at this code is why reflection is used rather than SerializedProperty. The reason is simple: because we override the inspector for all classes derived from MonoBehaviour, we know neither the names of a component's fields nor their types in advance, so we cannot use the serializedObject.FindProperty() function.
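Reflection is the route this article takes. As a possible alternative that does not need field names either, the serialized properties of an object can be walked with an iterator. The helper below is a sketch only: the class name `EventPointProperties` is hypothetical, and it assumes that `SerializedProperty.type` reports the class name `EventPoint` for fields of that type.

```csharp
using System.Collections.Generic;
using UnityEditor;

public static class EventPointProperties
{
    // Collects the top-level serialized properties whose type name is "EventPoint".
    public static List<SerializedProperty> Find(SerializedObject serializedObject)
    {
        var found = new List<SerializedProperty>();
        SerializedProperty iterator = serializedObject.GetIterator();

        bool enterChildren = true; // the first step must enter the root object
        while (iterator.Next(enterChildren))
        {
            enterChildren = false; // afterwards stay on the top level

            // Assumption: for a [Serializable] class, "type" holds the class name.
            if (iterator.type == nameof(EventPoint))
            {
                found.Add(iterator.Copy()); // copy, because the iterator keeps moving
            }
        }
        return found;
    }
}
```

`Next` is used instead of `NextVisible` so that fields marked with `[HideInInspector]` are found as well.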

Now for the actual drawing of the inspector for event points. I will not give the whole code, because everything there is quite simple: the FoldOut style is used to collapse and expand the information about responses. Let us look at the key points.
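The foldout part that is skipped here might be organised along these lines. This is not the author's code: the helper class `EventPointFoldouts` and its state dictionary are hypothetical, and keying the state by field name alone is a simplification, since two components with identically named fields would share it.

```csharp
using System.Collections.Generic;
using UnityEditor;

public static class EventPointFoldouts
{
    // Expanded or collapsed state per event point field name.
    private static readonly Dictionary<string, bool> s_expanded =
        new Dictionary<string, bool>();

    // Draws the foldout header and reports whether the body should be drawn.
    public static bool Header(string fieldName, int responseCount)
    {
        bool expanded;
        s_expanded.TryGetValue(fieldName, out expanded); // false when not seen yet

        string title = fieldName + " (" + responseCount + ")";
        expanded = EditorGUILayout.Foldout(expanded, title);

        s_expanded[fieldName] = expanded;
        return expanded;
    }
}
```

The function that draws an event point would call `Header` with the field's name and the number of attached responses, and draw the list of responses only when it returns true, typically with `EditorGUI.indentLevel` increased for the body.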

Retrieving EventPoint instance data

    var eventPoint = (EventPoint)eventPointField.GetValue(editedObject);

Listing responses and adding them to an event point

    var selectIndex = EditorGUILayout.Popup(-1, actionNames.ToArray());
    if (selectIndex >= 0)
    {
        var actionType = m_actionList[actionNames[selectIndex]];
        // The response is added as a component of the same GameObject.
        var actionObject = (ActionBase)((target as Component).gameObject.AddComponent(actionType));
        eventPoint.ActionsList.Add(actionObject);
    }

Inspector for responses (Actions)

As the code above shows, a response is added to the object as a component. As a result, this class is displayed in the inspector window and handled accordingly (with all of its fields shown, and so on). This is inconvenient for us, since we want to do all parameter setup in one place. First of all, let's prevent Unity from displaying the parameters of response classes in the inspector.

This is done by overriding the editor for all classes derived from ActionBase.

    [CustomEditor(typeof(ActionBase), true)]
    public class ActionBase_Editor : Editor
    {
        // Intentionally empty: nothing is drawn for response components.
        public override void OnInspectorGUI() { }
    }

Now we can show the fields of the response class in the inspector that we overrode for the event point. I give only the basic code; how to add styles (FoldOut) needs no explanation, I think.

    var destroyingComponent = new List<ActionBase>();
    for (var i = 0; i < eventPoint.ActionsList.Count; i++)
    {
        EditorGUILayout.BeginHorizontal();
        ActionBaseInspector(eventPoint.ActionsList[i]); // draw the response's fields
        // Removal is deferred so the list is not modified while it is being drawn.
        if (GUILayout.Button("-", GUILayout.Width(25)))
        {
            destroyingComponent.Add(eventPoint.ActionsList[i]);
        }
        EditorGUILayout.EndHorizontal();
    }

A response is deleted as follows.

    foreach (ActionBase action in destroyingComponent)
    {
        RecursiveFindAndDeleteAction(action); // first remove everything further down the chain
        eventPoint.ActionsList.Remove(action);
        GameObject.DestroyImmediate(action);
    }

    void RecursiveFindAndDeleteAction(object obj)
    {
        var fields = obj.GetType().GetFields();
        var destroyingComponent = new List<ActionBase>();
        foreach (var fieldInfo in fields)
        {
            if (fieldInfo.FieldType == typeof(EventPoint))
            {
                var eventPoint = (EventPoint)fieldInfo.GetValue(obj);
                destroyingComponent.AddRange(eventPoint.ActionsList);
                eventPoint.ActionsList.Clear();
            }
        }
        foreach (ActionBase action in destroyingComponent)
        {
            RecursiveFindAndDeleteAction(action);
            GameObject.DestroyImmediate(action);
        }
    }

Since the system does not limit the length of a chain of events and responses (although it could), removing a response at the beginning or in the middle of a chain must also remove every response below it. To do this, we walk the chain recursively and delete everything in turn, including the components on the object, and only then delete the base response.

Note:

Here lies the strangest point, which I still cannot get past: although Unity recommends using DestroyImmediate to remove components from an object in the editor, doing so produces a MissingReferenceException in the editor. It does not affect the correct operation of the code, however. Whether the bug is mine or Unity's, I am still trying to find out. If anyone knows what is going on, write in the comments.
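One workaround that is sometimes tried in situations like this is to postpone the destruction until the current inspector pass has finished. Whether it removes the exception in this particular system has not been verified, and the cause of the error remains unknown. The helper name `DeferredDestroy` is hypothetical.

```csharp
using UnityEditor;
using UnityEngine;

public static class DeferredDestroy
{
    // Queues the component for removal after the current editor GUI pass.
    public static void Schedule(Component component)
    {
        EditorApplication.delayCall += () =>
        {
            // Unity's overloaded null check also covers already destroyed objects.
            if (component != null)
            {
                // Destroys the component and registers the operation for undo.
                Undo.DestroyObjectImmediate(component);
            }
        };
    }
}
```

With this sketch, the deletion code would still remove the response from ActionsList immediately and call `DeferredDestroy.Schedule(action)` in place of the direct destroy call.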

Drawing the fields of the class that implements a response (Action)

As mentioned earlier, we cannot use serializedObject.FindProperty() in this code because the field names are unknown to us; besides, serializedObject itself is not available here. So, as with EventPoint, reflection is used, which is the most inconvenient part of the whole system.

Drawing the inspector for a response

    void ActionBaseInspector(ActionBase action)
    {
        var fields = action.GetType().GetFields();
        EditorGUILayout.BeginVertical();
        EditorGUILayout.Space();
        EditorGUILayout.Space();
        EditorGUILayout.Space();
        foreach (FieldInfo fieldInfo in fields)
        {
            if (fieldInfo.FieldType == typeof(int))
            {
                var value = (int)fieldInfo.GetValue(action);
                value = EditorGUILayout.IntField(fieldInfo.Name, value);
                fieldInfo.SetValue(action, value);
            }
            else if (fieldInfo.FieldType == typeof(float))
            {
                var value = (float)fieldInfo.GetValue(action);
                value = EditorGUILayout.FloatField(fieldInfo.Name, value);
                fieldInfo.SetValue(action, value);
            }
            // ... and so on for the other field types
        }
        FindAndEditEventPoint(action); // draw the event points nested in the response
        EditorGUILayout.EndVertical();
    }

As you can see, we have to define the editor output for every required field type by hand. On the one hand, this is painful; on the other hand, it makes it possible to override the editor for a field, which I use, for example, for sounds.
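The chain of per-type branches can be kept manageable with a lookup table of drawing delegates. The sketch below is one possible arrangement, not the author's implementation: the class name `ReflectedFieldDrawer` is hypothetical. It also records an undo step and marks the object dirty when a value changes, on the assumption that values written through reflection are not otherwise tracked by the editor.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;
using UnityEditor;

public static class ReflectedFieldDrawer
{
    // One delegate per supported type: (label, current value) -> edited value.
    private static readonly Dictionary<Type, Func<string, object, object>> s_drawers =
        new Dictionary<Type, Func<string, object, object>>
        {
            { typeof(int), (label, v) => EditorGUILayout.IntField(label, (int)v) },
            { typeof(float), (label, v) => EditorGUILayout.FloatField(label, (float)v) },
            { typeof(bool), (label, v) => EditorGUILayout.Toggle(label, (bool)v) },
            { typeof(string), (label, v) => EditorGUILayout.TextField(label, (string)v) },
        };

    // Draws one field of a Unity object; returns false for unsupported types.
    public static bool Draw(UnityEngine.Object owner, FieldInfo field)
    {
        object current = field.GetValue(owner);
        object updated;

        EditorGUI.BeginChangeCheck();
        Func<string, object, object> drawer;
        if (s_drawers.TryGetValue(field.FieldType, out drawer))
        {
            updated = drawer(field.Name, current);
        }
        else if (typeof(UnityEngine.Object).IsAssignableFrom(field.FieldType))
        {
            // Covers GameObject, AudioSource and other engine references.
            updated = EditorGUILayout.ObjectField(
                field.Name, (UnityEngine.Object)current, field.FieldType, true);
        }
        else
        {
            EditorGUI.EndChangeCheck(); // keep Begin/End calls balanced
            return false;
        }

        if (EditorGUI.EndChangeCheck())
        {
            Undo.RecordObject(owner, "Edit " + field.Name);
            field.SetValue(owner, updated);
            EditorUtility.SetDirty(owner); // so the change is saved with the scene
        }
        return true;
    }
}
```

Inside the loop over fields, each branch would then collapse into a single call such as `ReflectedFieldDrawer.Draw(action, fieldInfo)`, and supporting a new field type means adding one entry to the table.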

Summary

Taken as a whole, there is nothing difficult in the system, yet the advantages it gives are hard to overstate. The savings in time and effort are huge. In effect, the programmer now only needs to create an event point and an entry into it in the relevant logic component, and can then, as they say, “wash their hands”. Of course, response code still has to be written, but since responses are essentially Unity components, everything is limited only by imagination: you can make large, complicated responses or many small single-purpose ones.

At the beginning of the article I said that delegating event handling to other components may be impractical, but in fact it has its advantages from the pipeline's point of view. Using the message system (see the previous article), you can define specialized components that handle the messages and expose entries into event points. If you place such components in an agreed location, designers will not have to scour the object hierarchy. This approach can be used, for example, to set up the sound environment.

As noted earlier, the system does not limit the length of a chain of events and responses, so a designer can in fact build behaviour logic as large and complex as they like (this is where Visual Scripting comes in). In practice, however, this is inconvenient and unreadable, so it is better to limit chains, at least by verbal agreement. In our work, my partner and I used such chains in an extended version, where a separate window (EditorWindow) was used for editing. Everything was fine until we needed complex branching in the logic. After a while it was simply impossible to make sense of that mess. That is why in my system I went for a simple solution based on the inspector.

As for Visual Scripting as a whole, the event and response approach and its implementation through Unity components has its advantages, and in the end my partner and I decided to develop a full-fledged visual logic editor while working on several projects. What came of that is a topic for a separate article. To conclude, here is a short video that demonstrates the described system at work, using uGUI as an example.

https://youtu.be/sfZ9Tf_EVYE

Source: https://habr.com/ru/post/301804/
