Techniques for capturing users' attention, from a magician and Google design ethicist

I am an expert in how technology exploits the weaknesses of our psyche. That is why I spent the last three years working as a design ethicist at Google. My job was to work out how to design software so that it protects the minds of a billion people from "tricks."

When we use technology, we tend to be optimistic about what it does for us. But I want to show you where it can do the opposite.

How does technology exploit the weak points of our minds?

I learned to think this way when I was a magician. Magicians start by looking for blind spots, edges, vulnerabilities and limits of perception, so that they can influence what people do without those people even realizing it. Once you know how to press these human “buttons”, you can play people like a piano.

That is me performing sleight-of-hand "magic" at my mother's birthday party.

This is exactly what product designers do to your mind. They play on psychological vulnerabilities (consciously or unconsciously) in order to grab your attention.

And I want to show you how they do it.

Trick number 1: If you control the menu, you control the choices

Western culture is built around the ideals of individual choice and freedom. Millions of us fiercely defend our right to make “free” choices, while ignoring the fact that we never chose the “menu” itself.

This is exactly what magicians do. They give people the illusion of free choice while designing the menu so that they win no matter what you choose. I cannot emphasize enough how important this is.

When people are given menus with options to choose from, they rarely ask:

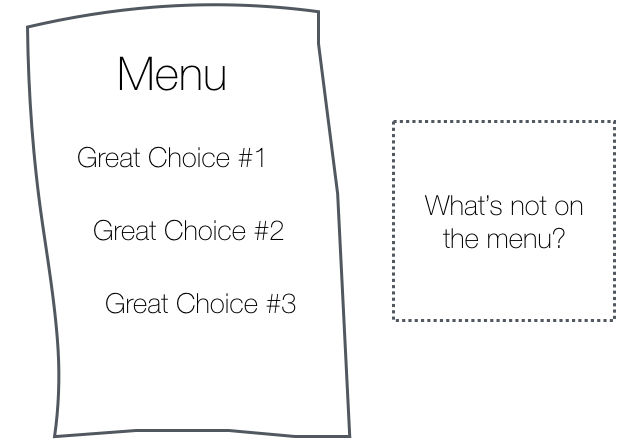

“What's not on the menu?”

“Why are these options offered to me, and not others?”

“Do I know what the menu's provider wants to achieve?”

“Does this menu give me what I actually need, or are its choices a distraction?” (for example, an overwhelming array of toothpaste brands)

This is the menu of choices for the need “I ran out of toothpaste.”

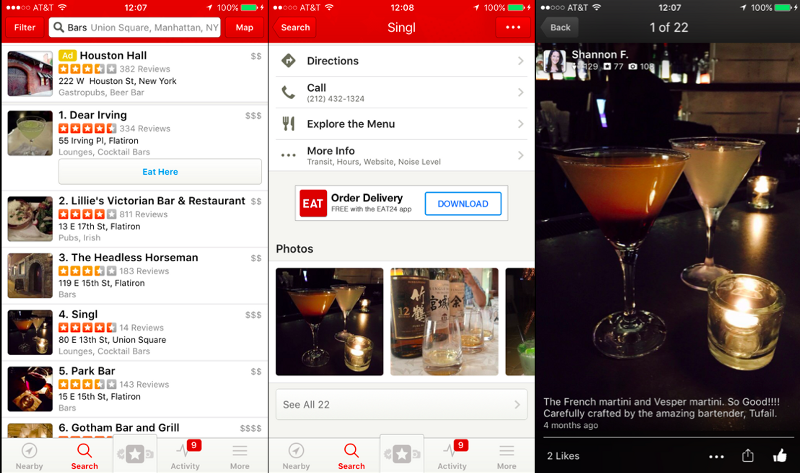

For example, imagine you are out with friends on a Tuesday night and want to keep the conversation going. You open Yelp to find recommendations and see a list of nearby bars. The group turns into a huddle of people staring at their phones and comparing bars. Everyone scrutinizes the photos and the cocktails. Does any of this still serve the group's original desire (to find a place to sit)?

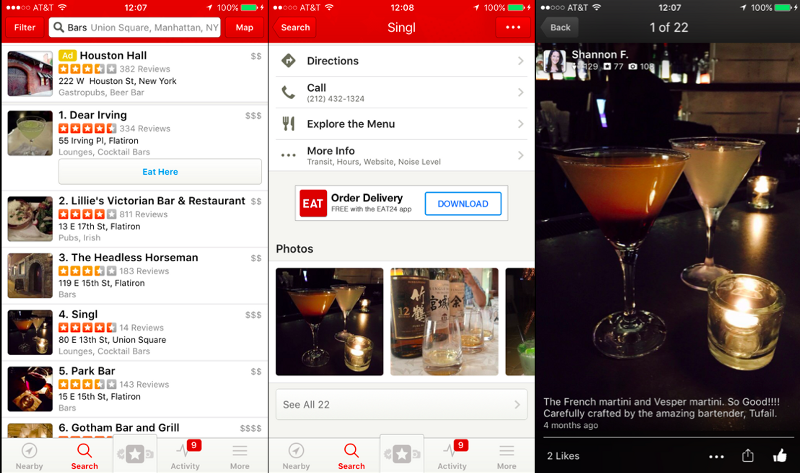

This does not mean that a bar is a bad choice. It means that Yelp replaced the group's original question, “where can we go to sit and talk?”, with a different one: “which bar has the best cocktails and interior, judging by the photos?” And it did so simply by shaping the menu.

In addition, the group falls for the illusion that Yelp's menu is a complete list of the options. While you are all staring at your phones, a band is playing live music in the park across the road, and a place nearby is selling pancakes and hot coffee. Neither option appears on Yelp's menu.

Yelp subtly reframes the group's need, “where can we go to continue the conversation?”, in terms of cocktail photos.

The more choices technology gives us in almost every area of life (information, events, places to go, friends, acquaintances, work), the more we assume that the phone offers the most extensive and useful menu to pick from. But does it?

The menu that “empowers us the most” is not the same as the menu that simply offers the most options. But when we blindly rely on the menus we are given, that difference is easy to overlook:

“Who is free to hang out tonight?” becomes a list of the people who messaged us most recently.

“What is happening in the world?” becomes a news feed.

“Who is single and would go on a date with me?” becomes a set of Tinder search results, instead of meeting the right person at an event, around town or through friends.

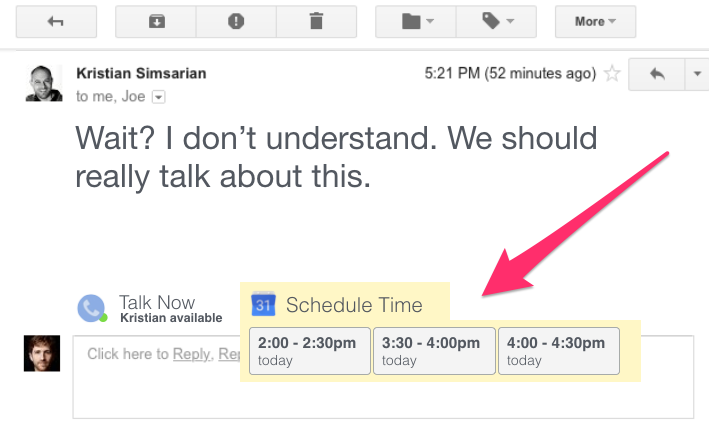

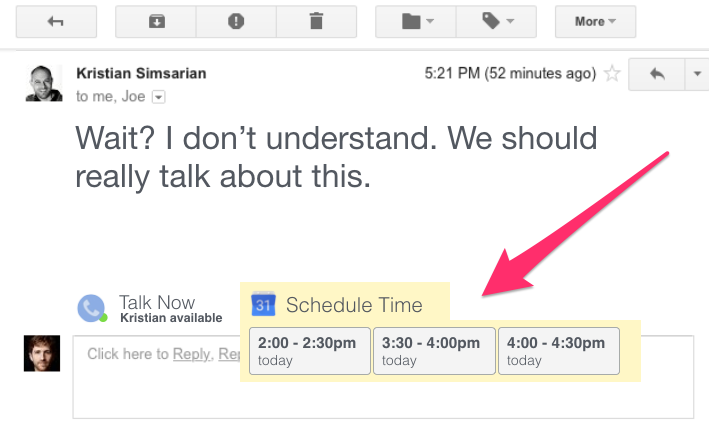

Every user interface is a menu. Here the email client offers ready-made ways to respond instead of asking “What do you want to type?” (Design by Tristan Harris)

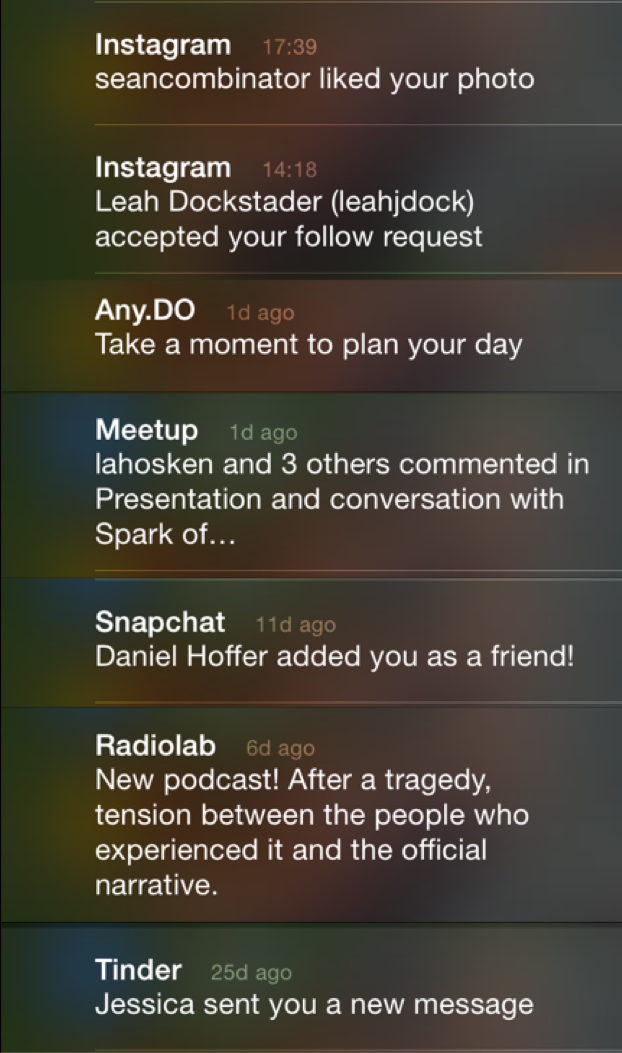

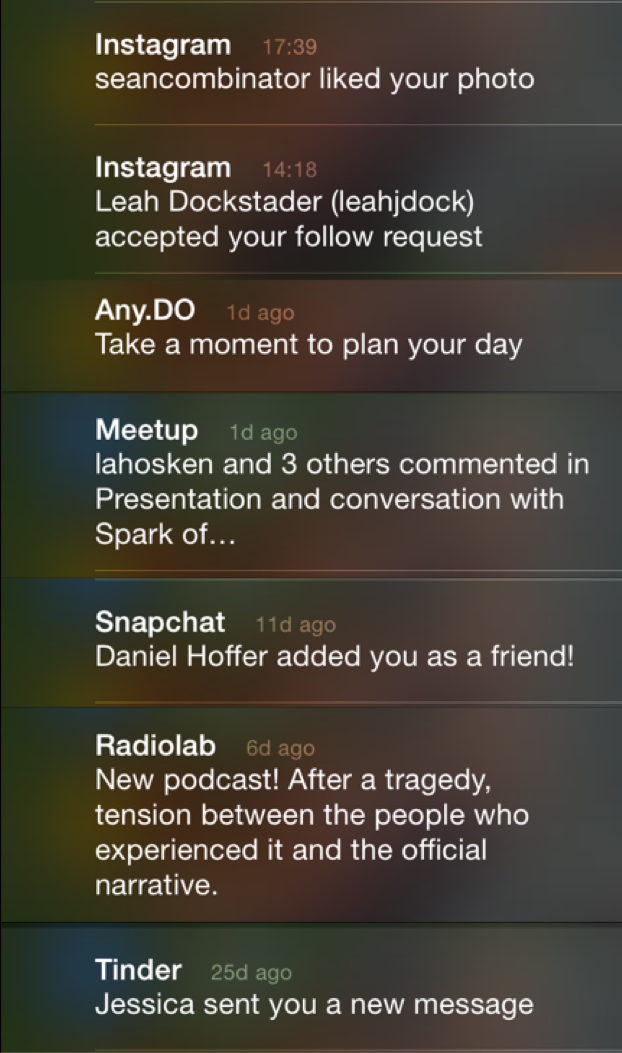

When we wake up in the morning and turn our phone over, we see a list of notifications. It frames the experience of “waking up in the morning” around a menu of “everything I have missed since yesterday.”

By shaping the menus we pick from, technology companies hijack the way we perceive our choices and replace them with their own. But the more closely we look at the options we are given, the more we notice when they do not match our true needs.

Trick number 2: Put a slot machine in a billion pockets

If you are an app, how do you keep people hooked? Turn yourself into a slot machine.

The average person checks their smartphone 150 times a day. Why do we do this? Are we making 150 conscious choices?

How many times a day do you check your email?

The main thing apps have in common with slot machines is variable reinforcement.

If you want to maximize addictiveness, link a user's action (such as pulling a lever) to a variable reward. You pull the lever and immediately receive either one of the rewards or nothing. Addictiveness is highest when the reward is unpredictable.
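
The variable-reward mechanism can be illustrated with a short simulation. This is only a sketch: the function names, the 30% win probability and the reward range are assumptions chosen for illustration, not figures from any real product.

```python
import random


def pull_lever(rng, win_probability=0.3, max_reward=5):
    """Simulate one user action that pays out a variable reward.

    Returns 0 (nothing) most of the time, otherwise a reward of
    unpredictable size between 1 and max_reward.
    The probability and reward range are illustrative assumptions.
    """
    if rng.random() < win_probability:
        return rng.randint(1, max_reward)
    return 0


def simulate_session(pulls, seed=0):
    """Return the reward received on each of `pulls` consecutive actions."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    return [pull_lever(rng) for _ in range(pulls)]


rewards = simulate_session(20)
print(rewards)
```

The point of the sketch is that the user cannot tell from one pull what the next will bring: the same action sometimes pays nothing and sometimes pays a reward of varying size.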

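Does it really work? Yes. Slot machines in the US make more money than baseball, movies and amusement parks combined. According to Natasha Dow Schüll, a professor at New York University and the author of “Addiction by Design,” people become hooked on slot machines 3-4 times faster than on other types of gambling.

And here is the sad truth: several billion people carry a slot machine in their pockets:

- When we pull our phone out of our pocket, we are playing to see which notifications we received.

- When we pull down to refresh our email, we are playing to see which email we received.

- When we refresh the Instagram feed, we are playing to see which photo comes next.

- When we swipe through photos in a dating app like Tinder, we are pulling the slot machine lever in the hope of winning.

- When we open the notifications section to check what has arrived, we are playing again.

Apps and websites have used variable rewards throughout the industry's history, because it is good for business.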

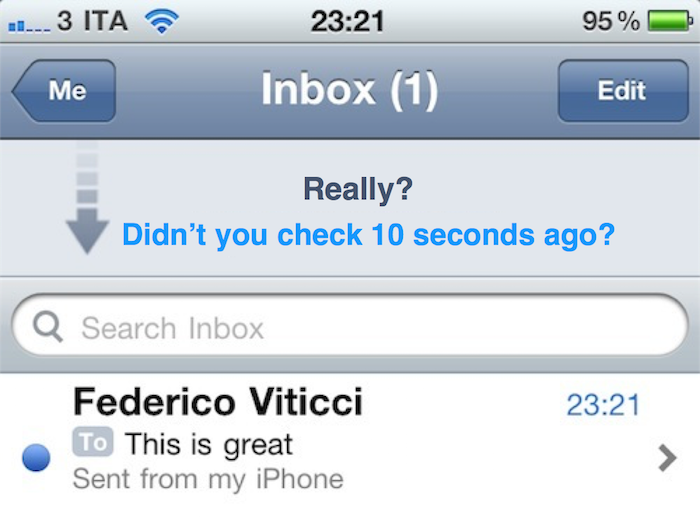

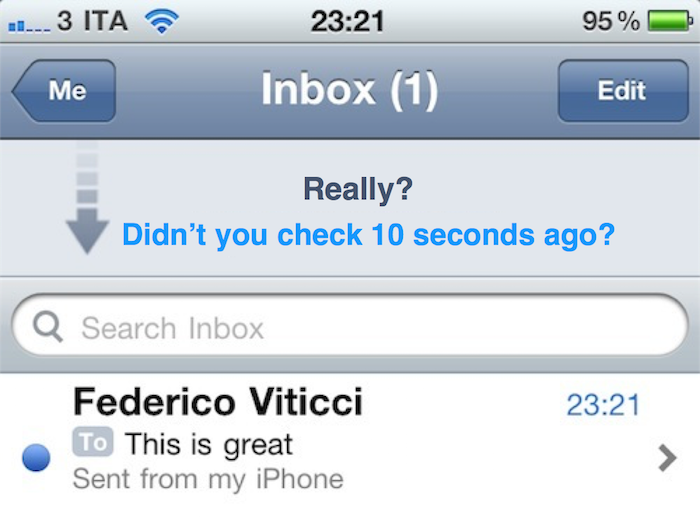

But in other cases, "slot machines" emerge by accident. For example, there is no “Evil Corporation” behind email that set out to turn it into a slot machine. No one profits when millions of people check their email and find nothing new. No Apple or Google designer wanted smartphones to work like slot machines. It happened by accident.

But now companies like Apple and Google have a responsibility to reverse this effect by turning variable rewards into something less addictive and more predictable through better design. For example, they could let people set specific times during the day or week when they want to check their “slot machine” apps, and deliver notifications only at those times.
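
The scheduled-delivery idea can be sketched in a few lines. This is a hypothetical illustration rather than an actual Apple or Google API: the `NotificationBatcher` class, its method names and the chosen hours are all assumptions.

```python
from datetime import datetime


class NotificationBatcher:
    """Hold notifications and release them only during user-chosen hours.

    Hypothetical sketch: not based on any real operating system API.
    """

    def __init__(self, delivery_hours):
        self.delivery_hours = set(delivery_hours)  # e.g. {9, 18}
        self.pending = []

    def receive(self, message):
        """Queue a notification instead of interrupting the user."""
        self.pending.append(message)

    def deliver(self, now):
        """Release everything held so far, but only inside a chosen hour."""
        if now.hour not in self.delivery_hours:
            return []
        released, self.pending = self.pending, []
        return released


batcher = NotificationBatcher(delivery_hours=[9, 18])
batcher.receive("New comment on your photo")
batcher.receive("Weekly newsletter")

held = batcher.deliver(datetime(2024, 1, 1, 11, 30))     # outside the window
released = batcher.deliver(datetime(2024, 1, 1, 18, 5))  # inside the window
print(held, released)
```

Under these assumptions, nothing reaches the user at 11:30, and both messages arrive together at 18:05, which makes the "reward" predictable rather than intermittent.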

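Trick number 3: Fear of missing something important

Another way to hijack the minds of millions is to suggest that "there is a 1% chance that you missed something important."

If I can convince you that I am a channel for important information, friendships or potential sexual opportunities, then you will find it hard to ignore me, unsubscribe or delete your account, because (aha, I win) you might miss something important:

- This keeps us swiping through faces in dating apps, even if we do not plan to meet anyone (“what if I miss someone hot who is interested in me?”).

- This keeps us subscribed to useless newsletters (“what if I miss an announcement?”).

- This keeps us “friends” with people we have not spoken to in ages (“what if I miss something important from them?”).

- This keeps us on social media (“what if I miss some important news and do not understand what my friends are talking about?”).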

But if we look closely at this fear, we find that it has no limit: we will always miss something important at whatever point we end a session.

Yes, we will miss something important on Facebook if we do not use it for 6 hours (for example, that an old friend is in our city right now).

We will miss something important on Tinder (for example, the love of our life) if we do not swipe through our 700th match.

We will miss extremely important calls if we are not reachable 24/7.

But we cannot live from moment to moment in fear of missing something.

And it is amazing how quickly we see through the illusion once we let go of that fear. If we spend a day offline, do not sign up for anything and do not check for alerts, we turn out not to miss anything of great importance.

Because we do not miss what we do not see.

The thought “I'll miss something” forms in your mind before you close the app, unsubscribe or go offline, not after. Imagine if technology companies recognized this and helped us build our friendships and business relationships around “I had a good time” rather than around the anticipation and fear of missing something.

Trick number 4: Social approval

It is one of the most persuasive things a person can receive.

We are all vulnerable to social approval. The need to belong to a social group is one of the strongest human motivators. But now our social approval is in the hands of private companies.

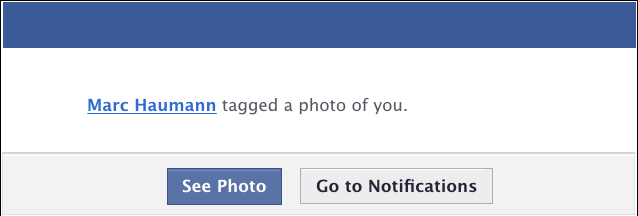

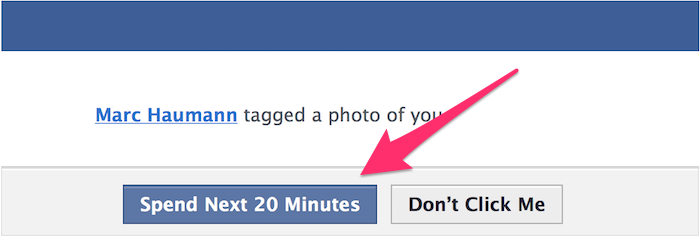

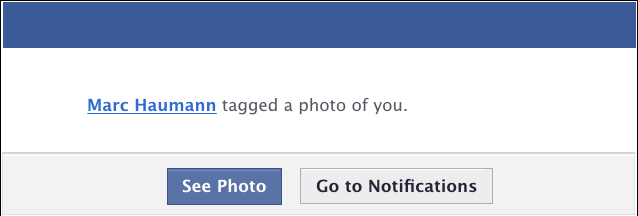

When I receive a notification that my friend Mark has tagged me in a photo, I assume he made a conscious choice to tag me. But behind the scenes, companies like Facebook are nudging people to do it.

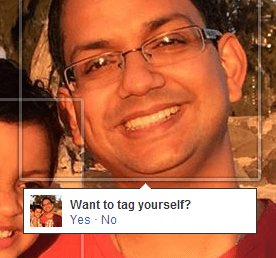

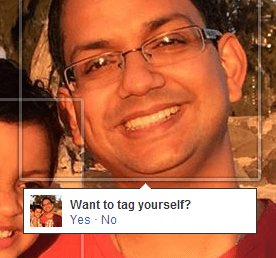

Facebook, Instagram or Snapchat can manipulate how often people tag their friends in photos (for example, with a one-click prompt: “Tag Tristan in this photo?”).

So when Mark tags me in a photo, he is really answering a question that Facebook asked him, not making an independent choice. Through design decisions like this one, Facebook controls how often millions of people experience social approval.

Facebook also knows that when people change their profile photo, they are at a moment of seeking social approval: “what will my friends think of this photo?” Facebook can rank the change higher in the news feed so that as many friends as possible like or comment on it. And every time they do, we get pulled right back in.

Everyone has an innate need for social approval, but teenagers are the most vulnerable group. That is why it is so important to recognize how much power designers have over us when they exploit this "vulnerability".

Trick number 5: Social reciprocity (tit for tat)

You do me a favor, and now I owe you one.

You say “thank you,” and I have to say “you're welcome.”

You send me a message, and it is rude not to reply.

You follow me, and it is rude not to follow you back (especially for teenagers).

We are vulnerable to the need to reciprocate others' gestures. And, as with social approval, technology companies now manipulate how often we experience it.

In some cases this happens by accident: email and messaging apps are “social reciprocity factories” by their nature. But in other cases, companies exploit this weakness on purpose.

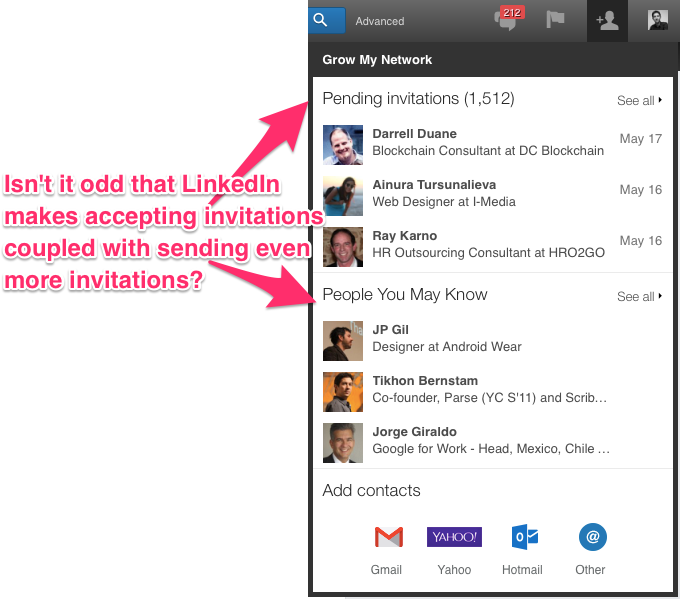

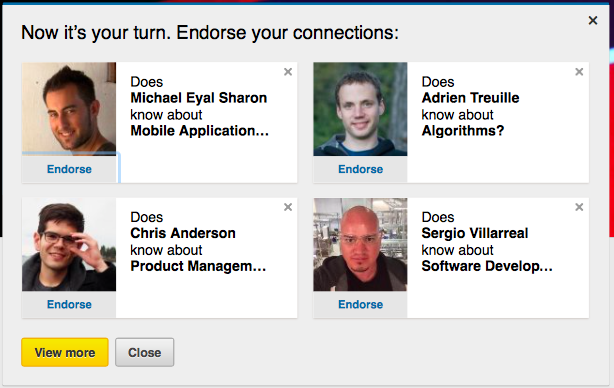

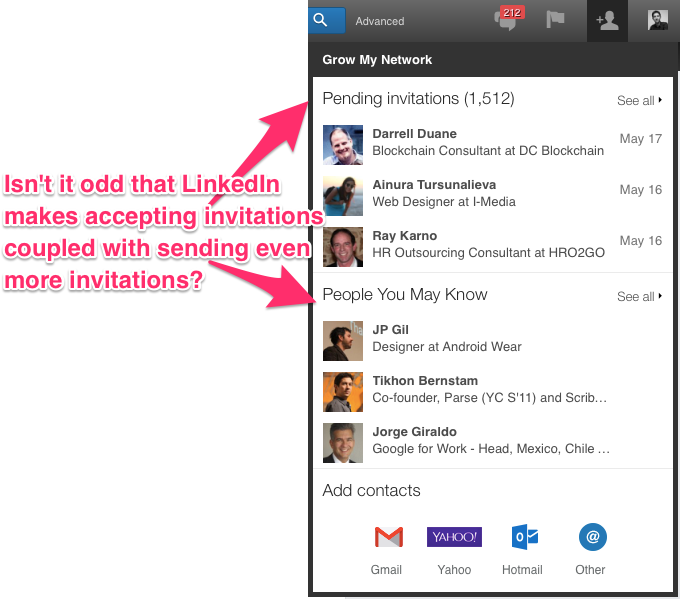

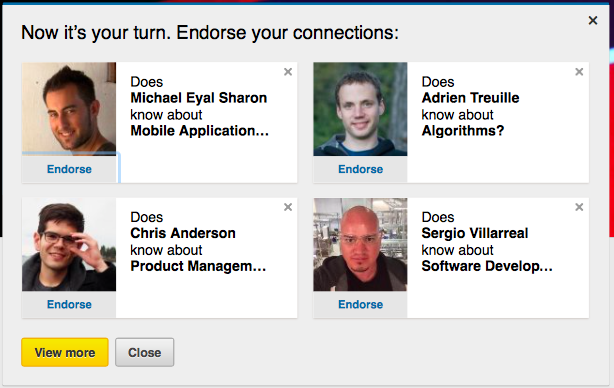

LinkedIn is the most obvious offender, because every time someone sends us a message, endorses us for a skill or does something else, we have to go back to linkedin.com and spend more of our time there.

Like Facebook, LinkedIn exploits an asymmetry in perception. When you receive a request from someone, you assume that person sent it deliberately. Most likely, though, they simply saw you in a list the platform showed them. In other words, LinkedIn turns unconscious impulses into social obligations that people feel they must reciprocate by accepting the connection. And it benefits directly from the time people spend doing so.

Imagine millions of people running around like chickens with their heads cut off, doing nothing but accepting connection requests, while the company that designed this model profits from it all.

Welcome to social media.

After you accept one request, LinkedIn exploits your sense of reciprocity by offering four more people to connect with.

Imagine if technology companies had a responsibility to minimize social reciprocity. Or if there were an “FDA for technology” that monitored when companies abused these biases.

Trick number 6: Bottomless bowls, endless feeds and autoplay

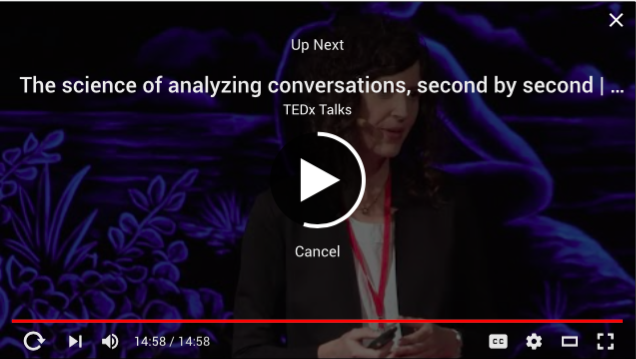

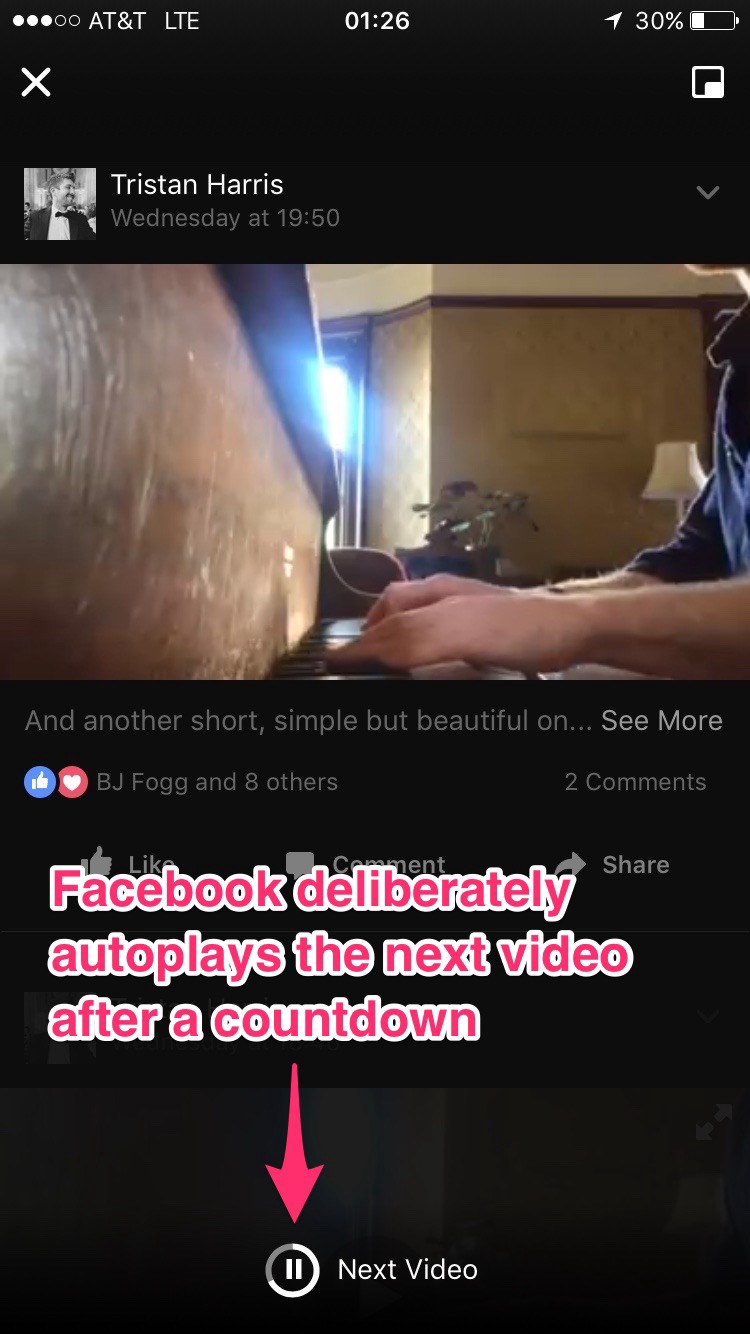

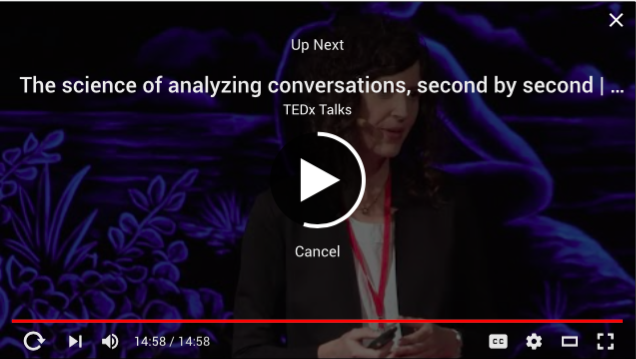

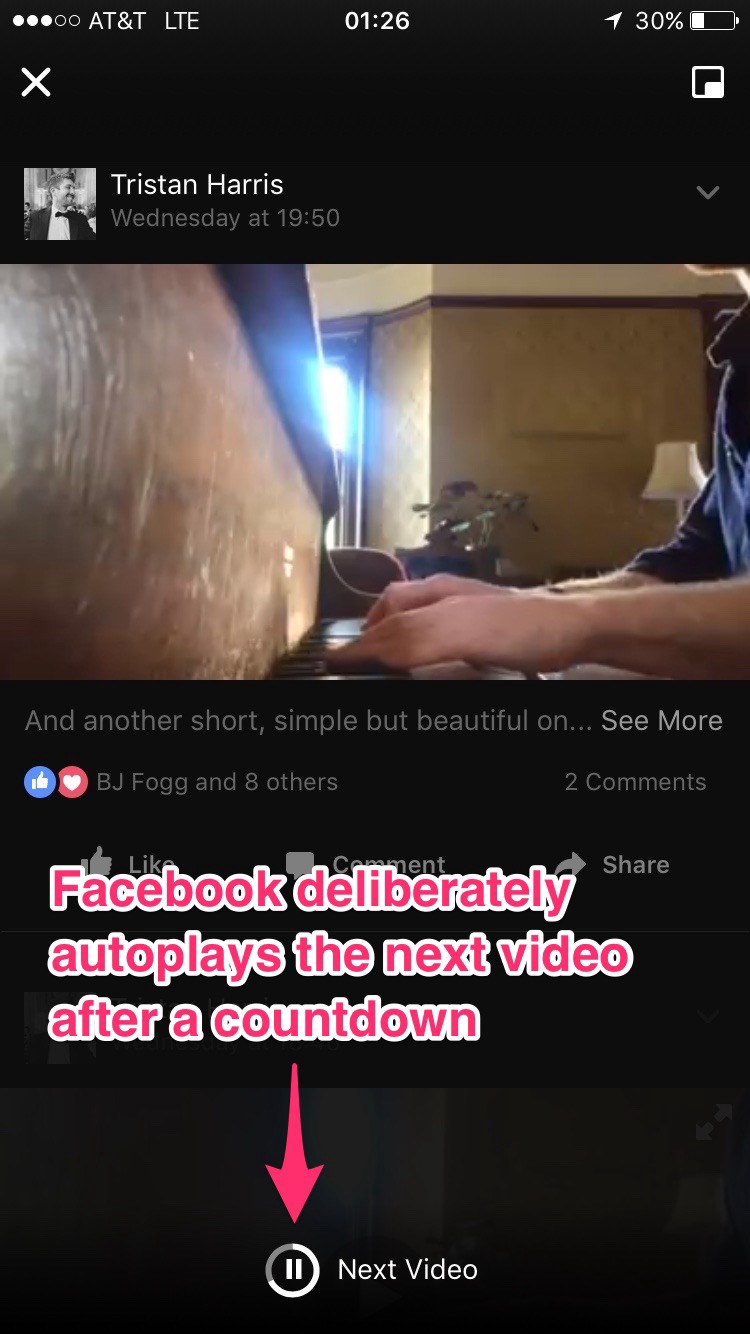

YouTube automatically plays the next video after a countdown.

Another way to hijack people's attention is to keep them consuming even when they are no longer “hungry.”

How? Easy. Take a user experience that used to be finite and bounded, and turn it into an endless stream.

Cornell University professor Brian Wansink demonstrated this in his study. He showed that you can trick people into eating more by giving them a bottomless bowl of soup that refills automatically as they eat. With bottomless bowls, people consumed 73% more calories than people eating from normal bowls.

Technology companies exploit the same principle. News feeds are purposely designed to refill automatically and keep you scrolling. They also purposely eliminate anything that might make you pause and leave.

This is also why video and social media sites like Netflix, YouTube and Facebook autoplay the next video when the previous one ends. They do not wait for you to make a conscious choice; they simply start the next video after a countdown. A huge share of their traffic comes from this feature.

Facebook autoplays the next video after a countdown.

Technology companies often claim that they are only “making it easier for users to see what they want to watch,” when in fact they are serving their own business interests. And you cannot blame them, because they are all competing for a currency called “time spent.” Now imagine a technology company that worked not only to increase the time spent on its product but also to improve the quality of that time.

Trick number 7: Abrupt interruption vs. polite delivery

Companies know that a message that interrupts a person immediately is more likely to get a response than, for example, an email waiting quietly to be seen.

Given this, Facebook Messenger, WhatsApp, WeChat and Snapchat chose to design their apps to interrupt the user instantly and show the chat window right away, instead of helping users respect each other's attention.

In other words, interruption is good for business.

It is also in these companies' interest to heighten the sense of urgency and social reciprocity. For example, Facebook automatically tells the sender when the recipient has “seen” the message, instead of letting you keep that to yourself. Because when you know that the other person knows you have seen the message, you feel even more obliged to respond.

By contrast, Apple shows more respect for users and lets you turn read receipts on or off manually. The problem is that maximizing interruptions in the name of business has created a tragedy on a global scale: it wrecks people's concentration and causes billions of unnecessary interruptions around the world every day. We need to fix this, for example by introducing shared design standards for apps and services.

Trick number 8: Bundling your reasons with their reasons

Another way apps “grab” your attention is by bundling your personal reasons for visiting the app with the company's business goals (for example, maximizing how much content you consume per session).

Grocery stores are a real-world example. The things people most often come in for are medicines and dairy products. So the business makes what people need inseparable from what the business wants. If stores were truly organized for shoppers' convenience, these two groups of goods would be right at the entrance rather than at the far end of the store, where they usually are.

Technology companies design their sites on the same principle. For example, when you want to check the latest events on Facebook, the app does not let you go there directly. You can reach the information you want only after passing through the news feed, and that is deliberate. Facebook wants to convert every reason you have for using it into more consumption of content and other products.

In an ideal world, an app would always give you a direct route to what you want. Imagine a digital “Bill of Rights” that set design standards requiring apps used by billions of people to let them get straight to what they came for.

Trick number 9: Inconvenient choices

We are told that it is enough for a business to simply “make choices available.”

Naturally, companies want you to make the choices that suit them. So they make what they want you to do easy, and what they do not want you to do hard. Magicians do the same thing: you make it easy for the audience to see what you want them to see, and hard to see what you want to hide.

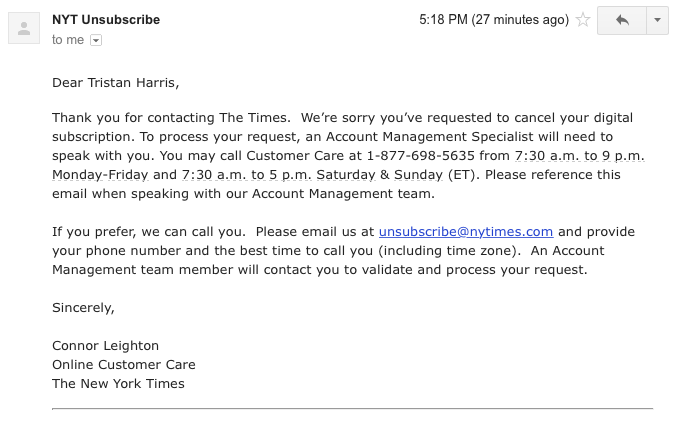

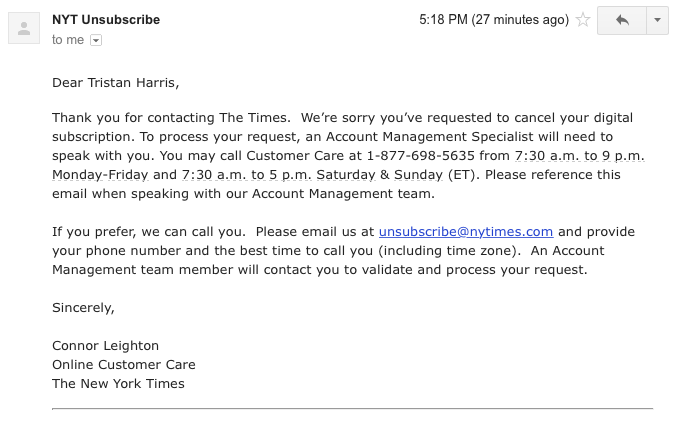

For example, NYTimes.com lets you “freely choose” to cancel your digital subscription. But instead of simply offering an “unsubscribe” button, it sends you an email explaining how to cancel by calling a phone number that is available only during certain working hours.

Instead of viewing the world in terms of which choices are available, we should view it in terms of the effort each choice requires. Imagine a world where every choice was labeled with its own “effort coefficient,” and where an independent body, such as an industry consortium or a non-profit, assigned these labels and set standards for how easy key actions should be.
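
One way to picture such an effort label is to count the steps each choice requires and compare them. The sketch below is purely illustrative: the `effort_ratio` function is hypothetical, and the step lists are an assumed breakdown loosely based on the subscription example, not measured data.

```python
def effort_ratio(steps_by_choice, easy_choice, hard_choice):
    """Return how many times more steps the hard choice takes than the easy one."""
    easy_steps = len(steps_by_choice[easy_choice])
    hard_steps = len(steps_by_choice[hard_choice])
    return hard_steps / easy_steps


# Assumed step breakdown, for illustration only.
steps_by_choice = {
    "subscribe": ["click the subscribe button"],
    "cancel": [
        "read the email with cancellation instructions",
        "wait for the phone line's working hours",
        "call the phone number",
    ],
}

ratio = effort_ratio(steps_by_choice, "subscribe", "cancel")
print(ratio)  # 3.0 with the step lists above
```

A real standard would need a richer measure than a step count (waiting time, for instance), but even this crude ratio makes the asymmetry between the two choices visible.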

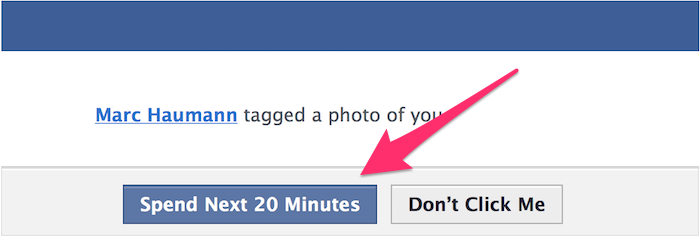

Facebook offers us an easy choice to view a photo. Would we still click if we knew the true price of that click?

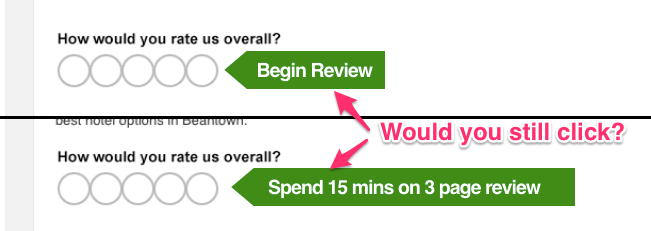

Trick number 10: Forecasting errors and the “foot in the door” strategy

Apps also exploit people's inability to predict the consequences of a click.

People cannot intuitively estimate the true cost of a click when it is offered to them. Salespeople use the so-called “foot in the door” technique: they start with a small, innocuous request (“just one click to see who retweeted you”) and escalate from there (“why don't you stay a while?”). Almost everyone uses this trick.

Imagine if web browsers and smartphones, the gateways through which people make these choices every day, looked out for their users and helped them predict the consequences of a click, based on real data about its benefits and costs.

That is why I add an estimated reading time to my posts. When you show people the “true cost” of a choice, you treat your users or audience with respect.
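
An estimated reading time is simple to compute. The sketch below is a minimal example, assuming an average reading speed of 200 words per minute; both that figure and the function name are assumptions, and the author's own method is not described.

```python
import math


def estimated_reading_minutes(text, words_per_minute=200):
    """Estimate reading time in whole minutes, rounded up, with a 1-minute floor.

    The default of 200 words per minute is an assumed average reading speed.
    """
    word_count = len(text.split())
    return max(1, math.ceil(word_count / words_per_minute))


article = "word " * 450  # stand-in for a 450-word post
print(estimated_reading_minutes(article))  # 3
```

Rounding up is a deliberate choice here: it is better to overstate the cost of a click slightly than to understate it.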

Ideally, the internet would be designed around forecasting costs and benefits, so that people could make informed choices by default, without any extra effort.

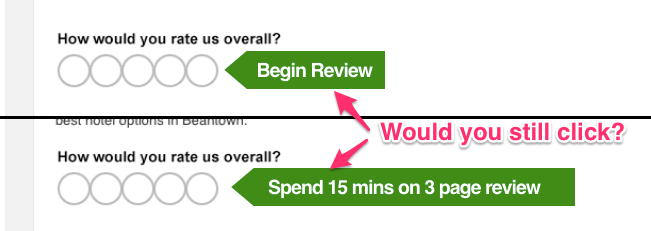

TripAdvisor uses a “foot in the door” technique: it shows a single-click action (“how many stars?”) while hiding three more questions from the user.

Are you upset that technology steals your time? So am I. I have listed only a few techniques, but there are thousands of them. Imagine whole bookshelves, seminars, workshops and trainings that teach entrepreneurs these techniques. Imagine hundreds of engineers whose job every day is to invent new ways to keep you online.

The ultimate freedom is a free mind, and we need technology that is on our side, helping us live, feel, think and act freely.

We need our smartphones, notifications and web browsers to become an exoskeleton for our minds and interpersonal relationships, one that puts our values, not our impulses, first. People's time is valuable. And we need to protect it, just as we protect privacy and other digital rights.

Using technology, we are often optimistic about what they do for us. But I want to show you how you can achieve the opposite result.

How does technology exploit the weak points of our mind?

I learned to think so when I was a magician. Magicians always start by searching for blind spots, edges, vulnerabilities and limits of perception, so that they are able to influence the behavior of people, and the latter do not even realize it. After you learn how to correctly press these human “buttons”, you can start playing them like a piano.

')

It is me who creates "magic" with the help of sleight of hand, on the birthday of my mother

This is what designers do with your mind. They play on psychological vulnerabilities (consciously or unconsciously) in order to grab your attention.

And I want to show you how they do it.

Trick number 1: If you control the menu, you control the choice made

Western culture is built around the ideals of individual choice and freedom. Millions fiercely defend their right to make a “free” choice, at the same time we will ignore it, since we did not choose the “menu” itself.

This is exactly what magicians do. They give people the illusion of freedom of choice, designing the menu in such a way as to win no matter what you choose in the end. And I emphasize - this is extremely important.

When people are given menus with options to choose from, they rarely ask:

“What's not on the menu?”

“Why are these options offered to me, and not others?”

“I know what the developer wants to achieve?”

“This menu provides more information than I need - in fact, it distracts my attention?” (For example, many brands of toothpastes)

This is the menu of choice for the need “I have finished toothpaste”

For example, imagine that you sat up with your friends on Tuesday night and want to continue the conversation. You open Yelp to find recommendations and see a list of nearby bars. Your company is turning into a group of people staring at their phones comparing bars. Everyone is carefully studying photos and cocktails. Is all this still related to the original desire of the group (to find a place to sit)?

This does not mean that a bar is a bad choice. This means that Yelp replaces the question of the group with “where can we go to sit and talk?” On “in which bar are the best cocktails and interiors, based on the photos?”. And all this is achieved by forming menu items.

In addition, your company arrives in the illusion that the Yelp menu provides a complete list of possible options. While you are all staring at the screens of your phones in the park, live music is playing across the road, selling pancakes and hot coffee. And this option is not presented in the Yelp menu.

Yelp subtly shapes the need of the group “where can we go to continue the conversation?” In terms of cocktail photos

The more choice technology provides in almost every area of our life (information, events, places to relax, chat with friends, acquaintances, work), the more we begin to rely on the phone to provide the most extensive and useful selection menu for us. So?

The menu, which gives "the maximum expansion of our capabilities" differs from the menu, where we are offered to choose from specific options. But when we blindly rely on what is offered, these differences are very easy to overlook:

“Who is free to walk tonight?” Is formed from the list of those people who wrote to us last.

“What is happening in the world?” Is formed from a news feed.

“Who is lonely and goes on a date with me?” Is formed from the search results of Tinder, instead of finding the right person at some event, in the city or among friends.

The whole essence of the menu items in the UI. Your email client offers you ready-made solutions instead of asking “What value do you want to enter?” (Design by Tristan Harris)

When we wake up in the morning and turn over the phone, we see a list of notifications, which can be described as “morning awakening”. He is part of "everything that I missed yesterday."

Creating a menu of selectable items from the list, technology companies capture our perception of the choice itself and replace it. But the more closely we follow the options we are offered, the more we will notice when they do not really correspond to our true needs.

Trick number 2: Put a slot machine in a billion pockets

If you are an application, then how to hook people? Turn into a gaming machine.

The average user checks his smartphone 150 times a day. Why do we do this? And do we make 150 conscious choices?

How many times a day do you check your email?

One of the main reasons for the similarity of applications with slot machines is reinforcement .

If you want to maximize the degree of habituation, you need to associate a user action (for example, pressing a lever) with a variable reward. You pull the lever and immediately receive one of the awards, or nothing. And addiction is maximized when the reward is variable.

You ask, does it really work? Yes. Slot machines in the US make more money than baseball, movies, and amusement parks combined . According to Natasha Dow Shall, a professor at New York University, the author of the book “Dependence on Design,” compared to other types of gambling, people sit down on gambling machines 3-4 times faster.

And here it is, the sad truth: several billion people carry a slot machine in their pockets:

- When we pull the phone out of our pocket, we play to see which notifications we received.

- When we drag the screen down to refresh the email, we play to see which email we received.

- When we update the Instagram feed, we play to see which photo will appear after that.

- When we iterate through photos in a dating app like Tinder, we pull the slot machine lever in the hope of winning.

- When we go to the alert section to check what has come, we are playing again.

Applications and websites use reinforcements throughout the industry’s history, because it has a positive effect on business.

But in other cases, "slot machines" appear randomly. For example, there is no separate “Evil Corporation”, which is behind the invention of the e-mail service, the purpose of which would be to make it a gaming machine. No one makes a profit when millions of people check their email and find nothing new there. No Apple or Google designer wanted smartphones to work on the gaming machine principle. This happened by accident.

And now companies like Apple and Google are required to reverse this effect by transforming the “reinforcement” into something less exciting and more predictable, plus, with an improved design. For example, they could give people the opportunity to set a specific time during the day or week when they want to check their “slot machine” of applications and, accordingly, send notifications during this period.

Trick number 3: Fear of missing something important

Another way to capture the minds of millions is to cry out that "there is a 1% chance that you missed something important."

If “I” can convince you that “I” is a channel for obtaining important information, of a friendly or potentially sexual nature, then you cannot ignore me, unsubscribe, or delete my account because (hehe, I won ) you can miss something important:

- This forces us to “trade face” in dating apps, even if we do not plan to meet with anyone (what if I miss someone “hot” who will be interested in me?).

- This forces us to subscribe to a useless newsletter (what if I miss an ad?).

- It makes us keep “friends” of people with whom we have not spoken for ages (“what if I miss something important from them?”).

- It makes us use social media (“what if I miss some important news and don’t understand what my friends are talking about?”).

But if you take a closer look at these fears, you will see that it still does not save: we will always skip something important for each of the points as soon as we finish the user session.

Yes, we will miss something important on Facebook if we do not use it for 6 hours (for example, that an old friend is right now in my city).

There is something important that we will miss in Tinder (for example, the love of all life), without spending the 700th match in it.

There are extremely important calls that we will miss if we are not in touch 24/7.

But we cannot live from moment to moment, for fear of missing out on anything.

And it is amazing how quickly we understand the illusiveness of what is happening, overcoming this fear. If we do not have a day online, do not sign up for anything, or check for alerts, we won’t miss anything of great importance.

Because we do not miss what we do not see.

The thought “I'll miss something” is formed in your mind before you exit the application, unsubscribe or go offline - not after. Imagine that technology companies recognized the above and helped us to build our friendly and business relations in terms of “I had a good time” and not in anticipation and fear of missing something?

Rule number 4: Social approval

It's easiest to get

We are all vulnerable to social approval. The need to belong to a social group is one of the strongest motivators. But now our social self-assertion is in the hands of private companies.

When I receive a notice that my friend Mark has tagged me in a photo, I think he deliberately did this. But at the same time, I see how companies like Facebook are forcing people to do this.

A faceboom, instagram, or span can manipulate people's actions in terms of marking their friends' photos (for example, the one-click alert “Mark Tristan on this photo?”).

So, when Mark marks me in the pictures, in fact, he answers the question that Facebook asked him, without making any independent choices. This kind of design decision shows how Facebook manipulates millions of people for the sake of social approval.

Some people like to change the avatar on their page and Facebook knows - this is the moment of applying for the approval of the society: “what will my friends think about this photo?”. Facebook can rank these things higher in the news feed in order for as many of our friends as possible to rate or comment on a new avatar. And every time they do this, we again get sucked into all of the above.

Every person has an innate need for social approval, but adolescents are the most vulnerable group. That is why it is very important to understand how seriously designers have an impact on us when using this "vulnerability".

Trick number 5: Social reciprocity (Tooth per tooth)

You are helping me - and now I am in your debt.

You say: “thank you” - I will say “please”.

You send me a message - rude not to answer you.

You follow me - it’s rude not to fold back (especially for teenagers).

We are vulnerable to having to answer others. But, like social approval, technology companies manipulate our reciprocity with other people.

In some situations there is an accident. E-mail and chat messaging are the main “factories of social reciprocity,” but in other cases this weakness is purposefully exploited.

LinkedIn is the most obvious “exploiter”, because every time someone leaves us with a message, comments (assigned) for a skill or something else, we have to go back to linkedin.com and waste our time.

Like Facebook, LinkedIn uses the asymmetry of perception of what is happening. Every time you receive a request from someone, you think that the person sent it to you consciously, however, most likely, he just saw you on the list that the platform gave him. In other words, LinkedIn turns your unconscious impulses to reciprocate the offer to “accept friendship.” And they benefit directly from how much time is spent on it.

Imagine millions of people in the form of hens with severed heads, which only do that they run around, confirming applications for friends. And the company that has developed a similar model benefits from all this.

Welcome to social media.

Abusing your reciprocity with other people, LinkedIn, after approval of one application, gives you 4 more options for a “friend request”.

Imagine if technology companies were required to minimize social reciprocity. Or if there would be an “FDA for technology” that controlled when companies abused these biases?

Trick number 6: Bottomless bowls, infinite feeds, and autoplay

YouTube automatically plays the next video after the countdown

Another way to capture people's attention is to keep them “eating” even when they are no longer “hungry.”

How? Easy. Take a “user experience” that used to be finite and bounded, and turn it into an endless stream.

Professor Brian Wansink of Cornell University demonstrated this in an experiment. He showed that you can trick people into continuing to eat by giving them a bottomless bowl of soup that refills automatically as they eat. As a result, people consumed 73% more calories than those eating from normal bowls.

Technology companies use the same principle. News feeds are purposely designed to refill with content automatically and keep you scrolling. On top of that, they deliberately eliminate anything that might make you pause and leave.

This also explains why video and social media sites like Netflix, YouTube, or Facebook autoplay the next video when the previous one ends. They do not wait for you to make a conscious choice; they simply start the next video after a countdown. And this feature generates a huge share of their traffic.

Autoplay video on Facebook after countdown

Technology companies often claim that they are only “making it easier for users to see what they want to watch,” when in fact they are serving their own business interests. And you cannot blame them, because they all compete for a special currency called “time spent.” Now imagine if technology companies worked not only to increase the time spent on their products, but also to improve the quality of that time.

Trick number 7: Instant interruption vs. respectful delivery

Companies know that a message that interrupts a person immediately is more likely to get a response than one that waits quietly to be seen, such as an email.

Given this, Facebook Messenger, WhatsApp, WeChat, and Snapchat chose to design their apps to interrupt the user instantly and show the chat window right away, instead of helping users respect each other's attention.

In other words, interruption is good for business.

It is also in these companies' interest to heighten the sense of urgency and social reciprocity. For example, Facebook automatically tells the sender when the recipient has “seen” the message, instead of letting you keep that private. And when you know that the person on the other side knows you have seen the message, you feel even more obliged to answer.

By contrast, Apple treats users with more respect and lets them turn read receipts on or off. The problem is that maximizing interruptions in the name of business has created a tragedy on a global scale: a widespread loss of concentration and billions of unnecessary interruptions around the world every day. We must fix this problem, for example by introducing shared design standards for applications and services.

Trick number 8: Bundling your goals with their goals

Another way for an app to “grab” your attention is to make your personal reasons for visiting it inseparable from the company's business goals (for example, maximizing content consumption per session).

Grocery stores are a real-world example. The most common reasons people visit are to pick up medicine and dairy products. If stores were really laid out for shoppers' convenience, these two groups of goods would sit right at the entrance, not at the far end of the store, as is usually the case. In other words, the business makes what people need inseparable from what the business wants.

Technology companies design their sites on the same principle. For example, when you want to look up an event on Facebook, the app does not let you go there directly. You can reach the information only after “passing” through the news feed, and this is deliberate. Facebook wants to convert every reason you have for using it into more consumption of content and other products.

Trick number 9: Inconvenient choices

We are told that it is enough for a business simply to “make choices available.”

- "If something does not suit you, you can always use another product."

- “If you don’t like something, you can unsubscribe.”

- "If you are addicted to our app, you can always just uninstall it."

Naturally, companies want you to make the choices that suit them. So the choices they want you to make are easy, and the ones they don't are hard. Magic tricks work the same way: you make it easy for the audience to see what you want them to see, and hard to see what you want to hide.

For example, NYTimes.com lets you “freely choose” to cancel your digital subscription. But instead of simply providing an “unsubscribe” button, they send you an email with instructions for canceling by calling a phone number that is available only during certain working hours.

Instead of viewing the world in terms of whether a choice exists, we should look at how much effort each option requires. Imagine a world where every possible choice was labeled with its own “effort coefficient,” assigned by an independent body that rated how difficult each action is and set standards for that difficulty.

Trick number 10: Forecasting errors and the “foot in the door” strategy

Facebook offers us an easy choice to view photos. Would we still click this button if we knew the true cost of the click?

Apps also exploit people's inability to predict the consequences of their clicks.

People cannot intuitively estimate the true cost of a click they are invited to make. Salespeople use the “foot in the door” technique, starting with an innocuous request (“just one click to see who retweeted you”) and escalating from there (“why don't you stay here for a while?”). Almost everyone uses this trick.

Imagine if web browsers and smartphones, the gateways through which people make these choices every day, could predict the consequences of a click for their users, based on real data about its benefits and costs.

That is why I add an estimated reading time to my posts. When you show the “true cost” of a choice, people (your audience) begin to treat you with more respect.

Ideally, the Internet should be designed around forecasting costs and benefits, so that people can make informed choices by default, without any extra effort.

TripAdvisor uses the “foot in the door” strategy, showing a single-click action (“How many stars?”) while hiding three more questions from the user.

Conclusions and how we can fix it all

Are you upset that technology steals your time? So am I. I have listed only a few techniques, when in fact there are thousands. Imagine whole bookshelves, seminars, workshops, and trainings that teach entrepreneurs these techniques. Imagine hundreds of engineers whose job every day is to invent new ways to keep you online.

Absolute freedom requires a free mind, and we need technology that is on our side, helping us live, feel, think, and act freely.

We need smartphones, notifications, and web browsers to become an exoskeleton for our minds and interpersonal relationships, one that puts our values, not our impulses, first. People's time is valuable. And we need to protect it as we protect privacy and other digital rights.

Source: https://habr.com/ru/post/301786/