Storage Federation, or federating all of an enterprise's storage

Today, when data has long been vital to any business, the ability to move data freely between enterprise storage systems is more important than ever. The need for simple, flexible data movement can arise from various tasks, for example:

- optimizing capacity utilization on higher-performance arrays: data that no longer needs very fast processing should be moved from the all-flash array to a hard-disk array to free up expensive SSD capacity for other tasks;

- using the disk and compute resources of arrays more efficiently: if one array is short of some resource (capacity or performance) while another array (nearby or in a neighboring building) has plenty of it, moving part of the data from one array to the other solves the problem without spending money on an array upgrade; in effect, this is load balancing between multiple arrays;

- retiring an obsolete array and moving its data to a new array.

What matters here, of course, is not so much the ability to move data as how simple the process is: being able to move data in any direction and without stopping business processes (that is, transparently to servers and applications). It is also important that all this can be done without additional spending on special hardware or software.

HPE 3PAR Storage Federation lets you do all of the above. It combines multiple HPE 3PAR StoreServ arrays into a single logical system in order to increase disk utilization across the whole system and balance load between its components. Data is moved with a single mouse click. Other arrays (that is, arrays outside the HPE 3PAR StoreServ family) can also be included in the common system, with one restriction: in that case data can move in only one direction, from the non-StoreServ array into the StoreServ array.

Next, I will try to briefly describe how the federation of disk arrays works and what is required to configure it.

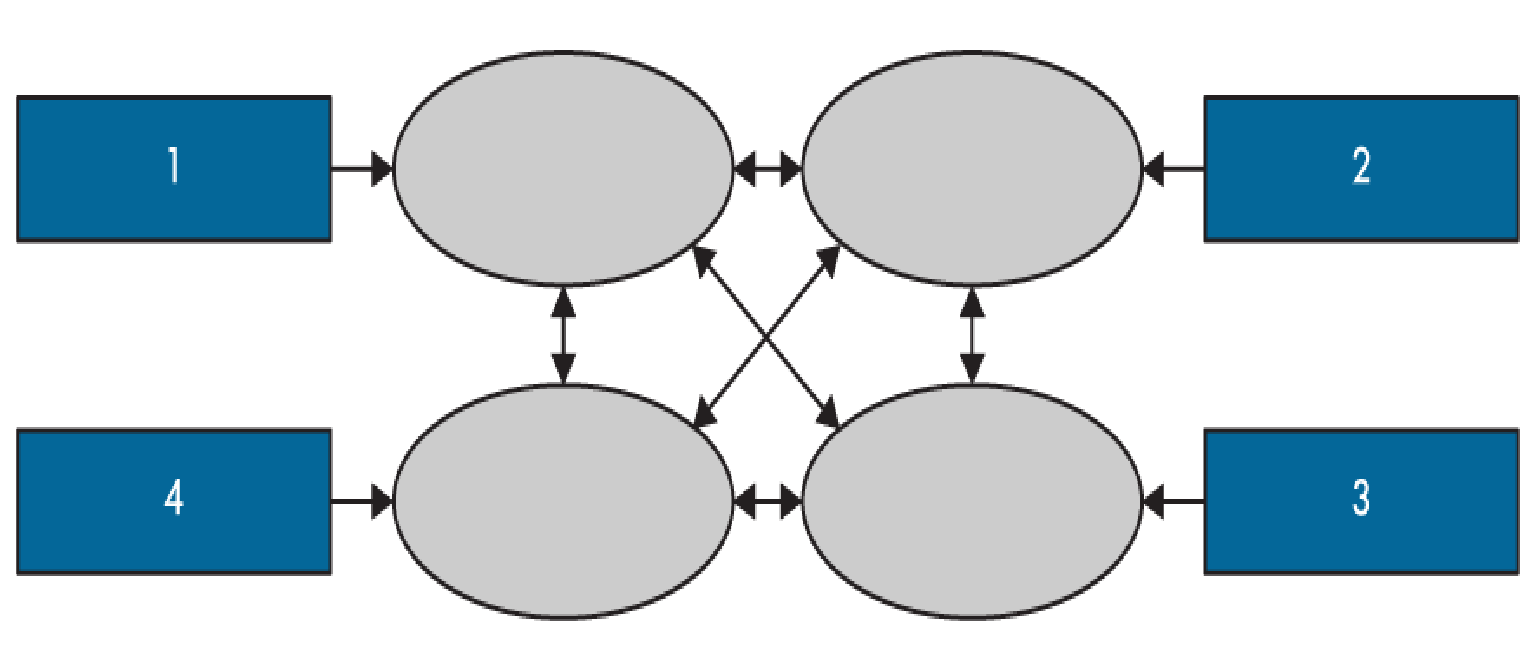

Topology

Today, up to four HPE 3PAR StoreServ arrays can be combined into a federation. They can be different models from both current and previous generations, say, 7400, 8450 and 20800. The federation lets you move data between any pair of arrays in both directions; for this, the microcode on the arrays must be at least 3.2.2 MU1. The arrays in the federation (hereinafter “federated” arrays) can also be connected to other arrays from which you need one-way migration (such arrays are called “migration sources”). One-way migration is supported from HPE 3PAR StoreServ arrays, from other HPE arrays (EVA / P6000 and P9500 / XP10000 / XP12000 / XP20000 / XP24000), and from third-party arrays: EMC CX4 / VNX / VMAX, HDS USP / USP-V / USP-VM / VSP, and IBM XIV. There can be up to six migration sources, but the total number of arrays, federated plus migration sources, cannot exceed eight. Two possible configurations are shown below. The arrows in the figures show the possible directions of data migration.

Fig. 1. Two federated arrays and six migration sources.

Fig. 2. Four federated arrays and four migration sources.
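
The limits above are easy to check mechanically. Below is a minimal sketch in Python, assuming only the three limits stated in the text (four federated arrays, six migration sources, eight arrays in total); the function name `check_topology` and the constant names are hypothetical and are not part of any HPE tool.

```python
# Limits as stated in the text; the names are made up for this sketch.
MAX_FEDERATED = 4
MAX_MIGRATION_SOURCES = 6
MAX_TOTAL_ARRAYS = 8


def check_topology(federated, migration_sources):
    """Return a list of violated limits; an empty list means the layout fits."""
    problems = []
    if federated > MAX_FEDERATED:
        problems.append("too many federated arrays")
    if migration_sources > MAX_MIGRATION_SOURCES:
        problems.append("too many migration sources")
    if federated + migration_sources > MAX_TOTAL_ARRAYS:
        problems.append("too many arrays in total")
    return problems


if __name__ == "__main__":
    # The two configurations from Fig. 1 and Fig. 2, plus one that is too large.
    for layout in [(2, 6), (4, 4), (4, 6)]:
        print(layout, check_topology(*layout) or "ok")
```

Both configurations from the figures pass, while four federated arrays with six migration sources fail on the total, even though each number is within its own limit.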

In the rest of this post I will discuss only federated arrays. I will cover data migration to HPE 3PAR StoreServ arrays from other arrays in later publications.

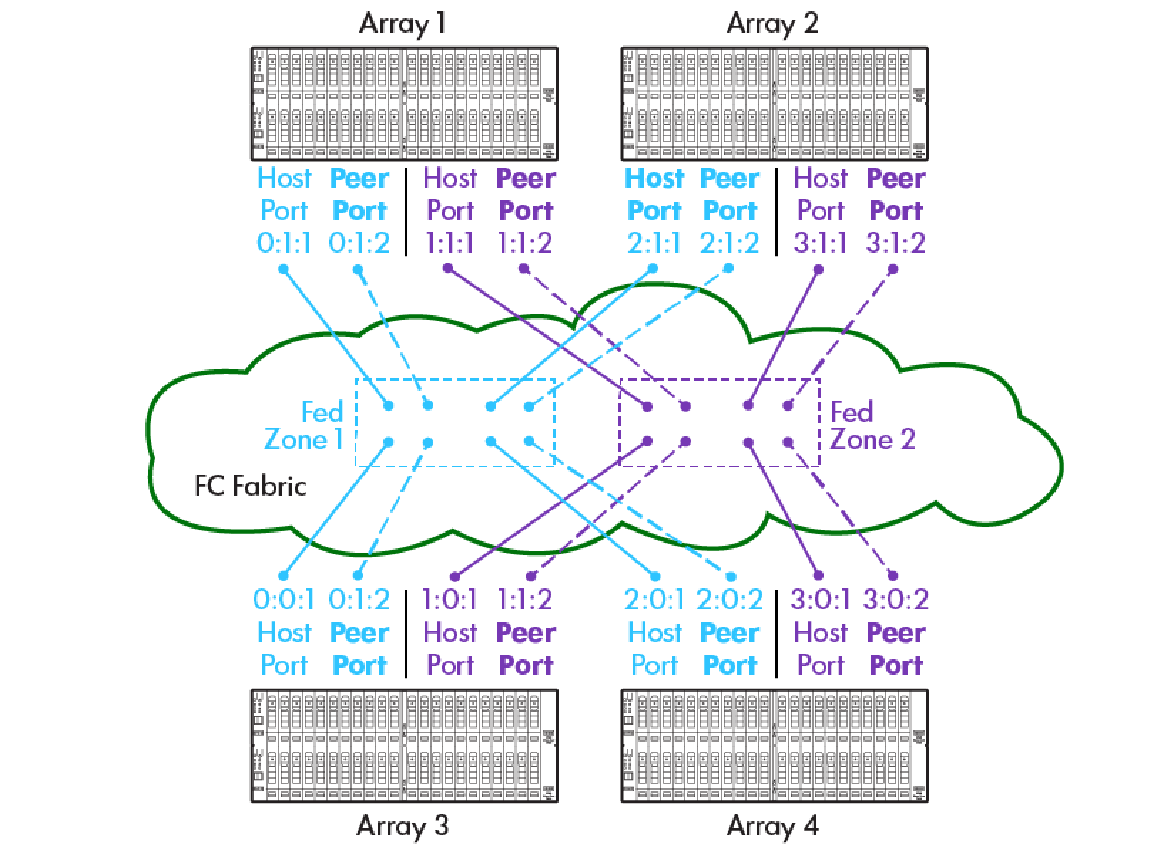

Ports and zones

On each federated array, you need to select two FC ports through which data will be migrated to this array; these are called peer ports. The ports through which data is migrated to the other array or arrays are called host ports. Host ports can be either dedicated or non-dedicated. The peer and host ports of the federated arrays must be zoned together. A simplified zoning scheme can be used in which only two zones cover all ports (see Fig. 3 below): each zone combines one peer port and one host port from each array.

Fig. 3. Zoning scheme for federated disk arrays.
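
To make the simplified scheme concrete, here is a small Python sketch that builds the two zones from a description of the arrays. It assumes each array contributes exactly two peer ports and two host ports (the text fixes the number of peer ports; two host ports per array is my assumption). The port labels and the function name `build_zones` are invented for illustration and do not correspond to real port names or to any switch CLI.

```python
def build_zones(arrays):
    """Build two zones, each holding one peer and one host port per array.

    `arrays` maps an array name to {"peer": [p1, p2], "host": [h1, h2]}.
    """
    zones = [[], []]
    for name, ports in arrays.items():
        peer, host = ports["peer"], ports["host"]
        if len(peer) != 2 or len(host) != 2:
            raise ValueError(f"{name}: expected 2 peer and 2 host ports")
        for i in range(2):
            # Zone i gets the i-th peer port and the i-th host port.
            zones[i].extend([peer[i], host[i]])
    return zones


if __name__ == "__main__":
    # Hypothetical port labels for two federated arrays.
    federation = {
        "array1": {"peer": ["a1-peer-1", "a1-peer-2"],
                   "host": ["a1-host-1", "a1-host-2"]},
        "array2": {"peer": ["a2-peer-1", "a2-peer-2"],
                   "host": ["a2-host-1", "a2-host-2"]},
    }
    for number, zone in enumerate(build_zones(federation), start=1):
        print(f"zone {number}: {zone}")
```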

Migration options

Three migration options are supported:

- Online migration is possible if the server operating system and multipath software support adding paths to volumes online. During online migration, the servers do not lose access to their volumes, and the array's processing of I/O requests from the servers is not interrupted.

There are two options for online migration:

- server-level migration: in this case, all volumes exported to a specific server (or group of servers) are migrated simultaneously.

- volume-level (volume group) migration: in this case, only some of the volumes exported to a specific server (or group of servers) are migrated simultaneously, while the source array continues to serve the server's remaining volumes. This option lets you redistribute the I/O load between multiple arrays. The server can simultaneously access all its volumes: those already migrated to another array, those in the process of migration, and those that are not to be migrated.

- Minimally Disruptive Migration. If online migration is not possible, you can perform the migration with minimal downtime. The downtime is the time required to reconfigure the server so that it sees the target array instead of the source array. During the migration, the server will have access to all its data. This migration option is possible only at the server level (see above).

- Offline migration. This migration option is used if you need to migrate non-presented volumes.

Which migration option is available depends on the operating system of the servers whose data needs to be moved. Online migration is possible, for example, for the following operating systems: Windows Server 2008/2012, Red Hat Enterprise Linux 5/6/7, SUSE Linux Enterprise 10/11/12, and VMware 5.x/6.0.
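
The choice between the three options can be summarized as a small decision function. This is only a sketch of the rules described above, assuming two yes/no inputs; the names `MigrationOption` and `choose_migration_option` are hypothetical, and a real decision should be based on the vendor's support matrix for the specific OS and multipath software.

```python
from enum import Enum


class MigrationOption(Enum):
    ONLINE = "online"
    MINIMALLY_DISRUPTIVE = "minimally disruptive"
    OFFLINE = "offline"


def choose_migration_option(volumes_presented, supports_online_path_add):
    """Pick a migration option following the rules described in the text."""
    if not volumes_presented:
        # Volumes that are not presented to any host are migrated offline.
        return MigrationOption.OFFLINE
    if supports_online_path_add:
        # The OS and multipath software can add paths to volumes online.
        return MigrationOption.ONLINE
    # Otherwise the server must be reconfigured, with a short downtime.
    return MigrationOption.MINIMALLY_DISRUPTIVE


if __name__ == "__main__":
    print(choose_migration_option(True, True).value)
    print(choose_migration_option(True, False).value)
    print(choose_migration_option(False, False).value)
```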

Migration process

The initial setup of Storage Federation comes down to a few simple steps performed in the StoreServ Management Console (SSMC) graphical interface:

- choose the arrays you want to federate;

- assign peer and host ports on these arrays and include them in zones (the zones on the switches must be created in advance).

Volume-level (volume group) migration and host-level migration (when all volumes presented to the host are migrated) are implemented slightly differently. The reason is that during volume-level migration the source array must continue to serve all the host's other volumes that are not being migrated. Volume-level migration requires the host to support ALUA (Asymmetric Logical Unit Access), the SCSI multipathing standard.

The migration process at the volume level is as follows:

- Select the volume group to migrate. Each source volume is then automatically exported (presented) to the target array, and the target array automatically exports these volumes to the host. The volume copies (on the target array) will have exactly the same WWNs as the corresponding source volumes.

- As a result, the host will see new access paths for each volume and will request the status of these new paths from the array (via the Report Target Port Groups (RTPG) SCSI command). The host will then see each volume through two groups of paths (Target Port Groups): the first group goes through the ports of the source array and the second through the ports of the target array. At this point the first group is in active/optimized mode and the second group is in standby mode.

- Next, the migration process starts, and the TPG modes change first: the first group of paths goes into standby mode and the second into active/optimized mode (see Fig. 4). After that, all I/O requests go to the target array. The target array then starts copying data from the source volume to the volume copy; this is done directly between the two arrays, without involving the hosts.

- During the migration, new data is written to the volume copy, and the target array also copies it to the source volume (so that no data is lost if something goes wrong). Reads are served locally (that is, from the volume copy) if the requested blocks have already been migrated. If the requested blocks have not yet been migrated, they are migrated out of turn. Some performance degradation during the migration is to be expected.

- After the migration of the volume (volume group) is completed, it is permanently located on the target array. The source volume can be deleted automatically upon successful completion of the migration or left on the source array, depending on the migration settings selected. If the source volume is not deleted automatically, the volumes are no longer mirrored once the migration is completed. During and after the migration, the volumes on the source array that did not take part in the migration remain available to the host without any restrictions or changes.
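
The read and write handling during migration, as described in the steps above, can be illustrated with a toy model. This is a simplified sketch and not how the array firmware is implemented: it assumes whole-block reads and writes, treats a volume as a Python list, and uses invented names (`VolumeMigration`, `copy_next`).

```python
class VolumeMigration:
    """Toy model of one volume being migrated from a source to a target."""

    def __init__(self, source_blocks):
        self.source = list(source_blocks)          # blocks on the source array
        self.target = [None] * len(self.source)    # volume copy on the target
        self.migrated = set()                      # block numbers already copied

    def _pull(self, block):
        # Copy one block from the source volume to the volume copy.
        self.target[block] = self.source[block]
        self.migrated.add(block)

    def read(self, block):
        # Blocks not yet migrated are migrated out of turn, then read locally.
        if block not in self.migrated:
            self._pull(block)
        return self.target[block]

    def write(self, block, data):
        # New data goes to the volume copy and is mirrored back to the source.
        self.target[block] = data
        self.source[block] = data
        self.migrated.add(block)

    def copy_next(self):
        # Background copy: migrate the next pending block, if there is one.
        for block in range(len(self.source)):
            if block not in self.migrated:
                self._pull(block)
                return True
        return False

    @property
    def done(self):
        return len(self.migrated) == len(self.source)


if __name__ == "__main__":
    migration = VolumeMigration(["a", "b", "c", "d"])
    migration.write(1, "B")        # host write during migration
    print(migration.read(3))       # host read of a block not yet migrated
    while migration.copy_next():   # background copy of the remaining blocks
        pass
    print(migration.done, migration.target, migration.source)
```

Because every write is mirrored back, the source volume stays identical to the copy for the whole migration, which is what makes it safe to fall back to the source if something goes wrong.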

Fig. 4. Status of access paths to the migrated volume (Vol-B) during data migration.
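
The path-state switch described above and shown in Fig. 4 can also be written down as a tiny model. The sketch below assumes just two target port groups, labeled "source" and "target", and only the two ALUA states mentioned in the text; the function names are hypothetical, and no real multipath driver API is involved.

```python
from enum import Enum


class PathState(Enum):
    ACTIVE_OPTIMIZED = "active/optimized"
    STANDBY = "standby"


def target_port_group_states(migration_started):
    """Return the state of each target port group for a migrating volume."""
    if migration_started:
        # Once migration starts, I/O is redirected to the target array.
        return {"source": PathState.STANDBY,
                "target": PathState.ACTIVE_OPTIMIZED}
    # Before migration starts, the new paths exist but are not yet used.
    return {"source": PathState.ACTIVE_OPTIMIZED,
            "target": PathState.STANDBY}


def array_serving_io(states):
    """Return the port group the host sends I/O to (the active/optimized one)."""
    active = [name for name, state in states.items()
              if state is PathState.ACTIVE_OPTIMIZED]
    if len(active) != 1:
        raise ValueError("expected exactly one active/optimized group")
    return active[0]


if __name__ == "__main__":
    for started in (False, True):
        states = target_port_group_states(started)
        print(started, array_serving_io(states))
```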

In conclusion, I want to add that a federation can also be used alongside disaster-tolerant and high-availability solutions. That is, you can migrate the volumes that a server cluster works with. You can also migrate volumes that take part in replication between HPE StoreServ arrays (in this case, the volumes on one array of the replication pair migrate to a third array while replication for these volumes is preserved; you can migrate either the source or the target volumes). Consistency groups are also supported during migration: if an application works with multiple volumes, those volumes should be migrated as a consistent volume group. For a consistent group, mirroring of volumes between the target array and the source array continues until all volumes in the group have been migrated to the target array.

Licenses

The license for Storage Federation is not called Storage Federation at all, but Peer Motion. If you need bidirectional migration, you must license all the arrays in the federation. If you need only unidirectional migration, licensing only the target arrays is enough.
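
One way to read this rule: an array needs a license if data will ever be migrated into it. The short Python sketch below applies that reading to a list of planned migration directions. The function name and the array names are made up for illustration, and actual licensing terms should be confirmed with HPE.

```python
def arrays_to_license(migrations):
    """Return the arrays that act as a migration target at least once.

    `migrations` is a list of (source_array, target_array) pairs.
    """
    return {target for _source, target in migrations}


if __name__ == "__main__":
    # Unidirectional: only the target array needs a license.
    print(sorted(arrays_to_license([("old-array", "new-array")])))
    # Bidirectional between two arrays: both act as targets.
    print(sorted(arrays_to_license([("array1", "array2"),
                                    ("array2", "array1")])))
```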

So, if you administer several HPE StoreServ arrays, you can start using Storage Federation right away. By "right away" I mean that you can use trial licenses to evaluate what Storage Federation can do.

Federate arrays, it's worth it!

Source: https://habr.com/ru/post/301472/
