Web scraping with Node.js

This is the first article in the series on creating and using scripts for web-scraping using Node.js.

This is the first article in the series on creating and using scripts for web-scraping using Node.js.

- Web scraping with Node.js

- Web scraping on Node.js and problem sites

- Node.js web scraping and bot protection

- Web scraping updated data with Node.js

The topic of web-scraping is attracting more and more interest, at least because it is an inexhaustible source of small, but convenient and interesting orders for freelancers. Naturally, more and more people are trying to figure out what it is. However, it is quite difficult to understand what web-scraping is by abstract examples from the documentation for the next library. It is much easier to understand this topic by observing the solution of the real problem step by step.

Usually, the task for web-scraping looks like this: there is data available only on web pages, and you need to pull it out from there and save it in some kind of digestible format. The final format is not important, as no one has canceled converters. For the most part, it’s about opening a browser, clicking on the links with a mouse, and copying the necessary data from the pages. Well, or do the same script.

The purpose of this article is to show the whole process of creating and using such a script, from setting the task to obtaining the final result. As an example, I’ll consider a real task, such as those that can often be found, for example, on freelance exchanges, well, and we’ll use Node.js as a tool for web-scraping.

Formulation of the problem

Suppose I want to get a list of all the articles and notes that I published on the website Ferra.ru. For each publication I want to get the title, link, date and size of the text. There is no convenient API for this site, so you have to grab the data from the pages.

Suppose I want to get a list of all the articles and notes that I published on the website Ferra.ru. For each publication I want to get the title, link, date and size of the text. There is no convenient API for this site, so you have to grab the data from the pages.

For many years, I did not bother to organize a separate section on the site, so that all my publications are mixed with the usual news. The only way I know how to highlight the publications I need is filtering by author. On the pages with a list of news, the author is not listed, so you have to check every news on the corresponding page. I remember that I wrote only in the “Science and Technology” section, so you can search not for all the news, but for one section only.

Here, approximately in this form, I usually receive tasks for web-scraping. Even in such tasks there are different surprises and pitfalls, but they are not immediately visible and you have to detect and settle them right in the process. Let's start:

Site analysis

We will need pages with news, links to which are collected in the Padjini list. All the necessary pages are available without authorization. Looking at the source page in the browser, you can make sure that all the data are contained directly in the HTML code. Quite a simple task (actually, because I chose it). Looks like we don’t have to mess with login, session storage, sending forms, tracking AJAX requests, parsing connected scripts and so on. There are cases when the analysis of the target site takes much more time than designing and writing a script, but not this time. Maybe in the following articles ...

Project preparation

I think it makes no sense to describe the creation of a project directory (and there is an empty index.js file and the simplest package.json file), the installation of Node.js and the npm package manager, and the installation and removal of modules via npm.

In real life, the development of a project is accompanied by a GIT repository, but this is beyond the scope of the article, so just keep in mind that each significant code change in real life will correspond to a separate commit.

Retrieving Pages

To get data from the HTML code of the page you need to get this code from the site. This can be done using the http client from the http module that is built into Node.js by default, however, to perform simple http requests it is more convenient to use different wrappers over http most popular of which is request, so let's try it.

The first step is to make sure that the request module receives from the site the same HTML code that comes to the browser. With most sites this will be the case, but sometimes there are sites that give the browser one thing, and a script with an http client another. Before, I first of all checked the landing pages with a GET request from curl , but once I came across a site that in curl and in the request script produced different http-responses, so now I immediately try to run the script. Something like this:

var request = require('request'); var URL = 'http://www.ferra.ru/ru/techlife/news/'; request(URL, function (err, res, body) { if (err) throw err; console.log(body); console.log(res.statusCode); }); Run the script. If the site is lying or with the connection problem, then an error will fall out, and if everything is good, then a long sheet of the original text of the page will fall right into the terminal window, and you can make sure that it is almost the same as in the browser. This is good, so we don’t need to set special cookies or http headers to get the page.

However, if you are not too lazy and squander text up to the Russian-language text, then you will notice that request incorrectly determines the encoding. Russian news headlines, for example, look like this:

4.7- iPhone 7

PC- DOOM 4

The problem with encodings is now not as common as at the dawn of the Internet, but still quite often (and on sites without an API, especially often). The request module has an encoding parameter, but it only supports encodings adopted in Node.js for converting a buffer into a string. Let me remind you, this is ascii , utf8 , utf16le (aka ucs2 ), base64 , binary and hex , while we need windows-1251 .

The most common solution for this problem is to set the encoding to null in the request so that it places the original buffer in the body , and use the iconv or iconv-lite module to convert it. For example, like this:

var request = require('request'); var iconv = require('iconv-lite'); var opt = { url: 'http://www.ferra.ru/ru/techlife/news/', encoding: null } request(opt, function (err, res, body) { if (err) throw err; console.log(iconv.decode(body, 'win1251')); console.log(res.statusCode); }); The disadvantage of this solution is that on every problem site you have to spend time trying to figure out the encoding. If this site is not the last, then it is worthwhile to find a more automated solution. If the coding is understood by the browser, then our script should also understand it. The path for real geeks is to find the request module on GitHub and help its developers implement coding support from iconv . Well, or make your own fork with blackjack and good support for encodings. The path for experienced practitioners is to look for an alternative to the request module.

I found the needle module in a similar situation, and was so pleased that I didn’t use the request anymore. With the default settings, needle determines the encoding in the same way as the browser does, and automatically recodes the text of the http response. And this is not the only thing where needle better than request .

Let's try to get our problem page using needle :

var needle = require('needle'); var URL = 'http://www.ferra.ru/ru/techlife/news/'; needle.get(URL, function(err, res){ if (err) throw err; console.log(res.body); console.log(res.statusCode); }); Now everything is great. To clear your conscience, you should try the same with a separate news page. There, too, everything will be fine.

Crawling

Now we need to get the page of each news, check the name of the author on it, and save the necessary data if it matches. Since we do not have a ready list of links to news pages, we will get it recursively following the Padjin list. Like search engines crawlers, only more sighting. Thus, we need our script to take the link, send it for processing, save some useful data (if any), and put new links (for news or the next pages of the list) for the same treatment.

At first it may seem that cracking is easier to carry out in several passes. For example, first recursively collect all the pages of the Pajini list, then get all the news pages from them, and then process each news item. This approach helps the newcomer to keep in mind the process of scraping, but in practice a single, single-level queue for requests of all types is, at a minimum, easier and faster to develop.

To create such a queue, you can use the queue function from the famous async module, but I prefer to use the tress module, which is backward compatible with async.queue , but much smaller, since it does not contain the other functions of the async module. A small module is good not because it takes up less space (this is nonsense), but because it is easier to finish it quickly if it is needed for particularly complex cracking.

The tress works like this:

var tress = require('tress'); var needle = require('needle'); var URL = 'http://www.ferra.ru/ru/techlife/news/'; var results = []; // `tress` var q = tress(function(url, callback){ // url needle.get(url, function(err, res){ if (err) throw err; // res.body // results.push // q.push callback(); // callback }); }); // , q.drain = function(){ require('fs').writeFileSync('./data.json', JSON.stringify(results, null, 4)); } // q.push(URL); It is worth noting that our function will each time perform an HTTP request, and while it is being executed, the script will be idle. So the script will work for quite a long time. To speed it up, you can pass tress second parameter the number of links that can be processed in parallel. At the same time, the script will continue to work in the same process and in the same thread, while parallelism will be provided by non-blocking I / O operations in Node.js.

Parsing

The code that we already have can be used as the basis for scraping. In fact, we created the simplest miniframe, which can be gradually modified every time we hit another complex website, and for simple websites (most of them), you can simply write a code snippet responsible for parsing. The meaning of this fragment will always be the same: at the entrance, the body of the http response, and at the exit, the completion of the results array and the queue of links. Tools for parsing the rest of the code should not affect.

Parsing gurus know that the most powerful and versatile way of parsing pages is regular expressions . They allow parsit pages with a very non-standard and extremely anti-semantic layout. In general, if the data can be accurately copied from the site without knowing its language, then they can be parsed with regulars.

However, most HTML pages are easily understood by DOM parsers, which are much easier and easier to read. Regulars should only be used if DOM parsers fail. In our case, the DOM parser is perfect. Currently, cheerio , the server version of the iconic jQuery, is leading the way among DOM parsers under Node.js.

( By the way, JQuery is used on Ferra.ru. This is a fairly reliable sign that cheerio will cope with such a site )

At first, it may seem more convenient to write a separate parser for each type of page (in our case there are two of them — lists and news). In fact, you can simply search the page for each type of data. If there is no necessary data on the page, then they simply will not be found. Sometimes you have to think about how to avoid confusion if different data looks the same on pages of different types, but I have never met a site where it would be difficult. But I have met many sites where different data types were randomly combined on the same pages, so it’s worth getting used to writing a single parser for all pages.

So, the lists of links to news are located inside the div element with the class b_rewiev . There are other links that we do not need, but the correct links are easy to distinguish, since only such links have a parent — the p element. The link to the next page of the page is located inside the span element with the bpr_next class, and it is there alone. There is no such item on the news pages and on the last page of the list. It is worth considering that the links in the Padzinator are relative, so they should not be forgotten to result in absolute ones. The name of the author is hidden in the depth of the div element with the class b_infopost . On the pages of the list there is no such element, so if the author matches, you can stupidly collect the news data.

Do not forget about broken links (spoiler: in the section that we scraped, there are one such links). Alternatively, you can check the response code for each request, but there are sites that give the page a broken link with code 200 (even if they write “404” on it). Another option is to look in the code of such a page for those elements that we are going to look for by the parser. In our case, there are no such elements on the broken link page, so the parser simply ignores such pages.

Add parsing to our code using cheerio :

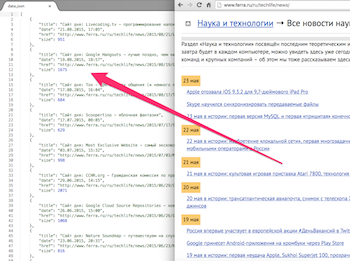

var tress = require('tress'); var needle = require('needle'); var cheerio = require('cheerio'); var resolve = require('url').resolve; var fs = require('fs'); var URL = 'http://www.ferra.ru/ru/techlife/news/'; var results = []; var q = tress(function(url, callback){ needle.get(url, function(err, res){ if (err) throw err; // DOM var $ = cheerio.load(res.body); // if($('.b_infopost').contents().eq(2).text().trim().slice(0, -1) === ' '){ results.push({ title: $('h1').text(), date: $('.b_infopost>.date').text(), href: url, size: $('.newsbody').text().length }); } // $('.b_rewiev p>a').each(function() { q.push($(this).attr('href')); }); // $('.bpr_next>a').each(function() { // q.push(resolve(URL, $(this).attr('href'))); }); callback(); }); }, 10); // 10 q.drain = function(){ fs.writeFileSync('./data.json', JSON.stringify(results, null, 4)); } q.push(URL); In principle, we received a script for web-scraping, which solves our problem (for those who want the code on gist ). However, I would not give such a script to the customer. Even with parallel requests, this script runs for a long time, which means that at least it needs to add an indication of the execution process. Also now, even with a brief interruption of the connection, the script will fall without saving intermediate results, so you need to do so either that the script retains the intermediate results before the fall, or that it does not fall, but pauses. I would also add the possibility of forcibly terminating the script, and then continue from the same place. This is overkill, by and large, but such “cherries on the cake” greatly strengthen the relationship with customers.

However, if the customer asked to scrape the data once and simply send the file with the results, then nothing can be done. Everything works like this (23 minutes in 10 streams, 1005 publications and one broken link found). If you completely insolent, it would be possible not to make a recursive passage through Padzinator, but to generate links to list pages using a template during the period when I worked at Ferra.ru. Then the script would not have worked so long. At first, this is annoying, but the choice of such solutions is also an important part of the web scraping task.

Conclusion

In principle, knowing how to write such scrippers can take orders on freelancing exchanges and live well. However, there are a couple of problems. Firstly, many customers do not want final data, but a script that they can use without any problems (and they have very specific requirements). Secondly, on the websites there are difficulties that are found only when the order has already been taken and half of the work has already been done, and you have to either lose money and reputation, or perform mental feats.

In the short term, I am planning articles about more complex cases (sessions, AJAX, glitches on the site, and so on) and about bringing web scraper scripts to the presentation. Questions and wishes are welcome.

')

Source: https://habr.com/ru/post/301426/

All Articles