What to do if your site falls under a Google sanction

Filters are an evergreen and often painful topic for optimizers. The lion's share of the articles, recommendations and other SEO know-how on the Web is devoted to the art of building a proper relationship with search engines. Those who are luckier write and read about how to avoid sanctions; those who are less lucky, about how to get out from under them with minimal losses. This article, as you have already guessed, is not one of those that tell success stories or talk about precautions. I would like to share my own unsuccessful experience with search engines: to describe how the situation looks from the inside, what questions and doubts arise and, of course, what steps it makes sense to take. In a word, I invite everyone to learn from our mistakes!

I do not think there is any need to describe in detail which filters Google has and what they want from us - the information is fairly basic and publicly available. For the sake of order, though, I will briefly describe the ones that come up regularly in this story:

- Panda - evaluates the content of the site in terms of quality (text saturation, uniqueness) and usefulness to the user;

- Penguin - monitors external links and weeds out sites involved in various kinds of commercial and non-commercial link schemes;

- Hummingbird - compares the semantic core of the site with its actual content and reveals inconsistencies.

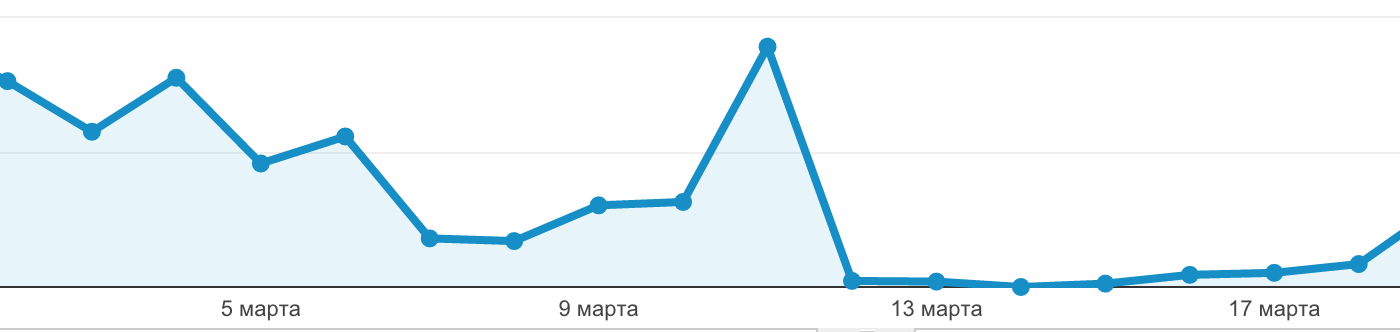

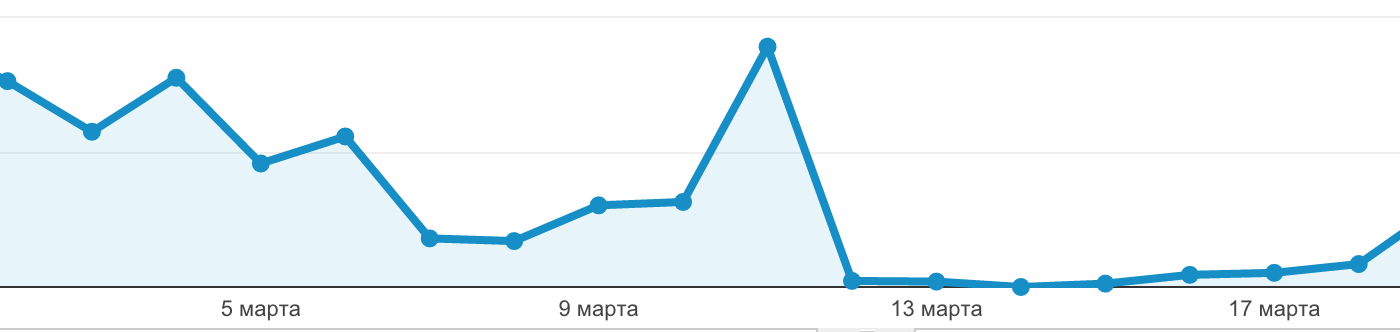

Now that we have refreshed our memory, let us begin the story. It all started with a sharp, inexplicable drop in our metrics in mid-March. As if on purpose, we had reached our peak traffic right before the sanctions hit, so the graph turned out to be particularly impressive.

The site we were running was very young at the time, and its pages had only recently started to be indexed, so we had plenty of theories, each more pessimistic than the last. First of all, of course, we went through every technical issue thoroughly: the analytics counter codes were checked and every page of the site was tested. Having made sure that everything worked as it should, we reluctantly began to turn our thoughts to sanctions. One thing confused me that is rarely mentioned when people talk about filters: we had not received any notification by mail, and we were not entirely sure what conclusion to draw from that. The obvious one was that the site had not fallen into the hands of Penguin, whose "letters of happiness" (penalty notifications) are widely known. But about the rest of Google’s fauna it was hard to say anything definite.

Then, to clarify the situation, we delved into the analysis of traffic sources.

The clinical picture turned out to be textbook: visits from search queries fell to almost zero, while “external” referral traffic was barely affected. What came as a surprise to us was the simultaneous fall in direct visits. It seems obvious that traffic sources are interrelated, but we did not expect to see the effect over such a short period. In any case, the conclusion suggested itself: we really had been “sunk” for violations.
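The comparison described above can be sketched in a few lines of Python. This is only an illustration: the function name `relative_change` is hypothetical, and the visit counts are invented for the example, not our real figures.

```python
def relative_change(before, after):
    """Return the change in visits per traffic source as a fraction of the 'before' value."""
    changes = {}
    for source, old in before.items():
        new = after.get(source, 0)
        # A source with no visits beforehand has no meaningful relative change.
        changes[source] = (new - old) / old if old else None
    return changes


# Invented weekly visit counts, for illustration only.
before = {"search": 1000, "referral": 200, "direct": 300}
after = {"search": 50, "referral": 190, "direct": 150}

changes = relative_change(before, after)
for source, change in changes.items():
    label = "n/a" if change is None else f"{change:+.0%}"
    print(f"{source}: {label}")
```

A collapse in the search channel while referral traffic stays roughly flat is the pattern that pointed us toward a filter rather than a technical fault.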

There is little joy in this, but the situation does have its bright sides: for example, an incentive to examine your own site in detail and critically for compliance with the generally accepted criteria and to identify risk areas. To find out which of the silent filters had actually dealt with us and on what grounds, we had a whole investigation ahead of us. We ruled out Hummingbird after careful reflection: the keywords, even under the most captious scrutiny, fully matched the site's content. Panda seemed the most likely option, yet we could vouch for the uniqueness of our texts, and our perhaps insufficiently regular updates still did not seem a weighty enough reason for such punitive measures.

The reason, as always, was in the details. A few days earlier, the design of the site's main page had been changed; the existing text looked bad in the new design, and it was decided to remove it temporarily. However, through an oversight, the text was at first hidden rather than deleted. We found and fixed the error within literally a quarter of an hour, but that was enough for the vigilant robot. Noticing the discrepancy between the content “for the user” and “for the search engine”, Panda decided it had caught us in deception and duplicity, and the rest is clear.
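A check of the following kind could have caught the hidden text before the robot did. This is a minimal sketch, not the tool we used: the class name `HiddenTextFinder` is hypothetical, it relies only on Python's standard `html.parser`, and it assumes the text is hidden with an inline `style` or the `hidden` attribute. Text hidden through CSS classes or scripts, and markup with unclosed tags, are beyond what it handles.

```python
from html.parser import HTMLParser
import re

# Inline styles that hide an element from the visitor.
HIDDEN_STYLE = re.compile(r"display\s*:\s*none|visibility\s*:\s*hidden", re.I)
# Elements that never have a closing tag and so must not be pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    """Collect text that sits inside elements hidden via inline style or the hidden attribute."""

    def __init__(self):
        super().__init__()
        self.stack = []        # one flag per open element: is it (or an ancestor) hidden?
        self.hidden_text = []  # text fragments found inside hidden elements

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        attrs = dict(attrs)
        hidden_here = "hidden" in attrs or bool(HIDDEN_STYLE.search(attrs.get("style") or ""))
        inherited = bool(self.stack) and self.stack[-1]
        self.stack.append(hidden_here or inherited)

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.stack:
            self.stack.pop()

    def handle_data(self, data):
        if self.stack and self.stack[-1] and data.strip():
            self.hidden_text.append(data.strip())


# Invented page fragment, for illustration only.
page = '<div><h1>Welcome</h1><p style="display: none">Old promo text</p><p>Visible</p></div>'
finder = HiddenTextFinder()
finder.feed(page)
print(finder.hidden_text)
```

Running something like this against the main page after every design change is cheap, and anything it reports deserves a look before the next crawl.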

This was the second important lesson we learned from this saga: an SEO optimizer is a bit like a sapper, and even the smallest and silliest mistake in our profession can have devastating consequences.

Be that as it may, the life of the site went on, and we had to return it to its previous positions somehow. To begin with, we focused on what had let us down: the long-suffering text on the main page. We calculated the amount of text that Google would like and that would not hurt the site's usability (remember the conflict with the design?), picked entirely new keywords and, finally, rewrote everything from scratch based on the new semantic core. With that, the work on our mistakes was successfully completed, but apart from a clear conscience it did not give us much.

Above, I said that falling under a filter stimulates reflection, but in fact it goes much further: the reflection has to turn into global self-improvement. A pinpoint fix of any single violation achieves nothing, even if, as in our case, you are confident that the problem lies precisely there. Sanctions throw the site back to the starting position and make it the object of particularly close attention from the search filters. So, to show that you really are using your second chance to the fullest, you will have to be at your very best. That is, you must rule out the slightest grounds for quibbling, at least at first.

Our site was, on the whole, clean: the content had been created from the very beginning with an eye to Google's rules, and we had never been keen on buying links or other risky ventures. At first glance, it was not clear what there was to fix. But over time (and Google, whatever its reasons, gave us plenty of time) it turned out that the longer you look, the more shortcomings you find. Besides reworking the problem text, over the next couple of months we also:

- deleted all empty pages;

- worked on internal linking;

- checked all the texts for uniqueness and found out that some of them had been copied by other sites;

- finalized the design;

- replaced some of the old keywords that had lost their positions.
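The uniqueness check from the list above can be approximated with word shingles. This is a rough sketch under stated assumptions, not the service we actually used: the function names, the shingle size of three words and the sample sentences are all illustrative.

```python
def shingles(text, size=3):
    """Split text into the set of overlapping word sequences of the given length."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a, text_b, size=3):
    """Jaccard similarity of two texts' shingle sets: 0.0 means no overlap, 1.0 means identical sets."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0  # texts shorter than one shingle cannot be compared this way
    return len(a & b) / len(a | b)


# Invented sample texts, for illustration only.
ours = "we sell handmade wooden toys for children of all ages"
copied = "we sell handmade wooden toys for children of all ages"
unrelated = "search engines penalize pages that hide text from visitors"

print(similarity(ours, copied))
print(similarity(ours, unrelated))
```

A high score against a page on another site suggests that one text was copied from the other; which of the two is the original still has to be established by hand.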

I don’t know whether any of this changed Google’s attitude radically, but I want to believe that, taken together, these measures sped things up. Unlike the fall, the recovery was very, very gradual, but the metrics began to grow steadily. Some sources advise asking Google directly for reconsideration; in my opinion, that is extra hassle without much effect. Judging by how quickly it reacted, the robot visits even penalized pages fairly often, so be patient and focus on polishing the site until it shines. The main danger at this stage is rushing things. There is your third lesson.

We are approaching the happy ending. In early August, that is, some 4-5 months after the disaster, the metrics returned to their previous values. That time frame is quite standard; you will see it in almost any article on the subject. For all our efforts, we did not manage to rehabilitate ourselves ahead of schedule, so I would advise you to prepare for a long haul right away.

All in all, I can say that the experience was a negative one for our team, but very valuable in the long run. While working on our mistakes, we learned to look at the site through the eyes of a search engine, picked up some interesting details about the rules and developed the habit of not panicking in emergencies. I hope our observations will be useful to readers purely as a theoretical guide, but even if you are unlucky enough to fall under a sanction, do not despair and try to take the situation philosophically. After all, as the saying goes, one beaten man is worth two unbeaten ones.

Source: https://habr.com/ru/post/293734/