How to conduct A/B testing for contextual advertising?

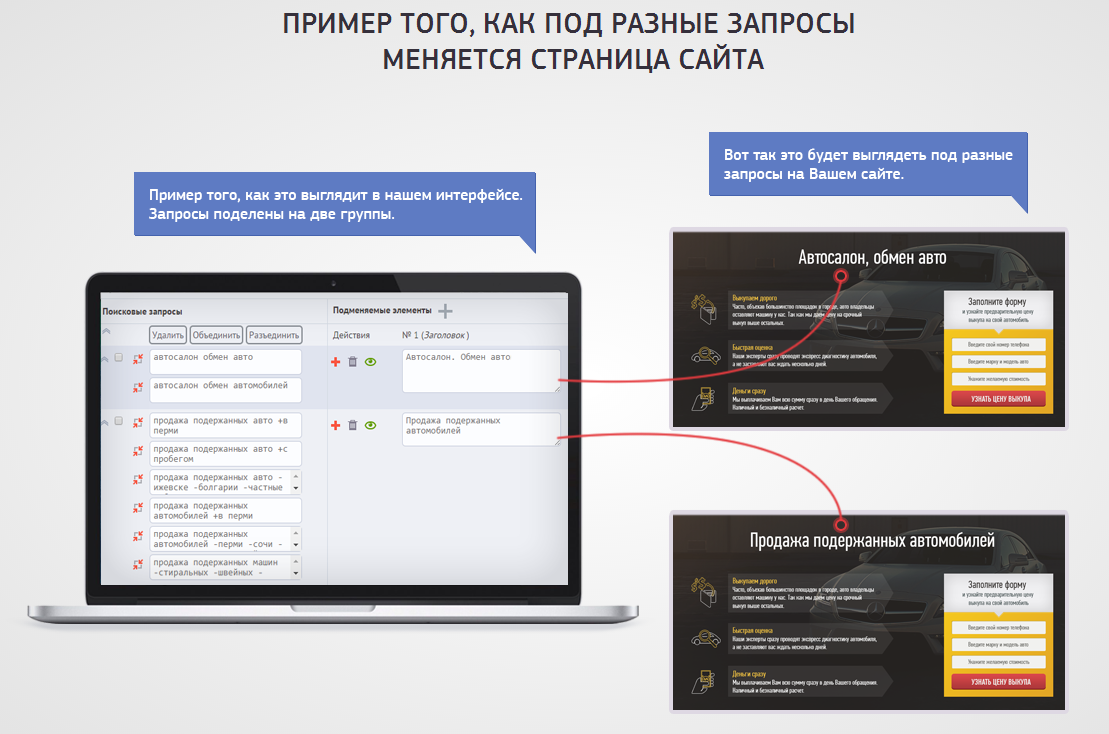

As we mentioned in our introductory post, one of the most effective ways to increase conversion in contextual advertising is to personalize the site's content to the needs and interests of the user. By tailoring the content (headlines and body text) to the search query that brought a potential client to the site, you get so-called “multilanders”.

As a result, the visitor sees a page that reads naturally to a human (rather than a crude, mechanical insertion of the search query into the title) and is more likely to become a client.

In the previous post, we explained how this approach to increasing conversion differs from product recommendations, and covered the segmentation of search queries and the corresponding analytics. Today we take a closer look at dynamic A/B testing in Yagla.

Having worked with hundreds of contextual advertising campaigns, we have found that when a landing page's headline is hard to notice, the chance of losing the visitor increases. The user instinctively turns to other, more noticeable elements (a product description, the logo, etc.) and has to spend time working out how relevant the page is to their query; the longer this takes, the more likely you are to lose a potential client. That is why landing pages whose first screen carries a large, prominent headline (as in the screenshot above) perform best.

Such a headline is the first element of the landing page the visitor sees, and it lets them quickly judge how the page relates to their current need. Our system lets you work with dynamic headlines: you segment search queries and manually configure the corresponding headline substitutions, based on your understanding of your target audience.
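To make query-segment substitution concrete, here is a minimal Python sketch. It assumes the search query arrives in a `utm_term` URL parameter and that each segment is defined by a simple keyword set; the segment rules, headlines and function names are hypothetical and do not reflect Yagla's actual configuration or matching logic.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical fallback: the headline shown when no segment matches the query.
DEFAULT_HEADLINE = "Plastic windows to order"

# Hypothetical segments: (keywords that identify the segment, headline to substitute).
# Order matters: the first segment whose keywords overlap the query wins.
SEGMENTS = [
    ({"cheap", "inexpensive", "price"}, "Affordable plastic windows direct from the factory"),
    ({"balcony", "loggia"}, "Plastic windows for balconies and loggias"),
]


def search_query(url: str, param: str = "utm_term") -> str:
    """Return the lower-cased search query carried in the landing URL, or ''."""
    values = parse_qs(urlparse(url).query).get(param, [])
    return values[0].lower() if values else ""


def headline_for(url: str) -> str:
    """Pick the substituted headline for the query's segment, else the default."""
    words = set(search_query(url).split())
    for keywords, headline in SEGMENTS:
        if words & keywords:
            return headline
    return DEFAULT_HEADLINE


if __name__ == "__main__":
    print(headline_for("https://example.com/?utm_term=cheap+plastic+windows"))
```

Matching on whole words keeps the substitution readable: the visitor gets a hand-written headline for their segment instead of the raw query pasted into the title.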

How to determine the effectiveness of substitutions?

Of course, A/B testing itself is nothing new: a control version is compared with one or more test variants that contain changes relative to the original. Such tests can safely be run with services like Optimizely or abtest.ru, but then you need, roughly speaking, a separate copy of each landing page.
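Comparing a control with a test variant is usually backed by a significance test. A common choice for conversion rates is the two-proportion z-test; the sketch below uses only the Python standard library and is not necessarily what Optimizely, abtest.ru or Yagla use internally. The function name and the example numbers are illustrative.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare the conversion rate of variant B with control A.

    Uses the pooled-proportion normal approximation and returns
    (z, two_sided_p_value). A positive z means B converts better than A.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Nobody (or everybody) converted in both groups: no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Illustrative numbers only: 5% vs 7% conversion on 2,000 visitors each.
    z, p = two_proportion_z_test(100, 2000, 140, 2000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

The normal approximation is reasonable once each group has a few dozen conversions; for very small samples an exact test is the safer choice.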

Even for a medium-sized contextual campaign, such “duplicates” can run to dozens of test landing pages. On top of that, you have to configure each substitution option manually, collect statistics for each campaign separately, compare the results and decide which substitution options to keep.

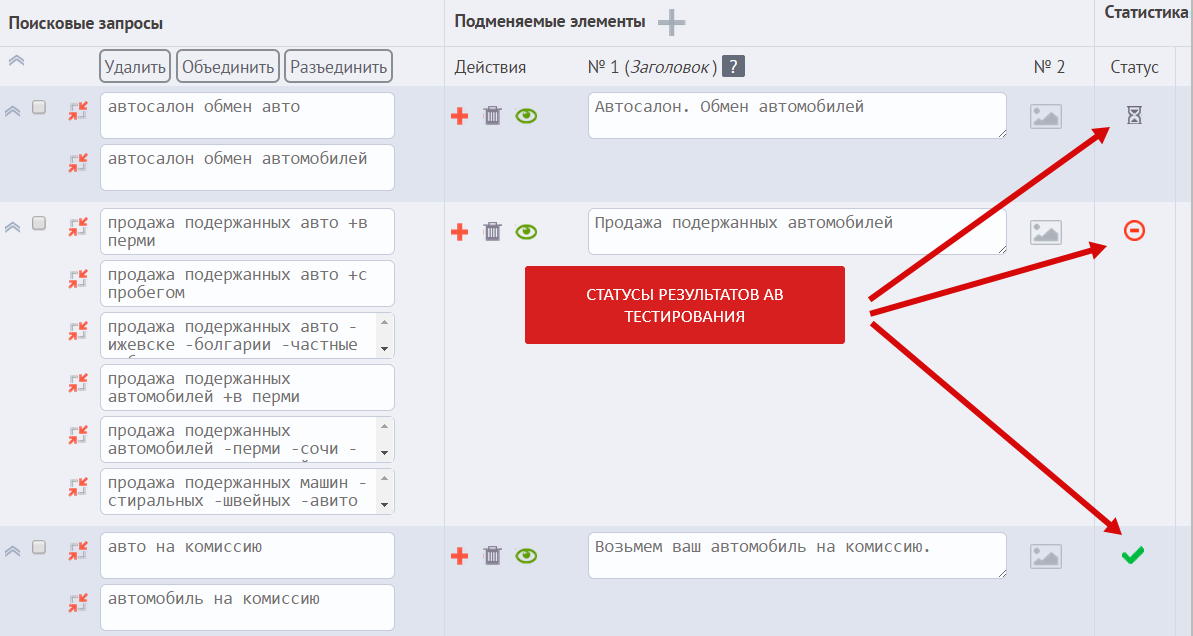

We solve this problem with automatic A/B tests. The system runs dozens, hundreds or even thousands of tests for different groups of search queries. Yagla automatically identifies the substitutions that performed well compared with the original version, assigns them statuses and disables the ineffective variants.
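Yagla's actual decision rules are not described here, so the following is only a minimal Python sketch of how an automatic test could assign statuses and switch off weak variants. The `Variant` class, the status names, the 1,000-visitor minimum and the Bonferroni-corrected two-proportion z-test are all illustrative assumptions, not the system's real logic.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    headline: str
    visitors: int
    conversions: int
    status: str = "testing"  # hypothetical statuses: testing / winner / loser
    enabled: bool = True


def _z_and_p(control: Variant, v: Variant) -> tuple[float, float]:
    """Two-proportion z-test of variant v against the control (pooled normal approximation)."""
    n_a, n_b = control.visitors, v.visitors
    pooled = (control.conversions + v.conversions) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (v.conversions / n_b - control.conversions / n_a) / se
    return z, math.erfc(abs(z) / math.sqrt(2))


def update_statuses(control: Variant, variants: list[Variant],
                    alpha: float = 0.05, min_visitors: int = 1000) -> None:
    """Mark each test headline against the original and disable clear losers.

    The significance level is split across variants (Bonferroni correction),
    because testing many headlines at once inflates the chance of a false winner.
    """
    threshold = alpha / max(len(variants), 1)
    for v in variants:
        if min(control.visitors, v.visitors) < min_visitors:
            v.status = "testing"  # not enough traffic yet to decide
            continue
        z, p = _z_and_p(control, v)
        if p < threshold and z > 0:
            v.status = "winner"
        elif p < threshold and z < 0:
            v.status, v.enabled = "loser", False  # stop showing this headline
        else:
            v.status = "testing"


if __name__ == "__main__":
    original = Variant("Plastic windows", 2000, 100)
    tests = [Variant("A", 2000, 140), Variant("B", 2000, 60),
             Variant("C", 2000, 105), Variant("D", 300, 30)]
    update_statuses(original, tests)
    for t in tests:
        print(t.headline, t.status, t.enabled)
```

Splitting α across variants is deliberately conservative: the more headlines tested at once, the more traffic each one needs before a status can be assigned.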

All results are summarized in tables, so the system is easy to review and control, and with substantial traffic you can test as many as 10 headlines for a single query group. To use the system, you only need to add one line of code to every page of the site:

```html
<script src='//st.yagla.ru/js/ycjs'></script>
```

Important note: during A/B testing, our system never shows the client the original version of the page only to replace it a moment later with the test version.

In the next article we will discuss the specifics of testing homogeneous (and heterogeneous) traffic, which can significantly affect the accuracy of decisions made when testing your campaign's effectiveness.

Source: https://habr.com/ru/post/289156/

All Articles