Outputting an image from the OV7670 camera to a VGA monitor using an FPGA

Foreword

Having read many fascinating articles about interesting FPGA projects, such as Tetris, a radio transmitter and others, I also set out to make something for the soul. For this purpose, I bought an OV7670 camera and a Terasic DE-1 development board with an Altera Cyclone II chip. I set myself the following task: display the image from the camera on a VGA monitor. To justify the use of an FPGA, I am going to do it at the maximum speed the camera supports. Some background in electronics will make this work easier to follow: knowledge of the VGA and I2C interfaces, an idea of how SDRAM works, and so on.

Introduction

This article is not a study but rather a report on the work done, in which I tried to show the main idea and what I consider the most interesting and difficult parts. In terms of complexity, this project is the next step after “blinking an LED,” but it has huge potential for expansion. The project intentionally does not use ready-made IP cores or standard interfaces, since it was planned from the start as hand-written. This also saves a little in resources and speed. I hope this article will be of interest to readers, and that the desire to see yourself on the screen through a "self-made camera" will lead you to study FPGAs.

Disclaimer

In some places the architecture and syntax of the code may not be optimal. This is because I worked on the project in my spare time, and there were sometimes breaks of several months between sessions: old ideas were forgotten, and new ones appeared. For example, the central state machine was greatly simplified: of the original monster, only the name “global” remains. I refactored the code before publication, but if you find any flaws, please point them out in the comments.

Problems and solutions

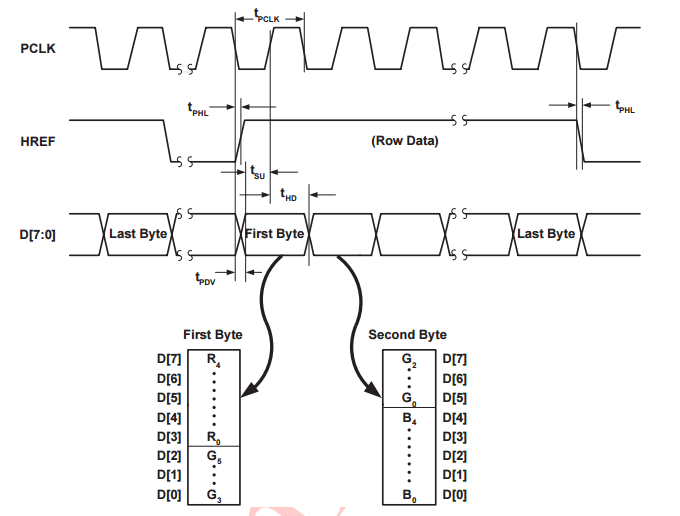

To understand what awaits us, let's take a look at the hardware and assess the problems we will face. Camera OV7670. The camera can produce a 640x480 image at 30 frames per second in RGB565 format. To operate, it needs a 24 MHz clock. The camera sends data to the user over an 8-bit bus, together with the VSYNC and HSYNC synchronization strobes. The camera's timing diagrams are shown in Figure 1.

Fig. 1

Color information for one pixel is transmitted over 2 clock cycles, one byte per cycle. The packing of the data into bytes is shown in Figure 2.

Fig. 2

VGA monitor. VGA is an analog interface, so digital data cannot be fed to its input directly. However, the DE-1 has 4-bit DACs on board, and we will use them to convert the digital signal into an analog one. VGA at 640x480 has a refresh rate of 60 frames per second. Data must be presented to the DACs at 25.175 MHz, and the VSYNC and HSYNC synchronization strobes must be generated as well. The VGA timings can be found here.
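To see how the 25.175 MHz pixel clock relates to the 60 Hz refresh rate, here is a small sketch. The porch and sync widths are the commonly published values for 640x480 at 60 Hz; they are an assumption here and are not taken from the project sources.

```python
# Commonly published 640x480 @ 60 Hz timing (assumed, not from the project):
# horizontal values are in pixel clocks, vertical values are in lines.
H_VISIBLE, H_FRONT, H_SYNC, H_BACK = 640, 16, 96, 48
V_VISIBLE, V_FRONT, V_SYNC, V_BACK = 480, 10, 2, 33
PIXEL_CLOCK_HZ = 25_175_000


def totals():
    """Return (pixel clocks per line, lines per frame) including blanking."""
    h_total = H_VISIBLE + H_FRONT + H_SYNC + H_BACK
    v_total = V_VISIBLE + V_FRONT + V_SYNC + V_BACK
    return h_total, v_total


def refresh_rate_hz():
    """Frames per second = pixel clock / (clocks per line * lines per frame)."""
    h_total, v_total = totals()
    return PIXEL_CLOCK_HZ / (h_total * v_total)
```

With these numbers a frame takes 800 x 525 pixel clocks, which gives a refresh rate just under 60 Hz.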

It becomes clear that the rate at which data arrives from the camera and the rate at which it is output to the monitor are different, which rules out a direct connection. The way out is to use a frame buffer. We allocate two equal areas in memory: the current frame from the camera is written into one, while the previous frame is read out of the other; once the frame being written is complete, the buffers are swapped. Storing one frame requires 640 * 480 * 16 = 4.915 * 10 ^ 6 bits, which is much more than the on-chip memory available in the Cyclone II. Therefore, we will store the frames in SDRAM, which is a separate chip on the DE-1 board. This lets us build the frame buffer needed to solve the technical problem and gives us an opportunity to practice writing an SDRAM controller.
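The sizing argument can be checked with a few lines of arithmetic. In this sketch the on-chip figure (239,616 memory bits for the Cyclone II EP2C20) and the 8 MB SDRAM size are assumptions taken from the usual DE-1 specifications, not from the project sources.

```python
WIDTH, HEIGHT, BITS_PER_PIXEL = 640, 480, 16

# Assumptions: typical DE-1 figures, not stated in the article.
ON_CHIP_BITS = 239_616            # M4K memory bits in an EP2C20
SDRAM_BITS = 8 * 1024 * 1024 * 8  # 8 MB external SDRAM


def frame_bits():
    """Bits needed to store one full frame."""
    return WIDTH * HEIGHT * BITS_PER_PIXEL


def frames_that_fit(memory_bits):
    """How many whole frames fit into a memory of the given size."""
    return memory_bits // frame_bits()
```

Under these assumptions not even one frame fits on chip, while the SDRAM easily holds the two frames needed for double buffering.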

The next problem arises from the solution to the previous one. When using SDRAM in our project, two important points need to be taken into account: first, the memory runs at a high frequency (120 MHz in our design), which gives us a new problem: transferring data from the camera's clock domain to the SDRAM clock domain; second, to achieve maximum performance, SDRAM should be written in whole transactions called bursts. A FIFO built in on-chip FPGA memory is the best way to solve both problems. The basic idea is this: the camera fills the FIFO at a low frequency, after which the SDRAM controller reads the data at a high frequency and immediately writes it to memory in one transaction.

Data output to the monitor is organized on the same principle. Data from SDRAM is written to a FIFO and then read out at 25 MHz to feed the DACs. Once the FIFO has been emptied, the operation repeats.

The smallest problem is that the camera's out-of-the-box settings do not suit us and need to be changed. Most importantly, the camera outputs data in YUV422 format by default, and this needs to be changed to RGB444. To access the internal registers of the OV7670, we will need to write an I2C transmitter module.

Now we can say which modules we will have to implement, and what tasks they will solve.

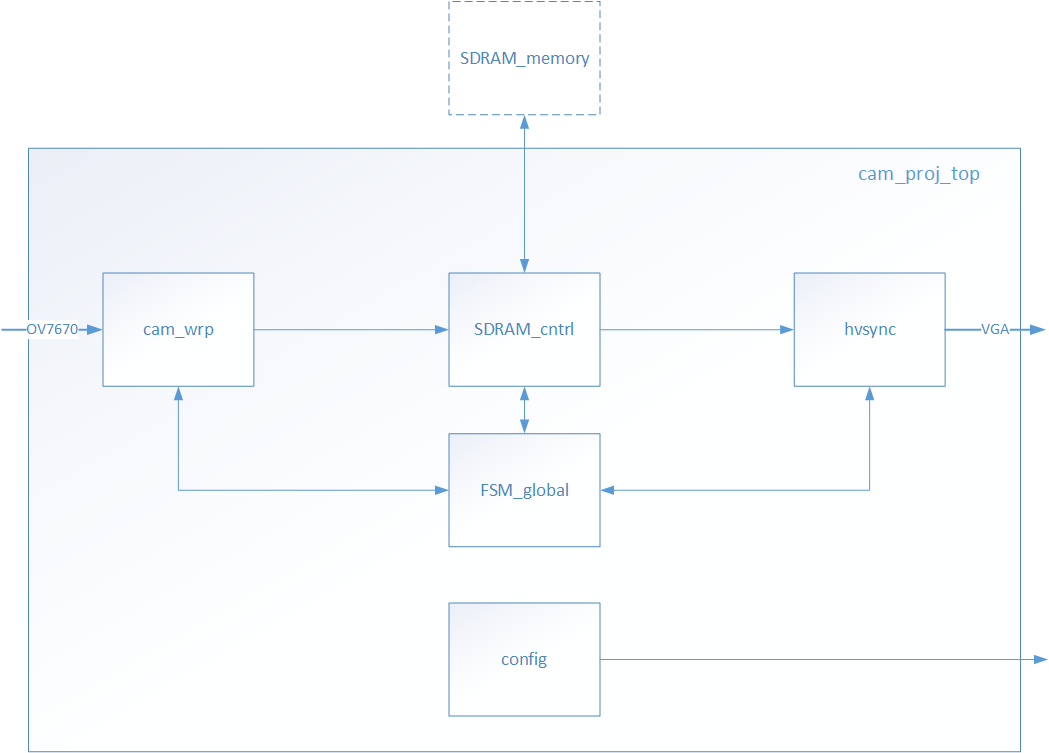

- cam_wrp - the module receives data from the camera and writes it to the input FIFO;

- hvsync - the module generates the strobes for VGA, receives data from SDRAM, writes it to the output FIFO and sends it to the DAC on the strobe;

- sdram_cntr - SDRAM controller;

- FSM_global - the control state machine;

- camera_configure - camera configuration and control module.

Figure 3 shows the functional diagram of the design.

Fig. 3

Let us consider in more detail each of the modules.

Cam_wrp module

This is one of the simplest modules. Its task is, while the camera's hsync strobe is active, to take two consecutive bytes, form one two-byte word from them and write it to the FIFO. On a signal from the SDRAM controller, it hands over the entire contents of the FIFO.

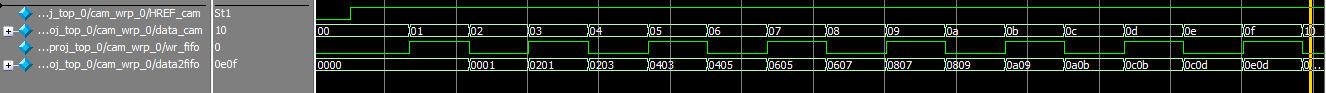

To “pack” 2 consecutive bytes into one word, we use the wr_fifo signal, which we invert on every clock (dividing the frequency by 2). When this signal is at logical 1, we write the data to the low byte; when it is at logical 0, to the high byte. We also use wr_fifo as the write-enable signal for the FIFO. In addition to the data bus, the FIFO exposes a bus carrying the number of words written to it. This bus is connected to the control unit. Figure 4 shows the timing diagram of “packing” bytes into two-byte words.

Fig. 4
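The packing scheme can be modelled in software as a rough behavioural sketch. The function name `pack_bytes` is hypothetical, and the model assumes that the toggle starts at 0 at the beginning of a line, so the first byte lands in the high half of the word; the actual Verilog may differ in timing details.

```python
def pack_bytes(byte_stream):
    """Pack consecutive bytes into 16-bit words the way a toggling
    write strobe would: first byte -> high byte, second -> low byte."""
    words = []
    wr_fifo = 0  # toggles on every byte; assumed to start at 0
    word = 0
    for b in byte_stream:
        b &= 0xFF
        if wr_fifo == 0:
            word = b << 8        # strobe low: latch the high byte
        else:
            word |= b            # strobe high: latch the low byte...
            words.append(word)   # ...and push the finished word to the FIFO
        wr_fifo ^= 1
    return words
```

For example, the bytes 0x12, 0x34, 0xAB, 0xCD become the two words 0x1234 and 0xABCD; a trailing unpaired byte is never pushed.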

FSM_global module

The module has a rather grandiose name; in fact, it is a simple state machine with only 4 states, but it performs a very important function. By monitoring the SDRAM controller's ready signal sd_ready and the fill levels of the input and output FIFOs, the machine commands the SDRAM controller to take data from the input FIFO or to write data to the output FIFO. Reading and writing start a little before the FIFO is completely full or empty. The FIFO fill level must be chosen correctly so that the high-frequency side of a FIFO operation does not finish before the low-frequency side, which is guaranteed to cause errors. In the section on the SDRAM controller, I will give a picture illustrating this.
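The choice of the fill level can be explored with a toy simulation. This is a simplified sketch, not the author's state machine: it assumes the camera delivers one 16-bit word every 10 fast clock cycles (one word per two 24 MHz cycles against a 120 MHz memory clock), that the fast side drains one word per cycle, and it ignores the pauses between bursts. The function names are hypothetical.

```python
def drains_without_underrun(total_words, ratio, threshold):
    """Simulate one line: the slow side writes a word every `ratio` fast
    cycles, the fast side starts reading once `threshold` words are in
    the FIFO and then reads one word per cycle. Returns False if the
    reader ever finds the FIFO empty before the line is complete."""
    if not 1 <= threshold <= total_words:
        raise ValueError("threshold must be between 1 and total_words")
    written = read = 0
    draining = False
    t = 0
    while read < total_words:
        if written < total_words and t % ratio == 0:
            written += 1              # slow side pushes a word
        if not draining and written >= threshold:
            draining = True           # control FSM starts the transfer
        if draining:
            if read == written:
                return False          # underrun: reader overtook writer
            read += 1                 # fast side pops a word
        t += 1
    return True


def min_start_level(total_words, ratio):
    """Smallest fill level at which the transfer can safely start."""
    for threshold in range(1, total_words + 1):
        if drains_without_underrun(total_words, ratio, threshold):
            return threshold
    return total_words
```

Under these assumptions, `min_start_level(640, 10)` shows that the transfer of a 640-word line can begin well before the line has fully arrived, but not much earlier than about nine tenths of the way through.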

SDRAM_contr module

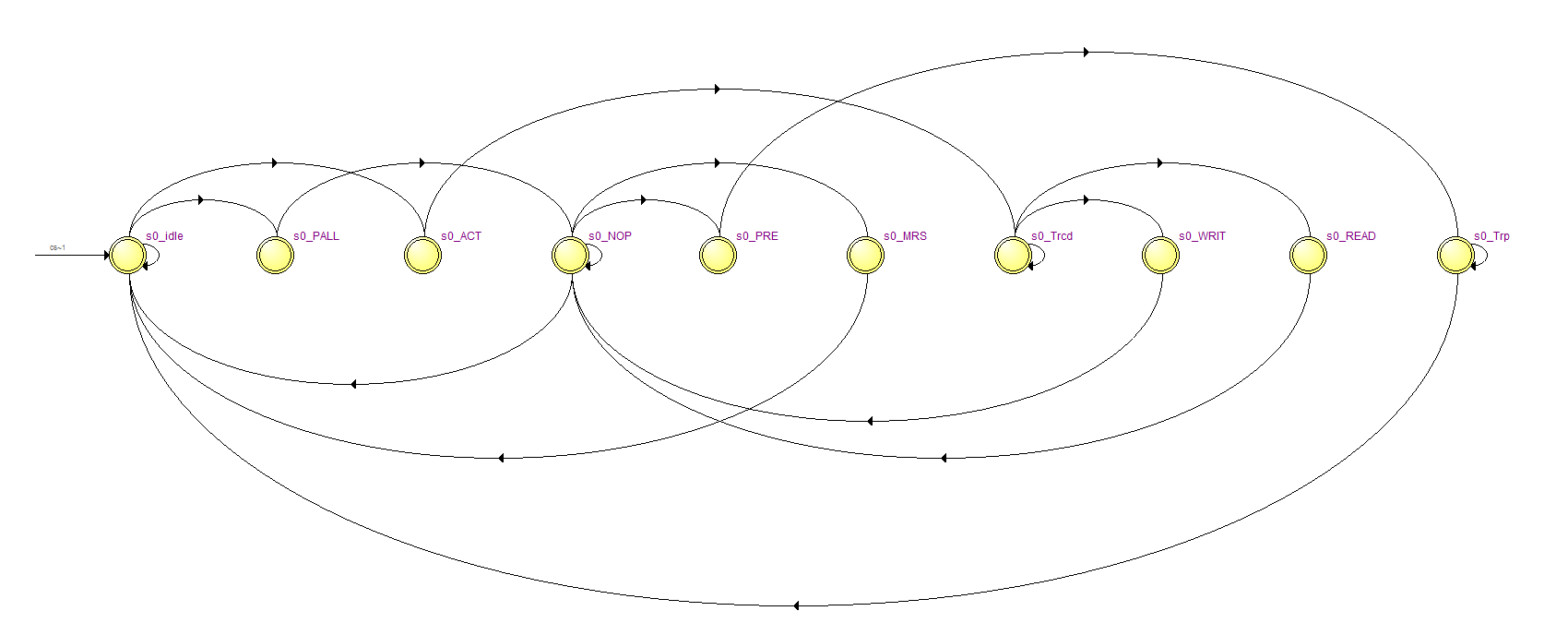

Plenty of SDRAM controllers have already been written; I did not want to reinvent the wheel yet again, so I decided to invent a tracked bicycle instead: an SDRAM controller tailored to this particular project. This simplifies control and speeds things up slightly. The state transition graph of a full-fledged SDRAM controller is shown in Figure 5.

Fig. 5

Let's think about what we can exclude from it.

First, we will not refresh the data. This assumption is definitely unsuitable for a general-purpose controller, but in our case we use the same memory area and access it constantly. The data will not have time to degrade.

Second, since we always write and read data as vectors of length 640, we can give up working with individual words; we will only transfer bursts.

Third, there is no need to think about the address: we simply increment it after each burst and reset it to zero at the end of each frame. The resulting transition graph is shown in Figure 6.

Fig. 6

The controller starts in the idle state. Before normal operation can begin, the memory chip must be initialized (state s0_MRS), after which the mode_flag flag is set, the controller goes into the standby state, and we can write and read data. To do this, a command to start reading or writing arrives from the fsm_global module, the required row in the selected bank is opened (state s0_ACT), and then the read or write itself takes place (states s0_WRIT, s0_READ). Unfortunately, we cannot get away with a single burst: a row in our memory chip holds only 256 16-bit words, and we need to write a vector of length 640. We have to write in 3 bursts, two of 256 and one of 128. As a result, half of the third row remains empty, that is, we use the memory inefficiently, but since we have no shortage of it, I decided not to complicate the state machine and to accept this.
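The split of one line into bursts follows directly from the row size. A minimal sketch, with a hypothetical helper name:

```python
LINE_WORDS = 640   # 16-bit words in one video line
ROW_WORDS = 256    # 16-bit words in one SDRAM row
LINES = 480        # video lines per frame


def split_into_bursts(line_words, row_words):
    """Split a line into bursts, each confined to a single SDRAM row."""
    bursts = []
    remaining = line_words
    while remaining > 0:
        n = min(remaining, row_words)
        bursts.append(n)
        remaining -= n
    return bursts


bursts = split_into_bursts(LINE_WORDS, ROW_WORDS)
unused_words_per_line = len(bursts) * ROW_WORDS - LINE_WORDS
rows_per_frame = len(bursts) * LINES
```

For a 640-word line this yields bursts of 256, 256 and 128 words, leaves 128 words of the third row unused, and consumes 1440 rows per frame.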

As for addresses, separate registers are allocated for reading and writing, and they are incremented before each burst. Thus, writing a full frame of 480 lines, at 3 bursts per line, passes through 480 * 3 = 1440 addresses. The write and read addresses are reset by the vertical sync strobe of the camera and of the VGA, respectively.

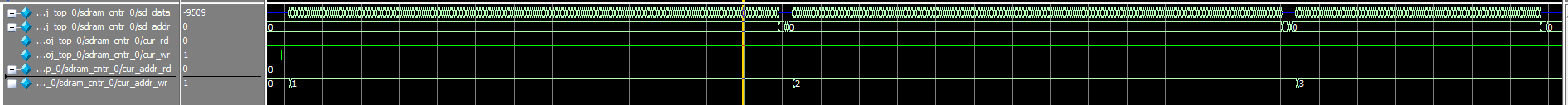

We use double buffering: we write to one buffer and read from the other. For simplicity, I placed one buffer in bank 0 and the second in bank 1 of the SDRAM chip. The banks for reading and writing are swapped once a frame from the camera has been fully received. Figure 7 shows the timing diagram of writing a single vector. It can be seen that the write is split into 3 parts, with the address incremented after each one, and the entire transfer takes place under the cur_wr strobe. The diagram for reading is similar.

Fig. 7
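The bookkeeping behind the double buffer can be summarised in a small behavioural model. This is only a sketch: the class and method names are hypothetical, and the model hands out a row address and then advances it, which may differ from the exact ordering in the Verilog.

```python
class FrameAddressing:
    """Tracks which SDRAM bank is written and which is read, plus the
    separate row counters for the write and read sides."""

    def __init__(self):
        self.write_bank, self.read_bank = 0, 1
        self.write_row = 0
        self.read_row = 0

    def next_write_burst(self):
        """Return (bank, row) for the next write burst, then advance."""
        target = (self.write_bank, self.write_row)
        self.write_row += 1
        return target

    def next_read_burst(self):
        """Return (bank, row) for the next read burst, then advance."""
        target = (self.read_bank, self.read_row)
        self.read_row += 1
        return target

    def camera_vsync(self):
        """End of a camera frame: reset the write address, swap banks."""
        self.write_row = 0
        self.write_bank, self.read_bank = self.read_bank, self.write_bank

    def vga_vsync(self):
        """End of a VGA frame: reset the read address only."""
        self.read_row = 0
```

The key property is that the write and read sides never point at the same bank, so the monitor always shows a complete previous frame.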

Figure 8 shows how data from the camera is written to SDRAM relative to the time it takes to fill the FIFO. Note that we start writing to SDRAM without waiting for the FIFO to fill completely.

Fig. 8

Hvsync module

This is one of the two modules in this project that I did not write myself. I had implemented a similar module once before and was not interested in repeating the exercise, so I used an excellent module written by the authors of marsohod.org. It contains nothing superfluous, is parameterized and can easily be configured for any screen resolution. I hardly changed it: I only added a FIFO connected to the SDRAM controller, along with its interface signals. The FIFO exposes a bus carrying the number of words stored in it; this bus is connected to the control unit, just as for the input FIFO. The FIFO output is connected to the wires that go to the DAC.

Camera_configure module

Having initially allowed myself the liberties of a home project and having read the documentation inattentively, I wanted to run the camera with the default settings, but it turned out that without configuration the OV7670 transmits data in YUV422 format, not RGB565. I did not want to rewrite anything, so I decided to do things properly and initialize the camera correctly. Since the camera is controlled over I2C, the idea of using NIOS came to mind. I could not get NIOS with an I2C core from OpenCores running on the first try, but I stumbled upon a Verilog initialization module written specifically for the OV7670. It integrated into the code so easily that almost nothing had to be changed. I changed only one line: instead of RGB565, it now enables RGB444 mode, since the board has exactly 4-bit DACs. Figure 9 shows the timing diagram of a software reset of the camera, performed by writing the value 0x80 to address 0x12.

Fig. 9
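The reset transaction described above can be written out as the sequence of bytes that goes onto the bus. In this sketch the device write address 0x42 is an assumption based on the OV7670 datasheet rather than on the project sources, and the helper names are hypothetical.

```python
OV7670_WRITE_ADDR = 0x42  # assumption: 8-bit write address of the camera
REG_RESET = 0x12          # register address used for the reset (from the text)
VAL_RESET = 0x80          # value that triggers the software reset (from the text)


def write_phases(reg, value):
    """A register write is three bytes: device address, register, value."""
    return [OV7670_WRITE_ADDR, reg & 0xFF, value & 0xFF]


def to_bits(byte):
    """Bits of one byte in transmission order, most significant first."""
    return [(byte >> i) & 1 for i in range(7, -1, -1)]


reset_frame = write_phases(REG_RESET, VAL_RESET)
reset_bits = [to_bits(b) for b in reset_frame]
```

On the wire, each of the three bytes is followed by a ninth acknowledge slot, and the whole sequence is framed by start and stop conditions.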

Demonstration of the result

Once all the modules are written, we connect them together in a top-level module, build the project in Quartus, and can start testing. The video shows the result.

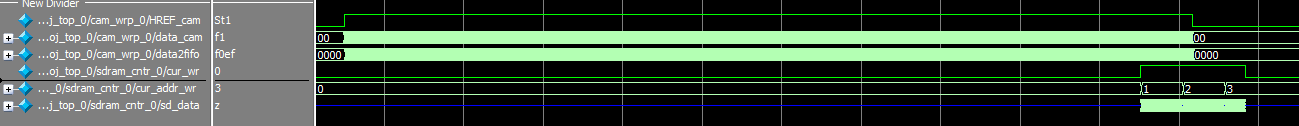

I chose a poor time for shooting, at sunset with a very bright sun, and the camera does not cope well with overly bright sun glare. You can see that moving objects are displayed correctly, with no jerking and no trails. This is exactly what I was aiming for by using an FPGA, which makes it possible to process all 30 fps (the most the camera can deliver) at little cost. As for image clarity, text on an A4 sheet can be read without difficulty; unfortunately, a photo of the monitor looks worse than reality. Figure 10 shows a fragment of an A4 sheet with the camera documentation.

The videos and photos reveal two shortcomings: the first is sharpness, and the second is color.

I attribute the sharpness problem in the video to imperfectly adjusted focus. Focus is adjusted on the camera mechanically, by screwing the lens in or out along its thread. The thread is plastic and has quite a lot of play, so even slight shaking can degrade the sharpness.

The excessive greenish-yellow tint of the white sheet seems to me to be a white balance problem: the shooting was done indoors under lighting that was far from natural. The camera settings may also affect the color rendering. I hardly experimented with them and simply used them as magic numbers.

Conclusion

The task of outputting the image from the OV7670 camera to a VGA monitor in real time has been solved. If you compare the result of this project with the results obtained by other developers using microcontrollers or Arduino, you can see that theirs are inferior in how fast moving objects are displayed. The complexity of this project does not exceed that of a similar one built on a microcontroller. A person with basic FPGA design knowledge can implement it in a few days. The project has great potential for expansion: the resulting image can be filtered, objects can be recognized, and so on. On the Cyclone II chip the design occupies the following resources: LE - 745 (4%), memory bits - 32768 (14%), PLL - 1 (25%), Embedded Multiplier - 0 (0%). Developers thus still have plenty of resources left to implement their ideas.

Afterword

What's next? In the future, I plan to expand the project by adding real-time image processing with matrix filters.

I express my thanks to ishevchuk for the advice on the content and design of the article and to my girlfriend for the spelling check.

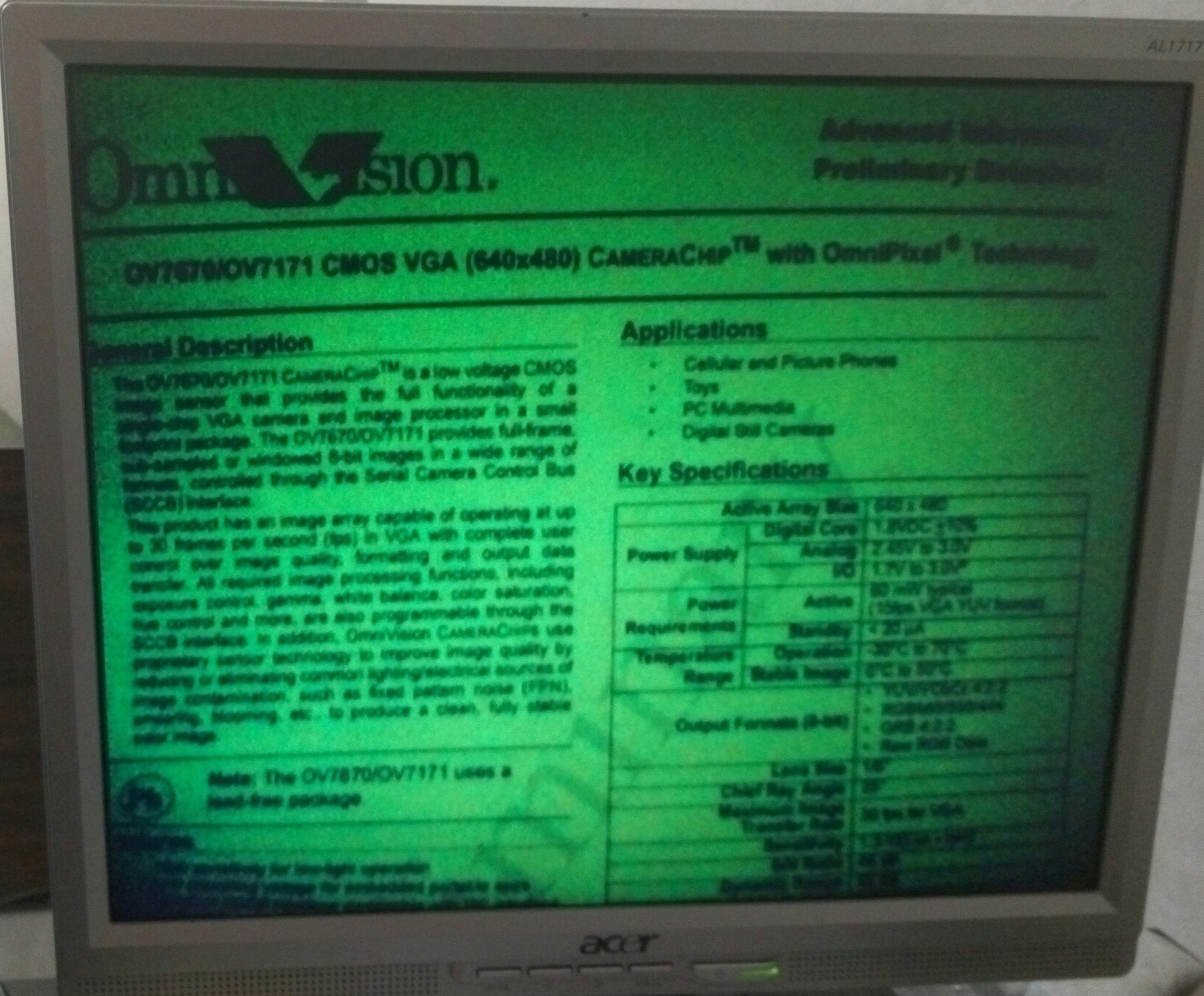

Outtakes

When I first switched everything on, mysterious patterns appeared on the screen. I spent a long time wondering what they could be. In the end, it turned out that the camera was not focused. After I turned the lens, everything fell into place.

The second time the camera was turned on, it was incorrectly initialized, which led to an unexpected selfie.

Download the archive with the source code here (Ya.Disk).

Source: https://habr.com/ru/post/283488/
