Once again: how not to turn your network into a “sieve”

Hello! I have worked in IT and information security for almost 10 years and have always been interested in practical security; I currently work as a pentester. Throughout that time I have kept running into the same typical mistakes in infrastructure configuration and design. These mistakes are often trivial and easy to fix, yet they quickly turn a network into a hacking playground. I have met them so often that it sometimes seems they are deliberately taught somewhere. That prompted me to write this article and collect the most basic measures that can improve security.

In this article I will not talk about complex passwords, least-privilege access, changing default accounts, software updates, and other “typical” recommendations. The goal is to describe the most frequent configuration mistakes, to make administrators and information security specialists ask themselves “is everything OK in my network?”, and to show how to quickly close some typical vulnerabilities with built-in or free tools, without additional purchases.

I deliberately do not include step-by-step instructions and recipes, since most of them are easy to find by keyword.

1. Strengthen the security of your Windows infrastructure

Do not create local accounts with domain policies

This has been said many times, but it bears repeating because the mistake is so common: do not create local accounts on domain hosts through domain group policy. The danger is extremely high. It has been written about in many places, but usually on resources devoted strictly to practical security rather than to IT.

The reason is that information about this account, including its password, is stored in the groups.xml file on the SYSVOL share of the domain controller, which by default is readable by ALL domain members. The password is encrypted, but the encryption is symmetric, the key is the same for every copy of Windows, and it is published in clear text on MSDN. It follows that any user can download this file and, with a few simple steps, recover the password of the distributed account. Usually the distributed account has administrator rights, and it is often created indiscriminately everywhere...

Solution: use group policies only to add domain accounts to local groups on the hosts. In a pentest, decrypting such a password by hand takes only a few steps.
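
For a quick self-check, the sketch below walks a directory tree and reports XML files that contain a non-empty `cpassword` attribute, which is where Group Policy Preferences keep the encrypted password. It is a minimal audit helper, not a decryptor. The function name `find_gpp_passwords` and the demo folder layout are hypothetical; in a real check you would pass the path to your own SYSVOL share.

```python
import os
import re
import tempfile

# Matches a non-empty cpassword attribute in a Group Policy Preferences XML file.
CPASSWORD_RE = re.compile(r'cpassword="([^"]+)"', re.IGNORECASE)


def find_gpp_passwords(root):
    """Return sorted paths of XML files under root that carry a cpassword value."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.lower().endswith(".xml"):
                continue
            path = os.path.join(dirpath, name)
            with open(path, "r", encoding="utf-8", errors="ignore") as handle:
                if CPASSWORD_RE.search(handle.read()):
                    hits.append(path)
    return sorted(hits)


if __name__ == "__main__":
    # Demo on a throwaway directory that imitates a policy folder (hypothetical layout).
    with tempfile.TemporaryDirectory() as demo_root:
        policy_dir = os.path.join(demo_root, "Policies", "Machine", "Preferences", "Groups")
        os.makedirs(policy_dir)
        with open(os.path.join(policy_dir, "Groups.xml"), "w", encoding="utf-8") as fh:
            fh.write('<Groups><User><Properties userName="localadm" cpassword="PLACEHOLDER"/></User></Groups>')
        for found in find_gpp_passwords(demo_root):
            print("password material found in:", found)
```

Any hit means a policy is still distributing a password and should be reworked as described above.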

Administrators who are not involved in pentesting and practical information security rarely know about utilities such as mimikatz and wce. Briefly: with local administrator privileges, these tools can extract from RAM the Kerberos tickets, password hashes, and even clear-text passwords of accounts that recently logged on to the host. Since administrators log on frequently and in many places, the risk of their credentials being compromised is high.

Companies often have machines where, for some reason, ordinary users rather than IT or security staff work with administrative rights. How they got these rights is a separate question; sometimes it is a necessary evil. Administrators usually see the threat here as unauthorized software, virus infection, or at worst keyloggers stealing passwords, without realizing that their own access rights are already at risk.

There are countermeasures of varying effectiveness against these utilities, but they are often not applicable for various reasons: an old AD schema, a “zoo” of systems on the network that has reached the metastasis stage, or an infrastructure so large that it has poorly controlled areas.

Note also that in some cases these utilities do not even have to be installed on the host. A user with administrator rights can simply dump the relevant area of RAM and do all the processing outside the corporate network. This greatly increases the risk of compromising more privileged accounts.

A solution at no additional cost: manage the infrastructure not with the customary 2-3 accounts (I hope you have at least that?):

- for local work;

- for server and PC administration;

- for domain controllers,

but with at least 4 accounts (or even more), matching the notional “zones of trust” in the domain, and never use an account outside its zone. That is, keep separate accounts:

- for work on your own workstation;

- for logging on to domain controllers and managing them;

- for servers;

- for workstations;

- for remote branches, if your domain extends there but you are not sure what is going on there at any given moment;

- for the DMZ, if domain hosts have somehow ended up in the DMZ;

- if you are a regular at the anonymous paranoids' club, split these zones into smaller groups, or change your passwords very often.

For a similar reason (see the previous paragraph), avoid setting up shared “test” hosts in the domain wherever possible. Such hosts are most common in companies with software development departments. To “speed things up” and save on licenses, they often run without antivirus (which, by the way, does catch unobfuscated mimikatz and wce), and all the developers and testers involved have administrative rights on them so that they can carry out all sorts of dubious actions. With those administrator rights, they can easily steal the domain credentials of other administrators.

Windows accounts have a variety of logon rights: local logon, logon as a batch job, logon as a service, and so on. A large domain always has service accounts needed for the bulk operation of various software, services, scheduled tasks, and the like. Take the trouble to restrict the rights of these accounts to their intended scope, and explicitly deny the rights they do not need. This reduces the risk of a threat spreading quickly if control over such an account is lost.

It is better to access file resources (SMB) in a domain only by domain name, not by IP address. Besides easier administration, this explicitly forces the host to authenticate with Kerberos, which has its drawbacks but is much more secure than NTLMv2, the protocol used when a file resource is accessed by IP. An intercepted NTLMv2 hash is dangerous because a dictionary attack can recover the user's password offline, at leisure, without touching the attacked infrastructure. Unlike online brute-force attacks, this is obviously invisible to administrators.
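
One way to find places where shares are still addressed by IP is to scan logon scripts and similar text files for UNC paths whose host part is an IP literal. The sketch below does only that; the function name `unc_hosts_by_ip` and the sample lines are hypothetical, and the regular expression is a deliberately simple approximation of UNC syntax.

```python
import ipaddress
import re

# Captures the host part of a UNC path such as \\host\share or //host/share.
# The lookbehind skips URLs like http://host/ so that only share paths are checked.
UNC_HOST_RE = re.compile(r'(?<!:)(?:\\\\|//)([^\\/\s]+)[\\/]')


def unc_hosts_by_ip(text):
    """Return UNC hosts found in text that are IP literals rather than names."""
    found = []
    for host in UNC_HOST_RE.findall(text):
        try:
            ipaddress.ip_address(host)
        except ValueError:
            continue  # a DNS or NetBIOS name, so Kerberos remains possible
        found.append(host)
    return found


if __name__ == "__main__":
    script = (
        "net use Z: \\\\10.1.2.3\\share\n"
        "net use Y: \\\\fs01.corp.example\\share\n"
    )
    for host in unc_hosts_by_ip(script):
        print("share addressed by IP:", host)
```

Each reported address is a candidate for replacement with the server's domain name.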

As for the NTLM protocol (the one without “v2”), it should be prohibited. Besides brute-force attacks, it is open to the pass-the-hash attack. In essence, this attack lets an intruder resend an NTLM hash as is, without modifying it and without any attempt to recover the password, to any host where NTLM is allowed. The hash itself can be stolen from another session during the attack. If both NTLM protocols are enabled, an attacker may be able to downgrade the negotiation from NTLMv2 to NTLM, and the victim host will choose the weaker authentication.

Be careful: many infrastructures are a slowly modernized “zoo” that, for unclear reasons, preserves the traditions of the early 2000s, so anything is possible there.

Two mechanisms that are enabled by default together allow a man-in-the-middle attack in which the attacker is almost impossible to detect: automatic proxy discovery through a special network name (WPAD), and the LLMNR broadcast name resolution mechanism.

Through WPAD, some software (in a domain, often the WSUS update service and some browsers) searches for an HTTP proxy and is ready, if asked, to authenticate transparently with NTLM(v2). It thereby voluntarily hands over the hash of the account that initiated the connection. The hash can later be run against a dictionary to recover the password, or used in the pass-the-hash attack described above if NTLM is not disabled.

Devices look for the WPAD server through DNS and, if that fails, fall back to LLMNR or NetBIOS broadcast requests. At that point it is much easier for an attacker to answer the host's question about where the “correct” proxy configuration server is. An additional downside: the search itself slows connections down, because time is spent looking for a proxy.

Solution: in group policies, disable both proxy autodetection for software and the LLMNR protocol. Put a stub record at the WPAD name (wpad.domain.name) in DNS. LLMNR is essentially an imitation of DNS on the L2 segment using broadcast requests; a domain network with properly working DNS does not need it. NetBIOS is more complicated: it is still used in many cases and turning it off can break things, so a loophole for spoofing WPAD remains.

Do not turn off User Account Control (UAC); on the contrary, set it to maximum. UAC is, of course, neither very convenient nor very informative, but you cannot imagine how frustrating it is for a pentester to gain the formal ability to execute commands remotely as a user with administrator rights, yet be unable to perform exactly those privileged actions that, in “normal” work, merely annoy users with confirmation prompts. Of course, UAC can be bypassed and privileges can be escalated, but that is one more obstacle for the attacker.

At least on the machines of administrators and “important” users, and on servers, be sure to disable the hidden shares ADMIN$, C$, D$, and so on. They are a favorite first target of any network malware and of intruders who have obtained more or less privileged rights in the domain.

A controversial measure, and obviously not suitable for everyone, but there was a case where it saved a network from a ransomware outbreak. Whenever possible, give users remote file shares not as mapped network drives but as shortcuts on the desktop. The reason is simple: malware sometimes enumerates drive letters and cannot always find resources that are not mapped as drives.

Check the SPF record of your mail domain. And then check it again.

Many people underestimate the importance of this record and, at the same time, do not understand how SMTP works. The SPF record specifies which hosts may legitimately send mail on behalf of your domain. In the particular case of mail sent to your domain from your own domain (that is, internal correspondence), this record determines which specific hosts can send mail on behalf of any user without authentication. Two seemingly minor mistakes occur most often and lead to huge problems.

1) “~all” at the end of the mail domain's SPF record. I do not know why, but most public guides recommend this setting. For mail sent from hosts not explicitly listed in the SPF record, it produces the result “failed the check, but should be marked as suspicious and still delivered”. In other words, the decision on the legitimacy of the mail is delegated to the strictness of the recipient's spam filter and other filtering mechanisms. Your own spam filter is quite likely configured leniently (especially for “public-facing” employees who are afraid of missing a message), which means any Internet host can send you mail from your own domain, and with some probability it will land in the inbox rather than in spam. During pentests with a social engineering component, it is quite amusing to watch users, and even IT administrators, unquestioningly follow urgent instructions from “important bosses” in such forged emails. Your counterparties may have weak spam filters too, in which case someone will be making them attractive offers in your name without your knowledge.

For the overwhelming majority of companies, the SPF record should end only with “-all”, after a thorough review of its contents, of course.

2) Sometimes the corporate domain's SPF record mistakenly includes the external addresses through which the company's users access the Internet. The consequences are clear: anyone behind those addresses can send illegitimate mail from any address in the corporate domain to anywhere. Administrators in this situation usually say “but we have authentication on the mail server”, completely forgetting how the SMTP protocol itself works.

Once I saw a case where the guest WiFi egress address was listed in the SPF record. This immediately lets an attacker send legitimate-looking mail from the target domain without even gaining privileged access to the victim's internal network.

The DKIM signature system also helps against such attacks by showing who the sender really is, but it cannot be rolled out instantly, so start with SPF: tighten it and fully audit the allowed addresses.
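
The first SPF mistake is easy to check mechanically. The sketch below takes the text of an SPF record (however you obtained it, for example from a DNS TXT lookup) and classifies its `all` mechanism. The function name `spf_all_policy` and the returned labels are hypothetical, and the parser is intentionally minimal: it does not follow `include:` or `redirect=`.

```python
def spf_all_policy(record):
    """Classify the 'all' mechanism of an SPF TXT record string."""
    terms = record.strip().split()
    if not terms or terms[0].lower() != "v=spf1":
        return "not-spf"
    for term in terms[1:]:
        lowered = term.lower()
        if lowered in ("all", "+all"):
            return "pass"      # any host may send: effectively no protection
        if lowered == "-all":
            return "fail"      # unlisted senders are rejected
        if lowered == "~all":
            return "softfail"  # unlisted senders are only marked as suspicious
        if lowered == "?all":
            return "neutral"
    return "missing"           # no 'all' term; the policy may come from redirect=


if __name__ == "__main__":
    # Example records using documentation address space, not real domains.
    for sample in ("v=spf1 mx ip4:192.0.2.10 ~all", "v=spf1 mx -all"):
        print(sample, "->", spf_all_policy(sample))
```

Anything other than `fail` deserves a second look, and the listed addresses still need a manual audit for the second mistake.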

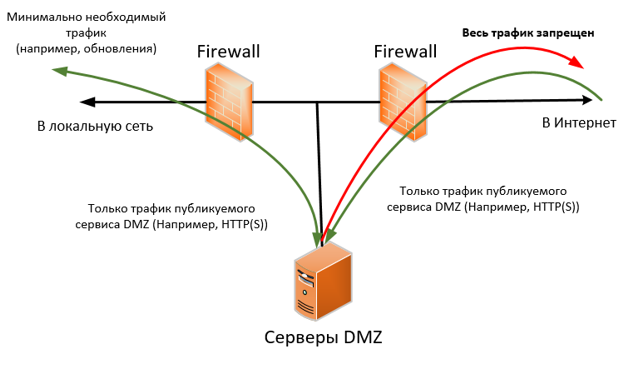

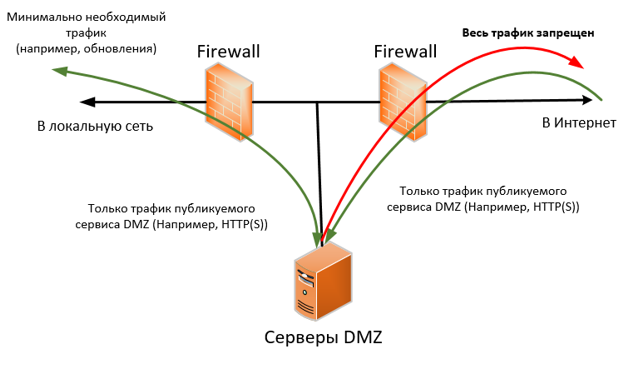

Organize a DMZ and stop “forwarding” ports from the Internet to the main network for good. A proper DMZ is one whose resources are closed off by firewalls on both sides (from the internal network and from the Internet), with traffic allowed very selectively and only to the minimum necessary. In my opinion, a real security specialist should assume that a host in the DMZ has already been compromised and assess risks on that basis. The reason is that the DMZ very often hosts non-standard applications with specific business logic, which are mass-produced by contractors or built to order and very poorly tested, for example for commonplace web vulnerabilities. An in-house security specialist is usually able to build a DMZ but not to test applications for vulnerabilities. Act accordingly.

A typical properly organized DMZ and the general principle of traffic flow. The arrows show the direction in which traffic is initiated from/to the DMZ.

A budget DMZ design for the paranoid, in which each resource sits in its own isolated network and the resources do not “eat” much traffic:

As for port forwarding: besides the risk of the service being hacked, there is an implicit risk of leaking information about the internal network. For example, during pentests you can often see RDP ports that were “hidden” by moving them from the standard 3389 to something like 12345. They are, of course, easily found by scanners and easily identified as RDP, and beyond mere detection they can reveal, for example, the computer name and domain (via the certificate of the RDP service) or information about user logins.

Guest WiFi should be isolated from the main network as much as possible (a separate VLAN, a separate cable from the Internet router to the access point, and so on). In addition, if possible, switch guest WiFi off outside working hours on a schedule configured on the equipment.

There is one nuance. Many people correctly put guest WiFi into a separate isolated VLAN but for some reason hand out the addresses of the Active Directory DNS servers to clients. The most rational excuse is “so that resources in the DMZ work via internal addresses”. However, this is a vulnerability, and it greatly helps an attacker map the internal network without authorization, because DNS queries (PTR lookups across the internal IP ranges) are usually not restricted in any way.

The solution is to set up a lightweight internal DNS server specifically for WiFi, or to use public DNS servers on the Internet.

This is a very common problem. A single WiFi key often lives for years because “users must not be disturbed yet again”. Over time this inevitably reduces the security of such a setup to zero. The main reasons are staff turnover, device theft, malware, and the possibility of brute-forcing the network password.

Solution: authenticate to WiFi only through WPA2-Enterprise, so that every user has personal, easily revoked credentials that are managed centrally. If WiFi devices do not really need the internal network and only need the Internet, set the WiFi up as a guest network. The design of WPA2-Enterprise authentication is a subject for a separate article.

Segment the network into VLANs as much as possible and limit broadcast traffic as much as you can. Every network design guide says this, yet for some reason hardly anyone follows it, especially in small and medium-sized companies, although it is extremely useful for both ease of administration and security.

In any case, keep user PCs separate from servers, and keep a separate VLAN for device management interfaces (network equipment, iLO / IPMI / IP KVM interfaces, and so on). All this reduces the opportunity for address spoofing, simplifies network access configuration, lowers the likelihood of attacks based on broadcast requests, and so improves stability.

In my opinion as a security person (and I can already feel the tomatoes flying from the network engineers), the number of user VLANs should depend more on the number of access switches and on the number of notional user groups by administrative criteria than on the number of users. It makes sense to have roughly as many user VLANs as access switches (even if they are stacked). Broadcast traffic is then tightly limited, and no VLAN is “smeared” across multiple access switches. This adds no unnecessary load on the network, since user traffic mostly goes to the server segment or to the Internet (that is, toward the core or distribution layer in any case) rather than between users. It also makes the network easier to debug and helps prevent attacks based on broadcast packets.

For small networks the situation is quite different: often there is nothing to segment because the network is too small. However, in every organization that acquires even a minimal set of servers, managed switches are usually bought along with them, sometimes with L3 functions. For some reason they are used as plain switches, often without even minimal configuration, although setting up L3 for simple routing between 2-3 networks is very easy.

Two mechanisms, called DHCP snooping and ARP inspection in Cisco terminology, will help frustrate both prankster users and real intruders. Once they are in place, you prevent rogue DHCP servers from appearing on the network (they can appear by mistake, when someone confuses a desktop “home” router in the “soap dish” form factor with a similar-looking switch, or as the result of an attack) as well as man-in-the-middle attacks over ARP. Combined with small VLANs (the previous point), this considerably reduces the possible damage.

If these features cannot be configured, at the very least bind the MAC address of the network gateway statically to the physical port of the switch.

If the cabling and equipment allow, configure port security on the access switches. It protects well, though not completely, against the connection of rogue and extra devices and against unauthorized relocation of computers.

Even with the measures described above (port security, DHCP snooping, ARP inspection), rogue devices can still appear, and in practice the most likely one is a “home” router with the “clone the computer's MAC address onto the external interface” feature. Hence the next step in the evolution of network tyranny: 802.1X. That, however, is a serious undertaking that requires good planning, so a simpler way of detecting unauthorized routers is possible (detecting, not blocking). What helps here is analyzing traffic on an intermediate network segment by the TTL field of the IP header. Set up a switch port that mirrors traffic to a server running a sniffer, and look for packets with an anomalous TTL; plain tcpdump / Wireshark is enough. Traffic that has passed through such a router will have a TTL 1 lower than legitimate traffic.

Alternatively, configure a filtering rule for specific TTL values, if the network devices allow it. Of course, this will not stop a serious intruder, but the more obstacles, the better for security.
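
The TTL check can be sketched as follows. The code assumes you have already exported (source address, TTL) pairs from a capture taken on the same segment as the user hosts, and that the hosts use the usual default initial TTL values of 64, 128, or 255; both are assumptions, and the function name `suspected_routed_sources` is hypothetical.

```python
from collections import Counter

# Initial TTL values used by common operating systems (assumes defaults were not changed).
COMMON_INITIAL_TTLS = (64, 128, 255)


def suspected_routed_sources(observations):
    """Return source addresses seen with a TTL exactly one below a common default.

    observations: iterable of (source_ip, ttl) pairs captured on the access segment,
    where directly connected hosts should still show an undecremented TTL.
    """
    suspicious = Counter()
    for source_ip, ttl in observations:
        if ttl + 1 in COMMON_INITIAL_TTLS:
            suspicious[source_ip] += 1
    return sorted(suspicious)


if __name__ == "__main__":
    # Hypothetical sample: one address emits packets that have crossed one extra hop.
    capture = [("10.0.1.15", 128), ("10.0.1.20", 127), ("10.0.1.20", 63), ("10.0.1.30", 64)]
    print(suspected_routed_sources(capture))
```

A hit is only a hint to go and look at the port: a host with a manually changed TTL would also show up here.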

For remote access to the network, forget about PPTP. It is quick and easy to set up, but apart from vulnerabilities in the protocol itself and its components, it is bad because network scanners see it very well (it runs over TCP on one fixed port), it often passes poorly through NAT because it uses GRE as transport, which users complain about, and its design has no provision for a second authentication factor.

To make an external attacker's job even easier, authentication on such a VPN is usually tied to domain accounts without a second authentication factor, which is often exploited.

Minimum solution: use protocols over UDP (or freely encapsulated in UDP) that are less visible to scanning, with pre-authentication by PSK or by certificate (L2TP/IPSec, OpenVPN, various vendor IPSec implementations). Be sure to restrict the list of internal addresses and ports that can be reached over the VPN.

Direct Internet access for users is evil, although as Internet links get cheaper it is becoming more common, especially in small and medium-sized companies. Many administrators restrict users' outgoing ports to a minimal set such as 80 and 443, trying to save bandwidth. From the point of view of malware and intruders, this is no different from unrestricted Internet access.

In my opinion, a proxy server is always needed, even without caching and authentication, even in the smallest networks, together with a ban on direct Internet access for users. The main reason is that all sorts of malware contact their control centers over HTTP(S) or over raw TCP on popular ports (80/443/25/110...), but often cannot detect the proxy settings in the system.

If you have not planned to use IPv6, either repeat all your traffic filtering measures for IPv6 or disable it. Your infrastructure may be using it implicitly without your knowledge, which opens the door to unauthorized network access.

If you control a large network with remote branches connected by Site-to-Site VPN, take the trouble to restrict the traffic coming to the center as much as possible, and do it on the central equipment, not in the branches. Do not treat links to remote branches as your “own” network. Branches are usually less well protected than the center, and most attacks start from the branches precisely because the central network “trusts” them.

Limit the list of addresses that may “ping” important services. This makes your hosts slightly harder to discover. At the same time, remember that ping is not the whole ICMP protocol, only one message type within it, and ICMP should not be blocked entirely. At the very least, you will need some Destination Unreachable messages for correct interaction with all networks. Do not create the so-called MTU Discovery Black Hole by blocking ICMP completely on your routers.

Take the trouble to protect with encryption every service that supports SSL/TLS. For hosts in an Active Directory domain, you can set up AD Certificate Services or distribute service certificates through group policies. If there is no budget for protecting public resources, use free certificates, for example from StartSSL.

For yourselves, that is, for administrators, you can always use a lightweight home-grown certificate authority, for example easy-rsa, or self-signed certificates. After all, you would notice if your internal administrative resource suddenly showed a trust error for a previously accepted certificate, wouldn't you?

If you think nothing threatens your simple business site, keep in mind that encryption protects not only against credential theft but also against traffic tampering. Everyone has probably seen ads carefully injected by telecom operators into sites served over plain HTTP. What if, instead of advertising, someone injects the “right” script?

If you have become the happy owner of a wildcard certificate (for “*.your-domain”), do not rush to install it on all public servers. Set up a separate server (or cluster), for example on Nginx, to terminate encryption for incoming traffic. This greatly reduces the number of channels through which the certificate's private key can leak, and if it is compromised, you will not have to replace it on many services at once.

Linux hosts are most often seen as web servers running the classic LAMP stack (Linux + Apache + MySQL + PHP, or any other scripting language), which is most often broken through holes in the web application. I will therefore describe the minimum for this stack that is relatively easy to implement and does not require much experience in configuration and debugging (unlike measures such as SELinux / AppArmor).

Take the trouble to forbid root login over ssh, allow key-based authentication only, and move the ssh port somewhere far from the standard 22.

If the server has more than one administrator, enable sudo for each of them and set a root password that nobody knows except the sheet of paper that always lies in an envelope in the safe. In this case, password login over ssh is absolutely unacceptable.

If, on the contrary, there is only one administrator, disable sudo (especially if for some reason you have left password login over ssh enabled) and use the root account for administrative tasks.

And of course, the classic: do not work as root.

Take the trouble to configure iptables even if it seems that iptables will not change anything, for example when the only network applications on the server are web and ssh, which have to be allowed anyway. If an attacker gains minimal privileges on the server, iptables will help prevent the attack from spreading further.

Properly configured iptables, like any other firewall, allows only the minimum necessary (including outgoing connections, via the OUTPUT chain). For a minimal web server configuration, iptables will contain no more than 10 lines. This is much simpler than configuring a firewall on a Windows domain host, where you can inadvertently block dynamic RPC ports, domain services, and other communications essential for operation, since even the sequence of communication is not always obvious. So configuring iptables for a web server does not require special networking knowledge.

Run Apache in a chroot. Alternatively, create separate file system partitions for /var and /tmp and mount them with the noexec and nosuid flags. This protects the server from local binary exploits that could escalate the attacker's privileges. Forbid execution of scripting language interpreters such as Perl and Python for “other” users (chmod o-x), the class the web server user falls into, unless the web server and other services need them. The fewer “other services” the system has, and accordingly the fewer users for them, the easier this is to do.

Here is what a system administrator can do to protect a web application without even knowing the specifics of web programming.

- Do not keep a VirtualHost that responds to requests of the form http(s)://ip-address/, even if the server hosts only one application. This significantly reduces the chance of the application being discovered.

- Hide version information in the Apache configuration (the ServerSignature Off and ServerTokens Prod options); this makes it harder to determine the OS and Apache versions.

- Use mod_headers to set the HttpOnly and Secure flags on your web application's cookies, even if the application sets them itself. This protects against cookie interception by sniffing unencrypted HTTP and against some XSS attacks.

- Disable error output on site pages in the configuration of the site's language (this concerns PHP first of all). This makes it harder for an attacker to study the weak points of your web application.

- Make sure directory indexes are turned off (the “-Indexes” option in the site settings or .htaccess files).

- Additionally, set up basic authentication on the directory with the site's admin panel.

- Set up a forced redirect from port 80 to 443 if you have TLS configured. Disable outdated SSL protocol versions (SSLProtocol all -SSLv2 -SSLv3), and disable weak ciphers and the no-encryption option through the SSLCipherSuite directive. This protects against encryption “downgrade” attacks.

You can check your SSL settings, for example, via https://www.ssllabs.com/ssltest/
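
The cookie item in the list above is also easy to verify from the outside. The sketch below takes the value of one `Set-Cookie` response header and reports which of the two flags are absent. The function name `missing_cookie_flags` is hypothetical, and the parsing is a simplification that only splits on semicolons.

```python
def missing_cookie_flags(set_cookie_value):
    """Return the security flags absent from one Set-Cookie header value."""
    # Attributes follow the first "name=value" pair and are separated by semicolons.
    attributes = {
        part.strip().split("=", 1)[0].lower()
        for part in set_cookie_value.split(";")[1:]
    }
    return [flag for flag in ("Secure", "HttpOnly") if flag.lower() not in attributes]


if __name__ == "__main__":
    # Hypothetical header value as a server might return it.
    print(missing_cookie_flags("sid=abc123; Path=/; HttpOnly"))
```

An empty list means both flags are present on that cookie.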

Enable and configure auditd for significant events (user creation, permission changes, configuration changes) with notifications sent to a remote server. A break-in is not accomplished all at once, so by reviewing the logs periodically, even manually, you can spot suspicious activity. It will also help with the “debriefing” after incidents.

Linux-based operating systems are good in that unnecessary components can be flexibly disabled or removed. From a security standpoint, this applies first of all to network and local daemons. If you see daemons running on a freshly installed server that have nothing to do, directly or indirectly, with the server's task, consider whether they are really needed. In most cases they are simply part of the default installation and can be disabled and/or removed easily and without consequences. For example, not everyone needs RPC, which is enabled by default in Debian. Do not wait until vulnerabilities are found in such services and exploits appear while you forget to update.

Even without the skills of a penetration tester, you can add minimal “pentester” actions to your self-checks. The following will help:

Check the actual visibility of open ports with nmap / zenmap. Sometimes the results are not what you expect, for example when traffic filtering rules are misconfigured, or when the network is large and responsibility for it is heavily divided among staff.

Check hosts on the network for typical vulnerabilities with the OpenVAS scanner. The results can also be unexpected, especially in large networks, where some degree of chaos usually reigns. The main thing when using security scanners is to tell false positives from real vulnerabilities.

All the listed utilities are free. Run the checks regularly, and do not forget to update the scanners.
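
The idea behind the port visibility check, comparing what actually answers with what you expect to answer, can be illustrated in a few lines. This is not a replacement for nmap, only a sketch of the comparison using a plain TCP connect; the function names and the address in the example are hypothetical.

```python
import socket


def tcp_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def unexpected_open_ports(host, ports, expected, probe=tcp_port_open):
    """Return ports that answer although they are not in the expected set."""
    return [port for port in ports if port not in expected and probe(host, port)]


if __name__ == "__main__":
    # Hypothetical web server that should expose only 80 and 443.
    print(unexpected_open_ports("192.0.2.10", [22, 80, 443, 3389], {80, 443}))
```

The `probe` parameter exists so that the comparison logic can be exercised without touching the network; run the real check only against hosts you are authorized to test.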

Check employee accounts for weak passwords. At a minimum, all you need is a file share that any domain user can access after authentication, and the THC Hydra utility. Build dictionaries of passwords that satisfy your password policy but are simple, like “123QAZxsw”. The number of words in each dictionary must not exceed the number of failed logon attempts allowed in the domain, minus 2-3 as a safety margin. Then run all domain accounts through the brute force; I am sure you will find a lot of interesting things.

Of course, obtain official (preferably “on paper”) permission from management before running these checks; otherwise they will look like an attempt to gain unauthorized access to information.
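
The dictionary sizing rule for the password check can be expressed as a small helper. The policy used here (at least 8 characters with lowercase, uppercase, and digits) is an assumption for illustration, as are the function name `build_wordlist` and its parameters; substitute your own domain's policy and lockout threshold.

```python
def build_wordlist(candidates, lockout_threshold, margin=3, min_length=8):
    """Pick policy-compliant candidates, capped below the account lockout threshold."""
    limit = max(lockout_threshold - margin, 0)
    selected = []
    for word in candidates:
        # Assumed policy: minimum length plus lowercase, uppercase, and digit characters.
        has_classes = (
            any(c.islower() for c in word)
            and any(c.isupper() for c in word)
            and any(c.isdigit() for c in word)
        )
        if len(word) >= min_length and has_classes and word not in selected:
            selected.append(word)
        if len(selected) >= limit:
            break
    return selected[:limit]


if __name__ == "__main__":
    words = ["123QAZxsw", "password", "Jctym2015", "Qwerty123", "Summer2015"]
    # With 5 allowed failed attempts and a margin of 2, at most 3 words are kept.
    print(build_wordlist(words, lockout_threshold=5, margin=2))
```

Keeping the list shorter than the lockout threshold is what prevents the check from locking out the accounts it tests.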

Finally, the Pentester bike is about passwords. In the fall of 2015, we did social pentest using phishing - lured domain passwords. The two users who got the password were “Jctym2015” (“Autumn 2015”). They laughed, forgotten. In November 2015, we do a similar pentest in another organization that is not related to the first one, again the same passwords, and again at once with several users. They began to suspect something, found in one orange social network such a guide to action:

I hope someone this simple tips will help to significantly protect their infrastructure. Of course, there are still a lot of different technical and organizational subtleties concerning the questions of "how to do it," and "how not to do it," but all of them can be disclosed only within a specific infrastructure.

1. Strengthen the security of your Windows infrastructure

Do not create local accounts with domain policies

This has been said many times, but it bears repeating because the mistake is so common: do not create local accounts on domain hosts through domain group policy. The danger of doing so is extremely high. It has been written about in many places, but usually on resources devoted strictly to practical security rather than to IT.

The reason for the high danger is that information about this account, including its password, is stored in the groups.xml file on the sysvol share of the domain controller, which by default is readable by ALL domain members. The password is encrypted, but the encryption is symmetric, the key is the same for every copy of Windows, and it is published in clear text on MSDN. It follows that any user can download this file and, with a few simple manipulations, recover the password of the distributed account. Usually an account with administrator rights is distributed this way, and often it is created indiscriminately everywhere...

Solution: use group policies only to add domain accounts to local groups on the hosts. When such a password is decrypted by hand during a pentest, note how few manipulations it takes before the password is obtained.
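
To check whether your own domain already has such files, you can walk a copy of the policies folder and look for XML elements that carry a password. This is a minimal Python sketch, not a complete audit tool. It assumes the password is stored in a `cpassword` attribute next to a `userName` attribute, as in groups.xml; the function name and the path in the usage note are made up for illustration.

```python
import os
import xml.etree.ElementTree as ET


def find_gpp_passwords(policies_root):
    """Return (file path, userName) pairs for every XML element that
    carries a non-empty 'cpassword' attribute under policies_root."""
    findings = []
    for dirpath, _dirnames, filenames in os.walk(policies_root):
        for name in filenames:
            if not name.lower().endswith(".xml"):
                continue
            path = os.path.join(dirpath, name)
            try:
                tree = ET.parse(path)
            except (ET.ParseError, OSError):
                continue  # unreadable or malformed file: skip it
            for element in tree.iter():
                if element.attrib.get("cpassword"):
                    user = element.attrib.get("userName", "")
                    findings.append((path, user))
    return findings
```

Run it against the policies folder of your sysvol share, for example `find_gpp_passwords(r"\\example.local\SYSVOL\example.local\Policies")` (a hypothetical path). Every hit is an account whose password any domain member can recover.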

Countering domain account hijacking through mimikatz / wce

Administrators who are not involved in pentesting and practical information security rarely know about utilities such as mimikatz and wce. Briefly, here is how they work: with local administrator rights, you can extract from RAM the Kerberos tickets, password hashes, and even clear-text passwords of accounts that recently logged on to this host. And since administrators log on often and in many places, the risk that their credentials are compromised is high.

Companies often have machines where, for some reason, ordinary users rather than IT and IS staff work with administrative rights. How they got these rights is a separate question; sometimes it is a necessary evil. Administrators in such cases usually see the threat of unauthorized software being installed, of virus infection, or at most of keyloggers being run to steal passwords, but they do not realize that their own credentials are already at risk.

There are countermeasures of varying effectiveness against these utilities, but often they cannot be applied for various reasons: an old AD schema, a “zoo” in the network that has reached the stage of metastasis, or an infrastructure so large that it has poorly controlled areas.

It should also be noted that in some cases these utilities do not even need to be installed on the host to carry out the attack. A user with administrator rights can easily dump the relevant area of RAM and perform all the manipulations outside the corporate network. This greatly increases the risk that more privileged accounts are compromised.

A solution at no additional cost: manage the infrastructure not with the customary 2-3 accounts (I hope you have at least that?):

- for local work;

- for server and PC administration;

- for domain controllers,

but with at least 4 accounts (or even more), matching the conditional “zones of trust” in the domain, and never use an account outside its zone. That is, keep separate accounts:

- for working on your own personal computer;

- for logging on to domain controllers and managing them;

- for servers;

- for workstations;

- for remote branches, if your domain extends there but you cannot be sure what is happening there at any given moment;

- for the DMZ, if domain hosts have somehow ended up in the DMZ;

- if you are a regular at the Paranoids Anonymous club, break these zones into smaller groups or change your passwords very often.

Ban “test” hosts in the domain

For a similar reason (see the section above), avoid placing shared “test” hosts in the domain whenever possible. Such hosts are most common in companies with software development departments. “To speed things up” and to save on licenses, they often have no antivirus (which, by the way, does catch unencrypted mimikatz and wce), and all the developers and testers involved have administrator rights on them so that they can carry out various dubious actions. Using those administrator rights, they can easily steal the domain credentials of other administrators.

Fine-tune the rights of service accounts

Windows accounts have a range of different logon rights: local logon, logon as a batch job, logon as a service, and so on. A large domain always has service accounts that are needed for the mass operation of various software, services, scheduled tasks, and so on. Do not be lazy: restrict the rights of these accounts to exactly their scope of use and explicitly deny the rights they do not need. This reduces the risk of a threat spreading quickly if control over such an account is lost.

Access SMB by name and prohibit the use of NTLM

It is better to access file resources (SMB) in a domain only by domain name, not by IP address. Besides being easier to administer, this explicitly forces the host to authenticate with the Kerberos protocol, which has its own drawbacks but is much more secure than NTLMv2, the protocol used when a file resource is accessed by IP address. An intercepted NTLMv2 hash is dangerous because a dictionary attack can recover the user's password from it offline, at leisure, that is, without touching the attacked infrastructure at all. Obviously, unlike an online password brute-force attack, this goes unnoticed by administrators.
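
One way to find places where shares are still addressed by IP is to run every UNC path you can collect (logon scripts, shortcuts, mapped drive definitions) through a simple check. This is a minimal Python sketch; the function names are made up for illustration, and how you collect the paths is left to you.

```python
import ipaddress


def unc_host(path):
    r"""Return the host part of a UNC path such as \\host\share\dir,
    or None if the path is not a UNC path."""
    if not path.startswith("\\\\"):
        return None
    host = path[2:].split("\\", 1)[0]
    return host or None


def uses_ip_address(path):
    """True if the UNC path addresses the server by IP, not by name."""
    host = unc_host(path)
    if host is None:
        return False
    try:
        ipaddress.ip_address(host)  # raises ValueError for host names
    except ValueError:
        return False
    return True
```

Each path for which `uses_ip_address` returns True is a candidate for being rewritten with the server's domain name.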

As for the NTLM protocol itself (the one without “v2”), it should be prohibited. Besides brute-force attacks, it is open to the pass-the-hash attack. This attack essentially replays the NTLM hash as is, with no attempt to recover the password, against any host where NTLM is allowed. The hash itself can be stolen from another session during the attack. If both NTLM protocols are enabled, an attacker may be able to downgrade the negotiation from NTLMv2 to NTLM, and the victim host will choose the weakest authentication.

Be careful: many infrastructures are a slowly modernized “zoo” that, for obscure reasons, keeps up the old traditions of the early 2000s, so anything is possible there.

Block WPAD

Two mechanisms are enabled by default and together allow a man-in-the-middle attack in which the attacker is almost impossible to detect. They are automatic proxy detection through a special network name (WPAD) and the broadcast name resolution mechanism LLMNR.

Through WPAD, some software (in a domain, often the WSUS update service and some browsers) searches for an HTTP proxy and is ready, if asked, to authenticate to it transparently with NTLM(v2). It thus voluntarily “hands over” the hash of the account that initiated the connection. That hash can later be run against a dictionary to recover the password, or used in the pass-the-hash attack described above if NTLM is not disabled (see the section above).

Devices look for the WPAD server through DNS and, if that fails, fall back to an LLMNR or NetBIOS broadcast request. At that point it is much easier for an attacker to answer the host's question about where the “correct” proxy configuration server is. An additional negative effect is that this search directly slows down connections, because time is spent looking for a proxy.

Solution: in group policies, prohibit both proxy autodetection for software and the LLMNR protocol. Put a stub in DNS for the WPAD name (wpad.domain.name). LLMNR is essentially an imitation of DNS on the L2 network segment by means of broadcast requests; in a domain network with a properly working DNS it is not needed. NetBIOS is more complicated: it is still used in many cases, and turning it off can break things, so a loophole for imposing WPAD still remains.

Set UAC to maximum

Do not turn off User Account Control (UAC); on the contrary, set it to maximum. UAC is, of course, neither very convenient nor very informative, but you cannot imagine how frustrating it is for a pentester to gain the formal ability to execute commands remotely as a user with administrator rights and yet be unable to actually perform the privileged actions, exactly the ones that pester the user with confirmation requests during “normal” work. Of course, UAC can be bypassed or privileges escalated, but those are extra obstacles.

Disable hidden file resources

At least on the machines of administrators and “important” users and on servers, be sure to disable the hidden shares ADMIN$, C$, D$, and so on. They are the first and favorite target of any network malware and of intruders who have obtained more or less privileged rights in the domain.

Network drives as shortcuts

A controversial measure, and clearly not suitable for everyone, but there was a case when it saved a network from a ransomware epidemic. Whenever possible, give users remote file shares not as mapped network drives but as shortcuts on the desktop. The reason is simple: malware sometimes enumerates drive letters and is not always able to find resources that are not mapped as drives.

2. Mail system. SPF

Review the SPF record

Check the SPF record of your mail domain. And then check it again.

Many people underestimate the significance of this record, and at the same time do not understand how the SMTP protocol works. The SPF record states which hosts may legitimately send mail on behalf of your domain. For the particular case of mail sent to your domain from your domain (that is, internal correspondence within your own domain), this record states which specific hosts may send messages without authentication on behalf of any user. Two seemingly minor errors occur most often, and both lead to huge problems.

1) “~all” at the end of the mail domain's SPF record. I do not know why, but most public manuals recommend leaving this setting. For mail sent from hosts not explicitly listed in the domain's SPF, it gives the message the status “did not pass the check, but is marked as suspicious and still delivered”. This tells the recipient that the decision on the legitimacy of the message is shifted to the strictness of their spam filter and other filtering mechanisms. Moreover, your own spam filter is quite likely to be configured leniently (this is especially common for the company's “public” users, who are afraid of “missing” a message), which means that any Internet host can send you a message from your own domain, and with some probability it will land in the inbox rather than in spam. During pentests with a social component it is very amusing to watch users and even IT administrators unquestioningly carry out urgent instructions from the “important bosses” in such forged emails. Of course, your counterparties may have weak spam filters too, and then you will be making them attractive offers without knowing it.

For the overwhelming majority of companies, the SPF record should end with -all only, of course after its contents have been thoroughly checked.

2) Sometimes the SPF record of the corporate domain mistakenly lists the external addresses through which the company's users access the Internet. The consequences are clear: anyone inside can send illegitimate mail from the corporate domain to anywhere. Administrators in such situations usually say “but our mail server requires authentication”, completely forgetting how the SMTP protocol itself works.

Once I saw the egress address of the guest WiFi listed in the SPF. This immediately lets an attacker send legitimate mail from the target domain without even obtaining privileged access to the victim's internal network.

The DKIM signature system, which shows who the sender really is, also helps against such attacks, but it cannot be rolled out instantly, so start with the SPF settings: tighten the record and do a full audit of the allowed addresses.
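
The first of the two errors is easy to check automatically. This is a minimal Python sketch that takes the text of an SPF record and reports how the “all” mechanism is qualified. The function names and the sample record are illustrative, and the sketch does not follow include or redirect terms, so a record that delegates its policy elsewhere still needs a manual look.

```python
# Qualifier in front of "all" and the SPF result it produces.
ALL_TERMS = {"all": "+", "+all": "+", "-all": "-", "~all": "~", "?all": "?"}
RESULTS = {"+": "pass", "-": "fail", "~": "softfail", "?": "neutral"}


def all_qualifier(record):
    """Return the qualifier of the first 'all' mechanism, or None."""
    terms = record.lower().split()
    if not terms or terms[0] != "v=spf1":
        raise ValueError("not an SPF record: " + record)
    for term in terms[1:]:
        if term in ALL_TERMS:
            return ALL_TERMS[term]
    return None


def audit_spf(record):
    """Return a one-line verdict on how unlisted senders are treated."""
    qualifier = all_qualifier(record)
    if qualifier is None:
        return "check: no 'all' mechanism in this record"
    if qualifier == "-":
        return "ok: unlisted senders get 'fail'"
    return f"weak: unlisted senders get '{RESULTS[qualifier]}' instead of 'fail'"
```

For example, `audit_spf("v=spf1 mx ip4:192.0.2.10 ~all")` reports a weak record. The second error, stray addresses in the list, still has to be reviewed by a person who knows which hosts really send mail.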

3. LAN design

Organize a DMZ

Organize a DMZ, and stop “forwarding” ports from the Internet into the main network once and for all. A proper DMZ is one whose resources are closed off by firewalls on both sides (from the internal network and from the Internet), and where traffic is allowed extremely selectively and only to the minimum necessary extent. In my opinion, a real IS specialist should assume that a host in the DMZ has already been hacked and assess the risks on that basis. What prompts such thoughts is that the DMZ very often hosts non-standard applications with specific business logic, delivered by contractors on a production-line basis or made to order, and very poorly checked, for example for trivial web vulnerabilities. A staff information security specialist is usually able to build a DMZ but not to test applications for vulnerabilities. Act on that basis.

A typical variant of a properly organized DMZ and the general principle of traffic flow. Arrows show the direction in which traffic to / from the DMZ is initiated.

A budget DMZ design for paranoids, in which each resource sits in a separate isolated network and the resources do not “eat” much traffic:

As for port forwarding, besides the risk of the service being hacked, there is an implicit risk of leaking information about the internal network. For example, during pentests you can often see RDP ports that were “hidden” by moving them from the standard 3389 to something like 12345. Of course, scanners detect them easily and identify them as RDP, but beyond mere detection they can also reveal, for example, the computer name and domain (through the certificate of the RDP service) or information about user logins.

Fully isolated guest WiFi

Guest WiFi should be isolated from the main network as much as possible (a separate VLAN, a separate cable from the Internet router to the access point, and so on). In addition, if possible, turn guest WiFi off outside working hours by a schedule on the equipment.

There is one nuance here. Many people correctly put guest WiFi into a separate isolated VLAN but for some reason hand clients the addresses of the Active Directory DNS servers. The most rational excuse is “so that resources in the DMZ work by their internal addresses”. However, this is a vulnerability, and it greatly helps an attacker map the internal structure of the network without authorization, because DNS queries (PTR lookups across the internal IP ranges) are usually not restricted in any way.

The solution is to set up a lightweight internal DNS server specifically for WiFi, or to use public DNS servers on the Internet.
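
To see what a guest could learn, you can run the same PTR sweep yourself from a device on the guest network. This is a minimal Python sketch; the function names are made up, the address range in the usage note is hypothetical, and lookups go through whatever DNS servers the guest client was given.

```python
import ipaddress
import socket


def ptr_names(cidr):
    """Reverse-lookup (PTR) names for every host address in the range."""
    network = ipaddress.ip_network(cidr)
    return [address.reverse_pointer for address in network.hosts()]


def resolvable_hosts(cidr, lookup=socket.gethostbyaddr):
    """Map each address in the range that has a PTR answer to its name.

    'lookup' is injectable for testing; by default the system resolver
    is used."""
    found = {}
    for address in ipaddress.ip_network(cidr).hosts():
        try:
            hostname = lookup(str(address))[0]
        except OSError:
            continue  # no PTR record, or the resolver refused to answer
        found[str(address)] = hostname
    return found
```

If `resolvable_hosts("10.0.0.0/24")`, run from the guest WiFi, returns names of internal servers, the guest network is leaking the structure of the internal one.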

Do not build “corporate” WiFi on a single pre-shared key

This is a very common problem. A single WiFi key often lives for years, because “we can't bother the users yet again”. Over time this inevitably reduces the security of such a configuration to zero. The main reasons are staff turnover, device theft, malware, and the possibility of brute-forcing the network password.

Solution: authenticate to WiFi only through WPA2-Enterprise, so that each user has personal, easily blocked credentials that are managed centrally. If WiFi devices do not really need the internal network and only need the Internet, build that WiFi like a guest network. The design of WPA2-Enterprise authentication itself is a topic for a separate article.

Proper network segmentation

Segment the network into VLANs as much as possible and limit broadcast traffic as much as possible. Every network design manual says this, yet for some reason hardly anyone follows it, especially among small and medium-sized companies, although it is extremely useful both for ease of administration and for security.

In any case, be sure to keep user PCs separate from servers, and have a separate VLAN for device management interfaces (network devices, iLO / IPMI / IP KVM interfaces, and so on). All this reduces the opportunities for address spoofing, simplifies the configuration of network access rules, lowers the likelihood of attacks based on broadcast requests, and therefore makes the network more stable.

In my opinion as a security person (and I can already feel the tomatoes flying from the network engineers), the number of user VLANs depends more on the number of access switches and on the number of conditional user groups by administrative criteria than on the number of users. It makes sense to have roughly as many user VLANs as there are access switches (even if they are stacked). Broadcast traffic will then be tightly limited, and no single VLAN will be “smeared” across many access switches. This does not create extra work for the network, since user traffic mostly goes either to the server segment or to the Internet (that is, towards the core or distribution layer in any case) rather than between users. The network also becomes easier to debug, and attacks based on broadcast packets become easier to prevent.

For small networks the situation is quite different: often there is nothing to segment because the network is too small. However, every organization that acquires even a minimal set of servers usually buys managed switches along with them, sometimes with L3 functions. For some reason these are used as plain switches, often without even minimal configuration, although setting up L3 for simple routing between 2-3 networks is very easy.

Protection against ARP spoofing and rogue DHCP servers

Two mechanisms, named here in Cisco terms, DHCP snooping and ARP Inspection, will help spoil the fun for various prankster users and for real intruders. Once they are in place, you prevent rogue DHCP servers from appearing on the network (they can appear by mistake, when someone confuses a desktop “home” router in the “Soviet soap dish” form factor with a switch of the same shape, as well as through an attack) and man-in-the-middle attacks over the ARP protocol. Combined with small VLANs (see the previous section), this greatly reduces the possible damage.

If these functions cannot be configured, at the very least bind the MAC address of the network gateway rigidly to the physical port of the switch.

Port security

If the cabling and the equipment allow it, configure port security on the access switches. It protects well, though not completely, against the connection of “stray” and “extra” devices and against the unauthorized relocation of computers.

Countering “stray” devices

Even with the protective measures described above (port security, DHCP snooping, ARP inspection), “stray” devices can still appear, and practice shows that the most likely such device is a “home” router with the “clone the computer's MAC address to the external interface” function. The next step in the development of network tyranny is therefore 802.1x. That, however, is a serious solution that requires good planning, so a simpler way of finding unauthorized routers is possible (finding, not suppressing). Analyzing traffic on an intermediate network by the TTL field of the IP protocol helps here. You can set up a switch port that mirrors traffic to a server running a sniffer and analyze this traffic for packets with an abnormal TTL. Plain tcpdump / Wireshark is enough for this. Traffic coming through such routers will have a TTL lower by 1 than legitimate traffic.

Alternatively, you can configure a filtering rule on specific TTL values, if the network devices allow it. Of course, this will not save you from serious intruders, but the more obstacles, the better for security.
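
The TTL analysis can be sketched in a few lines of Python once the sniffer has produced a list of (source address, TTL) pairs for the user segment. The sketch assumes that hosts send packets with one of the common initial TTL values 64, 128, or 255, and that the capture point is in the same segment as the hosts, so legitimate traffic arrives with the initial value untouched. The function names are made up for illustration.

```python
# Common initial TTL values used by operating systems (an assumption:
# a host configured with a different initial TTL will be misjudged).
COMMON_INITIAL_TTLS = (64, 128, 255)


def hops_travelled(ttl):
    """Estimate how many routers a packet crossed before capture."""
    if not 0 < ttl <= 255:
        raise ValueError(f"TTL out of range: {ttl}")
    for initial in COMMON_INITIAL_TTLS:
        if ttl <= initial:
            return initial - ttl


def sources_behind_router(packets, expected_hops=0):
    """Return the sorted source addresses whose packets crossed more
    routers than expected on the way to the capture point.

    'packets' is an iterable of (source_ip, ttl) pairs."""
    suspicious = set()
    for source_ip, ttl in packets:
        if hops_travelled(ttl) > expected_hops:
            suspicious.add(source_ip)
    return sorted(suspicious)
```

If the mirror port sits one routed hop away from the users, pass `expected_hops=1`. Addresses in the result deserve a visit to the desk they belong to.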

Remote access

When setting up remote access to the network, forget about PPTP. It is quick and easy to configure, of course, but apart from the vulnerabilities in the protocol itself and in its components, it is bad because network scanners see it very well (it runs over TCP on one fixed port), it often passes poorly through NAT because its transport is GRE, which makes users complain, and its design includes no second authentication factor.

To make an external attacker's job easier, authentication on such a VPN is usually tied to domain accounts with no second factor, and this is often exploited.

Minimal solution: use protocols that run over UDP (or are freely encapsulated in UDP), which are less visible to scanning, with pre-authentication by PSK or by certificate (L2TP / IPSec, OpenVPN, various vendor implementations of IPSec). Be sure to restrict the list of addresses and ports inside the network that can be reached through the VPN.

Prohibit direct arbitrary Internet access from the “user” network

Direct Internet access for users is evil, although it is becoming more and more common as Internet channels get cheaper, especially in small and medium-sized companies. Many administrators limit outgoing ports for users to a minimal set such as 80 and 443 in an attempt to save bandwidth. From the point of view of malware and intruders, this is no different from unrestricted Internet access.

In my opinion, a proxy server is always needed, even without caching or authentication and even in the smallest networks, together with a ban on direct Internet access for users. The main reason is that all sorts of malware contact their control centers over HTTP(S) or over plain TCP on popular ports (80/443/25/110...) but are often unable to discover the proxy settings in the system.

Take control of IPv6

If you have never been interested in using IPv6, either repeat all your traffic filtering for IPv6 or disable it. Your infrastructure may be using it implicitly without your knowing. This is dangerous because it opens the way to unauthorized network access.

Interbranch traffic

If you control a large network with remote branches connected by site-to-site VPN, do not be too lazy to restrict the traffic that flows to your center as much as possible, and do it on the central equipment rather than in the branches. Do not treat the channels to remote branches as your “own” network. Branches are usually less well protected than the center, and most attacks start precisely from the branches, because the center's network “trusts” them.

Limit ICMP ping

Limit the list of addresses that may “ping” important services. This makes your hosts harder to discover, if only slightly. At the same time, do not forget that ping is not the whole ICMP protocol but only one type of ICMP message, and ICMP must not be prohibited entirely. At the very least you will definitely need some Destination Unreachable messages for correct interaction with all networks. Do not create a so-called MTU Discovery Black Hole by blocking ICMP completely on your routers.

4. Traffic Encryption

SSL certificates for everything and everywhere

Do not be lazy: protect with encryption every service to which SSL / TLS can be attached. For hosts in an Active Directory domain, you can set up AD Certificate Services or distribute service certificates through group policies. If there is no money to protect public resources, use free certificates, for example from startssl.

For yourselves, that is, for administrators, you can always use a lightweight certificate authority of your own, for example easy-rsa, or self-signed certificates. You would notice, wouldn't you, if your internal administrative resource suddenly showed a trust error for a certificate you had approved earlier?

If it seems to you that nothing threatens your “business card” site, bear in mind that encryption protects not only against credential theft but also against traffic substitution. Everyone has probably seen advertising carefully injected by telecom operators into sites served over plain HTTP. And what if it is not advertising that gets injected, but the “right” script?

Wildcard SSL certificate

If you have become the happy owner of a wildcard certificate (for “*.your-domain” domains), do not rush to spread it across all public servers. Set up a separate server (or cluster), for example on Nginx, to “offload” the encryption of incoming traffic. This greatly reduces the number of channels through which the certificate's private key can leak, and if it is compromised you will not have to replace it on many services at once.

5. A few words about Linux-based web servers

Linux hosts are most often seen as web servers running the classic LAMP stack (Linux + Apache + MySQL + PHP, or any other scripting language), which is most often broken through holes in the web application. I will therefore describe the minimum for this stack that is relatively easy to implement and does not require much experience in configuration and debugging (unlike measures such as SELinux / AppArmor).

Access to the server

Do not be lazy: deny ssh logins for root, allow key-based authentication only, and move the ssh port somewhere far from the standard 22.

If the server has more than one administrator, enable sudo for each of them, and give root a password known to no one except the sheet of paper that always lies in an envelope in the safe. In this case, password logins over ssh are absolutely unacceptable.

If, on the contrary, there is a single administrator, deactivate sudo (especially if for some reason you have kept password logins over ssh) and use the root account for administrative tasks.

And of course the classic: do not work as root.
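
The ssh recommendations above can be checked against the server's actual configuration. This is a minimal Python sketch that reads the text of sshd_config and reports deviations. It makes simplifying assumptions: keywords and values are separated by whitespace, Match blocks and included files are not interpreted, and the function names are made up for illustration.

```python
def parse_sshd_config(text):
    """Map each lower-cased keyword to the first value given for it
    (sshd uses the first value it obtains for a keyword)."""
    settings = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            settings.setdefault(parts[0].lower(), parts[1].strip())
    return settings


def audit_sshd(text):
    """Return a list of findings; an empty list means no deviations."""
    settings = parse_sshd_config(text)
    findings = []
    for key in ("permitrootlogin", "passwordauthentication"):
        value = settings.get(key)
        if value is None:
            findings.append(f"{key}: not set explicitly, the built-in default applies")
        elif value.lower() != "no":
            findings.append(f"{key}: is '{value}', expected 'no'")
    if settings.get("port", "22") == "22":
        findings.append("port: sshd listens on the standard port 22")
    return findings
```

A typical call would be `audit_sshd(open("/etc/ssh/sshd_config").read())`, assuming the configuration lives at the usual path.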

Iptables

Do not be lazy to configure iptables, even if it seems to you that iptables will decide nothing, for example when the only network applications on the server are the web server and ssh, which have to be allowed anyway. If an attacker gains minimal privileges on the server, iptables will help prevent the attack from spreading further.

Properly configured iptables, like any other firewall, allows only the minimum necessary (including outgoing connections, through the OUTPUT chain rules). For a minimal web server configuration, iptables will hold no more than 10 lines. This is far simpler than configuring a firewall on a Windows domain host, where you can inadvertently block dynamic RPC ports, domain services, and other communications the host needs to work, because even the sequence of communications is not always obvious. Configuring iptables for a web server therefore requires no special knowledge of networking.
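
As an illustration of how small such a ruleset is, here is a minimal Python sketch that builds one in the format read by iptables-restore. It is one possible layout, not a recommendation for every server. The ssh port, the administrators' network, and the function name are assumptions, and outgoing traffic is limited to DNS and replies, so anything else the server needs (package updates, mail, a database on another host) has to be added explicitly.

```python
import ipaddress


def web_server_ruleset(ssh_port=2222, admin_network="192.0.2.0/24"):
    """Build a default-deny ruleset for a web server as text in
    iptables-restore format."""
    ipaddress.ip_network(admin_network)  # raises ValueError if malformed
    if not 0 < int(ssh_port) < 65536:
        raise ValueError(f"bad port: {ssh_port}")
    lines = [
        "*filter",
        ":INPUT DROP [0:0]",    # drop everything not allowed below
        ":FORWARD DROP [0:0]",
        ":OUTPUT DROP [0:0]",
        "-A INPUT -i lo -j ACCEPT",
        # replies to our own connections and related ICMP errors
        "-A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
        "-A INPUT -p tcp -m multiport --dports 80,443 -j ACCEPT",
        f"-A INPUT -s {admin_network} -p tcp --dport {int(ssh_port)} -j ACCEPT",
        "-A OUTPUT -o lo -j ACCEPT",
        "-A OUTPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
        "-A OUTPUT -p udp --dport 53 -j ACCEPT",  # DNS lookups
        "COMMIT",
    ]
    # iptables-restore lines starting with '#' are comments; keep the
    # output free of them so it can be diffed easily.
    return "\n".join(line for line in lines if not line.startswith("#")) + "\n"
```

The result has seven rules. Before loading it with iptables-restore, review it on a test host and keep a console session open, since a mistake in the ssh rule locks you out.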

Countering privilege escalation after a hack

Run Apache in a chroot. Alternatively, make separate file system partitions for /var/ and /tmp and mount them with the noexec and nosuid flags. This protects the server against local exploits executed in binary form, which could raise the attacker's privileges. Prohibit the execution of script interpreters such as Perl and Python for “other users” (chmod o-x), the class the web server user falls into, unless the web server or other services require them. The fewer “other services” there are in the system, and therefore users for them, the easier this is to do.
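
Whether the mount flags are really in effect can be verified from the kernel's mount table. This is a minimal Python sketch that takes text in the /proc/mounts format and reports which of the required flags are missing. The function name and the default lists of mount points and flags are illustrative.

```python
def check_mounts(mounts_text, mount_points=("/tmp", "/var"),
                 required=("noexec", "nosuid")):
    """Return {mount point: problems}; an empty dict means every mount
    point is a separate mount with all required flags."""
    options_by_mount = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts fields: device, mount point, fs type, options, ...
        if len(fields) >= 4:
            # a later entry for the same mount point overrides an earlier one
            options_by_mount[fields[1]] = set(fields[3].split(","))
    problems = {}
    for mount_point in mount_points:
        if mount_point not in options_by_mount:
            problems[mount_point] = ["not a separate mount"]
            continue
        missing = [flag for flag in required
                   if flag not in options_by_mount[mount_point]]
        if missing:
            problems[mount_point] = missing
    return problems
```

On the server itself it can be called as `check_mounts(open("/proc/mounts").read())`.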

A couple of words about configuring a web server, using Apache as an example

Listed here is what a system administrator can do to protect a web application without knowing anything about the specifics of web programming.

- Do not allow a VirtualHost that answers requests of the form http(s)://ip-address/, even if the server hosts a single application. This significantly reduces the chance of the application being discovered.

- Disable the server signature on error pages and the version banner in the Apache configuration (the ServerSignature Off and ServerTokens Prod options); this makes it harder to determine the OS and Apache versions.

- Use mod_headers to set the HttpOnly and Secure attributes on your web application's cookies, even if the application sets them itself. This protects against cookie interception by eavesdropping on unencrypted HTTP and against some XSS attacks.

- Disable error output on site pages in the configuration of the site's language (this concerns PHP first of all). This makes it harder for an attacker to learn the weak points of your web application.

- Make sure directory indexes are turned off (the “-Indexes” option in the site settings or in .htaccess files).

- Additionally, put basic authentication on the directory with the site's admin panel.

- Set up a forced redirect from port 80 to 443 if you have TLS configured. Disable outdated SSL protocol versions (SSLProtocol all -SSLv2 -SSLv3), and disable weak ciphers and the no-encryption option through the SSLCipherSuite directive. This protects against attacks that “downgrade” the level of encryption.

You can check your SSL settings, for example, at https://www.ssllabs.com/ssltest/
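
Two of the items in the list, the version banner and the cookie attributes, are visible from outside in the response headers, so they are easy to verify. This is a minimal Python sketch that inspects a list of (name, value) header pairs; the function name is made up, and treating any digit in the Server header as a version leak is a simplifying assumption.

```python
def audit_response_headers(headers):
    """Return findings for an HTTP response given as (name, value) pairs."""
    findings = []
    for name, value in headers:
        lowered = name.lower()
        if lowered == "server" and any(ch.isdigit() for ch in value):
            findings.append(f"Server header reveals a version: {value}")
        elif lowered == "set-cookie":
            cookie_name = value.split("=", 1)[0].strip()
            # attributes follow the first ';', e.g. "Path=/; HttpOnly"
            attributes = {
                part.strip().split("=", 1)[0].lower()
                for part in value.split(";")[1:]
            }
            for required in ("secure", "httponly"):
                if required not in attributes:
                    findings.append(
                        f"cookie {cookie_name!r} lacks the {required} attribute"
                    )
    return findings
```

The header pairs can be taken from any HTTP client; in the Python standard library, `http.client.HTTPResponse.getheaders()` returns them in this form.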

Configure system auditing

Enable and configure auditd for significant events (user creation, permission changes, configuration changes), with notifications sent to a remote server. No hack is carried out in a single moment, so by inspecting the logs periodically, even manually, you can spot suspicious activity. It will also help with the “debriefing” after incidents.

Install only the minimum required in the system

Linux-based operating systems are good in that unnecessary components can be flexibly disabled / removed. In terms of security this applies first of all to network and local daemons. If you see daemons running on your freshly installed server that have no direct or indirect relation to the server's task, think about whether they are really needed. In most cases they are just part of the standard installation and can be turned off and / or removed easily and without consequences. For example, not everyone needs RPC, which is enabled by default in Debian. Do not wait until vulnerabilities are found and exploited in such services while you have forgotten to update.

6. Self control

Even without the skills of a penetration testing specialist, one can additionally include in the self-control the minimal “Pentester” actions. Help you:

Security scanners

Check which open ports are actually visible using nmap / zenmap. Sometimes the results are not what you expect, for example when traffic filtering rules are configured incorrectly, or when the network is large and responsibility for it is heavily divided among different staff.

Check the hosts on the network for typical vulnerabilities with the OpenVAS scanner. Here too the results can be unexpected, especially in large networks, where some degree of chaos usually exists. The main thing when using security scanners is to distinguish false positives from real vulnerabilities.

All of the utilities listed are free. Run the checks regularly, and do not forget to update the scanners.
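To make the idea of a port visibility check concrete, here is a minimal Python sketch of a plain TCP connect check. It is not a replacement for nmap, which does far more; it only shows what "visible from this point in the network" means. The function name, the timeout value and the port list are assumptions, and it should be run only against hosts you are authorized to test.

```python
import socket


def open_tcp_ports(host, ports, timeout=0.5):
    """Return the ports on host that accept a TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success and an error code otherwise.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found
```

Running the same check from several network segments and comparing the results with what the filtering rules are supposed to allow is often enough to reveal a misconfigured rule.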

Brute force accounts

Check employee accounts for weak passwords. In the minimum configuration, all you need is a file share that any domain user can access after authenticating, and the THC Hydra utility. Build dictionaries of passwords that satisfy your password policy but are still simple, such as “123QAZxsw”. The number of words in each dictionary should be no more than the number of authentication attempts allowed in the domain, minus 2-3 as a safety margin, so that accounts are not locked out. Then run all domain accounts through the brute force; I am sure you will find a lot of interesting things.

Of course, you must obtain official (preferably written, on paper) permission from management before running these checks; otherwise they will look like an attempt to gain unauthorized access to information.
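The dictionary-building step can be sketched as follows. This Python example generates simple passwords that still pass a complexity policy and caps the list below the lockout threshold. The policy itself (at least 8 characters, with upper case, lower case and a digit), the keyboard-walk candidates and all names are assumptions for illustration; the season-plus-year words are Russian season names typed in the English keyboard layout, the pattern behind the “Jctym2015” password mentioned in this article.

```python
import re

# Russian letters and the QWERTY keys in the same physical positions.
# Punctuation keys are not shift-mapped; that is enough for the words used here.
RU = "йцукенгшщзхъфывапролджэячсмитьбю"
EN = "qwertyuiop[]asdfghjkl;'zxcvbnm,."
LAYOUT = str.maketrans(RU + RU.upper(), EN + EN.upper())


def meets_policy(password, min_length=8):
    """Assumed policy: minimum length plus upper case, lower case and a digit."""
    return (
        len(password) >= min_length
        and re.search(r"[a-z]", password) is not None
        and re.search(r"[A-Z]", password) is not None
        and re.search(r"\d", password) is not None
    )


def build_dictionary(year, lockout_threshold, margin=3, extra=()):
    """Build a short list of weak-but-compliant passwords for one test run."""
    seasons = ["Осень", "Зима", "Весна", "Лето"]  # autumn, winter, spring, summer
    candidates = [season.translate(LAYOUT) + str(year) for season in seasons]
    candidates += ["123QAZxsw", "Qwerty123", "1qaz2WSX"]  # keyboard walks
    candidates += list(extra)
    # Stay below the lockout threshold so that no account gets locked.
    limit = max(lockout_threshold - margin, 0)
    unique = list(dict.fromkeys(c for c in candidates if meets_policy(c)))
    return unique[:limit]
```

With a lockout threshold of 5 and the default margin of 3, the list holds only two words, so the order of the candidates matters: put the patterns you consider most likely first.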

Moment of humor

Finally, a pentester's tale about passwords. In the fall of 2015 we ran a social-engineering pentest using phishing to lure out domain passwords. Two of the users whose passwords we obtained had the same one: “Jctym2015” (the Russian for “Autumn 2015” typed in the English keyboard layout). We laughed and forgot about it. In November 2015 we ran a similar pentest at another organization, unrelated to the first, and saw the same passwords again, and again from several users at once. We began to suspect something, and on a certain orange social network we found this guide to action:

I hope these simple tips will help someone significantly improve the protection of their infrastructure. Of course, there are many more technical and organizational subtleties around the questions of “how to do it” and “how not to do it”, but they can only be covered in the context of a specific infrastructure.

Source: https://habr.com/ru/post/283482/
