We present data and code in order: data and markup, part 2

This series of two articles on performance and memory describes the basic principles and provides tips for developers on how to improve software performance. These articles address, in particular, memory performance and composition. In the first part, we talked about the use of registers and the use of blocking algorithms to increase data reuse. This part of the article first describes the layout of data for regular parallelization — programming for shared memory with threads, and then distributed computing over MPI networks. The article describes the concepts related to parallelization: vectorization (SIMD instructions) and working with shared memory (multi-threaded architecture), as well as calculations with distributed memory. Finally, this article compares the “array of structures” (AOS) and “array structures” (SOA) data layouts.

The basic principle of performance is described in the first part: reuse of data in registers or in the cache before they are crowded out. The principles of performance described in this article are as follows: place the data as close as possible to the place where they are most often used; post data for sequential access; Avoid data conflicts.

Programming shared memory with threads

Let's start with programming shared memory with threads. All threads together access the same memory within the process. There are many models of flow control. The most famous among them are Posix * threads and Windows * threads. In the work associated with the proper creation of threads and their management, errors may occur. In modern programs, consisting of many modules and created by large teams of developers, errors in parallel programming with the use of multi-threaded calculations often occur. Several packages have been developed to simplify the creation of threads, their management and the most efficient use of parallel threads. The two most popular packages are OpenMP * and Intel Threading Building Blocks. The third flow control model, Intel Cilk Plus, is not as widely used as OpenMP and Threading Building blocks. All these flow control models create a thread pool that is reused for each of the parallel operations and parallel domains. OpenMP has the advantage of stepwise parallelization using directives. At the same time, OpenMP directives can often be added to existing programs in a step-by-step process and with minimal code changes. If you allow the runtime library to manage the maintenance of threads, this greatly simplifies the development of multi-threaded programs. It also provides a unified flow control model that all code developers must adhere to, which reduces the likelihood of some common errors. An optimized flow control library is used by all developers.

')

The principles of parallel programming mentioned in the introductory part are to place data as close as possible to the place where they will be used and to avoid data movement. In multithreaded programming in the default model, the data is common to the entire process; all threads can access it. The introductory flow management articles emphasize how easy it is to apply OpenMP to do (Fortran *) loops or for © loops. Such methods are usually significantly accelerated when executing code on two or four cores. Often, these methods are scaled to 64 threads or more. However, additional flows are not less often used, and in some such cases this is due to improper data composition. The point is to create an architecture suitable for parallel code.

It is important to explore the possibility of parallelization at a higher level in the code call stack than originally intended by the developer or development tools. If the developer believes that any tasks or data can be processed in parallel, then try to answer the following questions (in the light of Amdal’s law): “Can I start using parallel operations higher in the call stack before going to this place?”, “If I’m I’ll do this, will the parallel area of the code increase, which will speed up the work when more threads are involved? ”

Consideration should be given to the placement of the data, and to what data can be shared through messages. The data layout should include the placement of data where it is most used, and from there it can be sent to other systems. For applications represented in a table or in a physical domain with specified partitions, MPI programs often add a row of “phantom” cells around the subordinate table or subordinate domain. In the "phantom" cells, the data values sent by the MPI process updating these cells are stored. In multi-threaded programs, “phantom” cells are usually not used, but if the length of the interface is reduced for sending messages, it is desirable to reduce the boundaries of partitions using shared memory. This reduces the need for blocking threads (or important sections) and the likelihood of cache conflicts associated with cache ownership.

In large multiprocessor systems, a common global address memory space is used, but the non-uniform memory architecture is also used. Retrieving data located in a memory module closest to another processor takes longer (delay increases) than retrieving data from a memory module closest to the processor that processes the code. The closer the memory to which the call is located, the less delay.

Figure 1. Memory access speed, relative delays in accessing data

If one thread allocates and initializes data, then this data is usually placed in memory closest to the processor in which the thread allocates and initializes the memory (Fig. 1). You can improve performance by forcing each thread to allocate and give the first reference to the memory that will be used to the greatest extent by this thread. Usually this is enough for the thread to run in the memory area closest to the processor. If the thread is created and active, then the OS usually leaves it in the same processor. Sometimes it is beneficial to explicitly bind a thread to a specific core in order to avoid transferring the flow between the cores. If the data has a specific layout, it may be beneficial to assign or bind the threads to specific cores in accordance with this layout. The Intel OpenMP Execution Library (as part of Intel Parallel Studio XE 2016 ) contains explicit mapping attributes that work effectively on Intel Xeon Phi co-processors.

These attributes are Compact, Scatter and Balanced.

- The Compact attribute matches sequential or neighboring threads with symmetric multithreaded sets (SMT) on one core before assigning threads to other cores. This is the ideal solution for situations where streams access data that is common to streams with sequential numbering (i.e., neighboring streams).

- The Scatter attribute assigns one thread to each core, then returns to the original core to plan other threads in the SMT.

- The Balanced attribute uniformly assigns threads with consecutive or adjacent identifiers to the same core. This is the recommended starting attribute for optimizing stream mapping in the Intel 16.0 C ++ compiler documentation. The Balanced option is available only for the Intel Xeon Phi product family. For ordinary CPUs, it is invalid. When all SMTs on the Xeon Phi platform are enabled, the Balanced and Compact attributes work in the same way. If only some SMTs are involved on the Xeon Phi platform, the Compact method will fill all SMTs on the first cores, and some cores will remain free (ideally).

When working with dozens of streams, it is important to place the stream data as close as possible to the place where it will be used. Data layout is important both for programs using MPI networks and for multi-threaded programs.

With regard to memory and data composition, two factors should be considered. They are fairly simple, and can have a significant impact on the work. The first is false sharing, the second is data alignment. False sharing is one of the interesting performance factors in multi-threaded programs. All streams working with data are independent. There is no shared access, but the cache line containing both pieces of data is shared. Therefore, the name “false shared data access” is used: as such, there is no shared data access, but the performance is the same as if shared access was used.

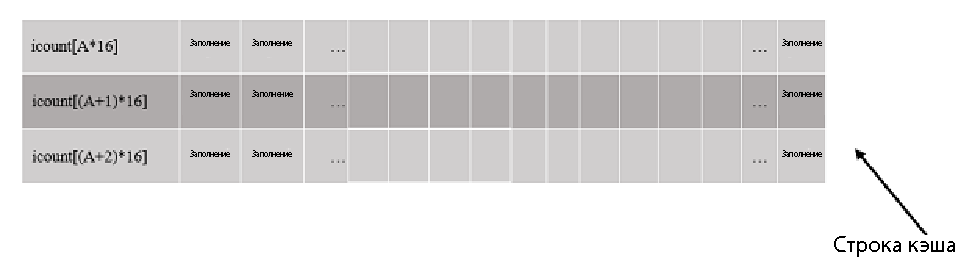

Imagine a situation where each stream increases the value of its own counter, but the counter itself is a one-dimensional array. Each thread increases the value of its counter. To increase the counter value, the kernel must own a cache line. For example, thread A of processor 0 becomes the owner of the cache line and increases the value of the iCount [A] counter. At the same time, the stream A + 1 of processor 1 increases the value of the iCount [A + 1] counter. To do this, processor core 1 takes ownership of the cache line and thread A + 1 updates the value of the counter. Since the value in the cache line changes, this line is invalidated for processor 0. In the next iteration, processor 0 takes ownership of the cache line and changes the value of iCount [A], which, in turn, cancels this cache line for processor 1. When the thread is in processor 1 will be ready to record, the cycle repeats. A significant amount of processor cycles is spent on maintaining cache consistency, since invalidating cache lines, regaining control, and synchronizing with memory affects performance.

The best solution is not to cancel the cache. For example, when entering a loop, each thread can read its counter and store it in a local variable in its stack (the cache is not canceled when reading). When the work is completed, the stream can copy its local value back to a permanent location (see Figure 2). Another alternative solution is to use data padding so that the data is preferably used by one specific stream in its own cache line.

int iCount[nThreads] ; . . . for (some interval){ //some work . . . iCount[myThreadId]++ // may result in false sharing }

int iCount[nThreads*16] ;// memory padding to avoid false sharing . . . for (some interval){ //some work . . . iCount[myThreadId*16]++ //no false sharing, unused memory }

int iCount[nThreads] ; // make temporary local copy . . . // every thread creates its own local variable local_count int local_Count = iCount[myThreadID] ; for (some interval){ //some work . . . local_Count++ ; //no false sharing } iCount[myThreadId] = local_Count ; //preserve values // potential false sharing at the end, // but outside of inner work loop much improved // better just preserve local_Count for each thread Figure 2

False sharing can also occur when assigning scalar values to adjacent memory areas. This case is shown in the code snippet below.

int data1, data2 ; // data1 and data2 may be placed in memory //such that false sharing could occur declspec(align(64)) int data3; // data3 and data4 will be declspec(align(64)) int data4; // on separate cache lines, // no false sharing When a developer creates parallel code from the start and minimizes the use of shared data, it is usually possible to avoid false sharing. If your multithreaded program does not have sufficient scalability (i.e. it does not start working faster when additional streams are used), although there are many independent procedures and few obstacles (mutual exclusions, important sections), then it makes sense to check if a false common access.

Data alignment

Program performance is optimal when data processed by SIMD instructions (AVX512, AVX, SSE4) are aligned to the cache line boundaries. The loss of performance when accessing unaligned data differs depending on the processor family. The work of the Intel Xeon Phi coprocessors is particularly affected by data alignment. On the Intel Xeon Phi platform, data alignment is of paramount importance. This difference is not so great on other Intel Xeon platforms, but performance still noticeably increases when the data is aligned with the cache line boundaries. Therefore, program developers are encouraged to always align data on 64-byte boundaries. On Linux * and Mac OS X * systems, you don’t even need to change the code, you just need to use the corresponding parameter in the command line of the Intel compiler: / align: rec64byte .

For dynamically allocated memory in C, you can replace malloc () with _mm_alloc (datasize, 64) . If _mm_alloc () is used , then _mm_free () should be used instead of free () . A detailed article on data alignment is here .

Also read the compiler documentation. To show the effect of data alignment on two matrices of the same size, we created and launched the block matrix multiplication code used in the first part of this article. In the first case, the matrix A was aligned. In the second case, the matrix A was intentionally shifted by 24 bytes (three Double values). At the same time, the performance (the Intel 16.0 compiler was used) fell by 56–63% for matrices ranging in size from 1200 x 1200 to 4000 x 4000. In the first part, I gave a table with the cycle ordering performance in different compilers. When one matrix was shifted, the Intel compiler stopped producing a performance boost. Developers are advised to read the compiler documentation to learn about data alignment and the options available, so that the compiler can use the aligned data as efficiently as possible. The code for measuring the performance of a matrix that is shifted relative to the cache line is included in the code for the first part of the article .

For more information, see the compiler documentation.

To show the effect of data alignment on two matrices of the same size, we created and launched the block matrix multiplication code used in the first part of this article. The first matrix was aligned. The second matrix was intentionally offset by 24 bytes (three Double values). At the same time, the performance (the Intel 16.0 compiler was used) fell by 56–63% for matrices ranging in size from 1200 x 1200 to 4000 x 4000.

Array of structures or array structure

Processors work very efficiently with sequential memory access. It is very good if each element of the cache line is moved to SIMD registers. If the cache lines are also loaded sequentially, then the preemptive sampling of the processors works in an orderly manner. In an array of structures, the data layout may be approximately the same.

struct { uint r, g, b, w ; // a possible 2D color rgb pixel layout } MyAoS[N] ; In this arrangement, the rgb values are arranged sequentially. If the program works with color plane data, then with high probability the entire structure will be placed in the cache, but only one value will be used each time, for example, g. If the data is stored in an array structure, the layout may be something like this.

struct { uint r[N] ; uint g[N] ; uint b[N] ; uint w[N] ; } MySoA ; If the data is stored in an array structure and the program works with all values of g (or r, or b), then the entire cache will be used with high probability when working in the cache of one cache line. Data is more efficiently loaded into SIMD registers, thereby increasing efficiency and productivity. In many cases, program developers temporarily move data into an array structure for processing, and then copy it back when it becomes necessary. In all possible cases, it is better to avoid this additional copying, because it takes longer to complete.

Intel's Memory Access Pattern (MAP) analysis (Vectorization) Advisor 2016 identifies loops with sequential, non-sequential, and irregular access.

The Strides Distribution column provides cumulative statistics on how often each model is used in a given cycle. In the figure above, two-thirds of the horizontal bar is shaded in blue (this is sequential memory access), and the right third is shaded in red (this is non-sequential access). For code with an “array of structures” layout, Advisor can also receive a “recommendation” for converting an array of structures into an array structure.

Analyzes of access models and memory locality are simplified in the Advisor MAP, they additionally provide a memory usage metric and match the diagnostics of each “step” (i.e. access model) with certain names of objects and arrays of C ++ or Fortran *. For more information about Intel Advisor, see the sites here and here .

Arrays “array structure” and “array of structures” are used in many graphic programs, in programs for calculating particle dynamics (for example, molecular dynamics), for instantaneous data and properties (mass, location, velocity, charge) that can be associated with a point or with a specific body. As a rule, the array structure works more efficiently and faster.

In the Intel compiler, starting from version 2016 Update 1, the transformation “array of structures” -> “structure of arrays” is simplified due to the use of Intel SIMD (Intel SDLT) data composition templates. Using the SDLT templates, you can simply override the container of an array of structures as follows.

SDLT_PRIMITIVE(Point3s, x, y, z) sdlt::soa1d_container<Point3s> inputDataSet(count); This will allow access to Point3s instances by the array structure model. Read more about SDLT here .

There are several articles on the use of arrays of structures and structures of arrays. In particular, it is recommended to read the following articles (English):

- Comparing-Intensive Loop

- How to Manipulate Data Architecture to 32-Bit Intel Architecture

- Structure of Arrays vs Array of Structures in cuda

In most cases, the structure of arrays is faster, but in some cases, the layout and use of data is closer to an array of structures, which at the same time provides higher performance.

Conclusion

Let's sum up. Here are the basic principles to follow in terms of performance and data composition. Structure the code to minimize data movement. Reuse data as long as it is in the registers and in the cache (this also allows you to move less). You can limit the movement of data by locking loops. This is especially important for programs with two-dimensional or three-dimensional layout. Analyze the layout for parallelization: how tasks and data are distributed for parallel computing. Proper domain demarcation techniques improve the efficiency of both messaging (MPI) and shared memory programming. In the structure of arrays, less data is usually moved than in an array of structures, and the speed is higher. Avoid spurious sharing, create truly local variables, or use padding so that multiple threads do not reference values in the same cache line. Finally, adjust the alignment of the data so that it starts in the cache line.

The full code can be downloaded here .

If you have not read the first part of this article, then it is here .

Apply the described techniques and estimate how much the code performance will improve.

Source: https://habr.com/ru/post/283196/

All Articles