Slit photography: an implementation in bash (ffmpeg + imagemagick)

I don't remember what I was searching the Internet for a few days ago, or why, but I came across an interesting article with unusual photos, and later another article describing an implementation in Python of the algorithm for creating such photos. After reading them I got interested in the topic and decided to spend the evenings of the May holidays productively by implementing the algorithm that "converts" a video into a slit photo. Not in Python, though, but in bash, with the tools at hand. But first things first.

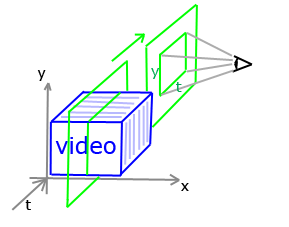

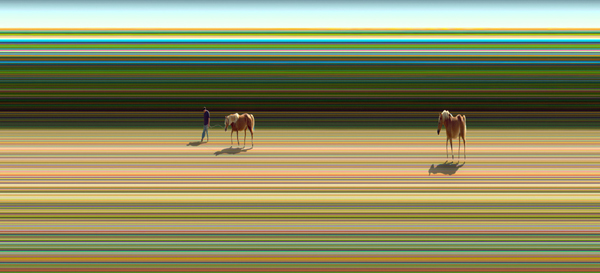

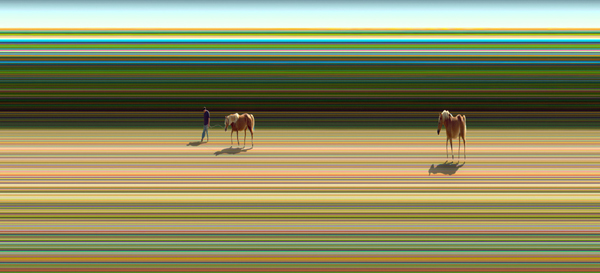

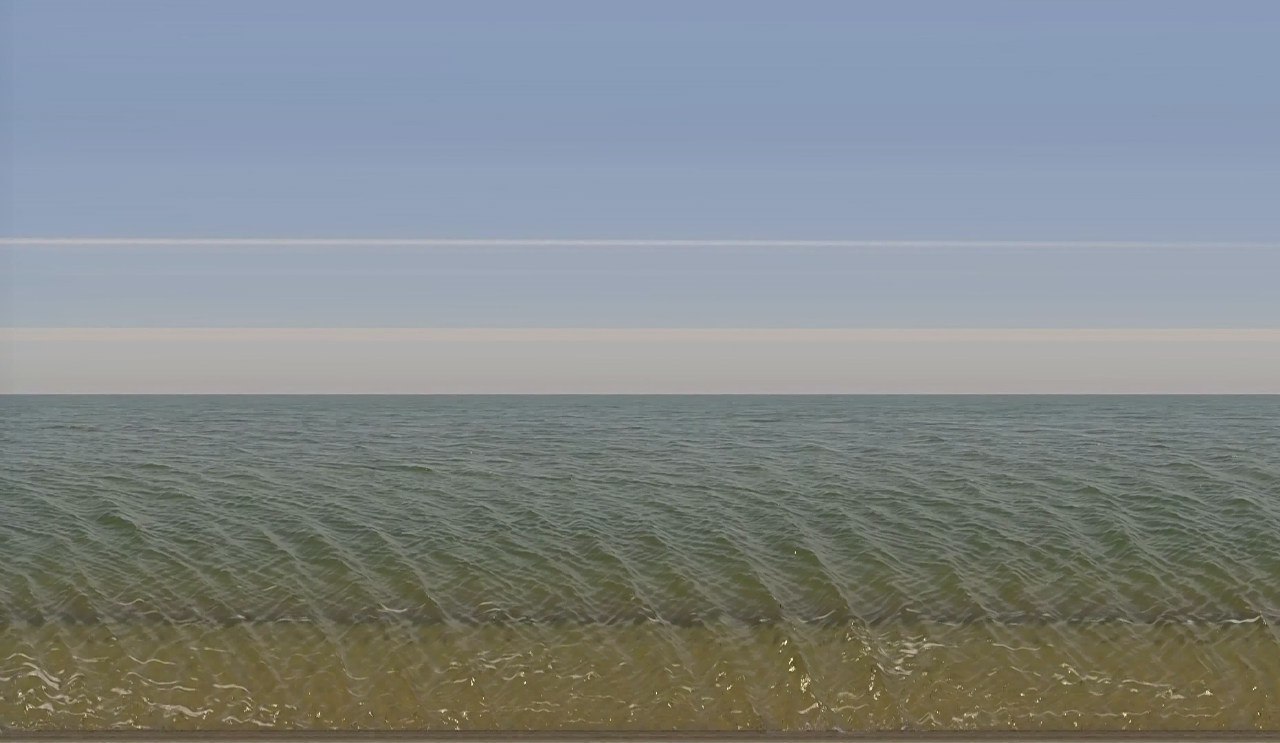

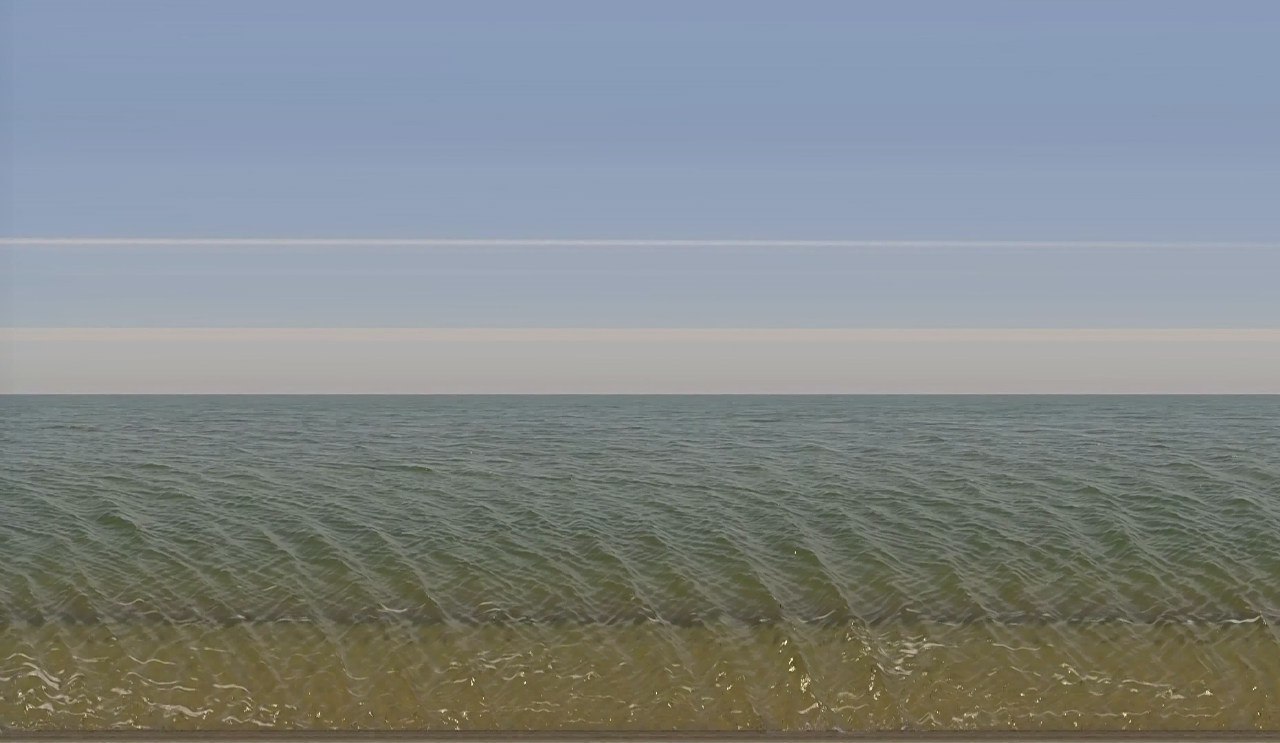

What is a slit photo

A type of photograph that shows several events at once rather than a single one. This is achieved because a slit camera captures frames only one pixel wide (this is the "slit") and "stitches" them together into one photo. It sounds a bit confusing, and it's hard to imagine what it is and what it looks like. The clearest explanation for me was a comment by the user Stdit on one of the articles mentioned above.

After reading it, everything became clear.

An example for clarity:


Algorithm for constructing a slit photo

- Split the video into individual frames.

- Crop each frame to a column one pixel wide at a given horizontal offset (the slit).

- Assemble the resulting columns side by side into a single image.

It sounds both scary and simple.
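To make the three steps concrete, here is a toy illustration in pure bash. It is only a sketch built on an assumption of my own: each "frame" is a single text row in which every character stands for one pixel column, so "cropping a one-pixel slit" becomes taking one character at the slit offset, and "assembling" becomes concatenation. The function name `toy_slit_scan` is hypothetical and not part of the author's script.

```shell
#!/usr/bin/env bash
# Toy model of the slit-scan algorithm (hypothetical helper, not the author's code).
# Assumption: each frame is one text row and each character is one pixel column.
# Usage: toy_slit_scan SLIT_SHIFT FRAME1 FRAME2 ...
toy_slit_scan() {
  local offset=$1 frame result=""
  shift                        # remaining arguments are the frames, in time order
  for frame in "$@"; do
    # Step 2: "crop" a one-column slit at the given horizontal offset
    result+=${frame:offset:1}
  done
  # Step 3: the slits placed side by side form the output "photo"
  printf '%s\n' "$result"
}
```

For example, `toy_slit_scan 2 '#....' '.#...' '..#..' '...#.' '....#'` (a dot moving left to right) prints `..#..`: the dot appears only in the column recorded at the moment it crossed the slit, which is why the horizontal axis of a slit photo is time rather than space.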

Given

- A Xiaomi Yi camera

- A desire to understand the technique and make some unusual photos

- A couple of evenings of free time

Solution

The first and simplest thing that comes to mind is to write a bash script that processes the video and images according to the steps of the algorithm described above. For this I needed ffmpeg and imagemagick. In simplified form, as a bash pseudo-script, it looks like this:

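    # Simplified script: videoFile, slitShift and outputImage must be set beforehand
    ffmpeg -i "$videoFile" frame-%d.png
    frames=(frame-*.png); framesCount=${#frames[@]}
    frameHeight=$(identify -format '%h' frame-1.png)
    for ((i = 1; i <= framesCount; i++)); do
      # cut a 1-px-wide, full-height column at slitShift; +repage resets the virtual canvas
      convert frame-$i.png -crop 1x${frameHeight}+${slitShift}+0 +repage slit-$i.png
    done
    montage "slit-%d.png[1-${framesCount}]" -tile ${framesCount}x1 -geometry +0+0 "$outputImage"

Let's look at what is happening here.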

- First, using the ffmpeg utility, we split the video into individual images named frame-1.png ... frame-n.png (ffmpeg numbers %d image sequences starting from 1).

- Second, using the convert utility from the imagemagick package, we crop each resulting image (the -crop option) to a width of 1 px and a height equal to the image height, starting at the horizontal slit offset. We save the results as slit-1.png ... slit-n.png.

- Third, using the montage utility from the imagemagick package, we assemble the resulting images into one photo. The -tile option says the images should be arranged framesCount across and 1 down, that is, in a single row, and -geometry +0+0 keeps each tile at its own size with no spacing between tiles.
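The geometry strings passed to convert and montage are easy to get wrong, so it can help to build them in one place and check the slit offset against the frame width first. A minimal sketch; the function names `crop_geometry` and `tile_spec` are hypothetical, and in a real script the width and height would come from something like `identify -format '%w %h' frame-1.png`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for building ImageMagick geometry arguments.

# crop_geometry WIDTH HEIGHT SLIT_SHIFT
# Prints the -crop geometry for a 1-px-wide, full-height column starting at SLIT_SHIFT.
crop_geometry() {
  local width=$1 height=$2 slit_shift=$3
  # The slit must lie inside the frame: 0 <= SLIT_SHIFT < WIDTH
  if (( slit_shift < 0 || slit_shift >= width )); then
    printf 'error: slit shift %s is outside 0..%s\n' "$slit_shift" "$((width - 1))" >&2
    return 1
  fi
  printf '1x%s+%s+0\n' "$height" "$slit_shift"
}

# tile_spec FRAMES_COUNT
# Prints the montage -tile value: FRAMES_COUNT columns in a single row.
tile_spec() {
  printf '%sx1\n' "$1"
}
```

For a 1920x1080 video and a slit at 100 px, `crop_geometry 1920 1080 100` prints `1x1080+100+0`. As an aside, and as a different approach from the one in the article: ffmpeg's own crop filter can cut the slit while extracting frames, e.g. `ffmpeg -i input.mp4 -vf crop=1:ih:100:0 slit-%d.png`, which avoids writing full-size intermediate frames.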

Result

Over a couple of evenings I wrote a script that takes a video file as input and produces a photo as output. In theory, the input can be a video in any format supported by ffmpeg, and the output can be in any format supported by imagemagick.
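Since the script relies entirely on these external tools, it is worth checking for them before doing any work. A minimal sketch; the helper name `require_tools` is hypothetical, and the command names assume ImageMagick 6 (`convert`, `identify`, `montage`), while ImageMagick 7 also offers a `magick` front end.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail early if any required command is missing from PATH.
# Usage: require_tools ffmpeg convert identify montage
require_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf 'error: required tool not found: %s\n' "$tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A script would typically call `require_tools ffmpeg convert identify montage || exit 1` near the top, so a missing dependency is reported once, clearly, instead of failing halfway through frame extraction.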

Using the script is very simple:

    ./slitcamera.sh --input=test.avi --output=test.png --slit-shift=100

where input is the video file to process, output is the name of the resulting file, and slit-shift is the horizontal offset of the slit.
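The article doesn't show how the script parses these options. For readers writing their own version, here is one minimal way to handle `--key=value` options like the ones above in plain bash. The function name `parse_args`, the globals it sets and the default slit shift of 0 are assumptions of this sketch, not the author's code.

```shell
#!/usr/bin/env bash
# Hypothetical parser for --input=..., --output=... and --slit-shift=... options.
# Sets the globals INPUT, OUTPUT and SLIT_SHIFT (assumed default: 0); returns 1 on bad usage.
parse_args() {
  INPUT="" OUTPUT="" SLIT_SHIFT=0
  local arg
  for arg in "$@"; do
    case $arg in
      --input=*)      INPUT=${arg#*=} ;;
      --output=*)     OUTPUT=${arg#*=} ;;
      --slit-shift=*) SLIT_SHIFT=${arg#*=} ;;
      *) printf 'unknown option: %s\n' "$arg" >&2; return 1 ;;
    esac
  done
  # Input and output are mandatory; the slit shift must be a non-negative integer
  [[ -n $INPUT && -n $OUTPUT && $SLIT_SHIFT =~ ^[0-9]+$ ]]
}
```

`${arg#*=}` strips everything up to the first `=`, so a file name that itself contains `=` is kept intact. In a script this would be used as `parse_args "$@" || exit 1`.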

First, for quick testing, I didn't shoot a video with the camera; instead I downloaded the first video I found on YouTube and "fed" it to the script. Here's what came of it:

The next day I took my Xiaomi Yi along on a walk and shot a few videos. Here's what came of it:

My native Sea of Azov (photo assembled from a 1920x1080 video, 31 seconds long, shot at 60 fps)

And these photos were assembled from a 1280x720 video, 16 seconds long, shot at 120 fps. Note the background of the second photo: it is not static, because a Ferris wheel was turning in the background.
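Because each frame contributes exactly one pixel column, the width of the resulting photo equals the number of frames and its height equals the video height. A rough estimate, assuming ffmpeg extracts every frame at a constant frame rate (real files may yield a slightly different frame count); the helper name `estimate_size` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: estimate the slit photo size from video parameters.
# Usage: estimate_size DURATION_SECONDS FPS VIDEO_HEIGHT  ->  prints WIDTHxHEIGHT
estimate_size() {
  local duration=$1 fps=$2 height=$3
  # One 1-px column per frame, so width = number of frames = duration * fps
  printf '%sx%s\n' "$(( duration * fps ))" "$height"
}
```

For the two videos above this gives roughly 1860x1080 (31 s at 60 fps) and 1920x720 (16 s at 120 fps), which also shows why a higher frame rate stretches moving objects horizontally: more columns are recorded per second.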

You can view and download the script in my repository on GitHub. Suggestions, criticism and pull requests are welcome.

Source: https://habr.com/ru/post/283122/
