Creating a continuous deployment system: the Instagram experience

On our Habr blog, we write not only about developing our own product, Hydra, a billing system for telecom operators, but also publish material on infrastructure and technology drawn from the experience of other companies.

At Instagram, backend code (the server side that clients work with) is deployed 30 to 50 times a day, every time engineers commit a change to master. For the most part this happens without human involvement. That is hard to believe given the scale of the social network, but it is a fact.


In their engineering blog, Instagram engineers described how they built this system and got it running smoothly. Below are the main points of that article.

Why is all this necessary?

Continuous deployment has several advantages:

- Continuous deployment allows engineers to work faster. They are not limited to a few deployments per day at fixed times; they can ship changes whenever needed, which saves time.

- Continuous deployment makes it easier to identify which commit introduced an error. Engineers do not have to dig through hundreds of changes to find the cause of a new error; it is enough to look at the last two or three commits. Metrics or data that indicate a problem can be used to pinpoint when the error first appeared, which makes it immediately clear which commits need to be checked.

- Commits containing errors can be detected and fixed quickly, so the code does not turn into a bug-infested mass that is impossible to deploy. The system always stays in a state where an error can be found and fixed very quickly.

Implementation

The success of the project can largely be attributed to an iterative approach to building the system. It was not built separately and then switched on. Instead, Instagram engineers evolved their existing release process and infrastructure step by step until continuous deployment was in place.

What came before

Before continuous deployment, engineers deployed changes on an ad hoc basis: if a feature had been implemented that they wanted to ship as soon as possible, they started the deployment process. The first deployment went to test servers: the update was rolled out to them, the logs were examined, and if everything looked fine, the update was deployed to the entire infrastructure.

All of this was implemented as a Fabric script (Fabric is a library and a set of command-line tools for deploying and administering Python applications). In addition, a very simple database and graphical user interface called "Sauron" stored the deployment logs.

Canary test

The first step was to add canary testing (canaries serve groups of end users who may not know they are taking part in a test). At first this simply meant scripting tasks that engineers already performed by hand. Instead of running a separate deployment on a single machine, the script deployed to the canary machines, showed the logs to the user, and then asked for permission to continue the deployment. At the next stage, the collected data was processed automatically: the script analyzed the HTTP status codes of all requests and checked the share of each category against preset thresholds (for example, less than 0.5% or at least 90%).
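The threshold check described above might look roughly like the sketch below. It is not Instagram's script: the function names are hypothetical, and the article does not say which response classes the two thresholds apply to, so the sketch assumes that "less than 0.5%" refers to 5xx responses and "at least 90%" to 2xx responses.

```python
from collections import Counter

# Assumed thresholds: the article only gives the numbers; mapping them to
# 5xx and 2xx responses is an assumption of this sketch.
MAX_5XX_SHARE = 0.005
MIN_2XX_SHARE = 0.90


def status_class(code: int) -> str:
    """Map an HTTP status code to its class, e.g. 503 -> '5xx'."""
    return f"{code // 100}xx"


def canary_passes(status_codes: list[int]) -> bool:
    """Return True if the canary's responses stay within both thresholds."""
    if not status_codes:
        # No traffic was observed, so there is nothing to judge by:
        # refuse to continue automatically.
        return False
    counts = Counter(status_class(code) for code in status_codes)
    total = len(status_codes)
    share_5xx = counts["5xx"] / total
    share_2xx = counts["2xx"] / total
    return share_5xx < MAX_5XX_SHARE and share_2xx >= MIN_2XX_SHARE
```

Even a check this simple is enough to stop a commit that makes the canary hosts return errors for a noticeable share of requests.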

Instagram engineers already had a test suite, but it was run only by the engineers themselves on their own work machines. As a result, reviewers (those who perform code review) had to take their colleagues' word for whether the tests had passed or failed. In addition, test results were unknown at the moment a commit was deployed to production.

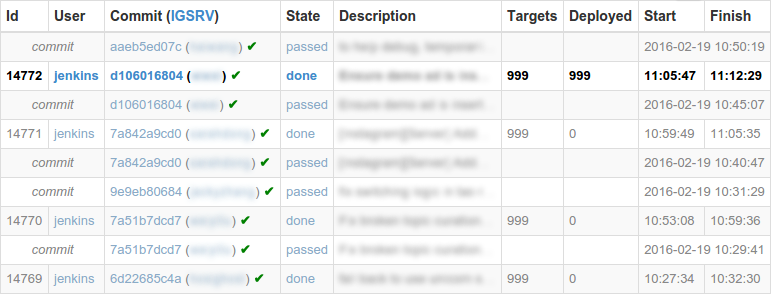

Therefore, the team set up the Jenkins continuous integration server to run tests on new changes and report the results to the Sauron GUI. Sauron needed to keep track of the most recent commit that had passed the tests: when necessary, it was supposed to offer that commit for deployment instead of the latest one.

For code review, Facebook engineers use Phabricator together with Sandcastle, a system that integrates well with it. Their Instagram colleagues also used Sandcastle to run tests and get reports.

Automation

Before starting to automate deployment, Instagram engineers had to do some preparatory work. In particular, each new deployment was given a status (started, ready, error), and an alert was raised if the previous deployment did not have the status "ready". A button was added to the GUI to abort a deployment. In addition, all changes were now tracked. Previously Sauron had known only about the last commit that passed the tests; now every change to production was recorded, and the status of each one was known.
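The status tracking described above can be sketched as follows. The class and method names are hypothetical and say nothing about how Sauron is actually implemented; the sketch only shows the three statuses and the alert about an unfinished previous deployment.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    STARTED = "started"
    READY = "ready"
    ERROR = "error"


@dataclass
class Deployment:
    commit: str
    status: Status = Status.STARTED


@dataclass
class DeploymentLog:
    """Hypothetical in-memory stand-in for the deployment history."""
    history: list[Deployment] = field(default_factory=list)

    def start(self, commit: str) -> list[str]:
        """Record a new deployment and return alerts about the previous one."""
        alerts = []
        if self.history and self.history[-1].status is not Status.READY:
            prev = self.history[-1]
            alerts.append(
                f"previous deployment {prev.commit} has status '{prev.status.value}'"
            )
        self.history.append(Deployment(commit))
        return alerts

    def finish(self, ok: bool) -> None:
        """Mark the latest deployment as ready or failed."""
        self.history[-1].status = Status.READY if ok else Status.ERROR
```

Keeping the full history rather than only the last good commit is what later lets the automation decide what to deploy next.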

The next step was to automate the remaining decisions that a person had to make. The first was choosing which commit to deploy. The original algorithm always picked a commit that had passed the tests and took as few commits as possible, never more than three. If every commit passed the tests, it picked one new commit each time, and it could skip over at most two consecutive commits that had failed the tests. The second decision was whether a deployment had succeeded or failed. If it failed on more than one percent of the hosts, the deployment was considered failed.
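One way to read these two rules is sketched below. The function names and the representation of pending commits as a list of test results are assumptions made for illustration, not the original implementation.

```python
MAX_COMMITS_PER_DEPLOY = 3   # "never more than three"
MAX_FAILED_HOST_PERCENT = 1  # more than 1% of failed hosts means failure


def commits_to_deploy(pending_passed: list[bool]) -> int:
    """Return how many pending commits (oldest first) the next deployment takes.

    Each list item says whether that commit passed the tests. The deployment
    ends at the first passing commit among the next three, which means at most
    two failed commits in a row are skipped. 0 means nothing is deployable.
    """
    for index, passed in enumerate(pending_passed[:MAX_COMMITS_PER_DEPLOY]):
        if passed:
            return index + 1
    return 0


def deployment_succeeded(failed_hosts: int, total_hosts: int) -> bool:
    """A deployment counts as failed if more than 1% of hosts failed."""
    # Integer arithmetic avoids floating-point rounding at the boundary.
    return failed_hosts * 100 <= total_hosts * MAX_FAILED_HOST_PERCENT
```

For example, with pending test results `[False, True, True]` the sketch deploys two commits: the failed one is skipped over and the deployment ends at the first commit known to pass.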

In this configuration, a person still had to answer "yes" several times in the system dialog during a deployment (to accept the commit, to start the canary test, to start the full deployment), and this action could be automated. After that, Jenkins was taught to run the full deployment script. Of course, at first this feature was used only under the supervision of engineers.

Problems

Bringing continuous deployment at Instagram to its current state did not go entirely smoothly. There were several problems the engineers had to work through.

Testing Errors

First of all, it often happened that a commit contained an error that broke the tests, which meant the tests also failed for all subsequent commits. This, in turn, meant that nothing could be deployed. To fix the situation, someone first had to notice the problem, revert the offending commit, wait for the tests to pass on the latest commit, and then manually deploy the accumulated changes up to that commit before the process could continue automatically.

All this negated the main advantage of continuous deployment: deploying a small number of commits at a time. The root of the problem was that the tests were unreliable and slow. To cut their run time from 12-15 minutes to 5, Instagram engineers had to optimize performance and fix the bugs in the test infrastructure that made it unreliable.

Accumulating a queue of changes

Despite these improvements, a backlog of changes waiting to be deployed still built up regularly after an error. The main cause was errors flagged during the canary test (both true and false positives), but other problems occurred as well. Once the problem was fixed, the automation picked one commit per deployment, so deploying all of them took time, during which new changes could join the queue.

Usually, in this situation, the on-call engineer could step in and deploy the entire queue at once, but this, again, negated one of the main advantages of continuous deployment.

To correct this shortcoming, a queue-handling algorithm was added to the change selection logic, which made it possible to deploy several changes at once automatically when there is a queue. The algorithm is based on a target time within which every change should be deployed (30 minutes). For each change in the queue, it calculates the time remaining until the target, the number of deployments that can be performed in that time, and the number of changes that would have to go out in each deployment. The largest ratio of changes per deployment is chosen, but it is capped at three. This allowed Instagram engineers to perform as many deployments as possible while ensuring that each commit is deployed in a reasonable amount of time.
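The calculation above can be sketched as follows. The article does not say how long a single deployment takes, so `rollout_duration_min` is a hypothetical parameter, as are the function name and the representation of the queue as a list of commit ages in minutes.

```python
import math

DEPLOY_GOAL_MIN = 30         # target time to deploy every change
MAX_COMMITS_PER_ROLLOUT = 3  # cap from the article


def commits_per_rollout(queue_ages_min: list[float],
                        rollout_duration_min: float) -> int:
    """Return how many commits the next deployment should include.

    queue_ages_min lists how long each queued commit has been waiting,
    oldest commit first. rollout_duration_min is an assumed estimate of
    how long one deployment takes.
    """
    if not queue_ages_min:
        return 0
    needed = 1
    for position, age in enumerate(queue_ages_min, start=1):
        time_left = DEPLOY_GOAL_MIN - age
        # At least one more deployment always happens, even past the goal.
        rollouts_left = max(1, math.floor(time_left / rollout_duration_min))
        # Commits per deployment needed for this commit to meet the goal.
        needed = max(needed, math.ceil(position / rollouts_left))
    return min(needed, MAX_COMMITS_PER_ROLLOUT)
```

With a short queue of fresh commits the sketch keeps deploying one commit at a time; only when commits are about to miss the 30-minute target does it start batching, and never more than three at once.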

One specific cause of the queue was that deployments slowed down as the service infrastructure grew. For example, engineers once discovered that an entire CPU core was being consumed by the SSH agent, which authenticated the SSH connections, and by the Fabric script. To solve this problem, the agent was replaced with a distributed SSH system from Facebook.

Deployment Guide from Instagram Engineers

What do you need to know to carry out such a project? Instagram engineers identified several basic principles to follow when building an equally effective automated deployment system.

- Tests. The test suite should be fast. Test coverage should be broad, but there is no need to try to cover everything. Tests should run often: during code review, before changes are applied (ideally blocking them on failure), and after they are applied.

- Canary tests. An automated canary test is needed to keep really bad commits from being deployed to the entire infrastructure. Again, perfectionism is unnecessary here: even a simple set of statistical metrics and thresholds will do.

- Automate the normal scenario. There is no need to automate everything, only the standard, simple situations. If something unusual happens, automatic execution should stop so that people can step in.

- People should be comfortable. One of the biggest obstacles in automation projects like this is that people stop understanding what is happening at any given moment and feel they have lost control. To solve this, the system should make it clear what has been done, what is being done, and (ideally) what will be done next. It also needs a reliable emergency stop for automatic deployment.

- Expect bad deployments. Do not count on every deployment going smoothly. Errors cannot be avoided, and that is normal. What matters is being able to find problems quickly and roll back to a working version.

According to Instagram engineers, a project like this is within reach of the IT departments of many companies. A continuous deployment system does not have to be complex. It is worth starting with something simple, following the principles outlined above, and improving the system step by step.

What Instagram engineers plan on doing next

The automatic deployment system at Instagram now works without major complaints, but some things still need to be improved.

- Maintain speed. Instagram is growing fast, and the rate of changes is growing with it. Engineers need to keep deployments fast so that each one contains only a small number of changes.

- Add more canaries. As the rate of changes increases, failures on the canary hosts become more frequent, and the queue of pending changes affects more and more developers. Engineers need to catch more bad commits at an early stage and block their deployment. To do this, they keep refining the canary-testing process; in particular, its results are checked against production traffic.

- Improve error detection. In addition, the Instagram engineering team plans to reduce the impact of bad commits that canary testing does not catch. Instead of testing on one machine and then deploying to the entire fleet of machines, the plan is to add intermediate stages (clusters and regions), with all metrics checked at each stage before the deployment goes further.
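The staged approach from the last item can be illustrated with a small sketch. The stage list and both callbacks are hypothetical; the article only says that intermediate stages (clusters and regions) are planned, with metrics checked before each further step.

```python
from typing import Callable, Optional

# Assumed order of stages, from smallest to largest blast radius.
STAGES = ["canary", "cluster", "region", "fleet"]


def staged_rollout(deploy: Callable[[str], None],
                   healthy: Callable[[str], bool]) -> Optional[str]:
    """Deploy stage by stage and stop at the first unhealthy stage.

    Returns the name of the stage whose metrics check failed,
    or None if the change reached the whole fleet.
    """
    for stage in STAGES:
        deploy(stage)
        if not healthy(stage):
            return stage  # stop here so that people can step in
    return None
```

A bad commit that slips past the canary is then stopped at a cluster or a region instead of reaching every machine.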

Other technical articles from Latera:

- We automate the accounting of addresses and bindings in IPoE-networks

- Working with MySQL: how to scale the data warehouse 20 times in three weeks

- DoS on your own: What causes the uncontrolled growth of tables in the database

- Open source application architecture: How nginx works

- How to improve resiliency of billing: The experience of "Hydra"

Source: https://habr.com/ru/post/283102/
