Notes with MBC Symposium: trying to figure out why deep learning works

I continue to talk about interesting reports at MBC Symposium (MBC, by the way, stands for Mind Brain Computation).

Surya Ganguli is a person from theoretical neuroscience, that is, is engaged in understanding how the brain works, based on measurements of impulses of neurons at various levels.

And here, regardless of neuroscience, deep learning happens in the world, and we get some kind of artificial system to teach something.

Unlike the brain, in which we have limited resolution, complexity with repeatability, etc., etc., we know absolutely everything about the deep network, about all the weights, about all the states. The question arises - if we are going to figure out how the brain works, can we try to begin to understand how and why such a small system works?

Without the hope that the brain works as well, rather with a view to develop some methods that may be applicable later.

Disclaimer: The post is written on the basis of the edited chat logs closedcircles.com , hence the style of presentation, and clarifying questions.

Here is a link to the report itself and to the actual work published, which will be discussed, but, seriously, it is better to read the post.

One of the very old results from neural networks before they began to be called deep learning - what looks like a network is learning hierarchically, first higher-level partitions, then more detailed ones.

What exactly is meant

Here was a study from the old days of this kind.

We give the input of the network some object and relationship, and at the output we ask to learn a feature such as "canary can move".

I note that, in contrast to the current classification tasks, this is a stupid task to memorize information by the network;

At the entrance - one-hot encoding of the object and the relationship, at the exit it is necessary to get the probability of a set of features, the network has only one hidden layer.

It is studied only as a network "teaches" training dataset.

And it turns out that the network first teaches "high-level" divisions into animal-plants, and only then details about each of the species.

Moreover, the actual classes "animal" and "plant" are nowhere to be found. There are only specific types of animals and plants about which the network initially knows nothing.

But how did they determine that this is how it turns out?

Look at the second picture, the three clustering graphs on it are at different stages of the workout.

At first, everything is random and everything is short. In the middle, a “high” main category appears, separating animals from plants, and only then secondary ones “grow” under it.

ok as I understand the activation in the hidden layer - correspond to some properties. therefore, objects with similar properties are closer to each other.

Yes. Moreover, the “animal / plant” high-level separation is the first to learn, although it has not been directly written anywhere, it only learns from the data.

interesting! and this is all repeatable naturally, with any initial data / weights.

Yes.

They are trying to understand why this is happening.

To do this, they consider an even simpler system - a network with one hidden layer with no nonlinearity at all.

This is the system - the one-hot id of the object is given as input, its properties must be output at the output.

Again, we only check how it learns, there is no test dataset.

Although such a network without non-linearity has no representational power compared to a bluntly linear matrix, the presence of two levels makes learning non-linear, because the square deviation is already optimized.

It turns out that the equations of the learning dynamics of such a system can already be solved analytically.

In general, the fact that such a system “learns” as a result was known for a long time - it tends to a singular value decomposition of feature matrix objects.

(It's hard for me to describe what SVD is in a nutshell, but you can imagine that this is some kind of linear decomposition, where for each object a vector of "internal representation" is introduced, from which the resulting transformation results results. A good tutorial, for example, here )

matrix N-dimensional in the number of features ?.

NxM, where N is the number of features, and M is the number of objects.

That is, the resulting embedding is just the inner vector SVD.

More interesting is how the system “strives” for this decomposition.

It turns out that at first the system “learns” the division corresponding to the largest singular value, then learns the following, and then the smaller one.

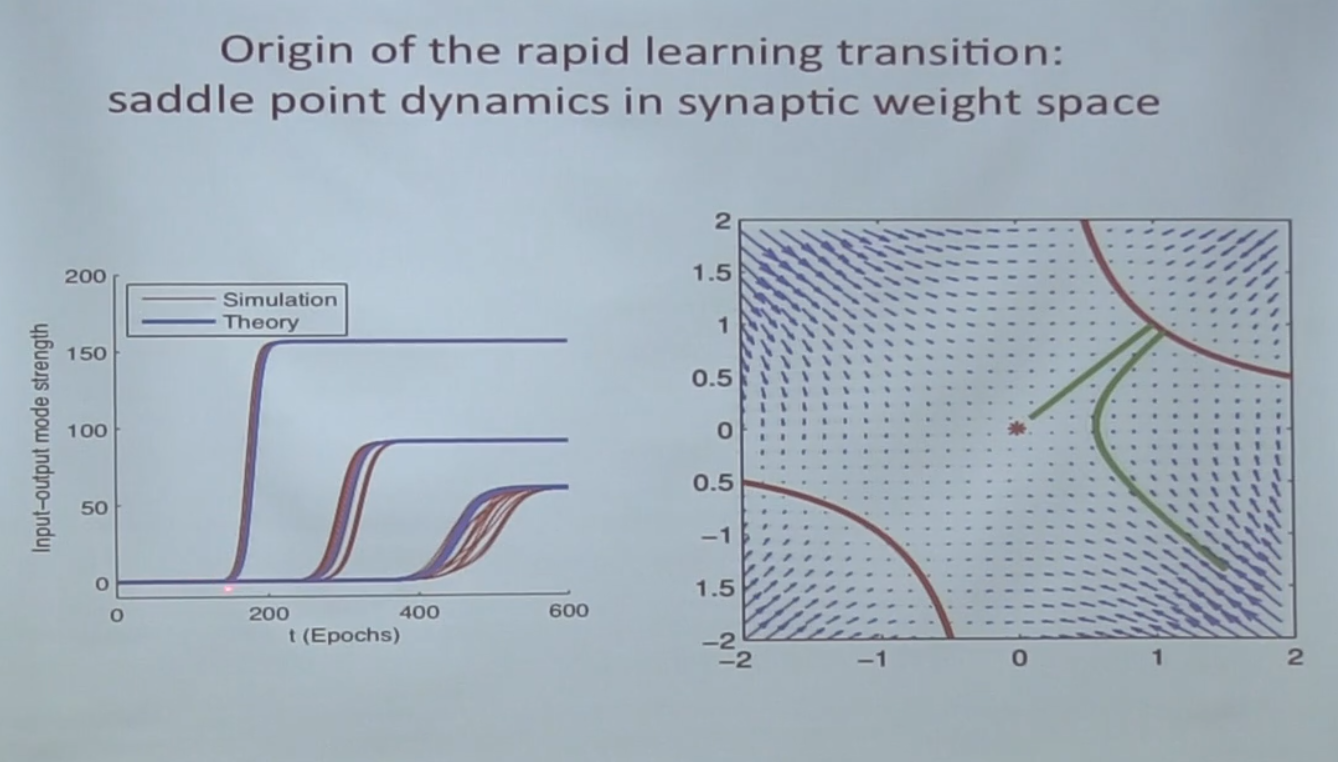

This behavior is explained by the presence of saddle points.

Saddle points are points where the gradient is 0, but they are neither local minima nor local maxima, and gradient descent likes to roll in them and it is difficult to get out of them:

The red dot in the picture below is the same saddle dot:

First, the system rolls into it and wanders there for a long time, and eventually it leaves the green line.

what is it on the graph on the left - input / output mode strength?

This is the actual singular value for each mode.

And about SVD we know well what determines the parameters of the system - they are determined by the correlation matrix between objects, that is, SVD finds the most correlated direction among these objects.

And the more objects are "aligned" in a certain direction, the more singular value of this direction is.

and explain once again about saddle points and gradient descent - and the connection with singular value.

It turns out that in the optimization space of a linear network, each singular value corresponds to a saddle point, and the “learning” of the corresponding singular value corresponds to an “exit” from this saddle point.

')

To learn all the features you need to get into all the saddle points?

Yes. Obviously, here we mean the embedding features, and not the final ones.

And not only to get there, but to get out of them, i.e. for example, plants and animals are separated by a saddle point.

Are the embedding features the activation of neurons in the hidden layer?

Yes. While you rummage in it - you cannot understand what is what, but if you fell in one direction - oops, there are all the trees there!

Yeah, it's clear, but how does it happen that at first it falls to the point of the largest singular number?

Because she has the biggest pit and you roll first of all into her.

and accidentally wander somewhere not there will not work? to some other local minimum

There are no local minima in the linear system.

In general, no, it does not work out statistically, because the main direction out datasets outweighs.

and here is a stupid question - does gradient descent have a purpose to fall into a hole?

Well yes. But the saddle points attract it, although they are not a minimum. It is difficult to get out of them, but when I got out - the gradient descent will take out further down, away from the saddle point.

and it depends on? if for example more data for fish / bird say, than between animal / plant

Yes, it depends. Singular values is a property of the whole dataset, global, not local.

And what does all this tell us in relation to the original task ?.

We figured out that in its somewhat simplified formulation (but which preserves the described property), learning occurs according to the SVD decomposition, starting with the largest singular value.

The main driving force is the strongest correlations between objects.

This does not fully explain the situation, but an important intermediate result.

And now the next moment, how to get to the hierarchical representation.

The idea is this: if the picture of the world is hierarchical (that is, a certain high-level feature is first determined, and then the details depend on it), then the higher the feature in the hierarchy, the more objects it affects and the more correlations it explains.

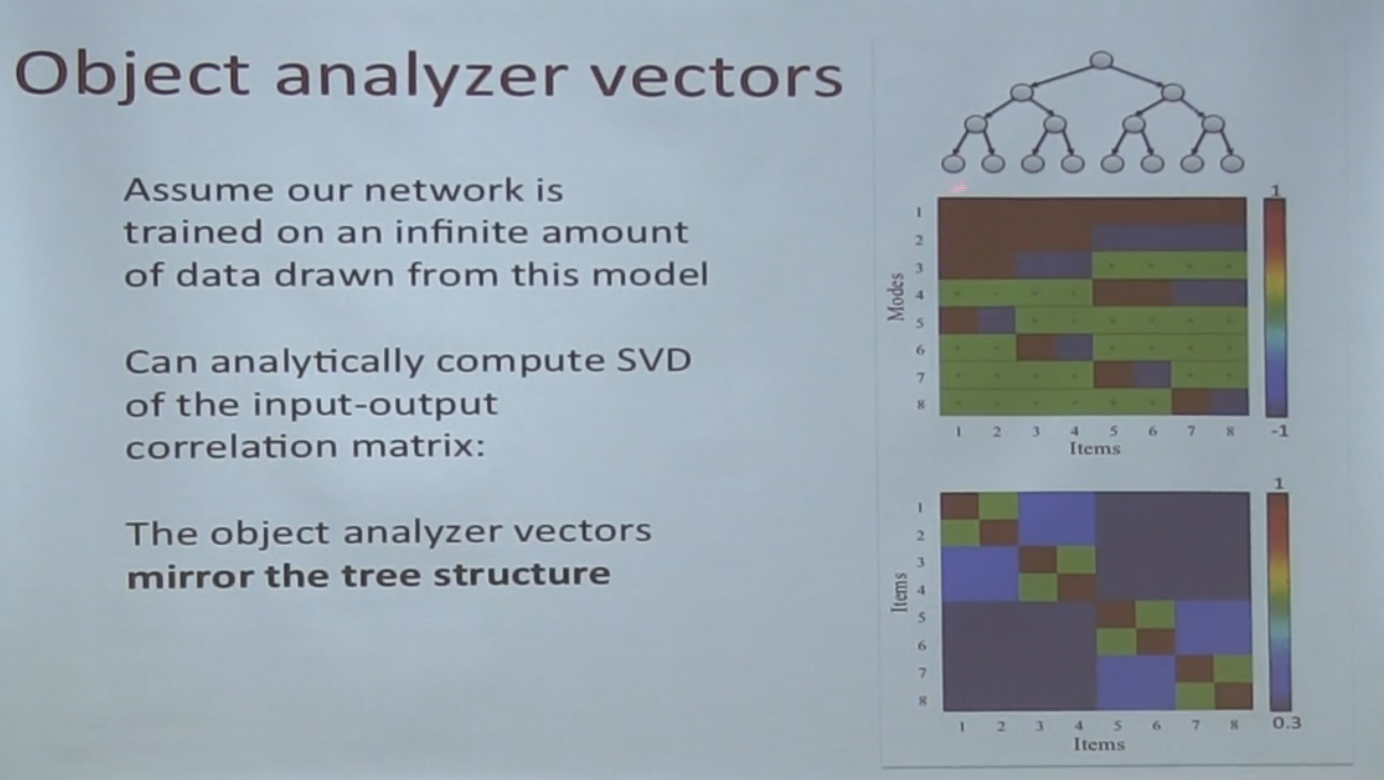

They demonstrate it with such a simple model.

We generate objects in the following simple way - first, we will generate one feature randomly, then for objects where we get 0, we randomly generate another feature, and for those that 1 - the third, and so on the hierarchy.

In the following picture, this is shown in the tree above right:

It turns out that if objects are so nugged, the strongest singular value is just the first node (which is fairly obvious in general, this is the direction in which most objects can be divided)

And this finally gives us an explanation of the original phenomenon.

A simplified network naturally sequentially learns the directions of the strongest correlations of objects, and since objects in real data have hierarchical properties, the properties higher in the hierarchy generate the strongest correlations.

Therefore, the network teaches them first, and more detailed (affecting fewer objects) - second.

This idea can be developed in different directions.

For example, in psychology there is such a mystical moment - the formation of categories.

There are objects in the world, they have features. How does the brain select a category, such as "animals"?

If you think about it consistently, there is a chicken and egg problem:

- We could group objects into categories, but we need to understand on the basis of which features.

- We could come up with a category based on a set of features, but then you need to understand which objects to include there in order to take features!

And here it is known that the extent to which the coherent category is important.

The classic example is that for the category "dogs" we have the word "dog", but for the category "things of blue" - no. Precisely because blue things are not alike.

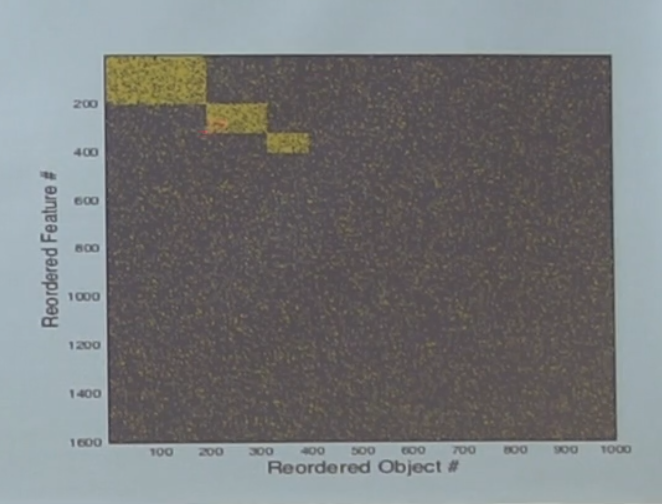

To try to demonstrate this effect in training, let's generate random data:

Here we have generated random objects with random features, on the right - the matrix visualization. We feed these objects at the entrance to the already linear network that we love so that it learns how to match the features to the objects.

This is considered the same situation as was discussed earlier - here is the soup of objects and features, are there categories here?

There are actually categories in the data - we generated the data not accidentally, but throwing a coin is whether the object is of a certain category. If you rearrange the column rows, it looks like this:

That is, in this noise there are actually three categories. The question is, will our network learn them?

It turns out that it can be analytically shown that it depends on the size of this rectangle in relation to the whole dataset. If the category is big enough, it will be learned, and if not, it will drown in noise. In addition, the fact that a category can be identified depends not only on the number of objects in it, namely, on the ratio of the size to the entire dataset.

The critical size of the category is proportional to sqrt (N * M).

By the way, I still don’t really understand why we don’t have a word for "All blue things". Just the ratio to all the data is very decent.

Not! Blue objects have too few matching features.

The square is large but very thin. For the emergence of a category there are restrictions on the size of the region, and on its "density".

Well, the last point.

The fact that the concept of "category" depends on the whole dataset explains why it is so difficult to talk about it in traditional psychology.

The selection of objects in the category depends on the entire experience of mankind, and it is very difficult to approach it “locally”.

But even such a simple model demonstrates how learning solves this chicken and egg problem by finding a structure in noise.

Summarize

On the one hand, this work hints at the expense of what networks are so effectively trained - because the data in the real world has a certain structure, and this is expressed in the optimization landscape, along which learning by gradient descent rolls.

On the other hand, it again shows well how far the theory is from practice - the model under discussion is extremely primitive, even by the standards of the neural networks of the past, not to mention the very modern deep architectures.

Conclusions, however, can be extrapolated.

Source: https://habr.com/ru/post/282826/

All Articles