A touching story: from the first touchscreens to the future with Surfancy

MEGA Accelerator was launched at the beginning of the year, and in the last post we introduced the ten startups that have become residents of our accelerator and have already started working in the PO2RT co-working space. Now we can tell you more about each of the projects. Why not start with Surfancy?

This article covers the history of touchscreen development from the 1950s to our contemporaries at the startup Surfancy, who figured out how to separate the touchscreen from the screen. Why is this not a random decision but the logical next step in the entire history of touchscreens? To see that, you need to understand the history.

For now, remember these two white bars, we will return to them at the end of the article:


How did a technology as important as the touchscreen seem to capture the world almost overnight? In fact, the path of the touchscreen was rather thorny: it demanded the hard work of several generations of the best engineering minds, and the technology already counts ten (!) generations of outstanding devices in its history.

The first thing that strikes you is that for a very long time no one was interested in the touchscreen; engineers saw it as a crutch and an impractical toy. Even on screen, the touchscreen appeared only in 1965, when the crew of the ship in Star Trek began pressing their fingers directly on displays instead of buttons. At the time this was perceived as grotesque and did not catch on. Star Trek itself only returned to touchscreens in 1987...

But the story of the real touchscreen begins in the 1950s. Only at first, instead of a finger, one had to use something more electrically conductive.

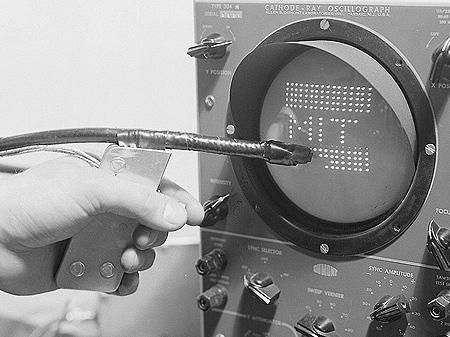

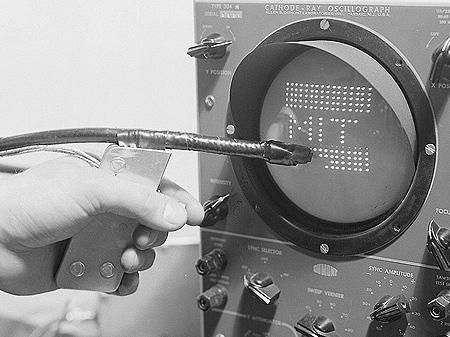

In 1954, Robert Everett of the American Lincoln Lab proposed using a light gun to select and control aircraft icons on a radar display. If you ever had a light gun and a Dendy console, now you know where it came from.

The famous control center from the movie Dr. Strangelove is precisely a SAGE control center.

We have the Cold War to thank: operators needed to respond as quickly as possible to Soviet planes and rockets in the air, so the government spent $12 million on developing the SAGE system, which had a great influence on the development of information technology. For the first time, the entire airspace was monitored by 27 computers weighing 300 tons each, connected by analog modems, and algorithms automatically responded to threats and suggested countermeasures. Building the system involved 20% of the world's programmers, who wrote 250,000 lines of the most complex code in history.

SAGE was so complex and reliable that it ran US air defense until 1983 (!!!). Ironically, in the system's final years failed vacuum tubes could not be replaced: they had not been manufactured since the 1950s, so the CIA had to mount a huge operation to buy the right tubes... in the USSR. But that is a completely different story; I mention it only to show the scale of material and scientific investment required to create something that now seems self-evident: a computer capable of understanding finger movements.

In the SAGE system the operator acted instantly: instead of entering coordinates from the keyboard, he simply pointed a light pen at a spot on the screen, the opto-electric pen transmitted a signal to the computer, and the computer calculated the coordinates. This made real-time control possible and eliminated input errors.

By 1957, the light gun had been upgraded to a light stylus. Now it was possible not only to select aircraft but also to draw on the screen, as if with a pen. At that point the scientists decided that the ideal had been achieved.

One of the IBM programmers secretly built an image like this into the system for diagnosing the synchronization of remote computers. Perhaps it can be considered the first screensaver.

In 1963, the RAND Corporation, commissioned by DARPA, creates a universal graphics tablet with a stylus, which is fundamentally no different from modern Wacom tablets.

The most interesting part of the RAND tablet was hidden under its epoxy-coated surface. A thin layer of copper was deposited on both sides of a very thin polyester film, and the tablet grid was etched into it. The upper surface carried 1024 tracks for the X coordinate and the lower surface 1024 tracks for the Y coordinate. Thus the RAND tablet offered about a million XY positions and a resolution unprecedented for its time: 100 tracks per inch.

The tablet used a grid of conductors under the sensor surface, driven with electrical pulses encoded in a three-fold Gray code. A capacitively coupled pen picked up this signal, which could then be decoded back into coordinates. Special attention was paid to working with maps and designating targets on them. And all this for a mere $18,000. Disadvantages? The tablet needed a full-fledged computer with its own screen, and a power supply like this one:

Unfortunately, all data on how the system was used remain classified; only two units were transferred to civilian universities.
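To make the tablet's coordinate encoding more tangible, here is a minimal sketch of Gray-coding 1024 track indices. It assumes the ordinary binary-reflected Gray code; the exact pulse scheme of the RAND tablet (the "three-fold" variant mentioned above) is not reproduced here, and the function names are purely illustrative.

```python
def gray_encode(n: int) -> int:
    """Convert a track index to its binary-reflected Gray code word."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Recover the track index from a binary-reflected Gray code word."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


TRACKS = 1024  # tracks per axis, as described above (10 bits per coordinate)

codes = [gray_encode(i) for i in range(TRACKS)]

# Neighbouring tracks differ in exactly one bit, so a pen resting between
# two tracks can be off by one position but never jumps far away.
max_bits_changed = max(bin(a ^ b).count("1") for a, b in zip(codes, codes[1:]))

# Decoding restores every original track index.
round_trip_ok = all(gray_decode(c) == i for i, c in enumerate(codes))

positions = TRACKS * TRACKS   # the "million" XY positions: 1,048,576
side_inches = TRACKS / 100    # active side length at 100 tracks per inch
```

The single-bit difference between adjacent code words is the usual reason to prefer a Gray code for position sensing; the arithmetic at the end simply restates the figures from the text (about a million positions on a surface roughly 10 inches on a side).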

In 1965, touchscreen technology becomes a true touchscreen. E. A. Johnson of the Royal Radar Establishment creates the type of touchscreen that will be the dominant one for many years: the capacitive touchscreen. Now air traffic controllers could simply touch the screen with a finger, without any stylus.

The technology is simple, like everything ingenious: the CRT screen is covered with a transparent conductive film, a finger touching the screen changes the electrical properties of the film, and the system registers the touch. Simplicity and reliability allowed this system to manage all air traffic in England until the very end of the 1990s!

True, the simplicity of the system also kept it from becoming a commercial product: such a film cannot measure pressure and, more importantly, offers no multitouch. Only one finger at a time.

Unlike capacitive touchscreens, resistive ones were discovered entirely by accident. Their inventor, Samuel Hurst, describes the episode as follows:

To study atomic physics, the research group used a Van de Graaff accelerator, which was available to students only at night. Tedious analysis of data from paper tapes greatly slowed down the research. Sam came up with a way to solve this problem. He, Parks, and Thurman Stewart, another doctoral student, used electrically conductive paper to read a pair of x- and y-coordinates. This idea led to the first touch screen for a computer. Using this prototype, his students could do the necessary calculations within a few hours, although it used to take several days to achieve the same goal.

Having abandoned his research, in 1970 Hurst and nine colleagues holed up in his garage and perfected the accidental invention. The result was the technology of the “electrical sensor of planar coordinates”:

Two electrically conductive layers set the X and Y coordinates, respectively. Pressure on the screen brought the layers into contact and let current flow between them; the resulting signal was easily measured at the output and immediately converted into numerical coordinates. That is why this type of screen is called resistive: it reacts to pressure, with the position read from the resistance of the layers, and not to the electrical conductivity of the finger.
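The conversion from a measured signal to coordinates can be sketched as follows. This is only an illustration under simple assumptions: each layer behaves as an ideal linear voltage divider, so the voltage picked up at the contact point is proportional to the position along that axis. The reference voltage, screen size and function name are hypothetical, not taken from Hurst's design.

```python
def touch_to_pixels(v_x: float, v_y: float, v_ref: float = 3.3,
                    width: int = 320, height: int = 240) -> tuple:
    """Convert two voltage-divider readings into pixel coordinates.

    v_x and v_y are the voltages measured at the contact point while the
    X layer and then the Y layer is driven with v_ref (an assumed setup).
    """
    if not (0.0 <= v_x <= v_ref and 0.0 <= v_y <= v_ref):
        raise ValueError("reading outside the 0..v_ref range")
    # On an ideal linear layer the voltage fraction equals the position fraction.
    frac_x = v_x / v_ref
    frac_y = v_y / v_ref
    return round(frac_x * (width - 1)), round(frac_y * (height - 1))


# A press that reads half the reference on X and a quarter of it on Y:
example = touch_to_pixels(1.0, 0.5, v_ref=2.0, width=101, height=101)
```

Real panels also need calibration and debouncing, which this sketch deliberately leaves out.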

This technology turned out to be surprisingly cheap, and it is precisely the one we all still use today.

In 1971, all of this was patented and commercialized by a company called Elographics.

In reality, multitouch was born where it should have been: at CERN. Few people realize that CERN's main problem is controlling its accelerators. What is the difficulty? Take the Large Hadron Collider (LHC): every second it produces about a billion collisions, each giving birth to dozens of particles of different types. The annual experimental data is estimated at 10 petabytes (1 PB = 10^15 bytes), so the LHC alone provides 1% of the information produced by humanity. CERN is also where the World Wide Web was invented, in 1989. But in 1973, its engineers faced a completely unexpected task.

The launch of the Super Proton Synchrotron was held up by the impossibility of controlling it operationally. Ten times larger, more powerful and more complicated than the previous accelerator, it required an almost infinite number of buttons and switches on the control panel, yet only a few people were supposed to manage it all; otherwise centralized control would be impossible. What to do?

Either wire up a separate toggle for each of the hundred thousand switches, or... hand all the work to computers that show the operator only the necessary data on a screen. For that, you merely need to invent those computers, and screens whose buttons can be operated by the press of a finger.

As you may have guessed, CERN chose the second option and created capacitive screens with multitouch support. It is their solution that we use today when we hold an iPhone in our hands.

And it all began with these sensor matrices. On the left, the 1977 one; on the right, the 1972 one:

In a note dated March 11, 1972, Bent Stumpe presented his solution: a capacitive touchscreen with a fixed number of programmable buttons on the display. The screen was to consist of a set of capacitors formed by copper wires embedded in a film or in glass, each capacitor built so that a nearby conductor, such as a finger, would increase its capacitance by a significant amount. The capacitors were to be copper wires on glass, thin (80 μm) and far enough apart (80 μm) to be invisible. In the final device, the screen was simply coated with varnish, which kept fingers from touching the capacitors directly.

The film was almost transparent and completely invisible:

As a result, the proton synchrotron was controlled from here by just three operators:

The system was so far ahead of its time that it contained not a single commercial component: every element was made by CERN itself. Yet the engineers saw these not as advanced specialized solutions but rather as “crutches”; instead of taking pride in their work, they considered it a “cheat” and a bit of fun rather than serious engineering. After the accelerator, the technology was used in the cafeteria's "Drinkoin": the device let you mix a cocktail with your fingers on the screen and then collect it from the machine.

It is amazing that, having essentially discovered multitouch, CERN never really used it: no gestures, none of the functions familiar today. Multitouch was then perceived simply as the ability to press several on-screen buttons at the same time. As an aside, nothing prevented CERN's touchscreen from measuring the force of a press as well.

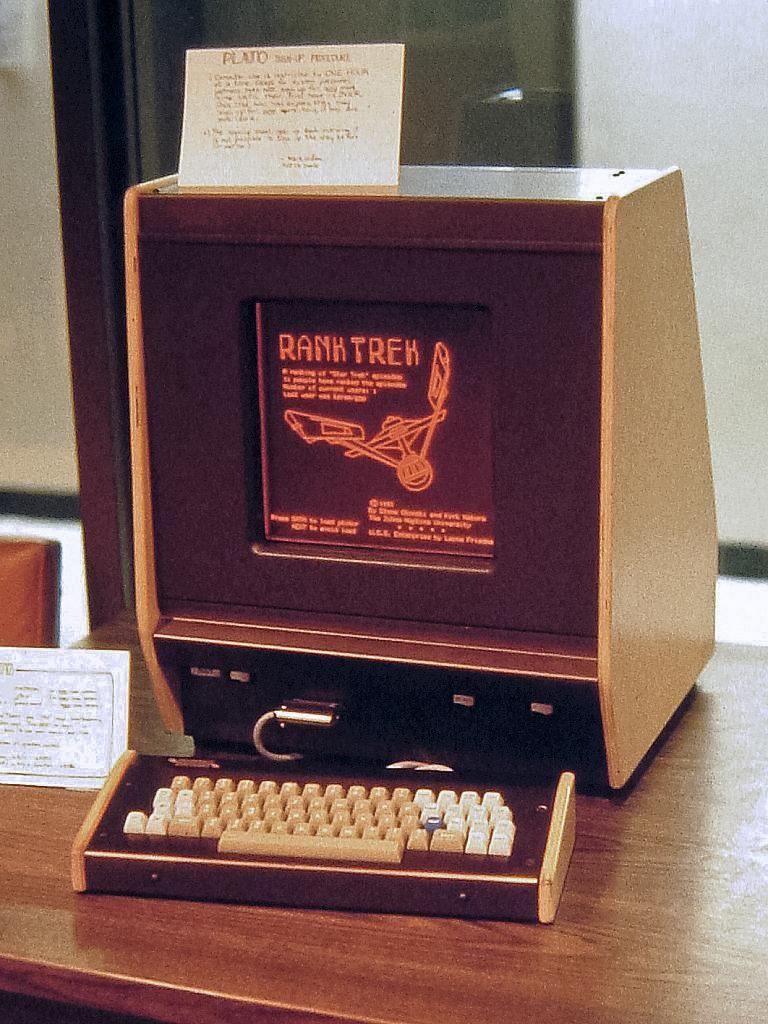

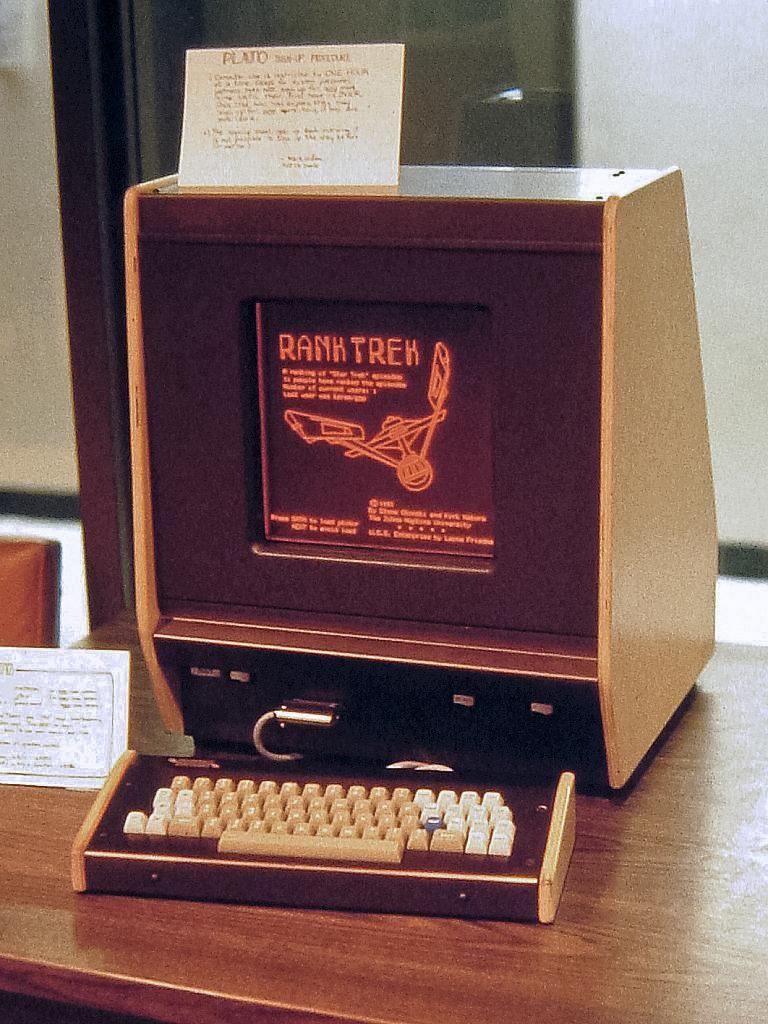

Impressed by the success of European scientists, the Americans secure a government grant to convert military touchscreens for educational purposes. Programmed Logic for Automated Teaching Operations (PLATO) is the first e-learning system; by the end of the 1970s it comprised several thousand terminals around the world and more than a dozen mainframes connected by a common computer network. PLATO was developed at the University of Illinois and was in use for 40 years. It was originally created to deliver simple coursework to students at the University of Illinois, local schoolchildren, and students at several other universities.

But we are interested in how exactly the terminals of this system worked. The PLATO IV display included a 16x16 infrared touch grid, which let students answer questions by touching the screen with a finger.
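How such an infrared grid turns a finger into a screen position can be sketched in a few lines. This is an illustrative model, not PLATO's actual firmware: it assumes the terminal can report, for each of the 16 beams per axis, whether the beam is currently interrupted, and all names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

GRID = 16  # beams per axis, as in the 16x16 panel described above


def touched_cell(blocked_cols: Sequence[bool],
                 blocked_rows: Sequence[bool]) -> Optional[Tuple[int, int]]:
    """Return the (column, row) of a touch, or None if nothing is touched.

    blocked_cols[i] is True when the i-th vertical beam is interrupted,
    blocked_rows[j] is True when the j-th horizontal beam is interrupted.
    """
    cols = [i for i, blocked in enumerate(blocked_cols) if blocked]
    rows = [j for j, blocked in enumerate(blocked_rows) if blocked]
    if not cols or not rows:
        return None
    # A finger may shadow several neighbouring beams; take the middle one.
    return cols[len(cols) // 2], rows[len(rows) // 2]


def beams(*blocked: int) -> list:
    """Helper for the example: a GRID-long list with the given beams blocked."""
    return [i in blocked for i in range(GRID)]


cell = touched_cell(beams(7, 8, 9), beams(2))
```

With one reading per axis, two simultaneous touches produce ambiguous combinations of blocked beams, which fits the single-touch limitation of this kind of frame.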

With the advent of microprocessors, newer, cheaper and more powerful terminals were developed. The Intel 8080 microprocessors built into the terminals allowed the system to execute programs locally, much as Java applets or ActiveX controls run today, and to load small software modules into the terminal to enrich training courses with complex animations and other features unavailable on ordinary terminals. The orange gas-plasma screen, which produced an impressive picture, deserves a separate mention.

Curiously, in 1972 the PLATO system was demonstrated at the University of Illinois to researchers from Xerox PARC. They were shown individual modules of the system, such as PLATO's image generator program and the program for “drawing” new characters. Many of these innovations became the foundation for other computer systems. Some of them appeared in improved form in the Xerox Alto, which in turn became the prototype for Apple's designs.

By 1975, the PLATO system, running on a CDC Cyber 73 supercomputer, served almost 150 sites, including not only PLATO III users but also schools, colleges, universities, and military facilities. PLATO IV offered text, graphics, and animation as part of its courses. In addition, there was a shared memory mechanism ("common" variables) that let programs written in the TUTOR programming language pass data between different users in real time. This is how the first chat programs were created, as well as the first multi-user flight simulator. All this 30 years before the Internet!

Disadvantages? The sensor resolves only 32 squares, there is still no multitouch, and the price is $12,000.

By the 1980s, it had become obvious to everyone that without multitouch the touchscreen would never reach the market. As has been known since antiquity, man is the measure of all things. And a man has ten fingers on his hands.

In 1983, multi-touch screens were widely discussed, which led to the development of a screen that could be operated with more than one hand. Bell Labs concentrated on multi-touch software and made significant progress, presenting in 1984 a touch screen on which images could be manipulated with two hands.

The next step in the development of optical sensors was the "touch frame", developed in 1985 at Carnegie Mellon University. In such displays the frame was illuminated with IR light, and a touch was detected by the change in light scattering at the glass-air boundary. These displays could recognize simultaneous touches by three fingers.

Optical touch displays are still used today. Their main advantage is the absence of an additional layer of transparent material. In particular, IR touch is used in the Sony PRS-350 e-reader and in various industrial devices. The main disadvantage is the excessive accuracy and complexity of such systems: it is both expensive and frustrating to pay for capability that will never be used.

In 1985, a group of scientists at the University of Toronto developed small capacitive sensors to replace bulky camera-based optical sensing systems.

Inventors tried to solve the problem with all sorts of crutches: some used the shadow of the hands to determine coordinates, others color projectors or even microphones for echolocation. These systems never spread widely, but all the control gestures we know today were created on them. The one thing these systems could not do was register presses on the screen, and that killed them.

The first attempt to bring touch technology to the mass market came in 1983, when HP decided to capture the market by releasing the legendary HP-150. A solid machine for $3000, built on an Intel 8088 running at 8 MHz, it was almost twice as fast as the standard personal computers of the day at 4.77 MHz; it also had 256 to 640 kilobytes of RAM and MS-DOS 3.20 on board. But the main thing was the screen. Around the screen were infrared emitters forming a touch frame, with 40 horizontal by 24 vertical sensors.

This computer could not deliver real multi-touch and on the whole turned out to be a commercial failure: the sensor holes along the bottom quickly filled with dust and stopped working, and a single finger on the screen blocked two lines at once. In 1984, Bob Boie from Bell Labs improved the system by replacing the beams and sensors with a transparent layer on top of the screen, which made real multi-touch possible. But it was all hopeless... It quickly turned out that human arms are completely unsuited to poking a finger at a vertical screen with the arm held unsupported for more than 10-15 minutes. That is, such interfaces are impossible in principle:

It is strange and unexpected: holding an arm up for ten minutes is not a problem (though no picnic either), but poking at a wall with an unsupported finger is impossible. The arm swells and tires badly. Researchers called this effect "Gorilla Arm".

The fight against Gorilla Arm syndrome sheds light on the history of Apple and Steve Jobs, or at least makes it understandable. Steve Jobs was convinced that there was only one way to defeat this flaw: move the touchscreen directly into the hand. Put the screen in the palm, or in some other way eliminate the distance between fingers and screen that forces people to hold their arms in the air. Remember how Steve specifically explained why MacBooks would never have touchscreens?

Steve insists that it is not enough to eliminate this gap; one has to go further and give users clean, intuitive multitouch without any intermediaries. Multitouch and nothing but multitouch!

But nobody listens to Steve: his ideas are adopted, but he himself is pushed out of Apple. As a result, the first "iPhone" that Apple starts developing in 1987, the Newton MessagePad (released in 1993), is only a parody of Jobs' dream. Yes, you can hold it in your hand, but there is no multitouch, and it is controlled with a stylus. It is inconvenient to use, it cannot recognize handwriting properly, and everything is slow. Critics are delighted; users curse the raw product. But Apple presses on and keeps releasing its tablet for another six years, which puts the corporation on the verge of bankruptcy.

Palm takes advantage of this and releases its own handheld. Its only difference is that it does what it promises: for example, it at least recognizes handwritten text. But again there is no multitouch, and it works only with a stylus. All of these run on resistive screens.

Although the first multitouch-enabled surface appeared back in 1984, when Bell Labs developed a screen on which images could be manipulated with more than one hand, this development was not pursued further.

Starting in 2000, the multitouch race begins. Sony launches the SmartSkin project, Microsoft builds the Surface table with IKEA, and the FTIR system becomes a hit on YouTube.

The Bell Labs approach was picked up again only twenty years later: in the early 2000s, FingerWorks developed and began producing ergonomic keyboards with multitouch and gesture support, for which it developed a special gesture language. A few years later (2005), the company was bought by... Apple.

Returning to the CEO post, Jobs directed all of Apple's resources to one simple task: to make a product built entirely around multitouch technology. He fights for every gram of weight and every millimeter of the future iPhone's size in order to defeat the "Gorilla Arm". He hates styluses on principle, and subordinates all the software and design to a single task, or rather to a single gesture, which he will show in the presentation and which will blow up the industry. Here is that gesture:

At the famous 2007 presentation, he unlocks the iPhone with a simple swipe and scrolls through lists with the same simple finger movement. It is natural and obvious, and we no longer even realize how cool it was just ten years ago!

It is simplicity and naturalness that became the key to the iPhone's success, along with an interface design that imitates natural surfaces, the so-called skeuomorphism. And now you also know where such hatred for the stylus comes from.

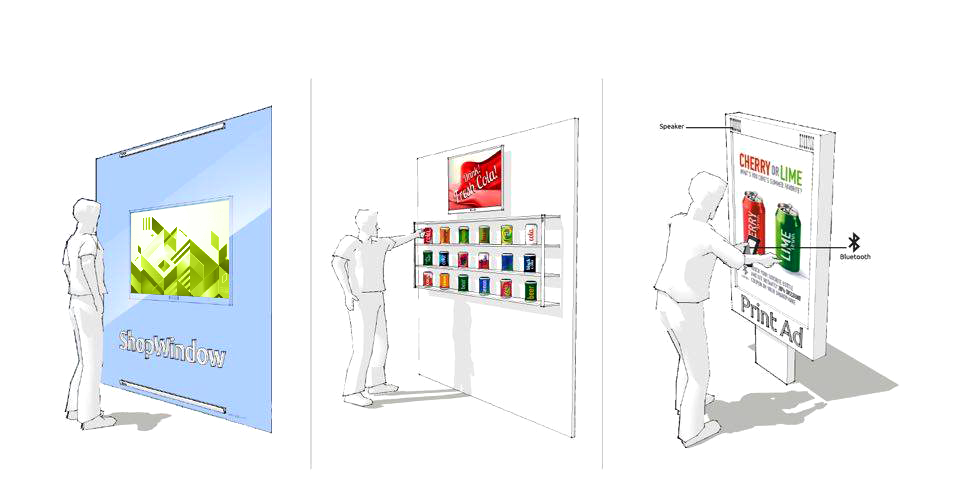

So, it took us 50 years to get from the light gun to the iPhone. What's next? The Moscow startup Surfancy is trying to feel out the answer to this question.

For the previous 50 years, we tried to get rid of the keyboard and move the controls directly onto the screen. As a result, touch solutions have today become hostages of their screens. But what if we take one more step and... abandon the screen? It is easy to see that all the large corporations have moved in this direction; just recall the Nintendo Wii or Microsoft Kinect. But these are also extremely limited: they need a special room with strictly defined lighting and a few meters of space, and they cannot recognize finger movements.

But what if you imagine a very cheap solution that simply makes any flat surface touch-sensitive, regardless of external conditions, or even of whether there is a surface at all? That is how the idea of Surfancy was born.

The main features of the device are ease of installation, a scalable sensing area and high noise immunity; the system imposes no frame (in the literal and figurative sense) on whatever you want to make touch-sensitive. “Our system can be fixed to any surface to get an interactive advertising poster, a large touch screen or a smart board. But the format is set not by us but by the person who uses Surfancy. Since we built the first prototype, I cannot shake the urge to “press” the buttons drawn on paper posters in elevators or subway cars,” laughs Dmitry. In addition, the system is reliable. Among existing optical systems, only Surfancy imposes no requirements on ambient light or surface curvature.

The idea of a new type of touch panel came to one of the team members while he was working at a major Russian manufacturer of gaming machines. The high accuracy of the ultrasonic sensing principle used in them was far more than needed, which gave impetus to developing a simpler and cheaper device.

And such a device was developed. In its original version, it was a printed circuit board running around the edge of the monitor and carrying phototransistors, which were illuminated alternately by two infrared diodes spaced apart along the surface of the monitor. A touch on the screen interrupted the light from the infrared diodes and cast corresponding shadows onto the phototransistors. In essence, this resembles the long-known infrared frame, i.e. a device that forms a grid of beams optically coupled to a set of photodetectors. The significant difference in the new scheme is that it needs only 2 emitters. The novelty of this technical solution was confirmed by Russian Federation invention patent No. 2278423.
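The two-emitter idea can be illustrated with simple geometry. The sketch below is not the patented circuit: it assumes, purely for illustration, that both emitters sit on the edge y = 0, that the phototransistors form a line along the opposite edge y = height, and that the centre of each shadow on that line is already known. Every name and dimension here is hypothetical.

```python
def shadow_position(emitter_x: float, touch: tuple, height: float) -> float:
    """Where the shadow of a touch lands on the detector line y = height."""
    px, py = touch
    return emitter_x + (px - emitter_x) * height / py


def locate_touch(e1_x: float, e2_x: float,
                 s1_x: float, s2_x: float, height: float) -> tuple:
    """Intersect the rays emitter1->shadow1 and emitter2->shadow2.

    Emitters sit at (e1_x, 0) and (e2_x, 0); shadows are seen at
    (s1_x, height) and (s2_x, height) while each emitter is lit in turn.
    """
    # Both rays are parametrised by t in [0, 1] from the emitter to the shadow.
    # They share the same y = t * height, so equal x gives one equation in t.
    denom = (s1_x - e1_x) - (s2_x - e2_x)
    if denom == 0:
        raise ValueError("rays are parallel; no unique touch position")
    t = (e2_x - e1_x) / denom
    return e1_x + t * (s1_x - e1_x), t * height


# Round trip: place a finger, compute both shadows, then recover the finger.
HEIGHT = 50.0
finger = (50.0, 40.0)
s1 = shadow_position(0.0, finger, HEIGHT)     # shadow cast by emitter 1
s2 = shadow_position(100.0, finger, HEIGHT)   # shadow cast by emitter 2
x, y = locate_touch(0.0, 100.0, s1, s2, HEIGHT)
```

Lighting the two emitters alternately, as the text describes, is what lets a single set of detectors tell the two shadows apart.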

Figure 1 shows the functional diagram protected by this patent.

Figure 1

For well-known reasons, the production of gaming machines was discontinued, and the touch panel project was shelved for a long time.


Figure 2

S Ψ1, Ψ2, Ψ3, Ψ4 L1 L2 . , .1, . , .. , D1…D4. №2575388 , 3.

Figure 3


This article is about the history of touchscreen development from the 50s of the 20th century to our contemporaries, Surfancy startups, who invented how to separate the touchscreen from the screen. Why is this not a random decision, but the correct and regular development of the entire touchscreen history? Here you need to understand the history.

For now, remember these two white bars, we will return to them at the end of the article:

')

How could such an important technological solution like a touchscreen capture the world almost instantly? The way of touchscreens was rather thorny and demanded the hard work of several generations of the best engineering minds, and the technology itself has in its history already ten (!) Generations of outstanding devices!

The first thing that strikes you is that for a very long time no one was interested in the touchscreen, the engineers perceived it as a crutch and an impractical toy. Even in the cinema, the touchscreen appeared only in 1965, when the crew of the ship from Star Trek began to press their fingers directly on the screens instead of buttons - then it was perceived as grotesque and did not receive wide distribution. In Star Trek itself, they only returned to touchscreens in 1987 ...

But the story of the real touchscreen begins in the 1950s. Only at first, instead of a finger, one had to use something more electrically conductive.

First generation

In 1954, Robert Everett of the American Lincoln Lab proposed using a light gun to select and control the icons of the aircraft on the airborne radar. If you had a light gun and a Dandy console, now you know where her legs grow from.

The famous control center from the Dr.Strangelove movie is just the SAGE control center.

Thanks to the cold war - the operators needed to respond as soon as possible to Soviet planes and rockets in the air, so the government spent $ 12 million on developing the SAGE system, which had a great influence on the development of information technology - for the first time, the entire airspace was controlled by 27 300-ton computers, connected analog modems, the algorithms automatically responded to threats and suggested measures for counterattacks. To create the system, 20% of programmers from around the world were involved, who wrote 250,000 lines of the most complex code in history.

SAGE was so complex and reliable that it managed the US missile defense system until 1983 (!!!). Ironically, in the last years of the work of the system, failed vacuum tubes could not be replaced - they were no longer produced from the 50s, so the CIA had to carry out a huge operation to buy the right lamps ... in the USSR. But this is a completely different story, I mention it simply to show what scale of material and scientific investments were required to create a self-evident thing - a computer capable of understanding finger movements.

In the SAGE system, the operator acted instantly - instead of entering coordinates from the keyboard, he simply pointed the pen at the point with a light pen, the opto-electric pen transmitted the signal to the computer, and he already calculated the coordinates. This allowed us to control in real time and eliminate errors.

By 1957, the light gun had been upgraded to a light stylus. Now it was possible not only to allocate aircraft, but also to draw on the screen, as if with a pen. Then the scientists decided that the ideal was achieved.

One of the IBM programmers secretly stitched into the system such an image for the process of diagnosing synchronization of remote computers. Perhaps this screensaver can be considered the first screensaver.

Second generation

In 1963, the corporation RAND commissioned by DARPA creates a universal graphics tablet with a stylus. Which is fundamentally no different from modern Wacom'ov.

The most interesting part of the RAND tablet was hidden under the surface covered with epoxy. A thin layer of copper was deposited on the thinnest layer of polyester film on both sides, on which the tablet grid was etched. The upper surface contained 1024 tracks of the X coordinates, and the lower surface contained 1024 tracks of the Y coordinates. Thus, the RAND tablet contained a million XY coordinates and provided an unprecedented resolution for that time — 100 tracks per inch.

The tablet used a grid of conductors under the sensor surface, to which electrical impulses coded with the three-fold Gray code were applied. A capacitively connected pen received this signal, which could then be decoded back into coordinates. Special attention was paid to work with maps and target designation on them. And all this for just some $ 18,000. Disadvantages? For a tablet, you need a full-fledged computer with its own screen and such a power supply:

Unfortunately, all data on the application of the system are classified, only two copies 6 were transferred to civilian universities.

Third generation

In 1965, the touchscreen technology becomes a true touchscreen. Edward Johnson of the Royal Radar Establishment creates the type of touchscreen, which for many years will be the main - capacitive touchscreen. Now the dispatchers at the airport could simply touch the screen with a finger without any stylus.

The technology is simple, as all ingenious - the kinescope screen is covered with a conductive transparent film, a finger touch to the screen changes the resistance and the system receives a signal - there is a short circuit. Simplicity and reliability allowed this system to manage all air travel in England until the very end of the 1990s!

True, the simplicity of the system did not allow it to become a commercial solution - on such a film you cannot measure the pressure and, more importantly, no multitouch. Only one finger at a time.

Fourth generation

Unlike capacitive, resistive touchscreens were opened by accident at all. Its author, Samuel Hearst, describes this case as follows:

To study atomic physics, the research group used the Van de Graaf accelerated accelerator, which was available to students only at night. Tedious analysis of data from paper tapes greatly slowed down the research. Sam came up with a way to solve this problem. He, Parks, and Thurman Stewart, another doctoral student, used electrically conductive paper to read a pair of x- and y- coordinates. This idea led to the first touch screen for the computer. Using this prototype, his students could do the necessary calculations within a few hours, although it used to take several days to achieve the same goal.

Having abandoned his research, in 1970 Hurst and nine colleagues took refuge in his garage and brought the accidental invention to perfection. As a result, the technology of the “electric sensor of flat coordinates” was born:

Two electrically conductive layers set the X and Y coordinates, respectively. The pressure on the screen allowed the current to flow between the X and Y layers, which was easily measured at the output and immediately converted into numerical coordinates. Therefore, this type of screen is called resistive - it reacts to pressure (resist - resistance) and not electrical conductivity.

This technology turned out to be surprisingly cheap, and it is precisely to her that we now all use it.

In 1971, all of this was patented and sold by a Californian businessman called Elographics.

Fifth generation

In reality, the multitouch was born where it should have been - at CERN. Few people understand that the main problem of CERN is the control of colliders. What is the problem? Large Hadron Collider (LHC); Every second there will be about a billion collisions, in each of which dozens of particles of different types will be born. The annual experimental data is estimated at 10 petabytes (1 PB = 10 15 bytes) - the LHC will provide 1% of the information produced by humanity. For processing and storing such a data flow in 1994, CERN will invent the Internet. But in 1973, engineers face a completely unexpected task.

The launch of the proton supersynchrotron rested in the impossibility of operational control. Being 10 times larger, more powerful and more complicated than the previous accelerator, it required an almost infinite number of buttons and switches on the dashboard, and only a few people should manage it all - otherwise it will not be possible to exercise centralized control. How to be?

Either wire up a separate toggle switch for each of a hundred thousand controls, or ... hand all the work over to computers that show the operator only the necessary data on a screen. For that, all you had to do was invent those computers, along with screens whose buttons could be operated at the touch of a finger.

As you may have guessed, CERN chose the second option and created capacitive screens with multitouch support. It is their solution that we use today whenever we hold an iPhone in our hands.

And it all began with these sensor matrices. On the left is the 1977 version, on the right the 1972 one:

In a note dated March 11, 1972, Bent Stumpe presented his solution: a capacitive touchscreen with a fixed number of programmable buttons on the display. The screen was to consist of a set of capacitors formed by copper wires embedded in a film or in glass, each built so that a nearby conductor, such as a finger, would increase its capacitance by a significant amount. The wires had to be thin (80 μm) and far enough apart (80 μm) to be invisible. In the final device, the screen was simply coated with varnish, which kept fingers from touching the capacitors directly.
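The detection principle in this paragraph, a finger raising the capacitance of a button "by a significant amount", can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the relative threshold of 20% and the sample values are hypothetical and do not come from Stumpe's note.

```python
def pressed_buttons(baseline, measured, threshold=0.2):
    """Return the indices of buttons that appear to be touched.

    baseline  -- capacitance of each button with nothing nearby
    measured  -- capacitance of each button right now (same units)
    threshold -- assumed relative increase that counts as a touch
    """
    if len(baseline) != len(measured):
        raise ValueError("baseline and measured must have the same length")
    return [
        index
        for index, (idle, now) in enumerate(zip(baseline, measured))
        if now - idle > threshold * idle
    ]
```

With a baseline of `[10.0, 10.0, 10.0]` and measurements of `[10.1, 13.0, 12.5]`, the function reports buttons 1 and 2 as pressed. Because every button is checked independently, several can be reported at the same time.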

The film was almost transparent and completely invisible:

As a result, the proton synchrotron was controlled from here by only three operators:

The system was so far ahead of its time that it contained not a single commercial component. CERN made every element itself, and these were seen not as highly specialized solutions but rather as “crutches”: instead of taking pride in their work, the engineers considered it a “cheat”, a piece of entertainment rather than serious work. After the accelerator, the technology was put to use in the cafeteria, in the "Drinkoin" device, which let you mix a cocktail with your fingers on the screen and collect it from the machine.

Amazingly, having essentially discovered multitouch, CERN never really used it: no gestures, none of the functions familiar today. Multitouch was seen simply as a way to press several on-screen buttons at the same time. As an aside, nothing prevented CERN's touchscreen from also sensing how hard the screen was being pressed.

Sixth generation

Impressed by the success of the European scientists, the Americans secured a government grant to convert military touchscreens for educational purposes. Programmed Logic for Automated Teaching Operations (PLATO) was the first e-learning system; by the end of the 70s it consisted of several thousand terminals around the world and more than a dozen mainframes connected by a common computer network. PLATO was developed at the University of Illinois and remained in use for 40 years. It was originally created to deliver simple coursework to students at the University of Illinois, local schoolchildren, and students at several other universities.

But what interests us is how exactly the terminals of this system worked. The PLATO IV display also had an infrared touch panel with a 16x16 grid, which allowed students to answer questions by touching a finger anywhere on the screen.
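How a beam grid like this turns a finger into a position can be shown with a short Python sketch. It assumes one row of 16 beams across the columns and one across the rows, represented as lists of booleans where `True` means the beam is interrupted; the function names and this representation are hypothetical, and the averaging step only makes sense for a single touch.

```python
from typing import Optional, Sequence, Tuple


def blocked_center(beams: Sequence[bool]) -> Optional[float]:
    """Return the average index of the interrupted beams, or None if all are clear."""
    blocked = [index for index, is_blocked in enumerate(beams) if is_blocked]
    if not blocked:
        return None
    return sum(blocked) / len(blocked)


def locate_touch(column_beams: Sequence[bool],
                 row_beams: Sequence[bool]) -> Optional[Tuple[float, float]]:
    """Return the (column, row) of a single touch on the grid, or None."""
    column = blocked_center(column_beams)
    row = blocked_center(row_beams)
    if column is None or row is None:
        return None
    return (column, row)
```

If a finger interrupts column beam 3 and row beam 7, the sketch returns `(3.0, 7.0)`. A finger that covers two neighbouring column beams, 3 and 4, yields a column of 3.5.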

With the advent of microprocessors, newer, cheaper and more powerful terminals were developed. The Intel 8080 microprocessors built into them allowed the system to run programs locally, much as Java applets or ActiveX controls do nowadays, and to load small software modules into the terminal, enriching courses with complex animations and other features unavailable on ordinary terminals. The orange gas-plasma screen, which produced an impressive picture, deserves a separate mention.

Curiously, in 1972 the PLATO system was demonstrated at the University of Illinois to researchers from Xerox PARC. They were shown individual modules of the system, such as the image generator program for PLATO and the program for “drawing” new characters. Many of these innovations became the foundation for other computer systems. Some of the technologies appeared in improved form in the Xerox Alto, which, in turn, became the prototype for Apple's own developments.

By 1975, the PLATO system, running on a CDC Cyber 73 supercomputer, served almost 150 sites, including not only PLATO III users but also schools, colleges, universities, and military facilities. PLATO IV offered text, graphics, and animation as part of its courses. In addition, a shared memory mechanism ("common" variables) made it possible to pass data between different users in real time using the TUTOR programming language. This is how the first chat programs were created, as well as the first multi-user flight simulator. All this 30 years before the Internet!

Disadvantages? The sensor resolution is only 16x16 cells, there is still no multitouch, and the price is $12,000.

Seventh generation

By the 1980s, it had become obvious to everyone that without multitouch the touchscreen would never make it to market. As has been known since antiquity, man is the measure of all things. And a man has ten fingers on his hands.

In 1983, much discussion of multi-touch screens led to the development of a screen that could be controlled with more than one hand. Bell Labs focused on multi-touch software and made significant progress, presenting in 1984 a touch screen on which images could be manipulated with two hands.

The next step in the development of optical sensors was the "touch frame", developed in 1985 at Carnegie Mellon University. In such displays the frame was illuminated with IR light, and a touch was detected from the change in light scattering at the glass-air boundary. These displays could recognize simultaneous touches by three fingers.

Optical touch displays are still used today. Their main advantage is that they need no additional layer of transparent material. In particular, IR touch is used in the Sony PRS-350 “reader” and in various industrial devices. The main disadvantage is the excessive accuracy and complexity of such systems: it is both costly and galling to pay for capability that will never be used.

In 1985, a group of scientists at the University of Toronto developed small capacitive sensors to replace the bulky camera-based optical sensing systems.

Inventors tried to solve the problem with all sorts of crutches: some used the shadow of the hands to determine coordinates, others color projectors or even microphones for echolocation. These systems never caught on, but all the control gestures we know today were created on them. The one thing they could not do was register presses on the screen, and that killed them.

The first attempt to bring touch technology to the mass market came in 1983, when HP decided to capture the market by releasing the legendary HP-150. A solid machine priced at $3000 and built on an 8 MHz Intel 8088, it was twice as fast as the standard 4.77 MHz personal computers of the day. It also carried from 256 to 640 kilobytes of RAM and MS-DOS 3.20, but the main thing was the screen. Over the screen, infrared emitters formed a touch frame of 40 horizontal by 24 vertical sensors.

This computer could not deliver real multitouch and on the whole turned out to be a commercial failure: the sensor holes along the bottom quickly filled with dust and stopped working, and a single finger on the screen blocked two lines at once. In 1984, Bob Boie of Bell Labs managed to improve on the scheme by replacing the beams and sensors with a transparent layer on top of the screen, which made real multitouch possible. But it was all hopeless ... it very quickly turned out that human arms are completely unsuited to poking a finger at a vertical screen, with the arm held up unsupported, for more than 10-15 minutes. In other words, such interfaces are impossible in principle:

It is strange and unexpected: simply holding your arm up for ten minutes is not a problem (though no picnic either), but poking a finger at a wall with the arm unsupported is impossible. The arm swells and tires badly. Researchers called this effect "Gorilla Arm".

Eighth generation

The fight against gorilla arm syndrome sheds light on the history of Apple and Steve Jobs, or at least makes it understandable. Steve Jobs was convinced that there was only one way to defeat this flaw: move the touchscreen directly into your hand. Put the screen in the palm, or find any other way to eliminate the distance between fingers and screen that forces you to hold your arms up unsupported. Remember how Steve specifically explained why MacBooks would never have touchscreens?

Steve insists that it is not enough to eliminate this gap; one must go further and give users clean, intuitive multitouch without any intermediaries. Multitouch and nothing but multitouch!

But nobody listens to Steve: his ideas are accepted, but he himself is forced out of Apple. As a result, Apple starts work on its first "iPhone" in 1987, and the Newton MessagePad that eventually reaches the market is only a parody of Jobs' dream. Yes, you can hold it in your hand, but there is no multitouch and everything is controlled with a stylus. It is inconvenient to use, it fails to recognize handwriting properly, and everything is slow. Critics are delighted; users curse the half-baked product. But Apple presses on and keeps releasing its tablet for another six years, which brings the corporation to the brink of bankruptcy.

Palm seizes the opportunity and releases its own handheld, whose only difference is that it does what it promises: it at least recognizes handwritten text. But again there is no multitouch, and everything goes through a stylus. All of this runs on resistive screens.

Ninth generation

Although the first multitouch-enabled surface appeared back in 1984, when Bell Labs developed a screen on which images could be manipulated with more than one hand, this development was not taken any further.

In 2000 the multitouch race begins. Sony launches the SmartSkin project, Microsoft builds the Surface table with IKEA, and the FTIR system becomes a hit on YouTube.

The Bell Labs approach was picked up again only twenty years later: in the early 2000s, FingerWorks developed and began producing ergonomic keyboards that supported multitouch gestures, for which it devised a special gesture language. A few years later (2005), the company was bought by ... Apple.

Back in the CEO's chair, Jobs directed all of Apple's resources to one simple task: making a product built entirely around multitouch technology. He fights for every gram of weight and every millimeter of the future iPhone's size in order to defeat gorilla arm. He hates styluses on principle and subordinates all the software and design to a single task, or rather to a single gesture, which he will show at the presentation and blow up the industry. Here is that gesture:

At the famous 2007 presentation, he unlocks the iPhone with a simple swipe and scrolls through lists with the same simple finger movement. Today this seems natural and obvious, and we can hardly appreciate how cool it was just ten years ago!

It is simplicity and naturalness that became the key to the iPhone's success: the entire interface design imitates natural surfaces, the so-called skeuomorphism. And now you also know where such hatred of the stylus comes from.

So, it took us 50 years to get from the light gun to the iPhone. What's next? The Moscow startup Surfancy is trying to feel out the answer to this question.

Tenth generation

For the previous 50 years, we tried to get rid of the keyboard and move the controls directly onto the screen. As a result, today's touch solutions have become hostages of their screens. But what if we take one more step and ... abandon the screen? It is easy to see that all the large corporations have moved in this direction: just recall the Nintendo Wii or Microsoft Kinect. But these are also extremely limited. They need a special room with strictly defined lighting, several meters of free space, and they cannot recognize finger movements.

But what if there were a solution that could cheaply and simply make any flat surface touch-sensitive, regardless of external conditions, or even of whether there is a surface at all? That is how the idea of Surfancy was born.

The main features of the device are ease of installation, a scalable sensing area and high noise immunity. The system imposes no frame (in both the literal and the figurative sense) on whatever you want to make touch-sensitive. “Our system can be mounted on any surface to produce an interactive advertising poster, a large touch screen or a smart board. But the format is set not by us, but by the person who uses Surfancy. Ever since the first prototype was built, I cannot shake the urge to “press” the buttons drawn on paper posters in elevators and subway cars,” laughs Dmitry. The system is also reliable: among existing optical systems, only Surfancy places no requirements on ambient light or surface curvature.

The idea of a new type of touch panel came to one of the team members while he was working at a major Russian manufacturer of gaming machines. The ultrasonic sensors used in those machines delivered far more accuracy than was needed, which prompted the development of a simpler and cheaper device.

And such a device was developed. In its original version, it was a printed circuit board running around the edge of the monitor and carrying phototransistors, which were illuminated alternately by two infrared diodes spaced apart along the monitor surface. Touching the screen interrupted the light from the infrared diodes and cast corresponding shadows on the phototransistors. In essence, all this resembles the long-known infrared frame, i.e. a device that forms a grid of beams optically coupled to a set of photodetectors. The significant difference is that the new scheme uses only 2 emitters. The novelty of this technical solution was confirmed by Russian Federation invention patent No. 2278423.
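One way to see why two emitters can be enough is simple geometry: each shadow, together with the emitter that cast it, defines a straight line through the touch point, and two such lines intersect at that point. The Python sketch below illustrates this idea only. It is not the patented algorithm; it assumes ideal point-like shadows and known emitter and shadow coordinates, and all names and numbers in it are hypothetical.

```python
def triangulate_touch(emitter_a, shadow_a, emitter_b, shadow_b):
    """Intersect the two emitter-to-shadow lines and return the (x, y) touch point.

    Each argument is an (x, y) pair in the same plane coordinates:
    where an emitter sits, and where its shadow falls on the sensor edge.
    """
    ax, ay = emitter_a
    bx, by = emitter_b
    # Direction of each line: from the emitter towards its shadow.
    dax, day = shadow_a[0] - ax, shadow_a[1] - ay
    dbx, dby = shadow_b[0] - bx, shadow_b[1] - by

    # 2D cross product of the directions; zero means the lines are parallel.
    denominator = dax * dby - day * dbx
    if abs(denominator) < 1e-12:
        raise ValueError("lines are parallel, no unique intersection")

    # Parameter along line A at which it meets line B.
    t = ((bx - ax) * dby - (by - ay) * dbx) / denominator
    return (ax + t * dax, ay + t * day)
```

For instance, with emitters assumed at `(0, 0)` and `(100, 0)` and shadows observed at `(62.5, 50)` and `(37.5, 50)`, the two lines cross at `(50.0, 40.0)`.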

Figure 1 shows the functional diagram protected by this patent.

Figure 1

For well-known reasons, the production of gaming machines was discontinued, and the touch panel project was shelved for a long time.

Figure 2

[...] S; Ψ1, Ψ2, Ψ3, Ψ4; L1, L2 [...] D1…D4 [...] patent No. 2575388 [...] Figure 3.

Figure 3

Source: https://habr.com/ru/post/282706/
