P2P in the browser

Author: Alexander Trischenko

I will talk about my hobby: organizing video broadcasts in the browser using WebRTC (Web Real-Time Communication). Google has been actively developing this open-source project since 2012, and the first stable release appeared in 2013. At the time of writing, WebRTC is well supported by the most common modern browsers, with the exception of Safari.

WebRTC enables video conferencing between two or more users on a peer-to-peer (P2P) basis: data travels directly between users rather than through a server. We still need a server, though, as I will explain later. WebRTC is designed primarily for the browser, but there are also libraries for other platforms that let you use WebRTC connections.


Using WebRTC solves the following problems:

- We reduce the cost of maintaining servers. Servers are needed only to initialize the connection and to let users exchange network information about each other. They are also used to send some events, for example, notifications about users connecting and disconnecting (so that the information on each client stays up to date).

- We increase the speed of data transfer and reduce video and audio latency, because no server sits in the media path.

- We strengthen data privacy: there is no third party through which the data flows (apart, of course, from the network gateways the traffic passes through on its way).

Connection initialization

JavaScript Session Establishment Protocol

The connection is initialized using the JavaScript Session Establishment Protocol (JSEP); at the time of writing, only a draft of its specification exists. It describes:

- connecting to the server;

- generating a unique token for a new user, which identifies that user on the back end;

- obtaining the user's key for accessing the conversation (signaling);

- initializing an RTCPeerConnection to connect two users;

- broadcasting a message about the new participant to all participants.

A new participant joins a video conference as follows: we notify the user that a conference is already in progress; the user sends a connection request, which we fulfil by sending all the information needed to connect. At the same time, we send all existing conference participants the information they need to connect to the new user.
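The join sequence above can be sketched as a small piece of signaling-server logic. This is a minimal in-memory sketch, not the author's implementation: the `Room` class, the message types (`room-state`, `peer-joined`, `peer-left`) and the `send(peerId, message)` callback are hypothetical stand-ins for a real transport such as WebSocket.

```javascript
// Hypothetical in-memory conference room on the signaling server.
// `send(peerId, message)` stands in for the real transport (e.g. one WebSocket per user).
class Room {
  constructor(send) {
    this.send = send;
    this.participants = new Set();
  }

  // Called when a new user's connection request arrives.
  join(newId) {
    // Give the newcomer the list of peers it should connect to.
    this.send(newId, { type: "room-state", participants: [...this.participants] });
    // Tell every existing participant to connect to the newcomer.
    for (const id of this.participants) {
      this.send(id, { type: "peer-joined", peerId: newId });
    }
    this.participants.add(newId);
  }

  // Notify the remaining participants so their state stays up to date.
  leave(id) {
    this.participants.delete(id);
    for (const other of this.participants) {
      this.send(other, { type: "peer-left", peerId: id });
    }
  }
}
```

In a real server, `send` would look up the participant's WebSocket and write the JSON-encoded message to it.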

How does it work? There is a browser level, at which clients exchange media data directly, and a signaling server level, at which the rest of the interaction between clients takes place.

Session Description Protocol

The Session Description Protocol (SDP), which describes the session parameters of a specific user, already has an approved specification: RFC 4566.

SDP describes the following parameters (fields marked with * are optional):

- v= (protocol version; currently always 0);

- o= (identifiers of the originator/owner and of the session);

- s= (session name; cannot be empty);

- i=* (session information);

- u=* (URL with additional information about the session, for WWW clients);

- e=* (e-mail address of the person responsible for the conference);

- p=* (phone number of the person responsible for the conference);

- c=* (connection information; not required if it is included in every media description);

- b=* (bandwidth information for the communication channel);

- one or more time descriptions (t= and r= lines);

- z=* (time zone adjustments);

- k=* (encryption key);

- a=* (zero or more lines with session attributes).

SDP information about a client looks like this:
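As an illustration, here is a heavily trimmed, hypothetical session description (a real browser offer contains many more lines), together with a small parser that splits it into session-level fields and media sections. The parser is a sketch for inspecting SDP, not a complete SDP implementation.

```javascript
// A minimal, hypothetical session description; lines are separated by CRLF.
const exampleSdp = [
  "v=0",
  "o=- 4611731400430051336 2 IN IP4 127.0.0.1",
  "s=-",
  "t=0 0",
  "a=group:BUNDLE 0",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "c=IN IP4 0.0.0.0",
  "a=rtpmap:111 opus/48000/2",
].join("\r\n");

// Split SDP into <type>=<value> fields; lines after an m= line belong to that media section.
function parseSdp(sdp) {
  const session = [];
  const media = [];
  for (const line of sdp.split(/\r?\n/)) {
    if (line.length < 2 || line[1] !== "=") continue; // skip blank or malformed lines
    const field = { type: line[0], value: line.slice(2) };
    if (field.type === "m") {
      media.push({ m: field.value, fields: [] });
    } else if (media.length > 0) {
      media[media.length - 1].fields.push(field);
    } else {
      session.push(field);
    }
  }
  return { session, media };
}
```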

Interactive Connectivity Establishment (ICE)

ICE lets users who are behind NATs and firewalls connect. It is built on four specifications:

- RFC 5389: Session Traversal Utilities for NAT (STUN).

- RFC 5766: Traversal Using Relays around NAT (TURN): Relay Extensions to STUN.

- RFC 5245: Interactive Connectivity Establishment (ICE): A Protocol for NAT Traversal for Offer/Answer Protocols.

- RFC 6544: TCP Candidates with Interactive Connectivity Establishment (ICE).

STUN is a client-server protocol widely used in VoIP. STUN is preferred here: it lets a client learn its public IP address and port, so that peers can exchange UDP traffic directly. If a connection cannot be established with STUN, WebRTC will try to connect through a TURN server, which relays the traffic. The list also includes the RFC for ICE itself and the RFC for TCP candidates.

WebRTC already includes a client implementation of ICE, so we can simply specify several STUN or TURN servers. To do this, it is enough to pass an object with the appropriate parameters when the connection is initialized.
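For example, the configuration object passed to the RTCPeerConnection constructor could be built as in the sketch below. `stun.l.google.com:19302` is a public Google STUN server; the TURN URL and credentials are placeholders to replace with your own server, and the `buildIceConfig` helper is hypothetical.

```javascript
// Build an RTCConfiguration-style object for new RTCPeerConnection(config).
// stunUrls: one or more STUN URLs; turn: optional { urls, username, credential }.
function buildIceConfig(stunUrls, turn) {
  const iceServers = [{ urls: stunUrls }];
  if (turn) {
    // TURN servers require credentials; STUN servers do not.
    iceServers.push({ urls: turn.urls, username: turn.username, credential: turn.credential });
  }
  return { iceServers };
}

const config = buildIceConfig(
  ["stun:stun.l.google.com:19302"],
  // Placeholder TURN server: replace with your own.
  { urls: "turn:turn.example.com:3478", username: "demo-user", credential: "demo-secret" }
);

// In the browser:
// const pc = new RTCPeerConnection(config);
```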

getUserMedia API

One of the most interesting parts of WebRTC is the getUserMedia API, which lets you capture audio and video directly on the client and broadcast them to other peers. Google actively promotes this API: since the end of 2014, Hangouts has run entirely on WebRTC. In addition, getUserMedia fully works in Chrome, Firefox and Opera, and there is an extension that enables WebRTC in IE. Safari did not support this technology at the time of writing.

Keep in mind that, due to a recently introduced restriction, getUserMedia in Chrome works only on pages served over HTTPS. Therefore, many examples hosted on plain HTTP servers will not work for you.
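Because of this restriction, it can help to check up front whether capture is available. A minimal sketch, assuming a browser `window` object is passed in: `isSecureContext` is a standard property that is true for HTTPS pages (and localhost), and current browsers do not expose `navigator.mediaDevices` on insecure pages at all. The `canCaptureMedia` name is hypothetical.

```javascript
// Returns true if getUserMedia can be attempted on the current page.
function canCaptureMedia(win) {
  const devices = win.navigator && win.navigator.mediaDevices;
  return Boolean(win.isSecureContext && devices && typeof devices.getUserMedia === "function");
}

// In the browser:
// if (!canCaptureMedia(window)) alert("Camera access requires HTTPS.");
```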

Interestingly, getUserMedia used to allow screen broadcasting, but this is no longer possible: there are only custom solutions based on browser extensions, or you can enable the corresponding flag in Firefox during development.

getUserMedia currently offers the following features:

- selecting the minimum, “ideal” and maximum resolution for the video stream, which makes it possible to change the video resolution depending on the connection speed;

- choosing any of the phone's cameras;

- specifying the frame rate.

All this is very easy to set up. When we call getUserMedia, we pass in an object with two properties, “audio” and “video”:

```javascript
{ audio: true, video: { width: 1280, height: 720 } }
```

The “video” property can also simply be true, in which case the default settings are used; false disables audio or video respectively. We can configure the video by specifying its width and height. Or we can, for example, set only a minimum width, or a minimum, ideal and maximum width:

```javascript
width: { min: 1280 }
width: { min: 1024, ideal: 1280, max: 1920 }
```

And this is how we choose the camera we want to use (“user” is the front camera, “environment” the back one):

```javascript
video: { facingMode: "user" }
video: { facingMode: "environment" }
```

We can also specify the minimum, ideal and maximum frame rate; the browser picks a value within these bounds that the camera supports:

```javascript
video: { frameRate: { ideal: 10, max: 15 } }
```

These are the features of the getUserMedia API. On the other hand, it clearly lacks a way to set the bitrate of the video and audio streams and a way to work with the stream before it is actually transmitted. It also would not hurt to bring back screen broadcasting, which used to be available.
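The constraints can also be changed after capture has started: `MediaStreamTrack.applyConstraints()` lets an application lower the resolution or frame rate of a running video track, for example when it decides the connection is too slow. A sketch under these assumptions: the `PRESETS` values and the `openCamera` and `setVideoQuality` helpers are hypothetical, and deciding when to switch presets is left to the application.

```javascript
// Hypothetical quality presets built from the constraint examples above.
const PRESETS = {
  high: { width: { ideal: 1280 }, height: { ideal: 720 }, frameRate: { ideal: 30 } },
  low: { width: { ideal: 640 }, height: { ideal: 360 }, frameRate: { ideal: 10, max: 15 } },
};

// Open the front ("user") or back ("environment") camera at high quality.
function openCamera(mediaDevices, facingMode = "user") {
  return mediaDevices.getUserMedia({
    audio: true,
    video: { facingMode, ...PRESETS.high },
  });
}

// Switch a running stream's video track to another preset.
async function setVideoQuality(stream, presetName) {
  const [track] = stream.getVideoTracks();
  if (track) await track.applyConstraints(PRESETS[presetName]);
}

// In the browser:
// const stream = await openCamera(navigator.mediaDevices, "environment");
// await setVideoQuality(stream, "low");
```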

WebRTC Features

WebRTC lets us change the audio and video codecs: you can specify them directly in the SDP that is transmitted. In general, WebRTC uses two audio codecs, G.711 and Opus (selected automatically depending on the browser), and the VP8 video codec (the codec of Google's WebM format, which works well with HTML5 video).
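Specifying codecs in the SDP usually means reordering the payload types on the relevant m= line, since that order expresses preference. Below is a sketch of this kind of SDP editing; `preferCodec` is a hypothetical helper that only touches the first media section of the given kind. Newer browsers also offer `RTCRtpTransceiver.setCodecPreferences()`, which achieves the same without editing SDP text.

```javascript
// Move the payload types of `codecName` to the front of the first m=<kind> line.
function preferCodec(sdp, kind, codecName) {
  const lines = sdp.split("\r\n");
  const mIndex = lines.findIndex((line) => line.startsWith(`m=${kind} `));
  if (mIndex === -1) return sdp;

  // The media section runs until the next m= line (or the end of the SDP).
  let end = lines.findIndex((line, i) => i > mIndex && line.startsWith("m="));
  if (end === -1) end = lines.length;

  // Collect payload types whose rtpmap names the codec, e.g. "a=rtpmap:111 opus/48000/2".
  const wanted = new Set();
  const rtpmap = /^a=rtpmap:(\d+) ([^/]+)\//;
  for (const line of lines.slice(mIndex + 1, end)) {
    const match = rtpmap.exec(line);
    if (match && match[2].toLowerCase() === codecName.toLowerCase()) wanted.add(match[1]);
  }
  if (wanted.size === 0) return sdp;

  // m=<media> <port> <proto> <fmt> ...: keep the first three fields, reorder the rest.
  const parts = lines[mIndex].split(" ");
  const payloads = parts.slice(3);
  lines[mIndex] = [
    ...parts.slice(0, 3),
    ...payloads.filter((pt) => wanted.has(pt)),
    ...payloads.filter((pt) => !wanted.has(pt)),
  ].join(" ");
  return lines.join("\r\n");
}
```

It is commonly applied to an offer before it is set locally, e.g. `await pc.setLocalDescription({ type: offer.type, sdp: preferCodec(offer.sdp, "audio", "opus") })`.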

WebRTC is more or less supported in Chromium 17+, Opera 12+ and Firefox 22+. For other browsers you can use the webrtc4all extension, but I personally could not get it to run on Safari. There are also C++ libraries for WebRTC support, which suggests that in the future we will most likely see WebRTC implementations in desktop applications.

For security, WebRTC uses DTLS (Datagram Transport Layer Security), described in RFC 6347. And, as I said before, users behind NAT and firewalls connect through STUN and TURN servers.

Routing

Now let's take a closer look at how routing is performed using STUN and TURN.

Traversal Using Relays around NAT (TURN) is a protocol that allows a node behind a NAT or firewall to receive incoming data over TCP or UDP by relaying it through a server. Because all traffic then passes through the TURN server, which adds latency and consumes server bandwidth, the priority is to use Session Traversal Utilities for NAT (STUN), a network protocol that helps peers establish a direct connection (in WebRTC, typically over UDP).

To ensure fault tolerance, you can specify several STUN servers, as shown in the instructions. You can also test the connection to STUN and TURN servers here.

STUN and TURN servers work as follows. Suppose there are two clients with internal IP addresses that reach the network through firewalls.

To connect these two clients through their NATs, we use STUN and TURN servers: each client learns how it can be reached from outside, this information is passed to the other user, and a direct connection between the computers is established (or, if that is impossible, a connection relayed through TURN).
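The information passed between the users here is the list of ICE candidates (local, STUN-discovered and TURN relay addresses) that each side gathers. A minimal sketch of exchanging them over signaling, assuming a hypothetical `send` function and a hypothetical `new-ice-candidate` message type; the `icecandidate` event and `addIceCandidate()` are standard RTCPeerConnection APIs.

```javascript
// Forward each locally gathered candidate to the other peer via signaling.
function shareIceCandidates(pc, send, target) {
  pc.addEventListener("icecandidate", (event) => {
    // event.candidate is null once gathering is complete.
    if (event.candidate) {
      send({ type: "new-ice-candidate", target, candidate: event.candidate });
    }
  });
}

// Apply a candidate received from the other peer.
async function handleRemoteCandidate(pc, message) {
  await pc.addIceCandidate(message.candidate);
}
```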

Algorithm for working with ICE servers

- Provide the STUN server address when initializing the RTCPeerConnection. You can use public STUN servers (for example, Google's).

- Listen for connection state events and, once the connection succeeds, start streaming.

- If the connection fails, try to connect the clients through the TURN server.

- Make sure the TURN server has sufficient bandwidth.
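The steps of this algorithm can be sketched as follows. This is a hypothetical helper, not the author's code: `createPeerConnection` and `negotiate` are supplied by the application (in the browser, `config => new RTCPeerConnection(config)` and the offer/answer exchange), and the TURN URL and credentials are placeholders.

```javascript
// Public Google STUN server, tried first.
const STUN_ONLY = { iceServers: [{ urls: "stun:stun.l.google.com:19302" }] };
// Placeholder TURN server and credentials: replace with your own.
const WITH_TURN = {
  iceServers: [
    ...STUN_ONLY.iceServers,
    { urls: "turn:turn.example.com:3478", username: "demo-user", credential: "demo-secret" },
  ],
};

// createPeerConnection(config) builds the connection, negotiate(pc) runs the
// offer/answer exchange, onStreaming(pc) starts the broadcast once connected.
function connectWithTurnFallback(createPeerConnection, negotiate, onStreaming) {
  const attempt = (config, isRetry) => {
    const pc = createPeerConnection(config);
    pc.addEventListener("connectionstatechange", () => {
      if (pc.connectionState === "connected") {
        onStreaming(pc); // step 2: connected, start streaming
      } else if (pc.connectionState === "failed" && !isRetry) {
        pc.close();
        attempt(WITH_TURN, true); // step 3: retry through the TURN relay
      }
    });
    negotiate(pc);
    return pc;
  };
  return attempt(STUN_ONLY, false); // step 1: STUN only
}
```

Strictly speaking, the retry is optional: if the TURN server is listed in `iceServers` from the start, ICE also gathers relay candidates and uses them only when no direct path works. Retrying separately avoids allocating TURN relays for connections that do not need them.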

Performance and speed of video streaming

- 720p at 30 FPS: 1.0 ~ 2.0 Mbps

- 360p at 30 FPS: 0.5 ~ 1.0 Mbps

- 180p at 30 FPS: 0.1 ~ 0.5 Mbps

WebRTC performance is affected most of all by the traffic that has to be allocated to broadcasting video. If only two users take part in the broadcast, even Full HD works without problems; with a video conference for 20 people, we will run into trouble.

Here, for example, are the results I got on a 2015 MacBook Air (4 GB of RAM, 2 GHz Core i processor). Capturing video with getUserMedia alone loads the CPU by 11%. With a Chrome-to-Chrome connection the load is already 50%, with three Chrome clients 70%, with four the CPU is fully used, and a fifth client causes severe lag until WebRTC eventually crashes. On mobile browsers things are, of course, even worse: I managed to connect only two users, and when I tried to connect a third, everything began to slow down and the connection dropped.

How can this be handled?

Scaling and problem solving

So what do we have? A separate UDP connection has to be opened for each conference participant, and data transferred over it. As a result, one user needs 1-2 Mbps to broadcast 480p video with sound, and 2-4 Mbps for two-way communication. This puts a heavy load on the broadcaster's hardware.

The performance problem is solved by using a proxy server (relay) that receives data from the broadcaster and distributes it to all other conference participants. If necessary, several servers can be used instead of one.
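The difference between a mesh and a relay is easy to quantify. A back-of-the-envelope sketch, using the upper figure of 2 Mbps per 480p stream: in a mesh, every participant uploads a separate copy of its stream to each of the other N - 1 participants, while with a relay it uploads one copy and the server does the fan-out. The function names are illustrative.

```javascript
// Upload (Mbps) each participant needs in a full mesh of N participants:
// one separate copy of its stream for each of the other N - 1 participants.
function meshUploadMbps(participants, perStreamMbps) {
  return (participants - 1) * perStreamMbps;
}

// With a relay, each sender uploads a single copy to the server.
function relayUploadMbps(perStreamMbps) {
  return perStreamMbps;
}

// Outgoing bandwidth the relay needs to forward one broadcaster's stream to every viewer.
function relayEgressMbps(viewers, perStreamMbps) {
  return viewers * perStreamMbps;
}

console.log(meshUploadMbps(20, 2)); // 38
console.log(relayUploadMbps(2)); // 2
console.log(relayEgressMbps(19, 2)); // 38
```

With 20 participants, that is 38 Mbps of upload per person in a mesh versus 2 Mbps with a relay, while the relay server itself needs 38 Mbps of outgoing bandwidth to serve 19 viewers of a single broadcaster.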

It is also worth considering that in video broadcasting we transmit a lot of redundant client data that we can do without. For example, when teaching a course, we may need only audio from the other participants, or even just text messages. Also, after the active speaker changes, it may make sense to close one of the connections, change the type of data we receive in the stream, and then reconnect. This helps optimize the network load.
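One way to express this is to derive the capture constraints from each participant's role. A sketch with hypothetical role names: only the speaker sends video, listeners send voice only, and chat-only participants do not capture media at all. When the active speaker changes, the application would capture again with the new constraints and renegotiate the connection.

```javascript
// Map a (hypothetical) participant role to getUserMedia constraints.
function constraintsForRole(role) {
  switch (role) {
    case "speaker":
      return { audio: true, video: { width: { ideal: 1280 }, height: { ideal: 720 } } };
    case "listener":
      return { audio: true, video: false }; // voice only, no camera stream
    case "chat":
      return null; // text only: don't call getUserMedia at all
    default:
      throw new Error(`unknown role: ${role}`);
  }
}
```

On the receiving side, a listener that sends no video can still receive the speaker's video, for example by adding a receive-only transceiver with `pc.addTransceiver("video", { direction: "recvonly" })`.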

It is important to take the software limitations into account: a single WebRTC instance can connect up to 256 peers. This means we cannot scale simply by using one huge, expensive Amazon instance; sooner or later we will have to connect several servers to each other.

createOffer API

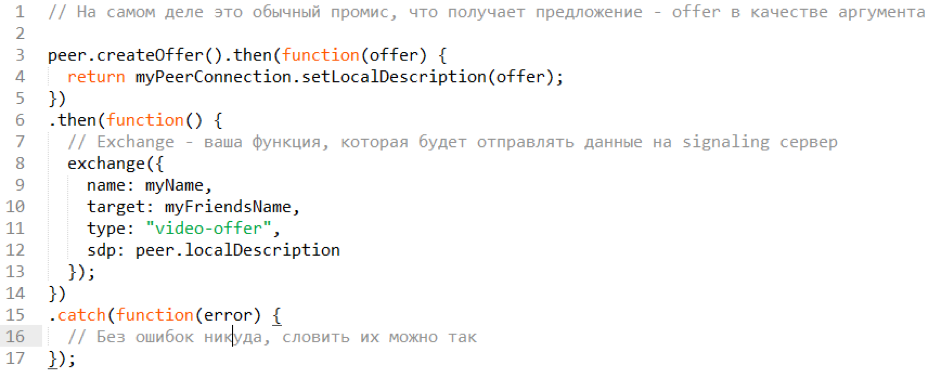

How does all this work? The createOffer() method creates the SDP data that we will send.

createOffer() returns a promise that resolves with the offer; we set it as the connection's localDescription and later use it as the SDP field of our message. sendToServer is an abstract function that sends a request to the server containing the name of our machine (name), the name of the target machine (target), the message type (offer) and the SDP.
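The flow described above might look like the following minimal sketch. `sendToServer` is the abstract signaling function from the text, passed in as a parameter, and the `video-offer` message type is an assumption.

```javascript
// Create an SDP offer and send it through the signaling server.
async function sendOffer(pc, name, target, sendToServer) {
  const offer = await pc.createOffer(); // promise resolving with the SDP offer
  await pc.setLocalDescription(offer); // now available as pc.localDescription
  sendToServer({
    name, // our machine
    target, // the machine we are calling
    type: "video-offer", // assumed message type
    sdp: pc.localDescription,
  });
}
```

In the browser this is usually triggered by the connection itself, e.g. `pc.addEventListener("negotiationneeded", () => sendOffer(pc, myName, peerName, sendToServer))`, where `myName` and `peerName` are hypothetical variables.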

A few words about the server: WebSocket is very convenient for this purpose. As soon as someone connects, we can emit an event and add a listener for it. It is also easy to emit our own events from the client.
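On the client, a thin wrapper can turn a WebSocket into this emit/listen style. The sketch assumes a hypothetical message format (JSON objects whose `type` field names the event) and works with a browser `WebSocket` or any object exposing `send` and `addEventListener`.

```javascript
// Event-style wrapper over a WebSocket-like object.
function createSignalingClient(socket) {
  const handlers = new Map(); // event type -> array of callbacks

  socket.addEventListener("message", (event) => {
    const message = JSON.parse(event.data);
    for (const handler of handlers.get(message.type) ?? []) handler(message);
  });

  return {
    on(type, handler) {
      if (!handlers.has(type)) handlers.set(type, []);
      handlers.get(type).push(handler);
    },
    emit(type, payload = {}) {
      socket.send(JSON.stringify({ ...payload, type }));
    },
  };
}
```

Usage (the URL is a placeholder): `const signaling = createSignalingClient(new WebSocket("wss://signal.example.com"));`. Note that a browser WebSocket accepts `send()` only after its `open` event has fired.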

getUserMedia API in action

In real life, everything can be done a little more simply, starting with the getUserMedia API. Here is what call initialization looks like.

Here we want to transmit both video and sound. As a result, we get a promise that resolves with a MediaStream object (the data stream).
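A sketch of call initialization, with `mediaDevices` (normally `navigator.mediaDevices`), the peer connection and the local preview video element passed in. It uses `addTrack()`, the current replacement for the deprecated `addStream()`; adding tracks fires the connection's `negotiationneeded` event, which is where the offer would be created. The `startCall` name is hypothetical.

```javascript
// Capture camera and microphone, show a local preview, attach tracks to the connection.
async function startCall(mediaDevices, pc, localVideo) {
  // Request both video and sound; resolves with a MediaStream.
  const stream = await mediaDevices.getUserMedia({ audio: true, video: true });
  localVideo.srcObject = stream;
  localVideo.muted = true; // don't play our own microphone back
  // addTrack() fires "negotiationneeded", where the offer is created.
  for (const track of stream.getTracks()) {
    pc.addTrack(track, stream);
  }
  return stream;
}
```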

And this is how we answer the call.

When someone wants to answer a call, they receive our offer (getRemoteOffer is an abstract function that fetches our data from the server). Then getUserMedia starts the local stream, and the addStream method and onaddstream handler attach the streams to the connection. With setRemoteDescription we set the received offer as the remote description of our RTCPeerConnection and then create the answer. The "send the answer ..." step simply stands for transferring the answer to the server, after which the browsers connect and the broadcast starts.
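The answering side can be sketched the same way. `getRemoteOffer` and `sendToServer` are the abstract functions from the text; the message shape (`name`, `target`, `type`, `sdp`) and the `video-answer` type are assumptions. Setting the remote description before adding local tracks lets the tracks reuse the transceivers created from the offer.

```javascript
// Answer an incoming call. getRemoteOffer() resolves with the offer message;
// sendToServer(message) delivers our reply through signaling.
async function answerCall(pc, mediaDevices, getRemoteOffer, sendToServer) {
  const offer = await getRemoteOffer();
  // Apply the caller's SDP first, so the local tracks reuse its transceivers.
  await pc.setRemoteDescription(offer.sdp);

  const stream = await mediaDevices.getUserMedia({ audio: true, video: true });
  for (const track of stream.getTracks()) {
    pc.addTrack(track, stream);
  }

  const answer = await pc.createAnswer();
  await pc.setLocalDescription(answer);
  sendToServer({
    name: offer.target, // we were the offer's target
    target: offer.name, // reply to the caller
    type: "video-answer", // assumed message type
    sdp: pc.localDescription,
  });
  return stream;
}
```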

Client-server architecture for WebRTC

How does all this look if we scale through a server? It is an ordinary client-server architecture.

Here, the broadcaster has a WebSocket connection and an RTCPeerConnection to the web server that relays the stream. The other participants set up RTCPeerConnections with that server. They can obtain information about the broadcaster by polling, which saves on the number of WebSocket connections.

There are not very many ways to scale such an architecture, and they are all similar. We can:

- Increase the number of servers.

- Place the servers in the areas with the largest concentration of the target audience.

- Provide spare capacity: the technology is still actively evolving, so malfunctions are possible.

Increase fault tolerance

First, to improve fault tolerance, it makes sense to separate the signaling server from the relay server. A WebSocket server can distribute network information about the clients, while the relay server communicates with the WebSocket server and passes the media stream through itself.

Next, we can make it possible to switch quickly from one broadcasting server to another if the primary one fails.

As a last resort, users can establish direct connections with each other if the broadcasting servers fail, but this only works when the conference is small (fewer than 10 people).
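This failover strategy can be sketched as follows: try the broadcasting servers in priority order and, only for small conferences, fall back to direct connections. `connectToRelay`, `connectDirectly` and the server URLs are hypothetical; the threshold of 10 participants comes from the text.

```javascript
// relayUrls: broadcasting servers in priority order (hypothetical URLs).
// connectToRelay(url) and connectDirectly() are app-supplied and return promises.
async function connectWithFailover(relayUrls, connectToRelay, participantCount, connectDirectly) {
  for (const url of relayUrls) {
    try {
      return { mode: "relay", url, connection: await connectToRelay(url) };
    } catch {
      // This server is down or unreachable: try the next one.
    }
  }
  // Last resort: direct P2P connections, only viable for small conferences.
  if (participantCount < 10) {
    return { mode: "p2p", connection: await connectDirectly() };
  }
  throw new Error("all relay servers failed and the conference is too large for P2P");
}
```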

Display Media Stream

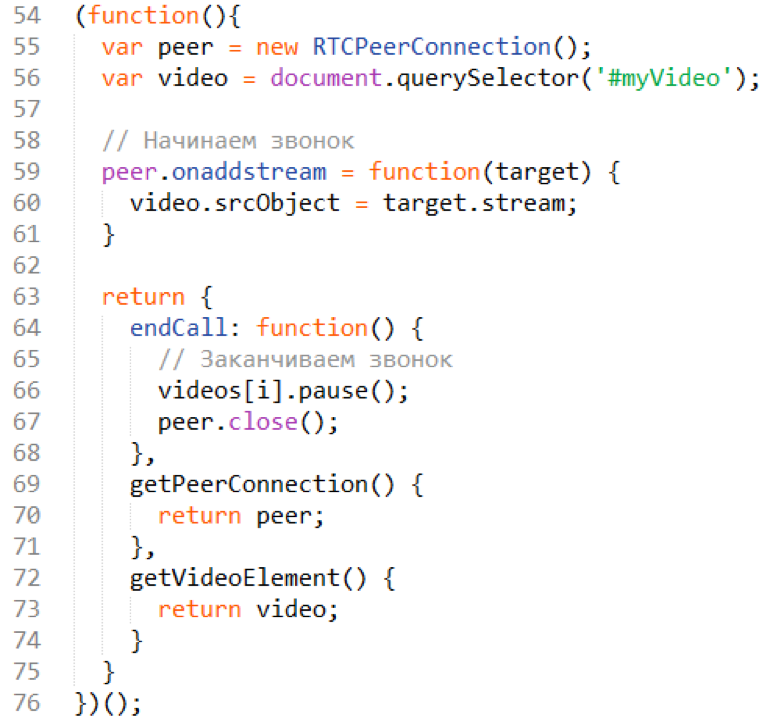

Here is an example of how we can display the stream exchanged between clients.

onaddstream: we add a listener for this event. When a stream arrives, we create a video element, add it to the page and set the stream as its source. In other words, to see the stream in the browser, simply use the received stream as the source of an HTML5 video element. It is all very simple.

When the call ends (the endCall method), we go through the video elements, stop their streams and close the peer connection so that no artifacts or frozen frames remain. If an error occurs, we end the call in the same way.
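Both steps can be sketched with the current `track` event, which replaces the deprecated `onaddstream`. The document, container element and peer connection are passed in; `showRemoteStreams` and the `Map` of video elements are hypothetical names. Because `track` fires once per track (audio and video), the sketch creates one video element per stream.

```javascript
// Create one video element per remote stream; returns a Map of stream id -> element.
function showRemoteStreams(pc, doc, container) {
  const videos = new Map();
  pc.addEventListener("track", (event) => {
    const [stream] = event.streams;
    if (!stream || videos.has(stream.id)) return; // audio and video tracks share one stream
    const video = doc.createElement("video");
    video.autoplay = true;
    video.playsInline = true;
    video.srcObject = stream; // the received stream is the video's source
    container.appendChild(video);
    videos.set(stream.id, video);
  });
  return videos;
}

// End the call: stop every stream, remove the video elements, close the connection.
function endCall(pc, videos) {
  for (const video of videos.values()) {
    if (video.srcObject) {
      video.srcObject.getTracks().forEach((track) => track.stop());
    }
    video.srcObject = null;
    video.remove();
  }
  videos.clear();
  pc.close();
}
```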

Useful Libraries

Finally, some libraries that you can use to work with WebRTC. They allow you to write a simple client in a few dozen lines:

- simple-peer: I really like this library, which solves the problem of creating a connection between two users.

- EasyRTC: allows you to create video conferences.

- SimpleWebRTC is a similar library.

- js-platform/p2p.

- node-webrtc: lets you implement the server side (a WebRTC peer) in Node.js.

Source: https://habr.com/ru/post/282612/
