How GearVR differs from a cardboard box, or the pursuit of latency

Disclaimer: this post is based on fairly heavily edited chat logs from closedcircles.com, hence the style of presentation and the presence of clarifying questions.

The main term you need to know in VR is motion-to-photon latency.

In other words, it is the delay between a head turn and the moment the last photon of the image drawn for the new head position leaves the screen.

It has been established empirically that a motion-to-photon latency of 20 ms or less makes it possible to achieve presence, i.e. the feeling that you are turning your head inside the virtual world.

Whether values below 20 ms matter is not clear, but in general the goal is to reach 20.

GearVR gets there by hook or by crook, and I will tell you how.

I apologize in advance for freely mixing English terms with transliterated and translated ones.

GearVR, in case someone does not know, is a headset into which a phone is inserted. There is no screen in the headset itself, only lenses and an IMU.

The IMU is a standard gyroscope + accelerometer. Whether GearVR has a magnetometer, I don't know for sure, it seems not - but in any case the magnetometer is not very important in practice.

There are standard ways to get device orientation from a gyro and an accelerometer; I will not dwell on them.

Important point: GearVR has its own IMU, which delivers new orientation data at 1000 Hz with minimal latency (most likely about 1 ms).

This is many times better than the sensors built into phones (the gyro in the Samsung Note 5 runs at 200 Hz with 10-15 ms latency).

And how is it powered? From the phone?

As far as I understand, it is powered from the phone via USB - there is almost no hardware inside, so the current draw is probably minimal.

So the basic setup is this: we have IMU data, we read it at the beginning of the frame, and we need to render two images (a stereo pair). There is no positional tracking in GearVR - we only know the orientation of the head.

From the head orientation we derive approximate camera data for the two eyes: roughly speaking, if we turn our head to the right, the eye positions also shift to the right, since the head rotates around a joint in the neck and it is known how far the eyes are offset from this pivot point.

We also know the approximate (average) distance between the user's eyes, so simple geometry gives us two cameras, and we draw two pictures.
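The geometry above can be sketched in a few lines of Python. This is only an illustration: the head model constants (`IPD`, `EYE_HEIGHT`, `EYE_DEPTH`) are made-up plausible values rather than anything from the GearVR SDK, and only yaw is handled to keep it short.

```python
import math

# Hypothetical head model in metres; illustrative values, not SDK constants.
IPD = 0.064         # average distance between the eyes
EYE_HEIGHT = 0.075  # eyes above the neck pivot
EYE_DEPTH = 0.080   # eyes in front of the neck pivot


def rotate_yaw(v, yaw):
    """Rotate v = (x, y, z) around the vertical Y axis by yaw radians.

    Assumed convention: -Z is forward, +X is right, positive yaw turns right.
    """
    x, y, z = v
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * x - s * z, y, s * x + c * z)


def eye_positions(yaw):
    """Return (left_eye, right_eye) positions relative to the neck pivot."""
    left = rotate_yaw((-IPD / 2, EYE_HEIGHT, -EYE_DEPTH), yaw)
    right = rotate_yaw((IPD / 2, EYE_HEIGHT, -EYE_DEPTH), yaw)
    return left, right


# Turning the head 90 degrees to the right moves both eyes to the right.
left_eye, right_eye = eye_positions(math.pi / 2)
```

Each eye camera then uses the head orientation for its rotation and the corresponding position for its translation.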

As already mentioned, there are two lenses in GearVR - one for each eye. Lenses are needed for two reasons:

- Increasing the FOV. If you measure the FOV of a screen strapped to your face, the value turns out to be too low: you want 90+ degrees for each eye while keeping the screen reasonably small.

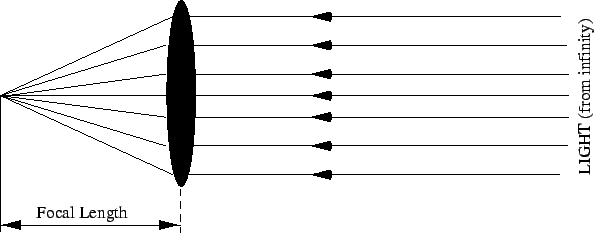

- Changing the optical focal length.

Without lenses, different pixels would sit at noticeably different focal distances, because the screen is very close and the distances from the eye to different pixels differ significantly.

The lens "straightens" the rays reaching the eye, so you get the impression of optical focus at infinity.

In practice, a lens with such parameters has quite significant distortion: if you simply render the picture for both eyes, objects will look noticeably distorted, and the farther from the center of the lens (eye), the more so.

Therefore, we take the picture produced by the standard 3D renderer and pre-distort it when putting it on the screen, so that after the lens distorts it, it looks roughly like the original.

For this, a distortion mesh is usually drawn.

Plus, if you have a lot of spare GPU time, you can try to correct chromatic aberration (which also results from the properties of the lens) in the same pass.

In practice, on GearVR this correction is disabled by default: to compensate for the aberration you need 3 texture samples in the shader instead of 1, and this is mobile hardware.
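As a rough illustration of what the distortion mesh encodes, here is a sketch of a radial polynomial applied to the texture coordinates of the mesh vertices. The coefficients `K1`, `K2` and the per-channel scales are hypothetical; real values come from calibrating the specific lens, and I am not claiming this is the exact formula the SDK uses.

```python
# Hypothetical lens coefficients; real values come from lens calibration.
K1, K2 = 0.22, 0.24

# Hypothetical per-channel scales for chromatic aberration correction.
CHANNEL_SCALE = {"r": 0.994, "g": 1.0, "b": 1.006}


def distort(u, v, scale=1.0):
    """Map a lens-centred mesh coordinate (u, v) to the coordinate at which
    the rendered eye texture is sampled.

    The sampling point moves farther from the centre as the radius grows,
    which squeezes the displayed picture toward the centre.
    """
    r2 = u * u + v * v
    factor = scale * (1.0 + K1 * r2 + K2 * r2 * r2)
    return u * factor, v * factor


def distort_rgb(u, v):
    """Chromatic aberration variant: one texture coordinate per colour
    channel, hence three texture samples instead of one."""
    return {ch: distort(u, v, s) for ch, s in CHANNEL_SCALE.items()}
```

Because the function is evaluated only at mesh vertices and interpolated in between, the per-pixel cost is just the texture lookups, which is why the three-sample variant is the expensive part.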

So, the introduction is over, let's deal with latency :)

With the scheme described so far there will be no 20 ms, and in general everything will be very bad.

Because...

- At the beginning of the frame we read the IMU data, with, say, 1 ms latency;

- Then we build the frame matrices and render the scene - this is generally a long process, especially considering the quality of OpenGL drivers on Android (say another 16 ms, if we render at 60 FPS);

- Then we hand the frame to the GPU - it draws the frame long and hard, and then applies distortion to get the final picture (another 16 ms);

- Then the display scan-out starts!

The display is of course not a CRT, but the concept of a "beam" reading the data remains: the display controller reads the frame buffer scanline by scanline and lights up the pixels.

And reading out the entire display takes 16 ms.

Scanlines on a phone usually run along the short side: when you use VR, the phone is in landscape mode, so the scanlines are vertical stripes scanned from left to right (or right to left if the phone is turned upside down...)

Accordingly, the leftmost scanline (in the left eye) is updated 16 ms earlier than the rightmost (in the right eye).

- And finally, one more joy:

The mechanism described is standard double buffering...

A valiant operating system actually does triple buffering.

That is, when the GPU finishes a picture, it goes not to the display but to the compositor, which may combine it with other windows and so on.

And that adds another frame (16 ms) of delay.

In short, the result is something like 65 ms of latency.

Which is unimaginably more than 20.
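The arithmetic behind that number, as a small Python sketch. The stage names are my own labels for the steps listed above, and the frame time is rounded to 16 ms as in the rest of the post.

```python
FRAME_MS = 16.0  # one frame at 60 Hz, rounded as in the text

# Worst-case contribution of each stage of the naive pipeline.
stages_ms = {
    "IMU read": 1.0,
    "CPU: build matrices, issue rendering": FRAME_MS,
    "GPU: draw frame + distortion": FRAME_MS,
    "compositor (the extra buffer)": FRAME_MS,
    "scan-out to the last scanline": FRAME_MS,
}

total_ms = sum(stages_ms.values())
print(f"naive motion-to-photon latency: about {total_ms:.0f} ms")
```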

Plus there is another problem ...

Usually the display works in full persistence mode: a scanline lights up with its new colors and then stays lit for practically the entire frame, i.e. 16 ms.

In 16 ms you can turn your head quite far, and as a result this scanline is registered by the eye at different points of the retina with the same color.

And you get smearing when turning the head.

So if all of this is not defeated, GearVR games would look like Google Cardboard.

We must win.

The key technology is what Oculus calls Time Warp.

It's also called reprojection, and probably something else, but I like the word timewarp.

The idea is as follows: take a picture rendered by camera C, then take camera C', which is camera C rotated (but not displaced) by a not very large angle (units or tens of degrees), and render a picture from it...

It turns out that the second picture can be obtained from the first by a simple 2D transformation.

Why is this so? Informally: in a picture rendered from a camera, each pixel corresponds to a ray from the camera to the point visible in that pixel, so when you rotate the camera, the intersection of the two frustums contains rays that correspond to pixels of the same color.

If you freeze the matrix and turn your head left and right, then at small rotation angles it is not noticeable that the matrix is frozen.

This is actually the main test that time warp is working correctly

(freeze your render matrix and check that you can still turn your head by plus or minus 5 degrees).

More than 5 degrees also works, but then there is a lot of information that has nowhere to come from, so most of the screen turns black.

Actually, "shake the device" is a standard stress test for headsets that have positional tracking: keep the head still and move the headset on your head left and right at high frequency with your hands, and the picture must stay stable. For this you really do need sub-20 ms (and high-quality tracking).

Listen, if on Gear VR I render with a fixed matrix, turn on reprojection, and then start shaking the device, will the picture shake?

Mmm, shaking is a bad test here: there is no positional tracking, so the result will be hard to interpret.

Well, if there is no positional tracking, then shaking will just change the angle, even if only slightly.

Well, yes, but in the real world the position will change, and the headset knows nothing about position, i.e. you will not get a stable picture.

You can try turning your head left and right at high frequency, if your neck does not fall off :)

Timewarp is simple to implement: we already draw a distortion mesh anyway.

Therefore, you can simply "rotate" the texture coordinates in the vertex shader (in 3D space).

The pixel shader does not change, so timewarp is practically free.

How much to rotate?

By the difference between the current head orientation and the head orientation with which the original picture was drawn.

The greater the rotation, the more empty space there is; you can fill it with black

(or you can use clamp addressing: at small rotation angles it looks a little better than black, but at large ones it looks strange).
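Here is a sketch of what "rotating the texture coordinates" means, written as plain Python instead of a vertex shader. It handles yaw only, assumes a symmetric frustum described by `tan_half_fov`, and the function names are mine; the real implementation works with full rotation matrices.

```python
import math


def rotate_yaw(v, yaw):
    """Rotate v = (x, y, z) around the vertical Y axis by yaw radians
    (-Z is forward, +X is right, positive yaw turns right)."""
    x, y, z = v
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * x - s * z, y, s * x + c * z)


def timewarp_uv(tan_x, tan_y, render_yaw, current_yaw, tan_half_fov=1.0):
    """Return the (u, v) in [0, 1] at which to sample the already rendered
    image for a mesh vertex whose view ray is (tan_x, tan_y, -1).

    Returns None when the ray falls outside the rendered image, i.e. the
    area that has to be filled with black.
    """
    # Express the current view ray in the frame the image was rendered in.
    x, y, z = rotate_yaw((tan_x, tan_y, -1.0), current_yaw - render_yaw)
    if z >= 0.0:
        return None
    px, py = x / -z, y / -z
    if abs(px) > tan_half_fov or abs(py) > tan_half_fov:
        return None
    return (0.5 + 0.5 * px / tan_half_fov, 0.5 + 0.5 * py / tan_half_fov)


# Head turned 5 degrees to the right since the frame was rendered:
centre = timewarp_uv(0.0, 0.0, 0.0, math.radians(5))  # samples right of centre
edge = timewarp_uv(1.0, 0.0, 0.0, math.radians(5))    # None: nothing rendered there
```

Replacing the `None` result with a coordinate clamped to the image border would give the clamp addressing variant mentioned above.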

Now that we have a mechanism for "rotating" the image, let's see how it can be used to reduce latency.

First, let's deal with full persistence: technically it does not affect motion-to-photon latency, but it still needs to be removed.

For the phones that GearVR supports, a special display driver was written that enables low persistence mode:

Each scanline lights up with the required colors as before, and then almost immediately (I don't know exactly how long, but let's say 3-4 ms) goes dark (turns black).

It turns out that, thanks to the peculiarities of perception, a fairly large share of people do not notice the "blinking".

The flicker perception threshold is said to vary from around 50 Hz to 85 Hz, which is one of the main reasons why desktop VR headsets (Rift / Vive) run at 90 Hz.

Plus, low persistence in a headset is perceived better than a flashing 60 Hz panel in the real world, because everything you see is blinking, and the brain adapts...

Reference links and videos:

https://www.youtube.com/watch?v=_FlV6pgwlrk

https://en.wikipedia.org/wiki/Persistence_of_vision

https://en.wikipedia.org/wiki/Flicker_fusion_threshold

Aah, so it is perceived better because we have already "removed" the image (the screen has gone dark)? And the problem arises when it stays lit for all 16 ms while the head moves?

Yes sir.

There is a thing called the vestibulo-ocular reflex (VOR):

When you turn your head, your eye automatically turns in the opposite direction.

Therefore, while turning your head you can keep your eyes fixed on an object and, for example, read without problems as long as the turns are not too sharp.

And that means that if your pixels stay lit for a long time, a single pixel is projected onto the retina as a streak because of the eye rotation, and you get smearing / ghosting.

And if the pixel turns off almost immediately, smearing does not appear, and due to some features of vision high-frequency blinking is not perceived as blinking - I do not know exactly why.
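To get a feel for the size of the effect, here is a back-of-the-envelope estimate in Python. The head speed and the pixel density (1280 pixels per eye spread over about 90 degrees) are assumptions picked for illustration, not measured values.

```python
def smear_pixels(head_speed_deg_s, persistence_ms, pixels_per_degree):
    """Width of the streak a lit pixel leaves on the retina while the eye
    counter-rotates against the head turn."""
    smear_deg = head_speed_deg_s * persistence_ms / 1000.0
    return smear_deg * pixels_per_degree


# Assumption: 1280 px per eye spread over about 90 degrees.
PIXELS_PER_DEGREE = 1280 / 90

full = smear_pixels(100, 16, PIXELS_PER_DEGREE)  # full persistence, 16 ms
low = smear_pixels(100, 3, PIXELS_PER_DEGREE)    # low persistence, about 3 ms
```

With these numbers a moderate 100 degrees per second head turn smears each pixel over roughly 23 pixels in full persistence mode and about 4 pixels in low persistence mode.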

VOR is generally a cool thing: the vestibular apparatus is wired directly to the eye muscles.

Practically no brain involvement is required, and the latency is ultra low :)

Now when we move our head, we no longer have smearing, but still have 65 ms latency ...

Let's now see how the render actually works in GearVR.

What I wrote above still holds: at the beginning of the render frame we read data from the IMU, then generate OpenGL commands and hand them to the GPU, which draws the frame.

This is the game thread and the game GL context.

There is another thread, created by the GearVR SDK, and it has its own GL context, which runs in high priority mode

(on newer versions of Android, such a context can be created using an EGL extension).

High priority mode means that commands submitted to the GPU in this context are executed by the GPU almost immediately.

I don't know the details of GPU scheduling on the specific GPUs that GearVR supports. I assume that the preemption granularity is a draw call, that is, if you do not have huge 10 ms draw calls, the GPU can finish the current one within a millisecond or two and start the next one.

On NVidia desktop GPUs this granularity is usually a draw call, and on AMD, if I'm not mistaken, one wave (for compute). Desktop vendors are working on improving this, since the feature is also important for desktop VR...

The second feature of this VR context is that it draws directly into the front buffer, the very buffer from which scan out happens, thus skipping all the compositors and other nonsense.

As we have already figured out, scan out takes 16 ms and follows the scanlines one after another in a strict order.

Scanlines are vertical stripes that go from left to right (in landscape mode), so half of the stripes contain a picture from the left eye (scanned for 8 ms), and the second half of the stripes contains a picture from the right eye (scanned for 8 ms).

So you can try to draw in the right eye while the display scans the left and vice versa.

I will note just in case: this does not mean that we draw the game's triangles straight into the front buffer!

That would require very precise timing and firm guarantees, so the game draws its frame whenever it manages to, and the VR context corrects it using time warp.

So, this VR thread wakes up twice per frame: when the vsync interval starts, and 8 ms after that (in the middle of scan out).

It reads the latest IMU data (with 1 ms latency) and then generates two corrective rotation matrices.

Why two?

Due to scan out, the leftmost and the rightmost scanlines of the same eye are separated by 8 ms in time (in terms of photon emission time), so ideally they should be corrected differently.

So we read the IMU data (latency 1 ms) and generate two head orientations, using the gyroscope data to predict ahead.

We predict the first one 8 ms ahead and the second 16 ms ahead (it will become clear why in a moment).

Then we submit a draw call to the high priority context with the half of the distortion mesh for the required eye, and hope that the GPU picks it up and draws it quickly.

8 ms after we generated the orientations, scan out reaches the part of the screen where we have just drawn and starts displaying it.

As a result, full latency to the photon emission of the leftmost scanline of each eye is approximately 9 ms, and the rightmost one is approximately 17 ms.
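The prediction step can be sketched as simple extrapolation, assuming the angular velocity reported by the gyroscope stays constant over the prediction interval. This is a one-axis illustration with names of my own choosing; the real code works with full 3D orientations.

```python
def predict_yaw(yaw_deg, gyro_yaw_rate_deg_s, ahead_ms):
    """Extrapolate the orientation, assuming the angular velocity from the
    gyroscope stays constant for the next ahead_ms milliseconds."""
    return yaw_deg + gyro_yaw_rate_deg_s * ahead_ms / 1000.0


def warp_orientations(yaw_deg, gyro_yaw_rate_deg_s):
    """Two predicted orientations for one eye: for the moment scan-out reaches
    its first scanline (8 ms ahead) and its last scanline (16 ms ahead)."""
    return (predict_yaw(yaw_deg, gyro_yaw_rate_deg_s, 8),
            predict_yaw(yaw_deg, gyro_yaw_rate_deg_s, 16))


# Head at 10 degrees, turning right at 100 degrees per second.
first_line, last_line = warp_orientations(10.0, 100.0)
```

The distortion mesh can then blend between the two corrective rotations across the width of the eye, so that each scanline gets a correction close to the moment it actually lights up.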

(those who wrote race-the-beam rasterizers 30 years ago on Amiga - shed a tear)

Actually, if you cut the distortion mesh into vertical strips and had tighter timing guarantees (as I understand it, this is impossible on current GPUs), you could reduce motion-to-photon latency to <10 ms.

As you can see, half (8 ms) of the current latency is a kind of safety buffer for getting the draw call submitted to the GPU and executed, and the other half is the worst-case scan-out latency for a scanline.

If you cut into strips and have tighter timing, you can try to reduce it.

Or integrate time warp directly into the scan-out controller: the warp operation is just bilinear texture sampling, nothing very difficult.

But by and large, the current latency is good enough. If you increase the display frequency to 90 Hz in order to remove blinking problems for more sensitive people, you will get about 10 ms on the same technology right away.
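A small sketch of how these numbers scale with the refresh rate, under the same scheme: 1 ms of sensor latency, half a frame of safety buffer before scan-out reaches the eye, and up to another half frame to scan across it. The function name is mine.

```python
IMU_LATENCY_MS = 1.0  # sensor latency quoted in the text


def scanline_latency_ms(refresh_hz):
    """(best, worst) motion-to-photon latency for one eye: the first scanline
    is shown half a frame after the IMU read, the last one a full frame after."""
    frame_ms = 1000.0 / refresh_hz
    half_ms = frame_ms / 2
    return IMU_LATENCY_MS + half_ms, IMU_LATENCY_MS + frame_ms


best_60, worst_60 = scanline_latency_ms(60)  # roughly 9 and 18 ms
best_90, worst_90 = scanline_latency_ms(90)  # roughly 7 and 12 ms
```

At 90 Hz the range is roughly 7 to 12 ms, which is where the "about 10 ms" comes from.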

Yes, 8 ms is basically an arbitrary buffer.

Theoretically it could be less

Theoretically it can be less; in practice, noticeably less probably becomes dangerous.

If preemption is on a draw call basis, then this should also be taken into account

Especially since in mobile VR there is a tendency to cram the whole scene into 1-2 draw calls

Because OpenGL is slow, Unity is slow, and so forth.

So, we woke up right after vsync - at that time scan out began to process the left eye - and drew the right eye in the front buffer.

Then we fell asleep until the middle of the vsync interval, woke up - at that time scan out began to process the right eye - and drew the left eye in the front buffer.

Ad infinitum.
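The schedule of this loop can be written down as two tiny functions. This is only a model of the timing, with the frame rounded to 16 ms as in the text and names of my own choosing; the real thread sleeps on vsync timestamps provided by the display driver.

```python
FRAME_MS = 16.0
HALF_MS = FRAME_MS / 2


def eye_to_draw(ms_since_vsync):
    """Which eye's half of the front buffer is safe to overwrite right now."""
    phase = ms_since_vsync % FRAME_MS
    # First half of the frame: scan-out is inside the left eye, so we draw
    # the right eye; in the second half the roles are swapped.
    return "right" if phase < HALF_MS else "left"


def next_wakeup(ms_since_vsync):
    """Time of the next wake-up: at every vsync and every mid-frame point."""
    return (ms_since_vsync // HALF_MS + 1) * HALF_MS
```

For example, `eye_to_draw(0)` is the right eye, `eye_to_draw(8)` is the left eye, and a thread that wakes at 9 ms sleeps until `next_wakeup(9)`, i.e. the next vsync at 16 ms.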

Note that time warp has a funny problem...

It can only rotate the picture.

It cannot shift it: with translation, disocclusion problems begin, a depth buffer is needed, more resources and so on.

But in fact, there are two eyes, and when you turn your head, your eyes shift and turn at the same time.

Therefore, the larger the timewarp correction, the less correct the stereo picture is and the harder it is to focus.

In practice, a time warp over 20-30 ms does not cause visible problems, except at high turning speeds, where, I guess, convergence stops working.

A couple more things that GearVR can do and that are impossible on stock Android...

First, you can enable realtime priority for your render thread (and it is enabled for the VR thread as well)

So that garbage collection threads and the like do not get in the way too much.

Second, you can (more precisely, you must) enable fixed clock frequencies.

By default, the frequency of the CPU and GPU varies depending on the load and other factors.

In practice, on Android these mechanisms behave very strangely and can lead to a workload that should fit into 16 ms per frame not fitting for several seconds in a row.

Therefore, the SDK disables dynamic scaling and lets the application choose fixed frequency levels for the CPU and GPU separately.

Here, let me clarify: this whole cycle is for that special time warp thread

And the application's actual rendering runs in parallel with it.

Yes, I wrote that somewhere above. The render thread interacts with the time warp thread through a function that means "I have finished submitting GPU commands for the next frame, here, take it."

Otherwise these are two independent threads and two effectively independent contexts (well, fences are probably inserted into the first context to avoid synchronization problems).
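The interaction between the two threads can be pictured as a one-slot mailbox. The class below is a hypothetical illustration in Python, not the SDK's API: the names are invented, and the GPU fences that make sure the frame has actually finished rendering are left out.

```python
import threading


class FrameMailbox:
    """Hypothetical hand-off point between the render thread and the
    time warp thread (GPU synchronization is deliberately omitted)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._latest = None  # (eye_textures, render_orientation)

    def submit(self, eye_textures, render_orientation):
        """Render thread: "I finished submitting the frame, take it"."""
        with self._lock:
            self._latest = (eye_textures, render_orientation)

    def latest(self):
        """Time warp thread: the newest submitted frame, or None before the
        first one. If the game missed its deadline, the same frame comes
        back again and is simply warped with a newer orientation."""
        with self._lock:
            return self._latest
```

The orientation stored with each frame is what time warp needs in order to compute the corrective rotation at display time.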

And the application can still spend 16 ms on the CPU in the driver and 16 ms on drawing?

In principle, it can. Even 40 ms on the CPU. :)

And to what numbers does it need to be reduced so that time warp can handle the rest?

It's hard to say for sure. It depends heavily on the content. In general, Oculus recommends holding a stable 60 FPS (16 ms CPU / GPU) and relying on time warp to remove those extra 20-30 ms of latency and to smooth out sudden frame spikes.

But if your content is more or less static (imagine an adventure game, for example), the SDK has a mode in which it expects a frame from you every 33 ms rather than every 16.

And time warp works almost perfectly (almost, because of what was said above about the eyes shifting when the head turns).

60 FPS, but 32 msec latency, huh?

20-30; it depends on how exactly the GPU driver works (the GPU is usually tiled), how quickly you can submit all the draw calls for the first frame buffer, etc.

But overall 32.

You will mainly see this in the positions of other objects, if at all.

That is, this is no different from the usual latency questions in regular games (the time from pressing a key to movement, etc.); none of it is as critical as head tracking.

Oh, I forgot!

About Cardboard.

As you can see from the above, GearVR has exactly ONE hardware feature:

Good sensors with low latency and a high update rate.

Everything else is software.

(there are also the lenses, but as far as I understand the GearVR lenses are nothing special; consider them the same as in cardboard devices)

Can you read about this in detail somewhere? About the lenses specifically. I wonder why objects that get very close turn to garbage. The left and right pictures diverge so much that I cannot focus. On a real object I can. It's easy to test on the HTC Vive: bring the controller up to the headset, then take off the headset and look at it in real life.

There is a problem called vergence-accommodation conflict, maybe that is the reason...

Vergence is the phenomenon in which the eyes rotate so as to look at an object:

If the object is far away, the eyes look straight ahead;

If the object is very close, say right in front of the nose, they turn toward the nose.

Accommodation is the focusing itself: the optical properties of the eye change in order to focus (https://en.wikipedia.org/wiki/Accommodation_(eye)).

And so the following thing happens in VR:

Vergence works correctly. Each eye is given its own picture, and if the object is close, the images in the left and right eyes differ: the object is strongly displaced and the eyes rotate as they should.

But accommodation does not work, because there is a single lens that is not adjusted in any way, so all objects are at optical infinity.

Different people resolve this conflict in different ways, and the closer the object, the greater the conflict.
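How quickly the conflict grows with proximity can be estimated from simple geometry. The sketch below assumes an object straight ahead and an average IPD of 64 mm; both are illustrative assumptions.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.064):
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at the given distance."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


far = vergence_angle_deg(10.0)  # well under one degree: eyes nearly parallel
near = vergence_angle_deg(0.1)  # tens of degrees: eyes turned toward the nose
```

The eyes have to converge by tens of degrees for an object 10 cm away, while the lens still tells them to focus at infinity, which matches the observation that very close objects are the hardest to look at.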

Judging by my experiments with the sensors on different phones in terms of latency...

Samsung and Apple phones have noticeably worse tracking, while the Nexus 6 is worse too, but within reason

(if memory serves, 4 ms update interval, 3 ms average latency).

That is, on the Nexus 6 you could do everything I described above and get an experience comparable to GearVR, but in a cardboard box.

The problem is that almost nothing I described above can be done from user mode: you need to patch the kernel and drivers.

So application authors cannot do this, but Google easily can.

I played on Gear VR just yesterday. I don't know about others, but it makes me sick, although I do not notice any lag at a conscious level

Well, the pixels are huge!

The main problem with GearVR in terms of motion sickness is the lack of positional tracking.

When you move your head, the picture does not reflect this movement, which creates a conflict between the vestibular and visual systems.

There were rumors that the direction of future research at Oculus is positional tracking for mobile and AR

Well, I'm not surprised: positional tracking is missing feature #1, #2 and #3 in Gear.

#4 is probably a full RGB display

So far, all successful positional tracking approaches require an external camera.

Or heaps of cameras on the headset as in HoloLens ...

well, I tried both the Rift DK2 and the Gear VR, and both equally seemed like a useless toy

well, I do have a squint, though

I have one too; it does not bother me personally.

This is what I wrote above about convergence

Personally, I do not care what the IPD is in cameras :)

Most of my perception goes through one eye rather than both, depending on which one I focus with.

yeah, or I just see 3D badly

I had the feeling that I was inside a projection sphere

not inside 3d world

By the way, it is possible that for people with a squint positional tracking is more critical: when you have stereo vision, you perceive the distance to objects directly, and when it is not very good, the main visual cue is parallax

And parallax is obtained mainly from the movement of the head

Especially in Gunjack, where the turret is around you

But here I am a little out of my depth, I don't understand very well the significance of various perceptual factors, so I'm not exactly sure.

I generally agree that GearVR didn't really impress me personally, unlike desktop experiences.


Source: https://habr.com/ru/post/282562/
