Silent revolution: replacing RISC machines with x86 architecture for bank processing

- The estimate was 200 million rubles, and it turned into 650 million! Have you lost your minds?

Rumor has it that this is how the project began at the bank's board of directors. The exchange rate difference on one of the server shipments came to 450 million rubles. Naturally, the bank wanted to reduce these costs somehow.

For a long time, x86 was believed to be unsuited "out of the box" to serious computation. And the most serious computation in the world, in terms of load and reliability requirements, is a bank's core processing. There, failing to finish the calculations on time for two or three operating days in a row simply means closing the bank (and trouble for the country's banking system), because the backlog can no longer be cleared.

A couple of years ago, one bank from the TOP-10 planned to purchase P-series machines, which are known for their reliability, scalability and performance. Nobody even thought about x86 until the crisis came. But the crisis did come. One machine at 5-7 million dollars (and more than one or two are needed) is a bit much. So management decided to take a careful look at replacing RISC with x86.

Below is a comparison of two similar (but not identical) configurations: a P-series machine with RISC processors with 4 GHz cores against x86, at a rate of one RISC core per two 2.7 GHz x86 cores. We installed everything in the bank's data center and ran a real database on it, replaying several banking days from the past year (the bank has a specially prepared test environment that fully simulates reality and the full load from transactions, ATMs, requests, etc.). We found that x86 is up to the job and costs several times less.

In the right corner of the ring

RISC machines are valued for doing calculations quickly and reliably. Before the clusters described below appeared, there was simply no alternative for the banking core, because nothing else could scale. In addition, RISC machines do not have the traditional x86 weakness under high load: their performance does not drop under a long, constant load above 70-80%. But such solutions are rare, and the price reflects it. On top of that, banks always buy an extended service contract for parts supply, and over 3 years it costs about as much as the machine itself (30% of the purchase price per year). Another peculiarity is that an upgrade means throwing out the old hardware. For example, a three-year-old P-series machine is often simply written off to test environments, because it is no longer used in production for core systems, so new machines have to be purchased all the time. Naturally, manufacturers push customers toward an "upgrade purchase" in every possible way, and that is how they make their living. A common method is to raise the cost of extended support for machines older than 3 years.
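The support arithmetic above can be sketched in a few lines. This is only an illustration under stated assumptions: the 30% annual rate and the 3-year horizon come from the paragraph above, the 5 million dollar price is the lower bound of the range quoted earlier, and the function names are hypothetical.

```python
def support_cost(purchase_price, annual_rate=0.30, years=3):
    """Total extended-support cost over the period (rate is a share of the purchase price)."""
    return purchase_price * annual_rate * years


def total_outlay(purchase_price, annual_rate=0.30, years=3):
    """Purchase price plus support over the same period."""
    return purchase_price + support_cost(purchase_price, annual_rate, years)


# Assumed example price: lower bound of the 5-7 million dollar range.
price = 5_000_000
support = support_cost(price)
print(f"Support over 3 years: {support:,.0f} USD")
print(f"Support as a share of the purchase price: {support / price:.0%}")
print(f"Total outlay over 3 years: {total_outlay(price):,.0f} USD")
```

At 30% per year, three years of support add up to roughly 90% of the purchase price, which is why the text calls it comparable to the cost of the machine itself.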

Here is a chart of shipments of such machines around the world:

And here is the ratio of purchase cost to operating costs:

In the left corner of the ring

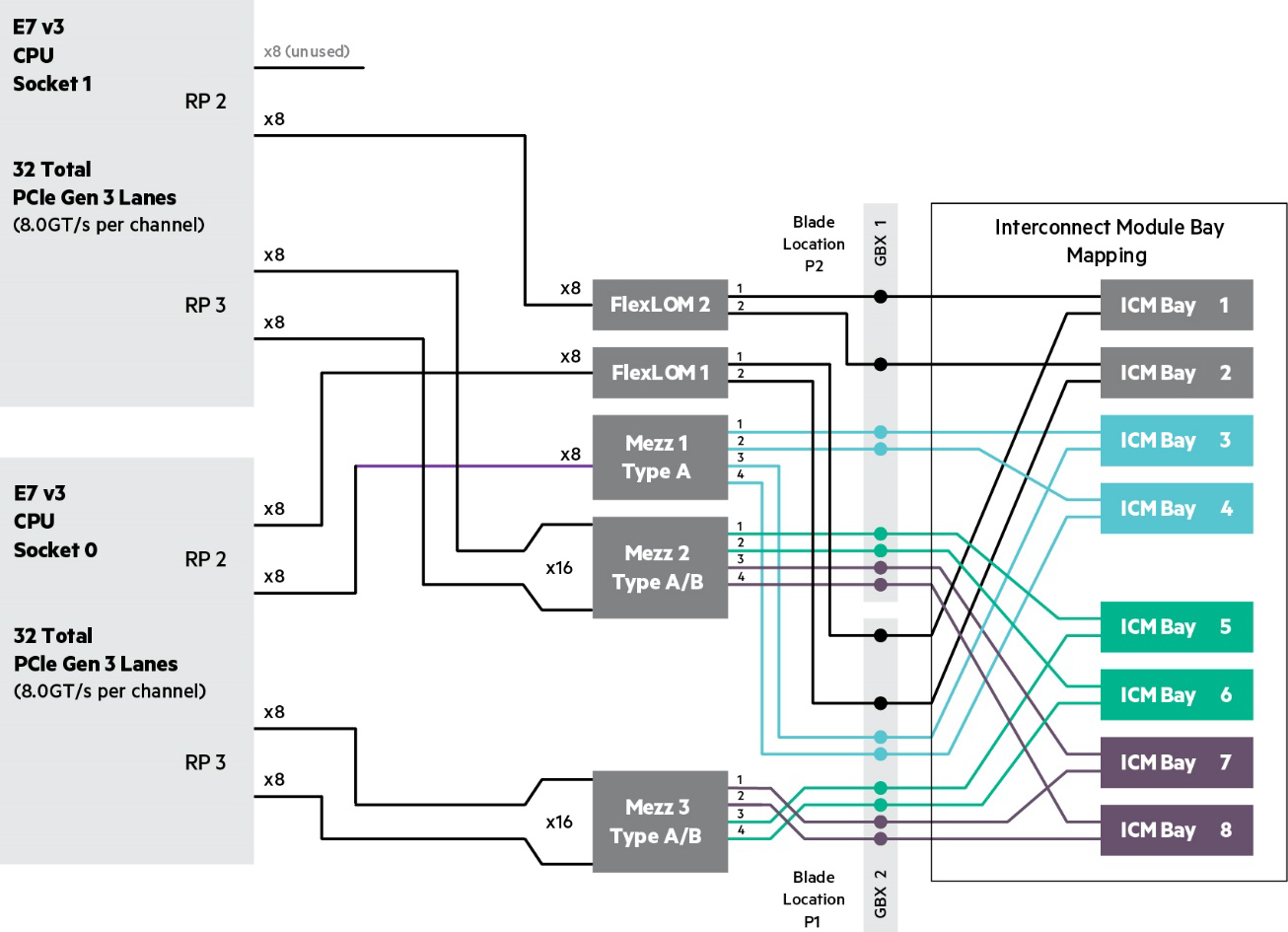

HPE came up with a suitable architecture in Superdome: a classic implementation of ccNUMA built around a "processor bus" system switch, which allows free expansion as cores are added. Before this architecture, x86 clusters quickly hit their scaling limits because of the high cost of moving data between cores.

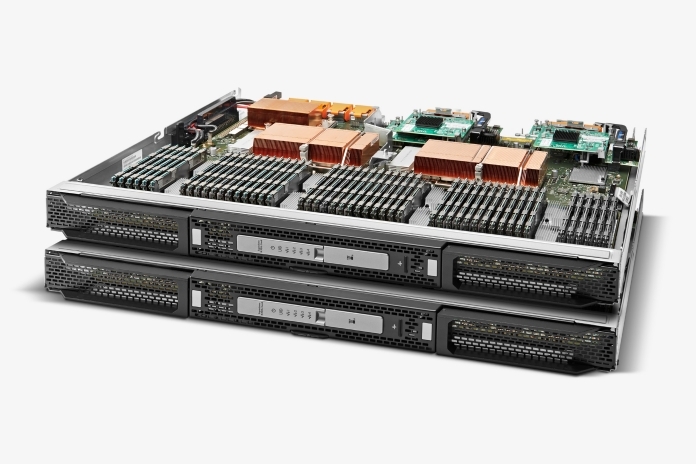

In terms of scalability, these are interconnected x86 blades:

The software stack is Red Hat plus Oracle. The cost is several times lower than for the RISC architecture, since all the parts are mass-produced and widely available on the market. Licenses also come out cheaper. It is worth adding that the service price is significantly more attractive too, since the architecture is much less "shamanic".

A bit of hardcore detail:

BL920s Gen9 Server Blade Memory Subsystem

BL920s Gen9 Server Blade I/O Subsystem

Our test build: Integrity Superdome X, 8 x Intel Xeon E7-2890 v2 (15c / 2.8 GHz / 37.5 MB / 155 W), 2048 GB RAM (64 x 32 GB PC3-14900 DDR3 ECC registered Load Reduced DIMMs), Linux Red Hat 7.1, Oracle 11.2.0.4 with data on Oracle ASM. Ports: 1 GbE: 4 x 1G SFP RJ45; 10 GbE: 4 x 10G SFP+; 16 Gb FC: 8 x 16Gb SFP+. Attached to it is an HDS VSP G1000 storage system: at least 40K IOPS under a write-oriented load, 16 LUNs of 2 TB each, two 8 Gb ports.
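As a quick sanity check, the totals implied by this configuration can be worked out as follows. The component counts are taken from the spec above, and the ratio of two x86 cores per RISC core is the one used in the comparison earlier. The RISC-equivalent figure is only what that ratio implies, not a core count reported for the P-series machine in the test, and all constant names are hypothetical.

```python
# Figures from the test build description.
SOCKETS = 8
CORES_PER_SOCKET = 15
DIMMS = 64
DIMM_GB = 32
LUNS = 16
LUN_TB = 2

# Comparison ratio from the article: one RISC core per two x86 cores.
X86_CORES_PER_RISC_CORE = 2

total_x86_cores = SOCKETS * CORES_PER_SOCKET
total_ram_gb = DIMMS * DIMM_GB
total_lun_capacity_tb = LUNS * LUN_TB

# Implied by the ratio only; an assumption, not a measured or reported value.
implied_risc_cores = total_x86_cores // X86_CORES_PER_RISC_CORE

print("x86 cores:", total_x86_cores)
print("RAM, GB:", total_ram_gb)
print("LUN capacity, TB:", total_lun_capacity_tb)
print("RISC cores implied by the 1:2 ratio:", implied_risc_cores)
```

The RAM total matches the 2048 GB stated in the spec, which is a useful cross-check that the DIMM count and size were read correctly.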

Here is the diagram of the test stand (some of the names are blurred; it is a bank, after all):

Short summary

An x86 cluster can clearly do the same job as "heavy" RISC machines. With some peculiarities, but it can. The gain is an order-of-magnitude reduction in total cost of ownership. That is worth digging into and sorting out.

Yes, moving to x86 means migrating from AIX (a proprietary UNIX-like operating system) to Linux, most likely the Red Hat build, and from one Oracle to another. For a business like retail this would be a real difficulty, but our colleagues at the bank took it all pragmatically and calmly. They explained that working with the core of a bank is a constant migration from one machine and system to another anyway, every year, and the process never stops. So, for the pile of money that x86 will save, they are ready for more than that. They have already migrated from AIX to Linux before, so they have some experience. And some of their inherited subsystems are already 10 years old, which in a bank is a truly petrified legacy that still has to be maintained.

And they maintain it; it is not their first time.

As for our test machine, it is in the wildest demand. From this TOP-10 bank it has already moved to another one, where a similar test program is under way. Next comes one more bank from the top ten, and then a queue of several banks from the top thirty. It is unlikely to be free before next winter, but HPE has another test build, and that one seems to be in less demand.

References:

- On CNEWS with clever long words

- Superdome documents: Architecture and the Integrity Superdome X spec.

- A useful link: Server Performance Benchmarks, with test results and reports for all HPE server models. Simply select Superdome X and look for the record results.

- My mail is YShvydchenko@croc.ru.

And yes, the decision: the bank is implementing the system.

Source: https://habr.com/ru/post/282449/
