"And how well everything began ...", or the benefits of O-notation, not only for the analysis of algorithms

The term “O-large” , familiar to us from the course of mathematical analysis, was introduced by Paul Bachmann at the end of the 19th century to describe the asymptotic behavior of functions. In the late 1970s, Donald Knuth came up with the use of this term to evaluate the effectiveness and resource-intensiveness of algorithms, so that most programmers are familiar with “O-Large”. Understanding the asymptotics of speed and resource intensity makes it possible to choose the most appropriate method for solving the problem, depending on current needs. The “bad” asymptotics allows you to immediately discard the inappropriate method.

The idea that I want to share cannot be called deep: rather, it lies on the surface, but not everyone notices it.

Methods similar to the analysis of algorithms can be used to evaluate the asymptotics of the characteristics of a software development project management system, such as the total time and cost of developing additional functionality.

')

For example, in the list of advantages of a product, the word “scalability” is often mentioned, although instead of this rather vague term one could say that the asymptotics of performance or cost of administration relative to the number of users of the system / program modules / instances are, say, no worse than . If this is not the case, then “scalability” is nothing more than a word for marketing brainwashing.

. If this is not the case, then “scalability” is nothing more than a word for marketing brainwashing.

On the other hand, another project is said to be “falling apart under its own weight.” This project could start quite well, but was architecturally built in such a way that it was destroyed by the poor asymptotics of the cost of making changes.

Let us consider three examples, which, although they describe outwardly different effects, on closer examination have much in common.

At the beginning of their career, each programmer came up against the fact that the program, which seemed to be fine with its task on a small initial data set, began to “slow down” incredibly as soon as the information volumes increased. Nothing foreshadowed troubles: we were sure that we had debugged all the errors and the test set of a dozen records takes into account all possible options. Future users were also satisfied: they checked everything on the initial data set, achieved the elimination of their comments and believe that the program can be used in a working environment. The program is launched, and a catastrophe happens: you cannot use the system in real conditions, everything is “hung up”.

The whole thing, of course, is a non-optimal algorithm or data structure. A harmless-looking nested loop, a call to a convenient method that quickly worked on a small number of elements (some addition of elements one by one to a dynamic array) - and we have a system that gives -growth of work on the number of elements. To prevent this from happening in the future, just a little personal experience and mastering of a textbook on algorithms and data structures is enough. In this example, we will not stop.

-growth of work on the number of elements. To prevent this from happening in the future, just a little personal experience and mastering of a textbook on algorithms and data structures is enough. In this example, we will not stop.

Development project managers know how much the general state of affairs can deteriorate and get confused when introducing a new developer into a project, unless he succeeds in delivering a “detachable”, “isolated” task. The law, described in F. Brooks’s classic book Mythical Man-Month , states: “attracting new employees does not reduce, but lengthens the work schedule for creating a software product”. This statement has a very simple and mathematically exact explanation.

The dependence of the time required for the development project, the number of actors involved, Brooks sets as follows: - the number of programmers working on the project. The total amount of work consists of the labor costs

- the number of programmers working on the project. The total amount of work consists of the labor costs

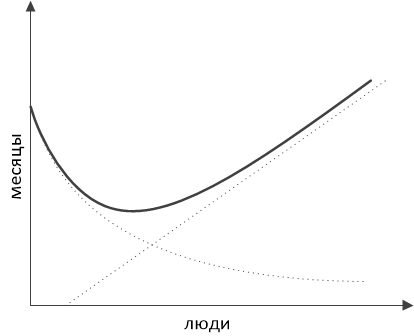

So, the total project execution time is determined by the following curve:

having such a schedule:

The costs in man-hours are determined by the formula of the form

The main idea of F. Brooks is as follows: an increase in the number of developers in a team leads to a reduction in the project implementation timeframe only to a certain limit, beyond which the increase in time occurs. Applying the O-notation to the obtained laws, we can say that in the "Brooks" project with an increase in the number of performers the execution time increases as and the cost of the project is altogether

and the cost of the project is altogether  .

.

Valuable knowledge for the manager, on which the decision to connect new employees to the project with deadlines depends, isn't it?

Another classic example of “badly growing” dependence is given in all books on the Extreme Programming methodology : this is the increase in the cost of change depending on the stage at which the project is located:

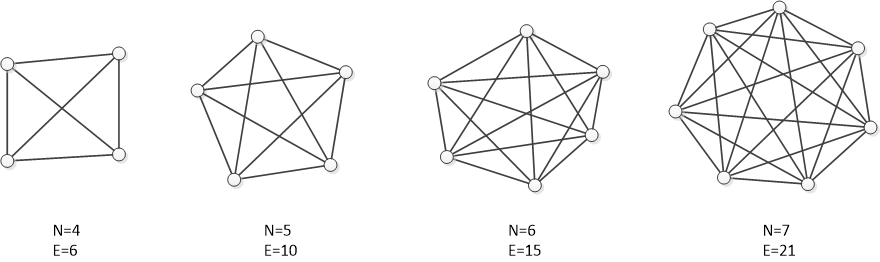

It is easy to explain this dependence again if you understand that a software project in its development begins to resemble a complete graph, whose vertices are artifacts related to the project: software modules, requirements, tests, documentation:

Adding each next vertex to such a graph is more and more expensive each time, since there are more and more connections with already existing vertices. Moreover, it often requires updating several existing vertices that are connected to a new vertex (change interfaces, tests, documentation), after which changes begin to occur in the entire system in a cascade and avalanche until reaching equilibrium. Depending on how the links between artifacts work in a project, we can get a polynomial with a high exponent The rising cost of change from the number of project artifacts already present.

The rising cost of change from the number of project artifacts already present.

As a result of such activities, at some point the development of the project “gets stuck”, all the time it starts to go into patching up old problems, new changes are being made unacceptably slow and with enormous difficulties, programmers say that “all this should be rewritten”, in other words - the project is falling apart under its own weight.

Despite the fact that these examples describe completely different effects, it is easy to see that they all have a lot in common: everything started well and ended badly, and the reason is the inability to assess the asymptotics of the behavior of key parameters of the system during further developments.

In the first of the examples, the problem is solved by choosing an algorithm or data structure with suitable asymptotics.

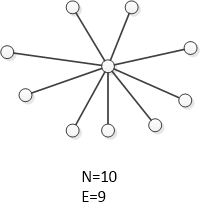

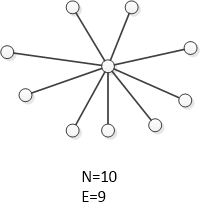

In the second and third examples, the problem lies in the nonlinear growth of connections in the full graph: if your system resembles a full graph, you cannot wait for a good development of events with an increase in the number of elements. The model of a graph with connections is, of course, not quantitative, but illustrative, qualitative, and therefore the relationship graph does not have to be complete in the strict sense of the word: it only needs to “have a tendency” to be complete in order to manifest Brooks’s law and the effect of increasing the cost of change. On the other hand, the smallest number of links that may be present in a graph from vertices equals

vertices equals  , as, for example, in the "star" column:

, as, for example, in the "star" column:

Having built links in a star-shaped system, either linearly or in any other way, generating ribs at

ribs at  tops, we get a system that behaves in a qualitatively different way with its growth: the cost of adding a new vertex in such a system is always constant.

tops, we get a system that behaves in a qualitatively different way with its growth: the cost of adding a new vertex in such a system is always constant.

A few words about how to achieve this in practice. Using the general principle, the project should be optimized in all aspects: from the software architecture to the organization of the work of specialists working on the project.

At the design level of the application, we can take advantage of the "star-like" architecture of the platform-add-in or platform-plug-ins architecture. In this architecture, there is some basic part, "core" or "platform" of the application, developed with all possible care and rarely subject to change. All functions of the application are developed as independent “plug-ins” that use the services of the kernel and interact with each other only with the help of the kernel. The kernel itself does not depend on add-ins. In object-oriented development environments, a very convenient software implementation of this principle is possible with the help of the source-subscriber pattern, and especially with the help of the mediator, also known as the mediator design pattern.

In view of the above, it is already clear that some of the initial overhead of programming such an architecture will pay off in the future with savings on the interaction between the add-on developers and the coordination of the add-ons with each other. Tasks begin to become detachable by themselves, connecting new developers to the project becomes simple and safe.

The effect described by Brooks should also be reduced by establishing communications in the project (reducing the “quadratic” part of labor costs): general meetings, scheduling, general email-broadcasts with maximum information about what is happening for all participants create the opportunity for each of the ordinary participants to interact with everyone through “Central peak” , without wasting time searching for the right addressee. Project managers know that the problem of communication is not only a problem of giant projects, it begins to arise even between four participants, and with their growth can reach truly cosmic proportions.

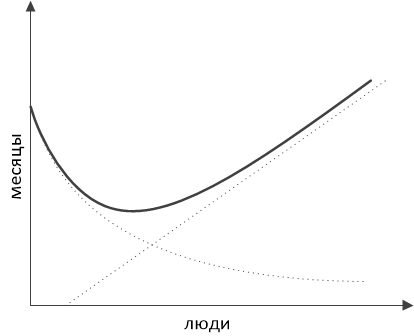

Extreme Programming Technique offers a whole range of measures in order to achieve - asymptotic growth of the cost of change. In addition to the importance of establishing communications (including such radical measures as pair programming), special attention is paid to the development through testing. In this situation, in the project, rigid and isolated from each other bundles of “functional requirement - test code” are formed, which do not lead to the emergence of unnecessary links and dependencies of “everyone from everyone”. The ideal, from the point of view of Extreme Programming, dependence of the cost of change on the project stage, is as follows:

- asymptotic growth of the cost of change. In addition to the importance of establishing communications (including such radical measures as pair programming), special attention is paid to the development through testing. In this situation, in the project, rigid and isolated from each other bundles of “functional requirement - test code” are formed, which do not lead to the emergence of unnecessary links and dependencies of “everyone from everyone”. The ideal, from the point of view of Extreme Programming, dependence of the cost of change on the project stage, is as follows:

As already mentioned, the idea presented in the article lies on the surface. If we, the developers, are able to evaluate the behavior of the algorithm with the complication of the task, then why not begin to predict in a similar way the behavior of the parameters of the development project in the process of its growth and development? If we are able to optimize algorithms, getting rid of unnecessary cycles and calculations, why not optimize the entire development project in a similar way, removing unnecessary links and dependencies from it?

I recommend this article about the signs of good architecture. The ideas about correct decomposition provided there that ensure the scaling of the system are, in fact, a more substantive discussion of very general ideas from my article.

The idea that I want to share cannot be called deep: rather, it lies on the surface, but not everyone notices it.

Methods similar to the analysis of algorithms can be used to evaluate the asymptotics of the characteristics of a software development project management system, such as the total time and cost of developing additional functionality.

')

For example, in the list of advantages of a product, the word “scalability” is often mentioned, although instead of this rather vague term one could say that the asymptotics of performance or cost of administration relative to the number of users of the system / program modules / instances are, say, no worse than

On the other hand, another project is said to be “falling apart under its own weight.” This project could start quite well, but was architecturally built in such a way that it was destroyed by the poor asymptotics of the cost of making changes.

Let us consider three examples, which, although they describe outwardly different effects, on closer examination have much in common.

Example 1, trivial

At the beginning of their career, each programmer came up against the fact that the program, which seemed to be fine with its task on a small initial data set, began to “slow down” incredibly as soon as the information volumes increased. Nothing foreshadowed troubles: we were sure that we had debugged all the errors and the test set of a dozen records takes into account all possible options. Future users were also satisfied: they checked everything on the initial data set, achieved the elimination of their comments and believe that the program can be used in a working environment. The program is launched, and a catastrophe happens: you cannot use the system in real conditions, everything is “hung up”.

The whole thing, of course, is a non-optimal algorithm or data structure. A harmless-looking nested loop, a call to a convenient method that quickly worked on a small number of elements (some addition of elements one by one to a dynamic array) - and we have a system that gives

Example 2

Development project managers know how much the general state of affairs can deteriorate and get confused when introducing a new developer into a project, unless he succeeds in delivering a “detachable”, “isolated” task. The law, described in F. Brooks’s classic book Mythical Man-Month , states: “attracting new employees does not reduce, but lengthens the work schedule for creating a software product”. This statement has a very simple and mathematically exact explanation.

The dependence of the time required for the development project, the number of actors involved, Brooks sets as follows:

- non-shared tasks - the time to complete these tasks does not depend on the number of employees and is always equal to

;

- shared tasks - the time for their execution decreases with increasing number of employees and is equal to

;

- information sharing - Brooks literally writes the following: “If all tasks need to be separately coordinated among themselves, then costs increase as

". It is understood that in the presence of

number of employees spent on coordinating “all with all” is proportional to the number of links in a full graph (a graph in which each pair of vertices is connected):

Because these labor costs are distributed betweenemployees, their input at runtime is

.

So, the total project execution time is determined by the following curve:

having such a schedule:

The costs in man-hours are determined by the formula of the form

The main idea of F. Brooks is as follows: an increase in the number of developers in a team leads to a reduction in the project implementation timeframe only to a certain limit, beyond which the increase in time occurs. Applying the O-notation to the obtained laws, we can say that in the "Brooks" project with an increase in the number of performers the execution time increases as

Valuable knowledge for the manager, on which the decision to connect new employees to the project with deadlines depends, isn't it?

Example 3

Another classic example of “badly growing” dependence is given in all books on the Extreme Programming methodology : this is the increase in the cost of change depending on the stage at which the project is located:

It is easy to explain this dependence again if you understand that a software project in its development begins to resemble a complete graph, whose vertices are artifacts related to the project: software modules, requirements, tests, documentation:

Adding each next vertex to such a graph is more and more expensive each time, since there are more and more connections with already existing vertices. Moreover, it often requires updating several existing vertices that are connected to a new vertex (change interfaces, tests, documentation), after which changes begin to occur in the entire system in a cascade and avalanche until reaching equilibrium. Depending on how the links between artifacts work in a project, we can get a polynomial with a high exponent

As a result of such activities, at some point the development of the project “gets stuck”, all the time it starts to go into patching up old problems, new changes are being made unacceptably slow and with enormous difficulties, programmers say that “all this should be rewritten”, in other words - the project is falling apart under its own weight.

findings

Despite the fact that these examples describe completely different effects, it is easy to see that they all have a lot in common: everything started well and ended badly, and the reason is the inability to assess the asymptotics of the behavior of key parameters of the system during further developments.

In the first of the examples, the problem is solved by choosing an algorithm or data structure with suitable asymptotics.

In the second and third examples, the problem lies in the nonlinear growth of connections in the full graph: if your system resembles a full graph, you cannot wait for a good development of events with an increase in the number of elements. The model of a graph with connections is, of course, not quantitative, but illustrative, qualitative, and therefore the relationship graph does not have to be complete in the strict sense of the word: it only needs to “have a tendency” to be complete in order to manifest Brooks’s law and the effect of increasing the cost of change. On the other hand, the smallest number of links that may be present in a graph from

Having built links in a star-shaped system, either linearly or in any other way, generating

A few words about how to achieve this in practice. Using the general principle, the project should be optimized in all aspects: from the software architecture to the organization of the work of specialists working on the project.

At the design level of the application, we can take advantage of the "star-like" architecture of the platform-add-in or platform-plug-ins architecture. In this architecture, there is some basic part, "core" or "platform" of the application, developed with all possible care and rarely subject to change. All functions of the application are developed as independent “plug-ins” that use the services of the kernel and interact with each other only with the help of the kernel. The kernel itself does not depend on add-ins. In object-oriented development environments, a very convenient software implementation of this principle is possible with the help of the source-subscriber pattern, and especially with the help of the mediator, also known as the mediator design pattern.

In view of the above, it is already clear that some of the initial overhead of programming such an architecture will pay off in the future with savings on the interaction between the add-on developers and the coordination of the add-ons with each other. Tasks begin to become detachable by themselves, connecting new developers to the project becomes simple and safe.

The effect described by Brooks should also be reduced by establishing communications in the project (reducing the “quadratic” part of labor costs): general meetings, scheduling, general email-broadcasts with maximum information about what is happening for all participants create the opportunity for each of the ordinary participants to interact with everyone through “Central peak” , without wasting time searching for the right addressee. Project managers know that the problem of communication is not only a problem of giant projects, it begins to arise even between four participants, and with their growth can reach truly cosmic proportions.

Extreme Programming Technique offers a whole range of measures in order to achieve

As already mentioned, the idea presented in the article lies on the surface. If we, the developers, are able to evaluate the behavior of the algorithm with the complication of the task, then why not begin to predict in a similar way the behavior of the parameters of the development project in the process of its growth and development? If we are able to optimize algorithms, getting rid of unnecessary cycles and calculations, why not optimize the entire development project in a similar way, removing unnecessary links and dependencies from it?

UPD: Read more

I recommend this article about the signs of good architecture. The ideas about correct decomposition provided there that ensure the scaling of the system are, in fact, a more substantive discussion of very general ideas from my article.

Source: https://habr.com/ru/post/282435/

All Articles