Notes with MBC Symposium: applying deep learning in brain modeling

Attended the Stanford Symposium on the intersection of deep learning and neurosciencé, received a lot of pleasure.

I am talking about interesting things - for example, a report by Dan Yamins on the use of neural networks to simulate the operation of the visual cortex.

Disclaimer: The post is written on the basis of pretty edited chat logs closedcircles.com , hence the style of presentation, and clarifying questions.

Here is a link to the full report, it's cool, but it's probably better to look after reading the post.

Dan is involved in computational neuroscience, i.e. trying computational methods to help brain research. And there, as elsewhere, deep learning happens.

In general, the device of the visual cortex at a high level, we barely understand

When we see some kind of image, the eye triggers the activation of neurons, the activation passes through different parts of the brain, which release an increasingly higher-level representation from them.

V1 is also called the primary visual cortex and it is well researched - there are neurons that drive certain filters over the image, and are activated on the lines at different angles and simple gradients.

(by the way, the patterns on which neurons activate in this area are often similar to the first learned levels in CNN, which is very cool in itself)

There is even success in modeling this part - they say, invent some model, see how neurons are activated on the input image, zapititit, and then this model quite predicts the activation of these neurons in new pictures.

With V4 and IT (higher processing levels) this does not work.

Where does the data about biological neurons come from?

A typical experiment looks like this - a monkey is taken, electrodes are stuck in some part of the brain, which take signals from the neurons in which they fall. Monkey show different pictures and remove the signal from the electrodes. This is done on several hundreds of neurons - the number of neurons in the entire brain lobe under study is enormous, only hundreds are measured.

It turns out that if we try to fit the model on the activation of biological neurons in V4 and IT, overfitting occurs - there is little data and the model does not predict anything for new pictures.

Dan companions are trying to do differently

Let's take a model and train it for some kind of recognition problem, so that the artificial neurons in it recognize something in these pictures.

Suddenly they will predict the activation of biological neurons better?

Now carefully monitor the hands.

They train models (CNNs and simpler models from ordinary computer vision) to recognize objects in synthetic pictures.

Pictures are as follows:

The object does not correlate with the background - maybe a plane on the background of a lake, and a head on the background of some wild forest (as I understand, in order to exclude prior learning).

In total, there are 8 categories of objects in the pictures - heads, cars, airplanes, something else.

And now they train models of different structure and depth to recognize the category of an object. Among other models, there are CNNs pretrained on Imagenet, and they remove from the training dataset the categories of objects that they used in their synthetic pictures.

Further, on the basis of trained CNN, they "predict" the activation of biological neurons as follows.

They take some kind of training set (separate categories of objects), choose some level in CNN and neurons in some part of the brain and build a linear classifier that predicts the activation of biological neurons on the basis of artificial ones.

That is, they try to approximate the activation of a biological neuron as a linear combination of activations of artificial neurons in a certain layer (after all, they cannot be exactly combined one to one, there are completely different numbers of them). And then they check to what extent it has predictive power on the test set, where there were completely different objects.

I hope to explain it turned out.

That is, they have as a CNN output - something like the identification of a biological neuron?

Not! CNN output is a classifier of objects in pictures.

CNN is training to classify images, she knows nothing about biological neurons. Marked data for the grid is what kind of object in the picture, without knowledge of biological neurons.

And then weights in CNN were recorded, and the fitthym activates biological neurons as a linear combination of artificial neuron activations.

and why linear combination, but not one more grid?

Predicting biological by artificial one wants to make it as simple as possible so that the system does not retrain and takes the main signal from neuron activations in CNN.

And then on the new test images we check whether we succeeded in predicting activation in biological neurons.

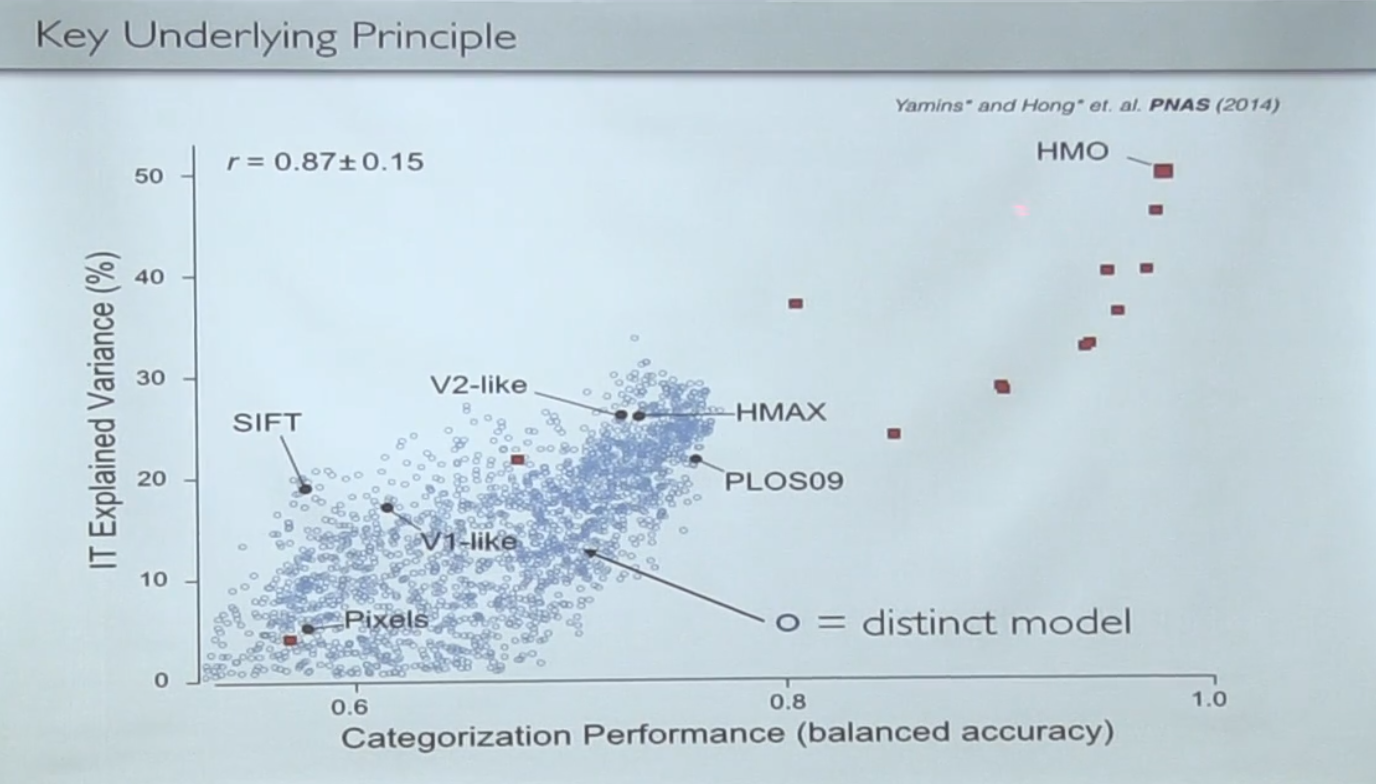

So, the picture with the results!

Each point on this graph is some kind of tested model.

On the X axis - how well it classifies, on the Y axis - how well it predicts biological neurons.

The blue cloud is the models that either did not train at all, or were trained from scratch and there are not many very deep ones, and the red dots in the upper right are the models pretrained with imagenet and total deep learning.

It can be seen that how well the model classifies strongly correlates with how well it predicts biological activations. That is, putting a restriction that the model should also be functional - it turns out to better approximate the model of activation of biological neurons.

The question may be off to the side, but still. But is it possible then to teach the model to classify something, using as a teacher - neurons in the brain? Type showed a picture + took data from the brain and fed it to CNN?

This is the same thing that I talked about earlier - since you know little neurons, such a model begins to overfit and does not have predictive power.

That is, training on neuron activations is not generalized, and training on the allocation of objects is a much more powerful constraint.

And now the fusion.

You can see how different levels of the neural network predict the activation of different parts of the brain:

It turns out that the last levels predict IT (last stage) well, but not V4 (intermediate). And V4 is best predicted by intermediate network levels.

Thus, the hierarchical representation of features in a neural network “touches” not only at the end, but also in the middle of processing, which again suggests that there is some kind of commonality of what is happening there and there.

That is, they have a neural network looks about the same as biological neurons in the brain?

Rather, there is something similar in which stages the recognition process goes through.

To say that the "architecture" is the same, of course, cannot be (of course, this cannot be considered as proof at all, etc., etc.)

The next stage - well, ok, suppose we got the opportunity to model the unknown as a working brain by some other incomprehensible like a working box. What is the joy in it?

Further work - how can this be used to understand something new about the work of the brain.

I will tell about one example, there are two more in the speech itself.

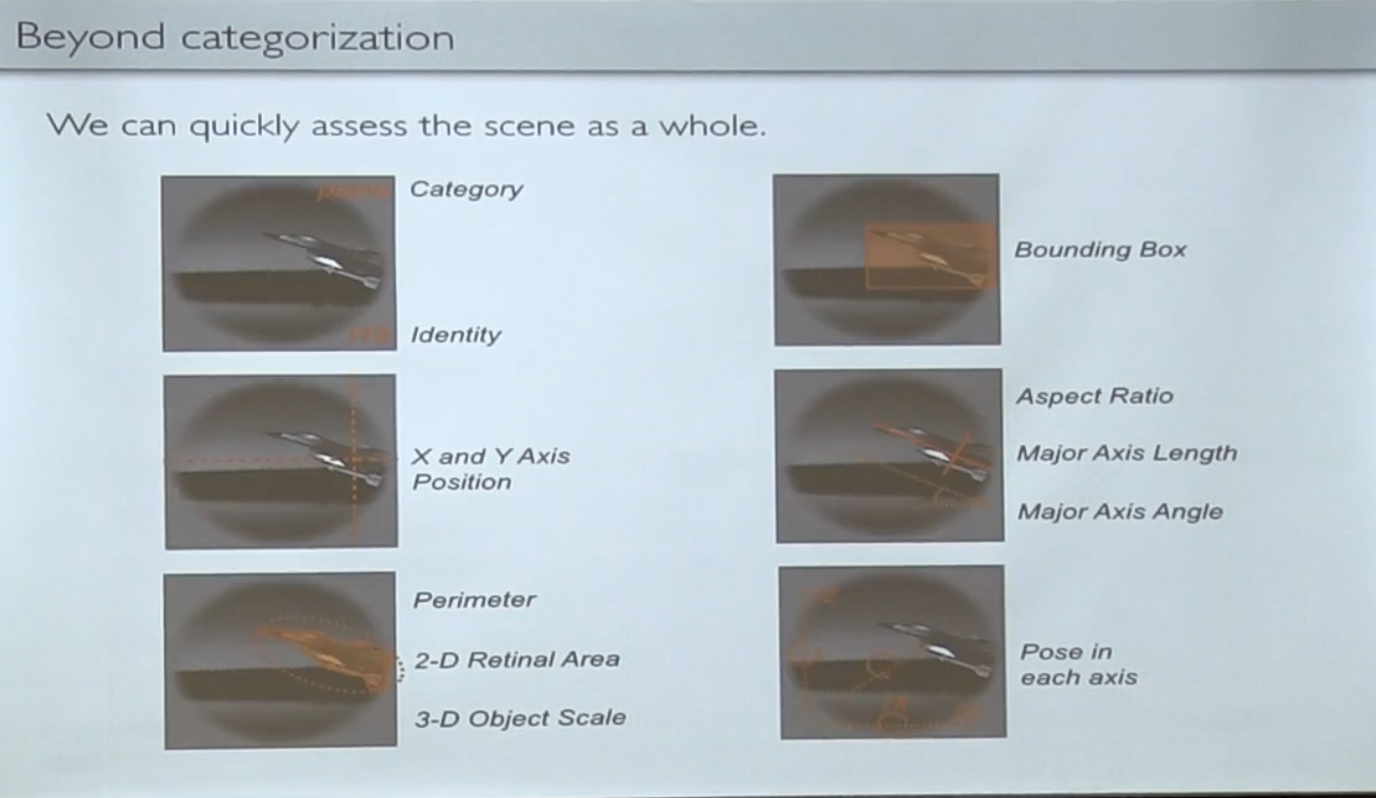

Let's try to pull out not only the classification, but also some other signals from the image - the angle of inclination, size, position, etc.

It would seem that these are more "low-level" features than the class of an object, and you can expect them to be defined at lower levels of recognition in the brain - let's check it on the model.

It turns out figs there!

Even such "low-level" features correlate better with high-level activations, rather than low-level activations. Then they conducted additional experiments on the living brain and saw the same thing - those lines and corners that we used to see in the patterns of the first layers are not related to the "lines" of the orientation of high-level objects.

Information about the position and the relative position of objects is quite a high level.

This already confirms the existing theory that the last stage (IT) - works with a high-level model, where there are objects, their location, mutual relations, etc. and so on, and converts them into something the brain needs.

(other examples, if anyone is interested, about testing a hypothesis about a dedicated place in the brain for face recognition through training a virtual brain that has never seen people in their lives, and about sound recognition)

(Continuing amateurish questions) Did they use a model with the same number of layers in their CNN? Well, that is, and if you reduce the number of layers, the similarity effects disappear?

No, the number of "layers" in the brain is a complicated thing - there are both end-to-end connections and feedback. The number of layers in CNN is fundamentally less than in the brain.

How then is the conversation about IT and V4?

Well, in the brain, IT and V4 are many, many levels, and there are few levels in the neural network. Biological neurons from IT and V4 are those into which the electrode fell. From what "biological leuer" inside them it came out - from that it came out.

The following is also interesting. If we believe Ramachandran, visual cortex is not just a feed-forward network, all layers communicate with all layers in both directions . There is even an example when the visual system can be disrupted through various kinds of optical illusions.

That is, no one guarantees that the activation of biological neurons is somehow related to the recognition process itself, and not to the fact that the monkey’s foot begins to itch and the neurons are already reacting to the fact that it’s foot itches?

No, these are neurons from a region that is known to be associated with visual cortex (this part about the brain, we somehow know, it’s impossible to scratch it, it is further down the stack).

Summing up, the direct direction of work is rather about using new models to study the work of the brain, but there are some indirect hints that somehow this works too well, perhaps there are general mechanisms.

Hopefully converge in singularity!

')

Source: https://habr.com/ru/post/282277/

All Articles