What does a convolutional neural network look at when it sees nudity?

Last week at Clarifai, we formally announced our NSFW (Not Safe for Work) recognition model.

Warning and disclaimer: this article contains images of nudity for scientific purposes. If you are under 18 or are offended by nudity, please do not read further.


Automatic detection of nude images has been a central computer vision problem for more than two decades, and because of its rich history and well-defined task, it has become an excellent example of how the technology has evolved. Here I use nudity detection to explain how training modern convolutional networks differs from the research done in the past.

Back in 1996 ...

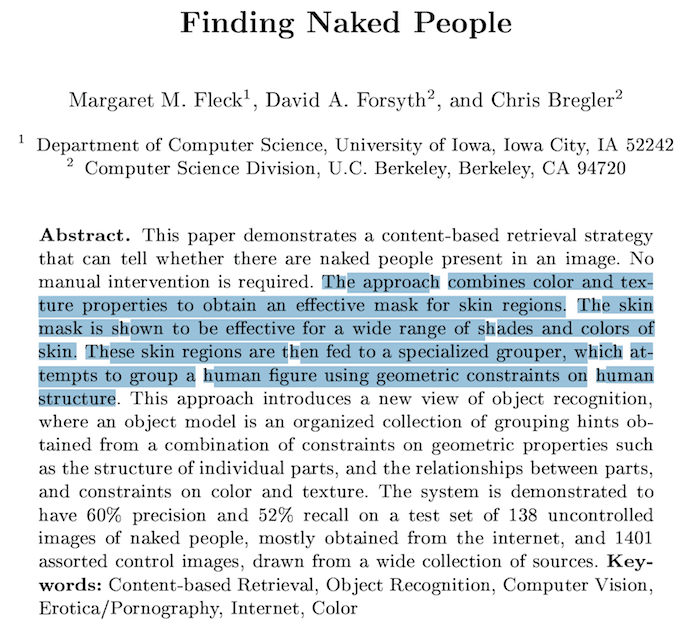

One of the first works in this area had a simple and clear title: “Finding Naked People,” by Margaret Fleck, David Forsyth, and Chris Bregler. It was published in the mid-90s and is a good example of what computer vision researchers did before convolutional networks became widespread. In Section 2 of the paper, they give a high-level description of the technique:

Algorithm:

- First, find images containing large areas of skin-colored pixels.

- Then, within these areas, find elongated regions and group them into possible human limbs and connected groups of limbs, using specialized grouping modules that incorporate a substantial amount of information about object structure.
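A minimal sketch of the first step, assuming NumPy and an RGB image array. The paper used its own hand-tuned color filter; here a commonly cited RGB threshold heuristic stands in for it, and the 30% `min_fraction` cutoff is a hypothetical value chosen only for illustration.

```python
import numpy as np

def skin_mask(rgb):
    """Boolean mask of 'skin-colored' pixels for an HxWx3 uint8 RGB array.

    Uses a simple RGB threshold heuristic (an assumption for illustration),
    not the color filter from the original paper.
    """
    rgb = rgb.astype(np.int32)  # avoid uint8 wrap-around in the differences below
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    spread = rgb.max(axis=-1) - rgb.min(axis=-1)
    return (
        (r > 95) & (g > 40) & (b > 20)
        & (spread > 15)
        & (np.abs(r - g) > 15)
        & (r > g) & (r > b)
    )

def has_large_skin_area(rgb, min_fraction=0.3):
    """Step 1 filter: keep images where skin covers at least min_fraction of the pixels.

    min_fraction is a hypothetical threshold, not a value from the paper.
    """
    return bool(skin_mask(rgb).mean() >= min_fraction)
```

Only images that pass this cheap filter would go on to the more expensive limb-grouping stage.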

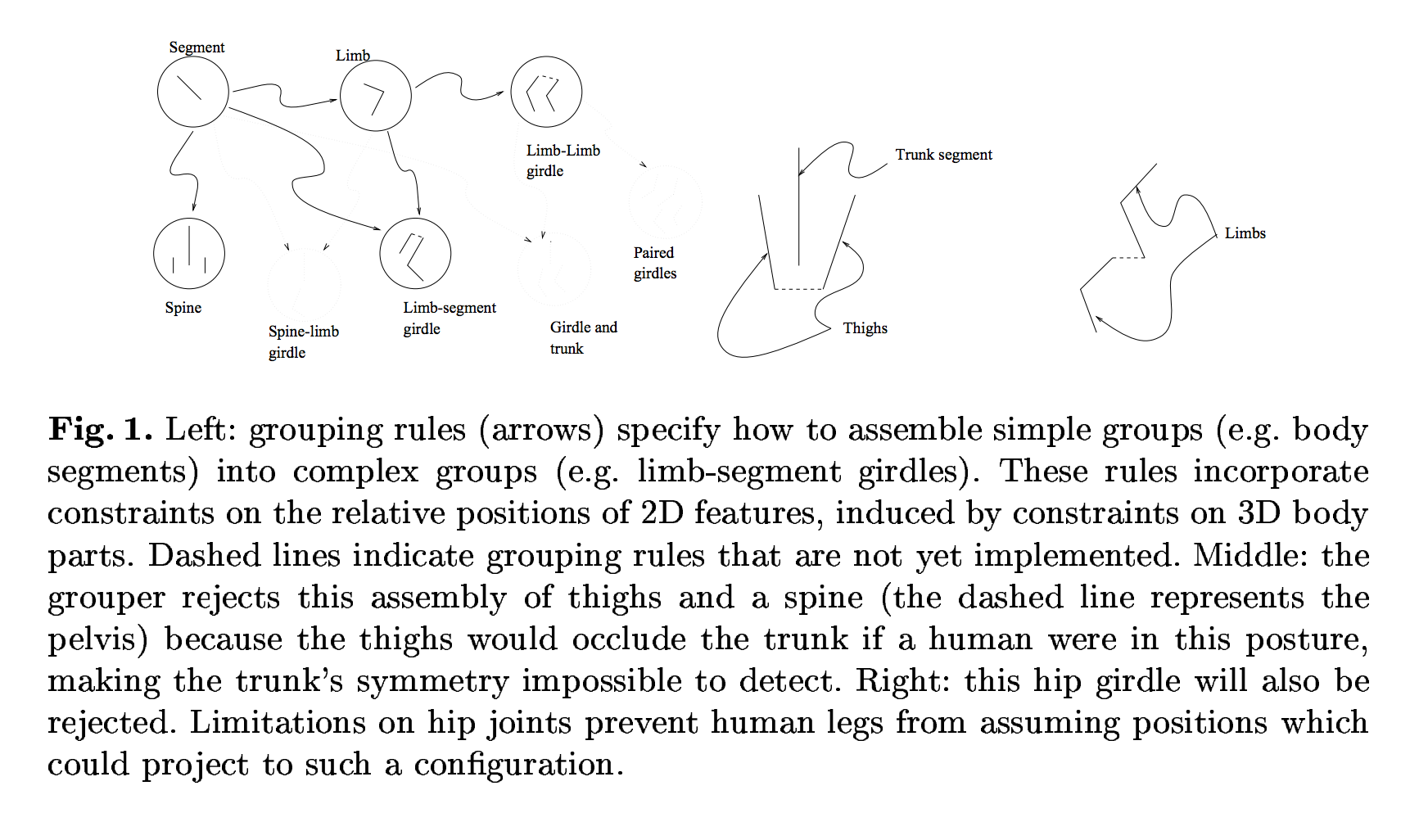

Skin detection was carried out by filtering the color space, and skin regions were grouped by modeling the human figure as “a set of nearly cylindrical parts, where the individual outlines of the parts and the connections between the parts are constrained by the geometry of the skeleton” (Section 2). How such an algorithm is developed becomes clearer from Figure 1 of the paper, where the authors show some of the hand-built grouping rules.
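The paper's grouping rules are far richer than this, but the basic test of whether a skin region is elongated enough to be one of those “nearly cylindrical parts” can be sketched with the principal axes of the region's pixel coordinates. This is a hypothetical simplification, not the paper's grouper, and `min_elongation` is an arbitrary illustrative threshold.

```python
import numpy as np

def elongation(region):
    """Ratio of major to minor axis length of a binary region (HxW bool array).

    Computed from the eigenvalues of the covariance of the pixel coordinates:
    a long, thin, limb-like blob gives a large ratio, a round or square blob ~1.
    """
    ys, xs = np.nonzero(region)
    coords = np.stack([xs, ys]).astype(float)
    eigvals = np.linalg.eigvalsh(np.cov(coords))  # ascending order
    minor, major = max(eigvals[0], 1e-12), eigvals[-1]
    return float(np.sqrt(major / minor))

def is_limb_candidate(region, min_elongation=3.0):
    """Hypothetical rule: treat sufficiently elongated skin regions as possible limbs."""
    return elongation(region) >= min_elongation
```

Hand-written rules like this one, plus many more about how parts may connect, are exactly what the next paragraphs contrast with learned features.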

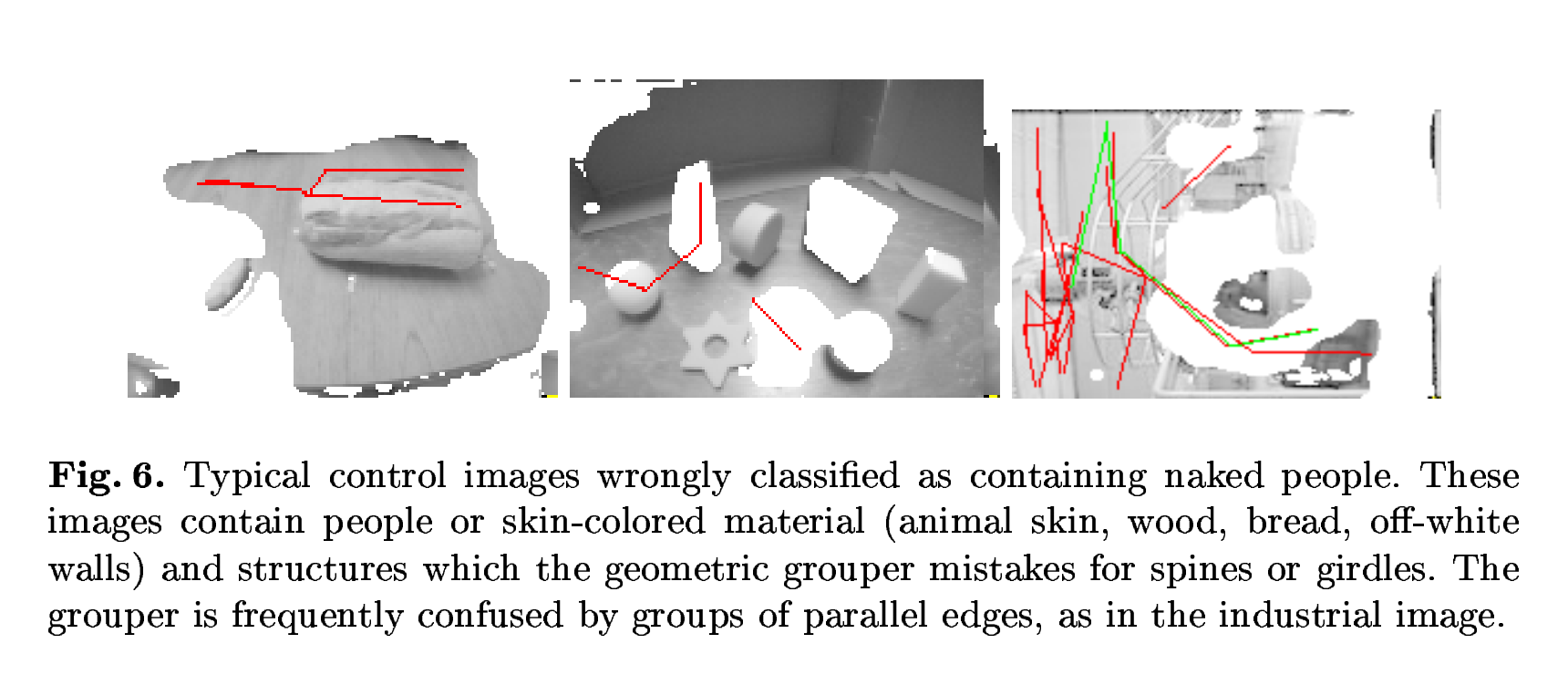

The paper reports “60% precision and 52% recall on an uncontrolled test set of 138 images of naked people.” The authors also show examples of correctly detected images and false positives, with visualizations of the regions the algorithm processed.
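To make those two numbers concrete: recall is the share of the 138 nude images that were found, and precision is the share of flagged images that really were nude. The counts below are hypothetical, chosen only to be consistent with the reported figures (roughly 72 of 138 found, with about 40% of the flagged images being false positives); they are not taken from the paper.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    return tp / (tp + fp), tp / (tp + fn)

# Hypothetical counts consistent with the reported figures (138 = TP + FN).
p, r = precision_recall(tp=72, fp=48, fn=66)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.60 recall=0.52
```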

The main problem with hand-crafted rules is that the complexity of the model is limited by the patience and imagination of the researchers. In the next section, we will see how a convolutional neural network trained on the same task learns a much richer representation of the same data.

Now in 2014 ...

Instead of inventing formal rules to describe how the input should be represented, deep learning researchers design network architectures and datasets that let the system learn these representations directly from the data. However, because researchers do not specify exactly how the network should respond to a given input, a new problem arises: how do we understand what the network is actually responding to?

To understand what a convolutional neural network is doing, we need to interpret feature activity at its various layers. In the rest of this article, we examine an early version of our NSFW model, tracing activity from the top layer down to the input pixel space. This lets us see which specific input patterns caused a given activation in a feature map (that is, why the image was labeled “NSFW” in the first place).

Occlusion sensitivity

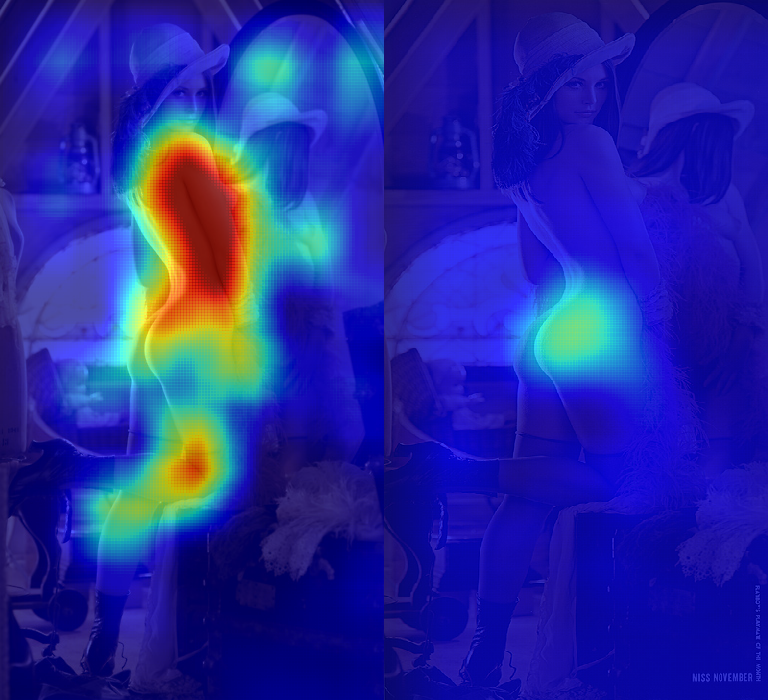

The illustration shows heatmaps of Lena Söderberg obtained by applying our NSFW model to 64x64 sliding windows, with a stride of 3, over cropped and occluded versions of the original image.

To build the heatmap on the left, we fed each window to our convolutional neural network and averaged the NSFW scores of all windows covering each pixel. When the network encounters a crop filled with skin, it tends to rate it as “NSFW”, which produces large red areas over Lena’s body. To build the heatmap on the right, we systematically occluded parts of the original image and reported 1 minus the NSFW score (that is, the SFW score). When most of the NSFW regions are occluded, the SFW score rises, and we see higher values on the heatmap. For clarity, here are examples of the images we fed to the network in each of the two experiments above.
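Independently of any particular API, both experiments can be sketched locally. In this hedged sketch, `score_fn` is a hypothetical stand-in for the classifier (any function mapping an image array to an NSFW probability), the defaults mirror the 64x64 windows and stride of 3 mentioned above, and the toy “bright pixels are NSFW” scorer in the usage example is made up purely for demonstration.

```python
import numpy as np

def window_boxes(h, w, size, stride):
    """Top-left corners of all size x size windows that fit inside an h x w image."""
    return [(top, left)
            for top in range(0, h - size + 1, stride)
            for left in range(0, w - size + 1, stride)]

def _average_over_windows(shape, boxes, size, scores):
    """Average each window's score over every pixel that window covers."""
    total, count = np.zeros(shape), np.zeros(shape)
    for (top, left), s in zip(boxes, scores):
        total[top:top + size, left:left + size] += s
        count[top:top + size, left:left + size] += 1
    return np.divide(total, count, out=np.zeros(shape), where=count > 0)

def sliding_window_heatmap(img, score_fn, size=64, stride=3):
    """Left-hand experiment: score each crop on its own, average NSFW scores per pixel."""
    h, w = img.shape[:2]
    boxes = window_boxes(h, w, size, stride)
    scores = [score_fn(img[t:t + size, l:l + size]) for t, l in boxes]
    return _average_over_windows((h, w), boxes, size, scores)

def occlusion_heatmap(img, score_fn, size=64, stride=3, fill=0):
    """Right-hand experiment: occlude each window, record 1 - NSFW score (the SFW score)."""
    h, w = img.shape[:2]
    boxes = window_boxes(h, w, size, stride)
    scores = []
    for t, l in boxes:
        occluded = img.copy()
        occluded[t:t + size, l:l + size] = fill
        scores.append(1.0 - score_fn(occluded))
    return _average_over_windows((h, w), boxes, size, scores)

# Usage with a made-up scorer: the "NSFW score" is the fraction of bright pixels.
img = np.zeros((20, 20))
img[:10, :10] = 1.0
score = lambda patch: float((patch > 0.5).mean())
left = sliding_window_heatmap(img, score, size=5, stride=5)
right = occlusion_heatmap(img, score, size=5, stride=5)
```

Both heatmaps only need the classifier's output, which is why this kind of experiment works even on a black box.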

A useful property of these experiments is that they can be run even if the classifier is a complete “black box”. Here is a snippet of code that reproduces these results through our API:

```python
# NSFW occlusion experiment (Python 3 port of the original snippet).
# Uses the legacy Clarifai v1 Python client exactly as in the original post
# (ClarifaiApi, tag_images, model='nsfw-v1.0').
from io import BytesIO

import matplotlib.pyplot as plt
import numpy as np
import requests
import scipy.sparse as sp
from PIL import Image, ImageDraw
from clarifai.client import ClarifaiApi

CLARIFAI_APP_ID = '...'
CLARIFAI_APP_SECRET = '...'
clarifai = ClarifaiApi(app_id=CLARIFAI_APP_ID,
                       app_secret=CLARIFAI_APP_SECRET,
                       base_url='https://api.clarifai.com')


def batch_request(imgs, bboxes):
    """Use the API to tag a batch of occluded images; return (SFW score, bbox) pairs."""
    assert len(bboxes) < 128
    # Convert to JPEG bytes (JPEG has no alpha channel, so drop it first).
    buffers = []
    for img in imgs:
        buf = BytesIO()
        img.convert('RGB').save(buf, format='JPEG')
        buf.seek(0)
        buffers.append(buf)
    # Call the API and parse the response.
    output = []
    response = clarifai.tag_images(buffers, model='nsfw-v1.0')
    for result, bbox in zip(response['results'], bboxes):
        tag = result['result']['tag']
        # Probability of the "sfw" class, i.e. 1 - NSFW score (right-hand heatmap).
        sfw_score = tag['probs'][tag['classes'].index('sfw')]
        output.append((sfw_score, bbox))
    return output


def build_bboxes(img, boxsize=72, stride=25):
    """Generate all the bboxes (left, top, right, bottom) used in the experiment."""
    bboxes = []
    for top in range(0, img.size[1], stride):
        for left in range(0, img.size[0], stride):
            bboxes.append((left, top, left + boxsize, top + boxsize))
    return bboxes


def draw_occlusions(img, bboxes):
    """Return one copy of the test image per bbox, with that bbox blacked out."""
    images = []
    for bbox in bboxes:
        img2 = img.copy()
        ImageDraw.Draw(img2).rectangle(bbox, fill='black')
        images.append(img2)
    return images


def alpha_composite(img, heatmap):
    """Blend an RGBA PIL image with a numpy heatmap rendered through the 'jet' colormap."""
    if img.mode == 'RGB':
        img.putalpha(100)
    cmap = plt.get_cmap('jet')
    rgba = cmap(heatmap)   # floats in [0, 1], shape (H, W, 4)
    rgba[:, :, 3] = 0.7    # alpha of the overlay
    rgba_img = Image.fromarray(np.uint8(rgba * 255))
    return Image.blend(img, rgba_img, 0.8)


def get_nsfw_occlude_mask(img, boxsize=64, stride=25):
    """Generate bboxes and occluded images, call the API, blend the results together."""
    bboxes = build_bboxes(img, boxsize=boxsize, stride=stride)
    print('api calls needed: {}'.format(len(bboxes)))
    scored_bboxes = []
    batch_size = 125
    for i in range(0, len(bboxes), batch_size):
        bbox_batch = bboxes[i:i + batch_size]
        occluded_images = draw_occlusions(img, bbox_batch)
        scored_bboxes.extend(batch_request(occluded_images, bbox_batch))

    heatmap = np.zeros(img.size)  # indexed as [x, y]
    sparse_masks = []
    for idx, (score, bbox) in enumerate(scored_bboxes):
        mask = np.zeros(img.size)
        mask[bbox[0]:bbox[2], bbox[1]:bbox[3]] = score
        sparse_masks.append(sp.csr_matrix(mask))
        heatmap += (mask - heatmap) / (idx + 1)  # running mean over all masks
    # Transpose to [y, x] for display; 80 is the original's empirical scale factor.
    return alpha_composite(img, 80 * heatmap.T), sparse_masks


# Download the full Lena image.
r = requests.get('https://clarifai-img.s3.amazonaws.com/blog/len_full.jpeg')
img = Image.open(BytesIO(r.content))
img.putalpha(255)  # make it RGBA so it can be blended with the RGBA heatmap

# Set boxsize and stride (warning! a low stride will lead to thousands of API calls).
boxsize = 64
stride = 48
blended, masks = get_nsfw_occlude_mask(img, boxsize=boxsize, stride=stride)

# Visualize.
blended.show()
```

Although such experiments make it easy to see the classifier's output, they have a drawback: the resulting visualizations are often quite blurry. This makes it hard to understand what the network is really doing and what might go wrong during training.

Deconvolutional Networks

Once a network has been trained on a dataset, we would like to take an image and a class and ask the network something like, “How could this image be changed to better match the given class?” For this, we use a deconvolutional network, as described in Section 2 of Zeiler and Fergus (2014):

A deconvolutional network can be thought of as a convolutional network that uses the same components (filtering, pooling) but in reverse: instead of mapping pixels to features, it does the opposite. To examine a given activation of the convolutional network, we set all other activations in that layer to zero and pass the feature maps as input to the attached deconvolutional layer. Then we successively 1) unpool, 2) rectify, and 3) filter to reconstruct the activity in the layer below that gave rise to the chosen activation. This procedure is then repeated until the input pixel space is reached.

[...]

The procedure is similar to backpropagating a single strong activation (as opposed to ordinary gradients), i.e., computing

$$\frac{\partial h}{\partial X_n},$$

where $h$ is the element of the feature map with the strong activation and $X_n$ is the input image.
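To make the unpool, rectify, filter recipe concrete, here is a deliberately tiny sketch: a single 1-D layer (valid cross-correlation, ReLU, non-overlapping max pooling) in NumPy, with random filters standing in for a trained network. It illustrates the Zeiler and Fergus procedure under these simplifying assumptions and is not Clarifai's implementation; the function names are hypothetical. For one layer, the projection of a single kept unit equals $h$ times its gradient $\partial h / \partial X_n$ whenever $h > 0$, mirroring the quote's comparison with backpropagating one activation.

```python
import numpy as np

def forward(x, filters, pool=2):
    """One conv layer: valid cross-correlation -> ReLU -> non-overlapping max pool.

    Returns the pooled maps, the pooling "switches" (argmax positions in the
    rectified map), and the length of the rectified map.
    """
    act = np.maximum(np.stack([np.correlate(x, w, mode="valid") for w in filters]), 0.0)
    n = act.shape[1] // pool
    blocks = act[:, : n * pool].reshape(len(filters), n, pool)
    switches = blocks.argmax(axis=2) + np.arange(n) * pool
    return blocks.max(axis=2), switches, act.shape[1]

def deconv(pooled, switches, conv_len, filters):
    """Project pooled activity back to input space: 1) unpool, 2) rectify, 3) filter."""
    unpooled = np.zeros((len(filters), conv_len))
    for f in range(len(filters)):
        unpooled[f, switches[f]] = pooled[f]   # 1) unpool using the recorded switches
    rectified = np.maximum(unpooled, 0.0)      # 2) rectify
    # 3) filter: 'full' convolution with each filter is the transpose of 'valid' correlation
    return sum(np.convolve(rectified[f], filters[f], mode="full") for f in range(len(filters)))

def visualize_unit(x, filters, f, p):
    """Keep only pooled unit (f, p), zero all others, and project it to input space."""
    pooled, switches, conv_len = forward(x, filters)
    only = np.zeros_like(pooled)
    only[f, p] = pooled[f, p]
    return deconv(only, switches, conv_len, filters), pooled[f, p], switches[f, p]

# Usage: random input and filters stand in for a real image and trained weights.
rng = np.random.default_rng(0)
x = rng.normal(size=16)
filters = rng.normal(size=(3, 4))
recon, h, s = visualize_unit(x, filters, f=1, p=2)
```

In a deep network the same three steps are simply repeated layer by layer, which is what produces the sharp, pixel-level visualizations below.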

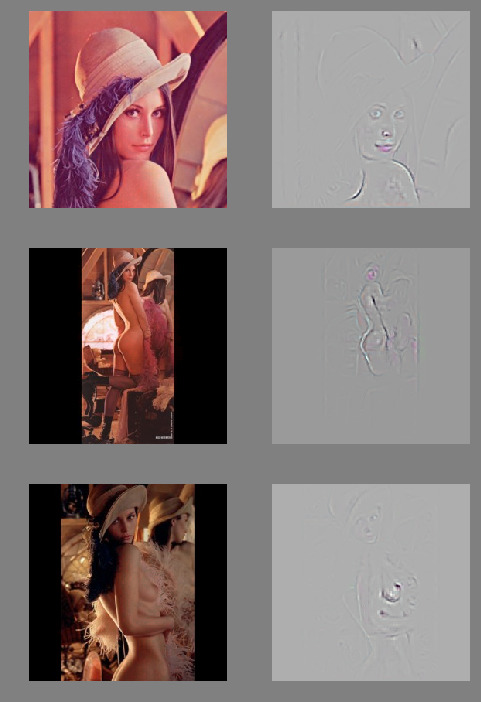

Here is the output of the deconvolutional network when asked to show what changes would make Lena’s photo look more like pornography (note: the deconvolutional network used here only works with square images, so we padded Lena’s photo to a square):
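The post does not say how the padding was done; a minimal sketch, assuming constant (black) padding that keeps the original image centered:

```python
import numpy as np

def pad_to_square(img, fill=0):
    """Pad an HxW or HxWxC array with a constant so that height == width, image centered."""
    h, w = img.shape[:2]
    side = max(h, w)
    top = (side - h) // 2
    left = (side - w) // 2
    pad = [(top, side - h - top), (left, side - w - left)] + [(0, 0)] * (img.ndim - 2)
    return np.pad(img, pad, mode="constant", constant_values=fill)
```

Padding rather than cropping keeps every part of the original photo visible to the network.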

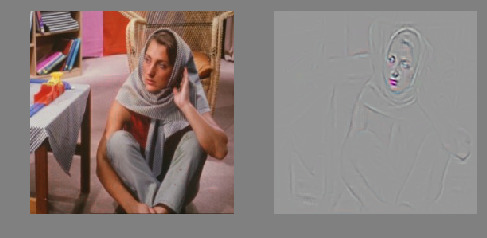

Barbara is a more modest (SFW) version of Lena. According to the network, she could be made more NSFW by adding red to her lips.

This still of Ursula Andress as Honey Ryder in the James Bond film “Dr. No” topped a 2003 poll of the “sexiest moments in cinema history”.

The striking result of these experiments is that the network learned that red lips and navels are indicators of NSFW content. Most likely, this means our “SFW” training set did not contain enough images of red lips and navels. Had we evaluated the model only by examining precision/recall and ROC curves (shown below; test set of 428,271 images), we would never have discovered this, because our test set has the same gap. This illustrates a fundamental difference between rule-based classifiers and modern AI research: instead of hand-engineering features, we reshape the training data until the learned features improve.
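For reference, an ROC curve like the one mentioned above is computed only from a model's scores and the ground-truth labels, which is why it cannot reveal a bias that the training and test sets share. A minimal sketch (the toy scores and labels are made up; in practice a library routine such as scikit-learn's `roc_curve` would normally be used):

```python
import numpy as np

def roc_curve(scores, labels):
    """Return (FPR, TPR) arrays, sweeping the decision threshold from high to low."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    order = np.argsort(-scores, kind="mergesort")
    s, y = scores[order], labels[order]
    tps = np.cumsum(y)        # true positives when cutting after each position
    fps = np.cumsum(1 - y)    # false positives likewise
    # One ROC point per distinct threshold (the last position of each tied run).
    idx = np.r_[np.nonzero(np.diff(s))[0], len(s) - 1]
    tpr = np.r_[0, tps[idx]] / tps[-1]
    fpr = np.r_[0, fps[idx]] / fps[-1]
    return fpr, tpr

def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))

# Toy example: 3 of the 4 (positive, negative) pairs are ranked correctly.
fpr, tpr = roc_curve([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
print(auc(fpr, tpr))
```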

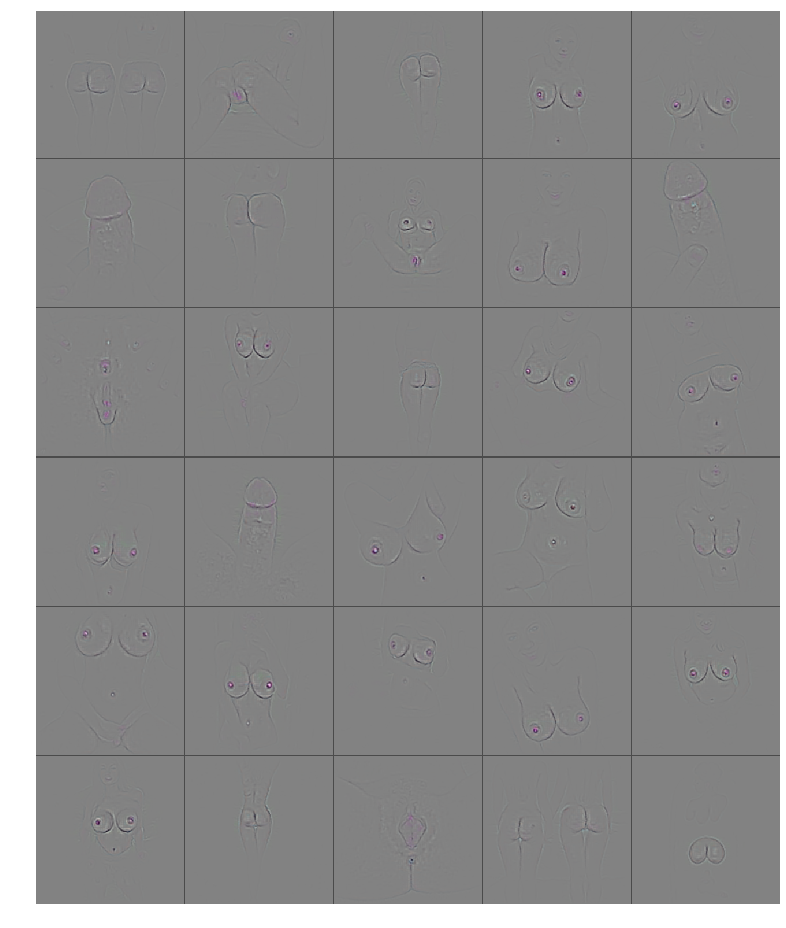

Finally, as a sanity check, we ran the deconvolutional network on hardcore pornography to make sure that the learned features really correspond to objects that are obviously NSFW.

Here we clearly see that the convolutional neural network correctly learned the objects “penis”, “anus”, “vagina”, “nipple”, and “buttocks”, exactly the objects our model should recognize. Moreover, the learned features are far more detailed and complex than anything researchers could describe by hand, which explains the significant success we have had using convolutional neural networks to recognize NSFW images.

Source: https://habr.com/ru/post/282071/
