How to degrade the performance of your application: typical developer mistakes

Performance is one of the most important non-functional requirements of any application. If you are reading this article, you are using an application such as a web browser or a document reader, and you know how much performance matters. In this article I will talk about application performance and about three developer mistakes that stand in the way of high application performance.

It doesn't matter what programming experience you have: perhaps you have just recently graduated from school or have many years of experience, but when the need arises to develop a program, you will most likely try to find a code that has already been developed earlier. It is desirable, of course, that this code be in the same programming language that you are going to use.

There is nothing wrong. This approach often leads to faster development. On the other hand, while you lose the opportunity to learn something new. When using this approach, it is extremely rare to find time to properly analyze the code and understand not only the algorithm, but also the inner workings of each line of code.

')

This is one example of a situation where we, the developers, are going the wrong way. However, there are many such ways. For example, when I was younger and had just begun my acquaintance with software development, I tried to imitate my boss in everything: everything he did seemed to me flawless and absolutely correct. When I needed to do something, I watched the boss do the same, and tried to repeat all his actions as precisely as possible. Many times it happened that I simply did not understand why his approach worked, but does it really matter? The main thing is that everything works!

There is a type of developers who will work as hard as possible to solve their task. As a rule, they are looking for ready-made components, components, blocks, put all these pieces together and ready! Mission accomplished! Such developers rarely take the time to study and understand the code snippets they find, and they don’t care at all about such “minor” things as scalability, ease of maintenance or performance.

Another scenario is possible in which they will not understand exactly how each component of the program works: if the developers have never encountered problems. If you are using a technology for the first time and you have difficulties, then you study this technology in detail and eventually find out how it works.

Let's look at a few examples that will help us understand the difference between understanding technology and its usual use. Since I am mainly engaged in the development of web-based solutions. NET *, then let's talk about it.

Consider the following code example. It's simple. The code just updates the style of a single element in the DOM. The problem (now in modern browsers this problem is no longer so relevant, but it is quite suitable for illustrating my thought) is that the code bypasses the DOM tree three times. If the code is repeated, and the document is quite large and complex, the application performance will significantly decrease.

To fix this problem is quite simple. Look at the following sample code. Before working with an object in the variable myField , a direct link is held. The new code is more compact, it is more convenient to read and understand, and it works faster, because access to the DOM tree is carried out only once.

Consider another example. This example is taken from here .

The following figure shows two equivalent code fragments. Each of them creates a list with 1000 li items. The code on the right adds an id attribute to each li element, and the code on the left adds a class attribute to each li element.

As you can see, the second part of the code snippet simply refers to each of the thousand li elements created. I measured the speed in Internet Explorer * 10 and Chrome * 48 browsers: the average execution time of the code shown on the left was 57 ms, and the execution time of the code shown on the right was only 9 ms, significantly less. The difference is huge, moreover, in this case, it is due only to different ways of access to the elements.

This example should be taken very carefully. In this example, there are still many interesting moments for analysis, for example, the order of checking selectors (here it is right to left order). If you are using jQuery *, then read about the DOM context. For information on general performance principles of CSS selectors, see here .

The final example is javascript code. This example is more memory-related, but it helps to understand how things really work. Excessive memory consumption in browsers will result in poor performance.

The following figure shows two different ways to create an object with two properties and one method. On the left, the class constructor adds two properties to the object, and an additional method is added through the class prototype. On the right, the constructor immediately adds both properties and a method.

After creating such an object, thousands of objects are created using these two methods. If you compare the amount of memory used by these objects, you will notice a difference in the use of Shallow Size and Retained Size memory areas in Chrome. The prototype approach uses about 20% less memory (20 KB compared to 24 KB) in the Shallow Size area and 66% less in the Retained Memory area (20 KB compared to 60 KB).

For more information about the Shallow Size and Retained Size memory features, see here .

You can create objects, knowing how to use the desired technology. By understanding how this or that technology works, you can optimize applications in terms of memory management and performance.

In preparation for speaking at a conference on this topic, I decided to prepare an example with server code. I decided to use LINQ * because LINQ was widely used in the .NET world for new developments and has significant potential for productivity gains.

Consider the following common scenario. The following figure shows two functionally equivalent code fragments. The purpose of the code is to create a list of all departments and all courses for each department in a school. In the code called Select N + 1, we display a list of all departments, and for each department a list of courses. This means that if there are 100 branches, we will need 1 + 100 calls to the database.

This problem can be solved differently. One of the simplest approaches is shown in the code snippet on the right side. When using the Include method (in this case, I will use a hard-coded string for ease of understanding) there will be only one call to the database, and this single call will issue all the departments and courses at once. In this case, when performing the second foreach cycle, all the collections of Courses for each branch will already be in memory.

Thus, you can improve performance hundreds of times by simply avoiding the Select N + 1 approach.

Consider a less obvious example. In the figure below, the whole difference between the two code fragments lies in the data type of the destination list in the second line. Here you may be surprised: does the destination data type affect anything? If you examine the operation of this technology, then you will understand: the type of the destination data actually determines the moment at which the database request is made. And from this, in turn, depends on the time of application of filters for each request.

In the Code # 1 example, where the IEnumerable data type is expected, the query is executed immediately before executing Take Employee (10) . This means that if there are 1000 employees, they will all be obtained from the database, after which 10 of them will be selected.

In the Code # 2 example, the query is executed after the execution of Take Employee (10) . In this case, a total of 10 records are retrieved from the database. The following article explains in detail the differences when using different types of collections.

In SQL, many features need to be explored to achieve the highest database performance. Working with SQL Server is not easy: you need to understand how data is used, which tables are requested most often and by which fields.

However, a number of general principles can be applied to improve performance, for example:

For brevity, I will not give specific examples, but these principles can be used, analyzed and optimized.

So, how should we, the developers, change the way we think in order to avoid the erroneous approach number 1?

I have been developing on .NET since version 1.0. I know in great detail all the features of the work of web forms, as well as many .NET client libraries (I changed some of them myself). When I found out about the release of the Model View Controller (MVC), I didn’t want to use it: “We don’t need it”.

In fact, this list can be continued for quite a long time. I mean a list of things that I didn’t like at first, and which I now often and confidently use. This is just one example of a situation where developers are leaning in favor of some technical solutions and avoid others, which makes it difficult to achieve higher performance.

I often hear discussions about either LINQ connections to objects in the Entity Framework, or SQL stored procedures when querying data. People are so used to using one or the other solution that they try to use them everywhere.

Another factor affecting the preferences of developers (the predominant choice of some technologies and the rejection of others) is a personal attitude towards open source software. Developers often choose not the solution that is best suited for the current situation, but the one that best fits with their philosophy of life.

Sometimes external factors (for example, tight deadlines) force us to make non-optimal decisions. It takes time to choose the best technology: you need to read, try, compare and draw conclusions. When developing a new product or a new version of an existing product, it often happens that we are already late: “The deadline is yesterday”. Two obvious ways out of this situation are: request extra time or work overtime for self-education.

How we, the developers, must change our way of thinking in order to avoid the erroneous approach # 2.

So, we put a lot of effort and created the best application. It's time to move on to deploying it. We all tested. On our machines, everything works perfectly. All 10 testers were completely satisfied with the application itself, and its performance.

Therefore, now certainly no problems can arise, everything is in perfect order?

Not at all, it may not be all right!

Have you asked yourself the following questions?

I understand that not all of these issues relate directly to the infrastructure, but everything has its time. As a rule, the final actual conditions in which our application will work differ from the conditions on the servers at the intermediate stage.

Let's look at several possible factors that may affect the performance of our application. Suppose that users are in different countries of the world. Perhaps, the application will work very quickly, without any complaints from users in the US, but users from Malaysia will complain of low speed.

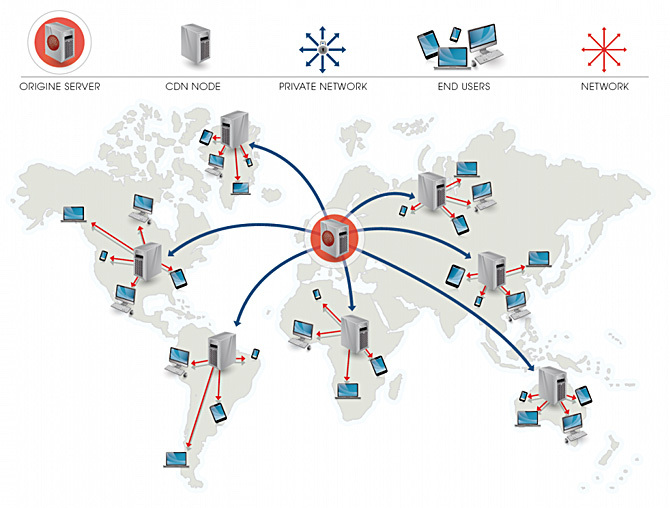

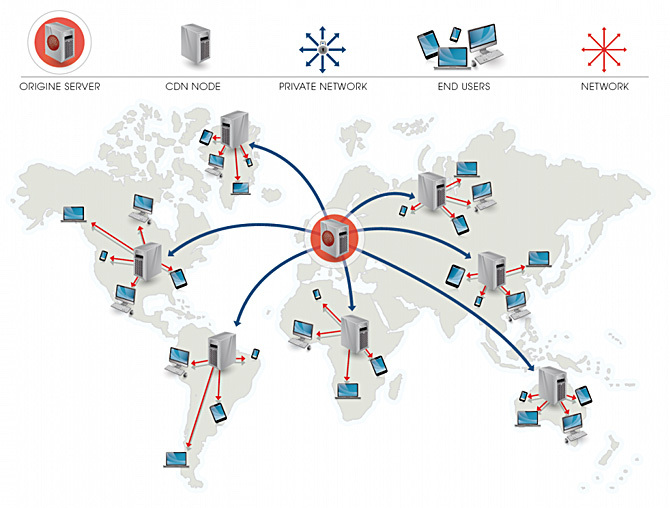

To solve such problems in different ways. For example, you can use data distribution networks (CDNs) and place static files in them. After that, loading pages from users who are in different places will be faster. What I am talking about is shown in the following figure.

Another situation is possible: suppose that applications run on servers that are running SQL Server DBMS and a web server simultaneously. In this case, two server systems run simultaneously on the same physical server with heavy CPU load. How to solve this problem? If we are talking about the .NET application on the Internet Information Services (IIS) server, then we can use the corresponding processors. Processor matching is the binding of one process to one or more specific computer CPU cores.

For example, suppose that a computer with four processors is running SQL Server and IIS Web Server.

If you allow the operating system to decide which CPU will be used for SQL Server, and which for IIS, resources may be allocated differently. It is possible that two processors will be assigned to each server load.

And it is possible that all four processors will be allocated only to one load.

In this case, a deadlock may occur: IIS may serve too many requests and will occupy all four processors, while some requests may require access to SQL, which in this case will be impossible. In practice, such a scenario is unlikely, but it perfectly illustrates my point.

Another possible situation: one process will not run all the time on the same CPU. There will often be a context switch. Frequent context switching will lead to a decrease in the performance of the server itself first, and then the applications running on that server.

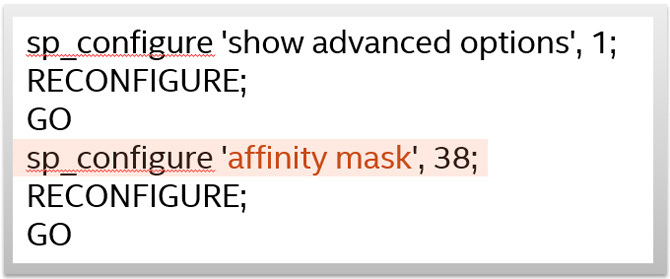

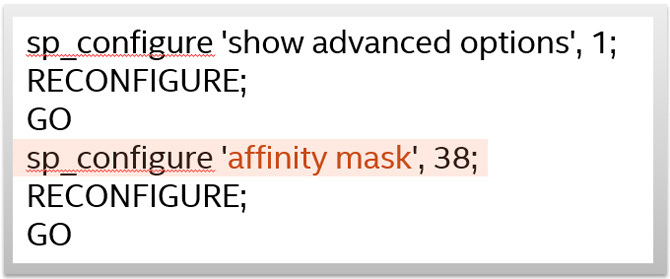

One way to solve this problem is to use “processor matching” for IIS and SQL. In this case, we decide how many processors are needed for SQL Server, and how many - for IIS. To do this, you need to configure the settings in the Processor Compatibility section of the CPU category in IIS and the Compliance Mask in the SQL Server database. Both of these cases are shown below.

This topic can be continued further with other infrastructure features related to performance improvements, for example when using web farms.

How do we, the developers, have to change our way of thinking in order to avoid the erroneous approach number 3?

You have nothing to reproach yourself for. Not everything depends on you. Indeed there is no time. All families, all hobbies, all need to rest.

But it is important to understand that good work is not only writing good code. We all at some time used some or all of the erroneous approaches described.

Here's how to avoid them.

Wrong approach no. 1. Insufficient understanding of development technologies.

Whatever your programming experience, whether you graduated recently or have many years behind you, when you need to develop a program you will most likely start by looking for code that someone has already written, ideally in the same programming language you plan to use.

There is nothing wrong with that. This approach often speeds up development. On the other hand, you lose the opportunity to learn something new. With this approach, you rarely find the time to analyze the code properly and understand not only the algorithm but also how each line of code actually works.

This is one example of a situation where we developers go the wrong way, and there are many others. For example, when I was younger and just getting acquainted with software development, I tried to imitate my boss in everything: everything he did seemed flawless and absolutely correct to me. When I needed to do something, I watched how my boss did it and tried to repeat his actions as precisely as possible. Many times I simply did not understand why his approach worked, but did that really matter? The main thing was that everything worked!

There is a type of developer who will do whatever it takes to finish the task. As a rule, they look for ready-made components and code blocks, put all the pieces together, and they're done! Mission accomplished! Such developers rarely take the time to study and understand the code snippets they find, and they don’t care at all about such “minor” things as scalability, maintainability, or performance.

There is another scenario in which developers never learn exactly how each component of the program works: when they have never run into problems with it. When you use a technology for the first time and run into difficulties, you study it in detail and eventually find out how it works.

Let's look at a few examples that show the difference between understanding a technology and simply using it. Since I mainly develop web-based solutions on .NET*, that is what I will talk about.

▍JavaScript * and DOM

Consider the following code example. It is simple: it just updates the style of a single element in the DOM. The problem (in modern browsers it is much less relevant, but it illustrates my point well) is that the code searches the DOM tree three times. If this code runs repeatedly and the document is large and complex, application performance will drop significantly.

The fix is simple. Look at the following sample code. Before working with the element, the code stores a direct reference to it in the variable myField. The new code is more compact, easier to read and understand, and faster, because the DOM tree is accessed only once.
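The idea can be sketched in TypeScript. To keep the sketch runnable outside a browser, it assumes a hypothetical FakeDocument class as a stand-in for the browser's document: its getElementById performs a fresh search on every call and counts the searches. The element id myField comes from the text; the style values are arbitrary.

```typescript
// Minimal stand-in for an element: only an id and a style bag.
interface FakeElement {
  id: string;
  style: Record<string, string>;
}

// Hypothetical stand-in for `document`: every getElementById call is a
// fresh linear search, and `lookups` records how many searches were made.
class FakeDocument {
  lookups = 0;
  constructor(readonly elements: FakeElement[]) {}

  getElementById(id: string): FakeElement | null {
    this.lookups++;
    for (const el of this.elements) {
      if (el.id === id) return el;
    }
    return null;
  }
}

// Before: the same element is looked up three times.
function styleWithRepeatedLookups(doc: FakeDocument): void {
  doc.getElementById("myField")!.style.color = "red";
  doc.getElementById("myField")!.style.fontWeight = "bold";
  doc.getElementById("myField")!.style.border = "1px solid black";
}

// After: look the element up once and reuse the reference.
function styleWithCachedReference(doc: FakeDocument): void {
  const myField = doc.getElementById("myField");
  if (myField === null) return;
  myField.style.color = "red";
  myField.style.fontWeight = "bold";
  myField.style.border = "1px solid black";
}

const before = new FakeDocument([{ id: "myField", style: {} }]);
styleWithRepeatedLookups(before);

const after = new FakeDocument([{ id: "myField", style: {} }]);
styleWithCachedReference(after);

console.log(before.lookups, after.lookups); // 3 1
```

In a real page the same pattern is simply `const myField = document.getElementById("myField");` followed by all further work on `myField`.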

Consider another example. This example is taken from here.

The following figure shows two equivalent code fragments. Each of them creates a list of 1,000 li items. The code on the right adds an id attribute to each li element, and the code on the left adds a class attribute.

As you can see, the second part of each snippet simply accesses each of the thousand li elements it created. I measured the speed in Internet Explorer* 10 and Chrome* 48: on average, the code on the left ran in 57 ms, while the code on the right took only 9 ms. The difference is large, and in this case it is due solely to the different ways of accessing the elements.
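One way to think about this gap is a toy cost model, which is an assumption rather than a description of any particular browser engine: an id lookup is answered from an index in one step, while a class lookup scans the list until it finds a match. The sketch below assumes, hypothetically, that every li gets a unique id and a unique class name derived from its index, and counts the steps each strategy needs to reach all 1,000 items.

```typescript
// Toy cost model (an assumption, not how a real engine works):
// ids are resolved through an index, class names by scanning the list.
interface ListItem {
  id: string;
  className: string;
}

const COUNT = 1000;
const items: ListItem[] = [];
const idIndex: { [id: string]: ListItem } = {};

// Build 1,000 items; the id index is kept up to date as items are added.
for (let i = 0; i < COUNT; i++) {
  const li: ListItem = { id: `item${i}`, className: `item${i}` };
  items.push(li);
  idIndex[li.id] = li;
}

let idSteps = 0;
function findById(id: string): ListItem | undefined {
  idSteps++; // a single index probe
  return idIndex[id];
}

let classSteps = 0;
function findByClass(className: string): ListItem | undefined {
  for (const li of items) {
    classSteps++; // one comparison per item visited
    if (li.className === className) return li;
  }
  return undefined;
}

// Access every item once with each strategy.
for (let i = 0; i < COUNT; i++) {
  findById(`item${i}`);
  findByClass(`item${i}`);
}

console.log(idSteps, classSteps); // 1000 500500
```

Real engines optimize class lookups as well, so treat this model as an illustration of why the access path matters, not as a prediction of the measured times.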

This example should be interpreted with care. It raises many other interesting points worth analyzing, for example, the order in which selectors are evaluated (right to left). If you use jQuery*, read about the DOM context. For general principles of CSS selector performance, see here.
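Right-to-left evaluation can be illustrated with a simplified matcher for descendant selectors such as `ul.menu li`. The ToyNode and Compound types are hypothetical and support only a tag name and one class per selector part. The matcher first checks the rightmost part (the key selector) against the element itself and walks up the ancestors only if that succeeds, so for this selector any element that is not an li is rejected immediately.

```typescript
// Hypothetical, simplified node: a tag, its classes and a parent pointer.
interface ToyNode {
  tag: string;
  classes: string[];
  parent: ToyNode | null;
}

// One part of a selector, e.g. "ul.menu" -> { tag: "ul", cls: "menu" }.
interface Compound {
  tag?: string;
  cls?: string;
}

function matchesCompound(node: ToyNode, part: Compound): boolean {
  if (part.tag !== undefined && node.tag !== part.tag) return false;
  if (part.cls !== undefined && node.classes.indexOf(part.cls) === -1) return false;
  return true;
}

// Matches a descendant-only selector (parts separated by spaces) right to left.
function matchesDescendantSelector(node: ToyNode, parts: Compound[]): boolean {
  let i = parts.length - 1;
  // 1. The rightmost part (key selector) must match the node itself.
  if (!matchesCompound(node, parts[i])) return false;
  i--;
  // 2. The remaining parts must match ancestors, nearest first.
  let current = node.parent;
  while (i >= 0 && current !== null) {
    if (matchesCompound(current, parts[i])) i--;
    current = current.parent;
  }
  return i < 0;
}

// "ul.menu li"
const selector: Compound[] = [{ tag: "ul", cls: "menu" }, { tag: "li" }];

const menuList: ToyNode = { tag: "ul", classes: ["menu"], parent: null };
const menuItem: ToyNode = { tag: "li", classes: [], parent: menuList };
const wrapper: ToyNode = { tag: "div", classes: [], parent: null };
const outsideItem: ToyNode = { tag: "li", classes: [], parent: wrapper };

console.log(matchesDescendantSelector(menuItem, selector)); // true
console.log(matchesDescendantSelector(outsideItem, selector)); // false
```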

The final JavaScript example is more about memory, but it helps to understand how things really work. Excessive memory consumption in the browser leads to poor performance.

The following figure shows two different ways to create an object with two properties and one method. On the left, the constructor adds the two properties to the object, and the method is added through the prototype. On the right, the constructor adds both the properties and the method directly.

Thousands of objects are then created with each approach. If you compare the memory they use in Chrome, you will notice a difference in Shallow Size and Retained Size. The prototype approach uses about 17% less memory in Shallow Size (20 KB compared to 24 KB) and about two-thirds less in Retained Size (20 KB compared to 60 KB).

For more information about Shallow Size and Retained Size, see here.
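The reason for the difference can be seen without a profiler. In the sketch below, the class names are hypothetical: in PersonShared, fullName is a class method, so it lives once on the prototype (the left-hand version); in PersonOwnMethod, the constructor assigns a new arrow function to each instance (the right-hand version). After creating 1,000 objects of each kind, the sketch checks whether instances share the same function object.

```typescript
// Left-hand version: fullName is a class method, stored once on the prototype.
class PersonShared {
  constructor(public firstName: string, public lastName: string) {}

  fullName(): string {
    return `${this.firstName} ${this.lastName}`;
  }
}

// Right-hand version: the constructor gives every instance its own function.
class PersonOwnMethod {
  fullName: () => string;

  constructor(public firstName: string, public lastName: string) {
    this.fullName = () => `${this.firstName} ${this.lastName}`;
  }
}

const shared: PersonShared[] = [];
const own: PersonOwnMethod[] = [];
for (let i = 0; i < 1000; i++) {
  shared.push(new PersonShared("Ada", `Lovelace${i}`));
  own.push(new PersonOwnMethod("Ada", `Lovelace${i}`));
}

// One function object shared by all instances vs. 1,000 separate closures.
console.log(shared[0].fullName === shared[1].fullName); // true
console.log(own[0].fullName === own[1].fullName); // false
```

Every PersonOwnMethod instance carries its own closure, which is the kind of per-object overhead that shows up as extra retained memory in a heap snapshot; the prototype version pays for one function regardless of how many objects exist.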

Knowing how to use a technology is enough to create objects. Understanding how that technology works lets you optimize applications for memory usage and performance.

▍LINQ

While preparing a conference talk on this topic, I decided to include an example of server-side code. I chose LINQ* because it is widely used for new development in the .NET world and has significant potential for performance gains.

Consider the following common scenario. The following figure shows two functionally equivalent code fragments. Their purpose is to list all departments of a school and all courses for each department. In the code labeled Select N + 1, we list all departments and then, for each department, its courses. This means that with 100 departments, we need 1 + 100 calls to the database.

This problem can be solved in different ways. One of the simplest is shown in the code snippet on the right. With the Include method (here I use a hard-coded string for clarity), there is only one call to the database, and this single call returns all departments and courses at once. As a result, when the second foreach loop runs, the Courses collection of each department is already in memory.

Thus, simply by avoiding the Select N + 1 pattern, you can reduce the number of database calls in this example from 101 to 1, which can improve performance dramatically.
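The difference in round trips can be modeled with a small in-memory stand-in for the database. FakeDb, its query methods and the sample data are hypothetical and are not Entity Framework APIs; queryDepartmentsWithCourses plays the role of the Include call (one query that returns departments together with their courses), while the Select N + 1 version issues one extra query per department.

```typescript
// Hypothetical data shapes for the school example.
interface Course {
  title: string;
  departmentId: number;
}

interface Department {
  id: number;
  name: string;
  courses?: Course[];
}

// In-memory stand-in for a database; every query method counts one round trip.
class FakeDb {
  roundTrips = 0;
  constructor(readonly departments: Department[], readonly courses: Course[]) {}

  queryDepartments(): Department[] {
    this.roundTrips++;
    return this.departments.map((d) => ({ id: d.id, name: d.name }));
  }

  queryCoursesFor(departmentId: number): Course[] {
    this.roundTrips++;
    return this.courses.filter((c) => c.departmentId === departmentId);
  }

  // One query that returns departments with their courses attached.
  queryDepartmentsWithCourses(): Department[] {
    this.roundTrips++;
    return this.departments.map((d) => ({
      id: d.id,
      name: d.name,
      courses: this.courses.filter((c) => c.departmentId === d.id),
    }));
  }
}

// Select N + 1: one query for departments, then one per department.
function listSelectNPlusOne(db: FakeDb): string[] {
  const lines: string[] = [];
  for (const dept of db.queryDepartments()) {
    for (const course of db.queryCoursesFor(dept.id)) {
      lines.push(`${dept.name}: ${course.title}`);
    }
  }
  return lines;
}

// Eager loading: a single query; courses are already in memory in the inner loop.
function listEagerLoaded(db: FakeDb): string[] {
  const lines: string[] = [];
  for (const dept of db.queryDepartmentsWithCourses()) {
    for (const course of dept.courses || []) {
      lines.push(`${dept.name}: ${course.title}`);
    }
  }
  return lines;
}

// 100 departments with two courses each.
function makeDb(): FakeDb {
  const departments: Department[] = [];
  const courses: Course[] = [];
  for (let id = 1; id <= 100; id++) {
    departments.push({ id, name: `Dept ${id}` });
    courses.push({ title: `Course ${id}-A`, departmentId: id });
    courses.push({ title: `Course ${id}-B`, departmentId: id });
  }
  return new FakeDb(departments, courses);
}

const nPlusOneDb = makeDb();
const eagerDb = makeDb();
const nPlusOneLines = listSelectNPlusOne(nPlusOneDb);
const eagerLines = listEagerLoaded(eagerDb);

console.log(nPlusOneDb.roundTrips, eagerDb.roundTrips); // 101 1
console.log(nPlusOneLines.length === eagerLines.length); // true
```

Both functions produce the same 200 lines; only the number of round trips differs, which is what matters once each trip crosses the network.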

Consider a less obvious example. In the figure below, the only difference between the two code fragments is the data type of the target variable in the second line. You may be surprised: can the target type matter at all? If you study how this technology works, you will see that the target type actually determines when the database query is executed, and that in turn determines whether each filter is applied in the database or in memory.

In Code #1, where the target type is IEnumerable, the query is executed before Take(10) is applied. This means that if there are 1,000 employees, all of them are retrieved from the database, and only then are 10 of them selected.

In Code #2, the query is executed after Take(10) is applied, so only 10 records are retrieved from the database. The following article explains in detail the differences between the various collection types.
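The effect of the execution point can be illustrated with a toy query object. ToyQueryable and FakeEmployeeTable are hypothetical and only mimic the idea, not LINQ itself: composing take(10) before execution changes the query that is sent (as described for Code #2), while materializing first and then slicing in memory transfers every row (as described for Code #1). rowsTransferred counts how many rows come back from the simulated database.

```typescript
interface Employee {
  id: number;
  name: string;
}

// Hypothetical table: execute() simulates sending a query to the server,
// and rowsTransferred counts how many rows come back.
class FakeEmployeeTable {
  rowsTransferred = 0;
  constructor(private readonly rows: Employee[]) {}

  execute(limit?: number): Employee[] {
    const result = limit === undefined ? this.rows.slice() : this.rows.slice(0, limit);
    this.rowsTransferred += result.length;
    return result;
  }
}

// Hypothetical query object: take() only changes the query; nothing runs
// until toArray() is called (the idea behind deferred execution).
class ToyQueryable {
  constructor(private readonly table: FakeEmployeeTable, private readonly limit?: number) {}

  take(n: number): ToyQueryable {
    const newLimit = this.limit === undefined ? n : Math.min(this.limit, n);
    return new ToyQueryable(this.table, newLimit);
  }

  toArray(): Employee[] {
    return this.table.execute(this.limit);
  }
}

function makeTable(): FakeEmployeeTable {
  const rows: Employee[] = [];
  for (let i = 1; i <= 1000; i++) {
    rows.push({ id: i, name: `Employee ${i}` });
  }
  return new FakeEmployeeTable(rows);
}

// Like Code #1: materialize everything first, then take 10 in memory.
const table1 = makeTable();
const firstTenInMemory = new ToyQueryable(table1).toArray().slice(0, 10);

// Like Code #2: compose take(10) into the query, then execute it.
const table2 = makeTable();
const firstTenInDb = new ToyQueryable(table2).take(10).toArray();

console.log(table1.rowsTransferred, table2.rowsTransferred); // 1000 10
```

Both versions return the same ten employees; only the amount of data moved from the database differs.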

▍SQL Server

In SQL, there are many features to study in order to achieve the best database performance. Working with SQL Server is not easy: you need to understand how the data is used, which tables are queried most often, and by which columns.

However, a number of general principles can be applied to improve performance, for example:

- clustered or nonclustered indexes;

- the correct order of JOIN clauses;

- understanding when to use #temp tables and table variables;

- use of views or indexed views;

- use of precompiled statements.

For brevity, I will not give specific examples, but these principles are worth studying, analyzing, and applying.

▍ Change the way of thinking

So, how should we developers change the way we think in order to avoid wrong approach no. 1?

- Stop thinking: "I am a front-end developer" or "I am a back-end developer." You may be an engineer who specializes in one area, but do not use that specialization to shut yourself off from other areas.

- Stop thinking: “Let a specialist do it, they will get it done faster.” In today's agile world, we must be cross-functional and explore the areas where our knowledge is lacking.

- Stop telling yourself: "I don't understand this." Of course, if it were simple, everyone would have become an expert long ago. Don't hesitate to spend time reading, asking for advice, and studying. It is not easy, but sooner or later it will pay off.

- Stop saying: "I have no time." I understand this objection; it comes up very often. But a colleague at Intel once told me: "If you are really interested in something, you will find time for it." I am writing this article, for example, at midnight on a Saturday!

Wrong approach no. 2. Preference for certain technologies

I have been developing on .NET since version 1.0. I know every detail of how Web Forms work, as well as many .NET client libraries (I modified some of them myself). When I heard about the release of Model View Controller (MVC), I didn’t want to use it: “We don’t need it.”

In fact, this list could go on for a long time: a list of things I disliked at first and now use often and confidently. This is just one example of how developers lean toward some technical solutions and avoid others, which makes it harder to achieve better performance.

I often hear debates about whether to use LINQ to Entities in the Entity Framework or SQL stored procedures for querying data. People are so used to one solution or the other that they try to use it everywhere.

Another factor shaping developers' preferences (favoring some technologies and rejecting others) is their personal attitude toward open-source software. Developers often choose not the solution that best suits the current situation, but the one that best fits their philosophy of life.

Sometimes external factors (for example, tight deadlines) force us to make suboptimal decisions. Choosing the best technology takes time: you need to read, try, compare, and draw conclusions. When developing a new product or a new version of an existing one, we are often already behind schedule: “The deadline was yesterday.” Two obvious ways out of this situation are to ask for extra time or to spend extra hours on self-education.

▍ Change the way of thinking

How should we developers change our way of thinking in order to avoid wrong approach no. 2?

- Stop saying: “This method has always worked,” “We have always done it this way,” and so on. You need to know about and use other options, especially when they are better.

- Do not try to force unsuitable solutions! Developers sometimes persistently apply a technology that does not and cannot produce the desired results. They spend a lot of time and effort trying to refine the technology and bend it toward the goal, without considering other options. This thankless process can be called “pulling an owl onto a globe”: the urge to adapt an existing unsuitable solution at any cost instead of focusing on the problem and finding another, more elegant and faster solution.

- "I am busy." Of course, we never have enough time to learn new things. I understand this objection.

Wrong approach no. 3. Insufficient understanding of the application infrastructure

So, we put in a lot of effort and created the best application. It's time to deploy it. We tested everything. On our machines, everything works perfectly. All 10 testers were completely satisfied with both the application and its performance.

So now nothing can possibly go wrong, and everything is in perfect order?

Not at all. Things may still go wrong!

Have you asked yourself the following questions?

- Is the application suitable for a load-balanced environment?

- Will the application be hosted in the cloud, with many instances of it running?

- How many other applications run on the machine where my application will be deployed?

- What other programs run on this server? SQL Server? Reporting Services? Any SharePoint* extensions?

- Where are the end users? Are they distributed around the world?

- How many users will my application have over the next five years?

I understand that not all of these issues relate directly to the infrastructure, but everything has its time. As a rule, the final actual conditions in which our application will work differ from the conditions on the servers at the intermediate stage.

Let's look at several possible factors that may affect the performance of our application. Suppose that users are in different countries of the world. Perhaps, the application will work very quickly, without any complaints from users in the US, but users from Malaysia will complain of low speed.

To solve such problems in different ways. For example, you can use data distribution networks (CDNs) and place static files in them. After that, loading pages from users who are in different places will be faster. What I am talking about is shown in the following figure.
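On the application side, using a CDN often comes down to pointing static asset URLs at the CDN host while dynamic pages keep coming from the origin server. The helper below is a hypothetical sketch: the host https://cdn.example.com, the assetUrl function and the list of static extensions are assumptions, and a real setup also has to publish the files to the CDN and handle cache invalidation.

```typescript
// Hypothetical CDN host and list of file types treated as static.
const CDN_BASE = "https://cdn.example.com";
const STATIC_EXTENSIONS = [".js", ".css", ".png", ".jpg", ".gif", ".svg", ".woff2"];

// Static assets are served from the CDN; everything else stays on the origin.
function assetUrl(path: string): string {
  // Ignore any query string (e.g. "?v=3") when checking the extension.
  const pathOnly = path.split("?")[0].toLowerCase();
  const isStatic = STATIC_EXTENSIONS.some((ext) => pathOnly.slice(-ext.length) === ext);
  return isStatic ? CDN_BASE + path : path;
}

console.log(assetUrl("/scripts/site.js?v=3")); // https://cdn.example.com/scripts/site.js?v=3
console.log(assetUrl("/Departments/Index")); // /Departments/Index
```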

Another situation is possible: suppose that applications run on servers that are running SQL Server DBMS and a web server simultaneously. In this case, two server systems run simultaneously on the same physical server with heavy CPU load. How to solve this problem? If we are talking about the .NET application on the Internet Information Services (IIS) server, then we can use the corresponding processors. Processor matching is the binding of one process to one or more specific computer CPU cores.

For example, suppose that a computer with four processors is running SQL Server and IIS Web Server.

If you allow the operating system to decide which CPU will be used for SQL Server, and which for IIS, resources may be allocated differently. It is possible that two processors will be assigned to each server load.

And it is possible that all four processors will be allocated only to one load.

In this case, a deadlock may occur: IIS may serve too many requests and will occupy all four processors, while some requests may require access to SQL, which in this case will be impossible. In practice, such a scenario is unlikely, but it perfectly illustrates my point.

Another possible situation: one process will not run all the time on the same CPU. There will often be a context switch. Frequent context switching will lead to a decrease in the performance of the server itself first, and then the applications running on that server.

One way to solve this problem is to use “processor matching” for IIS and SQL. In this case, we decide how many processors are needed for SQL Server, and how many - for IIS. To do this, you need to configure the settings in the Processor Compatibility section of the CPU category in IIS and the Compliance Mask in the SQL Server database. Both of these cases are shown below.

| CPU | Value |
|---|---|
| Limit (percent) | 0 |
| Limit Action | No action |
| Limit Interval (minutes) | 5 |
| Processor Affinity Enabled | False |
| Processor Affinity Mask | 4294967295 |
| Processor Affinity Mask (64-bit option) | 4294967295 |
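As a worked example of what the mask values mean: in an affinity mask, bit i set to 1 allows the process to run on CPU i. The sketch below builds masks for a hypothetical split on the four-processor machine from the example (SQL Server on CPUs 0 and 1, IIS on CPUs 2 and 3) and decodes the default value from the table, 4294967295, which is 0xFFFFFFFF, that is, all 32 low bits set, so no CPU is excluded. The helper names maskFor and cpusIn are illustrative only.

```typescript
// Build an affinity mask: bit i set means "may run on CPU i".
function maskFor(cpus: number[]): number {
  let mask = 0;
  for (const cpu of cpus) {
    mask |= 1 << cpu;
  }
  return mask >>> 0; // reinterpret as an unsigned 32-bit value
}

// List the CPUs enabled in a mask, checking the first cpuCount bits.
function cpusIn(mask: number, cpuCount: number): number[] {
  const cpus: number[] = [];
  for (let i = 0; i < cpuCount; i++) {
    if ((mask >>> i) & 1) cpus.push(i);
  }
  return cpus;
}

// Hypothetical split on a 4-CPU machine.
const sqlMask = maskFor([0, 1]); // binary 0011
const iisMask = maskFor([2, 3]); // binary 1100
console.log(sqlMask, iisMask, (sqlMask & iisMask) === 0); // 3 12 true

// The default value from the IIS table.
const defaultMask = 4294967295;
console.log(defaultMask.toString(16), cpusIn(defaultMask, 32).length); // ffffffff 32
```

The two masks do not overlap, so each server gets its own cores. In IIS, the mask only takes effect when Processor Affinity Enabled is set to True.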

This topic could be continued with other infrastructure aspects that affect performance, for example, web farms.

▍ Change the way of thinking

How should we developers change our way of thinking in order to avoid wrong approach no. 3?

- Stop thinking: "This is not my job." We engineers must strive to broaden our horizons in order to provide our customers with the best possible solution.

- "I am busy." Of course, we never have time. This is the most common excuse. Finding the time is what distinguishes a successful, experienced, outstanding professional.

▍ Do not make excuses!

You have nothing to blame yourself for. Not everything depends on you. There really is no time. Everyone has a family, hobbies, and the need to rest.

But it is important to understand that good work is not only about writing good code. At some point, all of us have fallen into some or all of the wrong approaches described here.

Here's how to avoid them.

- Allocate time, with a margin if necessary. When you are asked to estimate the time needed for a project, remember to set aside time for research and testing, for drawing conclusions, and for making decisions.

- Consider creating a personal test application alongside your main work. In it, you can try different solutions before deciding whether to implement them in the application you are developing. We all make mistakes sometimes.

- Find people who already know the technology and try programming together with them. When the application is deployed, work alongside a specialist who understands the infrastructure. This time will be well spent.

- Be careful with Stack Overflow! Most of my problems had already been solved there, but if you simply copy the answers without analyzing them, you will end up with an incomplete solution.

- Do not think of yourself as a narrow specialist, and do not limit your capabilities. Of course, if you manage to become an expert in some field, that is great, but you should also be able to hold your own in a conversation about areas where you are not yet an expert.

- Help others! This is probably one of the best ways to learn. If you help others solve their problems, you will ultimately save your own time, because you will already know what to do when you run into similar problems.

About the author

Alexander García is a computer engineer at Intel’s Costa Rica branch. He has 14 years of professional software development experience. His interests are very diverse, ranging from software development practices and software security to performance, data mining, and related areas. Alexander holds a master’s degree in computer science.

For more information on compiler optimization, see our optimization notice.

Source: https://habr.com/ru/post/281823/
