Hadoop performance comparison on DAS and Isilon

I already wrote about that, with the help of Isilon, you can create data lakes that can simultaneously serve several clusters with different versions of Hadoop. In that post, I mentioned that in many cases, Isilon systems run faster than traditional clusters that use DAS storage. Later this was confirmed in IDC, having banished various Hadoop benchmarks on the respective clusters. And this time I want to consider the reasons for the higher performance of Isilon clusters, as well as how it changes depending on the distribution of data and balancing within the clusters.

Test environment

- Distribution Cloudera Hadoop CDH5.

- DAS cluster of 7 nodes (one master, six workers), with eight ten-thousand disks of 300 GB each.

- Isilon cluster of 4 x410 nodes, each of which is equipped with 57 TB on disks and 3.2 TB on SSD, connected by 10 GBE.

Other details can be found in the report .

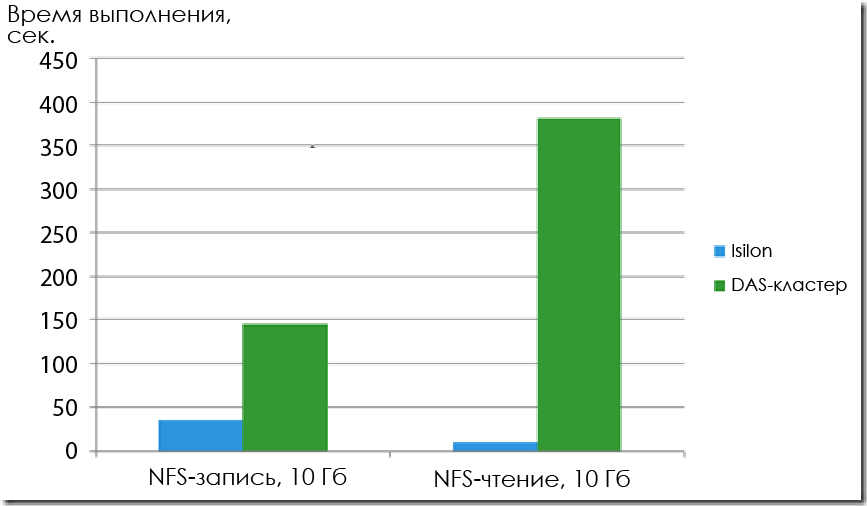

NFS access

First of all, IDC tested reading and writing with NFS access. As expected, Isilon showed MUCH better results even with four nodes.

')

The duration of copying a file of 10 GB in size (the block length is not indicated, but most likely it is 1 MB or more).

According to the record, Isilon was 4.2 times faster. This is especially important if it is important if you want to receive data via NFS. And the reading speed is 37 times faster.

Hadoop workload

During testing, three types of workload were compared using standard Hadoop benchmarks:

- TeraGen Serial Recording

- Alternate Write / Read with TeraSort

- TeraValidate sequential read

Runtime with three different load types: TeraGen, TeraSort and TeraValidate.

When recording, Isilon's performance turned out to be 2.6 times higher, and on the other two types, 1.5 times higher. Specific results are presented in the table:

| Isilon | Hadoop das | |

|---|---|---|

| Teragen | 1681 MB / s | 605 MB / s |

| TeraSort | 642 MB / s | 416 MB / sec. |

| Teravalidate | 2832 MB / s | 1828 MB / sec. |

The performance of Isilon and DAS clusters with the same configuration of computing nodes (rounded).

The data speak for themselves. Let's now see how OneFS managed to achieve such a performance advantage over the DAS cluster.

Reading Isilon Files

Although I / O operations are distributed across all nodes in a DAS cluster, each individual 64 MB block is serviced by only one node. At the same time, in Isilon, the workload is divided between nodes in smaller portions. The reading operation consists of the following steps:

- The compute node requests HDFS metadata from the Name Node service running on all Isilon nodes (without SPoF).

- The service returns the IP addresses and block numbers of each of the three nodes in the same rack in which the computing node is located. This increases the efficiency of the locality of the rack.

- The compute node requests the readout of a 64 MB HDFS block from the Data Node service running on the first node in the resulting list.

- The requested node via the internal network Infiniband collects all 128-kilobyte Isilon-blocks that make up the required 64-megabyte HDFS-block. If these blocks are no longer in the second level cache, then they are read from disks. This is a fundamental difference from the DAS cluster, in which the entire 64 MB block is read from a single node. In other words, in the Isilon cluster, the I / O operation is serviced by a much larger number of disks and processors than in the DAS cluster.

- The requested node is returned to the compute node by the full HDFS block.

Write files to Isilon

When a client wants to write a file to the cluster, the node to which the client has connected is receiving and processing the file.

- The node creates a plan for recording the file, including the FEC calculation (from the point of view of volume, this is much more economical than the DAS cluster, which usually creates three copies of each block to ensure data integrity).

- The data blocks assigned to this node are recorded in its NVRAM. The presence of NVRAM cards is one of the advantages of Isilon, they cannot be used in DAS clusters.

- The data blocks assigned to other nodes are first transmitted over the Infiniband network to the second-level caches of these nodes, and from there to NVRAM.

- As soon as the corresponding data and FEC blocks are loaded into NVRAM of all nodes, the client receives confirmation of the successful recording. This means that you can not expect to write data to the disks while all I / O operations are buffered in NVRAM.

- Data blocks are stored in the second-level caches of each node in case read requests are received.

- Then the data is written to the disks.

The myth of the importance of disk locality for Hadoop performance

Sometimes we are faced with the objections of admins, who say that disk locality is important for high performance of Hadoop. But it must be remembered that Hadoop was originally designed to work in slow networks with a star topology, which are characterized by a capacity of 1 Gbit / s. In such conditions, it remains only to strive to carry out all I / O operations within a specific server (locality of disks).

A number of facts suggest that disk locality is not related to Hadoop performance:

I. Fast networks have already become standard.

- Today, a single non-blocking 10-gigabit switch port (full duplex up to 2500 Mbit / s) has a higher throughput than the typical disk subsystem with 12 disks (360 - Mbit / s).

- There is no longer any need to maintain data locality for the sake of a satisfactory level of I / O operations.

- In Isilon, rack locality is provided, not disk, which reduces Ethernet traffic between the racks.

This illustration shows the route of I / O operations. Obviously, the bottleneck is the disks, not the network (if it is a 10 GBE network).

The route of the I / O operation in the DAS architecture. Even if you double the number of disks, they still remain a bottleneck. So the locality of disks in most cases does not affect performance.

Ii. The localization of disks is lost in the following typical situations:

- In a DAS cluster with block replication, all nodes perform the maximum number of jobs. This is extremely typical for high-loaded clusters!

- Incoming files are compressed using non-splittable codecs like gzip.

- “The analysis of Hadoop tasks on Facebook proves the difficulty of achieving disk locality: only 34% of the task is performed on the same node where the input data is stored.”

- The disk locality provides a very low latency when performing I / O operations, but it has very little value when performing batch jobs like MapReduce.

Iii. Data Replication for Performance

- In high-load traditional clusters, a high degree of replication can be useful when working with files that are often used in multiple simultaneous tasks. This is required to ensure data locality and high speed parallel reading.

- Isilon does not require a high degree of replication, because:

- No need for data locality.

- Read operations are distributed across multiple nodes with a global coherent cache (globally coherent cache), which provides a very high parallel read speed.

Other technologies affecting Isilon performance

OneFS is a very mature product that has been improved for over a decade in terms of high performance and low latency with multi-protocol access. You can find a lot of information about this on the net. I will mention only the key points:

- All write operations are buffered using backup NVRAM, which provides very high performance.

- In OneFS, the first level cache, globally coherent second level cache, and third level caches on SSD are used to speed up reading.

- You can configure access templates for the entire cluster, for a pool, or even at the folder level. This allows you to optimize and balance the pre-fetching procedure. Templates can be random, parallel or streaming.

- Work with metadata is accelerated using a third-level cache, or configured separately. OneFS stores all metadata on SSD.

Finally

Isilon is a horizontally scalable NAS storage with a distributed file system designed for intensive workloads like Hadoop. HDFS is implemented as a protocol and Name Node and Data Node services, access to which is provided on all nodes. IDC performance testing showed a 2.5-fold advantage of the Isilon cluster over the DAS cluster. Thanks to advances in networking, disk locality does not affect the operation of Hadoop on an Isilon system. In addition to performance, Isilon has a number of other advantages, like more efficient use of disk space and various features typical of corporate storage. Moreover, compute nodes and storage nodes can be scaled independently of each other. You can also access the same data from several different versions and distributions of Hadoop at the same time.

Source: https://habr.com/ru/post/281799/

All Articles