Intel RealSense Technology in Ombre Fabula: Gesture Control

Ombre Fabula is a prototype application that combines the traditions of European and Asian shadow theater. It uses the Intel RealSense SDK to create an interactive game in which shadows are controlled with hand gestures. During development, the game's creator, Thi Binh Minh Nguyen, and members of the design company Prefrontal Cortex had to overcome a number of difficulties. In particular, they needed the Intel RealSense camera to recognize a variety of hand gestures accurately, which they achieved with their own blob detection algorithm. Getting this right took extensive testing with many users.

Thi Binh Minh Nguyen originally conceived Ombre Fabula as a bachelor's degree project. With the help of the design company Prefrontal Cortex, the project was then turned into an interactive installation that runs on a PC or laptop equipped with a user-facing camera. Minh explains that the team wanted to take the project to a new level of interactivity and erase the boundary between audience and player.

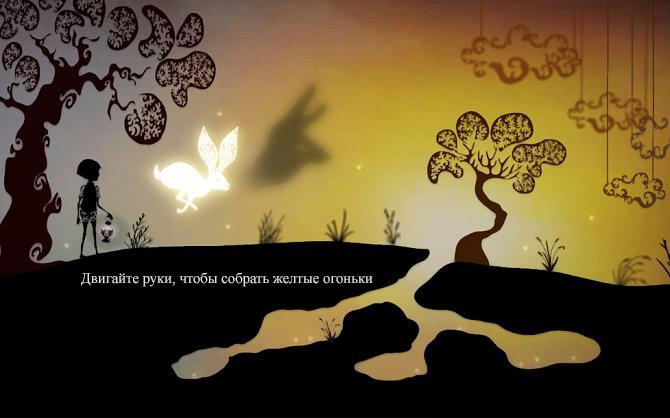

Players enter a mysterious two-dimensional world inhabited by shadow puppets. The user summons different animals on screen by shaping their hands into the corresponding figures, for example a rabbit. The user then moves the hand shadow to control the game's main character, a child who collects multicolored fragments of light to restore his grandmother's sight.

Ombre Fabula took part in the Intel RealSense App Challenge 2014 and won second place, behind the game Ambassador.


Solutions and problems

The developers had previously worked with more specialized human interface devices (HIDs). They were attracted by the broad capabilities of Intel RealSense technology, including gesture recognition, face tracking, and voice recognition, although in the end only gestures were used in the Ombre Fabula interface.

▍Creating the user interface

The application's interface was not adapted from an existing one; it was designed from scratch with hand and gesture control in mind from the very beginning. In addition, Ombre Fabula was conceived as an interactive installation that projects its image onto a wall, in keeping with the tradition of shadow theater, so that everyone in the room can watch.

Ombre Fabula was conceived as an interactive room installation that involves the audience as much as possible. Here the user makes a bird shape.

In the developers' view, complete immersion in the game depends on a connection between the real and virtual worlds, in which users cast virtual shadows on the wall. To make the experience more authentic and closer to the tradition of classical shadow theater, candlelight is used.

The Ombre Fabula user interface is deliberately minimalist: there are no buttons, cursors, on-screen menus, or controls of any kind, apart from the hand gestures the application recognizes. The application is meant for a controlled installation environment, where a presenter is always on hand to tell users what to do. The designers often build large interactive installations of this kind, and with Ombre Fabula they aimed to give users a short but deep immersion in the game world, comparable to the experience of an interactive gallery or a similar venue. Because each session is deliberately short, so that users can get acquainted with the game world quickly, direct instructions from the presenter work much better than tutorials built into the game. An interface made only of shadows also deepens the immersion in the game's atmosphere and in the tradition of shadow theater.

The user moves a hand to the right to lead the main character out of his grandmother's house.

▍Hand tracking implementation

In the early stages of development, the creators used the palm and finger tracking capabilities of the Intel RealSense SDK to recognize hand gestures that form simple shapes, such as a rabbit, a bird, or a dragon. These figures were chosen because they are the most traditional ones in the shadow theater style on which the application is based, and because they are the simplest and most intuitive for users to make. To make a rabbit, connect the thumb and index finger of one hand and straighten the middle and ring fingers so that they form the rabbit's "ears". To make a bird, hook the thumbs of both hands together and spread the fingers out to the sides like "wings". To make a dragon, bring both hands together to form a "closing, toothy jaw".

Simple hand gestures allow you to create a rabbit, bird and dragon.

However, it soon became clear that the Intel RealSense SDK algorithms could not handle these shadow shapes properly because parts of the hands were hidden from the camera: the fingers merged together and crossed one another in the same plane. The Intel RealSense camera recognized simple gestures (five spread fingers, a "V" sign made with the index and middle fingers, a thumbs-up, and so on), but that was not enough to recognize the more complex animal figures.

The developers therefore abandoned the built-in hand tracking of the Intel RealSense SDK and switched to a blob detection algorithm that tracks hand contours. The algorithm produces labeled images, for example an image of the left hand, and the Intel RealSense SDK then provides the outline of each such image.
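To make the idea of blob detection concrete, here is a minimal, hypothetical sketch of connected-component labeling on a binary mask: every 4-connected region of foreground pixels receives its own label, and regions below a minimum pixel count are discarded as noise, similar in spirit to the `pixelCount > 5000` check in the `HandContour.cs` snippet. This is not the Intel RealSense SDK's implementation; `BlobLabeler`, `Label`, and the mask format are assumptions made for illustration.

```csharp
using System.Collections.Generic;

// Hypothetical sketch: connected-component labeling of a binary mask.
// mask[y, x] == true marks a foreground (hand) pixel, e.g. after depth thresholding.
public static class BlobLabeler
{
    // Returns a label image (0 = background, 1..blobCount = blob id).
    // Regions smaller than minPixels are treated as noise and stay 0.
    public static int[,] Label(bool[,] mask, int minPixels, out int blobCount)
    {
        int height = mask.GetLength(0), width = mask.GetLength(1);
        var labels = new int[height, width];
        var visited = new bool[height, width];
        int[] dy = { -1, 1, 0, 0 };
        int[] dx = { 0, 0, -1, 1 };
        blobCount = 0;

        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                if (!mask[y, x] || visited[y, x]) continue;

                // Breadth-first flood fill gathers one 4-connected region
                // (iterative, so a large hand cannot overflow the call stack).
                var region = new List<(int Y, int X)>();
                var queue = new Queue<(int Y, int X)>();
                queue.Enqueue((y, x));
                visited[y, x] = true;
                while (queue.Count > 0)
                {
                    var (cy, cx) = queue.Dequeue();
                    region.Add((cy, cx));
                    for (int k = 0; k < 4; k++)
                    {
                        int ny = cy + dy[k], nx = cx + dx[k];
                        if (ny < 0 || ny >= height || nx < 0 || nx >= width) continue;
                        if (!mask[ny, nx] || visited[ny, nx]) continue;
                        visited[ny, nx] = true;
                        queue.Enqueue((ny, nx));
                    }
                }

                // Drop small regions; keep the rest with a fresh label.
                if (region.Count < minPixels) continue;
                blobCount++;
                foreach (var (ry, rx) in region) labels[ry, rx] = blobCount;
            }
        }
        return labels;
    }
}
```

Each labeled region (for example, one hand) can then be passed to a contour extractor, which is the role the SDK plays in Ombre Fabula.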

In this illustration, the gestures of a bird, a dragon, and a rabbit are shown with marked hand outlines, so that Ombre Fabula recognizes different gestures.

At first, extracting the necessary contour data from the Intel RealSense SDK was not easy. The Unity* integration works well for palm and finger tracking but is not suited to efficient contour tracking. After studying the documentation and experimenting with the Intel RealSense SDK, however, the developers learned how to extract the detailed contour data needed to recognize non-standard shapes.

The user forms a rabbit shape with the fingers, and a shadow rabbit puppet appears on screen.

The user moves his hand to lead the rabbit around the game world and collect points of yellow light.

▍Using a custom blob detection algorithm

After obtaining blob data from the Intel RealSense SDK, the developers had to simplify it so that recognition would work reliably for each of the three figures: the rabbit, the bird, and the dragon. This turned out to be harder than expected: it took extensive testing and many refinements to simplify the shapes so that the application would recognize them consistently and accurately.
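The article does not say which simplification method the team used. One common technique for reducing an outline to its essential corners is the Ramer–Douglas–Peucker algorithm: it keeps the point that deviates most from the straight line between two kept points, if that deviation exceeds a tolerance, and recurses on both halves. The sketch below illustrates that technique under this assumption; it is not the Ombre Fabula code, and `ContourSimplifier`, `Pt`, and the `epsilon` tolerance are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public readonly struct Pt
{
    public readonly float X, Y;
    public Pt(float x, float y) { X = x; Y = y; }
}

// Hypothetical helper: Ramer–Douglas–Peucker simplification of an open polyline.
public static class ContourSimplifier
{
    public static List<Pt> Simplify(IReadOnlyList<Pt> pts, float epsilon)
    {
        if (pts.Count < 3) return new List<Pt>(pts);
        var keep = new bool[pts.Count];
        keep[0] = keep[pts.Count - 1] = true; // endpoints are always kept
        MarkKeptPoints(pts, 0, pts.Count - 1, epsilon, keep);

        var result = new List<Pt>();
        for (int i = 0; i < pts.Count; i++)
            if (keep[i]) result.Add(pts[i]);
        return result;
    }

    // Keep the point farthest from segment first..last if it deviates by more
    // than epsilon, then recurse on both halves; otherwise drop everything in between.
    static void MarkKeptPoints(IReadOnlyList<Pt> pts, int first, int last, float epsilon, bool[] keep)
    {
        if (last - first < 2) return;
        float maxDist = -1f;
        int index = -1;
        for (int i = first + 1; i < last; i++)
        {
            float d = DistanceToSegment(pts[i], pts[first], pts[last]);
            if (d > maxDist) { maxDist = d; index = i; }
        }
        if (maxDist <= epsilon) return;
        keep[index] = true;
        MarkKeptPoints(pts, first, index, epsilon, keep);
        MarkKeptPoints(pts, index, last, epsilon, keep);
    }

    static float DistanceToSegment(Pt p, Pt a, Pt b)
    {
        float dx = b.X - a.X, dy = b.Y - a.Y;
        float lengthSq = dx * dx + dy * dy;
        // Projection parameter of p onto the segment, clamped to [0, 1].
        float t = lengthSq == 0f ? 0f : ((p.X - a.X) * dx + (p.Y - a.Y) * dy) / lengthSq;
        t = Math.Max(0f, Math.Min(1f, t));
        float ex = a.X + t * dx - p.X, ey = a.Y + t * dy - p.Y;
        return (float)Math.Sqrt(ex * ex + ey * ey);
    }
}
```

Because the algorithm always keeps the first and last points, a closed hand outline would typically be split into two halves at distant points and each half simplified separately.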

```csharp
// Code snippet from official Intel "HandContour.cs" script for blob contour extraction.
// m_blob, m_session, m_contour, info, results, blobData, new_bdata, BlobCenter,
// ContourSmoothing, pointOuter and pointInner are declared elsewhere in the script.
int numOfBlobs = m_blob.QueryNumberOfBlobs();
PXCMImage[] blobImages = new PXCMImage[numOfBlobs];

for (int j = 0; j < numOfBlobs; j++)
{
    // Create an image that receives the mask of the j-th blob
    blobImages[j] = m_session.CreateImage(info);
    results = m_blob.QueryBlobData(j, blobImages[j], out blobData[j]);

    // Skip small blobs (5000 pixels or fewer) as noise
    if (results == pxcmStatus.PXCM_STATUS_NO_ERROR && blobData[j].pixelCount > 5000)
    {
        results = blobImages[j].AcquireAccess(PXCMImage.Access.ACCESS_WRITE, out new_bdata);
        blobImages[j].ReleaseAccess(new_bdata);
        BlobCenter = blobData[j].centerPoint;

        // Configure contour smoothing, then extract the contours of the blob image
        float contourSmooth = ContourSmoothing;
        m_contour.SetSmoothing(contourSmooth);
        results = m_contour.ProcessImage(blobImages[j]);

        if (results == pxcmStatus.PXCM_STATUS_NO_ERROR && m_contour.QueryNumberOfContours() > 0)
        {
            // Contour 0 is stored as the outer outline
            m_contour.QueryContourData(0, out pointOuter[j]);
            // Contour 1 is stored as the inner outline; query it only if it exists
            if (m_contour.QueryNumberOfContours() > 1)
                m_contour.QueryContourData(1, out pointInner[j]);
        }
    }
}
```

The simplified data was then processed with the $P Point-Cloud Recognizer* algorithm, a freely available algorithm often used for handwriting recognition, such as recognizing pen or ink strokes. The developers made a few minor improvements to it, got it working correctly in Unity, and then applied it to identify hand shapes in Ombre Fabula. The algorithm determines, with a probability of about 90%, which animal figure the user's hands represent, and the recognized figure is then displayed on screen.
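Before comparing point clouds, $P normalizes every gesture: it resamples the points to a fixed count n, scales them uniformly, and moves their centroid to the origin, so that the hand's position, size, and sampling density do not affect the match. The following is a minimal sketch of those steps as described in the published $P algorithm, simplified to a single stroke (one hand outline). It is not the team's modified Unity version; `PDollarNormalize`, `P2`, and the default n = 32 are illustrative choices.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public readonly struct P2
{
    public readonly float X, Y;
    public P2(float x, float y) { X = x; Y = y; }
}

// Hypothetical helper: $P-style normalization for a single stroke.
public static class PDollarNormalize
{
    public static List<P2> Normalize(IReadOnlyList<P2> points, int n = 32) =>
        TranslateToOrigin(Scale(Resample(points, n)));

    // Resample to n points spaced evenly along the path.
    public static List<P2> Resample(IReadOnlyList<P2> input, int n)
    {
        if (input.Count == 0 || n < 2) throw new ArgumentException("Need points and n >= 2.");
        var pts = new List<P2>(input);
        float interval = PathLength(pts) / (n - 1);
        float acc = 0f;
        var result = new List<P2> { pts[0] };
        for (int i = 1; i < pts.Count; i++)
        {
            float d = Dist(pts[i - 1], pts[i]);
            if (d > 0f && acc + d >= interval)
            {
                float t = (interval - acc) / d;
                var q = new P2(pts[i - 1].X + t * (pts[i].X - pts[i - 1].X),
                               pts[i - 1].Y + t * (pts[i].Y - pts[i - 1].Y));
                result.Add(q);
                pts.Insert(i, q); // q becomes the start of the next segment
                acc = 0f;
            }
            else
            {
                acc += d;
            }
        }
        // Floating-point rounding can leave the count off by one at the end.
        while (result.Count < n) result.Add(pts[pts.Count - 1]);
        if (result.Count > n) result.RemoveRange(n, result.Count - n);
        return result;
    }

    // Uniform scaling: the larger bounding-box side becomes 1, keeping the aspect ratio.
    public static List<P2> Scale(IReadOnlyList<P2> pts)
    {
        float minX = pts.Min(p => p.X), maxX = pts.Max(p => p.X);
        float minY = pts.Min(p => p.Y), maxY = pts.Max(p => p.Y);
        float size = Math.Max(maxX - minX, maxY - minY);
        if (size == 0f) size = 1f; // all points identical
        return pts.Select(p => new P2((p.X - minX) / size, (p.Y - minY) / size)).ToList();
    }

    // Move the centroid to the origin so the hand's position in the frame does not matter.
    public static List<P2> TranslateToOrigin(IReadOnlyList<P2> pts)
    {
        float cx = pts.Average(p => p.X), cy = pts.Average(p => p.Y);
        return pts.Select(p => new P2(p.X - cx, p.Y - cy)).ToList();
    }

    static float PathLength(IReadOnlyList<P2> pts)
    {
        float length = 0f;
        for (int i = 1; i < pts.Count; i++) length += Dist(pts[i - 1], pts[i]);
        return length;
    }

    static float Dist(P2 a, P2 b)
    {
        float dx = a.X - b.X, dy = a.Y - b.Y;
        return (float)Math.Sqrt(dx * dx + dy * dy);
    }
}
```

In $P, the templates in the training set are normalized the same way, so the candidate and every template contain exactly n points before they are matched.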

```csharp
using System.Linq; // for Select() and ToArray()

// Every few frames, we test whether (and which) animal is currently shown
void DetectAnimalContour()
{
    // Is there actually a contour in the image right now?
    if (handContour.points.Count > 0)
    {
        // OK, find the most probable animal gesture class
        string gesture = DetectGestureClass();

        // Are we confident enough that this is one of the predefined animals?
        if (PointCloudRecognizer.Distance < 0.5)
        {
            // Yes, we are: activate the correct animal
            ActivateAnimalByGesture(gesture);
        }
    }
}

// Detect the gesture class of the current contour
string DetectGestureClass()
{
    // Collect the contour points from the PCSDK
    Point[] contourPoints = handContour.points.Select(x => new Point(x.x, x.y, x.z, 0)).ToArray();

    // Create a new gesture to be detected; we don't know what it is yet
    var gesture = new Gesture(contourPoints, "yet unknown");

    // The classifier returns the name of the gesture class with the highest probability
    return PointCloudRecognizer.Classify(gesture, trainingSet.ToArray());
}

// From the $P algorithm:
// match a gesture against a predefined training set
public static string Classify(Gesture candidate, Gesture[] trainingSet)
{
    float minDistance = float.MaxValue;
    string gestureClass = "";
    foreach (Gesture template in trainingSet)
    {
        float dist = GreedyCloudMatch(candidate.Points, template.Points);
        if (dist < minDistance)
        {
            minDistance = dist;
            gestureClass = template.Name;
            Distance = dist; // best distance, read by DetectAnimalContour()
        }
    }
    return gestureClass;
}
```

The code uses the $P algorithm to determine which animal the user's hands represent.

▍Getting early user feedback through testing and observation

Very early in development, the creators realized that people make the animal figures in quite different ways, not to mention that hands differ in shape and size. Tuning the contour detection therefore meant testing the algorithm with many different people.

Fortunately, this was feasible: because Minh was a university student, it was easy to find about 50 student volunteers to test the application. Since the game uses only a few gestures, this was enough people to tune the contour detection algorithm and increase the likelihood of recognizing the animal the user is actually trying to portray.

When the hands move left or right, the camera moves, and the main character of the game follows the animal through the world of shadows.

The virtual shadows of the user's hands on the screen not only deepen immersion in the game but also provide visual feedback. During testing, the developers noticed that when the animal on screen was not the one the user was trying to make, the user reacted to this feedback and rearranged their fingers until the right animal appeared. Users did this entirely intuitively, without any hints from the developers.

A common problem with gesture recognition is that users may make a very quick gesture (for example, a brief thumbs-up) that the application does not have time to respond to. In Ombre Fabula, gestures are sustained: the user has to hold the shape for as long as the animal should stay on screen. Testing showed that, because the gestures are held, the application responded to them correctly, so no optimization of gesture response time was needed.

Ombre Fabula is designed for short sessions of 6–10 minutes. Testers reported no hand or forearm fatigue; users naturally got used to keeping their arms raised for the length of a session.

Under the hood: tools and resources

The developers' previous experience with interactive installations helped them choose the right tools and software components to realize their design.

▍Intel RealSense SDK

The Intel RealSense SDK was used to track hand contours, and the contour tracking documentation included with the SDK proved very useful. The developers also used the Unity samples provided by Intel when they first experimented with the built-in hand tracking. Hand tracking alone was not enough, so they switched to working with the camera images and implemented their own blob tracking algorithm.

▍Unity software

Both Minh and the Prefrontal Cortex team see themselves primarily as designers. It is important to them not to spend time building platforms and writing code, but to turn ideas into working prototypes quickly. The Unity platform made rapid prototyping possible and let them move on quickly to later stages of development. In addition, the Intel RealSense Unity Toolkit included in the Intel RealSense SDK turned out to be easy to use and quick to get started with.

▍$P Point-Cloud Recognizer

The $P Point-Cloud Recognizer is a 2D gesture recognition algorithm that determines, with a certain probability, which shape is formed by pen strokes on paper or similar graphical input. It is often used for rapid prototyping of gesture-driven user interfaces. The developers modified the algorithm slightly and used it in Unity to identify the shape formed by the user's hands in Ombre Fabula. Based on how closely the hand shape matches each known figure, the algorithm decides which animal the user is trying to portray, and the application displays the corresponding animal.
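The core of $P is a greedy point-cloud match: each point of the candidate is paired with the nearest still-unmatched point of a template, earlier pairs are weighted more heavily, and the search is repeated from several starting points and in both directions. Below is a hedged sketch following the published algorithm (start-index step of floor(n^(1-ε)) with ε = 0.5), assuming both clouds have already been normalized to the same number of points. `PointCloudMatcher`, `CloudPoint`, and `GestureTemplate` are hypothetical names; unlike the Ombre Fabula snippet, which stores the best distance in a static `Distance` field, this version returns it through an `out` parameter.

```csharp
using System;
using System.Collections.Generic;

public readonly struct CloudPoint
{
    public readonly float X, Y;
    public CloudPoint(float x, float y) { X = x; Y = y; }
}

// Hypothetical template type: a named, already-normalized point cloud.
public sealed class GestureTemplate
{
    public string Name { get; }
    public CloudPoint[] Points { get; }
    public GestureTemplate(string name, CloudPoint[] points) { Name = name; Points = points; }
}

public static class PointCloudMatcher
{
    // Returns the name of the closest template; bestDistance receives its match distance.
    public static string Classify(CloudPoint[] candidate, IEnumerable<GestureTemplate> templates,
                                  out float bestDistance)
    {
        bestDistance = float.MaxValue;
        string bestName = "";
        foreach (var t in templates)
        {
            float d = GreedyCloudMatch(candidate, t.Points);
            if (d < bestDistance) { bestDistance = d; bestName = t.Name; }
        }
        return bestName;
    }

    // Greedy cloud match: try several start indices and both matching directions.
    public static float GreedyCloudMatch(CloudPoint[] a, CloudPoint[] b, double epsilon = 0.5)
    {
        if (a.Length != b.Length || a.Length == 0)
            throw new ArgumentException("Both clouds must be non-empty and resampled to the same size.");
        int n = a.Length;
        int step = Math.Max(1, (int)Math.Floor(Math.Pow(n, 1.0 - epsilon)));
        float min = float.MaxValue;
        for (int start = 0; start < n; start += step)
        {
            float d1 = CloudDistance(a, b, start);
            float d2 = CloudDistance(b, a, start);
            min = Math.Min(min, Math.Min(d1, d2));
        }
        return min;
    }

    // Pairs each point of a (beginning at `start`) with its nearest unmatched point in b.
    // Earlier pairs, made while more points were still available, get larger weights.
    static float CloudDistance(CloudPoint[] a, CloudPoint[] b, int start)
    {
        int n = a.Length;
        var matched = new bool[n];
        float sum = 0f;
        int i = start;
        do
        {
            int bestIndex = -1;
            float best = float.MaxValue;
            for (int j = 0; j < n; j++)
            {
                if (matched[j]) continue;
                float d = Dist(a[i], b[j]);
                if (d < best) { best = d; bestIndex = j; }
            }
            matched[bestIndex] = true;
            float weight = 1f - ((i - start + n) % n) / (float)n;
            sum += weight * best;
            i = (i + 1) % n;
        } while (i != start);
        return sum;
    }

    static float Dist(CloudPoint p, CloudPoint q)
    {
        float dx = p.X - q.X, dy = p.Y - q.Y;
        return (float)Math.Sqrt(dx * dx + dy * dy);
    }
}
```

A caller would accept the result only when `bestDistance` falls below a threshold, as the game's `DetectAnimalContour` does with `Distance < 0.5`; a suitable threshold depends on n and on how the clouds are normalized.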

In order for a dragon to appear, two hands must be folded so that the “jaws” are formed, as shown in this screenshot.

Ombre Fabula: what next?

Ombre Fabula has obvious potential for further development, such as adding other shadow animals for users to create and control, although the developers currently have no plans to continue working on the game. Their ultimate goal is to take Ombre Fabula on an international tour and show it to audiences as the interactive installation it was conceived to be from the very beginning.

Intel RealSense SDK: Outlook

Felix Herbst of the Prefrontal Cortex team is firmly convinced that gesture-controlled systems must be designed from scratch, and that adapting existing applications to gesture control will in most cases make them inconvenient to use. He stresses the importance of analyzing the respective strengths of every available interaction method (including gesture control) and choosing the most appropriate one when developing an application.

Well-chosen, useful, and effective interaction methods are essential for the long-term adoption of natural-interface technology. Herbst believes that if enough developers adopt Intel RealSense technology, interfaces of this kind will become widespread in the future.

Source: https://habr.com/ru/post/281424/
