Superscalar Stacking Processor: Optimization

Continuation of a series of articles that analyze the idea of a superscalar processor

OoO and stack machine frontend . The topic of this article is the optimization of memory accesses.

Previous articles:

1 - description of work on a linear piece

2 - function call, save registers

3 - function call, inside view

Until now, we have not paid attention to the weak point of all stack machines — redundant memory accesses.

')

In fact, when the naive Kodo generator of a stack machine wants to get the value of the variable 'a', it writes the instruction 'push a'. There is no possibility to refer to an already computed expression or its piece by the stack processor.

For register processors, the compiler solves this problem by introducing temporary variables with possible placement in registers. It is worth inventing a similar mechanism for our seemingly non-register architecture.

However, there is almost no need to invent something, “everything is already stolen before us” (C).

- we will enter the device of bookmarks (bookmarks)

- bookmark - the register to which the compiler can refer to the number, register and bookmark numbers are not required to match, although it would simplify life

- the compiler for each function determines the number of bookmarks that it is going to have

- at the start of the function, the required number of registers is allocated under the bookmarks;

- bookmarks get inside the register window and are acted upon by the FILL / SPILL mechanism

- if the compiler considers the calculated value to be valuable, then it sets the bookmark, for example, with the instruction 'bmk 1', which means: the value at the top of the stack is now considered bookmark number 1. Does the value copy to the bookmark register N1 or is the register responsible for this bookmark, no matter implementation details

- when in the future the compiler needs the value from this tab, it can use it, for example, like this: 'add_bmk 1', i.e. the value from the top of the stack will be summed with the value of tab 1 and replaced with this value

- from the point of view of the processor backend, the good old mop summation of the two registers into the third one will be generated

- there is a need for a second line of arithmetic and logical instructions (add-> add_bmk, mul-> mull_bmk, cmp-> cmp_bmk), but it's worth it

- or a more general variant - any of the arguments or the result of the three (two) -address instructions can be bookmarked

- You can imagine the dynamic allocation of bookmarks, without allocating the maximum number of bookmarks in order to optimize the number of registers used, but this (at first glance) looks too cruel in relation to the gland

As a result, the compiler has two conditionally new resources for optimization.

The first is the identification and placement of bookmarks, the number of which can be quite large, it is not limited to the number of available registers for the lack thereof. By and large, this is the equivalent of local variables located in the “fast” stack.

The second is an equivalent transformation of expressions to a form in which internal parallelism is maximally manifested. The compiler tries to reduce the height of expression trees by growing in breadth.

Well, let's not forget about the traditional optimization techniques that are not focused on registers and their distribution.

In order to figure out how this can be implemented, take a look at those

the possibilities that the LLVM assembler provides us. We are not going to really ignore the entire “cultural layer” accumulated in this area.

Llvm

int a(int m, int n) { if (m == 0) return n + 1; if (n == 0) return a(m - 1, 1); return a(m - 1, a(m, n - 1)); } received LLVM asm

; Function Attrs: nounwind readnone

define i32 @a (i32% m, i32% n) # 0 {

% 1 = icmp eq i32% m, 0

br i1% 1, label% tailrecurse._crit_edge, label% .lr.ph

tailrecurse._crit_edge:; preds =% tailrecurse.backedge,% 0

% n.tr.lcssa = phi i32 [% n,% 0], [% n.tr.be,% tailrecurse.backedge]

% 2 = add nsw i32% n.tr.lcssa, 1

ret i32% 2

.lr.ph:; preds =% 0,% tailrecurse.backedge

% n.tr2 = phi i32 [% n.tr.be,% tailrecurse.backedge], [% n,% 0]

% m.tr1 = phi i32 [% 4,% tailrecurse.backedge], [% m,% 0]

% 3 = icmp eq i32% n.tr2, 0

% 4 = add nsw i32% m.tr1, -1

br i1% 3, label% tailrecurse.backedge, label% 5

; <label>: 5; preds =% .lr.ph

% 6 = add nsw i32% n.tr2, -1

% 7 = tail call i32 @a (i32% m.tr1, i32% 6)

br label% tailrecurse.backedge

tailrecurse.backedge:; preds =% 5,% .lr.ph

% n.tr.be = phi i32 [% 7,% 5], [1,% .lr.ph]

% 8 = icmp eq i32% 4, 0

br i1% 8, label% tailrecurse._crit_edge, label% .lr.ph

} Here we see only the instructions of the control flow; there are no places where the specificity of the stack machine could manifest itself (except for such a calculation + -1).

What about the FFT “butterfly” (this is rather from the data flow area)?

FFT fragment

...

for (n = 1; n <= LogN; n ++)

{

rw = Rcoef [LogN - n];

iw = Icoef [LogN - n];

if (Ft_Flag == FT_INVERSE) iw = -iw;

in = ie >> 1;

ru = 1.0;

iu = 0.0;

for (j = 0; j <in; j ++)

{

for (i = j; i <N; i + = ie)

{

io = i + in;

rtp = Rdat [i] + Rdat [io];

itp = Idat [i] + Idat [io];

rtq = Rdat [i] - Rdat [io];

itq = Idat [i] - Idat [io];

Rdat [io] = rtq * ru - itq * iu;

Idat [io] = itq * ru + rtq * iu;

Rdat [i] = rtp;

Idat [i] = itp;

}

sr = ru;

ru = ru * rw - iu * iw;

iu = iu * rw + sr * iw;

}

ie >> = 1;

}

...

for (n = 1; n <= LogN; n ++)

{

rw = Rcoef [LogN - n];

iw = Icoef [LogN - n];

if (Ft_Flag == FT_INVERSE) iw = -iw;

in = ie >> 1;

ru = 1.0;

iu = 0.0;

for (j = 0; j <in; j ++)

{

for (i = j; i <N; i + = ie)

{

io = i + in;

rtp = Rdat [i] + Rdat [io];

itp = Idat [i] + Idat [io];

rtq = Rdat [i] - Rdat [io];

itq = Idat [i] - Idat [io];

Rdat [io] = rtq * ru - itq * iu;

Idat [io] = itq * ru + rtq * iu;

Rdat [i] = rtp;

Idat [i] = itp;

}

sr = ru;

ru = ru * rw - iu * iw;

iu = iu * rw + sr * iw;

}

ie >> = 1;

}

...

Body of the most nested loops assembler LLVM

(clang -c bc -O3 --target = xcore -emit-llvm -S -o b_o3.ll) looks like this:

obtained LLVM assembler

.lr.ph21 :; preds =% .preheader19,% .lr.ph21

% i.020 = phi i32 [% 76,% .lr.ph21], [% j.025,% .preheader19]

% 57 = add nsw i32% i.020,% 54

% 58 = getelementptr inbounds double *% Rdat, i32% i.020

% 59 = load double *% 58, align 4,! Tbaa! 1

% 60 = getelementptr inbounds double *% Rdat, i32% 57

% 61 = load double *% 60, align 4,! Tbaa! 1

% 62 = fadd double% 59,% 61

% 63 = getelementptr inbounds double *% Idat, i32% i.020

% 64 = load double *% 63, align 4,! Tbaa! 1

% 65 = getelementptr inbounds double *% Idat, i32% 57

% 66 = load double *% 65, align 4,! Tbaa! 1

% 67 = fadd double% 64,% 66

% 68 = fsub double% 59,% 61

% 69 = fsub double% 64,% 66

% 70 = fmul double% ru.023,% 68

% 71 = fmul double% iu.024,% 69

% 72 = fsub double% 70,% 71

store double% 72, double *% 60, align 4,! tbaa! 1

% 73 = fmul double% ru.023,% 69

% 74 = fmul double% iu.024,% 68

% 75 = fadd double% 74,% 73

store double% 75, double *% 65, align 4,! tbaa! 1

store double% 62, double *% 58, align 4,! tbaa! 1

store double% 67, double *% 63, align 4,! tbaa! 1

% 76 = add nsw i32% i.020,% ie.028

% 77 = icmp slt i32% 76,% N

br i1% 77, label% .lr.ph21, label% ._ crit_edge22

...

% i.020 = phi i32 [% 76,% .lr.ph21], [% j.025,% .preheader19]

% 57 = add nsw i32% i.020,% 54

% 58 = getelementptr inbounds double *% Rdat, i32% i.020

% 59 = load double *% 58, align 4,! Tbaa! 1

% 60 = getelementptr inbounds double *% Rdat, i32% 57

% 61 = load double *% 60, align 4,! Tbaa! 1

% 62 = fadd double% 59,% 61

% 63 = getelementptr inbounds double *% Idat, i32% i.020

% 64 = load double *% 63, align 4,! Tbaa! 1

% 65 = getelementptr inbounds double *% Idat, i32% 57

% 66 = load double *% 65, align 4,! Tbaa! 1

% 67 = fadd double% 64,% 66

% 68 = fsub double% 59,% 61

% 69 = fsub double% 64,% 66

% 70 = fmul double% ru.023,% 68

% 71 = fmul double% iu.024,% 69

% 72 = fsub double% 70,% 71

store double% 72, double *% 60, align 4,! tbaa! 1

% 73 = fmul double% ru.023,% 69

% 74 = fmul double% iu.024,% 68

% 75 = fadd double% 74,% 73

store double% 75, double *% 65, align 4,! tbaa! 1

store double% 62, double *% 58, align 4,! tbaa! 1

store double% 67, double *% 63, align 4,! tbaa! 1

% 76 = add nsw i32% i.020,% ie.028

% 77 = icmp slt i32% 76,% N

br i1% 77, label% .lr.ph21, label% ._ crit_edge22

...

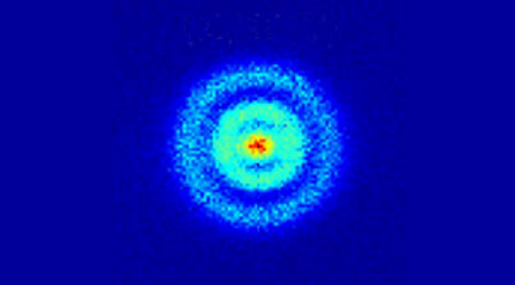

In the form of a dependency graph between instructions, it looks like this:

Offhand, each vertex of the tree from which more than one edge extends is a candidate for the title of a bookmark. At least in terms of floating point calculations.

In any case, the implementation of the described architecture in LLVM does not look like a hopeless event.

Dot file, suddenly come in handy:

fft.dot

digraph graphname {

L000 [label = "% 54"];

L001 [label = "% Rdat"];

L002 [label = "% Idat"];

L003 [label = "% ru.023"];

L004 [label = "% iu.024"];

L005 [label = "% i.020"];

L02 [label = "% 57 = add nsw i32% i.020,% 54"];

L03 [label = "% 58 = getelementptr double *% Rdat, i32% i.020"];

L04 [label = "% 59 = load double *% 58"];

L05 [label = "% 60 = getelementptr double *% Rdat, i32% 57"];

L06 [label = "% 61 = load double *% 60"];

L07 [label = "% 62 = fadd double% 59,% 61"];

L08 [label = "% 63 = getelementptr double *% Idat, i32% i.020"];

L09 [label = "% 64 = load double *% 63"];

L10 [label = "% 65 = getelementptr double *% Idat, i32% 57"];

L11 [label = "% 66 = load double *% 65"];

L12 [label = "% 67 = fadd double% 64,% 66"];

L13 [label = "% 68 = fsub double% 59,% 61"];

L14 [label = "% 69 = fsub double% 64,% 66"];

L15 [label = "% 70 = fmul double% ru.023,% 68"];

L16 [label = "% 71 = fmul double% iu.024,% 69"];

L17 [label = "% 72 = fsub double% 70,% 71"];

L18 [label = "store double% 72, double *% 60"];

L19 [label = "% 73 = fmul double% ru.023,% 69"];

L20 [label = "% 74 = fmul double% iu.024,% 68"];

L21 [label = "% 75 = fadd double% 74,% 73"];

L22 [label = "store double% 75, double *% 65"];

L23 [label = "store double% 62, double *% 58"];

L24 [label = "store double% 67, double *% 63"];

L005-> L02; L000-> L02; L005-> L03; L001-> L03;

L03-> L04; L001-> L05; L02-> L05; L05-> L06; L04-> L07;

L06-> L07; L002-> L08; L005-> L08; L08-> L09; L002-> L10;

L02-> L10; L10-> L11; L09-> L12; L11-> L12; L04-> L13;

L06-> L13; L09-> L14; L11-> L14; L003-> L15; L13-> L15;

L004-> L16; L14-> L16; L15-> L17; L16-> L17; L17-> L18;

L003-> L19; L14-> L19; L004-> L20; L13-> L20; L19-> L21;

L20-> L21; L10-> L22; L21-> L22; L07-> L23; L03-> L23;

L08-> L24; L12-> L24; L05-> L18;

}

L000 [label = "% 54"];

L001 [label = "% Rdat"];

L002 [label = "% Idat"];

L003 [label = "% ru.023"];

L004 [label = "% iu.024"];

L005 [label = "% i.020"];

L02 [label = "% 57 = add nsw i32% i.020,% 54"];

L03 [label = "% 58 = getelementptr double *% Rdat, i32% i.020"];

L04 [label = "% 59 = load double *% 58"];

L05 [label = "% 60 = getelementptr double *% Rdat, i32% 57"];

L06 [label = "% 61 = load double *% 60"];

L07 [label = "% 62 = fadd double% 59,% 61"];

L08 [label = "% 63 = getelementptr double *% Idat, i32% i.020"];

L09 [label = "% 64 = load double *% 63"];

L10 [label = "% 65 = getelementptr double *% Idat, i32% 57"];

L11 [label = "% 66 = load double *% 65"];

L12 [label = "% 67 = fadd double% 64,% 66"];

L13 [label = "% 68 = fsub double% 59,% 61"];

L14 [label = "% 69 = fsub double% 64,% 66"];

L15 [label = "% 70 = fmul double% ru.023,% 68"];

L16 [label = "% 71 = fmul double% iu.024,% 69"];

L17 [label = "% 72 = fsub double% 70,% 71"];

L18 [label = "store double% 72, double *% 60"];

L19 [label = "% 73 = fmul double% ru.023,% 69"];

L20 [label = "% 74 = fmul double% iu.024,% 68"];

L21 [label = "% 75 = fadd double% 74,% 73"];

L22 [label = "store double% 75, double *% 65"];

L23 [label = "store double% 62, double *% 58"];

L24 [label = "store double% 67, double *% 63"];

L005-> L02; L000-> L02; L005-> L03; L001-> L03;

L03-> L04; L001-> L05; L02-> L05; L05-> L06; L04-> L07;

L06-> L07; L002-> L08; L005-> L08; L08-> L09; L002-> L10;

L02-> L10; L10-> L11; L09-> L12; L11-> L12; L04-> L13;

L06-> L13; L09-> L14; L11-> L14; L003-> L15; L13-> L15;

L004-> L16; L14-> L16; L15-> L17; L16-> L17; L17-> L18;

L003-> L19; L14-> L19; L004-> L20; L13-> L20; L19-> L21;

L20-> L21; L10-> L22; L21-> L22; L07-> L23; L03-> L23;

L08-> L24; L12-> L24; L05-> L18;

}

Epilogue

Here we come to the preliminary finish.

Understood why you need a new architecture, offered a solution.

Initially, the question was whether this architecture meets the requirements.

And now we can answer, yes, at least in that small part of C, which we managed to verify, we received

- expected hardware simplification

- outwardly imperceptible scalability by the number of registers

- as well as the number of functional devices

- potential compiler simplification

At the first (amateurish) view, the principal problems that impede the implementation of such an architecture in hardware are not visible.

We intentionally did not consider such things as:

- floating point calculations, do they need a separate stack, ...

- cpu state saving when switching context

- interrupts

- ...

All these questions are important, but less important.

And at the moment the author considers his task accomplished.

Source: https://habr.com/ru/post/281352/

All Articles