Monolithic repositories in Git

Many have chosen Git for its flexibility: in particular, the branch and merge model can effectively decentralize development. In most cases, this flexibility is a plus, but some scenarios are not so elegantly supported. One of them is the use of Git for large monolithic repositories - mono - repositories . This article explores the monopositories problem in Git and suggests ways to mitigate them.

The Uluru Rock in Australia as an example of a monolith - KDPV, no more

Definitions vary, but we will consider the repository monolithic when the following conditions are met:

I see a couple of possible scenarios:

Under such conditions, preference can be given to a single repository, since it makes it much easier to make large changes and refactoring (for example, update all microservices to a specific version of the library).

Facebook has an example of such a monograph .

When conducting performance tests on Facebook, a test repository was used with the following parameters:

There are many conceptual problems with storing unrelated projects in a Git mono-repository.

')

First, Git takes into account the state of the entire tree in each commit made. This is normal for one or more related projects, but becomes clumsy to the repository with many unrelated projects. Simply put, a subtree essential to a developer is affected by commits in unrelated parts of the tree. This problem is clearly manifested with a large number of commits in the history of the tree. Since the top of a branch is changing all the time, sending a change requires frequent

A tag in Git is a named pointer to a particular commit, which, in turn, refers to a whole tree. However, the use of tags is reduced in the context of a mono-repository. Judge for yourself: if you are working on a web application that is constantly being deployed from a monospository (Continuous Deployment), what relation will the release tag have to the versioned client for iOS?

Along with these conceptual problems, there are a number of performance aspects that affect the mono-repository.

Keeping unrelated projects in a single large repository can be troublesome at the commit level. Over time, such a strategy can lead to a large number of commits and a significant growth rate (from the description of Facebook - "thousands of commits per week" ). This becomes especially costly, since Git uses a directed acyclic graph (directed acyclic grap - DAG) to store the project history. With a large number of commits, any team that goes around the graph becomes slower as the history grows.

Examples of such commands are

A large number of pointers — branches and tags — in your mono-repository affect performance in several ways.

An announcement of pointers contains each pointer of your mono-repository. Since the announcement of pointers is the first phase of any remote Git operation, commands such as

If the pointers are not stored in a compressed form, the enumeration of branches will work slowly. After executing the

Any operation that requires traversing the history of the commits of the repository and takes into account each pointer (for example,

The index (

Therefore, the number of files in the repository affects the performance of many operations:

These effects can vary depending on the settings of the caches and the characteristics of the disk, and become noticeable only with a really large number of files, counted in tens and hundreds of thousands of pieces.

Large files in one subtree / project affect the performance of the entire repository. For example, large media files added to an iOS client project in a mono-repository will be cloned even by developers working on completely different projects.

The number and size of files in combination with the frequency of their changes cause an even greater impact on performance:

As a consequence of the effects described, monolithic repositories are a test for any Git repository management system, and Bitbucket is no exception. More importantly, the problems generated by mono-repositories require solutions both on the server side and on the client side.

Of course, it would be great if Git specifically supported the use case with monolithic repositories. The good news for the overwhelming majority of users is that in fact, really large monolithic repositories are the exception rather than the rule, so even if this article turned out to be interesting (what you want to hope for), it hardly applies to those situations which you encountered.

There are a number of methods for reducing the above negative effects that can help in working with large repositories. For repositories with a large history or large binary files, my colleague Nicola Paolucci described several workarounds.

If the number of pointers in your repository is tens of thousands, you should try to delete those pointers that have become unnecessary. The commit graph maintains a history of the evolution of change, and since merge commits contain references to all their parents, the work that was done in the branches can be traced even if these branches themselves no longer exist. In addition, a merge commit often contains the name of a branch, which will allow to restore this information, if necessary.

In a branch-based development process , the number of long-lived branches that should be maintained should be small. Do not be afraid to remove short-term feature-branches after merging them into the main branch. Consider deleting all branches that are already merged into the main branch (for example, in

If there are a lot of files in your repository (their number reaches tens and hundreds of thousands of pieces), a fast local disk and enough memory that can be used for caching will help. This area will require more significant changes on the client side, similar to those implemented by Facebook for Mercurial .

Their approach is to use file system events to track changed files instead of iterating over all files in search of those. A similar solution, also using the daemon that monitors the file system, was also discussed for Git , but at the moment it has not led to a result.

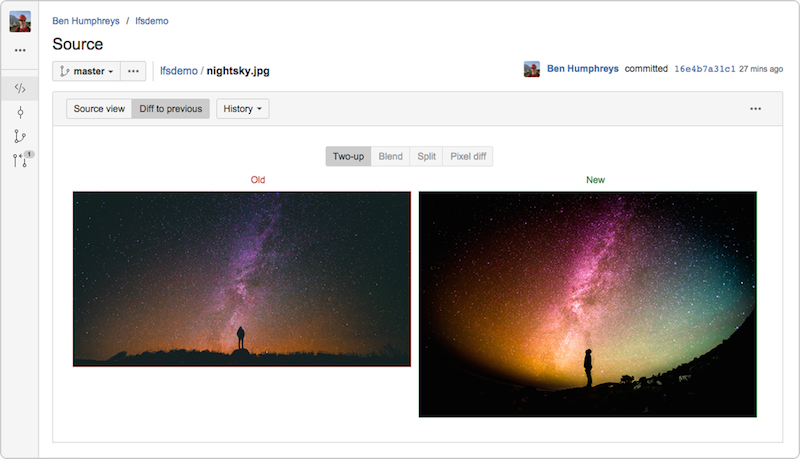

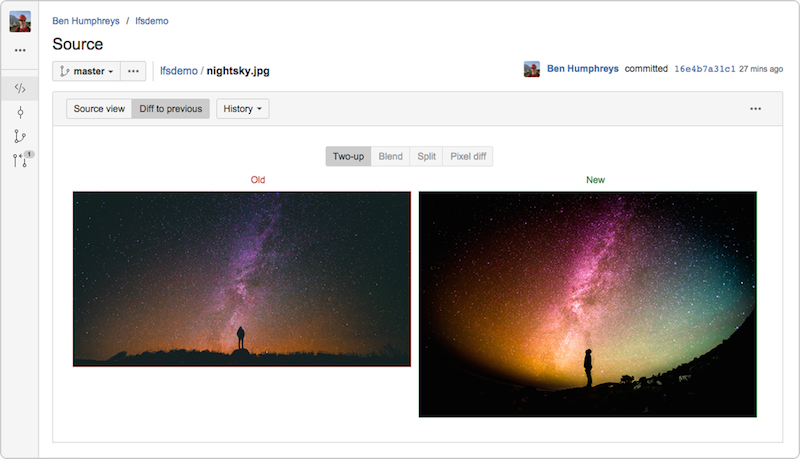

For projects that contain large files, such as videos or graphics, Git LFS is one way to reduce their impact on the size and overall performance of the repository. Instead of storing large objects in the repository itself, Git LFS stores the small pointer file to this object under the same name. The object itself is stored in a special storage of large files. Git LFS is embedded in

Bitbucket Server 4.3 fully supports Git LFS v1.0 + , and in addition, it allows you to view and compare large graphic files stored in LFS.

My colleague Steve Striting is actively involved in the development of the LFS project and recently wrote an article about him .

The most radical solution is to divide the mono-repository into smaller, more focused repositories. Try not to track every change in a single repository, but identify component boundaries, for example, highlighting modules or components that have a common release cycle. A good sign of the components is the use of tags in the repository and how much they make sense for other parts of the source tree.

Although the mono-repository concept is at odds with the decisions that have made Git extremely successful and popular, this does not mean that it is worth giving up the Git features just because your repository is monolithic: in most cases, there are working solutions for the problems that arise.

Stefan Zaazen - Atlassian Bitbucket architect. His passion for DVCS led him to migrate the Confluence team from Subversion to Git and, ultimately, to lead in the development of what is now known as Bitbucket Server. Stefan can be found on Twitter under the pseudonym @stefansaasen .

The Uluru Rock in Australia as an example of a monolith - KDPV, no more

What is a mono-repository?

Definitions vary, but we will consider the repository monolithic when the following conditions are met:

- The repository contains more than one logical project (for example, an iOS client and a web application)

- These projects may be unrelated, weakly connected or connected by third-party means (for example, through a dependency management system)

- The repository is large in many ways:

- By the number of commits

- By the number of branches and / or tags

- By the number of files

- By the size of the content (i.e. the size of the

.gitfolder)

When are mono-repositories convenient?

I see a couple of possible scenarios:

- The repository contains a set of services, frameworks and libraries that make up a single logical application. For example, many microservices and shared libraries, which all together provide the work of the application

foo.example.com. - Semantic versioning of artifacts is not required or does not bring special benefit due to the fact that the repository is used in a variety of environments (for example, staging and production). If it is not necessary to send artifacts to users, then historical versions, such as 1.10.34, may become unnecessary.

Under such conditions, preference can be given to a single repository, since it makes it much easier to make large changes and refactoring (for example, update all microservices to a specific version of the library).

Facebook has an example of such a monograph .

With thousands of commits a week and hundreds of thousands of files, the main source repository when Facebook is huge is many times more,

than even the Linux kernel, in which, as of 2013, there were 17 million lines of code in 44 thousand files.

When conducting performance tests on Facebook, a test repository was used with the following parameters:

- 4 million commits

- Linear story

- About 1.3 million files

- The size of the

.gitfolder is about 15 GB - Index file up to 191 MB

Conceptual issues

There are many conceptual problems with storing unrelated projects in a Git mono-repository.

')

First, Git takes into account the state of the entire tree in each commit made. This is normal for one or more related projects, but becomes clumsy to the repository with many unrelated projects. Simply put, a subtree essential to a developer is affected by commits in unrelated parts of the tree. This problem is clearly manifested with a large number of commits in the history of the tree. Since the top of a branch is changing all the time, sending a change requires frequent

merge or rebase .A tag in Git is a named pointer to a particular commit, which, in turn, refers to a whole tree. However, the use of tags is reduced in the context of a mono-repository. Judge for yourself: if you are working on a web application that is constantly being deployed from a monospository (Continuous Deployment), what relation will the release tag have to the versioned client for iOS?

Performance issues

Along with these conceptual problems, there are a number of performance aspects that affect the mono-repository.

Number of commits

Keeping unrelated projects in a single large repository can be troublesome at the commit level. Over time, such a strategy can lead to a large number of commits and a significant growth rate (from the description of Facebook - "thousands of commits per week" ). This becomes especially costly, since Git uses a directed acyclic graph (directed acyclic grap - DAG) to store the project history. With a large number of commits, any team that goes around the graph becomes slower as the history grows.

Examples of such commands are

git log (studying the history of the repository) and git blame (annotation of file changes). When executing the last command, Git will have to go around a bunch of commits that are not related to the file being examined in order to calculate information about its changes. In addition, the resolution of any reachability issues becomes more complex: for example, whether commit A reachable from commit B Add to this the many unrelated modules in the repository, and performance problems will be exacerbated.Number of pointers ( refs )

A large number of pointers — branches and tags — in your mono-repository affect performance in several ways.

An announcement of pointers contains each pointer of your mono-repository. Since the announcement of pointers is the first phase of any remote Git operation, commands such as

git clone , git fetch or git push are hit. With a large number of pointers, their performance will subside. You can see the announcement of pointers using the git ls-remote , passing it the URL of the repository as an argument. For example, this command will list all pointers in the Linux kernel repository: git ls-remote git://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git If the pointers are not stored in a compressed form, the enumeration of branches will work slowly. After executing the

git gc command, the pointers will be packed into a single file, and then enumeration of even 20,000 pointers will be fast (about 0.06 seconds).Any operation that requires traversing the history of the commits of the repository and takes into account each pointer (for example,

git branch --contains SHA1 ) in the mono-repository will work slowly. For example, with 21.708 pointers, the search for the pointer containing the old commit (which is reachable from almost all of the pointers) took 146.44 seconds on my computer (time may vary depending on the caching settings and the parameters of the storage medium on which the repository is stored).Number of files counted

The index (

.git/index ) counts each file in your repository. Git uses an index to determine whether a file has changed, executing stat(1) for each file and comparing the change information of a file with the information contained in the index.Therefore, the number of files in the repository affects the performance of many operations:

git statuscan be slow because This command checks the file, and the index file will be large.git commitcan also be slow because it checks every file.

These effects can vary depending on the settings of the caches and the characteristics of the disk, and become noticeable only with a really large number of files, counted in tens and hundreds of thousands of pieces.

Large files

Large files in one subtree / project affect the performance of the entire repository. For example, large media files added to an iOS client project in a mono-repository will be cloned even by developers working on completely different projects.

Combined effects

The number and size of files in combination with the frequency of their changes cause an even greater impact on performance:

- Switching between branches or tags, which is relevant in the context of a subtree (for example, a subtree I work with), still updates the entire tree. This process can be slow due to the large number of files affected, but there is a workaround . For example, the following command will update the

./templatesfolder so that it matches the specified branch, but it does not change theHEAD, which will lead to a side effect: the updated files will be marked in the index as changed:`git checkout ref-28642-31335 -- templates` - Cloning and fetching are slowed down and become resource-intensive for the server, since all information is packaged into a pack-file before being sent.

- Garbage collection becomes long and is called by default when

git pushexecuted (the assembly itself only happens if it is needed). - Any command that includes creating a pack-file, for example

git upload-pack,git gc, requires significant resources.

What about bitbucket?

As a consequence of the effects described, monolithic repositories are a test for any Git repository management system, and Bitbucket is no exception. More importantly, the problems generated by mono-repositories require solutions both on the server side and on the client side.

| Parameter | Impact on the server | Impact on user |

|---|---|---|

| Large repositories (many files, large files or both) | Memory, CPU, IO, git clone loads on the network, git gc slow and resource-intensive | Cloning takes considerable time, - both from developers and CI |

| A large number of commits | - | git log and git blame are slow |

| A large number of pointers | Viewing the list of branches, announcing pointers takes considerable time ( git fetch , git clone , git push are slow) | Availability suffers |

| A large number of files | Server side commits get long | git status and git commit are slow |

| Large files | See "Large Repositories" | git add for large files, git push and git gc are slow |

Mitigation strategies

Of course, it would be great if Git specifically supported the use case with monolithic repositories. The good news for the overwhelming majority of users is that in fact, really large monolithic repositories are the exception rather than the rule, so even if this article turned out to be interesting (what you want to hope for), it hardly applies to those situations which you encountered.

There are a number of methods for reducing the above negative effects that can help in working with large repositories. For repositories with a large history or large binary files, my colleague Nicola Paolucci described several workarounds.

Delete pointers

If the number of pointers in your repository is tens of thousands, you should try to delete those pointers that have become unnecessary. The commit graph maintains a history of the evolution of change, and since merge commits contain references to all their parents, the work that was done in the branches can be traced even if these branches themselves no longer exist. In addition, a merge commit often contains the name of a branch, which will allow to restore this information, if necessary.

In a branch-based development process , the number of long-lived branches that should be maintained should be small. Do not be afraid to remove short-term feature-branches after merging them into the main branch. Consider deleting all branches that are already merged into the main branch (for example, in

master or production ).Handling a large number of files

If there are a lot of files in your repository (their number reaches tens and hundreds of thousands of pieces), a fast local disk and enough memory that can be used for caching will help. This area will require more significant changes on the client side, similar to those implemented by Facebook for Mercurial .

Their approach is to use file system events to track changed files instead of iterating over all files in search of those. A similar solution, also using the daemon that monitors the file system, was also discussed for Git , but at the moment it has not led to a result.

Use Git LFS (Large File Storage - storage for large files)

For projects that contain large files, such as videos or graphics, Git LFS is one way to reduce their impact on the size and overall performance of the repository. Instead of storing large objects in the repository itself, Git LFS stores the small pointer file to this object under the same name. The object itself is stored in a special storage of large files. Git LFS is embedded in

push , pull , checkout and fetch operations to transparently ensure the transfer and substitution of these objects into a working copy. This means that you can work with large files the same way as usual, without inflating your repository.Bitbucket Server 4.3 fully supports Git LFS v1.0 + , and in addition, it allows you to view and compare large graphic files stored in LFS.

My colleague Steve Striting is actively involved in the development of the LFS project and recently wrote an article about him .

Define boundaries and share your repository.

The most radical solution is to divide the mono-repository into smaller, more focused repositories. Try not to track every change in a single repository, but identify component boundaries, for example, highlighting modules or components that have a common release cycle. A good sign of the components is the use of tags in the repository and how much they make sense for other parts of the source tree.

Although the mono-repository concept is at odds with the decisions that have made Git extremely successful and popular, this does not mean that it is worth giving up the Git features just because your repository is monolithic: in most cases, there are working solutions for the problems that arise.

Stefan Zaazen - Atlassian Bitbucket architect. His passion for DVCS led him to migrate the Confluence team from Subversion to Git and, ultimately, to lead in the development of what is now known as Bitbucket Server. Stefan can be found on Twitter under the pseudonym @stefansaasen .

Source: https://habr.com/ru/post/280358/

All Articles