Making an autonomous photo booth on a Raspberry Pi

In the "online" age, printed photographs have become something of a curiosity, much as digital photography once was. Recently, photo booths of various kinds have been gaining popularity as a fun way to entertain guests and give them a souvenir photo. I am a photographer who is interested in programming, and with that combination it would be strange not to try building a photo booth.

Unlike the commercial photo booths on sale, I wanted to make a truly compact and autonomous system, so that I could set it up on location in a couple of minutes alongside my main work and not carry tens of extra kilograms with me. And I did it.

What follows is a story about the hardware, the Raspberry Pi, and programming all of this under Linux in, of course, my favorite Python. Looking ahead: I wanted high-quality photos, so the shooting is done with a DSLR rather than a webcam, which makes the article all the more interesting.

Hardware. From simple to complex.

When I started this project, it was winter. In winter I have a lot of free time and little work, so the project had to be as cheap as possible. Besides the financial side, I love the KISS principle: the system should be as simple as possible. Simplicity also determines reliability, size and, importantly, power consumption.


I will begin the hardware story with how it could have been done much more simply, and how, in the course of working on the project, "simpler" gave way to something more complex. Perhaps the "simpler" implementation will come in handy for someone.

So, we have a DSLR camera and a task: on a person's command, take a picture and send it to print. This is actually very simple. Take a button and connect it to any microcontroller (you could even drop in a whole Arduino). When the person presses the button, count down a few seconds and then take the picture. Triggering a DSLR is also easy: it is enough to imitate either a wired remote (it connects to the camera through a 2.5 mm jack and, in effect, shorts two contacts) or a wireless IR remote (there are plenty of implementations for microcontrollers). For the countdown you could use any segment indicator or LCD display, but I planned an "analog" indication: a servo with a pointer. Sending photos from the camera to print should not be a problem either. There are wonderful SD memory cards with a built-in WiFi transmitter. By the way, I have already written about them in detail on Habr. On a laptop, one could then write a simple script that sends the received photos to print.

As I kept working the project through in my head, the system had to get more complicated. In the first version a single photo is taken, but I wanted to shoot a series, as in "real" photo booths, and print it on one sheet. This can be done with a second button (the person chooses whether to take one photo or several at once). That raises a problem: how does the laptop find out which button was pressed, and so whether to print this photo right away or wait for 3 more to place on one sheet? This is also easy to solve with the much-loved ESP8266, which can join the same network as the WiFi SD card and inform the laptop of the user's choice.

The project would have stayed that way had I not thought of one very important thing: people should see themselves while being photographed. They do not know how close to stand, how many people fit in the frame, and so on. There is, of course, the camera's own display, which can be switched to LiveView mode. I had already started thinking about a system of mirrors and lenses to make that picture visible to people (the task was made easier by the camera's swivel display), but then I remembered that the camera has an HDMI output and that the well-known "Chinese" electronics store was bound to have what I needed. In the end I ordered a 7-inch LCD display with a controller that accepts signals from various sources, including HDMI.

While the display was on its way, I decided that I really did not want to carry a laptop with me. The idea came to make a truly autonomous system. And here the "brain" of the whole system enters the scene: the Raspberry Pi. With its arrival I decided to hand all the work over to it: drop the physical buttons in favor of buttons on the touchscreen, show the user the LiveView picture and, most importantly, let them approve photos for printing, since it is not uncommon for someone to simply blink during the shot.

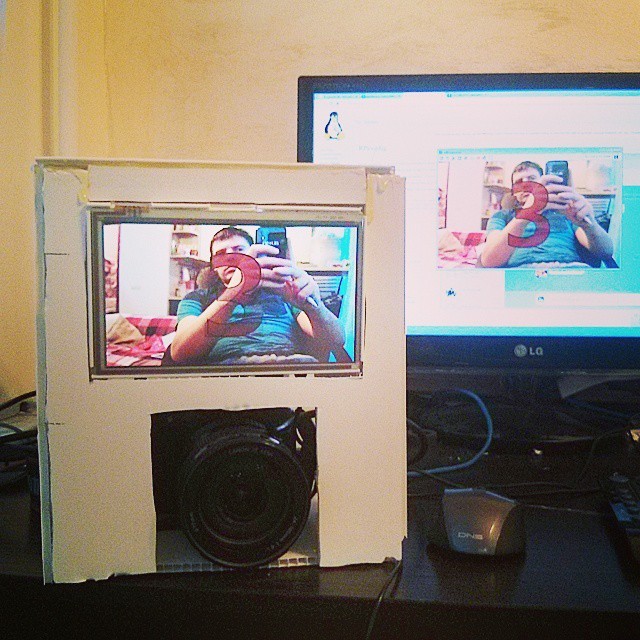

When all the parts arrived, I started putting everything together. For tools I had a soldering iron, a glue gun and siding (!). The first unit to be finished was the display, a kind of homemade monitor.

In the photo, the display controller is at the top and the USB touchscreen controller is at the bottom. The whole kit was bought on AliExpress.

From the display side, it looks like this:

The whole thing is powered from 5 V and draws 700-800 mA at peak.

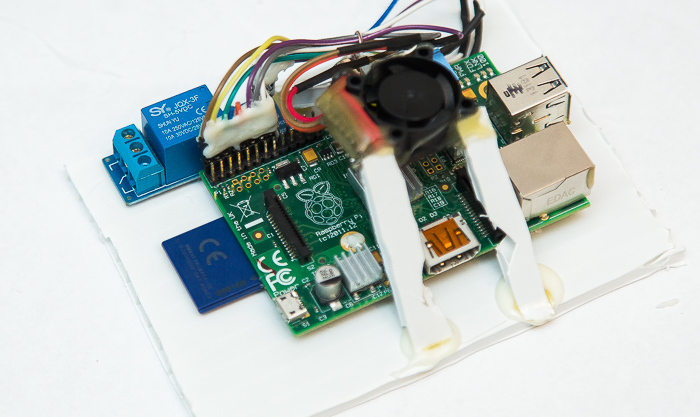

I made the "case" for the Raspberry Pi from the same siding (it was the first thing that caught my eye, and it is very easy to work with).

Let me explain how the "Pi" turned out. On top there is a 5 V fan. I was afraid of overheating, so the fan turns on when the temperature reaches 55 degrees, controlled by a simple script that is run from cron.

The script is very simple:

```python
#!/usr/bin/env python
import subprocess

import RPIO

FAN_PIN = 18  # pin that switches the fan

RPIO.setwarnings(False)
RPIO.setup(FAN_PIN, RPIO.OUT)

# vcgencmd prints a line such as: temp=47.2'C
sub = subprocess.Popen(['/opt/vc/bin/vcgencmd', 'measure_temp'],
                       stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=False)
out = sub.communicate()[0].decode()
temp = float(out.split('=')[1].split("'")[0])

if temp > 55:
    RPIO.output(FAN_PIN, 1)  # too hot: fan on
if temp < 45:
    RPIO.output(FAN_PIN, 0)  # cooled down: fan off
```

On the left you can see the relay module. It switches on the LED light while the booth is in use. Let me explain why this is needed: at banquets it can be very dark during the dance breaks. The light is needed both for autofocus when shooting and so that people see themselves, rather than darkness, on the screen while posing. When shooting indoors I have a stand with a flash and a softbox, fired through an ordinary radio trigger mounted on the camera's hot shoe. This way I get good light in the pictures. It would be possible to rely on the LED light alone, but the quality of the pictures would be worse.
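Coming back to the fan script for a moment: its logic is a simple hysteresis (on above 55 degrees, off below 45, unchanged in between). As a sketch of the same idea that can be tried without a Pi, the parsing and the decision can be split into pure functions. The function names are illustrative and not from the original script, and the sample readings assume the `temp=47.2'C` output format that the script's parsing implies.

```python
def parse_temp(vcgencmd_output):
    """Extract the temperature from text like "temp=47.2'C"."""
    return float(vcgencmd_output.split('=')[1].split("'")[0])


def next_fan_state(temp, fan_on, on_above=55.0, off_below=45.0):
    """Hysteresis: switch on when hot, off when cool, otherwise keep the state."""
    if temp > on_above:
        return True
    if temp < off_below:
        return False
    return fan_on


if __name__ == "__main__":
    # Hypothetical sequence of readings, one per cron run.
    state = False
    for reading in ["temp=44.0'C", "temp=56.5'C", "temp=50.0'C", "temp=44.9'C"]:
        state = next_fan_state(parse_temp(reading), state)
        print(reading, "->", "fan on" if state else "fan off")
```

The gap between the two thresholds keeps the fan from toggling rapidly around a single cutoff. In the original script the "current state" is simply whatever the GPIO pin was last set to.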

The prototype enclosures were assembled from the same siding, in order to assess the requirements and dimensions for the final version.

I wanted to style the body as an old camera. I did not dare to build it myself and ordered it from friends who make decor. The body is made of laminate, with leatherette for the bellows (the "accordion").

The cats liked it very much, but they still had to be driven out to make room for all the internals.

The whole thing is powered from two power banks. In theory, a single 10,000 mAh bank would be enough for 5-6 hours of operation, but when both the display and the Raspberry Pi are connected at once, the current draw is higher and the batteries deliver less capacity.
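As a rough sanity check of these figures, runtime is simply capacity divided by average current. The sketch below is plain arithmetic: the 2000 mA load is a hypothetical round number (the article only states 700-800 mA peak for the display), and the efficiency factor is an assumption standing in for conversion losses.

```python
def runtime_hours(capacity_mah, load_ma, efficiency=1.0):
    """Estimated runtime in hours for a given average load in mA."""
    if load_ma <= 0:
        raise ValueError("load must be positive")
    return capacity_mah * efficiency / load_ma


def implied_load_ma(capacity_mah, hours):
    """Average current that would drain the given capacity in `hours`."""
    return capacity_mah / float(hours)


if __name__ == "__main__":
    # 5-6 hours from 10,000 mAh corresponds to this average draw:
    print(implied_load_ma(10000, 5), implied_load_ma(10000, 6))
    # Hypothetical 2000 mA load with an assumed 80% usable capacity:
    print(runtime_hours(10000, 2000, efficiency=0.8))
```

Read this way, the quoted 5-6 hours corresponds to an average draw somewhere between about 1.7 A and 2 A from an ideal 10,000 mAh source.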

A few words about printing the photos, which is what all this was started for. You can print photos on anything, even an ordinary home inkjet printer. But again, I needed something compact. Besides compactness, autonomous operation demands more reliability: I really do not want to worry about ink levels, clogged nozzles and so on. In the end I chose a cheap, compact dye-sublimation printer. The pros: the printer is small, printing is trouble-free (you can forget about banding, ink smears, etc.), it can be transported and can print even upside down, and the prints are durable (I dipped a picture in water right after printing and nothing happened to it). The cons: a high cost per print and the need to replace the paper every 18 shots and the ribbon cartridge every 36 shots. Consumables are original only (a set contains, besides the special paper, a special ribbon cartridge); a print costs 25 rubles, although this is included in the final price of the service. The printer works over WiFi; for this, a WiFi dongle is plugged into the Raspberry Pi.

Software

For "production" photo booths there is software on sale, which naturally exists only for PC and Windows. For Linux on ARM I had to write everything from scratch (well, "had to": I just like it!). Python was chosen as the programming language (in fact, I don't know anything else).

First, you need to learn how to interact with a camera connected via USB. I was not surprised to find that ready-made tools for Linux already exist: the gphoto2 console utility.
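Since gphoto2 is a command-line tool, driving it from Python comes down to building argument lists for `subprocess`. Here is a minimal sketch, assuming gphoto2 is on the PATH. The helper names are mine and not from the author's program; the flags themselves (`--auto-detect`, `--set-config`, `--capture-image-and-download`, `--filename`, `--force-overwrite`) are standard gphoto2 options.

```python
import subprocess


def set_config_args(config):
    """Turn {'iso': '1'} into ['--set-config', 'iso=1'] pairs."""
    args = []
    for name, value in config.items():
        args += ['--set-config', '%s=%s' % (name, value)]
    return args


def capture_args(filename):
    """Arguments for shooting one frame and saving it to `filename`."""
    return ['--capture-image-and-download',
            '--filename', filename, '--force-overwrite']


def run_gphoto2(args):
    """Run gphoto2 and return (exit code, stdout, stderr) as text."""
    proc = subprocess.Popen(['gphoto2'] + list(args),
                            stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    out, err = proc.communicate()
    return proc.returncode, out.decode(), err.decode()
```

With a camera attached, `run_gphoto2(['--auto-detect'])` would list the detected cameras, and `run_gphoto2(capture_args('/tmp/booth.jpg'))` would take a shot.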

First of all, a settings screen was needed, one that starts right after the system boots.

The GUI is written in Tkinter, which is more than adequate for this task.

Here you can configure the camera for specific shooting conditions, launch the main program, adjust its behavior (take one large photo or offer a choice between "one photo" and "4 in 1", print or just save, plus the print mode, more on that later), and take a test shot. More about this below.

First, you need to write the settings to the camera, which is simple:

```python
import subprocess

# White balance labels from the settings screen; the index of the
# chosen label is what gets sent to the camera
bb_list = ['', '', '', '', '.', '. ', '', '']

sub = subprocess.Popen(['gphoto2',
    '--set-config', 'whitebalance=' + str(bb_list.index(settings['bb'])),
    '--set-config', 'iso=' + t_iso,
    '--set-config', 'aperture=' + settings['a'],
    '--set-config', 'imageformat=7',  # (jpeg 1920x1080)
    ], stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=False)
err = sub.stderr.read()
```

Now you need to take a test picture and show it on the screen.

```python
sub = subprocess.Popen(['gphoto2', '--capture-image-and-download',
                        '--filename', '/tmp/booth.jpg', '--force-overwrite'],
                       stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=False)
err = sub.stderr.read()
```

The camera takes a picture and writes it to the file /tmp/booth.jpg (to speed things up and spare the Raspberry Pi's memory card, such things are better done in /tmp, which is mounted as tmpfs, that is, it lives in RAM). After that, the picture is shown on the screen for evaluation, and the settings are written to a file with the usual open('/home/pi/settings.dat', 'wb').write(repr(settings)).
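Because the settings are stored as the `repr` of a dict, they can be read back safely with `ast.literal_eval`. The sketch below shows one possible round trip. The loading side, the `iso` key and the default values are assumptions for illustration (the article only shows the write, and only the `bb` and `a` keys appear in its code). The file is opened in text mode so that the same code also runs on Python 3.

```python
import ast
import os
import tempfile

DEFAULTS = {'iso': '100', 'a': '2.8'}  # hypothetical default values


def save_settings(path, settings):
    """Store the settings dict as its repr(), as the article does."""
    with open(path, 'w') as f:
        f.write(repr(settings))


def load_settings(path, defaults=DEFAULTS):
    """Return stored settings merged over defaults; defaults if unreadable."""
    try:
        with open(path) as f:
            loaded = ast.literal_eval(f.read())
    except (IOError, OSError, ValueError, SyntaxError):
        return dict(defaults)
    if not isinstance(loaded, dict):
        return dict(defaults)
    merged = dict(defaults)
    merged.update(loaded)
    return merged


if __name__ == "__main__":
    demo_path = os.path.join(tempfile.mkdtemp(), 'settings.dat')
    save_settings(demo_path, {'iso': '400', 'bb': 'auto'})
    print(load_settings(demo_path))
```

Unlike `eval`, `ast.literal_eval` only accepts Python literals, so a damaged or tampered file cannot execute code. It just falls back to the defaults.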

So, the camera is set up; it's time to start the user interface.

The interface itself is written with the well-known pygame library.

Naturally, so that users cannot tap where they shouldn't, the application runs full screen:

```python
import pygame

pygame.init()
pygame.mouse.set_visible(0)  # hide the cursor on the touchscreen
screen = pygame.display.set_mode((800, 480), pygame.HWSURFACE | pygame.FULLSCREEN, 32)
```

The architecture of the application is painfully simple: the interface is drawn from pre-made images, and the program itself works as a state machine with predefined states. I would like to describe the interaction with the camera in more detail.
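Before moving on to the camera, here is how small a state machine of this kind can be: a table of transitions. This sketch is an illustration only. The state and event names are hypothetical, chosen to match the flow described in this article (choosing a mode, counting down, shooting one or four frames, approving, printing), and are not taken from the author's code.

```python
# (current state, event) -> next state
TRANSITIONS = {
    ('idle', 'choose_single'): 'countdown',
    ('idle', 'choose_series'): 'countdown',
    ('countdown', 'timer_done'): 'capture',
    ('capture', 'frames_left'): 'countdown',
    ('capture', 'all_frames_done'): 'review',
    ('review', 'approve'): 'printing',
    ('review', 'reject'): 'idle',
    ('printing', 'print_done'): 'idle',
}


class Booth(object):
    def __init__(self):
        self.state = 'idle'
        self.frames_needed = 0

    def handle(self, event):
        """Apply one event; events not valid in the current state are ignored."""
        if self.state == 'idle' and event == 'choose_single':
            self.frames_needed = 1
        elif self.state == 'idle' and event == 'choose_series':
            self.frames_needed = 4
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state

    def frame_taken(self):
        """Call after each shot; decides whether another countdown follows."""
        if self.state != 'capture':
            return self.state
        self.frames_needed -= 1
        event = 'frames_left' if self.frames_needed > 0 else 'all_frames_done'
        return self.handle(event)
```

In a pygame main loop, touch events and timers would be translated into calls to `handle`, and the screen drawn on each pass would depend only on `booth.state`.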

The first thing we need is a LiveView image from the camera, so that users can see themselves while getting ready for the shot. I tried to do this with the gphoto2 utility, but for reasons I no longer remember, it did not work out. I found a Python library, piggyphoto, where this problem is solved simply:

```python
import piggyphoto
import pygame

cam = piggyphoto.camera()
cam.leave_locked()
while WORK:  # WORK is the flag that keeps the preview loop running
    s = cam.capture_preview()
    s.save('/tmp/preview.jpg')
    s.clean()  # see https://github.com/alexdu/piggyphoto/issues/2
    from_camera = pygame.image.load('/tmp/preview.jpg')  # load the preview frame
    from_camera = pygame.transform.scale(from_camera, (800, 530))  # scale it for the screen
    from_camera = pygame.transform.flip(from_camera, 1, 0)  # flip horizontally, like a mirror
```

The camera also needs to be configured for LiveView mode. For example, if the shooting itself is done at ISO 100, that may not be enough for LiveView. Before switching it on, you need to do something like this:

```python
sub = subprocess.Popen(['gphoto2',
    '--set-config', 'whitebalance=0',  # white balance: choice 0
    '--set-config', 'iso=0',           # ISO: choice 0
    '--set-config', 'aperture=2.8',    # aperture f/2.8
    '--set-config', 'imageformat=7',
    ], stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=False)
```

Once the timer runs out, you can take the frame itself, as described above. If the "4 frames in 1" mode is selected, all of this is repeated 4 times to get 4 different photos, which are then combined into one. This is handled by the Pillow library (I strongly recommend using it rather than the outdated PIL, if only for performance), which, besides merging several photos onto one sheet, overlays a logo and makes some branding possible.
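Here is a minimal sketch of how such a "4 in 1" sheet could be assembled with Pillow. It is not the author's code. The 1800x1200 sheet size, the margin, the logo position and all function names are assumptions for illustration. The layout math is kept in a separate pure function so it can be checked without any images.

```python
def grid_boxes(sheet_size, rows=2, cols=2, margin=20):
    """Return (left, top, width, height) for each cell of a rows x cols grid."""
    sheet_w, sheet_h = sheet_size
    cell_w = (sheet_w - margin * (cols + 1)) // cols
    cell_h = (sheet_h - margin * (rows + 1)) // rows
    boxes = []
    for r in range(rows):
        for c in range(cols):
            left = margin + c * (cell_w + margin)
            top = margin + r * (cell_h + margin)
            boxes.append((left, top, cell_w, cell_h))
    return boxes


def compose_sheet(photo_paths, out_path, sheet_size=(1800, 1200), logo_path=None):
    """Paste up to four photos onto one white sheet and optionally add a logo."""
    # Imported here so the layout math above works even without Pillow.
    from PIL import Image, ImageOps

    sheet = Image.new('RGB', sheet_size, 'white')
    for path, (left, top, w, h) in zip(photo_paths, grid_boxes(sheet_size)):
        photo = ImageOps.fit(Image.open(path), (w, h))  # scale and crop to fill the cell
        sheet.paste(photo, (left, top))
    if logo_path:
        logo = Image.open(logo_path).convert('RGBA')
        corner = (sheet_size[0] - logo.width - 20, sheet_size[1] - logo.height - 20)
        sheet.paste(logo, corner, logo)  # the logo's alpha channel is used as the mask
    sheet.save(out_path, quality=95)
    return out_path
```

`ImageOps.fit` crops each photo to the cell's aspect ratio instead of stretching it, which matters because the cells rarely match the camera's 3:2 frame exactly.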

For the demo, I made a short video:

The photo is ready and can be sent to print. In Linux this takes a single command: lpr FILENAME. Sending a photo over WiFi to a Canon Selphie printer has one nuance: the photo must be sent "directly", that is, the printer accepts a ready-made JPEG file: lpr -o raw FILENAME.
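From Python, the print step can be wrapped in the same way as the gphoto2 calls. This is a sketch under the assumption that `lpr` (CUPS) is installed and a default printer is configured. The function names and the optional printer name are mine, and the raw option is spelled `-o raw` as in the CUPS documentation.

```python
import subprocess


def lpr_command(filename, raw=True, printer=None):
    """Build the lpr command line for one file."""
    cmd = ['lpr']
    if printer:
        cmd += ['-P', printer]  # a specific print queue instead of the default
    if raw:
        cmd += ['-o', 'raw']    # send the JPEG as-is, without filtering
    cmd.append(filename)
    return cmd


def print_photo(filename, **kwargs):
    """Queue the file for printing; True if lpr exited successfully."""
    return subprocess.call(lpr_command(filename, **kwargs)) == 0
```

Passing the command as a list rather than a shell string avoids quoting problems if a file name ever contains spaces.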

In fact, the main functionality is ready. Several more features were added later. The first thing I wanted was to hand out the photos in electronic form. Since WiFi is already running in access point mode for the printer to connect to, why not open it up? I set up a web server with a simple gallery on the Raspberry Pi. To spare people from hunting for this web server, I configured a captive portal: on connecting to the access point, the gallery page opens by itself. This is done by installing a DNS server that answers every request with the IP address of the web server.

Of course, one article cannot cover all the nuances. I will try to answer your questions in the comments as far as I can. My next article also promises to be interesting: in it we will cross old, unusual photo printing technologies with new software technologies.
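The article does not say which DNS server was installed, so the following is only a toy stand-in to illustrate the "answer everything with one address" idea. It builds a DNS reply that echoes the question and attaches a single A record. The function names, the 60-second TTL and the choice to answer every query type with an A record are simplifications of mine.

```python
import socket
import struct


def build_catch_all_response(query, ip):
    """Answer any DNS question with one A record pointing at `ip`."""
    if len(query) < 12:
        raise ValueError("DNS message shorter than its 12-byte header")
    # The question name is a run of length-prefixed labels ending in a zero byte.
    pos = 12
    while query[pos] != 0:
        pos += 1 + query[pos]
    question_end = pos + 1 + 4  # zero byte, then QTYPE (2) and QCLASS (2)
    if question_end > len(query):
        raise ValueError("truncated question section")
    question = query[12:question_end]

    header = struct.pack(
        "!2sHHHHH",
        query[:2],   # same transaction ID as the query
        0x8180,      # response flag, recursion desired and available, no error
        1, 1, 0, 0,  # one question, one answer, no authority or additional records
    )
    answer = struct.pack(
        "!HHHIH4s",
        0xC00C,      # compression pointer to the name at offset 12
        1, 1,        # TYPE A, CLASS IN
        60,          # TTL in seconds
        4,           # RDLENGTH: an IPv4 address is 4 bytes
        socket.inet_aton(ip),
    )
    return header + question + answer


def serve(ip, host="0.0.0.0", port=53):
    """Blocking UDP loop; binding to port 53 normally requires root."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    while True:
        data, addr = sock.recvfrom(512)
        try:
            sock.sendto(build_catch_all_response(data, ip), addr)
        except (ValueError, IndexError):
            continue  # ignore malformed packets
```

Calling `serve` with the access point's own address would make every hostname resolve to the Raspberry Pi. An off-the-shelf DNS server configured the same way is the more robust choice for real use.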

Source: https://habr.com/ru/post/279785/
