QNX RTOS: Qnet - transparent network inter-task communication


I hope this is the long-awaited continuation of my series of notes on the QNX real-time operating system. This time I would like to talk about Qnet, the proprietary QNX network protocol. Let me clarify right away that, in addition to its native Qnet network, QNX also supports the TCP/IP protocol stack, which should be familiar to administrators of Unix-like systems. So in this note I first say a little about the io-pkt network manager, and then go into more detail about the Qnet protocol. Along the way, four lyrical digressions and one technical digression await us.

What is Qnet?

A QNX network is a group of interconnected target systems, each running the QNX Neutrino RTOS. In such a network, any program has access to any resource on any node (a node is an individual computer on the network). A resource can be a file, a device, or a process (including launching processes on another node). The target systems (the nodes) can have different architectures: x86, ARM, MIPS, and PowerPC (the current implementation of Qnet also works in a cross-endian environment). And as if that were not enough, any POSIX application ported to QNX (porting often requires only a rebuild) gets all of the capabilities above on a Qnet network without any modification. Wondering how this works?

The Qnet protocol extends the QNX Neutrino message-passing mechanism across a network of microkernels. That is the whole secret of Qnet, and the rest of the article looks at how it works in practice.


As a lyrical digression.

This note is about QNX 6.5.0, but from time to time I will recall other versions of QNX6 and even QNX4. And now I remember... QNX4 took a similar approach with its own network, called FLEET. The Qnet and FLEET protocols are not compatible with each other because of differences in the kernel implementations. Node addressing also differs. Nevertheless, both are built on the same principle: extending the microkernel's message-passing mechanism across the network.

The io-pkt network subsystem

The network subsystem is a typical QNX subsystem, i.e. it consists of an io-pkt* manager (built using QNX resource manager technology), device modules (drivers) such as devnp-e1000.so, protocol modules such as lsm-qnet.so, and utilities such as ifconfig and nicinfo. By the way, QNX has three network managers: io-pkt-v4, io-pkt-v4-hc, and io-pkt-v6-hc. The v4 suffix means that the manager supports only IPv4, while the v6 version supports both IPv4 and IPv6. The hc (high capacity) suffix denotes an extended version with support for encryption and Wi-Fi. That is why the literature sometimes uses the name io-pkt*, but we will call the manager io-pkt (without the asterisk), since in our case it does not matter which version of TCP/IP is involved: this note is about Qnet.

As a lyrical digression.

In previous versions of QNX6, the network manager was called io-net, and the TCP/IP protocol module was not built into io-net but was a stand-alone module, like lsm-qnet.so. Although wait, modules had a different prefix back then: the TCP/IP modules were called npm-tcpip-v4.so and npm-tcpip-v6.so, and Qnet was npm-qnet.so. Even that is not entirely accurate: in ancient times (the QNX 6.3.2 era) there were two Qnet modules, npm-qnet-compat.so (for compatibility with older versions of QNX6) and npm-qnet-l4_lite.so (whose protocol is the one supported by the QNX 6.5.0 lsm-qnet.so module). By the way, npm stands for Network Protocol Module, and lsm for Loadable Shared Module.

In the days of io-net, network driver modules carried the proud prefix devn. Drivers for io-pkt have a different prefix, devnp. Older drivers can also be used with io-pkt; in that case an intermediate shim module is used automatically.

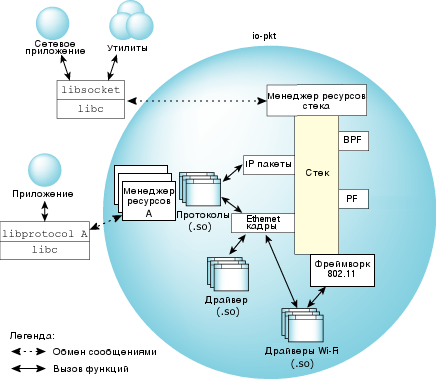

Fig. 1. Detailed view of io-pkt.

The structure of io-pkt is shown in Figure 1. At the bottom level are the drivers for wired and wireless networks. These are loadable modules (DLLs, shared libraries). It is worth noting that transmission media other than Ethernet are also supported. For example, the devn-fd.so driver lets you send and receive data through a file descriptor (fd is simply a file descriptor), so you can build a network over, say, a serial port. The speed will, of course, be correspondingly low, but sometimes this is a real lifesaver. The device drivers plug into the multithreaded middle layer, the stack. First, the stack provides bridging and forwarding. Second, the stack provides a unified interface for packet management and for processing the IP, TCP, and UDP protocols. At the top level is the resource manager, which passes messages between the stack and user applications, i.e. it provides the open(), read(), write(), and ioctl() functions. The libsocket.so library maps io-pkt onto the BSD socket API, which is the standard for most modern networking code.

The stack also provides interfaces for working with data at the Ethernet and IP levels, which lets protocols implemented as DLLs (for example, Qnet) attach at the level they need (IP or Ethernet). If interaction with external applications is required, a protocol (Qnet, for example) can include its own resource manager. In addition to drivers and protocols, the stack includes packet filtering tools: BPF (Berkeley Packet Filter) and PF (Packet Filter).

An example of starting io-pkt with support for the Qnet protocol:

io-pkt-v4-hc -d e1000 -p qnet

The -d option specifies the network controller driver devnp-e1000.so, and the -p option loads the Qnet module. In practice, when QNX is installed from the installation disc, the network is started automatically by the startup scripts; a manual start is usually needed when QNX is used in an embedded system, or to reduce boot time.

In addition to running over Ethernet frames, Qnet can also be encapsulated in IP packets. This can be convenient, for example, in distributed networks, although the overhead is higher in this case. An example of running Qnet over IP:

io-pkt-v4-hc -d e1000 -p qnet bind=ip,resolve=dns

If for some reason io-pkt was started without Qnet support, the lsm-qnet.so module can be loaded later, for example:

mount -Tio-pkt lsm-qnet.so

More complete information about io-pkt can be found in the QNX help system; now let us move on to the main topic of this note.

How does Qnet work?

From the user's point of view, Qnet works completely transparently... Stop. Isn't it time to clarify what the word transparently means in this context? Perhaps it is. Transparency, in our case, means that accessing a remote resource requires no additional actions compared to accessing a local one. I will try to explain with examples just below.
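To make the definition concrete in code: the minimal C sketch that follows lists a directory using nothing but POSIX opendir() and readdir(). The helper name list_dir is our own, for illustration only. Assuming Qnet is running, the same call should work unchanged for a local path such as "/tmp" and for a remote one such as "/net/zvezda/tmp"; nothing in the code knows the difference.

```c
#include <dirent.h>
#include <stdio.h>

/* Illustrative helper (our name, not a QNX API): prints each entry of the
 * directory at 'path' and returns how many entries were printed, or -1 if
 * the directory cannot be opened. Nothing in it is Qnet-specific, so the
 * same call serves "/tmp" and, with Qnet running, "/net/zvezda/tmp". */
static int list_dir(const char *path)
{
    DIR *dir = opendir(path);
    if (dir == NULL) {
        perror(path);                /* e.g. no such node or directory */
        return -1;
    }

    int count = 0;
    struct dirent *entry;
    while ((entry = readdir(dir)) != NULL) {
        printf("%s\n", entry->d_name);
        count++;
    }

    closedir(dir);
    return count;
}
```

For instance, list_dir("/net/zvezda") should print the names in the root directory of the zvezda node, much like ls -a /net/zvezda.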

Once launched, Qnet creates the /net directory, in which the file system of each node appears as a separate subdirectory named after that node. If the node's host name is not set (i.e., it has the default name localhost), its directory under /net gets a name built from its MAC address. For example, the following command displays the contents of the root directory of the zvezda node:

ls -l /net/zvezda

The following command lets you edit one of the startup scripts on the zvezda node:

vi /net/zvezda/etc/rc.d/rc.local

That covers file access; nothing unusual here. Access to devices works the same way. For example, you can work with a serial port on another node:

qtalk -m /net/zvezda/dev/ser1

And here is a more interesting example:

phcalc -s /net/zvezda/dev/photon

Photon applications (Photon is the standard QNX graphical windowing subsystem) usually let you specify the server to connect to (the -s option). In this case, the application runs on the local node, while its graphical window is displayed on the zvezda node. The local node does not even need to run the graphics subsystem. This can be convenient in some cases, for example, when a node has no graphics controller but you need a graphical display of data collected from sensors or a system configuration screen. This approach can also relieve the CPU of the graphics server.

As a lyrical digression.

The approach described here can be applied not only to the graphics subsystem but to any other, including, oddly enough, the network subsystem. It was even used sometimes in QNX4, which required a separate license for each module, including a separate license for the TCP/IP stack. You could run the Tcpip manager on only one node of the FLEET network and direct all requests from the socket library to that node. Don't be alarmed: in QNX6 this is usually not done. And with io-pkt there is no point in it, because the TCP/IP stack is an inseparable part of io-pkt.

Files and devices are sorted out, I hope. But what about processes? That is simple too. A number of system utilities provide the -n option, which tells them to work on a remote node or to collect information from one. For example, you can get a list of the processes running on zvezda, with their command-line arguments, like this:

pidin -n zvezda arg

If a utility or program does not support the -n option, the standard on utility comes in handy; it is designed specifically for running applications on another node, for example:

on -f zvezda ls

In this case, the ls utility runs on the zvezda node, and its output is displayed in the current terminal.

As a lyrical digression.

In fact, the on utility offers rich possibilities for controlling the parameters of the processes it starts. You can not only start processes on a remote node but also change their priority and scheduling policy, start them as another user, and even bind a process to a specific processor core. More details can be found in the utility's help.

Stopping a process is just as easy, since the slay utility supports the -n option. For example:

slay -n zvezda io-usb

Similarly, slay can send any other signal, not only SIGTERM.

How does Qnet work from a programmer's point of view?

In the previous section, we looked at how Qnet works from the point of view of an RTOS user. In most cases, supporting Qnet does not even require any software development. Is that really true? That is, creating a network application does not require any special frameworks or libraries? Yes, it does not. If you work with POSIX, you will not even notice the difference. So as not to be unsubstantiated, here is the code of a small program that, as an example, saves a string to a file (possibly on a remote node):

```c
#include <unistd.h>
#include <stdlib.h>
#include <stdio.h>
#include <fcntl.h>

int main(int argc, char *argv[])
{
    int fd;
    char str[] = "This is a string.\n";

    if ( argc < 2 ) {
        fprintf(stderr, "Please specify file name.\n");
        exit(1);
    }

    /* The name may be local (/tmp/1.txt) or remote (/net/zvezda/tmp/1.txt):
     * the code is the same either way. */
    if ( (fd = open(argv[1], O_RDWR|O_CREAT, 0644)) < 0 ) {
        perror("open()");
        exit(1);
    }

    /* Write the string without its terminating NUL byte. */
    if ( write(fd, str, sizeof(str)-1) < 0 ) {
        perror("write()");
        close(fd);
        exit(1);
    }

    close(fd);
    return 0;
}
```

This code is compatible with Qnet. After all, it really makes no difference whether the string is saved to /tmp/1.txt or to /net/zvezda/tmp/1.txt. The same goes for devices: for the programmer the two code fragments are identical (the only difference is the file name):

fd = open("/dev/ser1", O_RDWR);

and

fd = open("/net/zvezda/dev/ser1", O_RDWR);

How is such simplicity of working with the network achieved? POSIX calls such as open() result in a series of low-level microkernel calls that control message passing: ConnectAttach(), MsgSend(), and so on. The program code required for network interaction is identical to that used locally. The only difference is the path name: for networking, the path name contains the node name. The node-name prefix is converted into a node descriptor, which is then used in the low-level ConnectAttach() call. Each node on the network has its own descriptor, and the descriptor is looked up through the file-system pathname space. On a single machine, the lookup yields a node descriptor, a process ID, and a channel ID. On a Qnet network, the result is the same, except that the node descriptor is non-zero (i.e. not equal to ND_LOCAL_NODE), which indicates a remote connection. However, all these calls are hidden, and you do not need to worry about them if you simply use open().

What is hidden behind the simple open() function?

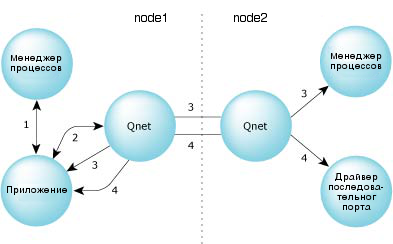

Consider a situation where an application on

node1 needs to use the serial port /dev/ser1 on node2 . In fig. 2 shows what operations are performed when the application calls the open() function with the name /net/node2/dev/ser1 .

Fig. 2. Messaging over the Qnet network.

- The application sends a message to the local process manager with a request to resolve the path name /net/node2/dev/ser1. Since lsm-qnet.so is responsible for the /net namespace, the process manager returns a redirect message telling the application to contact the local io-pkt network manager.
- The application sends a message to the local io-pkt network manager with the same name-resolution request. The local network manager returns a redirect message giving the node descriptor, process ID, and channel ID of the process manager on node2.
- The application creates a connection to the node2 process manager and sends the name-resolution request again. The process manager on node2, in turn, returns another redirect message giving the node descriptor, process ID, and channel ID of the serial port driver on its own node (node2).
- The application creates a connection to the serial port driver on node2 and obtains a connection ID that can be used for further message exchange (for example, when calling read(), write(), and other POSIX functions).
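The sequence of steps above can be illustrated with a toy model in C. It is purely illustrative and is not the QNX API: strip_first_component, resolve_chain, print_chain and the server table are hypothetical names of ours. The model reproduces only how the requested name shrinks as it passes from server to server, each server consuming the leading component it resolved; the real exchange of redirect messages through ConnectAttach() and MsgSend() is not imitated.

```c
#include <stdio.h>
#include <string.h>

/* Toy model, NOT the QNX API: it only mimics how the requested name
 * shrinks as open("/net/node2/dev/ser1") is passed from server to server.
 * Real resolution uses redirect messages, ConnectAttach() and MsgSend(). */

/* Returns the part of 'path' after its first component, skipping a
 * leading '/' and the '/' that ends the component ("" if none is left). */
static const char *strip_first_component(const char *path)
{
    if (*path == '/')
        path++;
    const char *slash = strchr(path, '/');
    return slash ? slash + 1 : path + strlen(path);
}

/* Hypothetical labels for the four servers of this particular example. */
static const char *const servers[] = {
    "node1 process manager",
    "node1 io-pkt (lsm-qnet.so)",
    "node2 process manager",
    "node2 serial port driver",
};

/* Stores in 'requests' the name each successive server receives and
 * returns the number of steps (at most 'max'). */
static int resolve_chain(const char *path, const char *requests[], int max)
{
    int steps = 0;
    const char *rest = path;
    while (steps < max && *rest != '\0') {
        requests[steps++] = rest;
        rest = strip_first_component(rest);
    }
    return steps;
}

/* Prints which server sees which name for the given path. */
static void print_chain(const char *path)
{
    const char *requests[4];
    int n = resolve_chain(path, requests, 4);
    for (int i = 0; i < n; i++)
        printf("step %d: %s receives \"%s\"\n", i + 1, servers[i], requests[i]);
}
```

Calling print_chain("/net/node2/dev/ser1") prints four steps whose requests are /net/node2/dev/ser1, node2/dev/ser1, dev/ser1 and ser1.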

After this whole quest, messages are exchanged over the obtained connection ID in exactly the same way as in the local case. Importantly, all subsequent messaging takes place directly between the application on node1 and the serial port driver on node2 (which, by the way, is also an ordinary QNX application).

Once again: for a programmer using the POSIX open() function, all these low-level calls are hidden. The open() call returns either a file descriptor or an error (-1, with errno set).

As a technical digression.

When a name is resolved, the components resolved at each step are automatically removed from the request. In the example above, in step 2 the request to the local network manager contains only node2/dev/ser1. In step 3, the name contains only dev/ser1, and in step 4 only ser1.

Quality of service policies

The Qnet protocol supports transmission over multiple channels. Quality of service (QoS) policies determine how Qnet operates when several channels are available. In Qnet networks, QoS essentially selects the transmission medium. If the system has only one network interface, QoS has no effect. The following quality of service policies are supported:

- loadbalance - Qnet uses all available communication channels and can distribute data transfer between them. This is the default policy. In this mode, the bandwidth between nodes equals the combined bandwidth of all available channels. If one of the channels fails, Qnet automatically switches to the remaining channels. Qnet keeps sending service packets over the failed channel, and when the connection is restored, it starts using that channel again.

- preferred - only the one specified communication channel is used, and the others are ignored until it fails. After the primary channel fails, Qnet switches to loadbalance mode, using all available channels. When the primary channel is restored, Qnet returns to preferred mode.

- exclusive - only one specified communication channel is used, and the remaining channels will NOT be used even if the main one fails.
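The policy is requested through the pathname itself, using the tilde modifier of the form node~policy:interface described next. A minimal C sketch of composing such names follows; qnet_qos_path is a hypothetical helper, not a QNX function. It assumes that preferred and exclusive take an interface name while loadbalance takes none; the text itself shows only the exclusive form.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper (not part of QNX): writes the QoS-qualified name
 * "/net/<node>~<policy>[:<iface>]<path>" into 'buf'.
 * Assumption: preferred and exclusive name one interface; for loadbalance
 * no interface is written (an 'iface' passed with it is ignored).
 * Returns snprintf's result (a value >= size means 'buf' was too small),
 * or -1 for an unknown policy or a missing interface. */
static int qnet_qos_path(char *buf, size_t size, const char *node,
                         const char *policy, const char *iface,
                         const char *path)
{
    int needs_iface;

    if (strcmp(policy, "loadbalance") == 0)
        needs_iface = 0;
    else if (strcmp(policy, "preferred") == 0 ||
             strcmp(policy, "exclusive") == 0)
        needs_iface = 1;
    else
        return -1;                   /* not one of the three policies */

    if (!needs_iface)
        return snprintf(buf, size, "/net/%s~%s%s", node, policy, path);
    if (iface == NULL)
        return -1;                   /* preferred/exclusive need an interface */
    return snprintf(buf, size, "/net/%s~%s:%s%s", node, policy, iface, path);
}
```

The resulting string can be passed to open() like any other /net path.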

To select a quality of service policy, a pathname modifier starting with a tilde (~) is used. For example, to access the serial port on the zvezda node using the exclusive QoS policy on the en0 interface, the name would be:

/net/zvezda~exclusive:en0/dev/ser1

When to use Qnet?

The choice of protocol depends on a number of factors. The Qnet protocol is intended for a network of trusted computers running the QNX Neutrino RTOS and having the same byte order. Qnet does not authenticate requests; access is protected only by the ordinary user and group permissions on files. Qnet is also a connection-oriented protocol, and network errors are reported to the client process.
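Because errors come back to the client process, ordinary POSIX errno handling is all the client needs. A small C sketch, with open_reporting as a hypothetical helper name: this note does not say which errno values Qnet uses for network failures, so the code simply reports whatever open() sets, whether that is EACCES from file permissions or a network-related error.

```c
#include <errno.h>
#include <fcntl.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: opens 'path' (local, or /net/<node>/... under
 * Qnet) and returns the descriptor. On failure it prints the reason and
 * returns -1 with errno preserved, so the caller can still inspect it.
 * Which errno values Qnet itself produces is not covered here. */
static int open_reporting(const char *path, int flags)
{
    int fd = open(path, flags);
    if (fd < 0) {
        int saved = errno;           /* fprintf() may overwrite errno */
        fprintf(stderr, "open(%s): %s\n", path, strerror(saved));
        errno = saved;
    }
    return fd;
}
```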

If the network includes computers running other operating systems, or the network cannot be trusted, consider other protocols that QNX also supports, such as TCP/IP and the services built on it: FTP, NFS, Telnet, SSH, and others.

Instead of an epilogue

This note, of course, does not cover every feature of Qnet, but it gives a general description of the protocol. If you are interested in Qnet or in QNX in general, more information on this and other topics can be found in the QNX Neutrino System Architecture guide. The documentation is available in electronic form at www.qnx.com (in English), and a partial Russian translation is available at www.kpda.ru. There is also a printed Russian translation:

QNX Neutrino Real-Time Operating System 6.5.0 System Architecture. ISBN 978-5-9775-3350-8

QNX Neutrino 6.5.0 Real-Time Operating System User Guide. ISBN 978-5-9775-3351-5

Source: https://habr.com/ru/post/279679/
