Free Cluster (Proxmox + Nexenta)

Many of us, when setting up a small IT infrastructure, have probably faced the problem of choosing a hypervisor. What functionality should the software have, and how much is it worth paying for? Will one part of the solution or another be compatible with what is already in place?

And as if, all this drive on the stand to make sure the correctness of the choice?

Taking into account the rate of one, all known currency, I want something simple, no frills and, if possible, free. Especially when it comes to a small or medium company (startup), limited in the budget.

From Open-Source solutions, Proxmox is the easiest to install and configure. In order not to insult oVirt lovers, etc. (this is not a promotional article), I’ll say that the initial requirement for ease of installation and administration, in my opinion, is still more pronounced for Proxmox. Also, I repeat, we do not have a data center, but only a cluster of 2-3 nodes for a small company.

As a hypervisor, it uses KVM and LXC , respectively, keeps KVM OS (Linux, * BSD, Windows and others) with minimal loss of performance and Linux without loss.

Installing the hypervisor is the simplest, download it from here :

It is installed literally in a few clicks and entering the admin password.

After that, we are given a console window with the address for the web interface of the form

Next, install the second and third nodes, with the same result.

')

1. Set up hosts on pve1.local:

2. Set up hosts on pve2.local:

3. By analogy, configure hosts on pve3.local

4. On the server pve1.local we execute:

5. On the server pve2.local we execute:

6. In the same way, we configure and add the third pve3.local host to the cluster.

We align the repository:

Commenting on the unnecessary repository:

And updated (at each node, respectively):

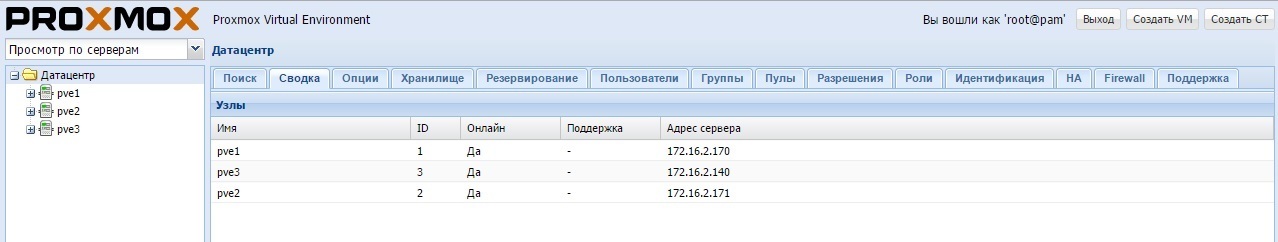

That's it, the cluster is ready for battle!

Here, we already have a backup function (called redundancy) out of the box, which almost immediately impresses!

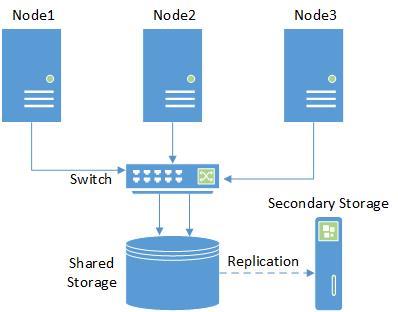

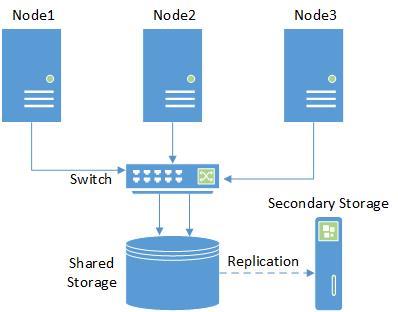

Further, the question arises of the choice of shared storage.

Choose iSCSI (as the most budget option) Storage:

In principle, when setting up, you can limit yourself to one interface, but in a cluster you cannot have one point of failure, therefore it is better to use the multipath from Proxmox, or the combination of interfaces from the storage itself.

Here, you can take some kind of commercial storage, disk shelf, etc.

Actually, the first test was conducted just along with the solution from Infortrend .

But then again, what to do if the budget is simply utterly limited (or is it simply not there) ?!

The easiest way is to fill the disks with the iron that is, and make it a storage facility that allows you to achieve your goals.

As a result, we need opportunities (taking into account the fact that the company may expand dramatically):

After some agonies of choice, OpenFiler and NexentaStor remained .

Of course, I would like to use Starwind or FreeNAS ( NAS4Free ), but if one needs Windows and shamanism with ISCSI for work, then the other has a good file clustering function.

OpenFiler unfortunately has a scant GUI and its latest version is from 2011. So it remains NexentaStor.

She, of course, has a paid active-active scheme in the Nexenta cluster, if you need it later. Also, if there are 2 nodes (controller) in the storage, then the second one’s support is also for money! Actually, all plugins are available only in the Enterprise version.

However, what is available in the Community version covers most of the initial needs. This includes 18TB of storage space, ZFS with snapshots, and the ability to replicate out of the box!

First of all, it is necessary to study the Compatibility List .

Download distribution from here (registration is required to get the key for installation).

The installation procedure is as simple as possible. In its process, we configure an IP address with a port to configure in the GUI.

Next, successively clicking on the requests of the wizard, we set the password and array in the GUI (in Nexenta, it is called the Volume).

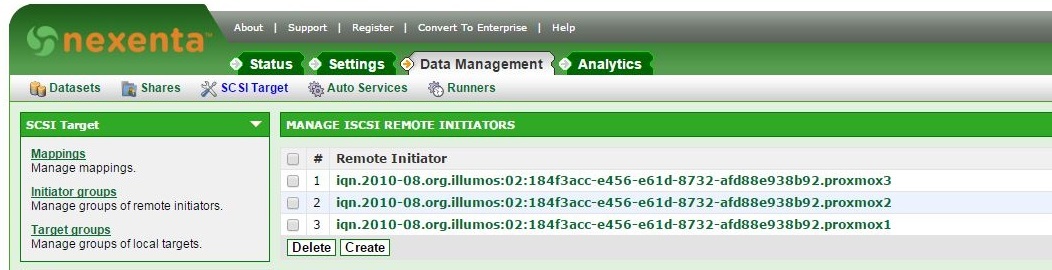

Next, go to the SCSI Target section and sequentially create:

Further, in accordance with the manual :

Copy the key to each node:

You can hook up the iSCSI storage in Proxmox in two ways:

Through the ISCSI menu in adding storage (you will have to create an additional LVM) - see the manual .

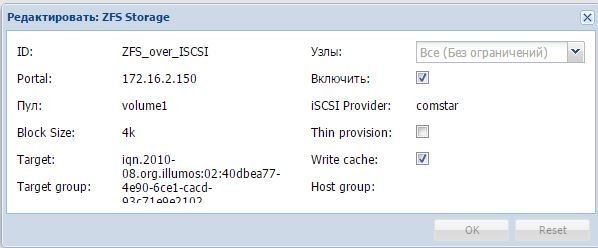

Through the menu ZFS over iSCSI - see the manual .

We will go the second way, as it gives us the opportunity to create and store snapshots. Nexenta can also do this.

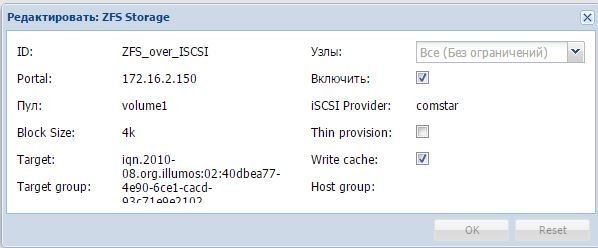

The configuration process in the GUI looks like this:

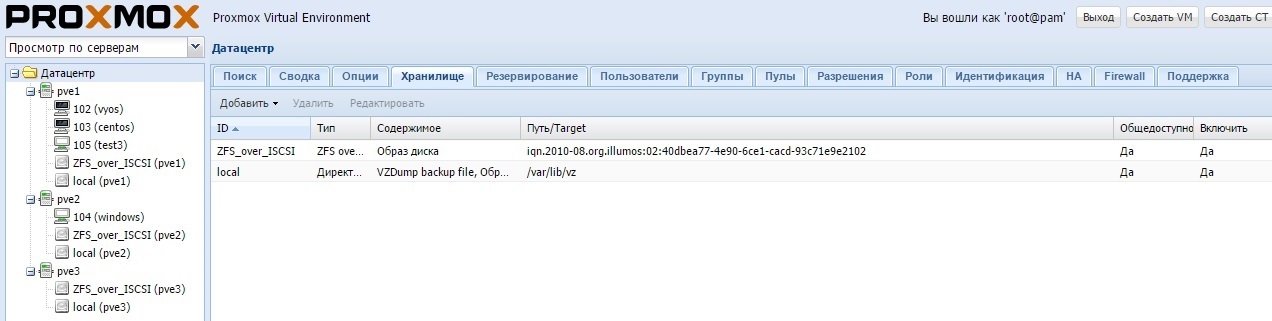

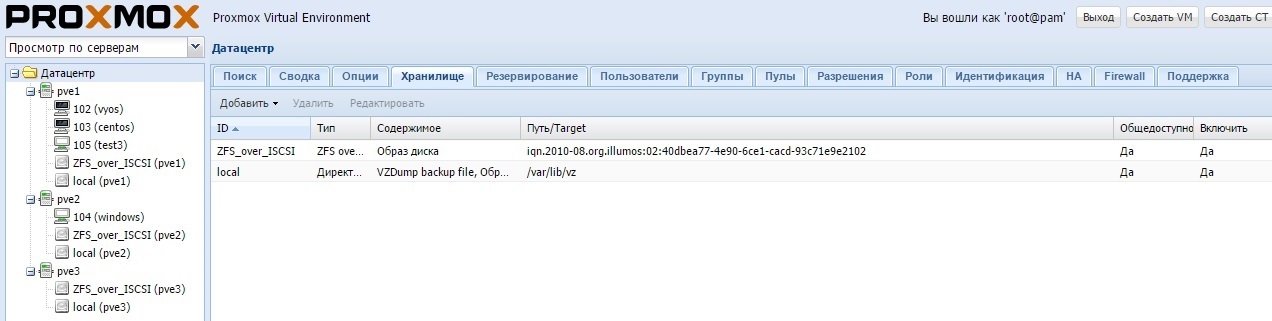

The result is:

When setting up, do not confuse the pool name (in nexenta it is similar to Datastore):

Now we can choose how and what to back up.

We can make an automatic backup of VM to remote storage directly from Proxmox:

Those. go to the “Storage” tab and add an NFS array. This can be either an ordinary NAS storage, or an xNIX server with a folder accessible via NFS (which is more convenient for someone). Then, we specify contents (backup).

Alternatively, you can replicate with Nexenta:

Implemented in the GUI tabs Data Management -> Auto Services -> Auto-Tier Services -> Create .

Here we mean a remote repository (or just a Linux machine) running the rsync service. Therefore, before this we need to create a connection between the hosts.

The Settings tabs -> Network -> SSH-bind help raise the connection.

Fully customizable via GUI.

You can also view the status in the console:

Now we’ll check the performance of the cluster.

Install the VM, with Windows, with the disk in the shared storage, and try the migration manually:

Works!

Ok, now we check its fault tolerance, turn off the server through IPMI. We are waiting for the move. The machine automatically migrates in a minute and a half.

What is the problem? Here it is necessary to understand that the fencing mechanism in version 4.x has changed. Those. Whatchdog fencing, which does not have active hardware support, is currently working. Will be fixed in version 4.2.

So, what we got in the end?

And we got a Production-ready cluster that supports most of the OS, with a simple management interface, multiple data backup (this is also Proxmox and Nexentastor with snapshots and replication).

Plus, we always have the ability to scale and add features, both from the side of Proxmox and from the side of Nexenta (in this case, you will have to buy a license).

And all this is completely free!

In my opinion, setting all this does not require any special time or detailed study of various manuals.

Of course, the rake is not without some, it’s a comparison with ESXi + VMWare vCenter in favor of the latter. However, you can always ask a question on the support forum!

As a result, almost 100% of the functionality, most often used by the administrator of a small project (company), we immediately got out of the box. Therefore, I recommend everyone to think, and is it worth spending on unnecessary features, so long as they are licensed?

PS In the above experiment, the equipment was used:

4 x STSS Flagman RX237.4-016LH Servers consisting of:

Three of the four servers were used as nodes, one was packed with 2TB disks and used as a storage.

In the first experiment, a finished storage Infortrend EonNAS 3016R was used as a NAS .

The equipment was chosen not to test performance, but to evaluate the concept of the solution as a whole.

It is possible that there are more optimal options for implementation. However, performance testing in different configurations was not included in the scope of this article.

Thank you for your attention, waiting for your comments!

And how do you run all of this on a test bench to make sure the choice is right?

Given the exchange rate of a certain well-known currency, you want something simple, without frills and, if possible, free, especially for a small or medium-sized company (a startup) on a limited budget.

What do we need?

- A hypervisor compatible with the hardware

- Cluster with access to admin panel via web interface

- High Availability for Virtual Machines in a Cluster

- Backup and restore function out of the box

- Simple enough for an average administrator (yesterday's student) to understand and operate.

Among open-source solutions, Proxmox is the easiest to install and configure. So as not to offend fans of oVirt and the like (this is not a promotional article), I'll just say that, in my opinion, Proxmox best meets the initial requirement of easy installation and administration. Also, I repeat, we are not building a data center, only a cluster of 2-3 nodes for a small company.

As hypervisors it uses KVM and LXC: KVM guests (Linux, *BSD, Windows and others) run with minimal performance loss, and Linux under LXC runs with practically none.

So, let's go:

Installing the hypervisor is as simple as it gets; download it from here:

It installs in literally a few clicks plus entering the admin password.

After that, the console shows the address of the web interface, in the form

172.16.2.150:8006 (here and below, addresses are from the test network).

Next, install the second and third nodes, with the same result.


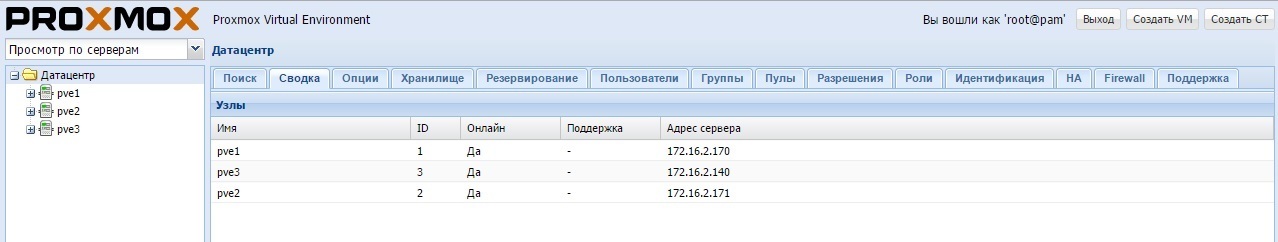

Run the cluster:

1. Set up hosts on pve1.local:

        root@pve1:~# nano /etc/hosts
        127.0.0.1 localhost.localdomain localhost
        172.16.2.170 pve1.local pve1 pvelocalhost
        172.16.2.171 pve2.local pve2

2. Set up hosts on pve2.local:

        root@pve2:~# nano /etc/hosts
        127.0.0.1 localhost.localdomain localhost
        172.16.2.171 pve2.local pve2 pvelocalhost
        172.16.2.170 pve1.local pve1

3. By analogy, configure hosts on pve3.local

4. On the server pve1.local we execute:

        root@pve1:~# pvecm create cluster

5. On the server pve2.local we execute:

        root@pve2:~# pvecm add pve1

6. In the same way, we configure and add the third pve3.local host to the cluster.
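The hosts files in steps 1 to 3 all follow one pattern: every node lists all cluster members, and only its own line carries the `pvelocalhost` alias. A minimal sketch of that pattern is below. `hosts_entries` is a hypothetical helper name, not part of Proxmox, and the addresses are the ones from the test network above.

```shell
#!/bin/sh
# Sketch: print the /etc/hosts lines for one node of the cluster.
# hosts_entries is a hypothetical helper, not a Proxmox tool.
# Usage: hosts_entries <local-node> <name=ip> [<name=ip> ...]
hosts_entries() {
    local_node=$1
    shift
    echo "127.0.0.1 localhost.localdomain localhost"
    for pair in "$@"; do
        name=${pair%%=*}   # part before "="
        ip=${pair#*=}      # part after "="
        if [ "$name" = "$local_node" ]; then
            # Only the local node gets the pvelocalhost alias.
            echo "$ip $name.local $name pvelocalhost"
        else
            echo "$ip $name.local $name"
        fi
    done
}
```

For example, `hosts_entries pve1 pve1=172.16.2.170 pve2=172.16.2.171` prints the lines used on pve1. Once all nodes have joined, `pvecm status` and `pvecm nodes` on any node show the quorum state and the member list.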

Update all nodes:

We align the repository:

root@pve1:~# nano /etc/apt/sources.list deb http://ftp.debian.org.ru/debian jessie main contrib # PVE pve-no-subscription repository provided by proxmox.com, NOT recommended for production use deb http://download.proxmox.com/debian jessie pve-no-subscription # security updates deb http://security.debian.org/ jessie/updates main contrib Commenting on the unnecessary repository:

root@pve1:~# nano /etc/apt/sources.list.d/pve-enterprise.list # deb https://enterprise.proxmox.com/debian jessie pve-enterprise And updated (at each node, respectively):

root@pve1:~# apt-get update && apt-get dist-upgrade That's it, the cluster is ready for battle!
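Since the repository change has to be repeated on every node, it may be convenient to script it rather than edit the file by hand. The sketch below comments out every active `deb` line in a list file. `disable_repo` is a hypothetical helper name; the file path in the usage note is the one from the article.

```shell
#!/bin/sh
# Sketch: comment out every active "deb" line in an APT list file.
# disable_repo is a hypothetical helper, not a Proxmox or APT tool.
disable_repo() {
    list_file=$1
    # Lines that start with "deb " get a "# " prefix; lines that are
    # already commented do not match, so repeated runs change nothing.
    sed 's/^deb /# deb /' "$list_file" > "$list_file.tmp" &&
        mv "$list_file.tmp" "$list_file"
}
```

On a node this would be called as `disable_repo /etc/apt/sources.list.d/pve-enterprise.list`, followed by the same `apt-get update && apt-get dist-upgrade`.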

Here, we already have a backup function (called redundancy) out of the box, which almost immediately impresses!

Storage:

Next comes the question of choosing shared storage.

We choose iSCSI storage as the most budget-friendly option:

In principle, a single interface is enough to set it up, but a cluster must not have a single point of failure, so it is better to use multipath on the Proxmox side or interface bonding on the storage side.

You could take some kind of commercial storage here, a disk shelf and so on.

In fact, the first test was run with a solution from Infortrend.

But then again, what do you do if the budget is extremely tight (or simply does not exist)?!

The easiest way is to fill whatever hardware you have with disks and turn it into a storage server that does the job.

So we need the following capabilities (bearing in mind that the company may grow sharply):

- Unified storage: NAS / SAN

- iSCSI target functionality

- CIFS, NFS, HTTP, FTP Protocols

- RAID 0,1,5,6,10 support

- Bare metal or virtualization installation

- 8TB+ journaled filesystems

- Filesystem Access Control Lists

- Point In Time Copy (snapshots) support

- Dynamic volume manager

- Powerful web-based management

- Block level remote replication

- High Availability clustering

- Key features must be present in the Open-Source / Community version.

After some agonizing, the choice came down to OpenFiler and NexentaStor.

Of course, I would have liked to use Starwind or FreeNAS (NAS4Free), but the former needs Windows and some shamanism with iSCSI to work, while the other has a good file clustering function.

OpenFiler, unfortunately, has a meager GUI, and its latest version dates from 2011. That leaves NexentaStor.

Of course, the active-active scheme in a Nexenta cluster is a paid feature, should you need it later. Also, if the storage has two nodes (controllers), support for the second one costs money too! In fact, all plugins are available only in the Enterprise version.

However, what the Community version offers covers most of the initial needs: 18TB of storage space, ZFS with snapshots, and replication out of the box!

Installing NexentaStor Community Edition:

First of all, study the Compatibility List.

Download the distribution from here (registration is required to get the installation key).

The installation procedure is as simple as it can be. During it, we set the IP address and port that will be used for configuration through the GUI.

Next, following the wizard's prompts, we set the password and create the array in the GUI (in Nexenta it is called a Volume).

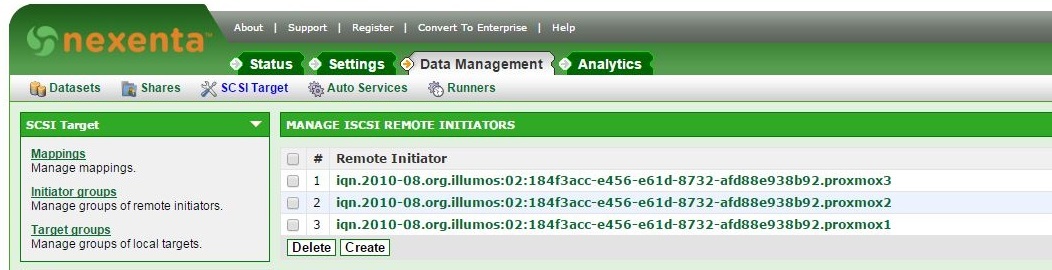

Next, go to the SCSI Target section and sequentially create:

- Target Portal Groups

- Targets

- Remote Initiators - for each node:

Then, following the manual:

    root@pve1:~# mkdir /etc/pve/priv/zfs
    root@pve1:~# ssh-keygen -f /etc/pve/priv/zfs/172.16.2.150_id_rsa
    root@pve1:~# ssh-copy-id -i /etc/pve/priv/zfs/172.16.2.150_id_rsa.pub root@172.16.2.150

Check the key from each node by logging in to the storage:

    root@pve1:~# ssh -i /etc/pve/priv/zfs/172.16.2.150_id_rsa root@172.16.2.150

You can hook up the iSCSI storage in Proxmox in two ways:

Through the ISCSI menu in adding storage (you will have to create an additional LVM) - see the manual .

Through the menu ZFS over iSCSI - see the manual .

We will go the second way, as it gives us the opportunity to create and store snapshots. Nexenta can also do this.
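ZFS over iSCSI reaches the storage over SSH with the key created earlier, and the key file is named after the portal address, so a typo in the address in either place breaks the connection. A small sketch that derives the path from the address and tests the login is below. `zfs_key_path` and `check_storage_login` are hypothetical helper names; `BatchMode=yes` is a standard OpenSSH option that makes the check fail instead of prompting for a password.

```shell
#!/bin/sh
# Sketch: build the key path for a ZFS over iSCSI portal and test the login.
# Both function names are hypothetical helpers, not Proxmox tools.
zfs_key_path() {
    portal_ip=$1
    echo "/etc/pve/priv/zfs/${portal_ip}_id_rsa"
}

check_storage_login() {
    portal_ip=$1
    # BatchMode=yes: fail rather than ask for a password if the key is rejected.
    ssh -i "$(zfs_key_path "$portal_ip")" -o BatchMode=yes "root@$portal_ip" true
}
```

Running `check_storage_login 172.16.2.150` on each node returns success only if the key login works from that node.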

The configuration process in the GUI looks like this:

The result is:

When setting up, do not confuse the pool name (in Nexenta it is similar to Datastore):

    root@nexenta:~# zpool status
      pool: volume1
     state: ONLINE
      scan: none requested
    config:
            NAME        STATE     READ WRITE CKSUM
            volume1     ONLINE       0     0     0
              c0t1d0    ONLINE       0     0     0
    errors: No known data errors

Now we can choose how and what to back up.

We can back up VMs automatically to remote storage directly from Proxmox:

That is, go to the “Storage” tab and add an NFS storage. This can be either an ordinary NAS or a *nix server with a folder exported over NFS (whichever is more convenient). Then set its content type to backup.
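The same NFS backup target can probably be added from the console with `pvesm`, and a one-off backup started with `vzdump`. The sketch below only prints the commands so they can be reviewed before running. `backup_commands` is a hypothetical helper, and the storage ID, server address and export path in the usage note are placeholders, not values from the test setup.

```shell
#!/bin/sh
# Sketch: print the commands that register an NFS share as backup storage
# and dump one VM to it. backup_commands is a hypothetical helper.
# Usage: backup_commands <storage-id> <nfs-server> <export-path> <vmid>
backup_commands() {
    storage_id=$1
    nfs_server=$2
    export_path=$3
    vmid=$4
    # Register the share and allow only backup content on it.
    echo "pvesm add nfs $storage_id --server $nfs_server --export $export_path --content backup"
    # Snapshot mode backs up the VM without stopping it.
    echo "vzdump $vmid --storage $storage_id --mode snapshot"
}
```

For example, `backup_commands backup-nfs 192.0.2.10 /export/backup 100` prints two commands that can be pasted into a root shell on any node.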

Alternatively, you can replicate with Nexenta:

This is set up in the GUI under Data Management -> Auto Services -> Auto-Tier Services -> Create.

The target here is remote storage (or just a Linux machine) running the rsync service, so first we need to set up a connection between the hosts.

The connection is established under Settings -> Network -> SSH-bind.

HA Setup:

HA is configured entirely through the GUI.

- Select the datacenter and click the HA tab at the top.

- Click Groups below and create a group of hosts to which VMs can migrate.

- Next, click Resources and add the VM we want there.
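The same steps can likely be done from the console with `ha-manager`, which in the 4.x series has `groupadd` and `add` subcommands. The sketch below prints the commands instead of running them. `ha_commands` is a hypothetical helper, and the group name in the usage note is a placeholder.

```shell
#!/bin/sh
# Sketch: print the ha-manager commands matching the GUI steps above.
# ha_commands is a hypothetical helper, not a Proxmox tool.
# Usage: ha_commands <group> <comma-separated-nodes> <vmid>
ha_commands() {
    group=$1
    nodes=$2
    vmid=$3
    # Group of hosts the VM is allowed to migrate to.
    echo "ha-manager groupadd $group --nodes $nodes"
    # Put the VM under HA control as a member of that group.
    echo "ha-manager add vm:$vmid --group $group"
}
```

For example, `ha_commands ha-group pve1,pve2,pve3 100` prints the two commands for VM 100.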

You can also view the status in the console:

    root@pve1:~# ha-manager status
    quorum OK
    master pve1 (active, Sun Mar 20 14:55:59 2016)
    lrm pve1 (active, Sun Mar 20 14:56:02 2016)
    lrm pve2 (active, Sun Mar 20 14:56:06 2016)
    service vm:100 (pve1, started)

Now let's check that the cluster works.

Install a Windows VM with its disk on the shared storage and try migrating it manually:

Works!
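To confirm from the console that the VM is still running under HA after a migration, the `ha-manager status` output shown earlier can be checked in a script. The sketch below assumes the output format from that listing. `ha_service_started` is a hypothetical helper that reads the status text on standard input.

```shell
#!/bin/sh
# Sketch: succeed only if the status text reports "quorum OK" and the
# given service is in the "started" state.
# ha_service_started is a hypothetical helper; it reads stdin.
# Usage: ha-manager status | ha_service_started vm:100
ha_service_started() {
    sid=$1
    awk -v sid="$sid" '
        $1 == "quorum" && $2 == "OK" { quorum = 1 }
        $1 == "service" && $2 == sid && $0 ~ /started\)$/ { started = 1 }
        END { exit !(quorum && started) }
    '
}
```

A call such as `ha-manager status | ha_service_started vm:100 && echo running` fits into a monitoring script or a post-migration check.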

OK, now let's check fault tolerance: we power off the server through IPMI and wait for the VM to move. The machine migrates automatically in a minute and a half.

Why so long? You need to understand that the fencing mechanism changed in version 4.x. That is, for now only watchdog fencing works, which has no active hardware support. This will be fixed in version 4.2.

Conclusion

So, what did we get in the end?

We got a production-ready cluster that supports most operating systems, with a simple management interface and several layers of data backup (both Proxmox itself and NexentaStor with snapshots and replication).

Plus, we can always scale and add features, on the Proxmox side as well as on the Nexenta side (in the latter case you will have to buy a license).

And all this is completely free!

In my opinion, setting all this up takes neither much time nor a detailed study of various manuals.

Of course, there are a few rakes to step on, and here the comparison with ESXi + VMware vCenter is in favor of the latter. However, you can always ask a question on the support forum!

As a result, we got almost 100% of the functionality most often used by the administrator of a small project (company) right out of the box. So I recommend that everyone consider whether it is worth paying license fees for features they do not need.

P.S. The following equipment was used in this experiment:

4 x STSS Flagman RX237.4-016LH servers, each consisting of:

- X9DRi-LN4F +

- 2 x Xeon E5-2609

- 8 x 4GB DDR-III ECC

- ASR-6805 + FBWC

Three of the four servers were used as nodes; the fourth was filled with 2TB disks and used as storage.

In the first experiment, a ready-made Infortrend EonNAS 3016R was used as the NAS.

The equipment was chosen not to test performance, but to evaluate the concept of the solution as a whole.

It is possible that there are more optimal options for implementation. However, performance testing in different configurations was not included in the scope of this article.

Thank you for your attention; I look forward to your comments!

Source: https://habr.com/ru/post/279589/
