Testing. Fundamental theory

Good day!

I want to collect the essential testing theory that comes up in interviews for trainee, junior, and to some extent middle positions. In fact, I have already collected quite a lot. The purpose of this post is to fill in what is missing together and to correct, rephrase, or extend what is already here, so that the result is good enough to review before any upcoming interview. So, colleagues, read on: some of you will pick up something new, some will systematize what you already know, and some will contribute.

The result should be an exhaustive cheat sheet, which should be re-read on the way to the interview.


None of what follows was invented by me; it is taken from various sources, chosen where I liked the wording and definitions best. The list of sources is at the end.

Topics: definition of testing, quality, verification / validation, objectives, stages, test plan, test plan items, test design, test design techniques, traceability matrix, test case, checklist, defect, error / defect / failure, bug report, severity vs priority, test levels, test types, approaches to integration testing, testing principles, static and dynamic testing, exploratory / ad-hoc testing, requirements, bug life cycle, software development stages, decision table, QA / QC / test engineer, diagram of relationships.

Go!

Software testing is the verification of the correspondence between the actual and expected behavior of a program, carried out on a finite set of tests selected in a particular way. In a broader sense, testing is one of the quality control techniques and includes work planning (Test Management), test design (Test Design), test execution (Test Execution), and analysis of the results (Test Analysis).

Software quality is the set of characteristics of software that bear on its ability to satisfy stated and implied needs. [ISO 8402:1994 Quality management and quality assurance]

Verification is the process of evaluating a system or its components to determine whether the results of the current development stage satisfy the conditions set at the beginning of that stage [IEEE]. That is, it checks whether the goals, deadlines, and development tasks defined at the start of the current phase are being met.

Validation is determining whether the software being developed meets the expectations and needs of the user and the requirements for the system [BS7925-1].

You may also come across a different interpretation:

Evaluating whether a product conforms to explicit requirements (specifications) is verification, while evaluating whether it meets the expectations and needs of users is validation. These concepts are also often defined as follows:

Validation - 'is this the right specification?'.

Verification - 'is the system correct to specification?'.

Test objectives

Increase the likelihood that the application under test will work correctly under any circumstances.

Increase the likelihood that the application under test will meet all the described requirements.

Provide up-to-date information on the current state of the product.

Testing steps:

1. Analysis

2. Development of a testing strategy and planning of quality control procedures

3. Work with requirements

4. Creation of test documentation

5. Testing the prototype

6. Basic testing

7. Stabilization

8. Operation

A test plan is a document that describes the entire scope of testing work: from the description of the test object, strategy, schedule, and entry and exit criteria, to the equipment and special knowledge required, as well as a risk assessment with options for resolving the risks.

It answers the questions:

What should be tested?

What will you test?

How will you test?

When will you test?

What are the criteria for starting testing?

What are the criteria for ending testing?

Key points of the test plan

The IEEE 829 standard lists the items that a test plan should (or at least may) consist of:

a) Test plan identifier;

b) Introduction;

c) Test items;

d) Features to be tested;

e) Features not to be tested;

f) Approach;

g) Item pass / fail criteria;

h) Suspension criteria and resumption requirements;

i) Test deliverables;

j) Testing tasks;

k) Environmental needs;

l) Responsibilities;

m) Staffing and training needs;

n) Schedule;

o) Risks and contingencies;

p) Approvals.

Test design is the stage of the software testing process at which test cases are designed and created in accordance with previously defined quality criteria and testing objectives.

Roles responsible for test design:

• Test analyst - determines "WHAT to test?"

• Test designer - determines "HOW to test?"

Test design techniques

• Equivalence Partitioning (EP). For example, if the range of valid values is 1 to 10, you choose one valid value inside the interval, say 5, and one invalid value outside it, say 0.

• Boundary Value Analysis (BVA). Taking the example above, for positive testing we choose the minimum and maximum limits (1 and 10), and for negative testing the values just below and just above the limits (0 and 11). Boundary value analysis can be applied to fields, records, files, or any kind of entity that has constraints.

• Cause / Effect (CE). As a rule, this means entering combinations of conditions (causes) to get a response from the system (effect). For example, you are checking the ability to add a customer using a particular screen form. To do this, you fill in several fields, such as "Name", "Address", and "Phone Number", and then press the "Add" button: this is the cause. After the "Add" button is pressed, the system adds the customer to the database and displays the customer's number on the screen: this is the effect.

• Error Guessing (EG). The test analyst uses knowledge of the system and the ability to interpret the specification to "predict" under which input conditions the system may produce an error. For example, the specification says: "the user must enter the code". The test analyst will think: "What if I do not enter the code?", "What if I enter the wrong code?", and so on. This is error guessing.

• Exhaustive Testing (ET) is the extreme case. With this technique you check all possible combinations of input values, which in principle should find every problem. In practice the method cannot be applied because of the huge number of input values.
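The first two techniques can be sketched in a few lines of Python for the 1 to 10 range used in the examples above. This is only an illustration: `is_valid` is a hypothetical validator standing in for the system under test, and the helper names are made up.

```python
def equivalence_values(low: int, high: int) -> dict:
    """One representative per partition: below the range, inside it, above it."""
    return {
        "below": low - 1,
        "inside": (low + high) // 2,
        "above": high + 1,
    }


def boundary_values(low: int, high: int) -> list:
    """Both limits plus the nearest values just outside them."""
    return [low - 1, low, high, high + 1]


def is_valid(value: int, low: int = 1, high: int = 10) -> bool:
    """Hypothetical validator under test: accepts low..high inclusive."""
    return low <= value <= high


ep = equivalence_values(1, 10)
bva = boundary_values(1, 10)

# Run the hypothetical validator against every generated value.
ep_results = {name: is_valid(value) for name, value in ep.items()}
bva_results = [(value, is_valid(value)) for value in bva]

print(ep_results)
print(bva_results)
```

Equivalence partitioning needs only one value per class, while boundary value analysis concentrates on the edges, where off-by-one mistakes usually hide.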

The traceability matrix is a two-dimensional table that maps the functional requirements of the product to the prepared test cases. The column headings are the requirements, and the row headings are the test cases. A mark at an intersection means that the requirement in that column is covered by the test case in that row.

QA engineers use the traceability matrix to validate the test coverage of the product. The matrix is an integral part of the test plan.
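A small sketch of such a matrix in Python, assuming made-up requirement and test case identifiers (`REQ-1`, `TC-1`, and so on are hypothetical). It builds the table and also lists the requirements that no test case covers, which is the usual reason to keep the matrix at all.

```python
# Hypothetical requirement IDs and test-case IDs, used only for illustration.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
coverage = {
    "TC-1": {"REQ-1"},
    "TC-2": {"REQ-1", "REQ-2"},
}


def build_matrix(requirements, coverage):
    """Rows are test cases, columns are requirements; True marks coverage."""
    return {
        test_case: [req in covered for req in requirements]
        for test_case, covered in coverage.items()
    }


def uncovered(requirements, coverage):
    """Requirements that are not covered by any test case."""
    covered = set().union(*coverage.values())
    return [req for req in requirements if req not in covered]


matrix = build_matrix(requirements, coverage)
gaps = uncovered(requirements, coverage)

print(matrix)
print(gaps)
```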

A test case is an artifact that describes the set of steps, specific conditions, and parameters needed to verify the implementation of the function under test or a part of it.

Example:

| Action | Expected Result | Test Result (passed / failed / blocked) |
|---|---|---|
| Open page "login" | Login page is opened | |

Each test case should have 3 parts:

PreConditions: a list of actions that bring the system into a state suitable for the main check, or a list of conditions whose fulfillment shows that the system is in such a state.

Test Case Description: a list of actions that move the system from one state to another to obtain a result from which we can conclude whether the implementation satisfies the stated requirements.

PostConditions: a list of actions that return the system to its initial state (the state before the test).

Types of test cases:

By expected result, test cases are divided into positive and negative:

• A positive test case uses only valid data and checks that the application correctly executes the called function.

• A negative test case uses both valid and invalid data (at least one invalid parameter). It aims to check exceptional situations (that the validators fire) and also checks that the called function is not executed when a validator fires.
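To make the distinction concrete, here is a minimal sketch with one positive and one negative check. `parse_age` is a hypothetical function under test, invented for this example, and its 0 to 150 range is an assumption.

```python
def parse_age(text: str) -> int:
    """Hypothetical function under test: accepts whole numbers from 0 to 150."""
    if not text.isdigit():
        raise ValueError("age must contain digits only")
    age = int(text)
    if age > 150:
        raise ValueError("age is out of range")
    return age


def rejects(text: str) -> bool:
    """Negative check: True if the validator fired and no value was returned."""
    try:
        parse_age(text)
    except ValueError:
        return True
    return False


# Positive test case: valid data, the function does its job.
positive_result = parse_age("42")

# Negative test case: invalid data, the validator must fire.
negative_rejected = rejects("forty")

print(positive_result, negative_rejected)
```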

A checklist is a document that describes what should be tested. A checklist can have very different levels of detail. How detailed it is depends on the reporting requirements, how well the staff know the product, and the complexity of the product.

As a rule, a checklist contains only actions (steps), without the expected results. A checklist is less formal than a test case. It is appropriate when test cases would be excessive. Checklists are also associated with agile approaches to testing.

A defect (also known as a bug) is a discrepancy between the actual result of running the program and the expected result. Defects are found at the software testing stage, when the tester compares the results produced by the program (component or design) with the expected result described in the requirements specification.

Error - a user error, that is, the user tries to use the program in a way it was not intended to be used.

Example: entering letters in a field that expects numbers (age, quantity of goods, etc.).

A good-quality program anticipates such situations and displays an error message, for example with a red cross.

Bug (defect) - an error made by a programmer (or a designer, or anyone else involved in development), that is, something in the program does not go as planned and the program gets out of control. For example, user input is not checked at all, so invalid data causes crashes or other "joys" in the program's operation. Or the program is built internally in a way that does not match what is expected of it from the start.

Failure - a failure (not necessarily a hardware one) of a component, the whole program, or the system. That is, some defects lead to failures (a defect caused the failure) and some do not, UI defects for example. A hardware failure that has nothing to do with the software is also a failure.

A bug report is a document describing the situation or sequence of actions that led to incorrect behavior of the test object, stating the causes and the expected result.

Header

Short Description: a brief description of the problem that clearly indicates the cause and type of the error.

Project: the name of the project under test.

Component: the name of the part or function of the product under test.

Version: the version in which the error was found.

Severity: the most common system for grading the severity of a defect has five levels:

• S1 Blocker

• S2 Critical

• S3 Major

• S4 Minor

• S5 Trivial

Priority: the priority of the defect:

• P1 High

• P2 Medium

• P3 Low

Status: the status of the bug. It depends on the procedure in use and on the bug workflow and life cycle.

Author: the creator of the bug report.

Assigned To: the name of the person assigned to resolve the problem.

Environment

OS / Service Pack, etc. / Browser + version / ...: information about the environment in which the bug was found: operating system, service pack, and for web testing the browser name and version, etc.

...

Description

Steps to Reproduce: the steps by which the situation that led to the error can be easily reproduced.

Actual Result: the result obtained after performing the steps to reproduce.

Expected Result: the expected correct result.

Attachments

Attachment: a file with logs, a screenshot, or any other document that can help clarify the cause of the error or point to a way of solving the problem.

Severity vs Priority

Severity is an attribute that characterizes the impact of a defect on the operation of the application.

Priority is an attribute that indicates the order in which a task should be performed or a defect fixed. It can be seen as a manager's work-planning tool. The higher the priority, the sooner the defect needs to be fixed.

Severity is set by the tester.

Priority is set by the manager, team lead, or customer.
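The fact that the two attributes are independent is easy to show in code. The sketch below models them as separate fields; the class names and the two sample reports are invented for illustration, and the level names follow the S1 to S5 and P1 to P3 grading used in this post.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    BLOCKER = 1
    CRITICAL = 2
    MAJOR = 3
    MINOR = 4
    TRIVIAL = 5


class Priority(IntEnum):
    HIGH = 1
    MEDIUM = 2
    LOW = 3


@dataclass
class BugReport:
    summary: str
    severity: Severity  # set by the tester
    priority: Priority  # set by the manager, team lead, or customer


# Hypothetical reports, invented for illustration.
reports = [
    BugReport("Crash in a rarely used legacy export", Severity.CRITICAL, Priority.LOW),
    BugReport("Typo in the company name on the home page", Severity.TRIVIAL, Priority.HIGH),
]

# The fix order follows priority, not severity.
fix_order = sorted(reports, key=lambda report: report.priority)

for report in fix_order:
    print(report.priority.name, report.severity.name, report.summary)
```

Here the trivial typo is fixed first because it is highly visible, while the critical crash waits because almost nobody hits it.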

Severity levels

S1 Blocker

A blocking error that makes the application inoperable, so that further work with the system under test or its key functions becomes impossible. The problem must be resolved for the system to continue functioning.

S2 Critical

A critical error: key business logic that works incorrectly, a security hole, a problem that temporarily crashes the server or makes some part of the system inoperable, with no way to work around the problem using other entry points. The problem must be resolved before further work with the key functions of the system under test is possible.

S3 Major

A significant error: part of the main business logic works incorrectly. The error is not critical, or the function under test can still be used through other entry points.

S4 Minor

A minor error that does not violate the business logic of the part of the application under test; an obvious user interface problem.

S5 Trivial

A trivial error that does not concern the business logic of the application: a poorly reproducible problem that is barely noticeable through the user interface, a problem in third-party libraries or services, a problem that has no effect on the overall quality of the product.

Priority levels

P1 High

The error must be fixed as soon as possible because its presence is critical for the project.

P2 Medium

The error must be fixed; its presence is not critical, but it does require a mandatory fix.

P3 Low

The error must be fixed; its presence is not critical and does not require an urgent fix.

Testing levels

1. Unit Testing

Component (unit) testing verifies functionality and looks for defects in the parts of the application that are accessible and can be tested separately (program modules, objects, classes, functions, etc.).

2. Integration Testing

Checks the interaction between system components after component testing.

3. System Testing

The main task of system testing is to verify both functional and non-functional requirements in the system as a whole. It reveals defects such as incorrect use of system resources, unforeseen combinations of user-level data, incompatibility with the environment, unforeseen usage scenarios, missing or incorrect functionality, inconvenience of use, and so on.

4. Operational Testing (Release Testing)

Even if the system meets all the requirements, it is important to make sure that it meets the user's needs and fulfills its role in its operating environment, as defined in the business model of the system. Keep in mind that the business model itself may contain errors. That is why it is important to carry out operational testing as the final validation step. In addition, testing in the operating environment reveals non-functional problems, such as conflicts with other systems that are related in the business domain or in the software and hardware environments, insufficient system performance in the operating environment, and so on. Finding such things at the deployment stage is obviously a critical and expensive problem. That is why it is so important to carry out not only verification but also validation from the earliest stages of software development.

5. Acceptance Testing

A formal testing process that checks whether the system meets the requirements and is conducted in order to:

• determine whether the system meets the acceptance criteria;

• let the customer or another authorized person decide whether or not the application is accepted.

Types of testing

Functional testing types

• Functional Testing

• Security and Access Control Testing

• Interoperability Testing

Non-functional testing types

• All kinds of performance testing:

o load testing (Performance and Load Testing)

o stress testing (Stress Testing)

o stability or reliability testing (Stability / Reliability Testing)

o volume testing (Volume Testing)

• Installation Testing

• Usability Testing

• Failover and Recovery Testing

• Configuration Testing

Change-related testing types

• Smoke Testing

• Regression Testing

• Retesting

• Build Verification Test

• Sanity Testing

Functional testing examines predetermined behavior and is based on an analysis of the functional specifications of a component or of the system as a whole.

Security testing is a testing strategy used to check the security of a system and to analyze the risks involved in providing a holistic approach to protecting the application against hacker attacks, viruses, and unauthorized access to confidential data.

Interoperability testing is functional testing that checks the ability of an application to interact with one or more components or systems; it includes compatibility testing and integration testing.

Load testing is automated testing that simulates the work of a certain number of business users on a shared resource.

Stress testing checks how well the application and the system as a whole perform under stress, and also evaluates the system's ability to recover, i.e. to return to normal once the stress has stopped. Stress in this context may be an increase in the intensity of operations to very high values or an emergency change in the server configuration. One of the tasks of stress testing may also be to evaluate performance degradation, so the objectives of stress testing can overlap with those of performance testing.

Volume testing. The task of volume testing is to assess performance as the amount of data in the database grows.

Stability / reliability testing. The task of stability (reliability) testing is to check that the application keeps working during long-running (many hours) testing at an average load level.

Installation testing is aimed at verifying successful installation and configuration, as well as updating or uninstalling the software.

Usability testing is a testing method aimed at establishing how usable, learnable, understandable, and attractive the product being developed is for its users under specified conditions. This also includes:

User Interface Testing (UI Testing) is a kind of study carried out to determine whether some man-made object (such as a web page, user interface, or device) is convenient for its intended use.

User eXperience (UX) is the feeling a user has while using a digital product, whereas the user interface is the tool that lets the user interact with the web resource.

Failover and recovery testing checks the product under test for its ability to withstand and successfully recover from possible failures caused by software errors, hardware failures, or communication problems (for example, a network failure). The purpose of this type of testing is to check the recovery systems (or the systems that duplicate the main functionality), which, in case of failure, ensure the safety and integrity of the data of the product under test.

Configuration testing is a special type of testing aimed at checking how the software works under different system configurations (declared platforms, supported drivers, different computer configurations, etc.).

Smoke testing is a short cycle of tests performed to confirm that, after the code (new or fixed) has been built, the installed application starts and performs its basic functions.

Regression testing is a type of testing aimed at checking changes made to the application or its environment (a defect fix, a code merge, migration to another operating system, database, web server, or application server) to confirm that existing functionality still works as before. Regression tests can be either functional or non-functional.

Retesting is testing in which the test cases that revealed errors during the previous run are executed again to confirm that those errors have been fixed.

What is the difference between regression testing and retesting?

Retesting checks that the bugs have been fixed.

Regression testing checks that fixing the bugs did not affect other software modules and did not introduce new bugs.

Build testing, or Build Verification Test, is testing aimed at determining whether the released version meets the quality criteria for starting testing. In its goals it is analogous to smoke testing: it is aimed at accepting a new version for further testing or operation. It can go deeper, depending on the quality requirements for the released version.

Sanity testing is narrowly targeted testing sufficient to show that a particular function works according to the requirements stated in the specification. It is a subset of regression testing. It is used to determine the health of a specific part of the application after changes have been made to it or to the environment. It is usually done manually.


Approaches to integration testing:

• Bottom Up Integration

All low-level modules, procedures, or functions are assembled and then tested. After that, the next level of modules is assembled for integration testing. This approach is considered useful when all or nearly all modules of the level being developed are ready. It also helps to judge the application's level of readiness from the test results.

• Top Down Integration

First all the high-level modules are tested, and then the low-level ones are added one by one. All lower-level modules are simulated by stubs with similar functionality and are replaced with the real components as they become ready. In this way testing proceeds from top to bottom.

• Big Bang Integration

All or nearly all of the developed modules are assembled together as a complete system or its main part, and then integration testing is carried out. This approach is very good for saving time. However, if the test cases and their results are not recorded correctly, the integration process itself becomes very complicated, which gets in the way of the testing team achieving the main goal of integration testing.
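The stub replacement used in the top-down approach might look like the sketch below. All class names here are hypothetical: `OrderService` plays the high-level module, `PaymentGatewayStub` simulates a low-level module that is not ready yet, and `RealPaymentGateway` stands in for the finished component that later replaces the stub.

```python
class PaymentGatewayStub:
    """Stub for a low-level module that is not ready yet."""

    def charge(self, amount: int) -> bool:
        return True  # canned answer, no real payment happens


class RealPaymentGateway:
    """Stand-in for the real low-level module once it exists."""

    def __init__(self, balance: int):
        self.balance = balance

    def charge(self, amount: int) -> bool:
        if amount > self.balance:
            return False
        self.balance -= amount
        return True


class OrderService:
    """High-level module under test; it only knows the charge() interface."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount: int) -> str:
        return "confirmed" if self.gateway.charge(amount) else "declined"


# Step 1: test the high-level module against the stub.
with_stub = OrderService(PaymentGatewayStub()).place_order(100)

# Step 2: swap in the real component and run the same scenario.
with_real = OrderService(RealPaymentGateway(balance=50)).place_order(100)

print(with_stub, with_real)
```

The second run exercises a path the stub could never show, which is why the stubs have to be replaced and the tests rerun as real modules arrive.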

Principles of testing

Principle 1 - Testing shows presence of defects

Testing can show that defects are present, but cannot prove that there are none. Testing reduces the likelihood that defects remain in the software, but even if no defects were found, this does not prove that the software is correct.

Principle 2 - Exhaustive testing is impossible

Complete testing using all combinations of inputs and preconditions is physically infeasible, except in trivial cases. Instead of exhaustive testing, risk analysis and prioritization should be used to focus testing efforts more precisely.

Principle 3 - Early testing

To find defects as early as possible, testing activities should start as early as possible in the software or system development life cycle and should be focused on defined objectives.

Principle 4 - Defect clustering

Testing effort should be concentrated in proportion to the expected, and later the actual, density of defects per module. As a rule, most of the defects found during testing, or responsible for most system failures, are contained in a small number of modules.

Principle 5 - Pesticide paradox

If the same tests are run over and over again, eventually this set of test cases will no longer find new defects. To overcome this "pesticide paradox", test cases should be regularly reviewed and revised, and new, different tests should be written to cover all components of the software or system and to find as many defects as possible.

Principle 6 - Testing is context dependent

Testing is done differently depending on the context. For example, safety-critical software is tested differently from an e-commerce site.

Principle 7 - Absence-of-errors fallacy

Finding and fixing defects will not help if the system that was built does not suit the user and does not meet the user's expectations and needs.
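Principle 2 becomes tangible with a little arithmetic. The sketch below counts the input combinations for a hypothetical form; every field name and value count is invented, and the rate of one test per second is an assumption.

```python
import math

# Hypothetical form: the field names and value counts are invented.
field_value_counts = {
    "country": 200,
    "age": 120,
    "account_type": 5,
    "newsletter_opt_in": 2,
    "password (8 lowercase letters)": 26 ** 8,
}

# Every field is independent, so the counts multiply.
total_combinations = math.prod(field_value_counts.values())

# Assumed execution speed: one test per second, around the clock.
seconds_per_test = 1
seconds_per_year = 60 * 60 * 24 * 365
years = total_combinations * seconds_per_test / seconds_per_year

print(f"{total_combinations:,} combinations, about {years:,.0f} years")
```

Even this small form would take over a billion years to test exhaustively at that rate, which is why techniques such as equivalence partitioning and risk-based prioritization exist.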

Static and dynamic testing

Static testing differs from dynamic testing in that it is performed without running the product code. It is carried out by analyzing the program code (code review) or the compiled code. The analysis can be done either manually or with special tools. Its purpose is the early detection of errors and potential problems in the product. Static testing also includes testing of specifications and other documentation.

Exploratory / ad-hoc testing

The simplest definition of exploratory testing is designing and executing tests at the same time.


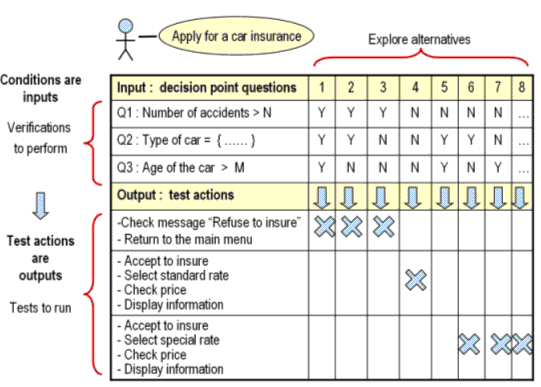

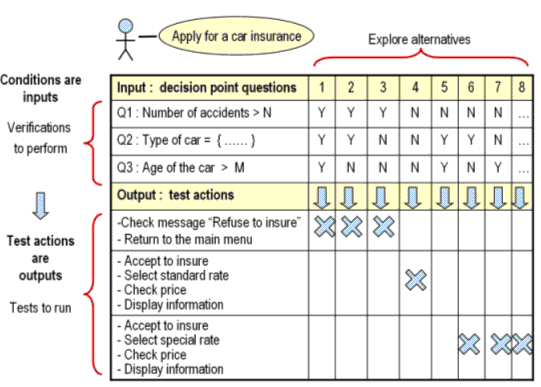

Decision table

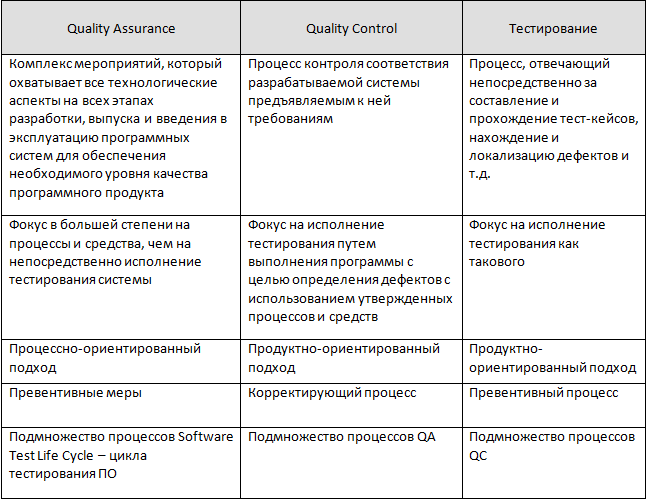

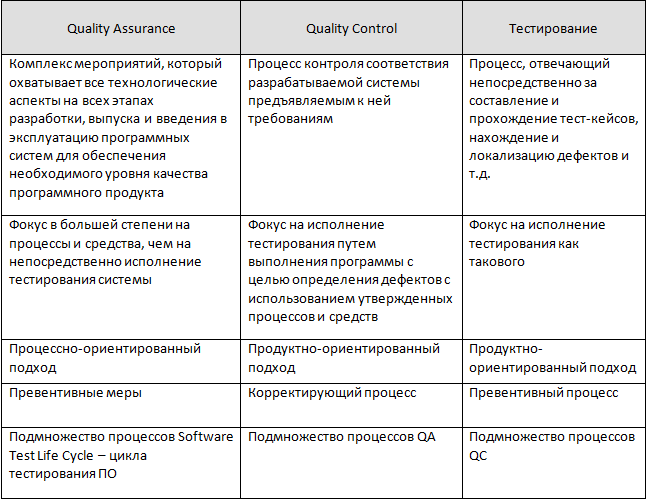
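A decision table can also be written down directly as data. The sketch below is only an illustration with invented login rules: the condition names and the `expected_action` function are hypothetical. It enumerates every combination of conditions and records the expected action for each, so that each row becomes one test case.

```python
from itertools import product

# Hypothetical login rules, invented for illustration.
conditions = ("valid_login", "valid_password")


def expected_action(valid_login: bool, valid_password: bool) -> str:
    """Expected system response for one combination of conditions."""
    if valid_login and valid_password:
        return "open home page"
    return "show error message"


# One row per combination of condition values.
decision_table = [
    dict(zip(conditions, values), action=expected_action(*values))
    for values in product([True, False], repeat=len(conditions))
]

for row in decision_table:
    print(row)
```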

QA/QC/Test Engineer

Testing is part of QC. QC is part of QA.

Sources: www.protesting.ru, www.bugscatcher.net, www.qalight.com.ua, www.thinkingintests.wordpress.com, ISTQB, www.quizful.net, www.bugsclock.blogspot.com, www.zeelabs.com, www.devopswiki.net, www.hvorostovoz.blogspot.com.

I want to collect all the most necessary theory on testing, which is asked at interviews for trainee, junior and a little middle. Actually, I have already collected quite a few. The purpose of this post is to jointly add the missed and correct / paraphrase / add / make what else with what is already there, so that it becomes good and you can take it all and repeat it before the next interview about every occasion. In general, colleagues, I ask under the cat, who to draw something new, who to systematize the old, and who to contribute.

The result should be an exhaustive cheat sheet, which should be re-read on the way to the interview.

')

Everything listed below is not invented by me personally, but taken from various sources, where I personally liked the wording and definition more. At the end of the list of sources.

Subject: test definition, quality, verification / validation, goals, stages, test plan, test plan items, test design, test design techniques, traceability matrix, tets case, checklist, defect, error / deffect / failure, bug report , severity vs priority, test levels, types / types, approaches to integration testing, testing principles, static and dynamic testing, research / ad-hoc testing, requirements, bug life cycle, software development stages, decision table, qa / qc / test engineer, diagram of communications.

Go!

Software testing is a test of the correspondence between the actual and expected behavior of the program, performed on the final test suite selected in a certain way. In a broader sense, testing is one of the techniques of quality control, which includes work planning activities (Test Management), test design (Test Design), Test Execution and analysis of the results (Test Analysis).

Software Quality (Software Quality) is a combination of software characteristics related to its ability to meet established and perceived needs. [ISO 8402: 1994 Quality management and quality assurance]

Verification is the process of evaluating a system or its components to determine if the results of the current development stage satisfy the conditions formed at the beginning of this stage [IEEE]. Those. whether our goals, deadlines, project development tasks, as defined at the beginning of the current phase, are being fulfilled.

Validation is the determination of the compliance of the software being developed to the expectations and needs of the user with the requirements of the system [BS7925-1].

You can also find a different interpretation:

The process of evaluating the conformity of a product to explicit requirements (specifications) is verification (verification), while at the same time evaluating the compliance of a product with the expectations and requirements of users is validation. You can also often come across the following definition of these concepts:

Validation - 'is this the right specification?'.

Verification - 'is the system correct to specification?'.

Test objectives

Increase the likelihood that an application intended for testing will work correctly under any circumstances.

Increase the likelihood that the application intended for testing will meet all the requirements described.

Providing current information on the status of the product at the moment.

Testing steps:

1. Analysis

2. Develop a testing strategy

and planning quality control procedures

3. Work with requirements

4. Creation of test documentation

5. Testing the prototype

6. Basic testing

7. Stabilization

8. Operation

A Test Plan is a document that describes the entire scope of testing, starting with the description of the object, strategy, schedule, criteria for starting and ending testing, up to the equipment necessary for the operation, special knowledge, and risk assessment with options to resolve them .

Answers the questions:

What should be tested?

What will you test?

How will you test?

When will you test?

Criteria for testing.

Criteria for the end of testing.

Key points of the test plan

The IEEE 829 standard lists the items from which the test plan should (let - may) consist:

a) Test plan identifier;

b) Introduction;

c) Test items;

d) Features to be tested;

e) Features not to be tested;

f) Approach;

g) Item pass / fail criteria;

h) Suspension criteria and resumption requirements;

i) Test deliverables;

j) Testing tasks;

k) Environmental needs;

l) Responsibilities;

m) StafÞng and training needs;

n) Schedule;

o) Risks and contingencies;

p) Approvals.

Test design is a stage in the software testing process where test cases are designed and created (test cases) in accordance with previously defined quality criteria and testing objectives.

Roles responsible for the test design:

• Test analyst - determines "WHAT to test?"

• Test Designer - defines “HOW to test?”

Test design techniques

• Equivalent Partitioning (EP) . As an example, you have a range of valid values from 1 to 10, you must choose one valid value inside the interval, say 5, and one invalid value outside the interval - 0.

• Analysis of Boundary Values (Boundary Value Analysis - BVA) . If we take the example above, as the values for positive testing, we choose the minimum and maximum limits (1 and 10), and the values are larger and smaller than the limits (0 and 11). Analysis Boundary values can be applied to fields, records, files, or to any kind of entity with restrictions.

• Cause / Effect (Cause / Effect - CE) . It is, as a rule, entering combinations of conditions (causes) to get a response from the system (Corollary). For example, you check the ability to add a client using a specific screen form. To do this, you will need to enter several fields, such as "Name", "Address", "Phone Number" and then press the "Add" button - this "Reason". After clicking the “Add” button, the system adds the client to the database and displays its number on the screen - this is “Corollary”.

• Error Guessing (EG). The test analyst uses knowledge of the system and the ability to interpret the specification to "predict" under which input conditions the system may produce an error. For example, the specification says: "the user must enter the code". The test analyst will think: "What if I do not enter the code?", "What if I enter the wrong code?", and so on. This is error guessing.

• Exhaustive Testing (ET) is an extreme case. With this technique you check all possible combinations of input values, which in principle should find all the problems. In practice the method is not feasible because of the huge number of input values.
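The first two techniques can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `is_valid` is a hypothetical function under test that accepts integers from 1 to 10, and the chosen values follow the example in the text.

```python
# Hypothetical function under test: accepts integers from 1 to 10 inclusive.
def is_valid(value: int) -> bool:
    return 1 <= value <= 10

LOWER, UPPER = 1, 10

# Equivalence partitioning: one representative value per class.
equivalence_cases = {
    5: True,    # valid class: inside the interval
    0: False,   # invalid class: outside the interval
}

# Boundary value analysis: the limits themselves and their
# nearest neighbours outside the interval.
boundary_cases = {
    LOWER: True,
    UPPER: True,
    LOWER - 1: False,   # 0
    UPPER + 1: False,   # 11
}

def run_cases(cases: dict[int, bool]) -> list[int]:
    """Return the inputs for which is_valid disagrees with the expectation."""
    return [value for value, expected in cases.items()
            if is_valid(value) != expected]

# An empty list means that every case passed.
print(run_cases(equivalence_cases))
print(run_cases(boundary_cases))
```

If the comparison in `is_valid` were written as `1 < value <= 10`, the equivalence cases would still pass, while the boundary cases would report the value 1. This is why the two techniques are usually applied together.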

The Traceability Matrix is a two-dimensional table that maps the functional requirements of the product to the prepared test cases. The column headings are the requirements, and the row headings are the test cases. A mark at an intersection means that the requirement in that column is covered by the test case in that row.

QA engineers use the traceability matrix to validate the test coverage of the product. The matrix is an integral part of the test plan.
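A minimal sketch of such a matrix in Python might look as follows. The requirement and test case identifiers (`REQ-1`, `TC-1` and so on) are made up for illustration; the point is only to show how rows, columns and marks relate, and how uncovered requirements can be found.

```python
# Hypothetical requirement and test case identifiers, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
coverage = {
    "TC-1": {"REQ-1"},
    "TC-2": {"REQ-1", "REQ-2"},
}

def build_matrix(requirements, coverage):
    """Rows are test cases, columns are requirements; True marks coverage."""
    return {
        test_case: [req in covered for req in requirements]
        for test_case, covered in coverage.items()
    }

def uncovered(requirements, coverage):
    """Return the requirements that no test case covers."""
    covered = set().union(*coverage.values()) if coverage else set()
    return [req for req in requirements if req not in covered]

matrix = build_matrix(requirements, coverage)
print("      " + "  ".join(requirements))
for test_case, row in matrix.items():
    marks = "  ".join(("x" if mark else "-").center(5) for mark in row)
    print(test_case.ljust(6) + marks)
print("Not covered:", uncovered(requirements, coverage))
```

A column without any mark is a requirement that still needs a test case.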

A Test Case is an artifact that describes the set of steps, specific conditions and parameters necessary to verify the implementation of the function under test or a part of it.

Example:

Action | Expected Result | Test Result (passed / failed / blocked)
Open page "login" | Login page is opened |

Each test case should have 3 parts:

PreConditions - a list of actions that bring the system to a state suitable for the main check, or a list of conditions whose fulfillment indicates that the system is in such a state.

Test Case Description - a list of actions that move the system from one state to another and produce a result from which we can conclude whether the implementation satisfies the stated requirements.

PostConditions - a list of actions that return the system to its initial state (the state before the test).
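In automated tests these three parts often map onto the setup, the test body and the teardown. The sketch below uses Python's standard `unittest` module; the `Cart` class is a hypothetical system under test invented for the example.

```python
import unittest

class Cart:
    """Hypothetical system under test: a trivial shopping cart."""
    def __init__(self):
        self.items = []

    def add(self, item: str) -> None:
        self.items.append(item)

class AddItemTestCase(unittest.TestCase):
    def setUp(self):
        # PreConditions: bring the system into a state suitable for the check.
        self.cart = Cart()

    def test_add_item(self):
        # Test Case Description: perform the action and compare
        # the actual result with the expected one.
        self.cart.add("book")
        self.assertEqual(self.cart.items, ["book"])

    def tearDown(self):
        # PostConditions: return the system to its initial state.
        self.cart.items.clear()

suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddItemTestCase)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```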

Types of Test Cases:

Test cases are divided by the expected result into positive and negative:

• A positive test case uses only valid data and checks that the application correctly executes the called function.

• A negative test case operates with both valid and invalid data (at least one invalid parameter) and aims to check exceptional situations (that the validators are triggered); it also checks that the called function is not executed when a validator is triggered.
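As an illustration, here is a small Python sketch of one positive and several negative cases. The function `parse_age` and its limits are assumptions made up for the example, not part of any real application.

```python
def parse_age(text: str) -> int:
    """Hypothetical function under test: accepts ages 0..150 given as digits."""
    if not text.isdigit():
        raise ValueError("age must contain digits only")
    age = int(text)
    if age > 150:
        raise ValueError("age is out of range")
    return age

def positive_case() -> bool:
    # Valid data only: the function must execute and return the right value.
    return parse_age("42") == 42

def negative_case(bad_input: str) -> bool:
    # At least one invalid parameter: the validator must be triggered
    # and the function must not return a value.
    try:
        parse_age(bad_input)
    except ValueError:
        return True
    return False

print(positive_case())
print(all(negative_case(value) for value in ["abc", "-1", "", "999"]))
```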

A checklist is a document that describes what should be tested. Checklists can have very different levels of detail. How detailed a checklist is depends on the reporting requirements, on how well the staff know the product, and on the complexity of the product.

As a rule, a checklist contains only actions (steps), without expected results. A checklist is less formal than a test case. It is appropriate when test cases would be redundant. Checklists are also associated with agile approaches to testing.

A defect (also known as a bug) is a discrepancy between the actual result of running the program and the expected result. Defects are detected at the software testing stage, when the tester compares the results produced by the program (a component or the design) with the expected result described in the requirements specification.

Error - a user error, that is, the user tries to use the program in an unintended way.

Example: entering letters in a field where numbers are expected (age, quantity of goods, etc.).

A quality program anticipates such situations and displays an error message, for example with a red cross.

Bug (defect) - an error made by a programmer (or a designer, or anyone else who takes part in development), that is, something in the program does not go as planned and the program gets out of control. For example, user input is not validated at all, so incorrect data causes crashes or other "joys" in the program's work. Or the program is built internally in such a way that, from the start, it does not do what is expected of it.

Failure - a failure (not necessarily a hardware one) of a component, the entire program or the system. That is, some defects lead to failures (a defect caused the failure) and some do not, UI defects for example. But a hardware failure that has nothing to do with the software is also a failure.
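The difference between a defect and a failure can be shown with a tiny Python sketch. The `average` function is a made-up example: the defect is in the code all the time, but a failure is observed only for the input that triggers it.

```python
def average(values: list[float]) -> float:
    # Defect: the empty list is not handled, so the code divides by zero.
    return sum(values) / len(values)

# The defect is present on every run, but most inputs never trigger it.
print(average([2.0, 4.0]))

# A failure is the moment the defect becomes visible in the behavior.
try:
    average([])
    failed = False
except ZeroDivisionError:
    failed = True
print("failure observed:", failed)
```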

A Bug Report is a document describing the situation or sequence of actions that led to incorrect operation of the test object, with the causes and the expected result.

Header

Short Description - a brief description of the problem that clearly indicates the cause and type of the error situation.

Project - the name of the project under test.

Application Component - the name of the part or function of the product under test.

Version - the version in which the error was found.

Severity - the most common five-level system for grading the severity of a defect is:

• S1 Blocker

• S2 Critical

• S3 Major

• S4 Minor

• S5 Trivial

Priority - the priority of the defect:

• P1 High

• P2 Medium

• P3 Low

Status - the status of the bug. It depends on the procedure used and on the bug workflow and life cycle.

Author - the creator of the bug report.

Assigned To - the name of the person assigned to solve the problem.

Environment

OS / Service Pack, etc. / Browser + version / ... - information about the environment in which the bug was found: operating system, service pack, and for web testing the name and version of the browser, etc.

...

Description

Steps to Reproduce - the steps by which the situation that led to the error can be easily reproduced.

Actual Result - the result obtained after following the steps to reproduce.

Expected Result - the expected correct result.

Additions

Attachment - a file with logs, a screenshot or any other document that can help clarify the cause of the error or suggest a way to solve the problem.

Severity vs Priority

Severity is an attribute that characterizes the effect of a defect on the operation of the application.

Priority is an attribute that indicates the order in which a task should be performed or a defect fixed. It can be described as a work planning tool for the manager. The higher the priority, the sooner the defect needs to be fixed.

Severity is set by the tester.

Priority is set by the manager, team lead or customer.

Defect severity levels (Severity)

S1 Blocker

A blocking error that makes the application inoperable, so that further work with the system under test or its key functions becomes impossible. The problem must be solved for the system to function further.

S2 Critical

A critical error: key business logic works incorrectly, there is a security hole, or a problem temporarily crashes the server or makes some part of the system inoperable, with no way to work around it using other entry points. The problem must be solved for further work with the key functions of the system under test.

S3 Major

A significant error: part of the main business logic works incorrectly. The error is not critical, or the function under test can still be used through other entry points.

S4 Minor

A minor error that does not violate the business logic of the part of the application under test; an obvious user interface problem.

S5 Trivial

A trivial error that does not affect the business logic of the application: a poorly reproducible problem that is barely noticeable through the user interface, a problem in third-party libraries or services, or a problem that has no effect on the overall quality of the product.

Defect priority levels (Priority)

P1 High

The error must be fixed as soon as possible because its presence is critical for the project.

P2 Medium

The error must be fixed; its presence is not critical, but a fix is mandatory.

P3 Low

The error should be fixed; its presence is not critical and does not require an urgent fix.
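The fact that severity and priority are independent attributes can be illustrated with a short Python sketch. The `BugReport` class, the sample reports and the rule "sort by priority, then by severity" are assumptions made for the example; real bug trackers define their own fields and workflows.

```python
from dataclasses import dataclass
from enum import IntEnum

class Severity(IntEnum):
    BLOCKER = 1
    CRITICAL = 2
    MAJOR = 3
    MINOR = 4
    TRIVIAL = 5

class Priority(IntEnum):
    HIGH = 1
    MEDIUM = 2
    LOW = 3

@dataclass
class BugReport:
    summary: str
    severity: Severity   # set by the tester: impact on the application
    priority: Priority   # set by the manager, team lead or customer: fix order

# Hypothetical reports, for illustration only.
reports = [
    BugReport("Crash in a rarely used export dialog", Severity.CRITICAL, Priority.LOW),
    BugReport("Typo in the company name on the home page", Severity.TRIVIAL, Priority.HIGH),
    BugReport("Wrong total in the shopping cart", Severity.MAJOR, Priority.MEDIUM),
]

# Assumed scheduling rule: priority first, severity only breaks ties.
queue = sorted(reports, key=lambda r: (r.priority, r.severity))
for report in queue:
    print(report.priority.name, report.severity.name, report.summary)
```

In this sketch the trivial typo is fixed first and the critical crash last, which shows that a low severity does not imply a low priority, and vice versa.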

Testing Levels

1. Unit Testing

Component (unit) testing verifies functionality and looks for defects in parts of the application that are accessible and can be tested separately (program modules, objects, classes, functions, etc.).

2. Integration Testing

Checks the interaction between system components after component testing.

3. System Testing

The main task of system testing is to check both functional and non-functional requirements in the system as a whole. It reveals defects such as incorrect use of system resources, unforeseen combinations of user-level data, incompatibility with the environment, unforeseen usage scenarios, missing or incorrect functionality, inconvenience of use, etc.

4. Operational Testing (Release Testing).

Even if the system meets all requirements, it is important to make sure that it meets the user's needs and fulfills its role in its operating environment, as defined in the business model of the system. Note that the business model itself may contain errors. That is why operational testing is important as the final validation step. In addition, testing in the operating environment reveals non-functional problems, such as conflicts with other systems that are adjacent in the business domain or in the software and hardware environment, insufficient system performance in the operating environment, and so on. Finding such things at the deployment stage is obviously a critical and expensive problem. That is why it is so important to carry out not only verification but also validation from the very early stages of software development.

5. Acceptance Testing

A formal testing process that checks whether the system complies with the requirements and is conducted in order to:

• determine whether the system meets the acceptance criteria;

• let the customer or another authorized person decide whether or not to accept the application.

Types of testing

Functional types of testing

• Functional Testing

• Security Testing (Security and Access Control Testing)

• Interoperability Testing

Non-functional testing types

• All kinds of performance testing:

o load testing (Performance and Load Testing)

o stress testing (Stress Testing)

o stability or reliability testing (Stability / Reliability Testing)

o volume testing (Volume Testing)

• Installation testing

• Usability Testing

• Failover and Recovery Testing

• Configuration Testing

Change-related types of testing

• Smoke Testing

• Regression Testing

• Retesting

• Build Verification Test

• Sanity Testing

Functional testing examines predetermined behavior and is based on an analysis of the functional specifications of a component or of the system as a whole.

Security testing is a testing strategy used to check the security of a system and to analyze the risks involved in providing a holistic approach to protecting the application against hacker attacks, viruses, and unauthorized access to confidential data.

Interoperability Testing is functional testing that tests the ability of an application to interact with one or more components or systems and includes compatibility testing and integration testing.

Load testing is automated testing that simulates the work of a certain number of business users on a shared resource.

Stress testing checks how well the application and the system as a whole work under stress, and also evaluates the ability of the system to recover, i.e. to return to normal after the stress stops. Stress in this context may be an increase in the intensity of operations to very high values or an emergency change in the server configuration. One of the tasks of stress testing can also be to evaluate performance degradation, so the objectives of stress testing can overlap with those of performance testing.

Volume Testing. The task of volume testing is to assess performance as the amount of data in the database grows.

Stability / Reliability Testing. The task of stability (reliability) testing is to check that the application keeps working during long-running (many hours) testing under an average load.

Installation testing is aimed at verifying successful installation and configuration, as well as updating or uninstalling of the software.

Usability testing is a testing method aimed at establishing the degree of usability, learnability, comprehensibility and attractiveness for users of a developed product in the context of specified conditions. This also includes:

User Interface Testing (UI Testing) is a type of study performed to determine whether some artificial object (such as a web page, user interface, or device) is convenient for its intended use.

User eXperience (UX) is the feeling that a user experiences while using a digital product, while the User Interface is the tool that enables interaction between the user and a web resource.

Failover and Recovery Testing checks the product under test for its ability to withstand and successfully recover from possible failures caused by software errors, hardware failures or communication problems (for example, a network failure). The purpose of this type of testing is to check the recovery systems (or the systems that duplicate the main functionality) which, in case of failure, ensure the safety and integrity of the data of the product under test.

Configuration Testing - a special type of testing aimed at checking the operation of software with various system configurations (declared platforms, supported drivers, various computer configurations, etc.)

Smoke testing is a short cycle of tests performed to confirm that, after the code (new or revised) is built, the installed application starts and performs its basic functions.

Regression testing is a type of testing aimed at checking changes made to the application or its environment (fixing a defect, merging code, migrating to another operating system, database, web server or application server) to confirm that existing functionality still works as before. Regression tests can be either functional or non-functional.

Retesting is testing in which the test cases that detected errors during the last run are executed again to confirm that those errors have been fixed.

What is the difference between regression testing and re-testing?

Retesting verifies that the bugs have been fixed.

Regression testing verifies that fixing the bugs did not affect other software modules and did not introduce new bugs.

Build testing, or Build Verification Test, is testing aimed at determining whether the released version meets the quality criteria for starting testing. In its goals it is analogous to smoke testing: it is aimed at accepting a new version for further testing or operation. It can go deeper, depending on the quality requirements for the released version.

Sanity testing is narrowly targeted testing that is sufficient to show that a particular function works according to the requirements stated in the specification. It is a subset of regression testing. It is used to determine the health of a specific part of the application after changes made to it or to the environment. It is usually done manually.


Approaches to integration testing:

• Bottom Up Integration

All low-level modules, procedures or functions are assembled together and then tested. After that, the next level of modules is assembled for integration testing. This approach is considered useful if all or almost all modules of the level being developed are ready. It also helps to determine the readiness level of the application from the test results.

• Top Down Integration

First, all high-level modules are tested, and then low-level ones are gradually added one by one. All lower-level modules are simulated by stubs with similar functionality; as the real components become ready, they replace the stubs. In this way we test from top to bottom.

• Big Bang Integration

All or almost all developed modules are assembled into a complete system or its main part, and then integration testing is carried out. This approach saves a lot of time. However, if the test cases and their results are not recorded properly, the integration process itself becomes very complicated, which gets in the way of the testing team reaching the main goal of integration testing.
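The top-down approach with stubs can be sketched in Python as follows. All class names (`OrderService`, `PaymentGatewayStub`, `RealPaymentGateway`) are hypothetical and exist only to show how a stub stands in for a low-level module and is later replaced by the real component.

```python
class PaymentGatewayStub:
    """Stand-in for a low-level module that is not ready yet (hypothetical)."""
    def charge(self, amount: float) -> bool:
        return True  # canned answer instead of real behavior

class RealPaymentGateway:
    """The real low-level module, swapped in once it is ready (hypothetical)."""
    def __init__(self, balance: float):
        self.balance = balance

    def charge(self, amount: float) -> bool:
        if amount > self.balance:
            return False
        self.balance -= amount
        return True

class OrderService:
    """High-level module under test."""
    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount: float) -> str:
        return "confirmed" if self.gateway.charge(amount) else "rejected"

# Step 1: test the high-level module against the stub.
print(OrderService(PaymentGatewayStub()).place_order(50.0))

# Step 2: replace the stub with the real component and repeat the check.
service = OrderService(RealPaymentGateway(balance=30.0))
print(service.place_order(20.0))
print(service.place_order(20.0))
```

In the bottom-up approach the direction is reversed: the low-level module is tested first, and a simple driver plays the role of the higher-level caller.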

Principles of testing

Principle 1 - Testing shows the presence of defects

Testing can show that defects are present, but cannot prove that they are not. Testing reduces the likelihood of defects in the software, but even if no defects were detected, this does not prove its correctness.

Principle 2 - Exhaustive testing is impossible

Full testing using all combinations of inputs and preconditions is physically impossible, except in trivial cases. Instead of exhaustive testing, risk analysis and prioritization should be used to more accurately focus testing efforts.
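A rough calculation shows why. The sketch below counts the input combinations of a small hypothetical form; the field names, the number of values per field and the execution speed are all assumptions chosen for illustration.

```python
import math

# Hypothetical form: field names and value counts are made up for illustration.
field_value_counts = {
    "country": 200,
    "age": 120,
    "password (8 lowercase letters)": 26 ** 8,
}

# Every field value can be combined with every value of the other fields.
combinations = math.prod(field_value_counts.values())

tests_per_second = 1_000  # assumed execution speed
years = combinations / tests_per_second / (60 * 60 * 24 * 365)

print(f"{combinations:,} combinations")
print(f"about {years:,.0f} years at {tests_per_second} tests per second")
```

Even for three fields the number of combinations is far beyond what can be executed, which is why risk analysis and prioritization are used to choose a manageable subset.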

Principle 3 - Early testing

To find defects as early as possible, testing activities should be started as early as possible in the software or system development life cycle, and should be focused on specific goals.

Principle 4 - Defect clustering

Testing efforts should be concentrated in proportion to the expected and later real density of defects by module. As a rule, most of the defects found during testing or resulting in the majority of system failures are contained in a small number of modules.

Principle 5 - Pesticide paradox

If the same tests are run many times, eventually this set of test cases will no longer find new defects. To overcome this "pesticide paradox", test cases should be regularly reviewed and revised, and new, varied tests should be written to cover all components of the software or system and to find as many defects as possible.

Principle 6 - Testing is context dependent

Testing is done differently depending on the context. For example, safety-critical software is tested differently than an e-commerce site.

Principle 7 - Absence-of-errors fallacy

Detection and correction of defects will not help if the created system does not suit the user and does not satisfy his expectations and needs.

Static and dynamic testing

Static testing differs from dynamic testing in that it is performed without running the product code. Testing is carried out by analyzing the source code (code review) or the compiled code. The analysis can be done manually or with special tools. Its purpose is the early detection of errors and potential problems in the product. Static testing also includes testing of specifications and other documentation.
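As a small illustration of tool-based static analysis, the sketch below uses Python's standard `ast` module to inspect source code without executing it. The checked rule (a bare `except:` clause) and the sample source are assumptions chosen for the example; real static analyzers apply many more rules.

```python
import ast

# Hypothetical source code to inspect; it is parsed, never executed.
SOURCE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''

def find_bare_excepts(source: str) -> list[int]:
    """Return line numbers of `except:` clauses that catch everything."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]

print(find_bare_excepts(SOURCE))
```

The function `load` is never called, so the potential problem is found before the code is run at all.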

Exploratory / ad-hoc testing

The simplest definition of exploratory testing is designing and executing tests at the same time.


QA/QC/Test Engineer

Testing is part of QC. QC is part of QA.

Sources: www.protesting.ru, www.bugscatcher.net, www.qalight.com.ua, www.thinkingintests.wordpress.com, ISTQB, www.quizful.net, www.bugsclock.blogspot.com, www.zeelabs.com, www.devopswiki.net, www.hvorostovoz.blogspot.com.

Source: https://habr.com/ru/post/279535/
