How We Build Code at Netflix

How exactly does Netflix build code before it is deployed to the cloud? We have told parts of this story before, but now it is time to share more details. In this post, we describe the tools and methods that take us from source code to a deployed service that brings movies and TV shows to more than 75 million subscribers around the world.

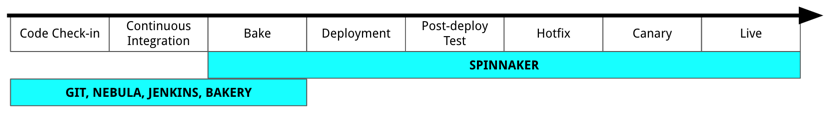

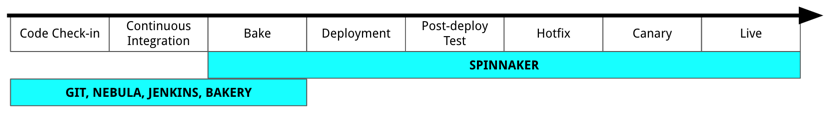

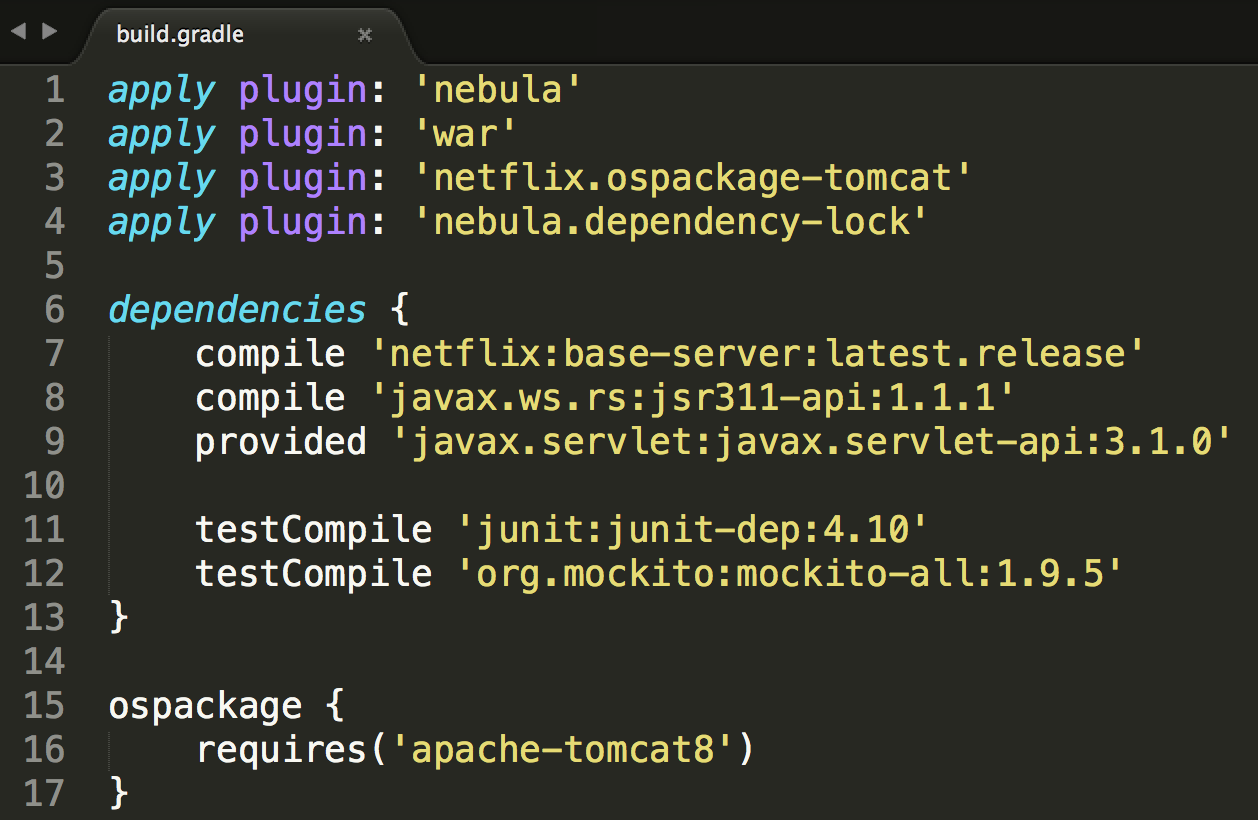

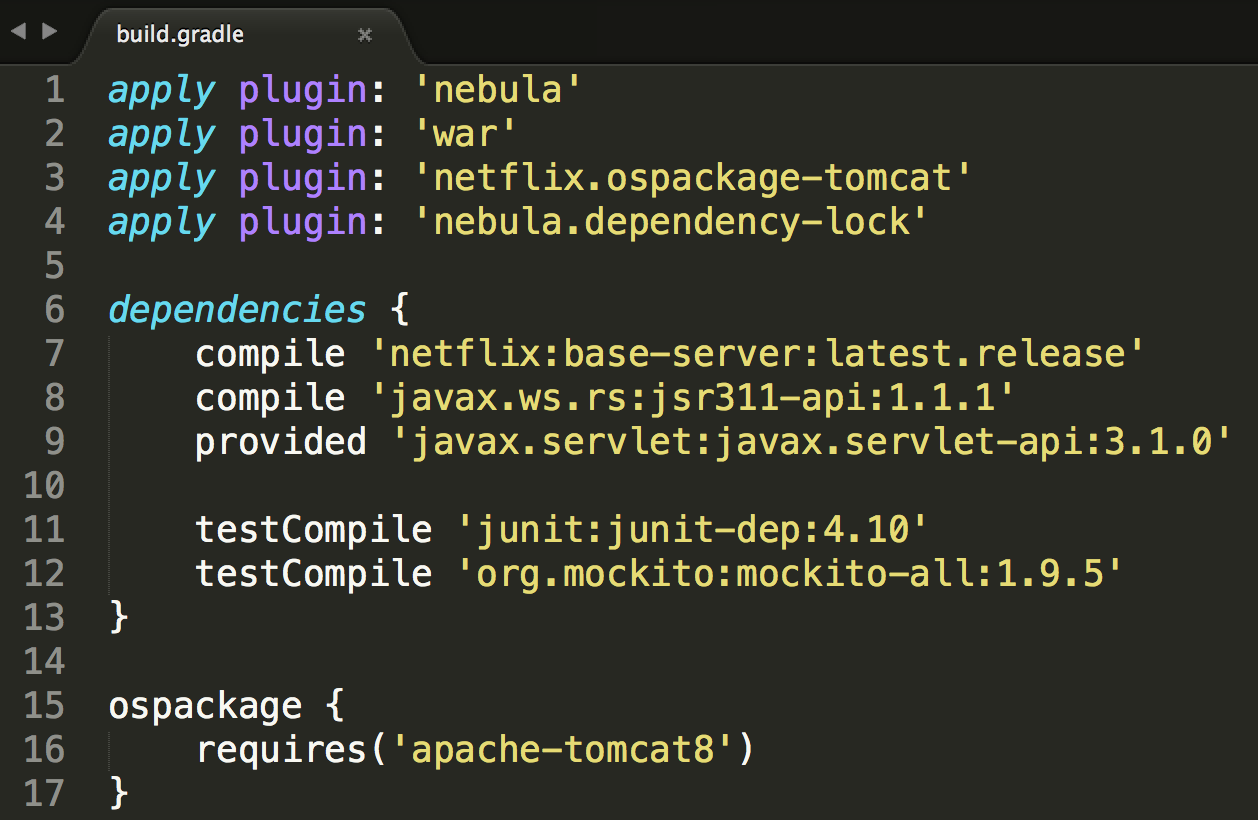

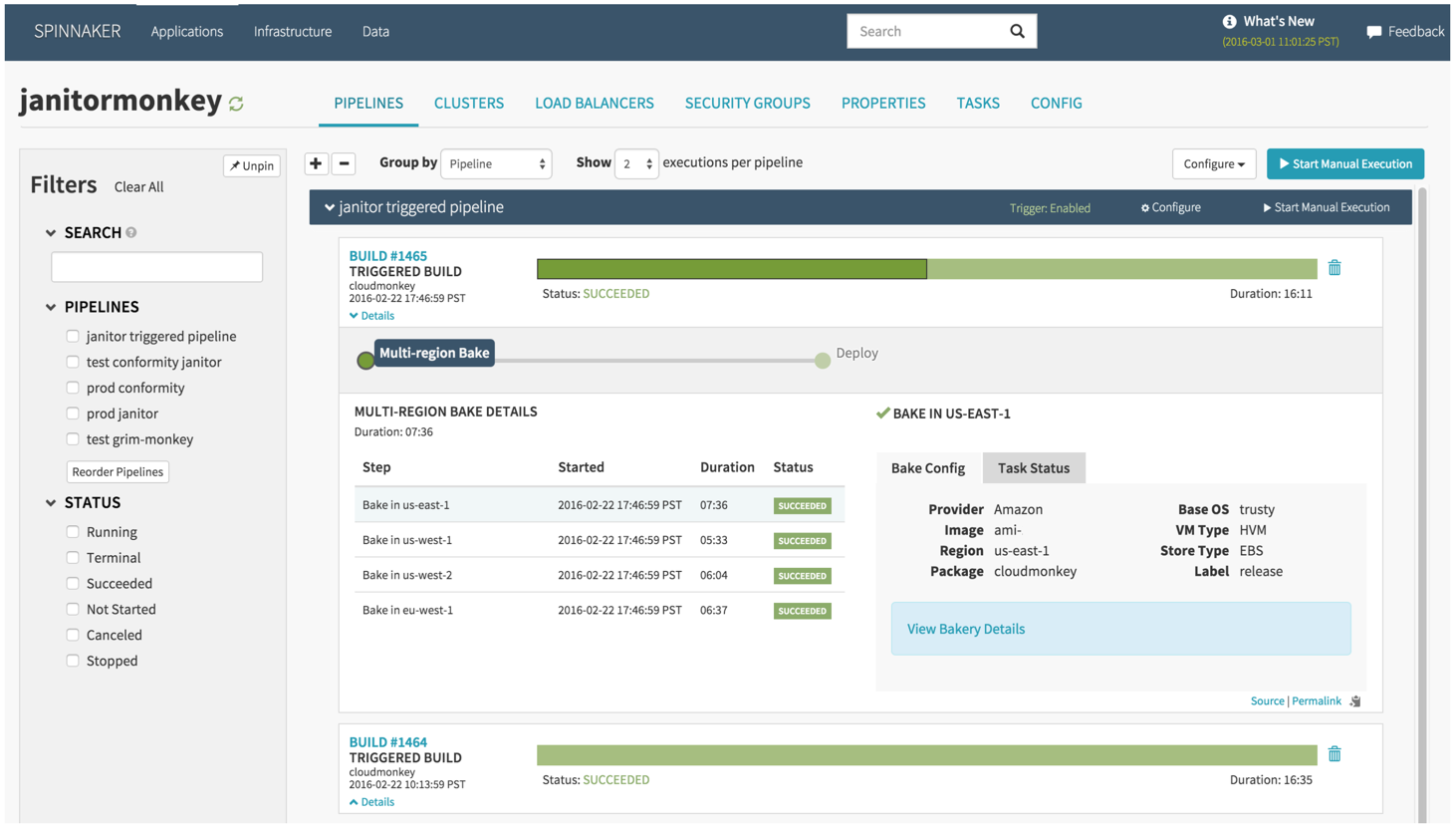

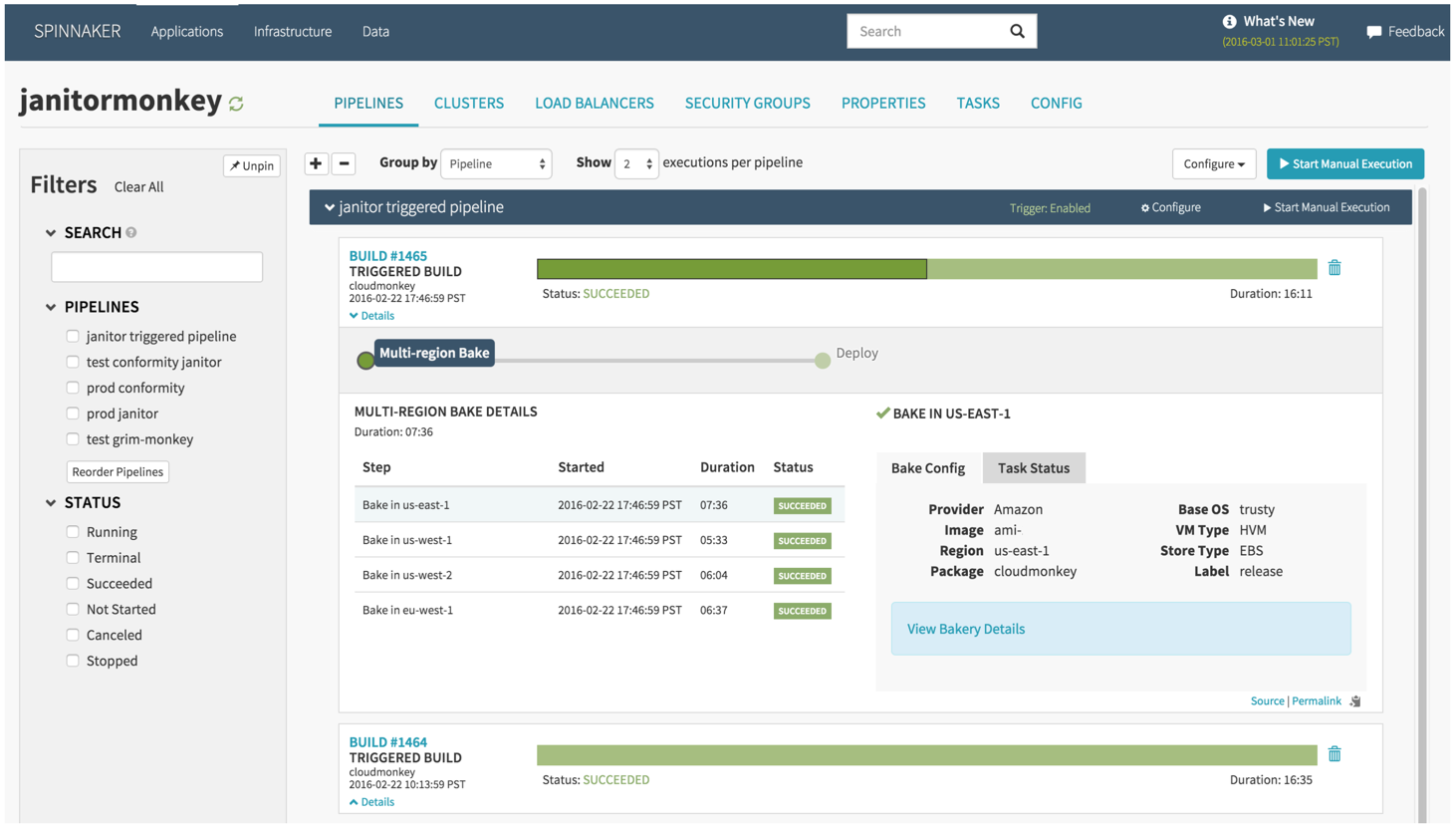

The first diagram above expands on a previous post that introduced Spinnaker, our global continuous delivery platform. But before a line of code gets into Spinnaker, it has to go through several stages:

- Code is built and tested locally with Nebula plugins;

- Changes are committed to the central git repository;

- A Jenkins job runs Nebula, which builds, tests, and packages the application for deployment;

- Builds are “baked” into an Amazon Machine Image;

- Spinnaker pipelines deploy and promote the changed code.
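
The stages above can be read as a strictly ordered pipeline. The following Python sketch is illustrative only: the stage names and the `next_stage` helper are invented here and are not part of any Netflix tool.

```python
from enum import IntEnum
from typing import Optional


class Stage(IntEnum):
    """Hypothetical labels for the five stages listed above, in order."""
    LOCAL_BUILD_AND_TEST = 1   # Nebula plugins on the developer's machine
    COMMIT_TO_GIT = 2          # change lands in the central git repository
    JENKINS_BUILD = 3          # Jenkins runs Nebula: build, test, package
    BAKE_AMI = 4               # package is baked into an Amazon Machine Image
    SPINNAKER_DEPLOY = 5       # Spinnaker deploys and promotes the change


def next_stage(current: Stage) -> Optional[Stage]:
    """Return the stage that follows `current`, or None after the last one."""
    if current is Stage.SPINNAKER_DEPLOY:
        return None
    return Stage(current + 1)


if __name__ == "__main__":
    stage: Optional[Stage] = Stage.LOCAL_BUILD_AND_TEST
    while stage is not None:
        print(stage.name)
        stage = next_stage(stage)
```

Modeling the stages as an ordered enumeration makes the point that a change cannot reach Spinnaker without passing through every earlier step.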

In the rest of this post, we describe the tools and methods used at each of these stages and talk about the challenges that we encountered along the way.

Organizational culture, cloud and microservices

Before describing how Netflix builds code, we need to identify the key factors that influence our decisions: our organizational culture, the cloud, and microservices.

Netflix culture empowers engineers to use whatever tools they consider best suited to the task at hand. In our experience, for a solution to be widely adopted, it must be compelling, useful, and reduce the cognitive load on most Netflix engineers. Teams are free to choose their own way of solving problems, but they pay for that freedom with the added responsibility of maintaining those solutions. Tools offered by Netflix central teams are considered part of the “paved road”. This post focuses solely on that paved road, which is supported by our specialists.

In addition, in 2008 Netflix began migrating to AWS and converting its monolithic, datacenter-based Java application into cloud-based Java microservices. The microservice architecture keeps Netflix teams loosely coupled, which lets each team build and push changes at a pace it is comfortable with.

Development

It will surprise no one that a service or application must be developed before it can be deployed. We created Nebula, a set of plugins for the Gradle build system, to cope with the main difficulties of development. Gradle is a first-class tool for building, testing, and packaging Java applications for deployment, and it covers the basic needs of our code. We chose it because it makes it easy to write testable plugins while keeping a project's build file small. Nebula extends Gradle's build automation with a suite of plugins for dependency management, release management, deployment, and more.

The build.gradle file of a simple Java application

The build.gradle file shown earlier is an example of the build for a simple Java application at Netflix. The build declares a few Java dependencies and applies four Gradle plugins, three of which are either part of Nebula or internal configurations applied to Nebula plugins. The 'nebula' plugin is a Gradle plugin that provides the conventions and settings necessary for integration with our infrastructure. The 'nebula.dependency-lock' plugin lets the project generate a .lock file of its resolved dependencies, which makes builds repeatable. The 'netflix.ospackage-tomcat' plugin and the ospackage block are discussed below.

With Nebula, we aim to provide reusable, consistent build functionality, with the goal of reducing boilerplate in each application's build file. In the next post we will discuss Nebula and its various features, the code for which is open source, in more detail. In the meantime, you can visit the Nebula website.

Integration

Once the code has been built and tested locally with Nebula, it is ready for integration and deployment. The first step is to push the updated source code to a git repository. Teams are free to find a git workflow that suits them.

Once the change is committed, a Jenkins job is triggered. Our use of Jenkins for continuous integration has evolved over the years. We started with a single massive Jenkins master in our data center and now run 25 Jenkins masters in AWS. At Netflix, Jenkins is used for a wide range of automation tasks beyond simple continuous integration.

The Jenkins job is configured to call Nebula to build, test, and package the application code. If the repository being built is a library, Nebula publishes the .jar to our repository. If the repository is an application, Nebula runs the ospackage plugin. The ospackage plugin (short for “operating system package”) bundles the application's build artifact into a Debian or RPM package, whose contents are defined with a simple Gradle-based DSL. Nebula then publishes the Debian file to the package repository, where it is available for the next stage: baking.
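
The branching described above (library versus application) can be sketched as follows. This is a simplified illustration, not Nebula's implementation: the `Repository` type, the `kind` labels, and the wording of the returned steps are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Repository:
    name: str
    kind: str  # "library" or "application" (labels assumed for this sketch)


def publish_plan(repo: Repository, package_format: str = "deb") -> List[str]:
    """Describe, in order, what the build publishes for this repository."""
    if repo.kind == "library":
        # Libraries are published as a .jar.
        return [f"publish {repo.name}.jar to the repository"]
    if repo.kind == "application":
        # Applications are bundled as an OS package (Debian or RPM).
        if package_format not in ("deb", "rpm"):
            raise ValueError(f"unsupported package format: {package_format}")
        return [
            f"run ospackage to bundle {repo.name} as a .{package_format} package",
            f"publish {repo.name}.{package_format} to the package repository",
        ]
    raise ValueError(f"unknown repository kind: {repo.kind}")


if __name__ == "__main__":
    for step in publish_plan(Repository("example-service", "application")):
        print(step)
```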

Baking

Translator's note: “baking” and “bakery” are an analogy with baking in real life: a recipe, consistency, and adherence to the required conditions. The author of the original is fond of the term and uses it often below. Unfortunately, I could not find a Russian equivalent that carries the same meaning.

Our deployment strategy is centered on the Immutable Server pattern. To reduce the likelihood of configuration drift and to ensure that deployments can be repeated from source, modifying running instances by hand is strongly discouraged. Every deployment at Netflix begins with the creation of a new AMI (Amazon Machine Image). To create AMIs directly from source, we built the “Bakery”.

The Bakery exposes an API that makes it easy to create AMIs. The Bakery API service then schedules the actual bake jobs on worker nodes, which use Aminator to create the images. To trigger a bake, the user declares the package to install and the base image onto which the package will be installed. That base AMI provides a Linux environment configured with the common conventions, services, and tools required to integrate fully with the rest of the Netflix ecosystem.

When a Jenkins job completes successfully, it usually triggers a Spinnaker pipeline. Spinnaker pipelines can be triggered by a Jenkins job or by a git commit. Spinnaker reads the operating system package generated by Nebula and calls the Bakery API to start a bake.
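
A bake is described above as taking two inputs: a package and a base image. The sketch below models only that idea. The `BakeRequest` type, its field names, and the sample values are hypothetical and do not reflect the real Bakery API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BakeRequest:
    """Hypothetical shape of a bake request: one package, one base image."""
    package: str    # OS package to install, e.g. the .deb published by the build
    base_ami: str   # identifier of the base AMI the package is installed onto

    def validate(self) -> None:
        """Reject requests that are missing either of the two inputs."""
        if not self.package:
            raise ValueError("a bake needs a package to install")
        if not self.base_ami:
            raise ValueError("a bake needs a base image")


def describe(request: BakeRequest) -> str:
    """Summarize what a worker node would do for this request."""
    request.validate()
    return f"install {request.package} onto {request.base_ami} and create a new AMI"


if __name__ == "__main__":
    # Both values below are made-up placeholders.
    print(describe(BakeRequest(package="example-service.deb", base_ami="base-ami-placeholder")))
```

Keeping the request down to these two inputs reflects the immutable-server idea: everything else about the machine comes from the base image, not from ad hoc changes.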

Deployment

Once a bake is complete, Spinnaker can deploy the resulting AMI to tens, hundreds, or even thousands of instances. The same AMI can be used in several environments, because Spinnaker exposes the runtime environment to each instance, which allows applications to configure themselves at runtime. A successful bake triggers the next stage of the Spinnaker pipeline: deployment to a test environment.

Here, teams exercise the deployment with a whole battery of automated integration tests. From this point on, the details of the pipeline become specific to each application and are tuned by the team that owns it. Teams use Spinnaker to manage multi-region deployments, red/black deployments, and more. Suffice it to say that Spinnaker pipelines give teams flexible tools to control how their code is deployed.
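
One way an application might configure itself from a runtime context, so that a single image works in several environments, is sketched below. The variable name `APP_ENVIRONMENT` and the settings table are invented for this example. The source does not say how Spinnaker actually passes this information to an instance.

```python
import os
from typing import Dict, Mapping

# Hypothetical per-environment settings; real applications define their own.
SETTINGS: Dict[str, Dict[str, str]] = {
    "test": {"log_level": "DEBUG", "database": "test-db"},
    "prod": {"log_level": "INFO", "database": "prod-db"},
}


def configure(context: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Pick settings from the environment name found in the runtime context.

    APP_ENVIRONMENT is an invented variable name used only in this sketch.
    """
    environment = context.get("APP_ENVIRONMENT", "test")
    if environment not in SETTINGS:
        raise KeyError(f"no settings for environment: {environment}")
    return SETTINGS[environment]


if __name__ == "__main__":
    # The same code (and so the same image) yields different settings per context.
    print(configure({"APP_ENVIRONMENT": "test"}))
    print(configure({"APP_ENVIRONMENT": "prod"}))
```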

The way ahead

Taken together, these tools provide a high degree of efficiency and automation. For example, it takes just 16 minutes for Janitor Monkey, our cloud resiliency and maintenance service, to go from code check-in to a multi-region deployment.

Figure: a Spinnaker bake-and-deploy pipeline triggered by Jenkins.

That said, we are always looking for ways to improve the developer experience, and we constantly challenge ourselves to do it better, faster, and more simply.

We are often asked how we manage binary dependencies at Netflix. Nebula provides tools that make Java dependency management easier. For example, the dependency-lock plugin helps applications resolve their complete binary dependency graph and produces a .lock file that can be versioned. The resolution rules plugin for Nebula lets us publish dependency rules for the whole organization that affect every Nebula build. These tools make managing binary dependencies easier, but they still do not reduce the difficulty to an acceptable level.

We are also working on reducing bake time. Not so long ago, 16 minutes from commit to deployment seemed like a dream, but as other parts of the system have become faster, it has turned into a potential obstacle. Take the Simian Army deployment as an example: the bake took 7 minutes, which is 44% of the total bake and deployment time. We found that the biggest contributors to bake time were package installation (including dependency resolution) and the AWS snapshot process itself.

As Netflix grows and evolves, demand is increasing for our build and deployment toolkit to provide first-class support for non-JVM languages such as JavaScript/Node.js, Python, Ruby, and Go. For these languages, we currently recommend using the Nebula ospackage plugin to produce a Debian package for baking, leaving build and test to the engineers and to the tools of the platform in question. This solution works for us today, but we are expanding our toolkit to reduce its dependence on specific languages.

Containers are an interesting potential solution to the last two challenges. We are now exploring how containers can help improve our build, bake, and deployment processes. If we can create a local container environment that closely mimics our cloud environments, we could reduce the amount of baking required during development and test cycles, increasing developer productivity and speeding up product development as a whole. A container that can be deployed locally without modification reduces cognitive load and lets our engineers focus on solving problems and innovating instead of checking whether a bug is caused by differences between environments.
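
To make the idea of a lock file concrete, here is a deliberately simplified sketch of dependency locking: dynamic version declarations are resolved once to concrete versions, and the result is written out so later builds can reuse it. It is not the Nebula plugin's algorithm or file format. The library names, version lists, and JSON layout are assumptions for illustration, and only a trailing-`+` dynamic version is handled.

```python
import json
from typing import Dict, List, Tuple


def parse(version: str) -> Tuple[int, ...]:
    """Turn '1.10.2' into (1, 10, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def resolve(declared: Dict[str, str], available: Dict[str, List[str]]) -> Dict[str, str]:
    """Resolve each declared version to one concrete version.

    A declaration is either an exact version ("2.4.1") or a prefix with a
    trailing '+' ("2.+"), which selects the highest available match.
    """
    locked: Dict[str, str] = {}
    for name, wanted in declared.items():
        if wanted.endswith("+"):
            prefix = wanted[:-1]
            matches = [v for v in available[name] if v.startswith(prefix)]
            if not matches:
                raise LookupError(f"no version of {name} matches {wanted}")
            locked[name] = max(matches, key=parse)
        else:
            locked[name] = wanted
    return locked


def to_lock_text(locked: Dict[str, str]) -> str:
    """Serialize the locked versions deterministically (layout is made up)."""
    return json.dumps(locked, indent=2, sort_keys=True)


if __name__ == "__main__":
    declared = {"libA": "2.+", "libB": "1.7.5"}                     # hypothetical
    available = {"libA": ["2.9.0", "2.10.1", "3.0.0"], "libB": ["1.7.5"]}
    print(to_lock_text(resolve(declared, available)))
```

Committing the generated text alongside the source is what makes a later build repeatable: it reads the pinned versions instead of resolving `2.+` again.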
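
The 44% figure follows directly from the two durations quoted in the post (7 minutes of baking out of 16 minutes in total). The helper name below is ours, introduced only for this check.

```python
def share_percent(part_minutes: float, total_minutes: float) -> float:
    """Return `part_minutes` as a percentage of `total_minutes`."""
    if total_minutes <= 0:
        raise ValueError("total time must be positive")
    return 100.0 * part_minutes / total_minutes


if __name__ == "__main__":
    bake_minutes = 7     # bake time quoted in the post
    total_minutes = 16   # total bake and deployment time quoted in the post
    exact = share_percent(bake_minutes, total_minutes)
    print(f"{exact}% of the total, about {round(exact)}%")
```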

Source: https://habr.com/ru/post/279295/
