Nutanix Big Update: NutanixOS 4.6

While some "market leaders" are suddenly "inventing hyperconvergence," Nutanix has been selling it successfully for five years. A couple of weeks ago Nutanix rolled out a large and important update that could easily have passed for a major release, but since an even bigger and more important update lies ahead, we stayed modest and called this one version 4.6.

First of all, we have to mention a sharp performance increase, several-fold in places. No, nobody "removed a forgotten debug sleep 10 from the code", so no mud-slinging please ;) The developers named the following reasons for such an impressive gain:

- Fewer dynamic memory allocations

- Reduced locking overhead

- Reduced context-switching overhead

- Use of new C++11 language features and compiler optimizations

- More granular checksum calculation

- Faster I/O categorization for further optimization

- Improved metadata caching

- Improved write caching algorithms.


So as not to make unfounded claims, here are the results. This is our test system, an NX-3460-G4 in a top configuration with four nodes: 2x E5-2680v3, 512 GB DDR4 RAM, 2x 1.2 TB SSD, and 4x 2 TB SATA per node. The figures below are totals for the whole four-node cluster; to get per-node IOPS, divide the results by four.

The output below comes from our built-in test. On each node it deploys a CentOS VM with 1 vCPU, 1 GB RAM, and 7 vDisks; fio then drives six of those disks with sequential reads and writes in large blocks (1 MB) and random reads and writes in small blocks (4K).
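To make the four workloads more concrete, here is a minimal sketch of how comparable fio runs could be assembled. Only the access patterns, the block sizes, and the test names (taken from the log output) come from this post; the queue depth, runtime, I/O engine, and the `/dev/sdb` device path are assumptions, and the built-in test may well use different settings.

```python
# Hypothetical reconstruction of the four workloads. Only the access patterns
# and block sizes (1 MB sequential, 4K random) come from the article; every
# other parameter below is an assumption for illustration.
WORKLOADS = {
    "fio_seq_write": ("write", "1M"),
    "fio_seq_read": ("read", "1M"),
    "fio_rand_read": ("randread", "4k"),
    "fio_rand_write": ("randwrite", "4k"),
}


def fio_command(name, device, iodepth=32, runtime_s=60):
    """Build an fio argument list for one of the named workloads."""
    rw, block_size = WORKLOADS[name]
    return [
        "fio",
        f"--name={name}",
        f"--filename={device}",      # assumed target disk inside the test VM
        f"--rw={rw}",
        f"--bs={block_size}",
        "--ioengine=libaio",         # assumed Linux async I/O engine
        "--direct=1",                # bypass the guest page cache
        f"--iodepth={iodepth}",      # assumed queue depth
        f"--runtime={runtime_s}",    # assumed duration in seconds
        "--time_based",
    ]


if __name__ == "__main__":
    for workload in WORKLOADS:
        print(" ".join(fio_command(workload, "/dev/sdb")))
```

Running the script only prints the command lines; nothing is executed against a disk, so it is safe to adapt the parameters first.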

Results:

Update results from 4.5.1.2 to 4.6

Before the update (AHV NOS 4.5.1.2):

Waiting for the hot cache to flush ............ done.

2016-02-24_05-36-02: Running test "Sequential write bandwidth" ...

1653 MBps, latency (msec): min = 9, max = 1667, median = 268

Average CPU: 10.1.16.212: 36% 10.1.16.209: 48% 10.1.16.211: 38% 10.1.16.210: 39%

Duration fio_seq_write: 33 secs

************************************************** ******************************

Waiting for the hot cache to flush ....... done.

2016-02-24_05-37-15: Running test "Sequential read bandwidth" ...

3954 MBps, latency (msec): min = 0, max = 474, median = 115

Average CPU: 10.1.16.212: 35% 10.1.16.209: 39% 10.1.16.211: 30% 10.1.16.210: 31%

Duration fio_seq_read: 15 secs

************************************************** ******************************

Waiting for the hot flush ....... done.

2016-02-24_05-38-22: Running test "Random read IOPS" ...

115703 IOPS , latency (msec): min = 0, max = 456, median = 4

Average CPU: 10.1.16.212: 73% 10.1.16.209: 75% 10.1.16.211: 74% 10.1.16.210: 73%

Duration fio_rand_read: 102 secs

************************************************** ******************************

Waiting for the hot cache to flush ....... done.

2016-02-24_05-40-44: Running test "Random write IOPS" ...

113106 IOPS , latency (msec): min = 0, max = 3, median = 2

Average CPU: 10.1.16.212: 64% 10.1.16.209: 65% 10.1.16.211: 65% 10.1.16.210: 63%

Duration fio_rand_write: 102 secs

************************************************** ******************************

After the update (AHV NOS 4.6):

Waiting for the hot cache to flush ............ done.

2016-03-11_03-50-03: Running test "Sequential write bandwidth" ...

1634 MBps, latency (msec): min = 11, max = 1270, median = 281

Average CPU: 10.1.16.212: 39% 10.1.16.209: 46% 10.1.16.211: 42% 10.1.16.210: 47%

Duration fio_seq_write: 33 secs

************************************************** ******************************

Waiting for the hot flush ....... done.

2016-03-11_03-51-13: Running test "Sequential read bandwidth" ...

3754 MBps, latency (msec): min = 0, max = 496, median = 124

Average CPU: 10.1.16.212: 22% 10.1.16.209: 37% 10.1.16.211: 23% 10.1.16.210: 28%

Duration fio_seq_read: 15 secs

************************************************** ******************************

Waiting for the hot cache to flush ............ done.

2016-03-11_03-52-24: Running test "Random read IOPS" ...

218362 IOPS , latency (msec): min = 0, max = 34, median = 2

Average CPU: 10.1.16.212: 80% 10.1.16.209: 91% 10.1.16.211: 80% 10.1.16.210: 82%

Duration fio_rand_read: 102 secs

************************************************** ******************************

Waiting for the hot flush ....... done.

2016-03-11_03-54-43: Running test "Random write IOPS" ...

156843 IOPS , latency (msec): min = 0, max = 303, median = 2

Average CPU: 10.1.16.212: 69% 10.1.16.209: 72% 10.1.16.211: 64% 10.1.16.210: 74%

Duration fio_rand_write: 102 secs

************************************************** ******************************
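The two runs can be compared with a few lines of arithmetic. The sketch below takes the cluster-wide figures from the logs above, computes the relative change, and divides by four to get per-node values. By this calculation random read IOPS grow by roughly 89% and random write IOPS by roughly 39%, while sequential throughput stays within a few percent of the earlier run.

```python
NODES = 4

# (before on NOS 4.5.1.2, after on NOS 4.6), cluster-wide totals from the logs
RESULTS = {
    "Sequential write, MBps": (1653, 1634),
    "Sequential read, MBps": (3954, 3754),
    "Random read, IOPS": (115703, 218362),
    "Random write, IOPS": (113106, 156843),
}


def percent_change(before, after):
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


def per_node(total, nodes=NODES):
    """Cluster-wide total divided evenly across the nodes."""
    return total / nodes


if __name__ == "__main__":
    for test, (before, after) in RESULTS.items():
        print(
            f"{test}: {before} -> {after} "
            f"({percent_change(before, after):+.1f}%), "
            f"{per_node(after):.0f} per node after the update"
        )
```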

And for all-flash configurations, the gain is even more significant.

And you get this gain purely from our programmers optimizing and refactoring the code, on the system you already own, without any hardware upgrade. For free. Through an automatic update from the GUI that takes about half an hour. Nutanix is like a Tesla: you flash new firmware, and it drives faster and gains an autopilot. :)

In my opinion, such cases are the best illustration of the power of software-defined solutions.

What else is new, as a simple list (each of these features probably deserves a separate, more detailed post):

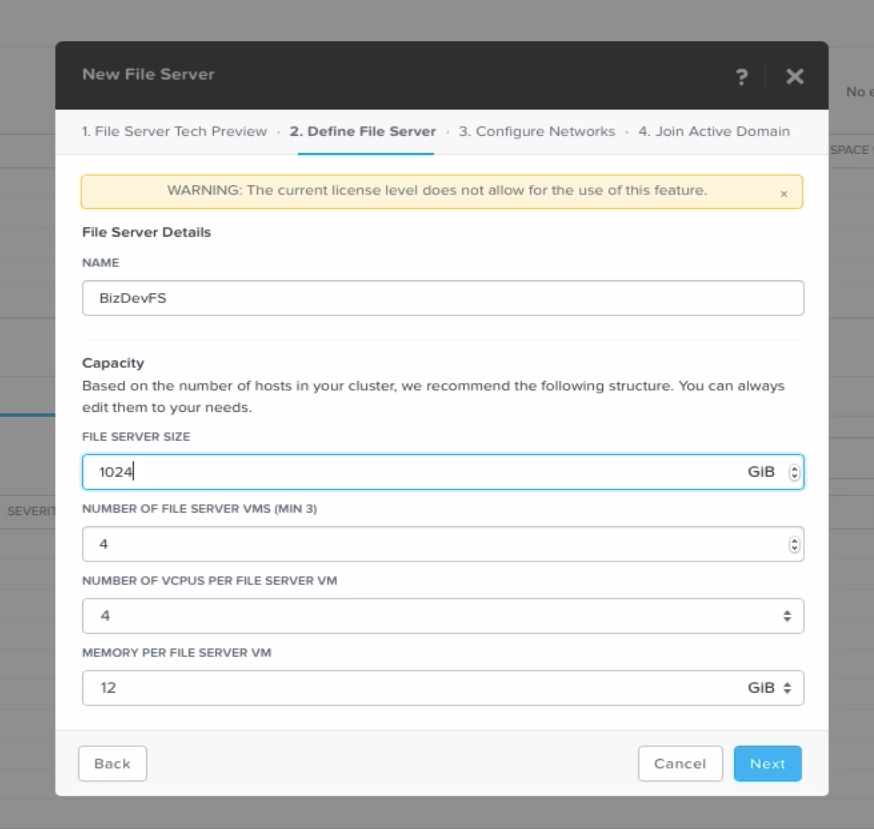

A built-in, distributed, scale-out, single-namespace SMB 2.1 file service, known internally as Project Minerva. With it, Nutanix can act as a NAS for external consumers, serving files "outward". Many customers asked for this, because there are still areas where file storage for external users is needed; the simplest example is storing user profiles for VDI workstations. Now this can be done entirely within Nutanix.

Project Minerva is a separate VM (NVM, "NAS VM") with a NAS service inside. If you do not need it, you do not install it, and it takes up no disk space or memory; if you do need it, you deploy and install it from within Nutanix. Currently SMB 2.1 is implemented, and NFS will probably be added later. One of the SAMBA developers is working on it; however, Minerva is not SAMBA but a file service written from scratch so that it can be distributed across an unlimited number of cluster nodes while maintaining a single namespace.

I will cover Project Minerva and its technical details in a separate article when it reaches release, but you can already try it now: it is published with Technology Preview status.

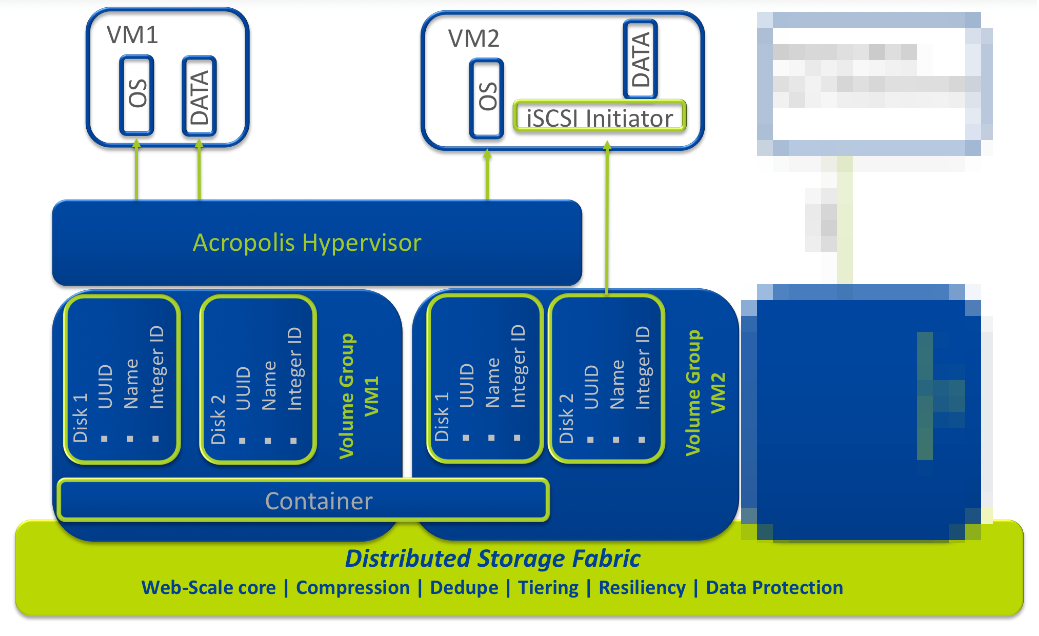

Volume Groups, which appeared in 4.5 and were configured there through the CLI, are now managed from the Prism GUI. Volume Groups are iSCSI block-access volumes that can be presented to a VM when a VM running inside Nutanix needs a block-access partition. Examples of such applications are Microsoft Exchange on ESXi, Windows 2008 Guest Clustering, Microsoft SQL 2008 Clustering, and Oracle RAC.

Some not-yet-released features are blurred in the screenshot.

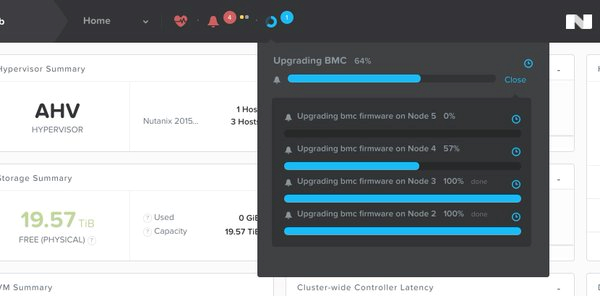

1-Click Upgrade now also covers the platform's BMC and BIOS. You can update the BIOS of the server platform with our regular update mechanism, without stopping work. Fully automatically, the host migrates its VMs to its neighbors, enters Maintenance Mode, updates the BIOS, reboots with the new version, and takes its VMs back; the same is then done in turn for the remaining cluster hosts, without administrator intervention.
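The host-by-host sequence described above can be modeled as a small in-memory simulation. This is only a sketch of the ordering of steps, not Nutanix code: the cluster dictionary, the round-robin placement, and the function name `rolling_bios_upgrade` are all hypothetical.

```python
def rolling_bios_upgrade(cluster, new_version):
    """Simulate a one-host-at-a-time BIOS upgrade.

    cluster maps a host name to {"bios": str, "vms": list of VM names} and is
    modified in place. All names here are hypothetical illustration only.
    """
    log = []
    for host in list(cluster):
        neighbors = [h for h in cluster if h != host]
        if not neighbors:
            raise ValueError("need at least two hosts to evacuate VMs")

        # 1. Migrate the host's VMs to its neighbors (round-robin placement
        #    is an assumption), then treat the host as in maintenance mode.
        own_vms = list(cluster[host]["vms"])
        placement = {}
        for i, vm in enumerate(own_vms):
            target = neighbors[i % len(neighbors)]
            cluster[target]["vms"].append(vm)
            placement[vm] = target
        cluster[host]["vms"] = []
        log.append(f"{host}: evacuated {len(own_vms)} VMs, maintenance mode")

        # 2. Update the BIOS and reboot with the new version.
        cluster[host]["bios"] = new_version
        log.append(f"{host}: BIOS updated to {new_version}, rebooted")

        # 3. Take the host's own VMs back before moving to the next host.
        for vm, target in placement.items():
            cluster[target]["vms"].remove(vm)
            cluster[host]["vms"].append(vm)
        log.append(f"{host}: {len(own_vms)} VMs returned")
    return log


if __name__ == "__main__":
    demo = {
        "host-a": {"bios": "1.0", "vms": ["vm1", "vm2"]},
        "host-b": {"bios": "1.0", "vms": ["vm3"]},
        "host-c": {"bios": "1.0", "vms": []},
    }
    for line in rolling_bios_upgrade(demo, "2.0"):
        print(line)
```

The point of the model is that only one host is ever out of service at a time, so the VMs always have somewhere to run.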

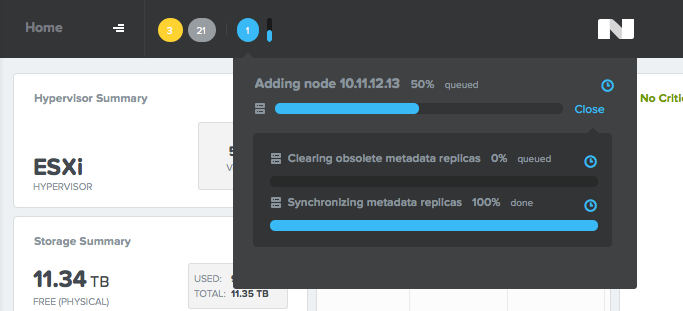

Foundation, our tool for installing the hypervisor and CVM, has also been updated and is now built into Nutanix itself. The user can now add a new node to the cluster through Foundation without involving Nutanix engineers. A server node arrives from the factory with Acropolis Hypervisor preinstalled and Foundation built in. The user runs a small Java applet from the administrator's computer; it automatically discovers a server node that is not yet part of a cluster (each Nutanix node has a unique IPv6 link-local address generated from its serial number), lets you reimage it with another hypervisor, such as ESXi, and adds the node to your cluster. Convenient and fast.

Metro Availability has gained the long-awaited ability to avoid restarting VMs after they migrate to the DR site. Previously a restart was required because NFS handles had to be switched to the new site and its storage (the data itself was replicated synchronously); now VMs work through an NFS proxy and can migrate live while continuing to run.

Another long-awaited feature is Self-Service Restore. A VM user can now recover data stored in the VM's snapshots on their own. To do this, Nutanix Guest Tools is installed in the VM; through it the VM communicates with Nutanix, which lets you mount a snapshot as a separate disk inside the VM and extract the data from it by simple copying. Users who frequently roll back to snapshots, as is common on Test/Dev systems, can do this themselves without pestering an admin, and without restoring the entire VM, only the individual files they need.

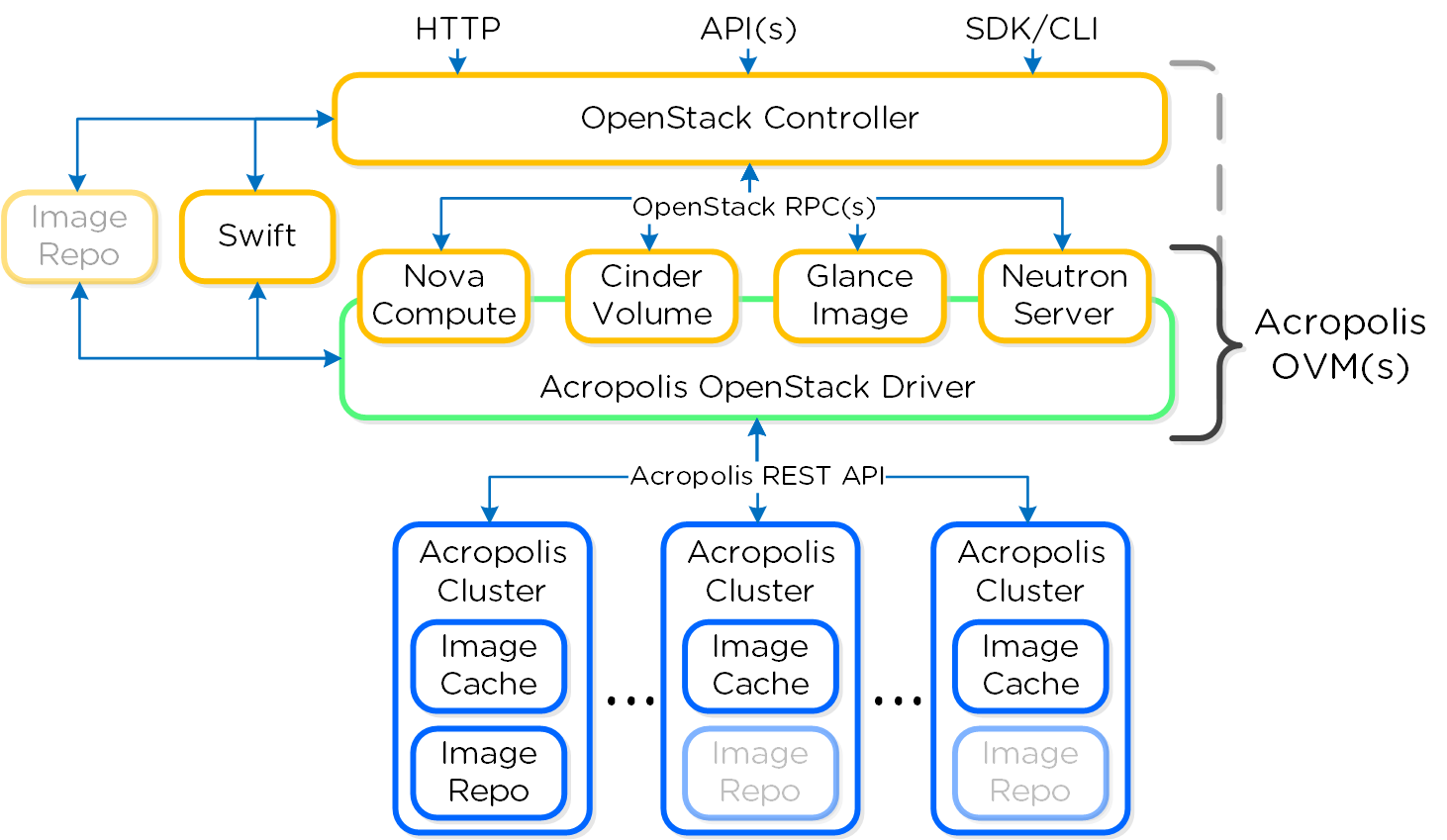

Integration with OpenStack has arrived, with new drivers for Nova, Cinder, Glance, and Neutron. The integration works through a special Server VM (SVM), an image of which is available for download and installation on Nutanix. From the OpenStack side, Nutanix thus appears as a hypervisor. I will say more about the OpenStack integration later.

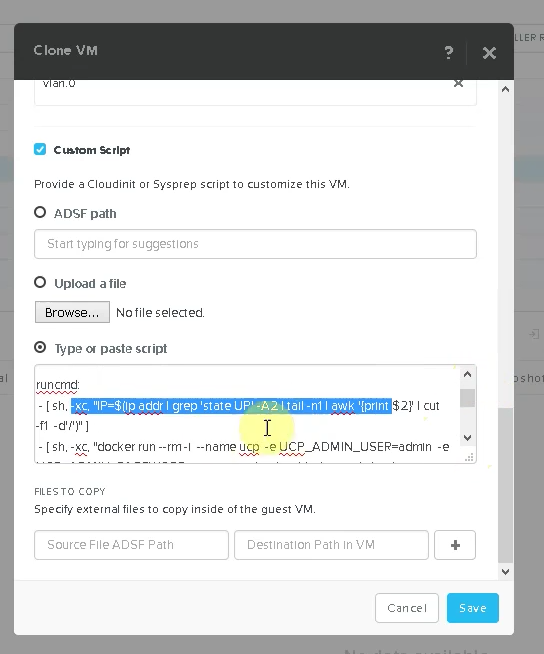

Support for cloud-init and sysprep scripts has been added, something customers had long asked for. You can now specify a post-configuration script for a created or cloned machine and get a ready-to-use VM with individual settings as the result. Thousands of them. :)
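As an illustration of what such a post-configuration script might look like for Linux guests, the sketch below renders a minimal cloud-init `#cloud-config` document per clone. The `hostname`, `packages`, and `runcmd` keys are standard cloud-init; the helper `render_user_data`, the host names, and the nginx example are assumptions, and how the text is handed to Nutanix when creating or cloning a VM is not covered here.

```python
import json


def render_user_data(hostname, packages=(), run_commands=()):
    """Return a minimal cloud-init '#cloud-config' document as text.

    Hypothetical helper for illustration. json.dumps produces double-quoted
    strings, which are also valid YAML scalars, so no YAML library is needed.
    """
    lines = ["#cloud-config", f"hostname: {json.dumps(hostname)}"]
    if packages:
        lines.append("packages:")
        lines.extend(f"  - {json.dumps(pkg)}" for pkg in packages)
    if run_commands:
        lines.append("runcmd:")
        lines.extend(f"  - {json.dumps(cmd)}" for cmd in run_commands)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # One individual configuration per cloned VM (names are made up).
    for index in range(1, 4):
        print(render_user_data(
            f"web-{index:02d}",
            packages=["nginx"],
            run_commands=["systemctl enable --now nginx"],
        ))
```

Generating the document from a template like this is one way to give each of many clones its own host name while keeping the rest of the configuration identical.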

Replication between clusters now works across different hypervisors. For example, a production cluster running ESXi can replicate its data to a cluster in a backup data center running Acropolis Hypervisor. You like ESXi but do not like paying per-socket licenses for DR hosts that are used a couple of times a year? Fine, use our AHV there.

Project Dial, a 1-click in-place hypervisor conversion that migrates an entire cluster from ESXi to AHV, has been published with Tech Preview status. The cluster's hypervisors, its CVMs, and the user VMs are all converted automatically. Of course, all of this happens without stopping the system (though the VMs will restart). A GA release is planned for upcoming NOS versions. But this certainly deserves a separate, longer story.

So if you already have Nutanix, update; if you do not yet, be envious :)

For experimenting with Nutanix CE - the update for CE

Source: https://habr.com/ru/post/279127/
