Containers: The quest for the "magic framework" and why it became Kubernetes

We at Latera build billing software for telecom operators. On our Habr blog we not only talk about the features of our system and the details of its development (for example, ensuring fault tolerance), but also publish material about working with infrastructure in general. An engineer from the Haleby.se project wrote a blog post about the reasons for choosing Kubernetes as the orchestration tool for Docker containers. Here are the main points of that article.

Background

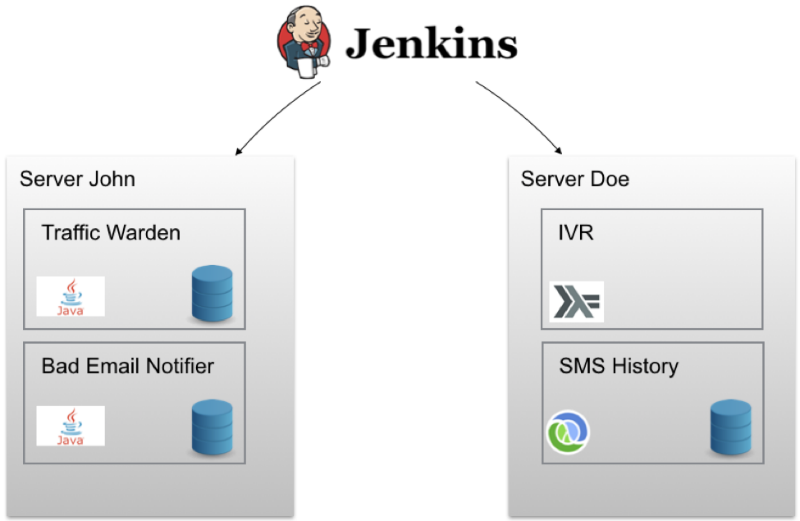

The project's engineering team initially used continuous delivery with Jenkins for each service. This made it possible to run integration tests for every commit, build an artifact and a Docker image, and then deploy it to a test server. With a single click, any image could be deployed to a production server. The setup looked like this:


The boxes labeled John and Doe are nodes, each holding a set of Docker containers deployed on it. Ansible is used for provisioning, so a single command can create a new cloud server, install the correct version of Docker and Docker Compose on it, configure the firewall rules and apply other settings. Each service running in a Docker container has a guard script that restarts it if it crashes. This configuration, however, has problems.
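A guard script of this kind can be sketched roughly as follows. This is a minimal illustration, not the team's actual script: the `supervise` function, its parameters and the restart limit are assumptions made for the example, and it supervises a generic command rather than a Docker container.

```python
import subprocess
import sys
import time

# Hypothetical stand-ins for a service: one exits cleanly, one always crashes.
HEALTHY_COMMAND = [sys.executable, "-c", "import sys; sys.exit(0)"]
CRASHING_COMMAND = [sys.executable, "-c", "import sys; sys.exit(1)"]


def supervise(command, max_restarts=3, delay_seconds=0.0):
    """Run `command` and restart it whenever it exits with a non-zero status.

    Stops when the command exits cleanly or the restart limit is reached.
    Returns the number of restarts that were performed.
    """
    restarts = 0
    while True:
        exit_code = subprocess.call(command)
        if exit_code == 0 or restarts >= max_restarts:
            return restarts
        restarts += 1
        time.sleep(delay_seconds)  # short pause before starting it again
```

A loop like this runs on the same node as the service it watches, so it can recover a crashed process but does nothing when the node itself goes down.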

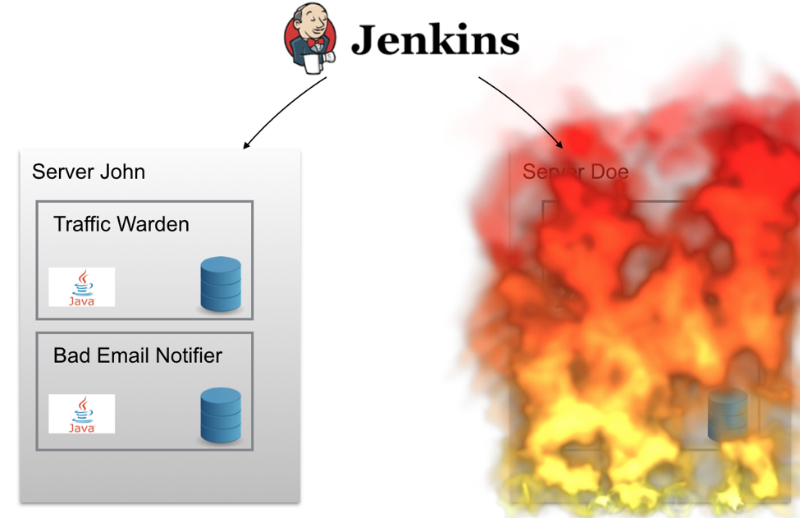

If the Doe server dies one night (or simply suffers a short but serious failure), someone on the team has to get up in the middle of the night to bring the system back to a healthy state. The services running on Doe do not move automatically to another server such as John, so the system stops working for a while.

There are other difficulties as well: packing as many containers as possible onto a single node, service discovery, rolling out updates, and aggregating log data. All of this had to be dealt with somehow.

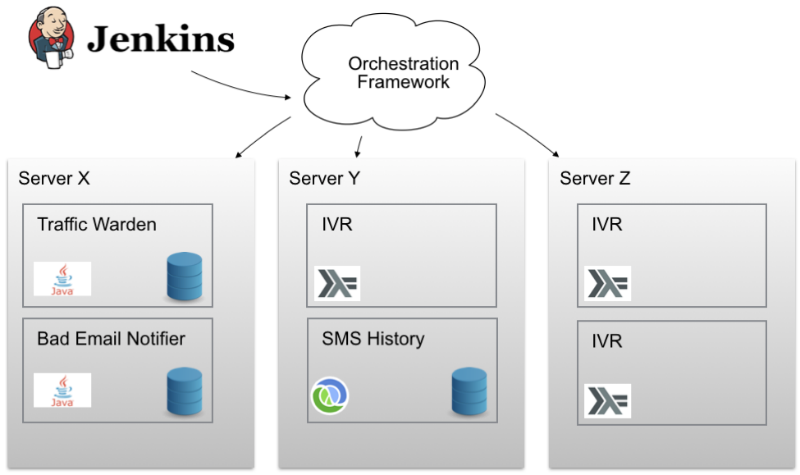

What was needed was a kind of magic orchestration framework that could distribute containers across the cluster nodes and let the team work with many nodes as if they were a single one. It should be possible to tell this magic framework: "deploy 3 instances of this Docker container somewhere in the cluster and make sure everything keeps working." A great plan! All that remained was the small matter of finding such a framework.
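The idea of "keep 3 instances running somewhere in the cluster" can be illustrated with a small reconciliation sketch. Everything here (the `Node` class, the `reconcile` function, the least-loaded placement rule) is a hypothetical simplification for illustration and does not reflect how any of the frameworks discussed below is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    capacity: int                       # maximum number of containers on this node
    healthy: bool = True
    containers: list = field(default_factory=list)


def reconcile(nodes, image, desired):
    """Start replicas of `image` until `desired` of them run on healthy nodes.

    Replicas on unhealthy nodes are treated as lost and replaced elsewhere.
    Scaling down is not handled in this sketch.
    Returns a mapping of node name to replica count.
    """
    for node in nodes:
        if not node.healthy:
            node.containers = [c for c in node.containers if c != image]

    running = sum(n.containers.count(image) for n in nodes if n.healthy)
    while running < desired:
        candidates = [n for n in nodes
                      if n.healthy and len(n.containers) < n.capacity]
        if not candidates:
            raise RuntimeError("not enough capacity in the cluster")
        # Assumed placement rule: pick the least loaded healthy node.
        target = min(candidates, key=lambda n: len(n.containers))
        target.containers.append(image)
        running += 1
    return {n.name: n.containers.count(image) for n in nodes}


john = Node("john", capacity=4)
doe = Node("doe", capacity=4)
first_placement = reconcile([john, doe], "web", desired=3)

doe.healthy = False                     # simulate the Doe server failing
second_placement = reconcile([john, doe], "web", desired=3)
```

The second call shows the behavior that was missing from the original setup: the replica lost with Doe is started again on John without anyone intervening.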

The wish list

Technology moves very fast, so even with modest requirements it is important to choose tools that will stay alive and maintained for some time. The Haleby engineers wanted their "dream framework" to use something like the docker-compose format they were already using, which would avoid a large amount of reconfiguration and tuning work.

Simplicity would also be a plus, along with the absence of too many magic features that are hard to control. A modular solution that leaves room to replace individual parts when necessary would be ideal. An open source license would be another advantage, since it makes it easy to follow the project's progress and to check that it has an active community.

The Haleby team also wanted the chosen tool to support running containers in several clouds at the same time and to provide service discovery. Finally, the engineers did not want to spend a lot of time on setup, so the preferred option was a framework offered as a managed service.

Choosing a framework

Analysis of existing options on the market allowed us to identify several candidate frameworks. Among them:

AWS ECS

Amazon EC2 Container Service (ECS) is a highly scalable, fast container management service that lets you start, stop and manage Docker containers on a cluster of Amazon EC2 instances. If a project already runs in this cloud, it would be strange not to consider this option. Haleby, however, was not running on Amazon, so migrating there did not seem like the best solution to the engineers.

It also turned out that the system has no built-in service discovery, which was one of the key requirements. On top of that, with AWS ECS it would not have been possible to run several containers that use the same port on a single node.

Docker Swarm

Docker Swarm is the native clustering tool for Docker. Because Swarm uses the Docker API, any tool that can work with a Docker daemon can use Swarm to scale out. Since Haleby already used docker-compose, this option sounded very tempting. Using a familiar tool also meant that the team would not have to learn something new.

Not everything was rosy, however. Although Swarm supports several service discovery backends, setting up a working integration is not an easy task. Swarm is also not a managed service, so the team would have had to configure and maintain it themselves, which was not part of the original plan.

Moreover, updating containers automatically would not have been possible either. That would require, for example, configuring Nginx as a load balancer in front of different groups of containers. Many engineers on the Internet also expressed doubts about Swarm's performance, among them, for example, Google employees.

Mesosphere DCOS

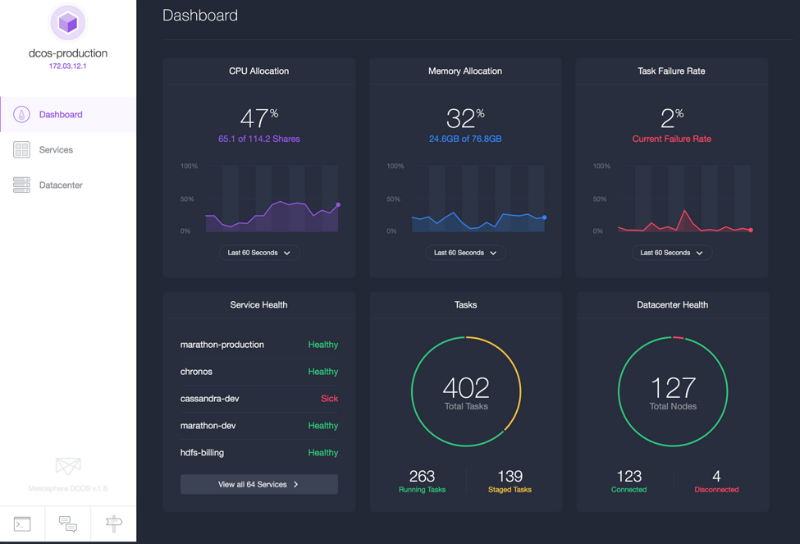

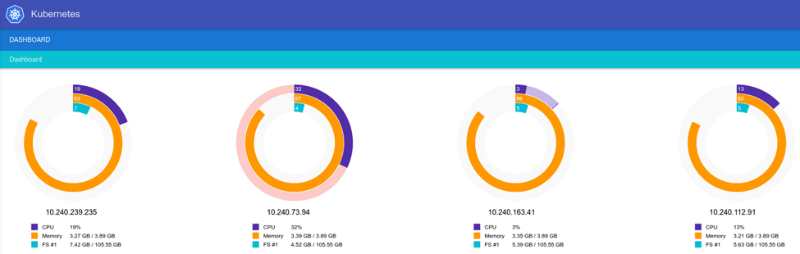

After setting up DCOS (Data Center Operating System) on AWS and starting to work with it, Mesosphere looked like a good option. Its convenient interface shows all the information about the cluster, including running services, CPU usage and memory consumption. DCOS can run in any cloud and across several clouds at once, and Mesos is proven to work in large clusters. DCOS can also run not only containers but standalone applications as well (Ruby ones, for example), and important services can be installed with a simple dcos install command (this is like apt-get for a data center).

Moreover, this tool lets you work with several cluster frameworks at the same time: you can run Marathon, Kubernetes, Docker and Swarm within the same system.

Along with the advantages, this option had its drawbacks. For example, multi-cloud support is available only in the Enterprise version of Mesosphere DCOS, but the price of that version is not listed on the site, and nobody responded to inquiries.

In addition, the documentation at that time (October/November 2015) had no information on how to move to a new cluster without interrupting the system. It is important to understand that this is not a managed service either, so problems may arise during such a move.

Tutum

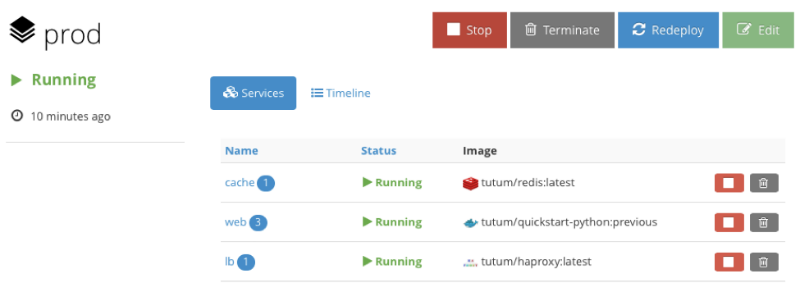

The Tutum team describes the product as a "Docker platform for Dev and Ops", and its slogan is "build, deploy and manage your applications in any cloud". The interface looks like this:

It may not look as impressive as DCOS, but the system lets you perform most of the necessary operations with a few mouse clicks, and Tutum also has good command line support. To start working with the system, you install a Tutum agent on each node of the cluster, after which the node appears in the administration panel. An interesting point is that Tutum uses a stack declaration format that describes several services or containers and is similar to docker-compose, so people already familiar with Docker will find it even easier to get started.

Tutum also supports volumes through its integration with Flocker. Tests in which certain containers were "killed" passed successfully: the containers were recreated in the same state (sometimes on different nodes). Another advantage was the ability to create links between containers, which can be used to tie certain containers together in a group.

HAProxy, which supports virtual hosts, is used for load balancing, so several services can be placed behind a single HAProxy instance. Services can be deployed in several different ways, and updating Docker across the whole cluster takes just a couple of clicks. Docker later acquired Tutum, so it is expected to be fully integrated into the Docker ecosystem.

At the time of the search, however, Tutum was still in beta. The main argument against this option was an incident during testing: the engineers deliberately made a node completely unavailable (CPU utilization of 100% made it impossible even to connect to the machine over SSH), and the containers on it were not moved to another node. Tutum's technical support said they were aware of the problem, but it had not yet been fixed. The search had to continue.

Kubernetes

The Kubernetes team describes the project as a tool for "managing a cluster of Linux containers as a single system to speed up Dev and simplify Ops." It is an open source project backed by Google. A number of other companies are also involved in its development, including Red Hat, Microsoft, SaltStack, Docker, CoreOS, Mesosphere and IBM.

What is really good about Kubernetes is that it builds on Google's ten years of experience in building and running clusters, and all of it is free! You can run Kubernetes on your own hardware or on cloud providers such as AWS, for which there are even ready-made CloudFormation templates.

In addition, Google offers a managed version of Kubernetes called Google Container Engine. Because of shortcomings in the interface, it is not as easy to get started with as, for example, Tutum, but once you understand the system it becomes easy to work with.

The engineers ran many tests in which nodes were "killed" while updates were being rolled out, containers were destroyed at random, the number of containers was pushed above the declared maximum, and so on. Things did not always go smoothly, but in each case, after some searching, a way to fix the situation was found.

Another significant advantage of Kubernetes was the ability to update the entire cluster without interrupting work. The tests also confirmed that service discovery works: all you need to do is use the name of the required service as the address (http://my-service), and Kubernetes routes the connection to the right container. Kubernetes is also a modular system whose components can be combined.

The framework has its drawbacks. When working with Google Container Engine, the system is limited to one data center, and if anything happens to that data center, the consequences are serious. This can be worked around, for example by running several clusters behind a load balancer, but there is no out-of-the-box solution. Support for several data centers is, however, planned for future releases as part of Ubernetes, in its "lite" version. Kubernetes also uses its own YAML format, which means docker-compose files have to be converted. Finally, the project is not as mature as Mesos, which can work with a larger number of nodes.
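To give a feel for what converting docker-compose files involves, here is a rough sketch that maps one compose service entry to a Kubernetes manifest. It is an illustration under several assumptions, not the team's conversion procedure: the `compose_service_to_deployment` helper and the sample `compose_file` are made up for the example, only the `image`, `ports` and mapping-style `environment` keys are handled, and the `apps/v1` Deployment layout is the one used by current Kubernetes releases, which may differ from what was available in 2015.

```python
import json


def compose_service_to_deployment(name, service, replicas=1):
    """Build a Deployment-shaped dict from one docker-compose service entry."""
    # Compose ports look like "HOST:CONTAINER" or just "CONTAINER";
    # only the container side is kept here.
    ports = [
        {"containerPort": int(str(mapping).split(":")[-1])}
        for mapping in service.get("ports", [])
    ]
    # Only the mapping form of `environment` is handled in this sketch.
    env = [
        {"name": key, "value": str(value)}
        for key, value in service.get("environment", {}).items()
    ]
    labels = {"app": name}
    container = {"name": name, "image": service["image"],
                 "ports": ports, "env": env}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


# Hypothetical compose content, already parsed into a dict.
compose_file = {
    "services": {
        "web": {
            "image": "example/web:1.0",
            "ports": ["8080:80"],
            "environment": {"MODE": "prod"},
        },
    }
}

manifests = [
    compose_service_to_deployment(service_name, service, replicas=3)
    for service_name, service in compose_file["services"].items()
]
print(json.dumps(manifests[0], indent=2))
```

The manifest is printed as JSON to avoid a third-party YAML dependency. A real conversion would also have to cover volumes, links, restart policies and the Service objects that make names such as my-service resolvable.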

Conclusion

After exploring the options available on the market, the Haleby team settled on Kubernetes, which seemed to the engineers to be the most suitable tool for their problems. The other services reviewed also performed well, but each had drawbacks that turned out to be unacceptable in this particular case.

Source: https://habr.com/ru/post/279105/
